Compression of Deep Convolutional Neural Network Using Additional Importance-Weight-Based Filter Pruning Approach

Abstract

1. Introduction

- Appropriate importance weights are assigned to different filters, which influence the contributions of different feature maps to the final decision.

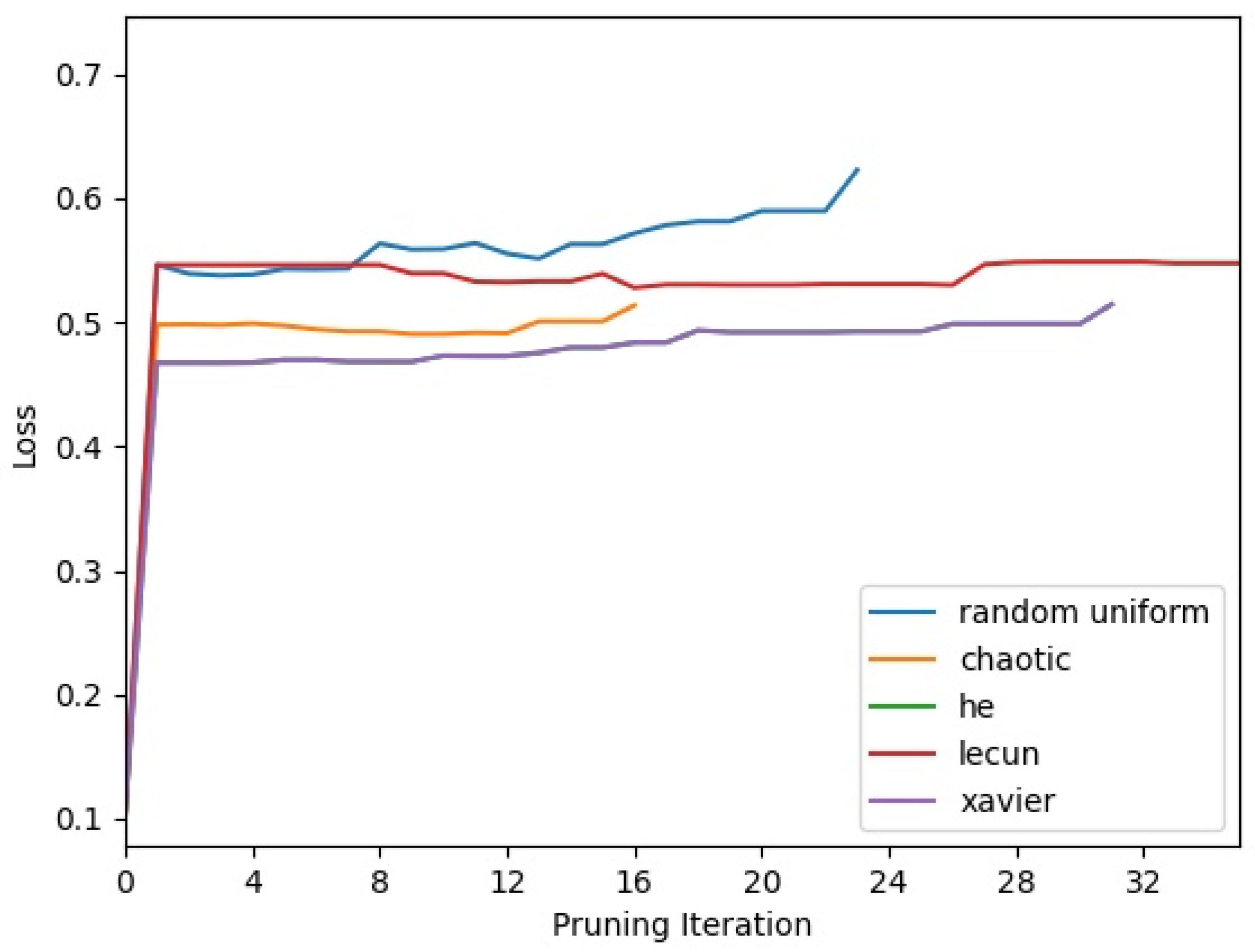

- The effect of different weight initialization strategies such as random, chaotic, He, LeCun, and Xavier initializations on the decision performance is analyzed.

- The proposed pruning approach is assessed on TernausNet and U-Net with the Inria and AIRS datasets for image segmentation. Extensive experiments on both networks show that the pruned models substantially reduce FLOPs and parameters relative to the unpruned networks while keeping the validation accuracy nearly unchanged.
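The two contributions above, an extra importance weight per filter and the choice of initialization strategy for that weight, can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the helper names `init_importance_weights` and `scale_feature_maps` are hypothetical, the importance weight is assumed to multiply its filter's feature map, random initialization is assumed to be uniform on [0, 1), the logistic map uses the assumed values r = 4 and x0 = 0.7, and `fan_in`/`fan_out` stand for the fan-in and fan-out of the convolutional layer the weights belong to. He, LeCun, and Xavier follow the usual He normal, LeCun uniform, and Glorot uniform formulas.

```python
import math
import random
from typing import List


def init_importance_weights(n_filters: int, strategy: str, fan_in: int,
                            fan_out: int, seed: int = 0) -> List[float]:
    """Draw one additional importance weight per filter (illustrative sketch)."""
    rng = random.Random(seed)
    if strategy == "random":
        # Random uniform initialization on [0, 1) (the range is an assumption).
        return [rng.random() for _ in range(n_filters)]
    if strategy == "chaotic":
        # Chaotic initialization with the logistic map x_{k+1} = r * x_k * (1 - x_k);
        # r = 4 (fully chaotic regime) and x_0 = 0.7 are assumed values.
        r, x = 4.0, 0.7
        weights = []
        for _ in range(n_filters):
            x = r * x * (1.0 - x)
            weights.append(x)
        return weights
    if strategy == "he":
        # He (normal) initialization: zero mean, std = sqrt(2 / fan_in).
        std = math.sqrt(2.0 / fan_in)
        return [rng.gauss(0.0, std) for _ in range(n_filters)]
    if strategy == "lecun":
        # LeCun uniform initialization: U(-sqrt(3 / fan_in), +sqrt(3 / fan_in)).
        limit = math.sqrt(3.0 / fan_in)
        return [rng.uniform(-limit, limit) for _ in range(n_filters)]
    if strategy == "xavier":
        # Xavier (Glorot) uniform: U(-sqrt(6 / (fan_in + fan_out)), +sqrt(...)).
        limit = math.sqrt(6.0 / (fan_in + fan_out))
        return [rng.uniform(-limit, limit) for _ in range(n_filters)]
    raise ValueError(f"unknown strategy: {strategy}")


def scale_feature_maps(feature_maps: List[List[float]],
                       weights: List[float]) -> List[List[float]]:
    """Multiply every value of feature map j by the importance weight of filter j."""
    return [[w * v for v in fmap] for fmap, w in zip(feature_maps, weights)]


if __name__ == "__main__":
    for name in ("random", "chaotic", "he", "lecun", "xavier"):
        w = init_importance_weights(8, name, fan_in=64 * 9, fan_out=128 * 9)
        print(name, [round(v, 3) for v in w])
```

Note that in this sketch the He, LeCun, and Xavier weights are centred on zero, whereas the random and chaotic weights lie in [0, 1], so a single fixed threshold treats them differently.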

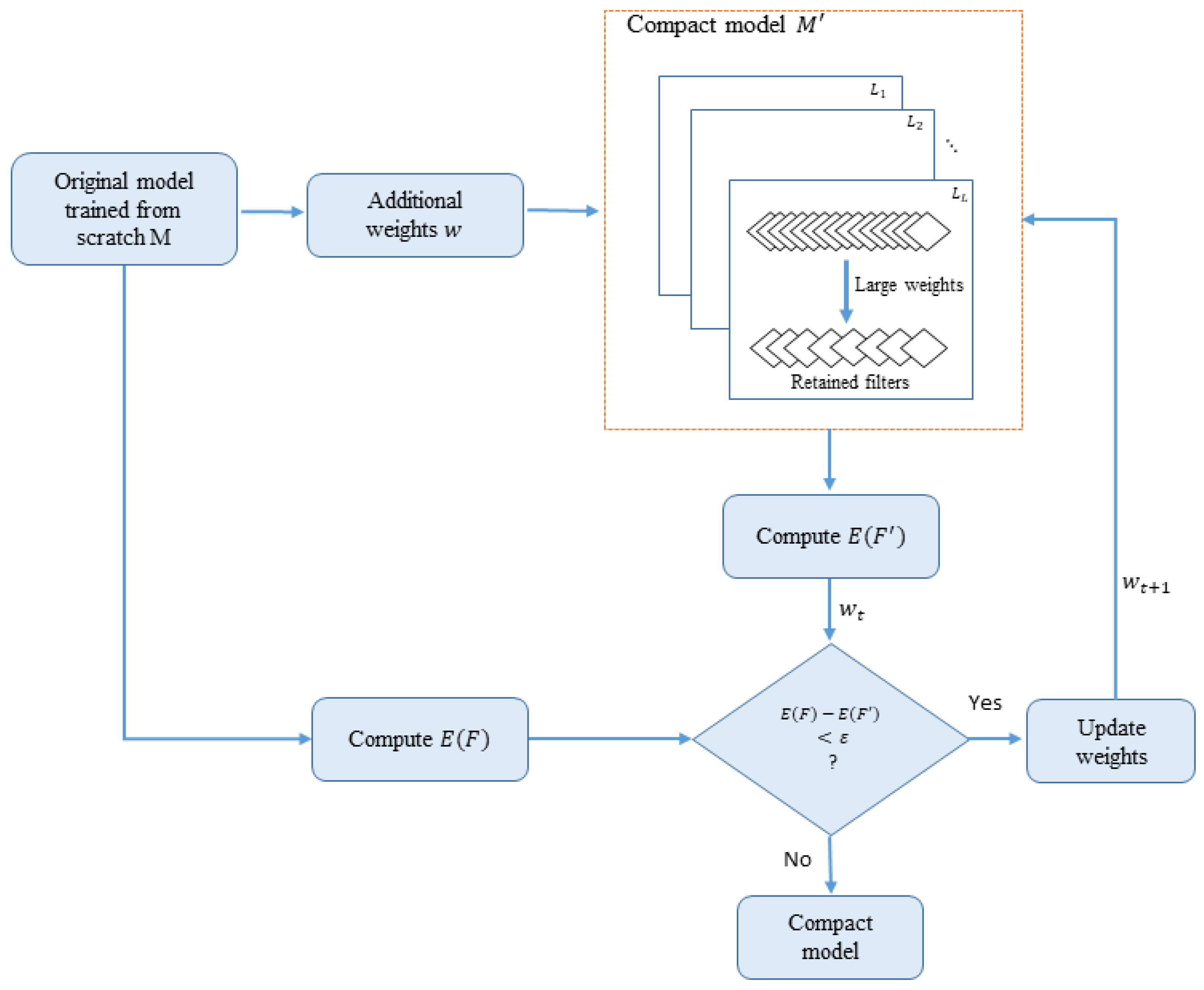

2. Proposed Methodology

1. Train the original unpruned model $M$ from scratch and calculate its loss $L$.

2. Add an importance weight to each convolutional filter, initialized with one of the following strategies: random uniform initialization, chaotic initialization (i.e., the logistic map), He initialization, LeCun uniform initialization, or Xavier initialization.

3. Sort the filters by their additional importance weights.

4. Prune the filters whose additional importance weights are less than a pre-defined threshold. Because the corresponding feature maps disappear, also remove the matching input channels (kernels) from the filters of the next convolutional layer, and obtain a compact model from the remaining filters.

5. Compute the loss $L'$ of the pruned model $M'$.

6. Check whether $L' - L < \epsilon$, where $\epsilon$ denotes the error tolerance level:

   - i. If the loss difference is less than $\epsilon$, update the importance weights using Equation (1) and go to Step 3.

   - ii. Otherwise, stop the process.

7. Obtain the final pruned (compact) model.

8. Fine-tune the pruned model to recover the loss.
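The iterative loop of Steps 1 to 8 can be sketched for a single layer as follows. It is a hedged sketch, not the authors' code: `eval_loss` is a hypothetical callback returning the loss of the model that keeps a given set of filters, `update_weights` is a placeholder for the update of Equation (1), which is not reproduced here, and the function returns the last pruned set that satisfied the tolerance, which is one reading of Steps 6 and 7. Removing the matching input channels of the next layer (Step 4) and fine-tuning (Step 8) are left out.

```python
from typing import Callable, List, Set


def iterative_filter_pruning(
    importance: List[float],
    threshold: float,
    base_loss: float,
    eval_loss: Callable[[Set[int]], float],
    update_weights: Callable[[List[float]], List[float]],
    eps: float,
    max_iter: int = 50,
) -> Set[int]:
    """Return the indices of the filters kept in the final compact model (one layer).

    importance     -- additional importance weight of each filter (Step 2)
    base_loss      -- loss L of the unpruned model M (Step 1)
    eval_loss      -- hypothetical callback: loss L' of a model keeping these filters
    update_weights -- hypothetical placeholder for the update rule of Equation (1)
    eps            -- error tolerance level
    """
    kept: Set[int] = set(range(len(importance)))
    for _ in range(max_iter):
        # Step 3: order the surviving filters by importance, weakest first.
        order = sorted(kept, key=lambda j: importance[j])
        # Step 4: mark every filter whose importance is below the threshold.
        pruned = [j for j in order if importance[j] < threshold]
        candidate = kept.difference(pruned)
        if not candidate:
            break  # never remove every filter of the layer
        # Step 5: loss L' of the candidate pruned model M'.
        loss = eval_loss(candidate)
        # Step 6: stop as soon as the loss increase reaches the tolerance.
        if loss - base_loss >= eps:
            break
        # Step 6.i: accept the candidate, update the weights, go back to Step 3.
        kept = candidate
        importance = update_weights(importance)
    # Step 7: `kept` defines the compact model; Step 8 (fine-tuning) is done separately.
    return kept


# Toy usage: the loss grows by 0.01 for every removed filter; weights stay fixed.
kept = iterative_filter_pruning(
    [0.1, 0.9, 0.4, 0.8], threshold=0.5, base_loss=0.10,
    eval_loss=lambda k: 0.10 + 0.01 * (4 - len(k)),
    update_weights=lambda w: w, eps=0.05,
)
print(kept)  # {1, 3}
```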

3. Experiments

3.1. Experimental Settings

3.2. Evaluation Metrics

3.3. Experiments on Inria Dataset

3.3.1. TernausNet on Inria

3.3.2. U-Net on Inria

3.3.3. Comparison with Other Filter Pruning Approaches

- i. $\ell_1$-norm: A score is computed for each filter $\mathcal{F}_j$ as its $\ell_1$-norm, $s_j = \sum |\mathcal{F}_j|$, i.e., the sum of the absolute values of its weights. Filters with a low score are considered weak and are therefore pruned [50].

- ii. Random pruning: Filters are removed at random [41].

- iii. Entropy-based pruning: The importance of a filter is calculated with Shannon's entropy measure. A filter with low entropy is considered unimportant and is consequently removed [40].
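The three baseline criteria can be sketched as scoring functions, where the lowest-scoring filters are pruned. This is an illustrative approximation, not the code used in the comparison: the function names are hypothetical, filters are represented as flattened weight lists, and the entropy criterion follows the common recipe of histogramming each channel's globally average-pooled activations over a batch of images into equal-width bins (the default of 10 bins is an assumption).

```python
import math
import random
from typing import List, Sequence


def l1_norm_scores(filters: Sequence[Sequence[float]]) -> List[float]:
    """l1-norm criterion: score of filter j = sum of the absolute values of its weights."""
    return [sum(abs(w) for w in f) for f in filters]


def random_scores(n_filters: int, seed: int = 0) -> List[float]:
    """Random pruning: random scores, so the pruned filters are chosen at random."""
    rng = random.Random(seed)
    return [rng.random() for _ in range(n_filters)]


def entropy_score(pooled_activations: Sequence[float], bins: int = 10) -> float:
    """Shannon entropy of one channel's pooled activations over a batch of images.

    The (non-empty) activations are histogrammed into `bins` equal-width bins
    and H = -sum(p_i * log p_i) is returned; low entropy marks a weak filter.
    """
    lo, hi = min(pooled_activations), max(pooled_activations)
    if hi == lo:
        return 0.0  # every activation falls into a single bin
    width = (hi - lo) / bins
    counts = [0] * bins
    for a in pooled_activations:
        counts[min(int((a - lo) / width), bins - 1)] += 1
    n = len(pooled_activations)
    return -sum((c / n) * math.log(c / n) for c in counts if c > 0)


def filters_to_prune(scores: Sequence[float], n_prune: int) -> List[int]:
    """Indices of the n_prune filters with the lowest scores."""
    return sorted(range(len(scores)), key=lambda j: scores[j])[:n_prune]


print(filters_to_prune(l1_norm_scores([[1.0, -2.0], [0.5, 0.5], [0.2, -0.1]]), 1))
```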

3.4. Experiments on AIRS Dataset

3.4.1. TernausNet on AIRS

3.4.2. U-Net on AIRS

3.4.3. Comparison with Other Filter Pruning Approaches

3.5. Ablation Study

3.5.1. Influence of Different Initialization Strategies on Performance

3.5.2. Sensitivity of Hyperparameter

3.5.3. Effects of Threshold Selection

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Chen, H.; Fang, M.; Yijia, C.; Yijun, X.; Tao, C. A Hyperspectral Image Classification Method Using Multifeature Vectors and Optimized KELM. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 2781.

- Hasan, A.M.; Shin, J. Online Kanji Characters Based Writer Identification Using Sequential Forward Floating Selection and Support Vector Machine. Appl. Sci. 2022, 12, 10249.

- Sawant, S.S.; Manoharan, P. Unsupervised band selection based on weighted information entropy and 3D discrete cosine transform for hyperspectral image classification. Int. J. Remote Sens. 2020, 41, 3948–3969.

- Song, Y.; Cai, X.; Zhou, X.; Zhang, B.; Chen, H.; Li, Y.; Deng, W.; Deng, W. Dynamic hybrid mechanism-based differential evolution algorithm and its application. Expert Syst. Appl. 2023, 213, 118834.

- Roy, A.M. Adaptive transfer learning-based multiscale feature fused deep convolutional neural network for EEG MI multiclassification in brain–computer interface. Eng. Appl. Artif. Intell. 2022, 116, 105347.

- Pius, K.; Li, Y.; Agyekum, E.A.; Zhang, T.; Liu, Z.; Yamak, P.T.; Essaf, F. SD-UNET: Stripping down U-Net for Segmentation of Biomedical Images on Platforms with Low Computational Budgets. Diagnostics 2021, 10, 110.

- Yaohui, L.; Gross, L.; Li, Z.; Li, X.; Fan, X.; Qi, W. Automatic Building Extraction on High-Resolution Remote Sensing Imagery Using Deep Convolutional Encoder-Decoder with Spatial Pyramid Pooling. IEEE Access 2019, 7, 128774–128786.

- Lawal, M.O. Tomato detection based on modified YOLOv3 framework. Sci. Rep. 2021, 11.

- Wu, Y.; Wan, G.; Liu, L.; Wei, Z.; Wang, S. Intelligent Crater Detection on Planetary Surface Using Convolutional Neural Network. In Proceedings of the 2021 IEEE 5th Advanced Information Technology, Electronic and Automation Control Conference (IAEAC), Chongqing, China, 12–14 March 2021; pp. 1229–1234.

- Zhang, M.; Li, W.; Du, Q. Diverse Region-Based CNN for Hyperspectral Image Classification. IEEE Trans. Image Process. 2018, 27, 2623–2634.

- Zhou, Z.; Siddiquee, M.M.R.; Tajbakhsh, N.; Liang, J. UNet++: Redesigning Skip Connections to Exploit Multiscale Features in Image Segmentation. arXiv 2019, 39, 1856–1867.

- Yu-Wei, H.; Leu, J.; Faisal, M.; Prakosa, S.W. Analysis of Model Compression Using Knowledge Distillation. IEEE Access 2022, 10, 85095–85105.

- Wang, Z.; Lin, S.; Xie, J.; Lin, Y. Pruning Blocks for CNN Compression and Acceleration via Online Ensemble Distillation. IEEE Access 2019, 7, 175703–175716.

- Zhou, Y.; Yen, G.G.; Yi, Z. Evolutionary Shallowing Deep Neural Networks at Block Levels. IEEE Trans. Neural Netw. Learn. Syst. 2021, 1–13.

- Han, S.; Mao, H.; Dally, W.J. Deep Compression: Compressing Deep Neural Networks with Pruning, Trained Quantization and Huffman Coding. In Proceedings of the 4th International Conference on Learning Representations, ICLR 2016—Conference Track Proceedings, San Juan, Puerto Rico, 2–4 May 2016; pp. 1–14.

- Dong, Z.; Zhang, R.; Shao, X.; Kuang, Z. Learning Sparse Features with Lightweight ScatterNet for Small Sample Training. Knowl. Based Syst. 2020, 205, 106315.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

- Luo, J.H.; Zhang, H.; Zhou, H.Y.; Xie, C.W.; Wu, J.; Lin, W. ThiNet: Pruning CNN Filters for a Thinner Net. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 2525–2538.

- Mehta, S.; Rastegari, M.; Caspi, A.; Shapiro, L.; Hajishirzi, H. ESPNet: Efficient Spatial Pyramid of Dilated Convolutions for Semantic Segmentation; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Computer Vision—ECCV 2018. Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2018; Volume 1.

- Chen, Z.; Chen, Z.; Lin, J.; Liu, S.; Li, W. Deep Neural Network Acceleration Based on Low-Rank Approximated Channel Pruning. IEEE Trans. Circuits Syst. I Regul. Pap. 2020, 67, 1232–1244.

- Swaminathan, S.; Garg, D.; Kannan, R.; Andres, F. Sparse Low Rank Factorization for Deep Neural Network Compression. Neurocomputing 2020, 398, 185–196.

- Hassibi, B.; Stork, D.G.; Wolff, G.J. Optimal Brain Surgeon and General Network Pruning. In Proceedings of the IEEE International Conference on Neural Networks, Nagoya, Japan, 25–29 October 1993; pp. 293–299.

- Wu, T.; Li, X.; Zhou, D.; Li, N.; Shi, J. Differential Evolution Based Layer-Wise Weight Pruning for Compressing Deep Neural Networks. Sensors 2021, 21, 880.

- Xu, Y.; Fang, Y.; Peng, W.; Wu, Y. An Efficient Gaussian Sum Filter Based on Prune-Cluster-Merge Scheme. IEEE Access 2019, 7, 150992–150995.

- Yeom, S.-K.; Seegerer, P.; Lapuschkin, S.; Binder, A.; Wiedemann, S.; Müller, K.-R.; Samek, W. Pruning by Explaining: A Novel Criterion for Deep Neural Network Pruning. Pattern Recognit. 2021, 115.

- Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-Level Accuracy with 50x Fewer Parameters and <0.5MB Model Size. In International Conference on Learning Representations; IEEE: Piscataway, NJ, USA, 2017; pp. 1–13.

- Gholami, A.; Kwon, K.; Wu, B.; Tai, Z. SqueezeNext: Hardware-Aware Neural Network Design. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. Work. (CVPRW) 2018, 2018, 1719–171909.

- Han, S.; Pool, J.; Tran, J.; Dally, W.J. Learning Both Weights and Connections for Efficient Neural Networks. Adv. Neural Inf. Process. Syst. 2015, 1135–1143.

- Wang, H.; Zhang, Q.; Wang, Y.; Yu, L.; Hu, H. Structured Pruning for Efficient ConvNets via Incremental Regularization. In Proceedings of the 2019 International Joint Conference on Neural Networks (IJCNN), Budapest, Hungary, 14–19 July 2019; pp. 1–8.

- Wen, L.; Zhang, X.; Bai, H.; Xu, Z. Structured pruning of recurrent neural networks through neuron selection. Neural Netw. 2020, 123, 134–141.

- Kang, H.-J. Accelerator-Aware Pruning for Convolutional Neural Networks. IEEE Trans. Circuits Syst. Video Technol. 2020, 30, 2093–2103.

- Liu, J.Y.; Hui, J.F.; Sun, M.Y.; Liu, X.S.; Lu, W.H.; Ma, C.H.; Zhang, Q.B. A Multiplier-Less Convolutional Neural Network Inference Accelerator for Intelligent Edge Devices. IEEE J. Emerg. Sel. Top. Circuits Syst. 2021, 11, 739–750.

- Russo, E.; Palesi, M.; Monteleone, S.; Patti, D.; Mineo, A.; Ascia, G.; Catania, V. DNN Model Compression for IoT Domain-Specific Hardware Accelerators. IEEE Internet Things J. 2021, 9, 6650–6662.

- Liu, J.Y.; Hui, J.F.; Sun, M.Y.; Liu, X.S.; Lu, W.H.; Ma, C.H.; Zhang, Q.B. Libraries of Approximate Circuits: Automated Design and Application in CNN Accelerators. IEEE J. Emerg. Sel. Top. Circuits Syst. 2020, 10, 406–418.

- Li, G.; Ma, F.; Guo, J.; Zhao, H. A Flexible and Efficient FPGA Accelerator for Various Large-Scale and Lightweight CNNs. IEEE Trans. Circuits Syst. I: Regul. Pap. 2021, 69, 1185–1198.

- Liu, J.Y.; Hui, J.F.; Sun, M.Y.; Liu, X.S.; Lu, W.H.; Ma, C.H.; Zhang, Q.B. An Efficient and Flexible Accelerator Design for Sparse Convolutional Neural Networks. IEEE Trans. Circuits Syst. I: Regul. Pap. 2021, 68, 2936–2949.

- Fernandes, F.E., Jr.; Yen, G.G. Pruning Deep Convolutional Neural Networks Architectures with Evolution Strategy. Inf. Sci. 2021, 552, 29–47.

- Götz, T.I.; Göb, S.; Sawant, S.; Erick, X.F.; Wittenberg, T.; Schmidkonz, C.; Tomé, A.M.; Lang, E.W.; Ramming, A. Number of Necessary Training Examples for Neural Networks with Different Number of Trainable Parameters. J. Pathol. Inform. 2022, 13, 100114.

- He, Y.; Kang, G.; Dong, X.; Fu, Y.; Yang, Y. Soft Filter Pruning for Accelerating Deep Convolutional Neural Networks. IJCAI Int. Jt. Conf. Artif. Intell. 2018, 2234–2240.

- Luo, J.H.; Wu, J. An Entropy-Based Pruning Method for CNN Compression. arXiv 2017, arXiv:1706.05791v1.

- Mittal, D.; Bhardwaj, S.; Khapra, M.M.; Ravindran, B. Studying the Plasticity in Deep Convolutional Neural Networks Using Random Pruning. Mach. Vis. Appl. 2019, 30, 203–216.

- Sawant, S.S.; Bauer, J.; Erick, F.X.; Ingaleshwar, S.; Holzer, N.; Ramming, A.; Lang, E.W.; Götz, T. An optimal-score-based filter pruning for deep convolutional neural networks. Appl. Intell. 2022.

- Shi, J.; Xu, J.; Tasaka, K.; Chen, Z. SASL: Saliency-Adaptive Sparsity Learning for Neural Network Acceleration. IEEE Trans. Circuits Syst. Video Technol. 2020, 31, 2008–2019.

- Lin, S.; Ji, R.; Li, Y.; Deng, C.; Li, X. Toward Compact ConvNets via Structure-Sparsity Regularized Filter Pruning. IEEE Trans. Neural Netw. Learn. Syst. 2020, 31, 574–588.

- Tian, G.; Chen, J.; Zeng, X.; Liu, Y. Pruning by Training: A Novel Deep Neural Network Compression Framework for Image Processing. IEEE Signal Process. Lett. 2021, 28, 344–348.

- Zheng, Y.-J.; Chen, S.-B.; Ding, C.H.Q.; Luo, B. Model Compression Based on Differentiable Network Channel Pruning. IEEE Trans. Neural Netw. Learn. Syst. 2022.

- Zuo, Y.; Chen, B.; Shi, T.; Sun, M. Filter Pruning without Damaging Networks Capacity. IEEE Access 2020, 8, 90924–90930.

- Li, G.; Wang, J.; Shen, H.; Chen, K.; Shan, G.; Lu, Z. CNNPruner: Pruning Convolutional Neural Networks with Visual Analytics. IEEE Trans. Vis. Comput. Graph. 2021, 27, 1364–1373.

- He, Y.; Liu, P.; Wang, Z.; Hu, Z.; Yang, Y. Filter Pruning via Geometric Median for Deep Convolutional Neural Networks Acceleration. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; p. 4335.

- Li, H.; Kadav, A.; Durdanovic, I.; Samet, H.; Graf, H.P. Pruning Filters for Efficient ConvNets. In Proceedings of the 5th International Conference on Learning Representations 2017, ICLR 2017—Conference Track Proceedings, Toulon, France, 24–26 April 2017.

- Jun, M.; Sun, K.; Liao, X.; Leng, L.; Chu, J. Human Segmentation Based on Compressed Deep Convolutional Neural Network. IEEE Access 2020, 8, 167585–167595.

- Chang, J.; Lu, Y.; Xue, P.; Xu, Y.; Wei, Z. ACP: Automatic Channel Pruning via Clustering and Swarm Intelligence Optimization for CNN. arXiv 2021, arXiv:2101.06407.

- Sijie, N.; Gao, K.; Ma, P.; Gao, X.; Zhao, H.; Dong, J.; Chen, Y.; Chen, D. Exploiting Sparse Self-Representation and Particle Swarm Optimization for CNN Compression. IEEE Trans. Neural Netw. Learn. Syst. 2022, 1447.

- Wang, Z.; Li, F.; Shi, G.; Xie, X.; Wang, F. Network Pruning Using Sparse Learning and Genetic Algorithm. Neurocomputing 2020, 404, 247–256.

- Zhou, Y.; Yen, G.G.; Yi, Z. Evolutionary Compression of Deep Neural Networks for Biomedical Image Segmentation. IEEE Trans. Neural Netw. Learn. Syst. 2020, 31, 2916–2929.

- Singh, P.; Verma, V.K.; Rai, P.; Namboodiri, V.P. Acceleration of Deep Convolutional Neural Networks Using Adaptive Filter Pruning. IEEE J. Sel. Top. Signal Process. 2020, 14, 838–847.

- Sarfaraz, M.; Doja, M.N.; Chandra, P. Chaos Based Network Initialization Approach for Feed Forward Artificial Neural Networks. J. Comput. Theor. Nanosci. 2020, 17, 418–424.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 2015; pp. 1026–1034.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Glorot, X.; Bordes, A.; Bengio, Y. Deep sparse rectifier neural networks. In Proceedings of the 14th International Conference on Artificial Intelligence and Statistics, Ft. Lauderdale, FL, USA, 11–13 April 2011; pp. 315–323.

- Iglovikov, V.; Shvets, A. TernausNet: U-Net with VGG11 Encoder Pre-Trained on ImageNet for Image Segmentation. arXiv 2018, arXiv:1801.05746.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention 2015; Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F., Eds.; Springer International Publishing: Cham, Switzerland, 2015.

- Maggiori, E.; Tarabalka, Y.; Charpiat, G.; Alliez, P. Can Semantic Labeling Methods Generalize to Any City? The Inria Aerial Image Labeling Benchmark. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; pp. 3226–3229.

- Chen, Q.; Wang, L.; Wu, Y.; Wu, G.; Guo, Z.; Waslander, S.L. Aerial Imagery for Roof Segmentation: A Large-Scale Dataset towards Automatic Mapping of Buildings. ISPRS J. Photogramm. Remote Sens. 2021, 147, 42–55.

- Liu, L.; Liu, X.; Wang, N.; Zou, P. Modified Cuckoo Search Algorithm with Variational Parameters and Logistic Map. Algorithms 2018, 11, 30.

- Yang, D.; Li, G.; Cheng, G. On the Efficiency of Chaos Optimization Algorithms for Global Optimization. Chaos Solitons Fractals 2007, 34, 1366–1375.

- Liu, X.; Wu, L.; Dai, C.; Chao, H.C. Compressing CNNs Using Multi-Level Filter Pruning for the Edge Nodes of Multimedia Internet of Things. IEEE Internet Things J. 2021, 4662, 1–11.

- Bischke, B.; Helber, P.; Folz, J.; Borth, D.; Dengel, A. Multi-Task Learning for Segmentation of Building Footprints with Deep Neural Networks. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 1480–1484.

| Network | Model | Val. Loss | Val. Acc. (%) | Val. Acc. ↓ (%) | FLOPs | FLOPs ↓ (%) | # Param. | # Param. ↓ (%) |
|---|---|---|---|---|---|---|---|---|
| TernausNet | Baseline | 0.1037 | 96.00 | - | 32.3 B | - | 22.9 M | - |
| | Proposed (Random) | 0.1121 | 95.68 (±0.001) | 0.32 | 4.85 B | 84.98 | 3.77 M | 83.54 |
| | Proposed (Chaotic) | 0.1061 | 95.91 (±0.001) | 0.09 | 6.27 B | 80.59 | 4.33 M | 81.12 |
| | Proposed (He) | 0.1046 | 96.04 (±0.001) | −0.04 | 11.6 B | 64.18 | 6.14 M | 73.20 |
| | Proposed (LeCun) | 0.1046 | 96.00 (±0.001) | 0.00 | 11.0 B | 66.02 | 6.36 M | 72.25 |
| | Proposed (Xavier) | 0.1039 | 96.04 (±0.001) | −0.04 | 11.6 B | 64.18 | 6.14 M | 73.20 |
| U-Net | Baseline | 0.1025 | 96.08 | - | 54.6 B | - | 31.0 M | - |
| | Proposed (Random) | 0.1079 | 95.80 (±0.001) | 0.27 | 8.85 B | 83.80 | 3.10 M | 83.68 |
| | Proposed (Chaotic) | 0.1066 | 95.88 (±0.001) | 0.21 | 10.2 B | 81.28 | 5.06 M | 81.15 |
| | Proposed (He) | 0.1070 | 95.89 (±0.001) | 0.20 | 13.6 B | 75.17 | 5.85 M | 75.32 |
| | Proposed (LeCun) | 0.1042 | 96.04 (±0.001) | 0.04 | 14.0 B | 74.46 | 7.66 M | 74.70 |
| | Proposed (Xavier) | 0.1056 | 95.94 (±0.001) | 0.15 | 13.9 B | 74.59 | 7.85 M | 74.39 |

| Network | Method | Val. Loss | Val. Acc. ↓ (%) | FLOPs ↓ (%) | # Param. ↓ (%) |
|---|---|---|---|---|---|
| TernausNet | Random Pruning | 0.1059 | 0.09 | 74.30 | 75.08 |
| | $\ell_1$-Norm | 0.4030 | 10.19 | 81.74 | 66.47 |
| | Entropy | 0.1066 | 0.07 | 71.87 | 69.87 |
| | Proposed (Random) | 0.1121 | 0.32 | 84.98 | 83.54 |
| | Proposed (Chaotic) | 0.1061 | 0.09 | 80.59 | 81.12 |
| | Proposed (He) | 0.1046 | −0.04 | 64.18 | 73.20 |
| | Proposed (LeCun) | 0.1046 | 0.00 | 66.02 | 72.25 |
| | Proposed (Xavier) | 0.1039 | −0.04 | 64.18 | 73.20 |
| U-Net | Random Pruning | 0.1055 | 0.15 | 74.21 | 74.19 |
| | $\ell_1$-Norm | 0.3113 | 9.50 | 87.23 | 57.32 |
| | Entropy | 0.1062 | 0.12 | 68.79 | 65.94 |
| | Proposed (Random) | 0.1079 | 0.28 | 83.80 | 83.68 |
| | Proposed (Chaotic) | 0.1066 | 0.21 | 81.28 | 81.15 |
| | Proposed (He) | 0.1042 | 0.04 | 74.46 | 74.70 |
| | Proposed (LeCun) | 0.1070 | 0.20 | 75.17 | 75.32 |
| | Proposed (Xavier) | 0.1056 | 0.16 | 74.59 | 74.39 |

| Network | Model | Val. Loss | Val. Acc. (%) | Val. Acc. ↓ (%) | FLOPs | FLOPs ↓ (%) | # Param. | # Param. ↓ (%) |
|---|---|---|---|---|---|---|---|---|
| TernausNet | Baseline | 0.0174 | 99.32 | - | 32.3 B | - | 22.9 M | - |
| | Proposed (Random) | 0.0190 | 99.27 | 0.05 | 5.06 B | 84.34 | 3.78 M | 83.50 |
| | Proposed (Chaotic) | 0.0190 | 99.24 | 0.07 | 7.31 B | 77.37 | 4.27 M | 81.36 |
| | Proposed (He) | 0.0181 | 99.27 | 0.05 | 7.80 B | 75.84 | 5.64 M | 75.42 |
| | Proposed (LeCun) | 0.0178 | 99.29 | 0.03 | 8.08 B | 74.96 | 5.90 M | 74.27 |
| | Proposed (Xavier) | 0.0184 | 99.27 | 0.04 | 7.80 B | 75.84 | 5.64 M | 75.42 |
| U-Net | Baseline | 0.0169 | 99.34 | - | 54.6 B | - | 31.0 M | - |
| | Proposed (Random) | 0.0178 | 99.28 | 0.06 | 8.95 B | 83.63 | 5.02 M | 83.84 |
| | Proposed (Chaotic) | 0.0179 | 99.28 | 0.06 | 10.1 B | 81.46 | 5.97 M | 80.75 |
| | Proposed (He) | 0.0170 | 99.31 | 0.02 | 13.0 B | 76.13 | 7.50 M | 75.82 |
| | Proposed (LeCun) | 0.0173 | 99.31 | 0.03 | 13.4 B | 75.41 | 7.78 M | 74.92 |
| | Proposed (Xavier) | 0.0170 | 99.32 | 0.02 | 13.8 B | 74.68 | 7.61 M | 75.47 |

| Network | Method | Val. Loss | Val. Acc. ↓ (%) | FLOPs ↓ (%) | # Param. ↓ (%) |
|---|---|---|---|---|---|
| TernausNet | Random Pruning | 0.0180 | 0.05 | 74.73 | 74.13 |
| | $\ell_1$-Norm | 0.1491 | 4.66 | 80.91 | 65.45 |
| | Entropy | 0.0174 | 0.02 | 69.86 | 72.47 |
| | Proposed (Random) | 0.0190 | 0.05 | 84.34 | 83.50 |
| | Proposed (Chaotic) | 0.0190 | 0.07 | 77.37 | 81.36 |
| | Proposed (He) | 0.0181 | 0.05 | 75.84 | 75.42 |
| | Proposed (LeCun) | 0.0178 | 0.03 | 74.96 | 74.27 |
| | Proposed (Xavier) | 0.0184 | 0.04 | 75.84 | 75.42 |
| U-Net | Random Pruning | 0.0170 | 0.03 | 73.28 | 73.98 |
| | $\ell_1$-Norm | 0.2578 | 6.29 | 87.12 | 57.02 |
| | Entropy | 0.0167 | 0.00 | 67.93 | 72.14 |
| | Proposed (Random) | 0.0178 | 0.06 | 83.63 | 83.84 |
| | Proposed (Chaotic) | 0.0179 | 0.06 | 81.46 | 80.75 |
| | Proposed (He) | 0.0170 | 0.02 | 76.13 | 75.82 |
| | Proposed (LeCun) | 0.0173 | 0.03 | 75.41 | 74.92 |
| | Proposed (Xavier) | 0.0170 | 0.02 | 74.68 | 75.47 |

| Layer | Baseline #Filters | Random #Filters | Random FLOPs ↓ (%) | Chaotic #Filters | Chaotic FLOPs ↓ (%) | He #Filters | He FLOPs ↓ (%) | LeCun #Filters | LeCun FLOPs ↓ (%) | Xavier #Filters | Xavier FLOPs ↓ (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| conv1 | 64 | 11 | 99.94 | 30 | 99.83 | 64 | 99.64 | 64 | 99.64 | 64 | 99.64 |
| conv2 | 128 | 49 | 99.75 | 58 | 99.20 | 128 | 96.25 | 128 | 96.25 | 128 | 96.25 |
| conv3 | 256 | 103 | 99.42 | 107 | 99.29 | 256 | 96.26 | 256 | 96.26 | 256 | 96.26 |
| conv4 | 256 | 106 | 98.75 | 112 | 98.63 | 132 | 96.14 | 133 | 96.11 | 132 | 96.14 |
| conv5 | 512 | 209 | 99.37 | 230 | 99.26 | 262 | 99.01 | 265 | 98.99 | 262 | 99.01 |
| conv6 | 512 | 211 | 98.74 | 223 | 98.54 | 245 | 98.17 | 265 | 98.00 | 245 | 98.17 |
| conv7 | 512 | 203 | 99.69 | 211 | 99.66 | 261 | 99.54 | 258 | 99.51 | 261 | 99.54 |
| conv8 | 512 | 214 | 99.69 | 220 | 99.67 | 249 | 99.54 | 255 | 99.53 | 249 | 99.54 |
| conv9 | 512 | 206 | 99.92 | 230 | 99.91 | 275 | 99.88 | 248 | 99.89 | 275 | 99.88 |
| convT_1 | 256 | 105 | 99.85 | 104 | 99.83 | 125 | 99.75 | 134 | 99.76 | 125 | 99.75 |
| conv10 | 512 | 203 | 99.54 | 227 | 99.48 | 254 | 99.32 | 260 | 99.28 | 254 | 99.32 |
| convT_2 | 256 | 104 | 99.40 | 109 | 99.29 | 127 | 99.08 | 134 | 99.01 | 127 | 99.08 |
| conv11 | 512 | 209 | 98.12 | 230 | 97.82 | 250 | 97.35 | 259 | 97.05 | 250 | 97.35 |
| convT_3 | 128 | 51 | 98.78 | 54 | 98.58 | 61 | 98.26 | 62 | 98.17 | 61 | 98.26 |
| conv12 | 256 | 96 | 98.28 | 115 | 97.82 | 128 | 97.18 | 129 | 97.13 | 128 | 97.18 |
| convT_4 | 64 | 25 | 98.90 | 28 | 98.53 | 31 | 98.19 | 30 | 98.23 | 31 | 98.19 |
| conv13 | 128 | 51 | 98.27 | 57 | 97.76 | 63 | 95.42 | 61 | 95.60 | 63 | 95.42 |
| convT_5 | 32 | 11 | 98.97 | 14 | 98.54 | 31 | 96.43 | 1 | 99.89 | 31 | 96.43 |
| conv14 | 32 | 10 | 99.60 | 13 | 98.95 | 7 | 98.78 | 19 | 97.74 | 7 | 98.78 |
| conv15 | 1 | 1 | 0 | 1 | 0 | 1 | 0 | 1 | 0 | 1 | 0 |
| # Remaining Filters | 5441 | 2178 | | 2373 | | 2950 | | 2962 | | 2950 | |
| Val. Acc. | 96.00% | 95.68% | | 95.91% | | 96.04% | | 96.00% | | 96.04% | |
| # Param. | 22.9 M | 3.77 M | | 4.33 M | | 6.14 M | | 6.36 M | | 6.14 M | |
| FLOPs | 32.3 B | 4.85 B | | 6.27 B | | 11.6 B | | 11.0 B | | 11.6 B | |
| Model Size (MB) | 89.58 | 14.76 | | 16.93 | | 24.02 | | 24.87 | | 24.02 | |
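The per-layer table reflects Step 4: pruning filters in one layer also shrinks the next layer, because that layer receives fewer input channels. The sketch below shows this effect for the first four encoder layers, assuming 3 × 3 convolutions with biases, an RGB input (3 channels), and a plain sequential chain conv1 → conv2 → conv3 → conv4 as in a VGG11-style encoder (pooling changes the spatial size but not the channel count). The helper names are hypothetical, and the counts cover only these four layers, not the whole network.

```python
from typing import List


def conv_params(c_in: int, c_out: int, k: int = 3, bias: bool = True) -> int:
    """Weights plus biases of a k x k convolution with c_in inputs and c_out filters."""
    return c_out * (c_in * k * k + (1 if bias else 0))


def chain_params(filters: List[int], c_in0: int = 3, k: int = 3) -> List[int]:
    """Per-layer parameter counts of a plain sequential chain of convolutions.

    Each layer's input channels equal the previous layer's remaining filters,
    so removing a filter also removes one kernel from every filter of the
    next layer.
    """
    counts, c_in = [], c_in0
    for c_out in filters:
        counts.append(conv_params(c_in, c_out, k))
        c_in = c_out
    return counts


# Filter counts of conv1..conv4 from the table: baseline vs. random initialization.
baseline = chain_params([64, 128, 256, 256])
pruned = chain_params([11, 49, 103, 106])
print(baseline, sum(baseline))
print(pruned, sum(pruned))
```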

| Initialization Strategy | Compression Ratio | Acceleration Ratio |
|---|---|---|
| Random | 6.1× | 6.7× |
| Chaotic | 5.3× | 5.2× |
| He | 3.7× | 2.8× |
| LeCun | 3.6× | 2.9× |
| Xavier | 3.7× | 2.8× |
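The compression and acceleration ratios can be checked with simple arithmetic, assuming they are the quotients of the baseline and pruned parameter counts and FLOPs of TernausNet on Inria (values copied from the results table). Under that assumption the sketch reproduces every ratio in the table to one decimal place; the percentage reductions it prints agree with the reduction columns only approximately, because the tabulated M and B values are themselves rounded.

```python
# Baseline and pruned TernausNet (Inria) figures copied from the results table.
BASELINE = {"params_m": 22.9, "flops_b": 32.3}
PRUNED = {
    "Random": {"params_m": 3.77, "flops_b": 4.85},
    "Chaotic": {"params_m": 4.33, "flops_b": 6.27},
    "He": {"params_m": 6.14, "flops_b": 11.6},
    "LeCun": {"params_m": 6.36, "flops_b": 11.0},
    "Xavier": {"params_m": 6.14, "flops_b": 11.6},
}


def ratio(baseline: float, pruned: float) -> float:
    """Compression/acceleration ratio: how many times smaller the pruned value is."""
    return baseline / pruned


def reduction_pct(baseline: float, pruned: float) -> float:
    """Percentage reduction, as in the 'FLOPs ↓ (%)' and '# Param. ↓ (%)' columns."""
    return (1.0 - pruned / baseline) * 100.0


for name, p in PRUNED.items():
    print(f"{name:8s} compression {ratio(BASELINE['params_m'], p['params_m']):.1f}x  "
          f"acceleration {ratio(BASELINE['flops_b'], p['flops_b']):.1f}x  "
          f"params -{reduction_pct(BASELINE['params_m'], p['params_m']):.2f}%  "
          f"FLOPs -{reduction_pct(BASELINE['flops_b'], p['flops_b']):.2f}%")
```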

| Initialization Strategy | Hyperparameter Value | Val. Acc. (%) | FLOPs ↓ (%) | # Param. ↓ (%) |
|---|---|---|---|---|
| Random | 0.3 | 95.84 | 84.34 | 83.50 |
| | 0.35 | 95.85 | 84.34 | 83.50 |
| | 0.4 | 95.77 | 84.34 | 83.50 |
| | 0.45 | 95.78 | 84.34 | 83.50 |
| | 0.5 | 95.68 | 84.98 | 83.54 |
| Chaotic (logistic map) | 0.3 | 95.92 | 80.59 | 81.12 |
| | 0.35 | 95.82 | 80.59 | 81.12 |
| | 0.4 | 95.83 | 80.59 | 81.12 |
| | 0.45 | 95.81 | 80.59 | 81.12 |
| | 0.5 | 95.91 | 80.59 | 81.12 |
| He | 0.3 | 95.99 | 75.84 | 75.42 |
| | 0.35 | 95.91 | 75.84 | 75.42 |
| | 0.4 | 95.80 | 77.30 | 75.50 |
| | 0.45 | 96.03 | 64.18 | 73.20 |
| | 0.5 | 96.04 | 64.18 | 73.20 |
| LeCun | 0.3 | 95.84 | 74.96 | 74.27 |
| | 0.35 | 95.97 | 74.96 | 74.27 |
| | 0.4 | 95.93 | 74.96 | 74.27 |
| | 0.45 | 95.75 | 76.48 | 74.66 |
| | 0.5 | 96.00 | 66.02 | 72.25 |
| Xavier | 0.3 | 95.88 | 75.84 | 75.42 |
| | 0.35 | 96.03 | 75.84 | 75.42 |
| | 0.4 | 95.82 | 77.30 | 75.50 |
| | 0.45 | 96.07 | 64.18 | 73.20 |
| | 0.5 | 96.04 | 64.18 | 73.20 |

| Initialization Strategy | Mean of Weights: Val. Acc. (%) | Mean of Weights: FLOPs ↓ (%) | Mean of Weights: # Param. ↓ (%) | 1.2-Times the Mean of Weights: Val. Acc. (%) | 1.2-Times the Mean of Weights: FLOPs ↓ (%) | 1.2-Times the Mean of Weights: # Param. ↓ (%) |
|---|---|---|---|---|---|---|
| Random | 95.92 | 74.78 | 74.29 | 95.76 | 84.34 | 83.50 |
| Chaotic | 95.93 | 74.43 | 74.72 | 95.83 | 80.59 | 81.12 |
| He | 95.82 | 77.19 | 75.50 | 95.79 | 77.30 | 75.50 |
| LeCun | 95.91 | 74.78 | 74.29 | 95.93 | 74.96 | 74.31 |
| Xavier | 95.79 | 77.19 | 75.50 | 95.82 | 77.30 | 75.50 |
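The two thresholds compared above can be expressed as a multiple of the mean importance weight. A minimal sketch, assuming the mean is taken over the raw importance weights of one layer and that filters strictly below the threshold are pruned (Step 4); the helper names and the example weights are hypothetical.

```python
from statistics import fmean
from typing import List, Sequence


def mean_threshold(importance: Sequence[float], factor: float = 1.0) -> float:
    """Threshold = factor x mean importance weight (factor 1.0 or 1.2 in the table)."""
    return factor * fmean(importance)


def kept_filters(importance: Sequence[float], threshold: float) -> List[int]:
    """Indices of the filters whose importance weight is not below the threshold."""
    return [j for j, w in enumerate(importance) if w >= threshold]


weights = [0.1, 0.3, 0.55, 0.9]  # hypothetical importance weights of one layer
for factor in (1.0, 1.2):
    t = mean_threshold(weights, factor)
    print(f"factor {factor}: threshold {t:.4f}, kept {kept_filters(weights, t)}")
```

A larger factor raises the threshold, so more filters fall below it and are pruned.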

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sawant, S.S.; Wiedmann, M.; Göb, S.; Holzer, N.; Lang, E.W.; Götz, T. Compression of Deep Convolutional Neural Network Using Additional Importance-Weight-Based Filter Pruning Approach. Appl. Sci. 2022, 12, 11184. https://doi.org/10.3390/app122111184