Automatic Movie Tag Generation System for Improving the Recommendation System

Abstract

1. Introduction

2. Background

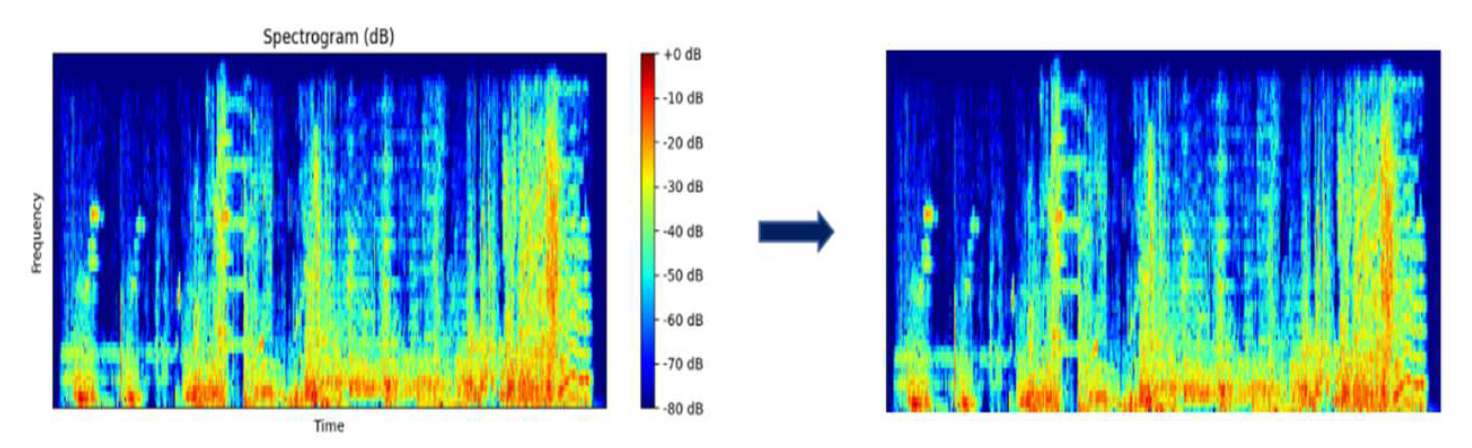

2.1. Sound Signal Processing

2.1.1. STFT (Short-Time Fourier Transform)
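
The STFT slices a signal into short, overlapping frames, applies a window to each frame, and takes a Fourier transform per frame, which yields a time-frequency representation. The following is a minimal, dependency-free sketch of that idea, not the authors' implementation. The Hann window, the frame and hop lengths, the absence of padding, and the function names (`hann_window`, `dft`, `stft_magnitude`) are all our assumptions, and the naive O(N²) DFT stands in for the FFT that a real pipeline would use.

```python
import cmath
import math


def hann_window(n: int) -> list[float]:
    """Periodic Hann window of length n (an assumed, commonly used choice)."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]


def dft(frame: list[float]) -> list[complex]:
    """Naive O(N^2) discrete Fourier transform, kept dependency-free for clarity."""
    n = len(frame)
    return [
        sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def stft_magnitude(signal: list[float], frame_length: int,
                   hop_length: int) -> list[list[float]]:
    """Return |STFT| as a list of frames, each with frame_length // 2 + 1 bins.

    Frames are taken without padding, so trailing samples that do not fill a
    whole frame are dropped.
    """
    window = hann_window(frame_length)
    frames = []
    for start in range(0, len(signal) - frame_length + 1, hop_length):
        segment = [s * w for s, w in zip(signal[start:start + frame_length], window)]
        spectrum = dft(segment)
        # For a real-valued input the upper half mirrors the lower half,
        # so only the non-negative frequency bins are kept.
        frames.append([abs(c) for c in spectrum[: frame_length // 2 + 1]])
    return frames
```

For example, a 1 kHz sine sampled at 8 kHz and analysed with 64-sample frames places its peak in bin 8 of every frame, since each bin spans 8000 / 64 = 125 Hz.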

2.1.2. ZCR (Zero-Crossing Rate)
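
The zero-crossing rate measures how often the waveform changes sign within a frame; noisy or percussive sounds tend to score higher than sustained tonal ones. The sketch below is a minimal illustration under stated assumptions: the rate is normalised by the number of adjacent sample pairs (libraries differ here, some divide by the frame length instead), a sample of exactly 0 counts as non-negative, and `zero_crossing_rate` and `framewise_zcr` are hypothetical names.

```python
def zero_crossing_rate(frame: list[float]) -> float:
    """Fraction of adjacent sample pairs whose signs differ.

    Assumption: a sample equal to 0 is treated as non-negative.
    """
    if len(frame) < 2:
        return 0.0
    crossings = sum(
        1 for prev, cur in zip(frame, frame[1:]) if (prev >= 0) != (cur >= 0)
    )
    return crossings / (len(frame) - 1)


def framewise_zcr(signal: list[float], frame_length: int,
                  hop_length: int) -> list[float]:
    """ZCR of each full frame, using the same framing as a padless STFT."""
    return [
        zero_crossing_rate(signal[start:start + frame_length])
        for start in range(0, len(signal) - frame_length + 1, hop_length)
    ]
```

Computing the ZCR with the same frame and hop lengths as the STFT keeps the time-domain and frequency-domain features aligned frame by frame.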

2.1.3. MFCCs (Mel-Frequency Cepstral Coefficients)
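
MFCCs summarise the spectral envelope of a frame: the power spectrum is pooled by triangular filters spaced evenly on the mel scale, the filter energies are log-compressed, and a DCT decorrelates them, keeping only the first few coefficients. The sketch below follows that textbook pipeline for a single frame's power spectrum. The HTK-style mel formula, 26 filters, 13 coefficients, the orthonormal DCT-II, the 1e-10 floor before the logarithm, and all function names are our assumptions rather than the settings used in this work.

```python
import math


def hz_to_mel(f: float) -> float:
    """HTK-style mel scale (assumed); other variants such as Slaney's exist."""
    return 2595.0 * math.log10(1.0 + f / 700.0)


def mel_to_hz(m: float) -> float:
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_filterbank(n_mels: int, n_fft: int, sample_rate: int) -> list[list[float]]:
    """Triangular filters of shape n_mels x (n_fft // 2 + 1), evenly spaced in mels."""
    n_bins = n_fft // 2 + 1
    mel_max = hz_to_mel(sample_rate / 2)
    # n_mels + 2 edge points: filter i rises from edges[i] to a peak at
    # edges[i + 1] and falls back to zero at edges[i + 2].
    edges = [mel_to_hz(mel_max * i / (n_mels + 1)) for i in range(n_mels + 2)]
    bin_freqs = [k * sample_rate / n_fft for k in range(n_bins)]
    bank = []
    for i in range(n_mels):
        lo, mid, hi = edges[i], edges[i + 1], edges[i + 2]
        row = []
        for f in bin_freqs:
            if lo < f <= mid:
                row.append((f - lo) / (mid - lo))
            elif mid < f < hi:
                row.append((hi - f) / (hi - mid))
            else:
                row.append(0.0)
        bank.append(row)
    return bank


def dct_ii(x: list[float]) -> list[float]:
    """Orthonormal type-II discrete cosine transform."""
    n = len(x)
    out = []
    for k in range(n):
        s = sum(x[i] * math.cos(math.pi * k * (2 * i + 1) / (2 * n)) for i in range(n))
        out.append(s * (math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)))
    return out


def mfcc_from_power(power: list[float], sample_rate: int, n_fft: int,
                    n_mels: int = 26, n_mfcc: int = 13) -> list[float]:
    """MFCCs for one frame, given its power spectrum of n_fft // 2 + 1 bins."""
    bank = mel_filterbank(n_mels, n_fft, sample_rate)
    energies = [sum(w * p for w, p in zip(row, power)) for row in bank]
    # The small floor keeps the log finite when a filter captures no energy.
    log_energies = [math.log(e + 1e-10) for e in energies]
    return dct_ii(log_energies)[:n_mfcc]
```

With a small `n_fft`, the narrowest low-frequency filters may cover no FFT bin at all; the floor before the logarithm keeps their contribution finite instead of raising an error.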

2.1.4. Spectral Features

- Spectral Centroid

- Spectral Roll-off
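
For a magnitude spectrum |X(k)| with bin frequencies f_k, the spectral centroid is the magnitude-weighted mean frequency, Σ f_k |X(k)| / Σ |X(k)|, often read as the "brightness" of a sound, and the spectral roll-off is the lowest frequency below which a chosen fraction of the total magnitude lies. The sketch below is a minimal illustration that assumes the roll-off is computed on magnitudes (some definitions use energy, i.e. squared magnitudes) with a default fraction of 0.85, a common but not universal choice; the function names are hypothetical.

```python
def spectral_centroid(magnitudes: list[float], freqs: list[float]) -> float:
    """Magnitude-weighted mean frequency of one frame; 0.0 for a silent frame."""
    total = sum(magnitudes)
    if total == 0:
        return 0.0
    return sum(f * m for f, m in zip(freqs, magnitudes)) / total


def spectral_rolloff(magnitudes: list[float], freqs: list[float],
                     roll_percent: float = 0.85) -> float:
    """Lowest bin frequency at which the cumulative magnitude reaches
    roll_percent of the frame's total magnitude (assumed magnitude-based)."""
    threshold = roll_percent * sum(magnitudes)
    cumulative = 0.0
    for f, m in zip(freqs, magnitudes):
        cumulative += m
        if cumulative >= threshold:
            return f
    return freqs[-1] if freqs else 0.0
```

Both functions operate on one frame at a time, so applying them to every frame of an STFT magnitude spectrogram gives a per-frame feature track.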

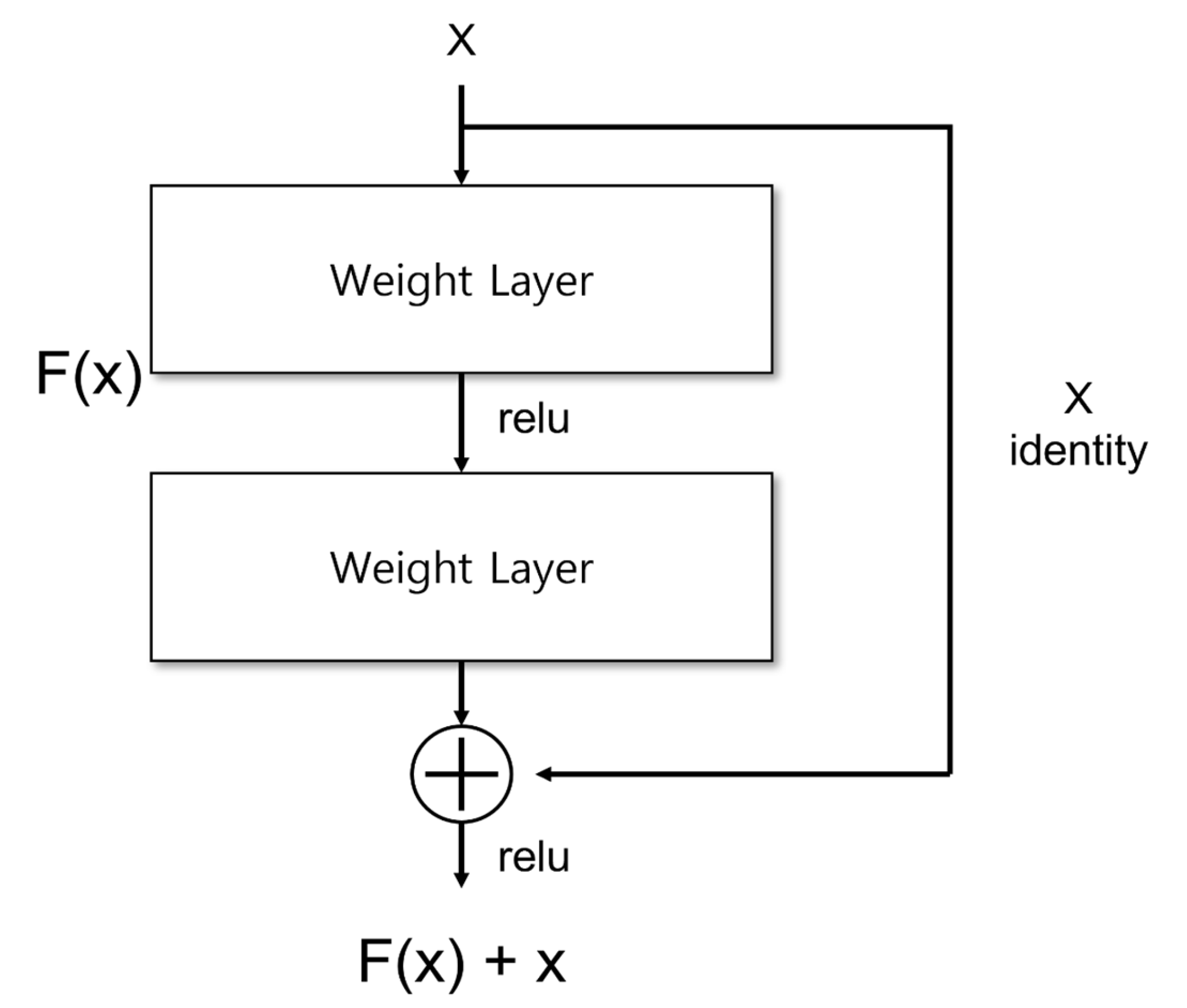

2.2. ResNet34
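
ResNet34 is built from residual blocks in which a stack of layers learns a residual mapping F and a shortcut adds the block's input x back to its output. Following He et al.'s original design, the "34" counts weighted layers: a 7×7 stem convolution, four stages of basic blocks (3, 4, 6 and 3 blocks, each with two 3×3 convolutions), and one fully connected layer; shortcut projections are conventionally not counted. As a worked check:

```latex
\begin{aligned}
\mathbf{y} &= \mathcal{F}(\mathbf{x}, \{W_i\}) + \mathbf{x} \\
\text{depth} &= \underbrace{1}_{7\times 7\ \text{conv}}
  + 2\,(3 + 4 + 6 + 3)
  + \underbrace{1}_{\text{FC}}
  = 1 + 32 + 1 = 34
\end{aligned}
```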

3. Related Works

3.1. Automatic Metadata Generation System

3.2. Video Metadata Tagging

3.3. Relevance between Audio and Movie

3.4. Emotional Classification of Music

4. Research Method

4.1. Extracting Video Data

4.2. Data Preprocessing

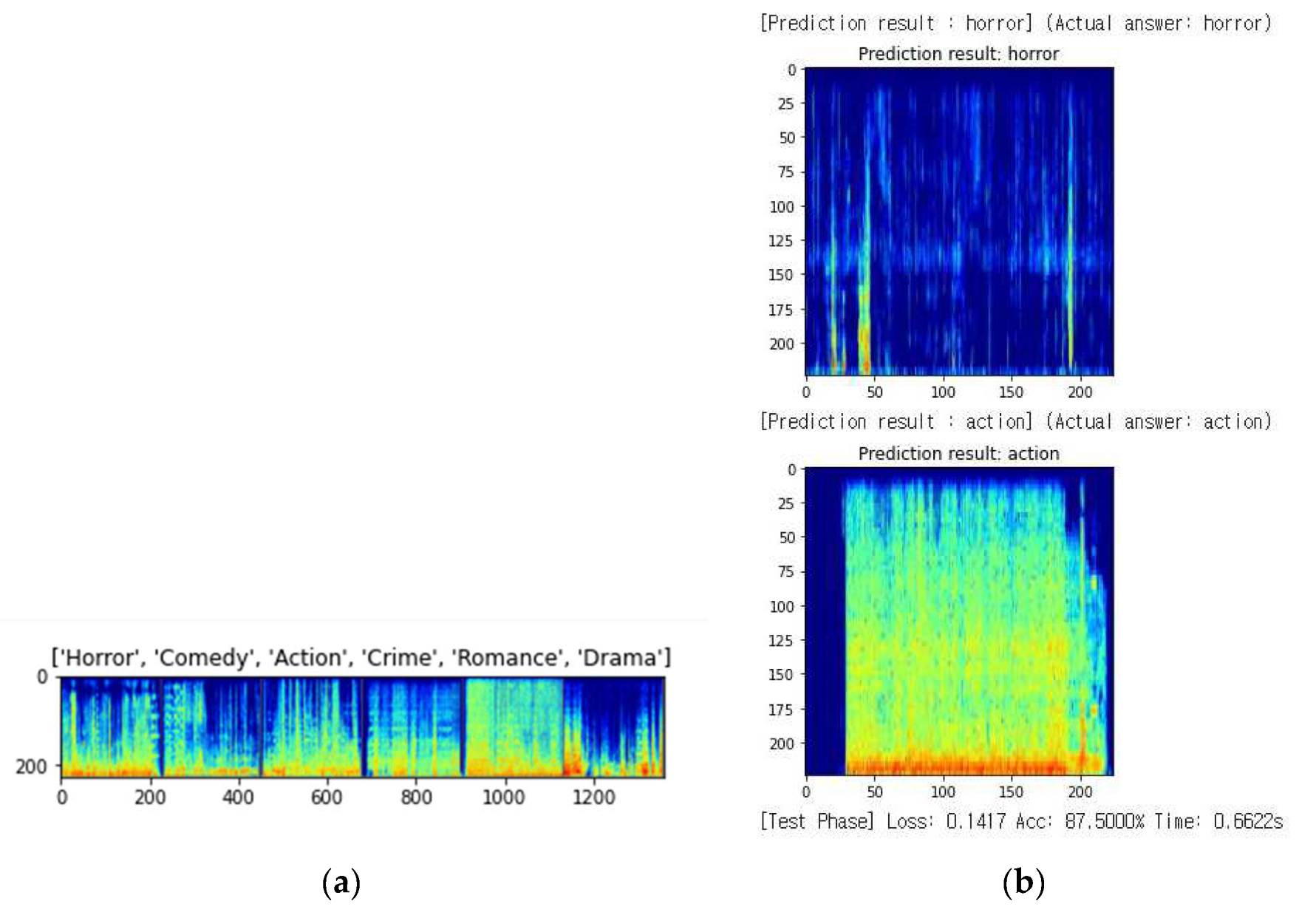

4.3. Genre Analysis

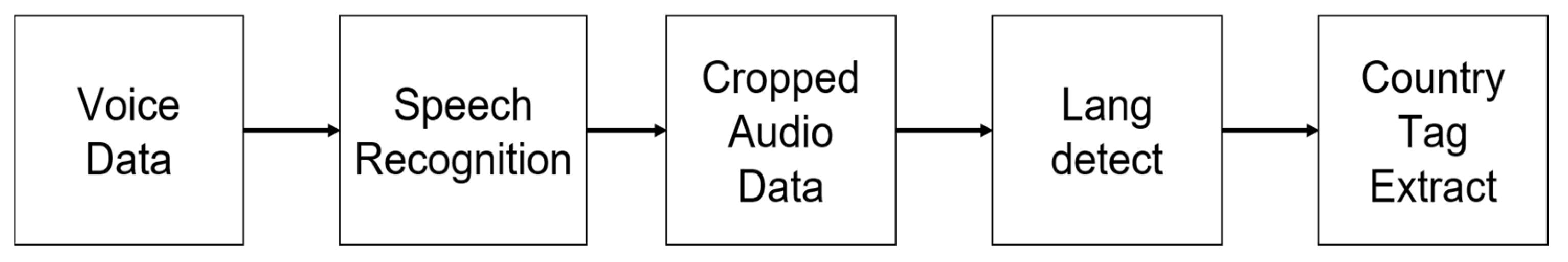

4.4. Country of Production Analysis

4.5. Background Sound Analysis

5. Results

5.1. Results of Genre Analysis

5.2. Results of Country of Production Analysis

5.3. Results of Genre Prediction with Musical Features and 10 Tags

5.4. Results of Automatic Tag Generation System

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Company | Benefit from Recommendation System |
|---|---|
| Netflix | Two-thirds of the movies users watch are recommended |
| Google News | Recommendations generate 38% more click-throughs |
| Amazon | 35% of sales come from recommendations |
| ChoiceStream | 28% of people would buy more music if they found what they liked |

| Model | Accuracy (Initial Epochs) | Max Accuracy | Learning Time |
|---|---|---|---|
| ResNet34 | 28.0822% | 38.3562% | 1311.7810 s |
| VGG-19 | 21.9178% | 30.1370% | 4423.7360 s |
| MobileNet | 22.6027% | 32.8767% | 990.3590 s |

| No. | Title | Country |
|---|---|---|
| 1 | Super Girl | Anglosphere |
| 2 | Speed | Anglosphere |
| 3 | Star Trek 3 | Anglosphere |
| 4 | The Shining | Anglosphere |
| 5 | Tomboy | Anglosphere |
| 6 | Treasure Island | Anglosphere |
| 7 | Urban Cowboy | Anglosphere |
| 8 | Wolf | Anglosphere |

| No. | Title | Tag Type | Genre | Movie Tag | Country | Result |
|---|---|---|---|---|---|---|
| 1 | Assassins | Real Tag | Action, Crime, Thriller | violence, romantic, suspenseful, sadist | US | PM |
| | | Proposed Tag | Crime | violence, romantic | Anglosphere | |
| 2 | Beautiful Girls | Real Tag | Comedy, Drama, Romance | violence, romantic, humor, atmospheric | US | PM |
| | | Proposed Tag | Comedy | romantic | Anglosphere | |
| 3 | Blood sport 3 | Real Tag | Action, Sport | violence | US | PM |
| | | Proposed Tag | Action | violence, comedy | Anglosphere | |
| 4 | Bottle Rocket | Real Tag | Comedy, Crime, Drama | romantic, humor | US | PM |
| | | Proposed Tag | Comedy | romantic, comedy | Anglosphere | |
| 5 | Braveheart | Real Tag | Biography, Drama, History | violence, romantic, action, dramatic, inspiring | US | PM |
| | | Proposed Tag | Drama | violence, suspenseful, atmospheric | Anglosphere | |
| 6 | Desperado | Real Tag | Action, Crime, Thriller | violence, romantic, comedy, humor, action, mystery | US | PM |
| | | Proposed Tag | Action | violence | Anglosphere | |
| 7 | Drive | Real Tag | Action, Adventure, Comedy | violence, suspenseful | US | PM |
| | | Proposed Tag | Action | violence, comedy | Anglosphere | |
| 8 | Escape from L.A. | Real Tag | Action, Adventure, Sci-Fi | violence, humor, mystery | US | PM |
| | | Proposed Tag | Action | violence, comedy | Anglosphere | |
| 9 | Executive Decision | Real Tag | Action, Adventure, Thriller | violence, suspenseful, mystery | US | PM |
| | | Proposed Tag | Action | violence | Anglosphere | |
| 10 | House Arrest | Real Tag | Comedy, Family | romantic | US | AM |
| | | Proposed Tag | Comedy | romantic | Anglosphere | |
| 11 | In the Mouth of Madness | Real Tag | Drama, Fantasy, Horror | violence, comedy, suspenseful, fantasy | US | PM |
| | | Proposed Tag | Drama | violence | Anglosphere | |
| 12 | Kids | Real Tag | Drama | violence | US | PM |
| | | Proposed Tag | Drama | violence, suspenseful | Anglosphere | |
| 13 | Leaving Las Vegas | Real Tag | Drama, Romance | romantic, dramatic, dark, atmospheric, depressing | US | PM |
| | | Proposed Tag | Drama | romantic | Anglosphere | |
| 14 | Mallrats | Real Tag | Comedy, Romance | romantic, comedy, humor, comic | US | PM |
| | | Proposed Tag | Comedy | violence, comedy | Anglosphere | |
| 15 | Tommy Boy | Real Tag | Adventure, Comedy | comedy, humor | US | PM |
| | | Proposed Tag | Comedy | comedy | Anglosphere | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Park, H.; Yong, S.; You, Y.; Lee, S.; Moon, I.-Y. Automatic Movie Tag Generation System for Improving the Recommendation System. Appl. Sci. 2022, 12, 10777. https://doi.org/10.3390/app122110777
