Default Detection Rate-Dependent Software Reliability Model with Imperfect Debugging

Abstract

1. Introduction

2. Imperfect Debugging and FDR

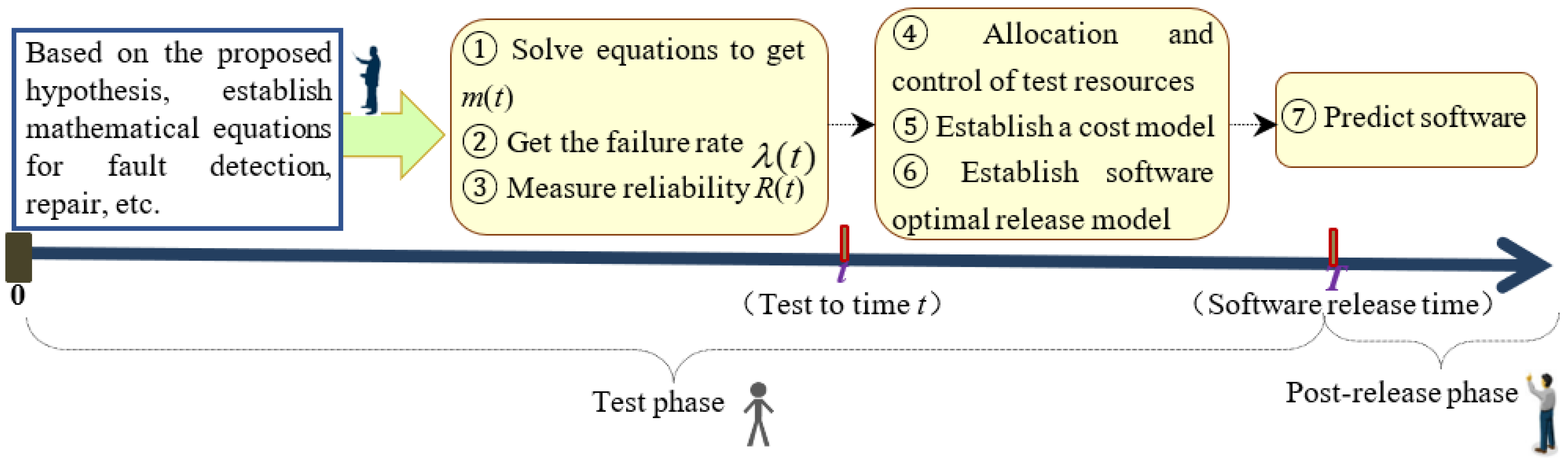

3. Fault Detection Rate-Dependent SRGM with Imperfect Debugging

3.1. Basic Assumptions

- The fault detection and repair process follows a nonhomogeneous Poisson process (NHPP);

- Software failures are caused by the faults remaining in the software;

- In the time interval (t, t + Δt], at most one fault occurs, and the number of faults detected is proportional to the number of remaining faults, with proportionality coefficient p(t);

- In the time interval (t, t + Δt], the number of faults repaired is proportional to the number of faults detected;

- New faults are introduced during fault repair. The number of introduced faults is proportional to the cumulative number of repaired faults, with proportionality coefficient R(t).
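The assumptions above fix the model only once p(t), R(t), and the repair proportion are specified; the Type I and Type II equations themselves are not reproduced in this extract. As a minimal sketch, assume constant coefficients p(t) = b and R(t) = α, read "remaining faults" as the total fault content a(t) minus the detected faults m_d(t), and let a hypothetical ratio q tie repaired faults to detected ones, m_r(t) = q·m_d(t). Then a(t) = a + α·m_r(t), and dm_d/dt = b[a − (1 − αq)·m_d(t)], whose solution with m_d(0) = 0 is m_d(t) = a/(1 − αq)·(1 − exp(−(1 − αq)bt)). The Python below integrates this ODE with fourth-order Runge-Kutta and compares the result with the closed form; `q`, `detection_rate`, and `simulate_detection` are illustrative names, not the authors' notation.

```python
import math


def detection_rate(m_d: float, a: float, b: float, alpha: float, q: float) -> float:
    """dm_d/dt under the simplified assumptions (hypothetical constant b, alpha, q)."""
    m_r = q * m_d                 # repaired faults, assumed proportional to detected faults
    total = a + alpha * m_r       # initial faults plus faults introduced during repair
    return b * (total - m_d)      # detection proportional to remaining (undetected) faults


def simulate_detection(a: float, b: float, alpha: float, q: float,
                       t_end: float, steps: int = 1000) -> float:
    """Integrate m_d(t) from m_d(0) = 0 to t_end with classical fourth-order Runge-Kutta."""
    h = t_end / steps
    m = 0.0
    for _ in range(steps):
        k1 = detection_rate(m, a, b, alpha, q)
        k2 = detection_rate(m + 0.5 * h * k1, a, b, alpha, q)
        k3 = detection_rate(m + 0.5 * h * k2, a, b, alpha, q)
        k4 = detection_rate(m + h * k3, a, b, alpha, q)
        m += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
    return m


def closed_form(a: float, b: float, alpha: float, q: float, t: float) -> float:
    """Analytic solution m_d(t) = a / (1 - alpha*q) * (1 - exp(-(1 - alpha*q) * b * t))."""
    k = 1.0 - alpha * q
    return a / k * (1.0 - math.exp(-k * b * t))


if __name__ == "__main__":
    a, b, alpha, q = 100.0, 0.1, 0.05, 0.9   # illustrative values, not fitted to DS1-DS3
    for t in (5.0, 20.0, 50.0):
        print(t, simulate_detection(a, b, alpha, q, t), closed_form(a, b, alpha, q, t))
```

The sketch stays bounded only when αq < 1. With these illustrative values the detected-fault curve approaches a/(1 − αq) ≈ 104.7 rather than a = 100, which is the qualitative effect fault introduction has on the expected total. A time-varying p(t) or R(t) would require `detection_rate` to take t as an argument.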

3.2. Imperfect Debugging Type I: Unified Fault Detection and Repair Framework Model

3.3. Imperfect Debugging Type II: Imperfect Debugging Framework Model Considering Fault Detection, Repair, and Introduction under Fault Detection Rate

4. FDR-Related Reliability Model

5. Numerical Example

5.1. Model and Failure Data Set

5.2. Comparative Standard
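This extract does not state the comparison criteria behind the result tables. The sketch below computes them from definitions common in the NHPP literature, which is an assumption: MSE as the mean squared prediction error, Variation as the sample standard deviation of the prediction errors m(t_i) − y_i, RMS-PE as the square root of Bias² + Variation², BMMRE as the mean of |m(t_i) − y_i| / min(m(t_i), y_i), and R-square as the ratio of the regression sum of squares to the total sum of squares. The R-square choice is a guess motivated by the tabulated values above 1, which the usual 1 − SSE/SST cannot produce. Smaller values indicate a better fit for the other four criteria, and an R-square closer to 1 is better. The function `comparison_criteria` and its `num_params` parameter are hypothetical.

```python
import math
from typing import Dict, Sequence


def comparison_criteria(observed: Sequence[float], fitted: Sequence[float],
                        num_params: int = 0) -> Dict[str, float]:
    """Goodness-of-fit criteria for one model on one data set (assumed definitions).

    observed   -- cumulative number of faults y_i observed at t_1..t_n (all > 0)
    fitted     -- model estimates m(t_i) at the same time points (all > 0)
    num_params -- parameter count subtracted in the MSE denominator; 0 gives a plain mean
    """
    n = len(observed)
    if n != len(fitted) or n < 2 or n <= num_params:
        raise ValueError("need equal-length sequences with more points than parameters")
    if min(observed) <= 0 or min(fitted) <= 0:
        raise ValueError("BMMRE divides by min(m(t_i), y_i), so values must be positive")
    errors = [m - y for y, m in zip(observed, fitted)]        # prediction errors
    mse = sum(e * e for e in errors) / (n - num_params)
    y_bar = sum(observed) / n
    # Regression-to-total sum of squares ratio; unlike 1 - SSE/SST it can exceed 1.
    r_square = (sum((m - y_bar) ** 2 for m in fitted)
                / sum((y - y_bar) ** 2 for y in observed))
    bias = sum(errors) / n
    # Sample standard deviation of the prediction errors around their mean (the bias).
    variation = math.sqrt(sum((e - bias) ** 2 for e in errors) / (n - 1))
    rms_pe = math.sqrt(bias ** 2 + variation ** 2)
    bmmre = sum(abs(m - y) / min(m, y) for y, m in zip(observed, fitted)) / n
    return {"MSE": mse, "R-square": r_square, "Variation": variation,
            "RMS-PE": rms_pe, "BMMRE": bmmre}
```

Under these definitions RMS-PE can never be smaller than Variation, which matches every row of the result tables.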

5.3. Performance Verification

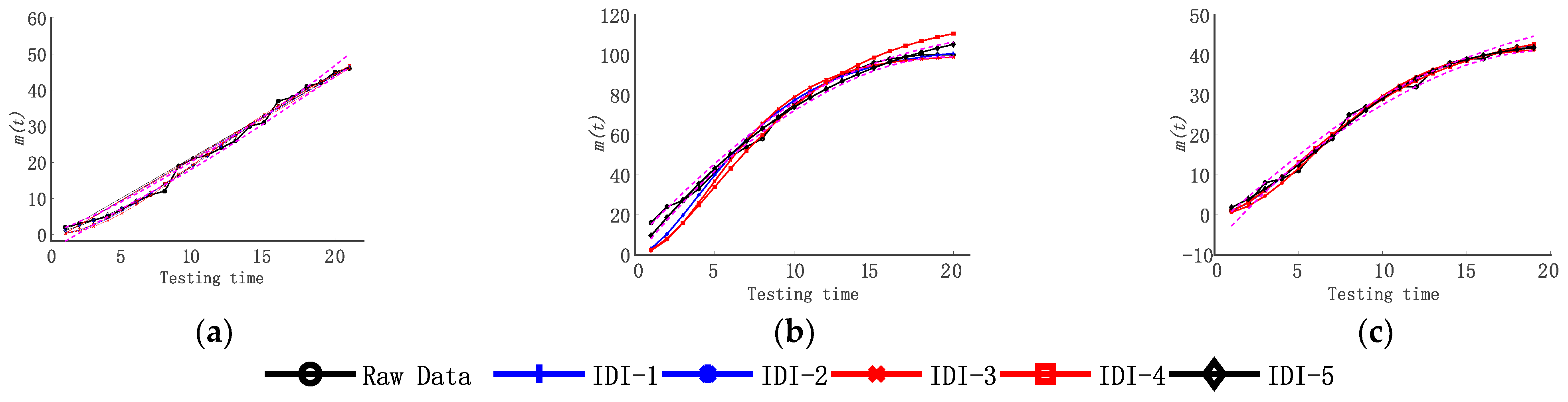

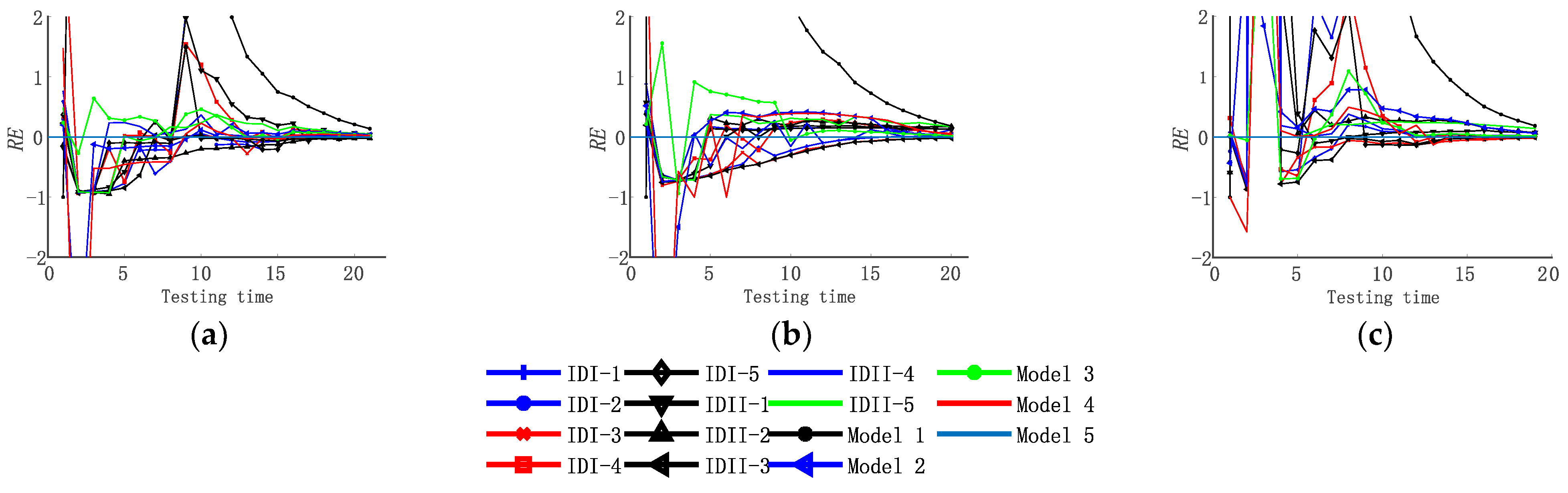

5.3.1. Performance Analysis of the FDR-Related Imperfect Debugging Model

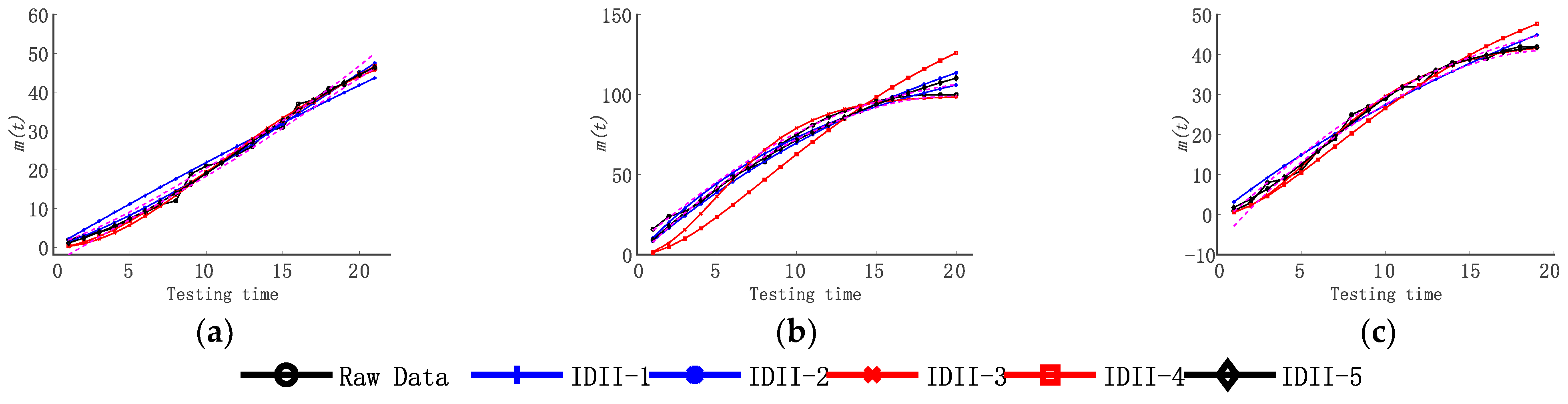

5.3.2. Performance Analysis of the Type II Imperfect Debugging Model

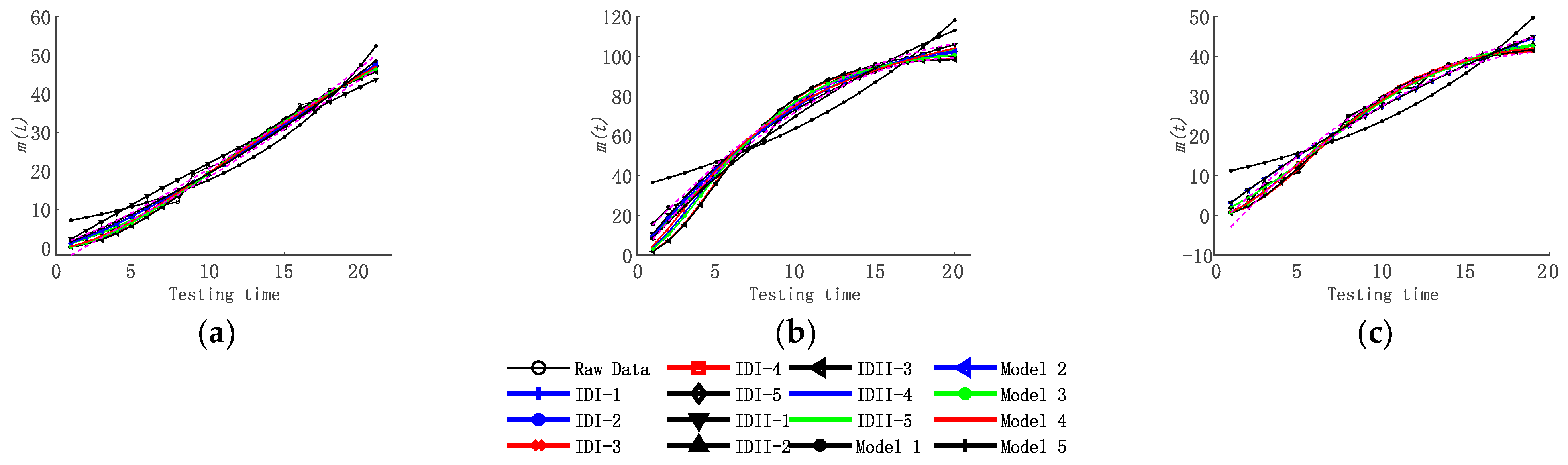

5.4. Performance Comparison between the Type II Imperfect Debugging Model and the Type I Imperfect Debugging Model

- On DS1, IDII_5 is optimal on three indicators (Variation, RMS-PE, BMMRE), suboptimal on one (MSE), and very close to the optimal value on the remaining one (R-square);

- On DS2, IDII_5 is optimal on one indicator (MSE) and suboptimal on the other four (R-square, Variation, RMS-PE, BMMRE), each of which differs from its optimal value only in the fifth or sixth decimal place;

- On DS3, IDII_5 is optimal on three indicators (MSE, Variation, RMS-PE); its R-square differs from the optimal value by only 0.01 to 0.02, and its BMMRE by 0.03 to 0.04.
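Whether IDII_5 is "optimal" on an indicator depends on the comparison pool and on what "optimal" means for R-square, neither of which is spelled out here. Assuming the pool is the five Type I models plus IDII_5, that lower is better for MSE, Variation, RMS-PE, and BMMRE, and that the best R-square is the one closest to 1, the following sketch recomputes the DS3 statement from the tabulated values. The helpers `score` and `gap_to_optimal` are illustrative names.

```python
from typing import Dict, Tuple

CRITERIA = ("MSE", "R-square", "Variation", "RMS-PE", "BMMRE")

# DS3 rows copied from the IDI and IDII result tables, in CRITERIA order.
DS3: Dict[str, Tuple[float, ...]] = {
    "IDI_1": (0.968407, 0.99874, 1.011346, 1.011442, 0.057902),
    "IDI_2": (0.934999, 0.980322, 0.998502, 1.000092, 0.095439),
    "IDI_3": (1.425435, 1.060463, 1.299476, 1.321645, 0.127577),
    "IDI_4": (0.9799, 0.995025, 1.017026, 1.017026, 0.057254),
    "IDI_5": (0.934999, 0.98032, 0.998502, 1.000092, 0.09544),
    "IDII_5": (0.934999, 0.980321, 0.998502, 1.000092, 0.09544),
}


def score(criterion: str, value: float) -> float:
    """Map a criterion value to a lower-is-better score (R-square: distance from 1)."""
    return abs(value - 1.0) if criterion == "R-square" else value


def gap_to_optimal(table: Dict[str, Tuple[float, ...]], model: str) -> Dict[str, float]:
    """For each criterion, how far `model` scores from the best model in `table`."""
    gaps = {}
    for i, criterion in enumerate(CRITERIA):
        best = min(score(criterion, row[i]) for row in table.values())
        gaps[criterion] = score(criterion, table[model][i]) - best
    return gaps


if __name__ == "__main__":
    for criterion, gap in gap_to_optimal(DS3, "IDII_5").items():
        print(f"{criterion}: {gap:.6f}")
```

Under these assumptions the gaps are zero for MSE, Variation, and RMS-PE, about 0.0184 for R-square (against IDI_1), and about 0.0382 for BMMRE (against IDI_4), which falls inside the ranges quoted for DS3.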

5.5. Comparison of the Type II Imperfect Debugging Model and Other Models

6. Conclusions and Future Research

Author Contributions

Funding

Conflicts of Interest

References

- Almering, V.; van Genuchten, M.; Cloudt, G.; Sonnemans, P.J. Using Software Reliability Growth Models in Practice. IEEE Softw. 2007, 24, 82–88.

- Yadav, S.S.; Kumar, A.; Johri, P.; Singh, J.N. Testing effort-dependent software reliability growth model using time lag functions under distributed environment. Syst. Assur. 2022, 85–102.

- Nagaraju, V.; Wandji, T.; Fiondella, L. Improved algorithm for non-homogeneous poisson process software reliability growth models incorporating testing-effort. Int. J. Perform. Eng. 2019, 15, 1265–1272.

- Pradhan, V.; Kumar, A.; Dhar, J. Modelling software reliability growth through generalized inflection S-shaped fault reduction factor and optimal release time. Proc. Inst. Mech. Eng. Part O J. Risk Reliab. 2022, 236, 18–36.

- Peng, R.; Ma, X.; Zhai, Q.; Gao, K. Software reliability growth model considering first-step and second-step fault dependency. J. Shanghai Jiaotong Univ. (Sci.) 2019, 24, 477–479.

- Kim, Y.S.; Song, K.Y.; Pham, H.; Chang, I.H. A software reliability model with dependent failure and optimal release time. Symmetry 2022, 14, 343.

- Munde, A. An empirical validation for predicting bugs and the release time of open source software using entropy measures—Software reliability growth models. Syst. Assur. 2022, 41–49.

- Haque, M.A.; Ahmad, N. An effective software reliability growth model. Saf. Reliab. 2021, 40, 209–220.

- Kapur, P.K.; Pham, H.; Anand, S.; Yadav, K. A Unified Approach for Developing Software Reliability Growth Models in the Presence of Imperfect Debugging and Error Generation. IEEE Trans. Reliab. 2011, 60, 331–340.

- Singh, O.; Kapur, R.; Singh, J. Considering the effect of learning with two types of imperfect debugging in software reliability growth modeling. Commun. Dependability Qual. Manag. 2010, 13, 29–39.

- Kumar, D.; Kapur, R.; Sehgal, V.K.; Jha, P.C. On the development of software reliability growth models with two types of imperfect debugging. Commun. Dependability Qual. Manag. 2007, 10, 105–122.

- Kapur, P.K.; Shatnawi, O.; Aggarwal, A.G.; Kumar, R. Unified Framework for Development Testing Effort Dependent Software Reliability Growth Models. WSEAS Trans. Syst. 2009, 8, 521–531.

- Goseva-Popstojanova, K.; Trivedi, K. Failure correlation in software reliability models. IEEE Trans. Reliab. 2000, 49, 37–48.

- Pham, H. System Software Reliability, 1st ed.; Springer: Berlin/Heidelberg, Germany, 2006.

- Huang, C.-Y.; Kuo, S.-Y.; Lyu, M.R. An Assessment of Testing-Effort Dependent Software Reliability Growth Models. IEEE Trans. Reliab. 2007, 56, 198–211.

- Ahmad, N.; Khan, M.; Rafi, L. A study of testing-effort dependent inflection S-shaped software reliability growth models with imperfect debugging. Int. J. Qual. Reliab. Manag. 2010, 27, 89–110.

- Zhang, C.; Yuan, Y.; Jiang, W.; Sun, Z.; Ding, Y.; Fan, M.; Li, W.; Wen, Y.; Song, W.; Liu, K. Software Reliability Model Related to Total Number of Faults Under Imperfect Debugging. Adv. Intell. Autom. Soft Comput. 2022, 80, 48–60.

- Aggarwal, A.G.; Gandhi, N.; Verma, V.; Tandon, A. Multi-Release software reliability growth assessment: An approach incorporating fault reduction factor and imperfect debugging. Int. J. Math. Oper. Res. 2019, 15, 446–463.

- Saraf, I.; Iqbal, J. Generalized multi-release modelling of software reliability growth models from the perspective of two types of imperfect debugging and change point. Qual. Reliab. Eng. Int. 2019, 35, 2358–2370.

- Saraf, I.; Iqbal, J. Generalized software fault detection and correction modeling framework through imperfect debugging, error generation and change point. Int. J. Inf. Technol. 2019, 11, 751–757.

- Huang, Y.S.; Chiu, K.C.; Chen, W.M. A software reliability growth model for imperfect debugging. J. Syst. Softw. 2022, 188, 111267.

- Chatterjee, S.; Saha, D.; Sharma, A.; Verma, Y. Reliability and optimal release time analysis for multi up-gradation software with imperfect debugging and varied testing coverage under the effect of random field environments. Ann. Oper. Res. 2022, 312, 65–85.

- Zhang, C.; Lv, W.; Qiu, Z.; Gao, T.; Jiang, W.; Meng, F. Testing coverage software reliability model under imperfect debugging. J. Hunan Univ. Nat. Sci. 2021, 48, 26–35.

- Huang, C.-Y.; Huang, W.-C. Software reliability analysis and measurement using finite and infinite server queueing models. IEEE Trans. Reliab. 2008, 57, 192–203.

- Xie, M.; Yang, B. A study of the effect of imperfect debugging on software development cost. IEEE Trans. Softw. Eng. 2003, 29, 471–473.

- Shyur, H.J. A stochastic software reliability model with imperfect-debugging and change-point. J. Syst. Softw. 2003, 66, 135–141.

- Wu, Y.P.; Hu, Q.P.; Xie, M.; Ng, S.H. Modeling and Analysis of Software Fault Detection and Correction Process by Considering Time Dependency. IEEE Trans. Reliab. 2007, 56, 629–642.

- Xie, M.; Hu, Q.P.; Wu, Y.P.; Ng, S.H. A study of the modeling and analysis of software fault-detection and fault-correction processes. Qual. Reliab. Eng. Int. 2007, 23, 459–470.

- Goel, A.L.; Okumoto, K. Time-dependent error-detection rate model for software reliability and other performance measures. IEEE Trans. Reliab. 1979, 28, 206–211.

- Huang, C.Y.; Lyu, M.R.; Kuo, S.Y. A Unified Scheme of Some Nonhomogenous Poisson Process Models for Software Reliability Estimation. IEEE Trans. Softw. Eng. 2003, 29, 261–269.

- Hsu, C.J.; Huang, C.Y.; Chang, J.R. Enhancing software reliability modeling and prediction through the introduction of time-variable fault reduction factor. Appl. Math. Model. 2011, 35, 506–521.

- Pham, H. Software reliability and cost models: Perspectives, comparison, and practice. Eur. J. Oper. Res. 2003, 149, 475–489.

- Chiu, K.C.; Huang, Y.S.; Lee, T.Z. A study of software reliability growth from the perspective of learning effects. Reliab. Eng. Syst. Saf. 2008, 93, 1410–1421.

- Pham, H.; Nordmann, L.; Zhang, X. A general imperfect-software-debugging model with S-shaped fault-detection rate. IEEE Trans. Reliab. 1999, 48, 169–175.

- Pham, H.; Zhang, X.M. NHPP software reliability and cost models with testing coverage. Eur. J. Oper. Res. 2003, 145, 443–454.

- Pham, H. Software Reliability; Springer: Singapore, 2000.

- Wood, A. Predicting software reliability. Computer 1996, 29, 69–77.

- Yamada, S.; Tokuno, K.; Osaki, S. Imperfect debugging models with fault introduction rate for software reliability assessment. Int. J. Syst. Sci. 1992, 23, 2241–2252.

- Ohba, M.; Chou, X.M. Does imperfect debugging affect software reliability growth? In Proceedings of the 11th International Conference on Software Engineering, Pittsburgh, PA, USA, 15–18 May 1989; pp. 237–244.

| Model | Cumulative Fault Detection Quantity m(t) |
|---|---|
| M-1: Y-Exp [38] | …; b(t) = b |
| M-2: Y-Lin [38] | …; b(t) = b |
| M-3: Pham Zhang IFD [14] | …; b(t) = b |
| M-4: P-Z [35] | …; b(t) = b |
| M-5: Ohba-Chou [39] | …; b(t) = b |
| IDI Frame Series Model: IDI_1 to IDI_5 | |
| IDII Frame Series Model: IDII_1 to IDII_5 | |

| Model | DS | MSE | R-Square | Variation | RMS-PE | BMMRE |
|---|---|---|---|---|---|---|
| IDI_1 | DS1 | 1.511895 | 1.045318 | 1.335027 | 1.357991 | 0.382202 |
| IDI_2 | DS1 | 1.194023 | 1.001295 | 1.121184 | 1.121655 | 0.092767 |
| IDI_3 | DS1 | 1.860862 | 1.071312 | 1.546524 | 1.590827 | 0.524525 |
| IDI_4 | DS1 | 1.431813 | 1.035174 | 1.274901 | 1.289997 | 0.329218 |
| IDI_5 | DS1 | 1.194023 | 1.001209 | 1.121203 | 1.12168 | 0.092741 |
| IDI_1 | DS2 | 25.64698 | 1.211092 | 5.779541 | 5.952458 | 0.325066 |
| IDI_2 | DS2 | 9.980156 | 1.047866 | 3.264629 | 3.272011 | 0.078299 |
| IDI_3 | DS2 | 39.52952 | 1.301613 | 7.372471 | 7.641251 | 0.550163 |
| IDI_4 | DS2 | 51.54921 | 1.528432 | 7.635519 | 7.718815 | 0.491672 |
| IDI_5 | DS2 | 9.980156 | 1.047869 | 3.264629 | 3.272011 | 0.078299 |
| IDI_1 | DS3 | 0.968407 | 0.99874 | 1.011346 | 1.011442 | 0.057902 |
| IDI_2 | DS3 | 0.934999 | 0.980322 | 0.998502 | 1.000092 | 0.095439 |
| IDI_3 | DS3 | 1.425435 | 1.060463 | 1.299476 | 1.321645 | 0.127577 |
| IDI_4 | DS3 | 0.9799 | 0.995025 | 1.017026 | 1.017026 | 0.057254 |
| IDI_5 | DS3 | 0.934999 | 0.98032 | 0.998502 | 1.000092 | 0.09544 |

| Model | DS | MSE | R-Square | Variation | RMS-PE | BMMRE |
|---|---|---|---|---|---|---|
| IDII_1 | DS1 | 8.117125 | 0.739869 | 3.414002 | 3.55666 | 0.232516 |
| IDII_2 | DS1 | 1.654242 | 0.955286 | 1.329353 | 1.332958 | 0.095028 |
| IDII_3 | DS1 | 1.975284 | 1.076242 | 1.60773 | 1.657388 | 0.544859 |
| IDII_4 | DS1 | 1.431813 | 1.035186 | 1.274885 | 1.289977 | 0.329222 |
| IDII_5 | DS1 | 1.195144 | 0.998241 | 1.120726 | 1.120886 | 0.091808 |
| IDII_1 | DS2 | 12.34467 | 0.996335 | 3.607085 | 3.607817 | 0.078214 |
| IDII_2 | DS2 | 31.55117 | 1.243937 | 6.084704 | 6.183099 | 0.118332 |
| IDII_3 | DS2 | 40.92279 | 1.313284 | 7.530967 | 7.812453 | 0.568511 |
| IDII_4 | DS2 | 211.9678 | 2.033129 | 17.18076 | 17.83243 | 1.040678 |
| IDII_5 | DS2 | 9.980156 | 1.047863 | 3.264631 | 3.272013 | 0.078299 |
| IDII_1 | DS3 | 4.558851 | 0.860654 | 2.315218 | 2.352301 | 0.261431 |
| IDII_2 | DS3 | 0.934999 | 0.980322 | 0.998502 | 1.000092 | 0.09544 |
| IDII_3 | DS3 | 1.186456 | 1.044917 | 1.170387 | 1.186124 | 0.11259 |
| IDII_4 | DS3 | 7.295519 | 1.249245 | 2.868974 | 2.898006 | 0.16295 |
| IDII_5 | DS3 | 0.934999 | 0.980321 | 0.998502 | 1.000092 | 0.09544 |

| Model | DS | MSE | R-Square | Variation | RMS-PE | BMMRE |
|---|---|---|---|---|---|---|
| M-1 | DS1 | 12.99494 | 0.838505 | 3.78606 | 3.81486 | 0.431939 |
| M-2 | DS1 | 1.642027 | 0.964688 | 1.32519 | 1.329018 | 0.103872 |
| M-3 | DS1 | 1.480686 | 1.042056 | 1.313155 | 1.333504 | 0.365081 |
| M-4 | DS1 | 1.417172 | 1.022353 | 1.245078 | 1.252981 | 0.276839 |
| M-5 | DS1 | 2.516028 | 0.938045 | 1.679348 | 1.696125 | 0.116178 |
| M-1 | DS2 | 124.2525 | 0.743409 | 11.48903 | 11.50564 | 0.22644 |
| M-2 | DS2 | 11.61711 | 1.001203 | 3.50065 | 3.501827 | 0.077979 |
| M-3 | DS2 | 25.25638 | 1.211331 | 5.739474 | 5.912213 | 0.319313 |
| M-4 | DS2 | 18.92156 | 1.120702 | 4.707464 | 4.782304 | 0.195724 |
| M-5 | DS2 | 11.61711 | 1.001135 | 3.500646 | 3.501821 | 0.077979 |
| M-1 | DS3 | 29.02455 | 0.720307 | 5.592244 | 5.610177 | 0.894779 |
| M-2 | DS3 | 4.474233 | 0.858111 | 2.302146 | 2.341391 | 0.260841 |
| M-3 | DS3 | 0.975441 | 1.001077 | 1.015523 | 1.01578 | 0.065226 |
| M-4 | DS3 | 1.305707 | 0.969718 | 1.183524 | 1.18652 | 0.068236 |
| M-5 | DS3 | 4.474233 | 0.858111 | 2.302155 | 2.341403 | 0.260842 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, C.; Lv, W.-G.; Sheng, S.; Wang, J.-Y.; Su, J.-Y.; Meng, F.-C. Default Detection Rate-Dependent Software Reliability Model with Imperfect Debugging. Appl. Sci. 2022, 12, 10736. https://doi.org/10.3390/app122110736
