Abstract

Intelligent vehicle-following control is a major challenge in autonomous driving. On vehicle-dense urban roads, frequent starting and stopping is one of the main causes of front-end collisions. This paper therefore proposes a subsection proximal policy optimization method (Subsection-PPO). It divides the vehicle-following process into a start–stop stage and a steady stage and controls each stage with its own actor network. Built on the proximal policy optimization algorithm, the method improves safety in vehicle-following control. To improve training efficiency and reduce the variance of the estimated objective, weighted importance sampling is used instead of ordinary importance sampling. Finally, the advantages and robustness of the method in vehicle-following control are verified with the TORCS simulation engine. The results show that, compared with other deep reinforcement learning algorithms, Subsection-PPO achieves better training efficiency and higher safety than PPO and DDPG in vehicle-following control.

1. Introduction

Nowadays, with the rapid development of autonomous driving technology, an increasing number of companies and universities are investing in its research and development, and the way people travel is likely to change greatly. However, current autonomous driving technology is not yet mature, and many areas still need development. On vehicle-dense urban roads in particular, traffic congestion is common, and frequent starting and stopping together with unstable vehicle speeds lead to a large number of front-end collisions. A safe vehicle-following control method is therefore of great significance for driving safety and for alleviating traffic congestion.

Vehicle following is the most basic microscopic driving behavior. It mainly concerns the interaction between the front and rear vehicles when vehicles travel as a platoon in a single lane [1], and it involves both longitudinal and lateral control. Vehicle-following control methods include model predictive control (MPC) [2], Proportional Integral Derivative (PID) control [3], fuzzy control [4], and methods based on deep neural networks [5,6]. Deep-network-based learning control has been studied for complex road scenes. These methods fall roughly into two categories: supervised learning methods that train deep networks on expert data [7], and deep reinforcement learning methods that continually explore and search for high-reward policies while interacting with the environment [8]. The former trains the controller on a large amount of collected expert driving data, while the latter trains the control policy through continual exploration and trial and error in the environment.

Preparing expert data for training requires considerable human effort. Moreover, unsafe driving samples are difficult to assess and screen out manually, which can create serious safety risks. Deep reinforcement learning, by contrast, can obtain optimized driving policies through self-learning by continually exploring the environment, so its exploration ability determines how well it learns from the environment.

On densely packed urban roads, most vehicle-following accidents occur in the start–stop phase, while the accident rate is lower in the slow-moving phase. This paper therefore divides the entire vehicle-following process into two stages, start–stop and steady driving, and modifies PPO so that, instead of a single policy network, it trains two policy networks, one for each vehicle-following stage. Furthermore, PPO uses importance sampling to estimate its objective, an expectation of the advantage function under the new policy, from data collected by the old policy. We found that this estimate can have a large variance, which is detrimental to the efficiency of policy optimization. Hence this paper uses weighted importance sampling instead of ordinary importance sampling, which effectively reduces the variance of the estimate and improves the training efficiency of the policy networks. Our contributions can be summarized as follows:

- According to the characteristics of vehicle following on dense urban roads, the vehicle-following process is divided into two stages: start–stop and steady driving. To improve the PPO algorithm, a subsection policy optimization algorithm (Subsection-PPO) is proposed that trains a separate policy network for each following stage.

- The weighted importance sampling method is used instead of the importance sampling method when estimating the objective function.

- To evaluate the effectiveness of the Subsection-PPO method in vehicle-following control, this paper uses the TORCS (The Open Racing Car Simulator) simulation environment to simulate urban traffic flow. The experimental results show that the method performs well in vehicle-following scenarios.

2. Related Work

Vehicle following has long been an important research direction in traffic flow analysis and autonomous driving. Research on vehicle-following models dates back to the middle of the last century, and many advances have been made since then. The first vehicle-following model was proposed by Pipes [9] and was widely used to describe traffic flow. Later, different types of vehicle-following models were proposed from different perspectives and in different fields. Gazis et al. [10] proposed the GM model, which is based on the driver's stimulus response while taking the safe distance into account; most subsequent stimulus-response vehicle-following models build on it. The earliest safe-distance model was proposed by Kometani and Sasaki, and the Gipps model [11] is the best-known model of this type. Based on the Gipps model, Ayres et al. [12] proposed a safe-distance model based on headway. Jamson et al. [13] proposed a driver-state vehicle-distance model based on kinematic analysis, as well as a safe-distance model based on differences in driver response delay in emergencies. Treiber, Helbing et al. proposed the classic IDM model [14], which describes the transition of vehicle-following behavior from free flow to congestion with a single, unified structure. With the development of neural networks, vehicle-following models that combine fuzzy logic with neural networks have also been built. Mathew et al. [15] proposed a neural-network vehicle-following model whose prediction accuracy proved higher than that of the Gipps model in a direct comparison. The sequence-to-sequence vehicle-following model proposed by Sharma et al. [16] captures memory effects and response delay, which further expands the spatial expectation and improves the accuracy of platoon simulation and the stability of traffic flow. Li et al. [17] proposed a novel platoon formation and optimization model combining graph theory and safety potential field (G-SPF) theory that can form a collision-free platoon in a short time. Zhu and Zhang [18] proposed an improved forward-looking vehicle-following model that uses a mean expected velocity field to describe the flow of autonomous vehicles. The model has three key parameters, an adjustable sensitivity, an intensity factor, and the size of the mean expected velocity field, which strongly affect the stability and congestion state of autonomous vehicle flow.

Vehicle-following decision-making aims to produce decisions that keep vehicle following safe and reasonable, based on the state information of the following vehicle or platoon and a given decision-making method. In vehicle-following decision research, Li et al. [19] proposed an adaptive hierarchical control structure in which the upper control layer derives a sliding-mode control law for the required acceleration from inter-vehicle state information. In the lower control layer, switching logic with hysteresis boundaries ensures ride comfort, and the desired torque is computed in real time from an inverse dynamics model to track the desired acceleration planned by the upper layer. Zhang et al. [20] proposed a behavior estimation method based on contextual traffic information to identify and predict lane-change intentions, and optimized the acceleration sequence by taking the lane-change intentions of other vehicles into account. These methods are based on traditional control and are not robust enough to adapt to most scenarios, so many teams have turned their attention to deep reinforcement learning. The intelligent vehicle-following process can be abstracted as a state transition process with the Markov property [21], so deep reinforcement learning can be used to realize vehicle following. Guerrieri et al. [22] proposed MOM-DL, a new automatic traffic data acquisition method based on deep learning and the YOLOv3 algorithm. It automatically detects vehicles in the traffic stream and estimates the traffic flow, space-mean speed, and vehicle density of expressways under stationary and homogeneous traffic conditions. Masmoudi et al. [23] used the YOLO vision algorithm to identify the current state and the Q-learning and DQN reinforcement learning algorithms to control the following vehicle; their simulation experiments showed that the following vehicle could make reasonable decisions. However, Q-learning suffers from the curse of dimensionality in continuous problems such as vehicle following, so Zhu et al. [24] adopted the DDPG [25] algorithm, which outputs continuous actions, improved it, and verified it in vehicle-following scenarios, where it showed good generalization ability. Reinforcement learning shows great potential in sequential decision optimization problems, but designing reward functions is difficult. Gao et al. [26] used inverse reinforcement learning to build a reward function from each driver's data and analyzed the driving characteristics and following policies; subsequent simulations in a highway environment demonstrated the effectiveness of the method.

3. Problem Formulation

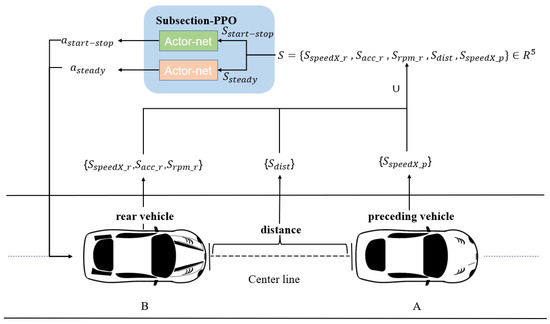

Vehicle following on city roads differs from cruise control because the vehicle starts, stops, and shifts frequently, so the accelerator or brake must be adjusted according to the state of the vehicle in front. The ability to maintain a safe driving distance is the most important indicator of vehicle-following control. Vehicle following is a stochastic, interactive process, so vehicle-following control can be modeled as a Markov decision process (MDP), in which the following vehicle continuously observes the current state and makes decisions. An MDP can be represented by a tuple $(S, A, P, R)$, where $S$ is the set of states. We divide $S$ into two parts that serve as inputs to the policy networks, namely the start–stop states and the steady states. $A$ is the action set, which we likewise divide into start–stop phase actions and steady phase actions. $P$ is the state transition probability, and $R$ is the immediate reward obtained after performing an action. Figure 1 shows a schematic diagram of vehicle-following control. Below, we introduce the state space and action space of the vehicle-following process.

Figure 1.

Vehicle-following control.

3.1. State Space

The vehicle-following agent learns by continually exploring the environment, so it needs to obtain the current state as input at every step. The simulation environment in this paper is TORCS, and Table 1 lists the state space.

Table 1.

State space.

Because the state variables obtained from the sensors have different units and scales, we normalize them to [0,1] in the experiments to eliminate the adverse influence of outlying sample data.
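A minimal sketch of per-feature min-max normalization to [0,1], assuming NumPy. Table 1 is not reproduced here, so the three features and their bounds below are hypothetical placeholders (the 30 km/h bound only echoes the urban speed mentioned in Section 3.3). Clipping keeps an outlying sensor reading from leaving the unit interval.

```python
import numpy as np

def minmax_normalize(x, low, high):
    """Scale each feature of x into [0, 1] using per-feature bounds.

    Values outside [low, high] are clipped, so a single outlying
    reading cannot push the network input outside the unit interval.
    """
    x = np.asarray(x, dtype=float)
    low = np.asarray(low, dtype=float)
    high = np.asarray(high, dtype=float)
    scaled = (x - low) / (high - low)
    return np.clip(scaled, 0.0, 1.0)

# Hypothetical state: [own speed (km/h), gap to leader (m), leader speed (km/h)]
low = [0.0, 0.0, 0.0]
high = [30.0, 50.0, 30.0]
state = minmax_normalize([15.0, 60.0, 30.0], low, high)  # gap 60 m is clipped to 1.0
```

In practice the bounds would come from the sensor ranges in Table 1 rather than from the training batch, so that the same scaling applies at test time.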

3.2. Action Space

As a longitudinal control task, intelligent vehicle following requires reasonable control of the accelerator opening and braking force to maintain a safe and stable spacing. The accelerator opening and braking force constitute the action vector, as shown in Table 2.

Table 2.

Action Space.

3.3. Reward Function

The reward function in reinforcement learning is an incentive mechanism that guides the agent toward a behavioral policy that meets the ultimate goal. Although this paper uses two policy networks, they must share a consistent estimate of the advantage function, so a single reward function is used for both stages:

- where , . A positive reward is given when the vehicle maintains a safe spacing , , and is the Minimum Time Headway.

- . On vehicle-dense roads, the vehicle speed is generally below 30 km/h. In this paper, the maximum speed of the preceding vehicle is set as . Because the vehicle obtains its state information from sensors, its perception is far more accurate and faster than a human driver's. Keeping a speed similar to that of the preceding vehicle maintains a stable following distance and thus ensures safety. Therefore, the speed of the following vehicle is kept as consistent as possible with that of the preceding vehicle in the experiment.

- is the vehicle acceleration, .

4. Methodology

Intelligent vehicle-following control can be described as a Markov decision process. This paper proposes the Subsection-PPO algorithm, which divides a set of trajectories into a start–stop part and a steady part and uses weighted importance sampling to compute the objective function. To give the training vehicle initial power and exploration capability during training, this paper adds Ornstein–Uhlenbeck (OU) noise [27], a random process, to the actions.

4.1. Noise

Because temporally correlated noise can improve exploration efficiency, this paper adopts temporally correlated Ornstein–Uhlenbeck (OU) noise. The OU process is a stochastic process defined by the differential equation:

$$dx_t = \theta(\mu - x_t)\,dt + \sigma\,dW_t$$

where $x_t$ is usually one dimension of the agent's action, $\mu$ is the mean value of that action dimension, $\theta > 0$, $\sigma > 0$, and $W_t$ is a Wiener process. The larger $\theta$ is, the more strongly $x_t$ is pulled toward $\mu$, and $\sigma$ scales the disturbance from the Wiener process.
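The OU stochastic differential equation dx = θ(μ − x)dt + σ dW can be sampled step by step with the Euler–Maruyama scheme. The sketch below assumes NumPy and uses θ = 0.15, σ = 0.2, and dt = 0.01, which are common defaults in public DDPG implementations, not values reported in this paper. It also assumes that accelerator and brake both lie in [0,1], as in the standard TORCS interface.

```python
import numpy as np

class OUNoise:
    """Ornstein-Uhlenbeck process dx = theta*(mu - x)*dt + sigma*dW,
    discretized with the Euler-Maruyama scheme."""

    def __init__(self, size, mu=0.0, theta=0.15, sigma=0.2, dt=1e-2, seed=None):
        self.mu = mu * np.ones(size)
        self.theta = theta
        self.sigma = sigma
        self.dt = dt
        self.rng = np.random.default_rng(seed)
        self.reset()

    def reset(self):
        # Start each episode at the long-run mean.
        self.x = self.mu.copy()

    def sample(self):
        # Wiener increment dW ~ N(0, dt); successive samples are correlated
        # because each step starts from the previous state self.x.
        dw = self.rng.normal(0.0, np.sqrt(self.dt), size=self.x.shape)
        self.x = self.x + self.theta * (self.mu - self.x) * self.dt + self.sigma * dw
        return self.x.copy()

# Hypothetical 2-D action [accelerator, brake], both assumed to lie in [0, 1].
noise = OUNoise(size=2, seed=0)
policy_action = np.array([0.3, 0.0])
action = np.clip(policy_action + noise.sample(), 0.0, 1.0)
```

Because the noise state carries over between steps, the perturbation keeps pushing in the same direction for a while, which suits inertial systems such as a car better than independent Gaussian noise.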

4.2. Proximal Policy Optimization Algorithm

The proximal policy optimization algorithm is a policy-gradient reinforcement learning algorithm that evolved from trust region policy optimization (TRPO) [28]. A higher reward in the environment indicates a stronger ability to complete the task, and the ultimate goal of all policy gradient methods is to maximize the expected cumulative reward

$$\eta(\pi) = \mathbb{E}_{s_0, a_0, \ldots}\left[\sum_{t=0}^{\infty} \gamma^{t} r(s_t)\right]$$

where $\gamma \in (0,1)$ is the discount factor, which makes rewards further from the current state contribute less, and the expectation is taken over trajectories generated by following the policy $\pi$, with $s_0 \sim \rho_0$, $a_t \sim \pi(a_t \mid s_t)$, and $s_{t+1} \sim P(s_{t+1} \mid s_t, a_t)$; the state transitions are treated as a given distribution. TRPO evaluates the quality of executing an action with the advantage function $A_\pi(s,a) = Q_\pi(s,a) - V_\pi(s)$, where $Q_\pi(s,a)$ is the state–action value and $V_\pi(s)$ is the state value. It has been proved that the cumulative reward of a new policy $\tilde{\pi}$ can be expressed as:

$$\eta(\tilde{\pi}) = \eta(\pi) + \mathbb{E}_{s_0, a_0, \ldots \sim \tilde{\pi}}\left[\sum_{t=0}^{\infty} \gamma^{t} A_{\pi}(s_t, a_t)\right]$$

That is, the cumulative reward of the new policy equals the cumulative reward of the old policy plus the expected discounted advantage, measured with respect to the old policy, of the actions taken by the new policy.

Therefore, if this advantage term can be guaranteed to be nonnegative, the cumulative reward increases monotonically, that is, the policy keeps improving. Because the term depends on trajectories of the new policy and cannot be computed directly, TRPO estimates it by importance sampling from data collected by the old policy $\pi_{\theta_{\text{old}}}$ and constrains the KL divergence between the old and new policies to limit the size of each update. The core problem of TRPO is defined as:

$$\max_{\theta}\; \hat{\mathbb{E}}_t\left[\frac{\pi_\theta(a_t \mid s_t)}{\pi_{\theta_{\text{old}}}(a_t \mid s_t)}\hat{A}_t\right] \quad \text{s.t.} \quad \hat{\mathbb{E}}_t\left[\mathrm{KL}\left[\pi_{\theta_{\text{old}}}(\cdot \mid s_t),\, \pi_\theta(\cdot \mid s_t)\right]\right] \le \delta$$

TRPO uses the KL divergence to define a trust region, keeping each policy update within a bounded range so that the cumulative advantage remains nonnegative. However, solving this constrained update is complex and computationally expensive, so PPO instead clips the importance weight to limit the size of the policy update. The surrogate objective maximized by PPO is:

$$L^{CPI}(\theta) = \hat{\mathbb{E}}_t\left[\frac{\pi_\theta(a_t \mid s_t)}{\pi_{\theta_{\text{old}}}(a_t \mid s_t)}\hat{A}_t\right] = \hat{\mathbb{E}}_t\left[r_t(\theta)\hat{A}_t\right]$$

where the superscript $CPI$ refers to conservative policy iteration [29], and $r_t(\theta)$ is the importance weight obtained by importance sampling. The final objective function of the PPO algorithm is:

$$L^{CLIP}(\theta) = \hat{\mathbb{E}}_t\left[\min\left(r_t(\theta)\hat{A}_t,\; \mathrm{clip}\left(r_t(\theta), 1-\epsilon, 1+\epsilon\right)\hat{A}_t\right)\right]$$

The value of $r_t(\theta)$ is thus clipped to $[1-\epsilon, 1+\epsilon]$, where $\epsilon$ is a hyperparameter.
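PPO's clipped surrogate, the sample mean of min(r·A, clip(r, 1 - eps, 1 + eps)·A), can be sketched in NumPy from log-probabilities and advantage estimates. This is a generic illustration of the standard objective, not the authors' code; eps = 0.2 is the value suggested in the original PPO paper and is only an assumption here, since the hyperparameter used in this work is not given.

```python
import numpy as np

def ppo_clip_objective(logp_new, logp_old, advantages, eps=0.2):
    """Clipped surrogate L^CLIP averaged over a batch (to be maximized)."""
    ratio = np.exp(logp_new - logp_old)                  # r_t(theta)
    unclipped = ratio * advantages
    clipped = np.clip(ratio, 1.0 - eps, 1.0 + eps) * advantages
    # Taking the minimum removes any incentive to move r_t outside
    # [1 - eps, 1 + eps] in the direction that would increase the objective.
    return np.mean(np.minimum(unclipped, clipped))

# Two samples whose probability ratio grew to 1.5 under the new policy:
# the positive-advantage term is capped at 1.2 * A, the negative one is not.
value = ppo_clip_objective(np.log([1.5, 1.5]), np.zeros(2), np.array([1.0, -1.0]))
```

In a deep learning framework the same expression would be written with differentiable tensors and its negative used as the actor loss.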

4.3. Subsection-PPO

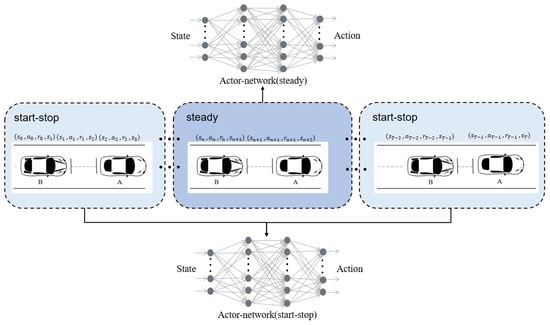

On congested urban roads, the high vehicle density and low speeds cause frequent starts and stops, during which accidents also occur. This paper therefore divides vehicle following into two stages, start–stop and steady driving, so the collected trajectory data must be divided into two categories and processed separately, and the algorithm must change accordingly. Hence this paper proposes the Subsection-PPO algorithm, which builds on the proximal policy optimization (PPO) algorithm and uses two actor networks to learn the policies of the different stages, as shown in Figure 2. Notably, to keep the state value estimates consistent, we use only one critic network to estimate the state values of both stages; the simulation experiments show that this design is effective. The stages are divided as follows:

Figure 2.

Actor-network at different stages.

- Start–stop stage: the trajectories generated in this stage are start–stop data.

- Steady stage: the trajectories generated in this stage are steady driving data.
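The division into stages can be sketched as routing each time step to one of the two actors, while advantages come from the single shared critic. The switching criterion used in the paper is not reproduced in this text, so the speed threshold `V_TH` below is purely hypothetical, as are the function names; the sketch only illustrates how one trajectory is split so that each actor is updated on its own samples.

```python
import numpy as np

V_TH = 5.0  # hypothetical speed threshold in km/h, not the paper's criterion

def split_by_stage(speeds, v_th=V_TH):
    """Return index arrays (start_stop_idx, steady_idx) for one trajectory."""
    speeds = np.asarray(speeds, dtype=float)
    start_stop = speeds < v_th
    return np.flatnonzero(start_stop), np.flatnonzero(~start_stop)

def select_actor(speed, actor_start_stop, actor_steady, v_th=V_TH):
    """Pick the actor network responsible for the current state's stage."""
    return actor_start_stop if speed < v_th else actor_steady

speeds = np.array([0.0, 2.0, 8.0, 12.0, 3.0])        # one short trajectory (km/h)
advantages = np.array([0.4, -0.1, 0.3, 0.2, -0.5])    # from the single shared critic
ss_idx, st_idx = split_by_stage(speeds)
# Each actor is then updated only on the samples from its own stage.
adv_start_stop, adv_steady = advantages[ss_idx], advantages[st_idx]
```

Computing advantages once over the whole trajectory, before splitting, is what keeps the two actors' value baselines consistent.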

In PPO, importance sampling allows data collected by the old policy to be reused for several updates, which improves data utilization, and clipping the importance weight constrains the update region of the policy network so that policy improvement stays stable and approximately monotonic. Although importance sampling gives an unbiased estimate, estimating expectations under the new policy from samples drawn under the old policy can have a large variance when the two policies differ, which lowers training efficiency. To address this problem, this paper uses weighted importance sampling instead of ordinary importance sampling to estimate the objective function. The final objective function is then transformed from Equation (5) to:

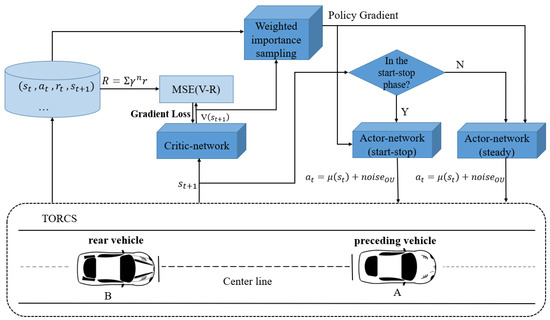

Unlike ordinary importance sampling, weighted importance sampling is biased for a finite number of samples, but it is consistent and usually has a much lower variance: as the sample size increases, the bias vanishes and the estimate converges to the true value of the objective function. Figure 3 shows the overall framework of the Subsection-PPO algorithm.
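The difference between the two estimators can be illustrated on a toy problem, assuming NumPy. Ordinary importance sampling averages r_i·f_i, while weighted (self-normalized) importance sampling divides the sum of r_i·f_i by the sum of the ratios r_i. The Gaussian target and behavior distributions below are an illustrative assumption chosen only because their density ratio has the closed form exp(x - 0.5); they stand in for the new and old policies.

```python
import numpy as np

def ordinary_is(values, ratios):
    """Ordinary IS: (1/n) * sum(r_i * f_i). Unbiased, but can have high variance."""
    values, ratios = np.asarray(values, dtype=float), np.asarray(ratios, dtype=float)
    return np.mean(ratios * values)

def weighted_is(values, ratios):
    """Weighted (self-normalized) IS: sum(r_i * f_i) / sum(r_i).
    Biased for finite n, consistent, and usually lower variance."""
    values, ratios = np.asarray(values, dtype=float), np.asarray(ratios, dtype=float)
    return np.sum(ratios * values) / np.sum(ratios)

# Toy check: estimate E[x] = 1 under the target N(1, 1) using samples from the
# behaviour distribution N(0, 1); the density ratio simplifies to exp(x - 0.5).
rng = np.random.default_rng(0)
ois_est, wis_est = [], []
for _ in range(2000):
    x = rng.normal(0.0, 1.0, size=20)
    r = np.exp(x - 0.5)
    ois_est.append(ordinary_is(x, r))
    wis_est.append(weighted_is(x, r))
print("variance OIS:", np.var(ois_est), "variance WIS:", np.var(wis_est))
```

Because the weights are normalized, the weighted estimate always lies within the range of the sampled values, which is where its variance reduction comes from; the price is a small bias that shrinks as the batch grows.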

Figure 3.

Subsection-PPO.

5. Experimental Simulation

This experiment is based on the TORCS (The Open Racing Car Simulator) simulation platform, which provides rich road data and a comprehensive set of vehicle sensors. We implement the reinforcement learning code in Python and use the UDP protocol to exchange data with the simulation platform and control the simulated vehicle. To verify the effectiveness of the proposed algorithm, we simulate actual urban traffic flow in TORCS and consider only the longitudinal control of the vehicle. Our experiments include:

- The experiment compares the cumulative reward of the Subsection-PPO algorithm proposed in this paper with PPO and DDPG.

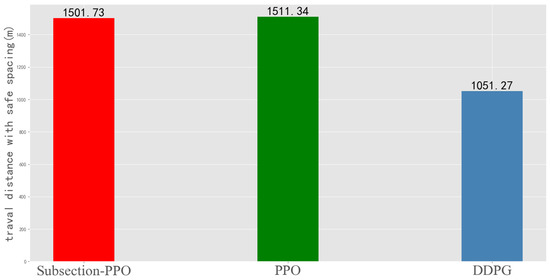

- The total distance that each algorithm follows the preceding vehicle while maintaining a safe spacing is compared.

- The effect of the proposed algorithm in vehicle-following control is described using the relationship between speed and spacing.

5.1. Hardware Configuration

The experimental platform runs Ubuntu 20.04 with 16 GB of DDR4 memory, an Intel Core i5-10200H CPU @ 2.40 GHz, and an NVIDIA Quadro RTX 5000 graphics card. The learning rates of actor_network (start–stop) and actor_network (steady) are both . The learning rate of critic_network is . The number of training timesteps is 10,000.

5.2. Experiment and Comparison

We compare against two reinforcement learning algorithms, PPO and DDPG. Both are based on the basic actor–critic architecture: the actor network outputs actions, and the critic network evaluates state or action values.

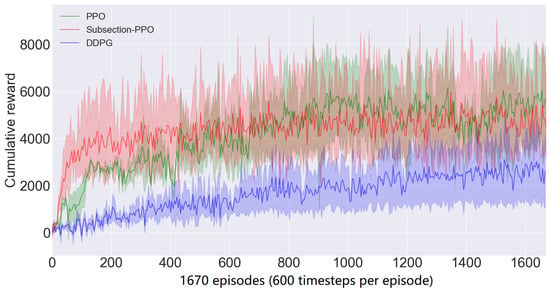

First, the comparison of cumulative rewards is essential, because the change in cumulative reward reflects the exploration and learning abilities of a reinforcement learning algorithm. Unlike a loss value, which is minimized, the cumulative reward is maximized: the higher the cumulative reward after convergence, the better the agent has learned and the better it has captured the characteristics of the environment.
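The cumulative reward can be computed either as a discounted return, matching the objective η(π) with discount factor γ, or as a plain episode sum (γ = 1). The text does not state which form Figure 4 plots, so the sketch below, in plain Python, shows both through one backward recursion G_t = r_t + γ·G_{t+1}; the function name and the γ values are illustrative assumptions.

```python
def returns_to_go(rewards, gamma=0.99):
    """Return G_t = r_t + gamma * G_{t+1} for every step, computed backwards."""
    out = [0.0] * len(rewards)
    g = 0.0
    for t in reversed(range(len(rewards))):
        g = rewards[t] + gamma * g
        out[t] = g
    return out

episode_rewards = [1.0, 1.0, 1.0]
discounted = returns_to_go(episode_rewards, gamma=0.5)    # [1.75, 1.5, 1.0]
episode_sum = returns_to_go(episode_rewards, gamma=1.0)[0]  # plain cumulative reward
```

The first element of the list is the discounted return of the whole episode; the remaining elements are the per-step targets that a critic would regress onto.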

In this paper, the preceding vehicle is controlled longitudinally with random acceleration and braking to simulate its behavior, and the reinforcement learning method controls the following vehicle. The reward function is introduced in Section 3.3, and the variation of the cumulative reward during the training phase is shown in Figure 4.

Figure 4.

Cumulative rewards.

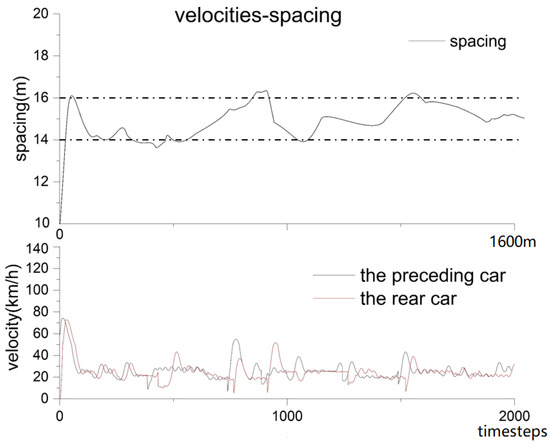

The experiment simulates vehicle following on a crowded road, so the Minimum Time Headway (MTH) for the strong vehicle-following state is used to calculate the safe following spacing. The spacing is considered safe when it lies within the range . Figure 5 shows the distance driven by each control method while maintaining a safe spacing; the total route length is 1600 m. Figure 6 shows the relationship between vehicle velocity and spacing.

Figure 5.

Driving distance with safe spacing.

Figure 6.

The dotted lines indicate the ideal spacing range. The initial spacing is 10 m. With the Subsection-PPO algorithm, the distance traveled while maintaining a safe spacing accounts for 93.8% of the total distance.
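A metric like the 93.8% figure can be computed by summing the distance covered in steps whose spacing lies inside the safe band and dividing by the total distance. The exact safe range used in the paper is not reproduced in this text, so the sketch below, which assumes NumPy, models it as a hypothetical constant-time-headway band [d0 + v·h_min, d0 + v·h_max]. The names `h_min`, `h_max`, and `d0` are illustrative, with speeds in m/s, gaps in m, and headways in s.

```python
import numpy as np

def safe_distance_share(step_dist, gaps, speeds, h_min, h_max, d0=2.0):
    """Share of traveled distance during which the gap lies in the
    hypothetical band [d0 + v*h_min, d0 + v*h_max]."""
    step_dist = np.asarray(step_dist, dtype=float)
    gaps = np.asarray(gaps, dtype=float)
    speeds = np.asarray(speeds, dtype=float)
    lower = d0 + speeds * h_min
    upper = d0 + speeds * h_max
    safe = (gaps >= lower) & (gaps <= upper)
    return step_dist[safe].sum() / step_dist.sum()

# Four logging steps of 10 m each at 5 m/s; the band is [7 m, 12 m].
share = safe_distance_share([10, 10, 10, 10], [5, 12, 30, 8], [5, 5, 5, 5],
                            h_min=1.0, h_max=2.0)
```

Weighting by distance rather than by time step matches the way the result is reported, as a fraction of total mileage.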

6. Conclusions

Building on the PPO algorithm and the characteristics of the different stages of vehicle-following control, we divide trajectories into two parts, start–stop and steady driving, and use weighted importance sampling instead of ordinary importance sampling. Together, these form the proposed Subsection-PPO algorithm for vehicle-following control. Subsection-PPO uses two actor networks but, to avoid training non-convergence caused by inconsistent value estimates, only a single critic network for value estimation. The action vector in each vehicle-following stage is computed by the corresponding actor network, which makes the method well suited to vehicle-following problems, and weighted importance sampling improves training efficiency. We simulated urban vehicle following in the TORCS simulation environment and compared the proposed method with PPO and DDPG. The results demonstrate the feasibility of the proposed method and its advantage in vehicle-following safety. However, our work still has shortcomings. Autonomous driving technology is developing constantly, and beyond ensuring safety, the acceleration changes of the vehicle must also be considered, because they affect energy consumption and ride comfort. This will be the direction of our future work.

Author Contributions

Y.H. wrote the manuscript and designed research methods; X.Z. (Xinglong Zhang), X.X. and X.Z. (Xiaochuan Zhang) edited and revised the manuscript; Y.L. (Yao Liu) and Y.L. (Yong Li) analyzed the data. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by research on key technologies of internet of things platform for smart city (Grant No. 2020ZDXM12) of the key program and research on the basic support system of urban management comprehensive law enforcement (Grant No. 2021ZDXM17) of China Coal Technology Engineering Group Chongqing Research Institute.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used to support the findings of this study are available from the corresponding author upon request.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Paschalidis, E.; Choudhury, C.F.; Hess, S. Combining driving simulator and physiological sensor data in a latent variable model to incorporate the effect of stress in car-following behaviour. Anal. Methods Accid. Res. 2019, 22, 100089.

- Liu, Z.; Yuan, Q.; Nie, G.; Tian, Y. A multi-objective model predictive control for vehicle adaptive cruise control system based on a new safe distance model. Int. J. Automot. Technol. 2021, 22, 475–487.

- Farag, W. Complex Trajectory Tracking Using PID Control for Autonomous Driving. Int. J. Intell. Transp. Syst. Res. 2019, 18, 356–366.

- Choomuang, R.; Afzulpurkar, N. Hybrid Kalman filter/fuzzy logic based position control of autonomous mobile robot. Int. J. Adv. Robot. Syst. 2005, 2, 20.

- Fayjie, A.R.; Hossain, S.; Oualid, D.; Lee, D.J. Driverless car: Autonomous driving using deep reinforcement learning in urban environment. In Proceedings of the IEEE 2018 15th International Conference on Ubiquitous Robots (UR), Honolulu, HI, USA, 26–30 June 2018; pp. 896–901.

- Colombaroni, C.; Fusco, G.; Isaenko, N. Modeling car following with feed-forward and long-short term memory neural networks. Transp. Res. Procedia 2021, 52, 195–202.

- Bhattacharyya, R.; Wulfe, B.; Phillips, D.; Kuefler, A.; Morton, J.; Senanayake, R.; Kochenderfer, M. Modeling human driving behavior through generative adversarial imitation learning. arXiv 2020, arXiv:2006.06412.

- Lin, Y.; McPhee, J.; Azad, N.L. Longitudinal dynamic versus kinematic models for car-following control using deep reinforcement learning. In Proceedings of the 2019 IEEE Intelligent Transportation Systems Conference (ITSC), Auckland, New Zealand, 27–30 October 2019; pp. 1504–1510.

- Pipes, L.A. An operational analysis of traffic dynamics. J. Appl. Phys. 1953, 24, 274–281.

- Gazis, D.C.; Herman, R.; Potts, R.B. Car-following theory of steady-state traffic flow. Oper. Res. 1959, 7, 499–505.

- Cattin, J.; Leclercq, L.; Pereyron, F.; El Faouzi, N.E. Calibration of Gipps’ car-following model for trucks and the impacts on fuel consumption estimation. IET Intell. Transp. Syst. 2019, 13, 367–375.

- Ayres, T.; Li, L.; Schleuning, D.; Young, D. Preferred time-headway of highway drivers. In Proceedings of the ITSC 2001, Oakland, CA, USA, 25–29 August 2001; 2001 IEEE Intelligent Transportation Systems. Proceedings (Cat. No. 01TH8585). pp. 826–829.

- Jamson, A.H.; Merat, N. Surrogate in-vehicle information systems and driver behaviour: Effects of visual and cognitive load in simulated rural driving. Transp. Res. Part F Traffic Psychol. Behav. 2005, 8, 79–96.

- Treiber, M.; Kesting, A. Traffic flow dynamics: data, models and simulation. Phys. Today 2014, 67, 54.

- Mathew, T.V.; Ravishankar, K. Neural Network Based Vehicle-Following Model for Mixed Traffic Conditions. Eur. Transp.-Trasp. Eur. 2012, 52, 1–15.

- Sharma, O.; Sahoo, N.; Puhan, N. Highway Discretionary Lane Changing Behavior Recognition Using Continuous and Discrete Hidden Markov Model. In Proceedings of the 2021 IEEE International Intelligent Transportation Systems Conference (ITSC), Indianapolis, IN, USA, 19–22 September 2021; pp. 1476–1481.

- Li, L.; Gan, J.; Qu, X.; Mao, P.; Yi, Z.; Ran, B. A novel graph and safety potential field theory-based vehicle platoon formation and optimization method. Appl. Sci. 2021, 11, 958.

- Zhu, W.X.; Zhang, L.D. A new car-following model for autonomous vehicles flow with mean expected velocity field. Phys. A: Stat. Mech. Its Appl. 2018, 492, 2154–2165.

- Li, W.; Chen, T.; Guo, J.; Wang, J. Adaptive car-following control of intelligent electric vehicles. In Proceedings of the 2018 IEEE 4th International Conference on Control Science and Systems Engineering (ICCSSE), Wuhan, China, 21–23 August 2018; pp. 86–89.

- Zhang, Y.; Lin, Q.; Wang, J.; Verwer, S.; Dolan, J.M. Lane-change intention estimation for car-following control in autonomous driving. IEEE Trans. Intell. Veh. 2018, 3, 276–286.

- Kamrani, M.; Srinivasan, A.R.; Chakraborty, S.; Khattak, A.J. Applying Markov decision process to understand driving decisions using basic safety messages data. Transp. Res. Part Emerg. Technol. 2020, 115, 102642.

- Guerrieri, M.; Parla, G. Deep learning and yolov3 systems for automatic traffic data measurement by moving car observer technique. Infrastructures 2021, 6, 134.

- Masmoudi, M.; Friji, H.; Ghazzai, H.; Massoud, Y. A Reinforcement Learning Framework for Video Frame-based Autonomous Car-following. IEEE Open J. Intell. Transp. Syst. 2021, 2, 111–127.

- Zhu, M.; Wang, X.; Wang, Y. Human-like autonomous car-following model with deep reinforcement learning. Transp. Res. Part C Emerg. Technol. 2018, 97, 348–368.

- Silver, D.; Lever, G.; Heess, N.; Degris, T.; Wierstra, D.; Riedmiller, M. Deterministic policy gradient algorithms. In Proceedings of the International Conference on Machine Learning (PMLR), Beijing, China, 21–26 June 2014; pp. 387–395.

- Gao, H.; Shi, G.; Xie, G.; Cheng, B. Car-following method based on inverse reinforcement learning for autonomous vehicle decision-making. Int. J. Adv. Robot. Syst. 2018, 15, 1729881418817162.

- Ngoduy, D.; Lee, S.; Treiber, M.; Keyvan-Ekbatani, M.; Vu, H. Langevin method for a continuous stochastic car-following model and its stability conditions. Transp. Res. Part C Emerg. Technol. 2019, 105, 599–610.

- Schulman, J.; Levine, S.; Abbeel, P.; Jordan, M.; Moritz, P. Trust region policy optimization. In Proceedings of the International Conference on Machine Learning, PMLR, Lille, France, 6–11 July 2015; pp. 1889–1897.

- Kakade, S.; Langford, J. Approximately optimal approximate reinforcement learning. In Proceedings of the 19th International Conference on Machine Learning, Sydney, Australia, 8–12 July 2002.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).