Machine Learning Approach Regarding the Classification and Prediction of Dog Sounds: A Case Study of South Indian Breeds

Abstract

1. Introduction

2. Data Collection and Analysis

2.1. Data Collection

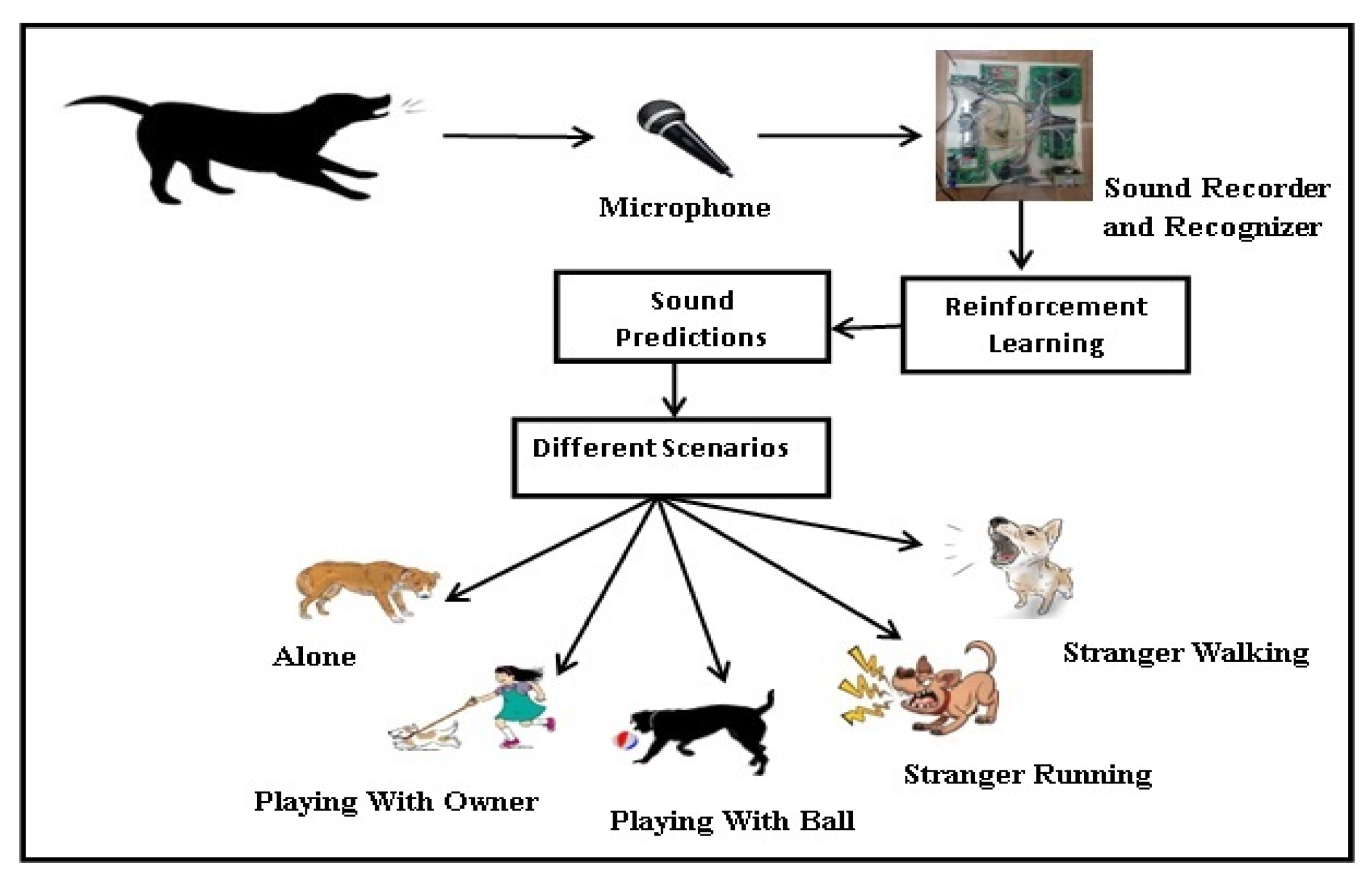

2.2. Sound Recorded Situations

2.2.1. Behavior Reaction: Sensing a Stranger

2.2.2. Behavior Reaction: Getting into a Fight

2.2.3. Behavior Reaction: Being Alone

2.2.4. Behavior Reaction: In Friendly Play

2.2.5. Behavior Reaction: During a Walk

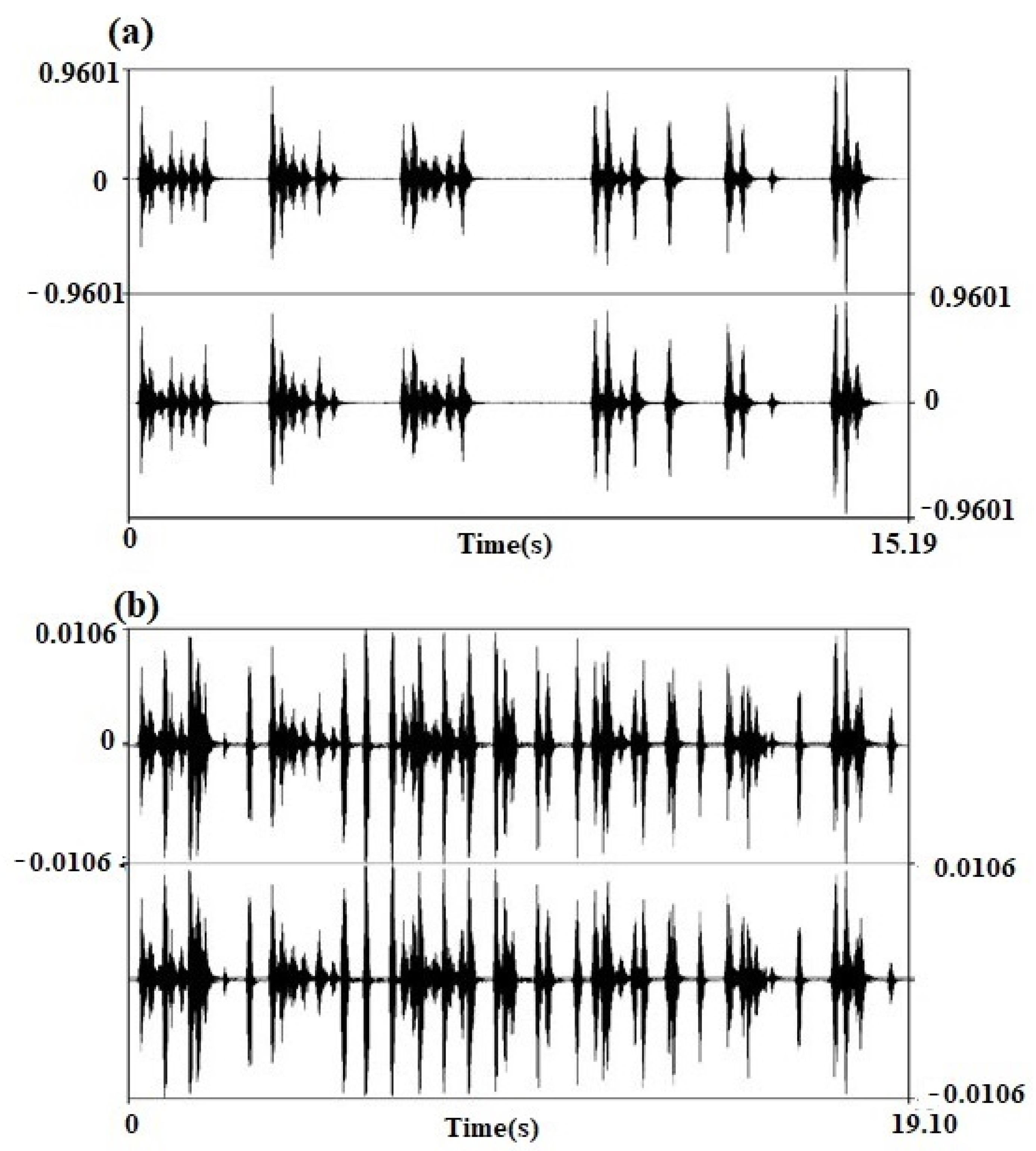

2.3. Recording Vocalization and Observation

2.4. Sound Parameters

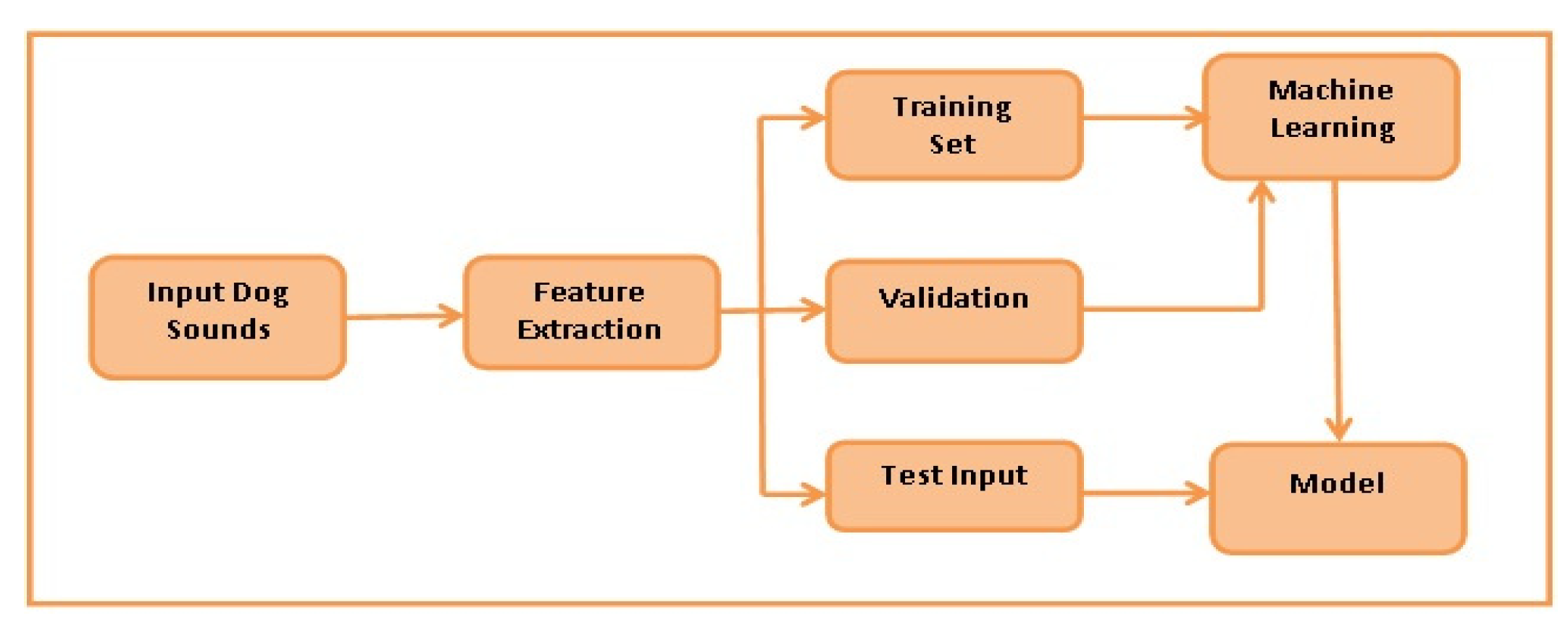

3. Approach and Analysis: Architecture and Methodology

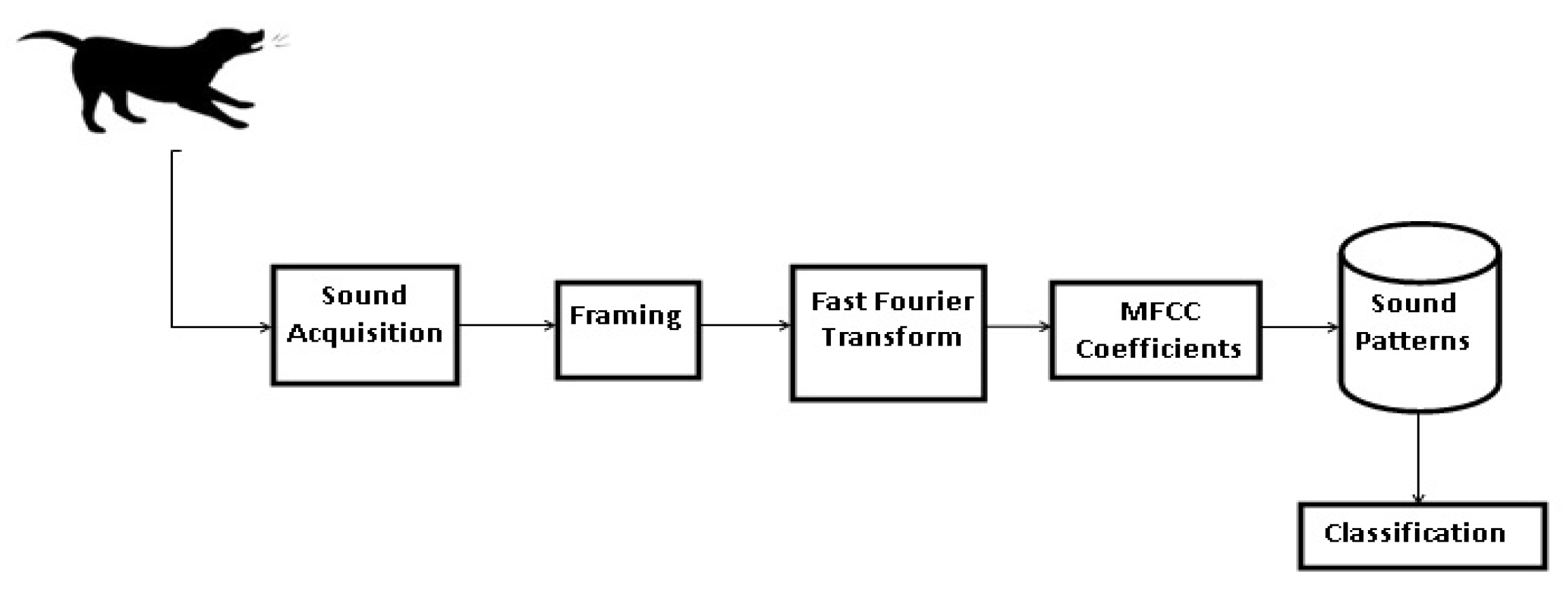

3.1. Architecture of Sound Analysis

3.2. Multivariate Analysis

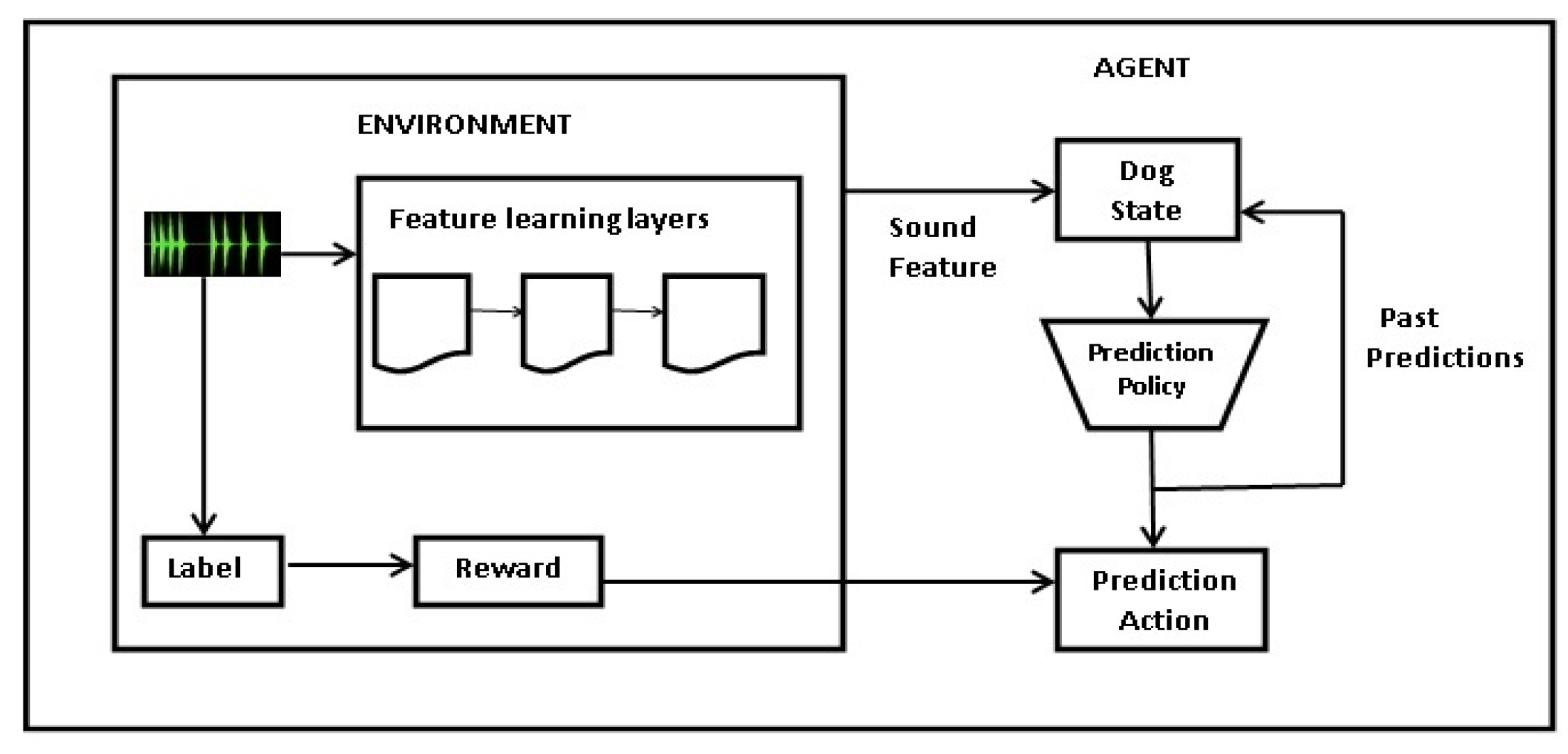

3.3. Methodology: Machine Learning-Based Approach

3.3.1. Classification

3.3.2. Environment

3.3.3. Input Sound

3.3.4. Feature Learning Layers

3.3.5. Label

3.3.6. Reward

3.3.7. Agents

3.4. Learning Algorithm for Sound Classification

3.4.1. Training

3.4.2. Testing

4. Different Scenarios and Observations: A Real-Time Case Study

4.1. Different Scenarios and Observations

- (1) Dog barking while a stranger walked in;
- (2) Dog barking while a stranger was running;
- (3) Dog playing with a ball;
- (4) Dog playing with the owner;
- (5) When the dog was alone.

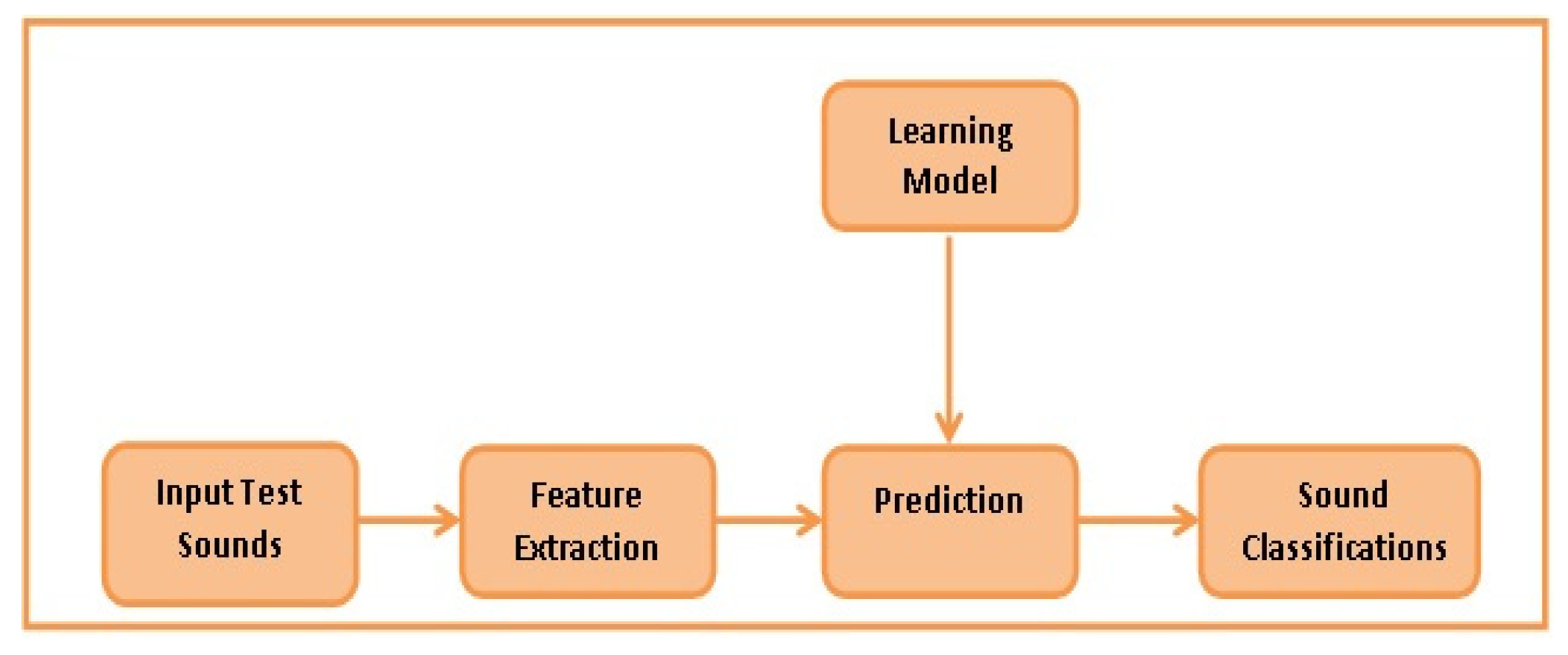

4.1.1. Dog Barking While a Stranger Walked In

4.1.2. Dog Barking While a Stranger Was Running

4.1.3. Dog Playing with a Ball

4.1.4. Dog Playing with the Owner

4.1.5. Dog When Alone

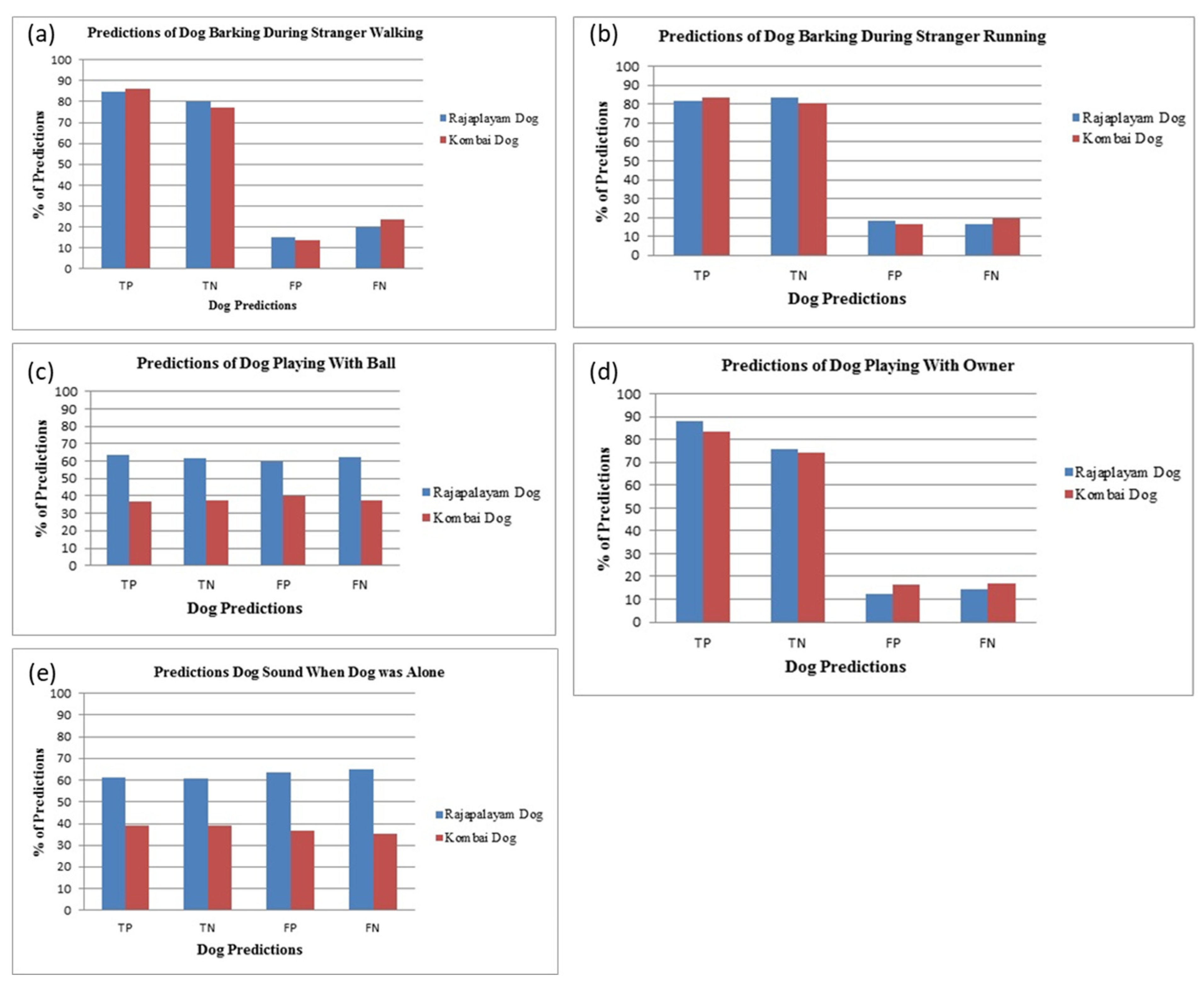

5. Results and Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Algorithm A1: Learning Algorithm |
|---|
| Input: Initial state , Action set , update sound , learning rate , discount factor |
| Notations:—time interval between sounds, —action in time for the specific sounds, —state of time for the dog sound, —reward for the state, —Sample set for updating, —to determine update functioning of |
| initiate = 0, = 0, |
| for time interval between sounds do |
| ← action selection y, |
| ← get reward ( ) |
| // Updating function |
| ← // Adding a new sample |
| if= |
| then |
| for |
| do |
| end for |
| ← 0 |
| end if |
| ← 1 |
| end for |
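The symbols in Algorithm A1 did not survive extraction, but its remaining structure (select an action, get a reward, add the sample to a sample set, and, when a counter reaches the update threshold, replay the set and reset the counter, with a learning rate and discount factor as inputs) resembles tabular Q-learning with batched updates. The Python sketch below assumes exactly that. The epsilon-greedy selection rule, the `env_step` callback, the toy reward, and every name in it are hypothetical illustrations, not the authors' implementation.

```python
import random
from collections import defaultdict


def batch_q_learning(env_step, states, actions, n_steps, update_every,
                     alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning that buffers samples and replays them in batches.

    env_step(state, action) -> (reward, next_state) is a hypothetical
    environment callback standing in for "get reward" in Algorithm A1.
    """
    rng = random.Random(seed)
    q = defaultdict(float)   # Q(s, a), initialised to 0
    samples = []             # sample set used for the next batch update
    counter = 0              # sounds seen since the last batch update
    state = states[0]        # initial state
    for _ in range(n_steps):
        # Epsilon-greedy selection (assumed; A1 only says "action selection").
        if rng.random() < epsilon:
            action = rng.choice(actions)
        else:
            action = max(actions, key=lambda a: q[(state, a)])
        reward, next_state = env_step(state, action)
        samples.append((state, action, reward, next_state))  # add a new sample
        counter += 1
        if counter == update_every:
            # Replay every buffered sample with the one-step Q-learning target.
            for s, a, r, s_next in samples:
                target = r + gamma * max(q[(s_next, b)] for b in actions)
                q[(s, a)] += alpha * (target - q[(s, a)])
            samples.clear()
            counter = 0
        state = next_state
    return dict(q)


if __name__ == "__main__":
    scenarios = ["stranger_walk", "stranger_run", "ball", "owner", "alone"]
    env_rng = random.Random(1)

    def toy_env(state, action):
        # Hypothetical reward: 1 when the chosen label matches the scenario.
        return (1.0 if action == state else 0.0), env_rng.choice(scenarios)

    q = batch_q_learning(toy_env, scenarios, scenarios, n_steps=6000,
                         update_every=10, gamma=0.5, epsilon=0.3)
    for s in scenarios:
        print(s, max(scenarios, key=lambda a: q.get((s, a), 0.0)))
```

Buffering samples and updating only every `update_every` steps mirrors the counter-and-reset pattern visible in A1; a plain online Q-learning loop would instead apply the update immediately after each reward.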

Appendix B

| Dog Type | Gender | Age (in Years) | Day 1 | Day 2 | Day 3 | Day 4 | Day 5 |
|---|---|---|---|---|---|---|---|
| Rajapalayam Hound | Female | 1 | 3 | 2 | 0 | 1 | 2 |
| Rajapalayam Hound | Female | 6 | 18 | 10 | 22 | 13 | 26 |
| Rajapalayam Hound | Male | 2 | 2 | 5 | 7 | 6 | 1 |
| Rajapalayam Hound | Male | 8 | 22 | 17 | 14 | 18 | 21 |
| Kombai Hound | Female | 2 | 4 | 1 | 0 | 0 | 2 |
| Kombai Hound | Female | 9 | 8 | 21 | 13 | 5 | 10 |
| Kombai Hound | Male | 2 | 0 | 0 | 1 | 2 | 1 |
| Kombai Hound | Male | 10 | 15 | 19 | 16 | 11 | 8 |

| Dog Type | Gender | Age (in Years) | Day 1 | Day 2 | Day 3 | Day 4 | Day 5 |
|---|---|---|---|---|---|---|---|
| Rajapalayam Hound | Female | 1 | 2 | 2 | 1 | 3 | 2 |
| Rajapalayam Hound | Female | 6 | 19 | 14 | 17 | 8 | 11 |
| Rajapalayam Hound | Male | 2 | 4 | 0 | 0 | 2 | 1 |
| Rajapalayam Hound | Male | 8 | 25 | 22 | 12 | 19 | 23 |
| Kombai Hound | Female | 2 | 3 | 1 | 2 | 4 | 3 |
| Kombai Hound | Female | 9 | 17 | 21 | 13 | 19 | 10 |
| Kombai Hound | Male | 2 | 1 | 3 | 1 | 4 | 3 |
| Kombai Hound | Male | 10 | 23 | 17 | 14 | 21 | 18 |

| Dog Type | Gender | Age (in Years) | Day 1 | Day 2 | Day 3 | Day 4 | Day 5 |
|---|---|---|---|---|---|---|---|
| Rajapalayam Hound | Female | 1 | 4 | 4 | 1 | 3 | 2 |
| Rajapalayam Hound | Female | 6 | 8 | 7 | 10 | 11 | 9 |
| Rajapalayam Hound | Male | 2 | 2 | 0 | 3 | 1 | 1 |
| Rajapalayam Hound | Male | 8 | 12 | 4 | 16 | 13 | 10 |
| Kombai Hound | Female | 2 | 6 | 5 | 5 | 2 | 2 |
| Kombai Hound | Female | 9 | 8 | 10 | 17 | 5 | 9 |
| Kombai Hound | Male | 2 | 5 | 7 | 4 | 3 | 3 |
| Kombai Hound | Male | 10 | 10 | 8 | 13 | 9 | 7 |

| Dog Type | Gender | Age (in Years) | Day 1 | Day 2 | Day 3 | Day 4 | Day 5 |
|---|---|---|---|---|---|---|---|
| Rajapalayam Hound | Female | 1 | 11 | 4 | 8 | 14 | 9 |
| Rajapalayam Hound | Female | 6 | 8 | 13 | 0 | 8 | 11 |
| Rajapalayam Hound | Male | 2 | 7 | 11 | 9 | 8 | 6 |
| Rajapalayam Hound | Male | 8 | 5 | 9 | 6 | 0 | 8 |
| Kombai Hound | Female | 2 | 4 | 1 | 3 | 5 | 7 |
| Kombai Hound | Female | 9 | 13 | 0 | 11 | 6 | 8 |
| Kombai Hound | Male | 2 | 6 | 8 | 11 | 9 | 7 |
| Kombai Hound | Male | 10 | 9 | 13 | 7 | 7 | 11 |

| Dog Type | Gender | Age (in Years) | Day 1 | Day 2 | Day 3 | Day 4 | Day 5 |
|---|---|---|---|---|---|---|---|
| Rajapalayam Hound | Female | 1 | 2 | 2 | 1 | 3 | 1 |
| Rajapalayam Hound | Female | 6 | 15 | 13 | 17 | 10 | 9 |
| Rajapalayam Hound | Male | 2 | 2 | 0 | 0 | 1 | 0 |
| Rajapalayam Hound | Male | 8 | 13 | 19 | 17 | 10 | 12 |
| Kombai Hound | Female | 2 | 3 | 0 | 1 | 0 | 1 |
| Kombai Hound | Female | 9 | 15 | 18 | 13 | 14 | 11 |
| Kombai Hound | Male | 2 | 1 | 2 | 0 | 2 | 1 |
| Kombai Hound | Male | 10 | 11 | 19 | 16 | 9 | 12 |


| Dog Type | Gender | Age (in Years) | Alone | Fight | Play | Stranger Walking | Stranger Running | Total |
|---|---|---|---|---|---|---|---|---|
| Rajapalayam Hound | Female | 1 | 48 | 90 | 58 | 80 | 59 | 325 |
| Rajapalayam Hound | Female | 6 | 40 | 88 | 55 | 87 | 55 | 325 |
| Rajapalayam Hound | Male | 2 | 35 | 90 | 70 | 80 | 50 | 325 |
| Rajapalayam Hound | Male | 8 | 42 | 91 | 57 | 95 | 40 | 325 |
| Kombai Hound | Female | 2 | 47 | 87 | 52 | 90 | 49 | 325 |
| Kombai Hound | Female | 9 | 45 | 85 | 48 | 87 | 60 | 325 |
| Kombai Hound | Male | 2 | 41 | 87 | 55 | 85 | 57 | 325 |
| Kombai Hound | Male | 10 | 40 | 90 | 58 | 94 | 43 | 325 |

| Predictions | Rajapalayam Dog (count) | Rajapalayam Dog (%) | Kombai Dog (count) | Kombai Dog (%) |
|---|---|---|---|---|
| True Positive | 345 | 86.25 | 336 | 84 |
| False Positive | 15 | 3.75 | 21 | 5.2 |
| True Negative | 30 | 7.5 | 27 | 6.8 |
| False Negative | 10 | 2.5 | 16 | 4 |
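The four counts for each breed sum to 400 sounds (345 + 15 + 30 + 10 and 336 + 21 + 27 + 16), and each percentage is the count divided by 400. The same counts also yield the usual summary metrics. The sketch below applies the standard formulas; accuracy, precision, recall, and F1 are not reported in this table and are computed here only as an illustration, and the function name is hypothetical.

```python
def confusion_metrics(tp, fp, tn, fn):
    """Standard binary-classification metrics from confusion counts."""
    total = tp + fp + tn + fn
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "accuracy": (tp + tn) / total,
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall),
    }


# (TP, FP, TN, FN) transcribed from the table above; each breed totals 400 sounds.
counts = {"Rajapalayam": (345, 15, 30, 10), "Kombai": (336, 21, 27, 16)}

for breed, c in counts.items():
    m = confusion_metrics(*c)
    print(breed, {name: round(100 * value, 2) for name, value in m.items()})
```

For the Rajapalayam counts, for example, accuracy is (345 + 30) / 400 = 0.9375.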

| States | Action: Stranger Walking | Action: Stranger Running | Action: Ball | Action: Owner | Action: Alone |
|---|---|---|---|---|---|
| 0 | 0 | 0 | 0 | 0 | 0 |
| 25 | 0 | 0 | 0 | 0 | 0 |
| 50 | 0 | 0 | 0 | 0 | 0 |
| 75 | 0 | 0 | 0 | 0 | 0 |
| 100 | 0 | 0 | 0 | 0 | 0 |
| Training | | | | | |
| 0 | 13 | 12 | 8 | 14 | 10 |
| 25 | 17 | 18 | 13 | 18 | 12 |
| 50 | 21 | 18 | 17 | 24 | 13 |
| 75 | 27 | 19 | 18 | 25 | 15 |
| 100 | 34 | 21 | 19 | 28 | 16 |

| Scenario | Rajapalayam Dog Prediction (%) | Kombai Dog Prediction (%) |
|---|---|---|
| Stranger Walk | 87.00 | 88.42 |
| Stranger Run | 87.85 | 85.98 |
| Play with Ball | 81.53 | 82.08 |
| Play with Owner | 83.95 | 85.52 |
| Alone | 83.63 | 85.96 |

| Actual | Actual Sounds | Predicted: Stranger Walk | Predicted: Stranger Run | Predicted: Play with Ball | Predicted: Play with Owner | Predicted: Alone | Prediction (%) |
|---|---|---|---|---|---|---|---|
| Stranger Walk | 200 | 171 | 21 | 3 | 0 | 0 | 97.50 |
| Stranger Run | 220 | 20 | 186 | 8 | 0 | 0 | 97.27 |
| Play with Ball | 140 | 0 | 9 | 108 | 15 | 0 | 94.28 |
| Play with Owner | 160 | 0 | 4 | 23 | 130 | 0 | 98.12 |
| Alone | 120 | 0 | 0 | 10 | 7 | 95 | 93.33 |
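Each Prediction (%) value in this table can be reproduced from the counts as the share of that row's actual sounds that appear anywhere in the five predicted columns; for Stranger Walk, (171 + 21 + 3) / 200 = 97.50%. The sketch below recomputes that column from the transcribed counts and, for comparison, the diagonal share (sounds assigned their own class) of each row. The function name is hypothetical.

```python
# Counts transcribed from the confusion-matrix table (rows = actual class).
labels = ["Stranger Walk", "Stranger Run", "Play with Ball", "Play with Owner", "Alone"]
actual_sounds = [200, 220, 140, 160, 120]
predicted = [
    [171, 21, 3, 0, 0],
    [20, 186, 8, 0, 0],
    [0, 9, 108, 15, 0],
    [0, 4, 23, 130, 0],
    [0, 0, 10, 7, 95],
]


def row_shares(counts, totals):
    """Return (labelled %, diagonal %) for each row of a confusion matrix."""
    shares = []
    for i, (row, total) in enumerate(zip(counts, totals)):
        labelled = 100 * sum(row) / total   # sounds given any of the five labels
        diagonal = 100 * row[i] / total     # sounds given their own class label
        shares.append((labelled, diagonal))
    return shares


for label, (labelled, diagonal) in zip(labels, row_shares(predicted, actual_sounds)):
    print(f"{label:16s} labelled={labelled:6.2f}%  diagonal={diagonal:6.2f}%")
```

The Play with Ball row evaluates to 94.2857%, which the table lists as 94.28, so the table values appear to be truncated rather than rounded to two decimals.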

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mohandas, P.; Anni, J.S.; Hasikin, K.; Velauthapillai, D.; Raj, V.; Murugathas, T.; Azizan, M.M.; Thanasekaran, R. Machine Learning Approach Regarding the Classification and Prediction of Dog Sounds: A Case Study of South Indian Breeds. Appl. Sci. 2022, 12, 10653. https://doi.org/10.3390/app122010653