A Survey on Depth Ambiguity of 3D Human Pose Estimation

Abstract

1. Introduction

2. Status of Research

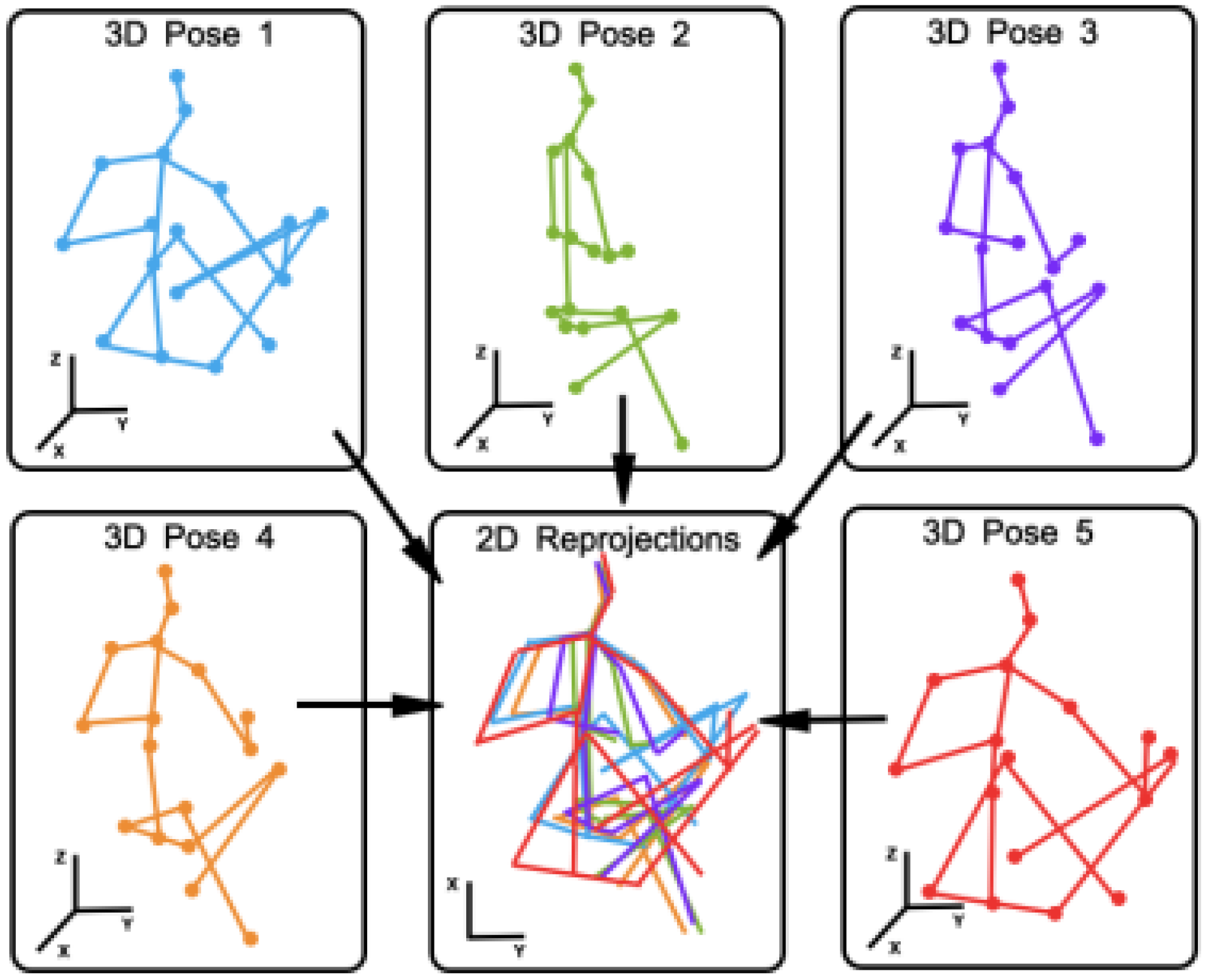

2.1. Camera Parameter Constraints

2.1.1. Method Content

2.1.2. Performance Analysis

2.2. Temporal Consistency Constraints

2.2.1. Method Content

2.2.2. Performance Analysis

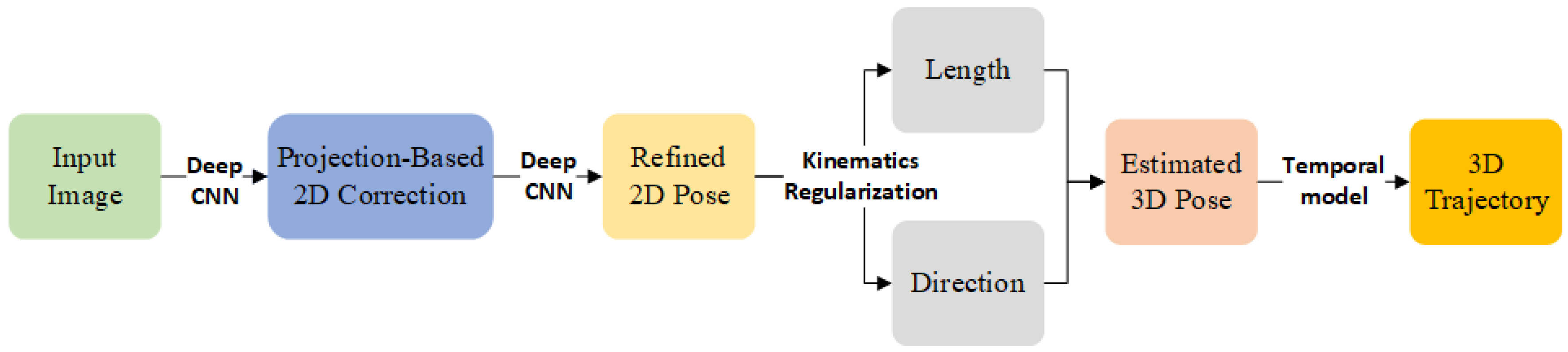

2.3. Kinematic Constraints

2.3.1. Method Content

2.3.2. Performance Analysis

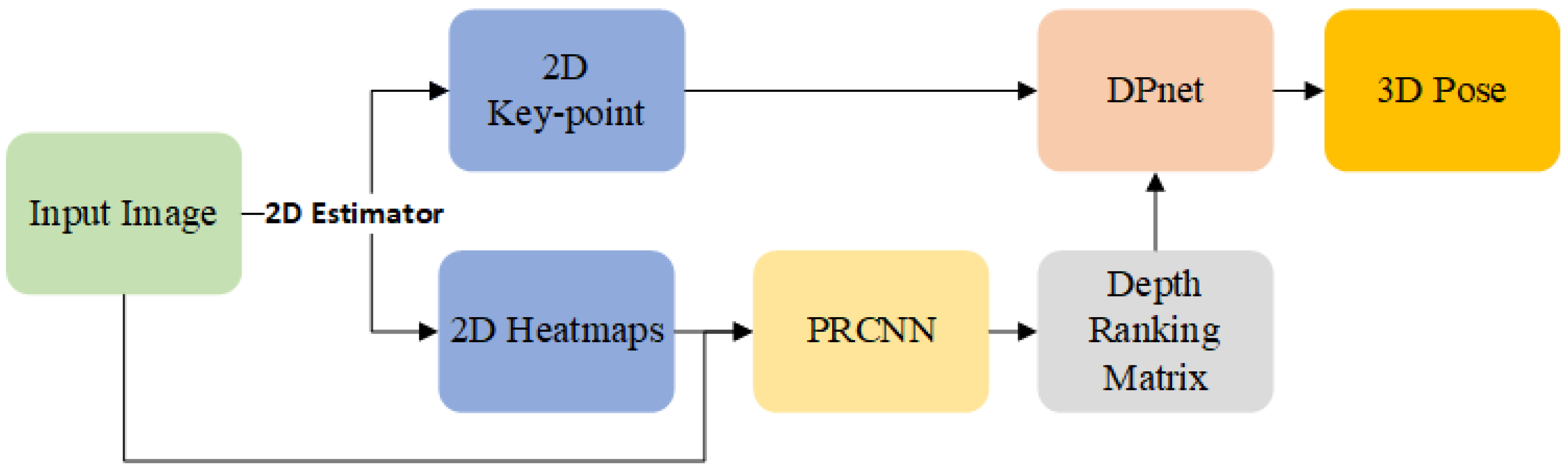

2.4. Image Cues Constraints

2.4.1. Method Content

2.4.2. Performance Analysis

3. Challenges

- Existing methods based on camera parameter constraints generally simplify the imaging model and treat the estimation task directly as coordinate regression, without adequately considering the inherent kinematic structure of the human body, which may lead to invalid results.

- Because they do not have access to the original input image, two-stage methods rely too heavily on the 2D detector and discard the rich spatial information in the image when estimating the 3D pose. Errors from the first stage are amplified in the 3D estimate, and the algorithm is ultimately limited if the 2D pose estimate is not updated together with the 3D pose.

- One-stage methods usually fix the scale of the 3D pose and construct the 3D pose from the 2D pose and depth, so estimation may fail when the subject's height is far from the heights in the training set.

- The inter-frame continuity and stability constraints used by methods based on temporal consistency have a smoothing effect, which may make the estimate for each individual frame inaccurate. The estimated results then suffer from floating, jitter, and sliding problems.

- Some kinematic constraints are simple structural constraints that are commonly treated as auxiliary losses, such as symmetry loss and joint-angle limits. These yield only marginal improvements in the estimation results and have a limited disambiguating effect.

- A monocular image is a two-dimensional representation and carries no depth information, which makes it challenging for image-cue methods to learn depth. In addition, because depth is highly sensitive to camera parameters, translation and rotation make joint depth prediction more difficult.
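The scale and depth issues listed above can be illustrated with a minimal sketch. It assumes an ideal pinhole camera; the focal length, principal point, the three-joint "skeleton", and the helper names `project` and `scale_about_camera` are hypothetical values and names chosen only for illustration, not taken from any surveyed method. Under these assumptions, a 1.7 m subject at 4 m and a 2.125 m subject at 5 m produce the same 2D pose, so a method that fixes the scale cannot tell them apart from one image.

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]
Point2D = Tuple[float, float]


def project(points: List[Point3D], focal: float = 1000.0,
            center: Point2D = (500.0, 500.0)) -> List[Point2D]:
    """Ideal pinhole projection: u = f * X / Z + cx, v = f * Y / Z + cy."""
    cx, cy = center
    return [(focal * x / z + cx, focal * y / z + cy) for x, y, z in points]


def scale_about_camera(points: List[Point3D], s: float) -> List[Point3D]:
    """Scale body size and camera distance by the same factor s."""
    return [(s * x, s * y, s * z) for x, y, z in points]


# Hypothetical head, pelvis, and foot joints in metres (camera coordinates).
skeleton = [(0.0, -0.8, 4.0), (0.1, 0.0, 4.2), (0.0, 0.9, 4.0)]
taller_and_farther = scale_about_camera(skeleton, 1.25)

uv_a = project(skeleton)
uv_b = project(taller_and_farther)

# Largest per-coordinate difference between the two 2D poses (in pixels).
max_diff = max(abs(a - b) for pa, pb in zip(uv_a, uv_b) for a, b in zip(pa, pb))
print(f"max 2D difference: {max_diff:.2e} px")
print(f"head depth: {skeleton[0][2]} m vs {taller_and_farther[0][2]} m")
```

The two 2D poses agree up to floating-point rounding even though the joint depths differ by 25%, which is why additional constraints (camera parameters, temporal context, kinematics, or image cues) are needed to select one hypothesis.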

4. Future Potential Development

- Weakly supervised and unsupervised methods. Traditional deep convolutional neural networks require a large amount of manual annotation. Weakly supervised methods do not need a large number of 3D annotations and rely on 2D annotated data instead, which reduces cost. Unsupervised methods take this trend further.

- Interaction and reconstruction between scene objects and humans. Contact between a human and an object is an essential visual cue for inferring 3D movement. Current works based on a single RGB image generally first identify the human target with a bounding box and then estimate the pose of the cropped body, rarely paying attention to the scene and objects, which contain rich clues. 3D HPE can use interpenetration constraints to limit the intersection between the body and the surrounding 3D scene.

- 3D HPE from multi-view images. Multi-view images can significantly mitigate ambiguity. Typical methods include fusing multi-view 2D heatmaps, enforcing consistency constraints between views, and triangulation.

- Sensor technology. Some works use sensors, such as RGB-D depth cameras, inertial measurement units (IMUs), and radio frequency (RF) devices, to supply depth, joint orientation, and other information [36,37,38]. Compared with depth images, using sensors to capture point clouds can provide more information.
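To make the multi-view item above concrete, the following is a minimal sketch of two-view triangulation for a single joint. It uses the midpoint method (the midpoint of the shortest segment between two viewing rays) rather than any specific method from the surveyed papers. The camera positions, shared intrinsics, identity rotations, pixel coordinates, and the helper names `back_project` and `triangulate_midpoint` are all assumptions made for illustration.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, ...]


def dot(a: Vec3, b: Vec3) -> float:
    return sum(x * y for x, y in zip(a, b))


def back_project(uv: Sequence[float], focal: float,
                 center: Sequence[float]) -> Vec3:
    """Viewing-ray direction through pixel (u, v) for an unrotated pinhole camera."""
    return ((uv[0] - center[0]) / focal, (uv[1] - center[1]) / focal, 1.0)


def triangulate_midpoint(c1: Vec3, d1: Vec3, c2: Vec3, d2: Vec3) -> Vec3:
    """Midpoint of the shortest segment between rays c1 + s*d1 and c2 + t*d2."""
    w = tuple(p - q for p, q in zip(c1, c2))
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = a * c - b * b  # zero only when the two rays are parallel
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; depth cannot be triangulated")
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    p1 = tuple(o + s * k for o, k in zip(c1, d1))
    p2 = tuple(o + t * k for o, k in zip(c2, d2))
    return tuple((m + n) / 2.0 for m, n in zip(p1, p2))


# Assumed setup: two cameras 1 m apart, same intrinsics, both looking along +Z.
FOCAL, CENTER = 1000.0, (500.0, 500.0)
cam1, cam2 = (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)
uv1, uv2 = (575.0, 450.0), (325.0, 450.0)  # hypothetical 2D detections of one joint

joint = triangulate_midpoint(cam1, back_project(uv1, FOCAL, CENTER),
                             cam2, back_project(uv2, FOCAL, CENTER))
print(joint)
```

With these assumed inputs the two rays intersect, and the recovered joint is approximately (0.3, -0.2, 4.0) in metres. With noisy 2D detections the rays generally do not intersect, and the midpoint serves as a simple compromise between them.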

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Liu, Y. Research progress of two-dimensional human pose estimation based on deep learning. Comput. Eng. 2021, 47, 1–16.

- Han, G.J. A survey of two dimension pose estimation. J. Xi’an Univ. Posts Telecommun. 2017, 22, 1–9.

- Gamra, M.B. A review of deep learning techniques for 2D and 3D human pose estimation. Image Vis. Comput. 2021, 114, 104282.

- Wang, J. Deep 3D human pose estimation: A review. Comput. Vis. Image Underst. 2021, 210, 103225–103246.

- Kocabas, M.; Athanasiou, N.; Black, M. VIBE: Video Inference for Human Body Pose and Shape Estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 5252–5262.

- Yang, W.; Ouyang, W.; Wang, X. 3D human pose estimation in the wild by adversarial learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 5255–5264.

- Chen, Y. Monocular human pose estimation: A survey of deep learning-based methods. Comput. Vis. Image Underst. 2020, 192, 102897.

- AID: Pushing the Performance Boundary of Human Pose Estimation with Information Dropping Augmentation. Available online: https://arxiv.org/abs/2008.07139 (accessed on 23 July 2022).

- Ionescu, C. Human3.6M: Large Scale Datasets and Predictive Methods for 3D Human Sensing in Natural Environments. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 1325–1339.

- Pavllo, D.; Feichtenhofer, C.; Grangier, D. 3d human pose estimation in video with temporal convolutions and semi-supervised training. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 7753–7762.

- Heng, W. Calibration and Rapid Optimizing of Imaging Model for a Two-camera Vision System. J. App. Sci. 2002, 20, 225–229.

- Habibie, I.; Xu, W.; Mehta, D.; Pons-Moll, G.; Theobalt, C. In the Wild Human Pose Estimation Using Explicit 2D Features and Intermediate 3D Representations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 10897–10906.

- Li, C.; Lee, G.H. Generating Multiple Hypotheses for 3D Human Pose Estimation with Mixture Density Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 9879–9887.

- Moon, G.; Chang, J.Y.; Lee, K.M. Camera Distance-Aware Top-Down Approach for 3D Multi-Person Pose Estimation From a Single RGB Image. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 10132–10141.

- Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M. Generative adversarial nets. In Proceedings of the 27th International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 2672–2680.

- Wandt, B.; Rosenhahn, J. RepNet: Weakly supervised training of an adversarial reprojection network for 3D human pose estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 7774–7783.

- Hossain, M.; Little, J.J. Exploiting temporal information for 3D pose estimation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 69–86.

- Cai, Y.J.; Ge, L.H.; Liu, J.; Cai, J.F.; Cham, T.J.; Yuan, J.S.; Thalmann, N.M. Exploiting spatial-temporal relationships for 3D pose estimation via graph convolutional networks. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 2272–2281.

- Zhang, J.H. Learning Dynamical Human-Joint Affinity for 3D Pose Estimation in Videos. IEEE Trans. Image Process. 2021, 30, 7914–7925.

- Chen, T.L. Anatomy-aware 3D Human Pose Estimation with Bone-based Pose Decomposition. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 198–209.

- Pose Estimation of a Human Arm Using Kinematic Constraints. Available online: http://www.cvmt.dk/projects/puppet/html/publications/publica-tions.html (accessed on 23 July 2022).

- Liu, G.Y. Video-Based 3D Human Pose Motion Capture. J. Comput.-Aided Des. Comput. Graph. 2006, 18, 82–88.

- Moreno-Noguer, F. 3d human pose estimation from a single image via distance matrix regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2823–2832.

- Lee, K.; Lee, I.; Lee, S. Propagating LSTM: 3D Pose Estimation Based on Joint Interdependency. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 123–141.

- Wang, J.; Huang, S.; Wang, X.; Tao, D. Not All Parts Are Created Equal: 3D Pose Estimation by Modeling Bi-Directional Dependencies of Body Parts. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 7770–7779.

- Ma, X.; Su, J.; Wang, C.; Ci, H.; Wang, Y. Context Modeling in 3D Human Pose Estimation: A Unified Perspective. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 6234–6243.

- Ashish, V.; Noam, S.; Niki, P. Attention is all you need. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 6000–6010.

- Angjoo, M.; Michael, J.B.; David, W.J.; Jitendra, M. End-to-end recovery of human shape and pose. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 7122–7131.

- Loper, M. SMPL: A skinned multi-person linear model. ACM Trans. Graph. 2015, 34, 1–16.

- Xu, J.; Yu, Z.; Ni, B.; Yang, X.; Zhang, W. Deep Kinematics Analysis for Monocular 3D Human Pose Estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 896–905.

- Loper, M. An Image Cues Coding Approach for 3D Human Pose Estimation. ACM Trans. Multimed. Comput. Commun. Appl. 2019, 15, 1–20.

- Pavlakos, G.; Zhou, X.; Daniilidis, K. Ordinal Depth Supervision for 3D Human Pose Estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 7307–7316.

- Monocular 3D Human Pose Estimation by Generation and Ordinal Ranking. Available online: https://arxiv.org/abs/1904.01324 (accessed on 23 July 2022).

- Wang, M.; Chen, X.P.; Liu, W.T. DRPose3D: Depth ranking in 3D human pose estimation. In Proceedings of the International Joint Conference on Artificial Intelligence, Stockholm, Sweden, 13–19 July 2018; pp. 978–984.

- Wu, H.; Xiao, B. 3D Human Pose Estimation via Explicit Compositional Depth Maps. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 12378–12385.

- Henry, P. RGB-D mapping: Using Kinect-style depth cameras for dense 3D modeling of indoor environments. Int. J. Robot. Res. 2013, 31, 647–663.

- Shotton, J.; Fitzgibbon, A.; Cook, M. Real-time human pose recognition in parts from single depth images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Colorado Springs, CO, USA, 20–25 June 2011; pp. 1297–1304.

- Yi, X.Y. TransPose: Real-time 3D Human Translation and Pose Estimation with Six Inertial Sensors. ACM Trans. Graph. 2021, 40, 1–13.

| Methods | Dir. | Disc. | Eat | Greet | Phone | Photo | Pose | Purch. | Sit | SitD. | Smoke | Wait | WalkD. | Walk | WalkT. | Avg |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Habibie et al. [12] | 54 | 65.1 | 58.5 | 62.9 | 67.9 | 75 | 54 | 60.6 | 82.7 | 98.2 | 63.3 | 61.2 | 66.9 | 50 | 56.5 | 65.7 |
| Li et al. [13] | 43.8 | 48.6 | 49.1 | 49.8 | 57.6 | 61.5 | 45.9 | 48.3 | 62 | 73.4 | 54.8 | 50.6 | 56 | 43.4 | 45.5 | 52.7 |
| Moon et al. [14] | 31 | 30.6 | 39.9 | 35.5 | 34.8 | 37.6 | 30.2 | 32.1 | 35 | 43.8 | 35.7 | 30.1 | 35.7 | 24.6 | 29.3 | 34 |
| Wandt et al. [16] | 33.6 | 38.8 | 32.6 | 37.5 | 36 | 44.1 | 37.8 | 34.9 | 39.2 | 52 | 37.5 | 39.8 | 40.3 | 34.1 | 34.9 | 38.2 |

| Methods | Dir. | Disc. | Eat | Greet | Phone | Photo | Pose | Purch. | Sit | SitD. | Smoke | Wait | WalkD. | Walk | WalkT. | Avg |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Hossain et al. [17] | 48.4 | 50.7 | 57.2 | 55.2 | 63.1 | 72.6 | 53.0 | 51.7 | 66.1 | 80.9 | 59.0 | 57.3 | 62.4 | 46.6 | 49.6 | 58.3 |
| Pavllo et al. [10], single-frame | 47.1 | 50.6 | 49 | 51.8 | 53.6 | 61.4 | 49.4 | 47.4 | 59.3 | 67.4 | 52.4 | 49.5 | 55.3 | 39.5 | 42.7 | 51.8 |
| Pavllo et al. [10], 243-frames | 45.2 | 46.7 | 43.3 | 45.6 | 48.1 | 55.1 | 44.6 | 44.3 | 57.3 | 65.8 | 47.1 | 44 | 49 | 32.8 | 33.9 | 46.8 |
| Cai et al. [18] | 44.6 | 47.4 | 45.6 | 48.8 | 50.8 | 59.0 | 47.2 | 43.9 | 57.9 | 61.9 | 49.7 | 46.6 | 51.3 | 37.1 | 39.4 | 48.8 |
| Zhang et al. [19] | 28.5 | 33.5 | 28.1 | 28.9 | 32.6 | 35.5 | 33.3 | 30 | 37.4 | 39.9 | 31.4 | 30.2 | 29.5 | 23.9 | 25.5 | 31.2 |
| Chen et al. [20] | 41.4 | 43.5 | 40.1 | 42.9 | 46.6 | 51.9 | 41.7 | 42.3 | 53.9 | 60.2 | 45.4 | 41.7 | 46 | 31.5 | 32.7 | 44.1 |

| Methods | Dir. | Disc. | Eat | Greet | Phone | Photo | Pose | Purch. | Sit | SitD. | Smoke | Wait | WalkD. | Walk | WalkT. | Avg |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Moreno et al. [23] | 69.5 | 80.2 | 78.2 | 87.0 | 100.8 | 102.7 | 76.0 | 69.7 | 104.7 | 113.9 | 89.7 | 98.5 | 82.4 | 79.2 | 77.2 | 87.3 |
| Lee et al. [24] | 43.8 | 51.7 | 48.8 | 53.1 | 52.2 | 74.9 | 52.7 | 44.6 | 56.9 | 74.3 | 56.7 | 66.4 | 68.4 | 47.5 | 45.6 | 55.8 |
| Wang et al. [25] | 44.7 | 48.9 | 47 | 49 | 56.4 | 67.7 | 48.7 | 47 | 63 | 78.1 | 51.1 | 50.1 | 54.5 | 40.1 | 43 | 52.6 |
| Wang et al. [26] | 36.3 | 42.8 | 39.5 | 40 | 43.9 | 48.8 | 36.7 | 44 | 51 | 63.1 | 44.3 | 40.6 | 44.4 | 34.9 | 36.7 | 43.4 |
| Xu et al. [30] | 37.4 | 43.5 | 42.7 | 42.7 | 46.6 | 59.7 | 41.3 | 45.1 | 52.7 | 60.2 | 45.8 | 43.1 | 47.7 | 33.7 | 37.1 | 45.6 |

| Methods | Dir. | Disc. | Eat | Greet | Phone | Photo | Pose | Purch. | Sit | SitD. | Smoke | Wait | WalkD. | Walk | WalkT. | Avg |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Xing et al. [31] | 60.6 | 54.1 | 60.0 | 59.6 | 60.7 | 78.8 | 56.2 | 52.6 | 58.0 | 82.9 | 66.7 | 62.0 | 51.5 | 60.9 | 42.8 | 60.1 |
| Pavlakos et al. [32] | 48.5 | 54.4 | 54.4 | 52 | 59.4 | 65.3 | 49.9 | 52.9 | 65.8 | 71.1 | 56.6 | 52.9 | 60.9 | 44.7 | 47.8 | 56.2 |
| Sharma et al. [33] | 48.6 | 54.5 | 54.2 | 55.7 | 62.6 | 72 | 50.5 | 54.3 | 70 | 78.3 | 58.1 | 55.4 | 61.4 | 45.2 | 49.7 | 58 |
| Wang et al. [34] | 49.2 | 55.5 | 53.6 | 53.4 | 63.8 | 67.7 | 50.2 | 51.9 | 70.3 | 81.5 | 57.7 | 51.5 | 58.6 | 44.6 | 47.2 | 57.8 |
| Wu et al. [35] | 34.9 | 40.8 | 37.5 | 47.2 | 41.5 | 46.6 | 35.9 | 39.5 | 52.6 | 72.5 | 42.3 | 45.8 | 42 | 31.6 | 33.8 | 43.2 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, S.; Wang, C.; Dong, W.; Fan, B. A Survey on Depth Ambiguity of 3D Human Pose Estimation. Appl. Sci. 2022, 12, 10591. https://doi.org/10.3390/app122010591
