1. Introduction

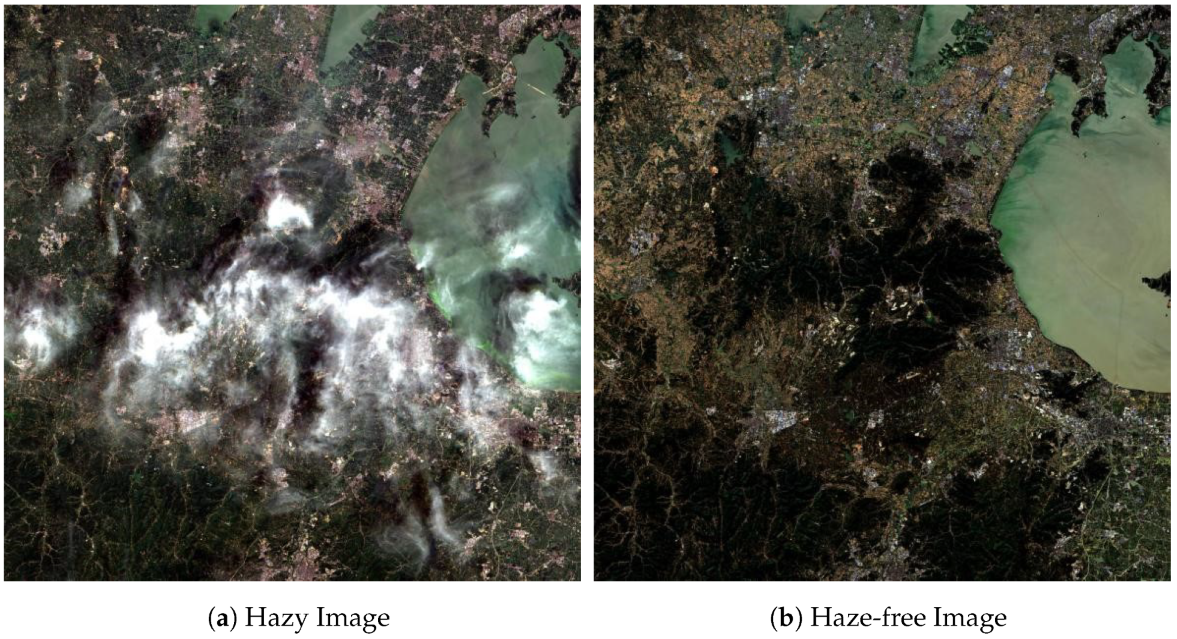

In recent years, the quality and quantity of satellite data have increased tremendously. However, haze remains a common problem in optical remote sensing data. Haze can severely reduce the transmittance in all optical spectral bands, which alters the reflected signal and hinders observation of the surface beneath the haze. This in turn causes substantial data loss in both the spatial and temporal domains. Haze is a serious obstacle for applications requiring temporal consistency (such as agricultural monitoring) and for applications requiring observation of a scene at a specific time (such as disaster monitoring). Therefore, effective recovery from haze will greatly increase the usability of remote sensing data.

Early studies on dehazing remote sensing images use a variety of methods to eliminate the influence of haze [1,2,3,4]. They use multi-source or multi-temporal images of the same area as auxiliary data and exploit the complementary relationship between images through image fusion, pixel replacement, etc. These methods have achieved good results. However, the need to obtain multiple sets of data from the same area leads to poor applicability; for remote sensing data with a long collection interval in particular, usable auxiliary data are even more difficult to obtain. Therefore, single-image dehazing has attracted increasing attention. Some studies on single-image dehazing use image enhancement methods, including processing the histogram of the image [5] and enhancing the contrast [6] and saturation [7] of the image. Additionally, some dehazing methods are based on homomorphic filtering [8] and the retinex color constancy theory [9].

Image enhancement methods do not model the hazy imaging mechanism, which can lead to a certain degree of distortion in the restored image. Researchers have therefore built image dehazing methods on the Atmospheric Scattering Model (ASM). The most popular ASM was proposed by McCartney and further improved by Narasimhan [10] and Nayar [6]. The model can usually be written as in Formula (1):

$$I(x) = J(x)\,t(x) + A\,\bigl(1 - t(x)\bigr) \tag{1}$$

where $I(x)$ is the image disturbed by haze; $J(x)$ is the haze-free image that needs to be restored; $t(x)$ is the transmittance of light passing through the atmospheric medium; $x$ represents the image pixel; and $A$ represents a global constant, the atmospheric light. To obtain the haze-free image $J(x)$, we first need to obtain the transmittance $t(x)$ and the global atmospheric light $A$. However, estimating the transmittance from hazy images requires prior information. At present, studies on prior information are mainly based on statistical properties of hazy images, such as the contrast prior [11], the dark channel prior (DCP) [12], the color attenuation prior [13], etc. However, these priors are likely to become less applicable in images of different scenes, which degrades the dehazing results.
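To make the role of the transmittance and the atmospheric light concrete, the following is a minimal NumPy sketch of the atmospheric scattering model, I(x) = J(x) t(x) + A (1 - t(x)), and its inversion. It also includes the commonly cited form of the dark channel prior estimate of the transmittance, t(x) = 1 - ω · dark(I / A). The function names, the lower bound `t_min`, the weight `omega`, the patch size, and the random test image are illustrative assumptions, not values taken from this paper.

```python
import numpy as np

def apply_haze(J, t, A):
    """Forward model: I(x) = J(x) t(x) + A (1 - t(x))."""
    t = t[..., None]  # broadcast the per-pixel transmittance over channels
    return J * t + A * (1.0 - t)

def remove_haze(I, t, A, t_min=0.1):
    """Inverse model: J(x) = (I(x) - A) / max(t(x), t_min) + A.

    t_min (assumed value) keeps the division stable where haze is dense.
    """
    t = np.maximum(t, t_min)[..., None]
    return (I - A) / t + A

def dark_channel(img, patch=3):
    """Minimum over channels, then over a patch x patch neighbourhood."""
    min_c = img.min(axis=2)
    r = patch // 2
    padded = np.pad(min_c, r, mode="edge")
    h, w = min_c.shape
    out = np.full((h, w), np.inf)
    for dy in range(patch):
        for dx in range(patch):
            out = np.minimum(out, padded[dy:dy + h, dx:dx + w])
    return out

def estimate_transmission_dcp(I, A, omega=0.95, patch=3):
    """Dark channel prior estimate: t(x) = 1 - omega * dark(I / A)."""
    return 1.0 - omega * dark_channel(I / A, patch)

# Synthetic round trip on random data (illustration only).
rng = np.random.default_rng(0)
J = rng.uniform(0.0, 1.0, size=(8, 8, 3))     # haze-free image
t_true = rng.uniform(0.3, 1.0, size=(8, 8))   # per-pixel transmittance
A = np.array([0.9, 0.9, 0.9])                 # global atmospheric light

I = apply_haze(J, t_true, A)
J_rec = remove_haze(I, t_true, A)             # exact when t and A are known
t_est = estimate_transmission_dcp(I, A)       # prior-based estimate of t
```

With the true `t_true` and `A`, the inversion recovers `J` exactly, which is why the quality of prior-based dehazing depends almost entirely on how well the transmittance and atmospheric light are estimated.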

The development of deep learning, in particular the convolutional neural network (CNN), brings new ideas to dehazing research. Some earlier studies use neural networks in place of prior information to estimate the parameters of Formula (1) [14,15,16] and then obtain the dehazed image. Since the real transmittance of a hazy image cannot be obtained, the training data have to be generated with simulated parameters, which can reduce the accuracy of the estimated transmittance. Meanwhile, the transmittance model is a simplified expression of hazy imaging, so the feature extraction capability of neural networks cannot be fully utilized.

Some research uses end-to-end networks to directly learn the mapping from hazy images to haze-free images and generate dehazed images [17,18,19,20]. This research has achieved prominent dehazing effects. However, there are limitations. First, the datasets used by these dehazing models are usually only lightly disturbed by haze, and the haze is relatively uniformly distributed. In remote sensing images, the distribution of haze is often uneven and thin clouds are often present, so the images are more heavily disturbed than those studied in that research. Second, remote sensing images usually have more channels than the three (RGB) of ordinary natural images. For example, the images obtained by the Operational Land Imager (OLI) of Landsat 8 have nine bands, and the images acquired by the Multispectral Imager (MSI) of Sentinel-2 have 13 bands. The bands beyond RGB are also affected by haze.

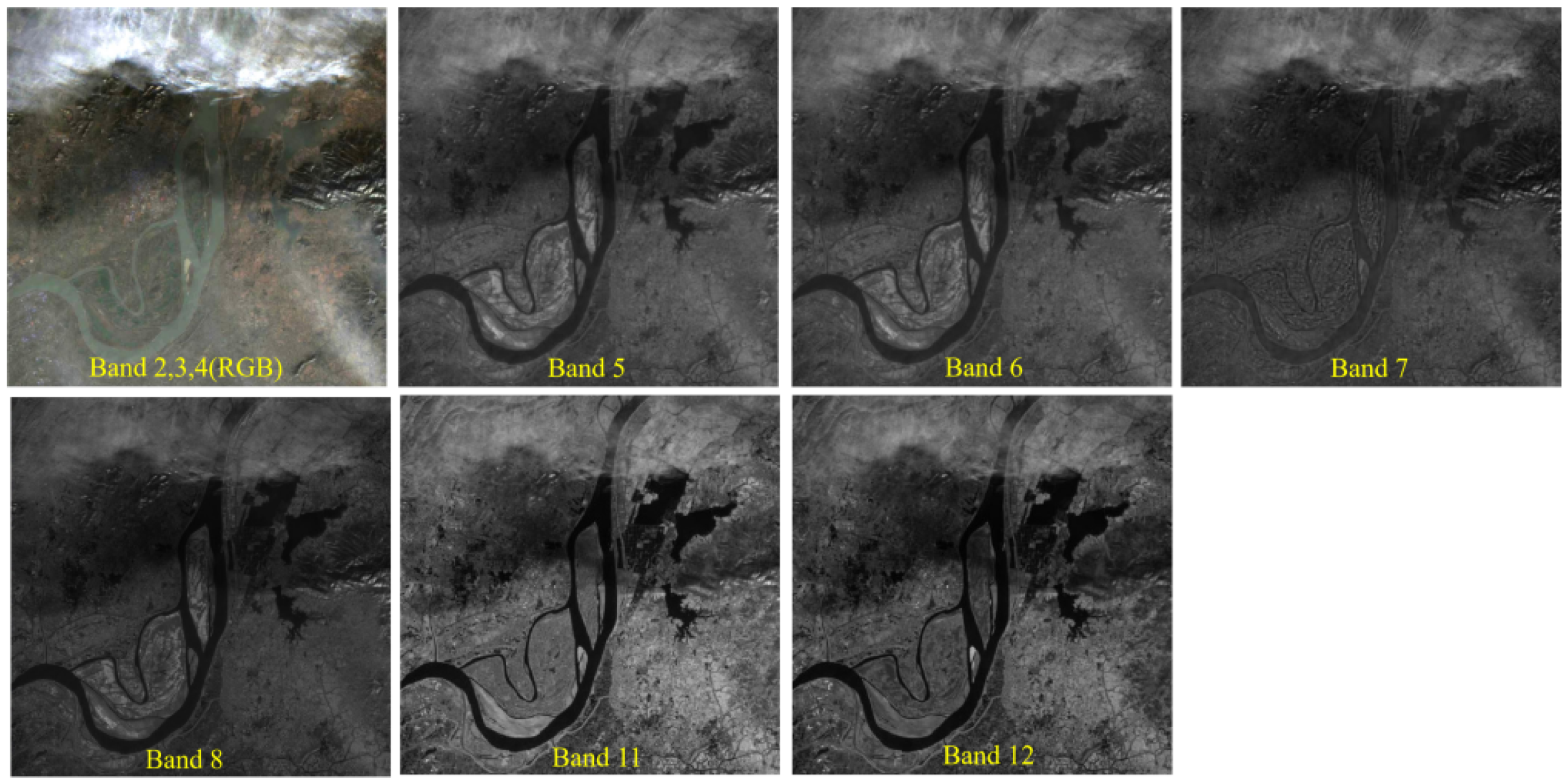

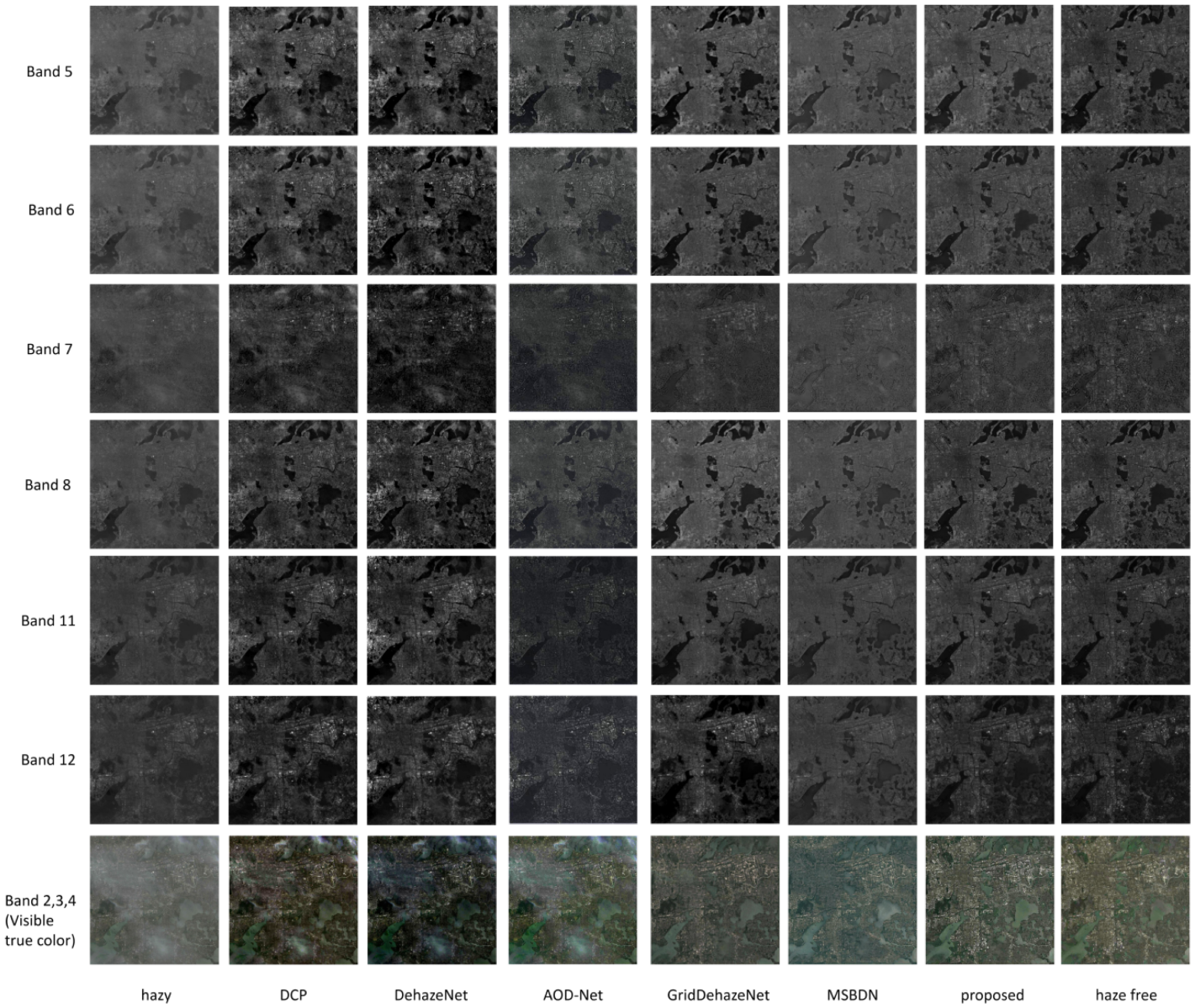

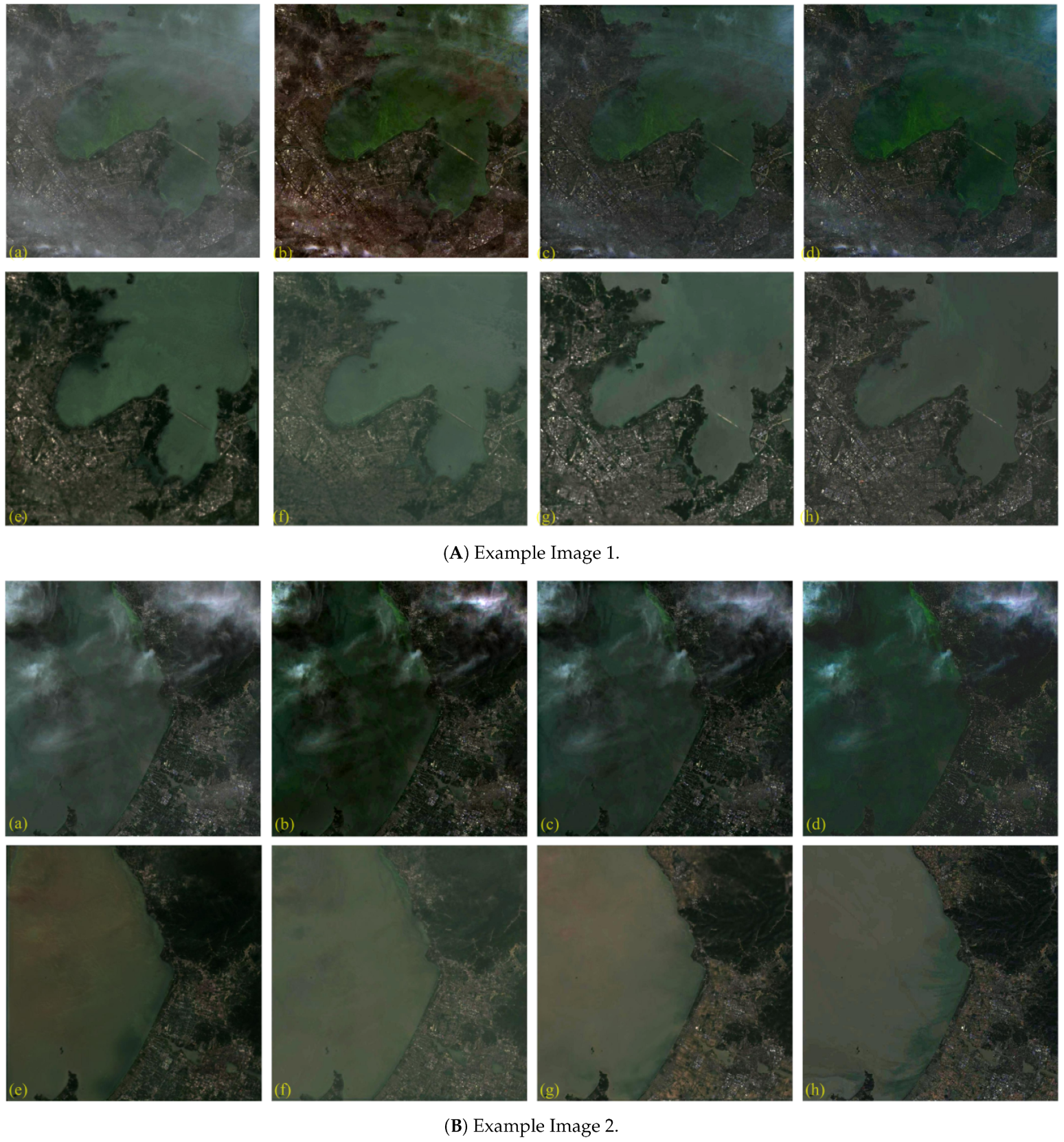

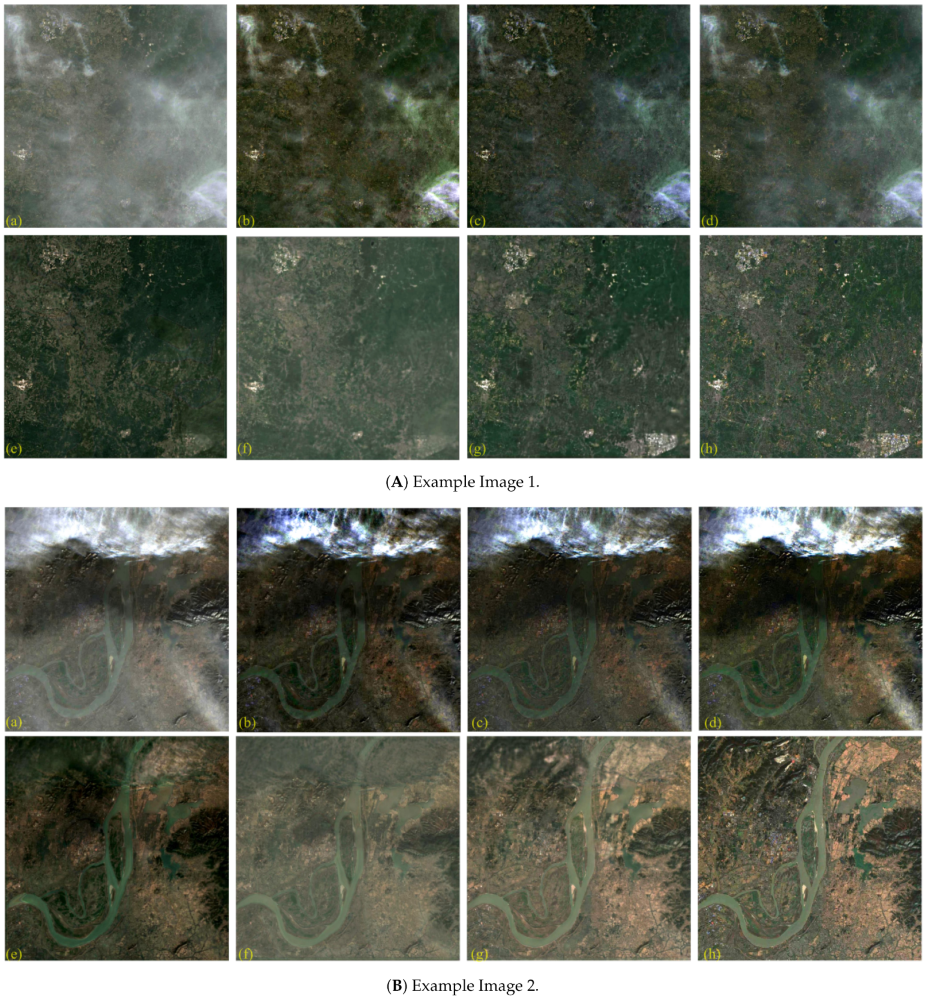

Figure 1 shows an example of an image captured by Sentinel-2. It can be observed that the infrared bands, such as Band11 and Band12, have stronger penetrating power and are less impacted by haze.

Most previous dehazing methods cannot effectively remove non-uniformly distributed haze, nor can they deal with the impact of haze on the bands beyond RGB. Inspired by the dehazing of natural images using convolutional neural networks (CNNs), some researchers apply CNNs to the dehazing of remote sensing images [21,22,23,24]. Meanwhile, the infrared bands in multispectral remote sensing images and the microwave bands of Synthetic Aperture Radar (SAR) penetrate haze more easily than the visible bands, so they better preserve ground information in hazy areas. Therefore, some research uses infrared band images and SAR images as auxiliary information, which is used as input for CNNs to obtain dehazing models [25,26,27]. These methods can better handle the non-uniform distribution of haze. However, most of them focus on the RGB bands or a few near-infrared bands instead of the more abundant infrared bands. Furthermore, most of the training data are synthetic hazy images, which can differ from actual hazy images that are considerably more complex.

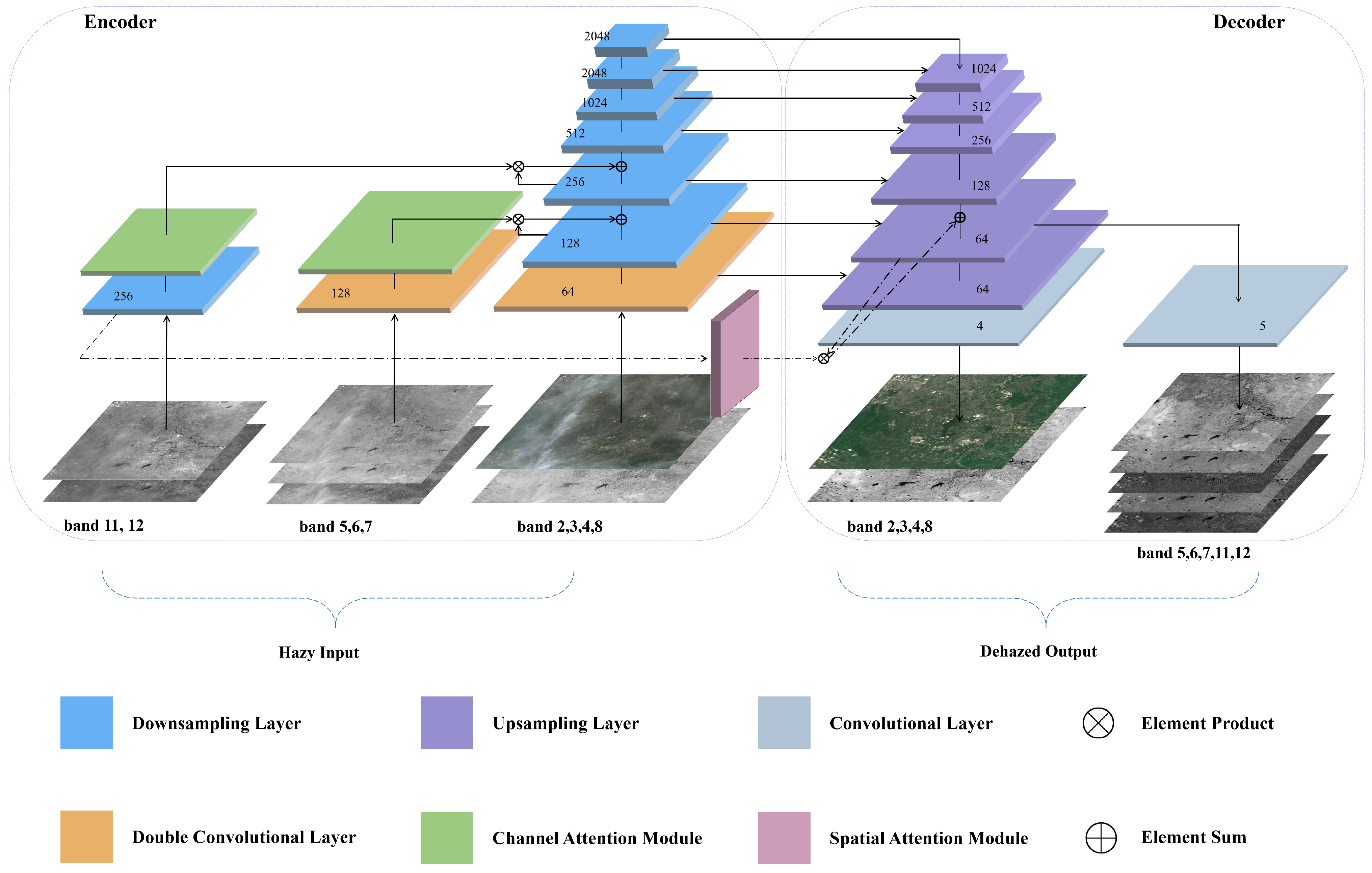

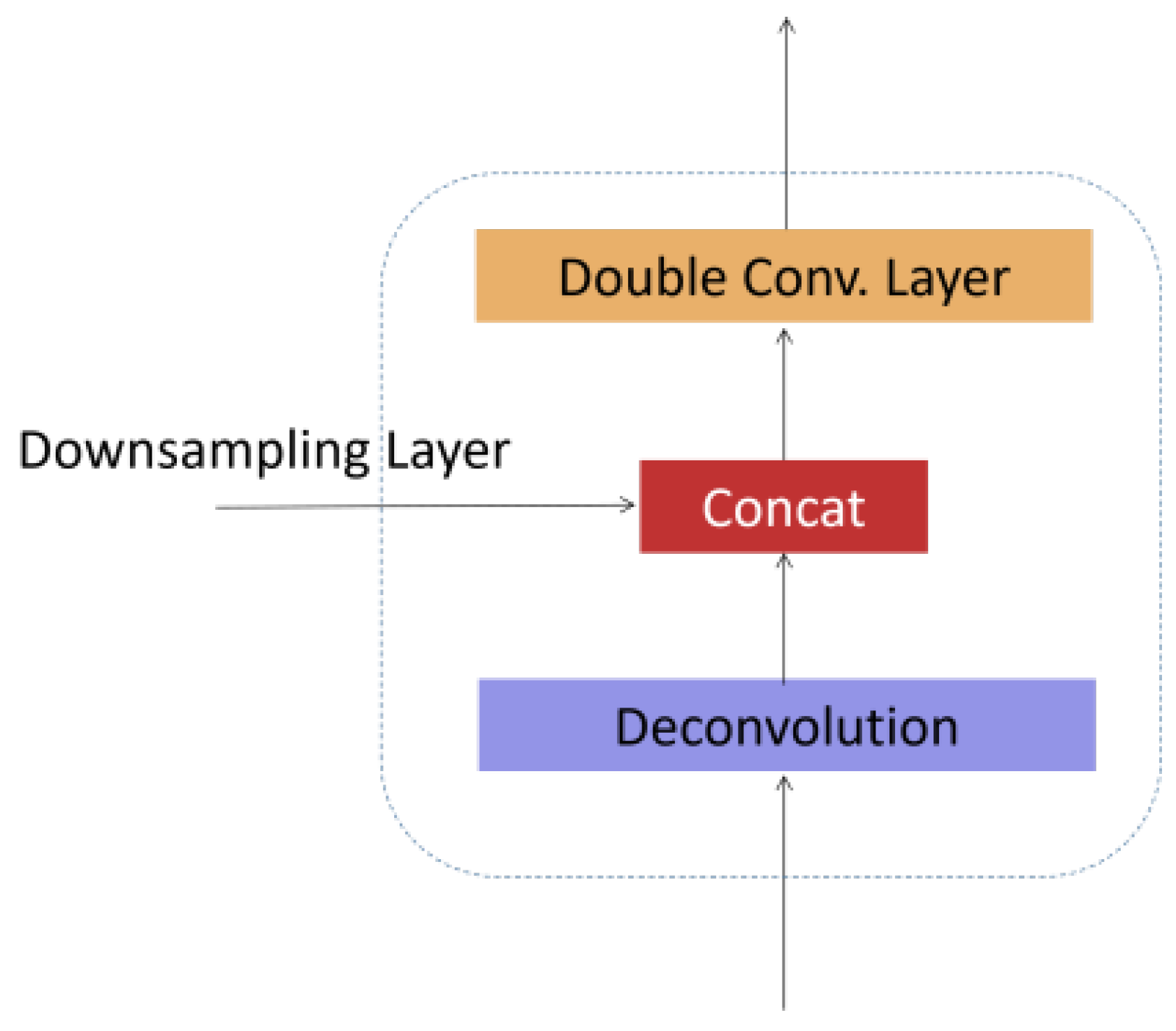

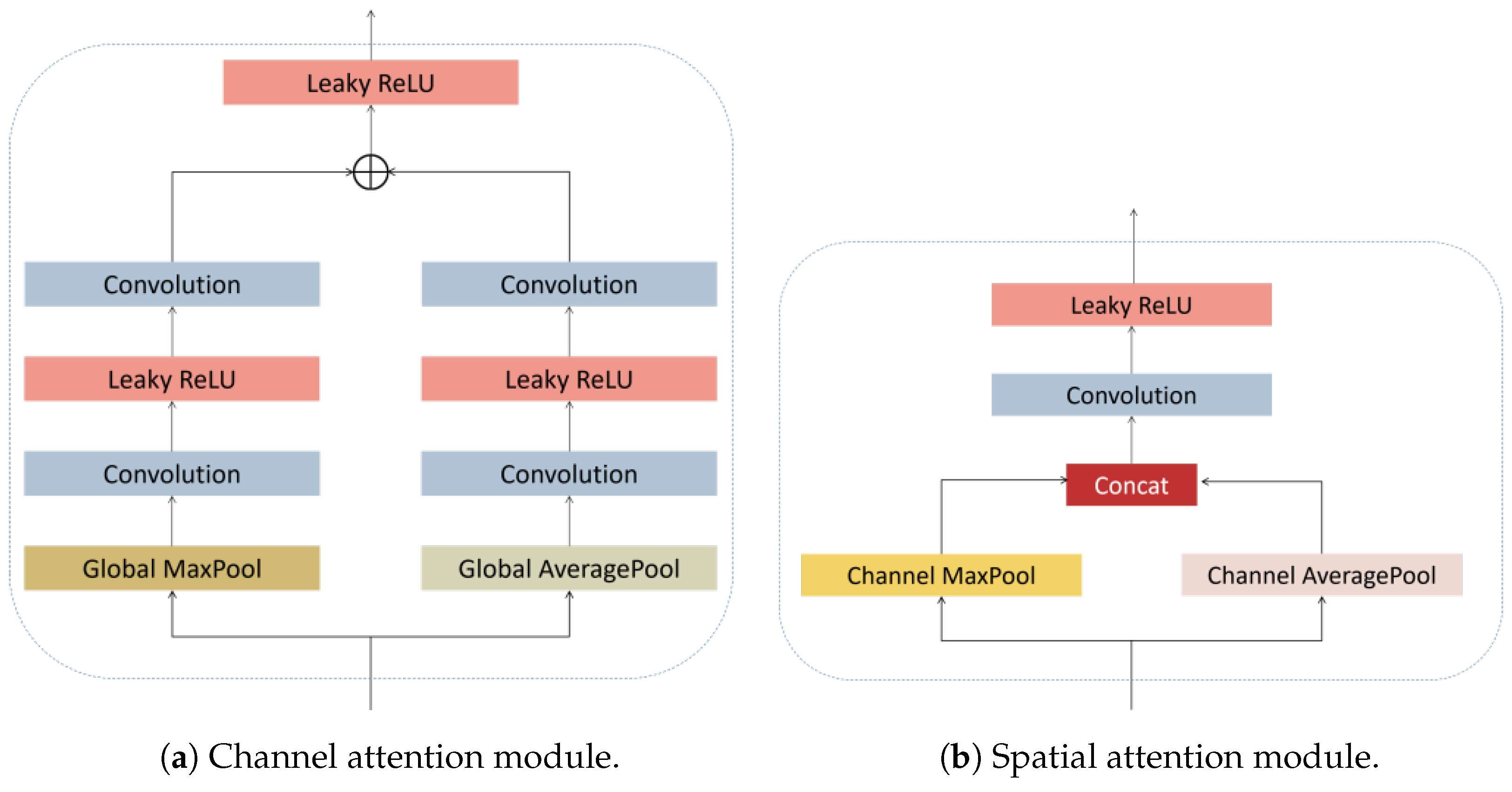

To address these issues, we propose a multi-input attention network for the dehazing of a single multispectral remote sensing image. Since different bands in multispectral images have different features, we exploit the strong penetration capability of a richer set of infrared bands and divide the bands into three groups for feature extraction. The proposed network has an encoder–decoder structure and uses head-to-tail connections and a multi-scale output structure, similar to the feature pyramid network. This structure enables the dehazing model to effectively remove haze while maintaining ground details, and it can directly process bands of different resolutions. Furthermore, improved channel attention and spatial attention structures are added to extract features from the different inputs, which improves the efficiency and adaptability of training. In this research, we use pairs of real hazy and haze-free multispectral remote sensing images as datasets. Our dehazing model achieves very good results in restoring a variety of cloud-contaminated multispectral images.
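As a small illustration of why a multi-input design is natural for Sentinel-2, the sketch below groups the MSI bands by their native ground resolution (10 m, 20 m and 60 m). The resolution table reflects the published Sentinel-2 MSI band specification. The helper name `group_by_resolution` is hypothetical, and grouping by resolution is only an assumption made for illustration: this section does not state which nine bands the network uses or how its three groups are formed.

```python
from collections import defaultdict

# Native ground resolution (metres) of the 13 Sentinel-2 MSI bands.
S2_RESOLUTION_M = {
    "B01": 60, "B02": 10, "B03": 10, "B04": 10,
    "B05": 20, "B06": 20, "B07": 20, "B08": 10, "B8A": 20,
    "B09": 60, "B10": 60, "B11": 20, "B12": 20,
}

def group_by_resolution(bands):
    """Group band names by native resolution (hypothetical helper).

    Returns a dict mapping resolution in metres to a list of band names,
    ordered from the finest to the coarsest resolution.
    """
    groups = defaultdict(list)
    for band in bands:
        groups[S2_RESOLUTION_M[band]].append(band)
    return dict(sorted(groups.items()))

groups = group_by_resolution(S2_RESOLUTION_M)
for resolution, names in groups.items():
    print(f"{resolution} m: {names}")
```

Feeding each group to its own input branch at its native pixel grid is one way to avoid resampling all bands to a common resolution before training.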

The main contributions of this study are as follows:

We propose a multi-input attention network to dehaze multispectral remote sensing images. This method does not require upsampling/downsampling on the training data. It can dehaze the images captured by Sentinel-2 with different resolutions in nine bands, effectively avoiding information loss due to upsampling/downsampling. To obtain the best recovery effect, the visible light band and the features of the infrared band are fused. It takes the advantage of the strong penetration capability of the infrared band.

We build an end-to-end dehazing network with an encoder–decoder structure, which directly obtains haze-free images from learning hazy images. To improve training efficiency, the structure of weighted multiplication and residual connection between different input lines are used to adjust the feature extraction.

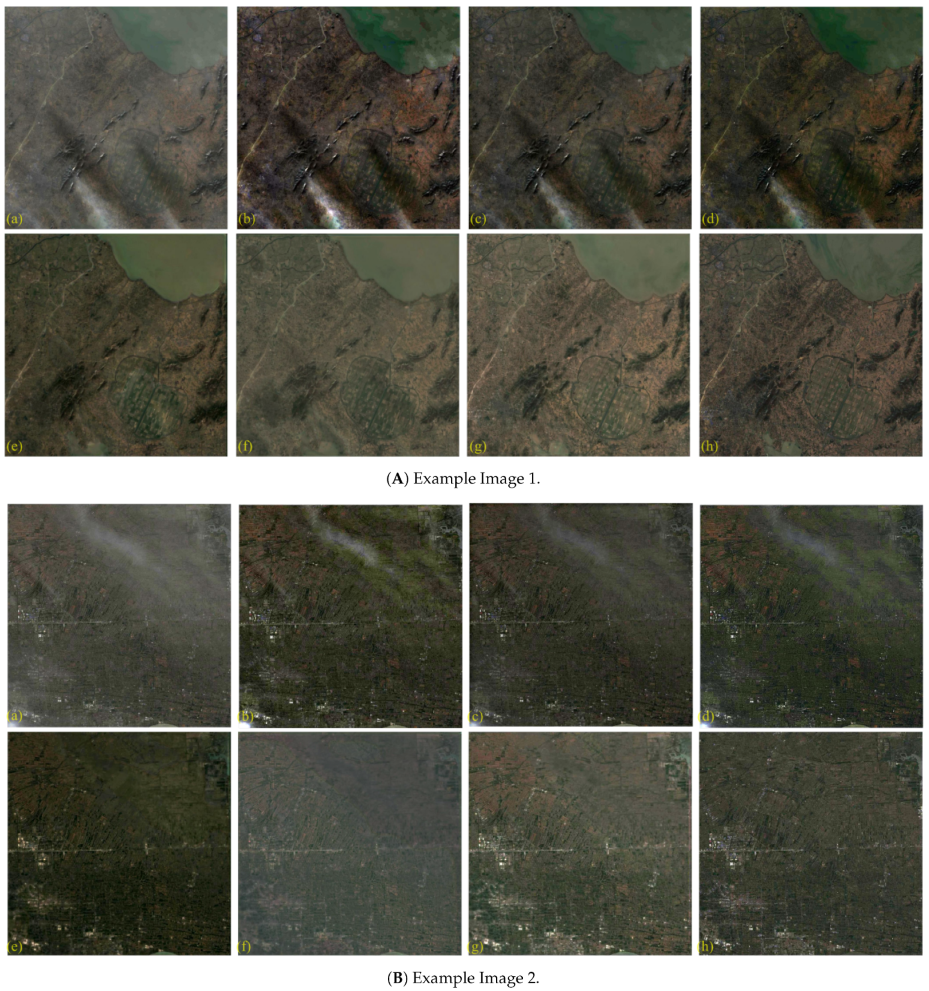

We use skip connections and a multi-layer output structure in the network, which can produce multi-spectral dehazing images with different resolutions. Connecting the shallow part with the tail of the network preserves the ground details and allows the network to fully extract deep features. Meanwhile, adding an improved attention module to the connection part further improves feature extraction. Finally, the network can effectively remove the disturbances, including clouds and cloud shadows under non-uniform distribution.

5. Conclusions

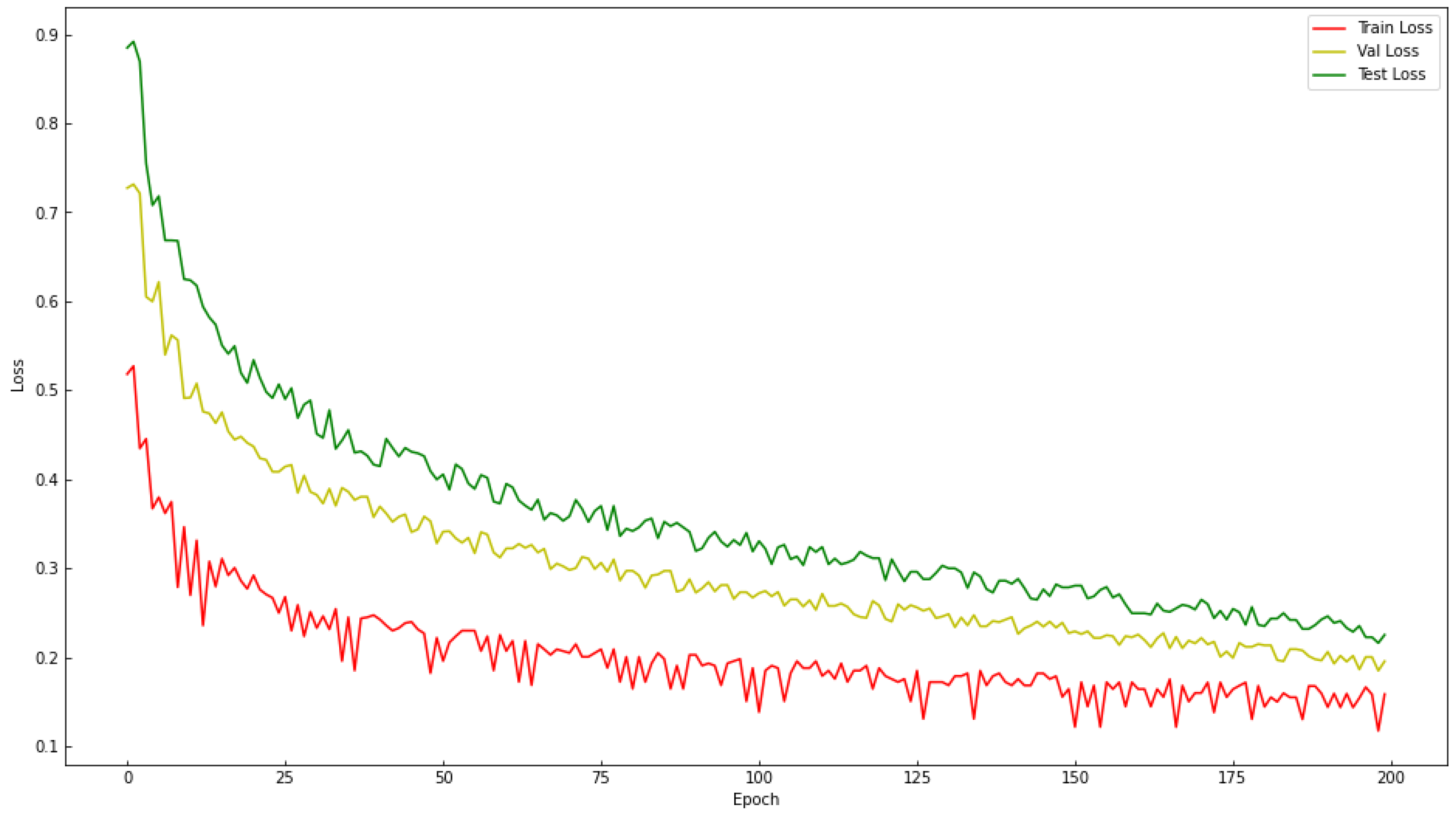

Traditional dehazing methods rely on prior features and are not very versatile, which makes them poorly suited to remote sensing images with widespread non-uniform haze. In recent years, deep learning methods have been applied for automatic feature extraction. However, the structure of hazy remote sensing images is relatively complex, and it is difficult for general neural networks to extract features from them effectively. Meanwhile, there are very few network models targeting multi-band remote sensing images. In this research, we propose a multi-input dehazing network for multispectral remote sensing images, which effectively utilizes the ability of the infrared bands to penetrate haze. We use global skip connections and attention modules to achieve effective feature extraction while maintaining ground details. Finally, we designed experiments to test the performance of the proposed method on multispectral images captured by Sentinel-2 with different degrees of haze. Our method can effectively restore the images. It outperforms the traditional dark channel method and several neural network methods, such as DehazeNet, AOD-Net, MSBDN and GridDehazeNet, in terms of haze residue and quantitative evaluation metrics.

This research also has some limitations. First, the training dataset is not categorized by type of haze, which could limit the effectiveness of the proposed model. Second, even though ground details are well maintained in the restored images, there is still some loss compared to haze-free images. In future work, we will formulate an indicator that describes the degree of the haze effect and use it to classify the images in the training dataset. We will also improve the model by drawing on methods from super-resolution research that effectively enhance detail resolution.