This section describes the experimental environment, the datasets, and the metrics used to evaluate the models we present.

4.1. Experimental Setup

To validate the effectiveness of the proposed CAT-IADEF, we have set up 5 experiments as follows:

- (1) Model Performance Comparison. Compare the performance of our method with that of the baseline methods. The experimental results can be found in the “Anomaly detection” part in Section 4.4.

- (2) Anomaly Explanation Results. Report the anomaly explanation results of CAT-IADEF. The experimental results can be found in the “Anomaly explanation” part in Section 4.4.

- (3) Validation of Anomaly Explanation Framework. Validate the framework on different models. The experimental results can be found in the “Anomaly explanation” part in Section 4.4.

- (4) Ablation Analysis. Observe the effect of model components on model performance. The experimental results are shown in Section 4.5.

- (5) Sensitivity Analysis of Parameters. Observe the effect of different parameters on model performance. The experimental results are shown in Section 4.6.

Experimental environment: (1) CPU: Intel(R) Core(TM) i7-7500U CPU @ 2.70 GHz; (2) RAM: 8 GB; (3) Python version: 3.7.11; and (4) PyTorch version: 1.6.0.

We selected the following methods for the performance comparison experiments: PCA [6] based on dimensionality reduction, LOF [9] based on density, COPOD [8] based on statistics and machine learning, and OCSVM [11] based on classification; these four are relatively classic methods. MAD_GAN [22], OmniAnomaly [25], USAD [4], and TranAD [28] are recent deep learning methods with strong performance.

4.2. Public Datasets

We use four public datasets, including the Secure Water Treatment (SWaT) dataset from Singapore; all selected datasets are detailed below and in Table 1. For each dataset used, we selected multiple data subsets on which we trained and evaluated the models in our experiments.

SMAP. The Soil Moisture Active Passive dataset comprises soil remote sensing data collected by satellite [34]. Satellite-derived active and passive level 3 soil moisture observations were integrated into a modified two-layer Palmer model.

MSL. Similar to the SMAP dataset, it consists of data collected by the Mars Science Laboratory rover [34].

SWaT. The Secure Water Treatment dataset was collected from a real-world industrial water treatment plant during the production of filtered water. It contains data from 7 days of regular operation and 4 days of abnormal operation [35].

SKAB. The Skoltech Anomaly Benchmark is designed for evaluating anomaly detection algorithms [36]. The benchmark currently includes over 30 datasets and Python modules for evaluating algorithms. Each dataset represents a multivariate time series collected from sensors installed on the testbed.

Table 1 lists, for each dataset, the number of instances, the training/testing ratio, the number of features, and the proportion of anomalies. The four open datasets are commonly used in anomaly detection; their training/testing partitions, feature counts, and anomaly ratios all differ. Using datasets that vary in these respects lets us validate both the effectiveness and the robustness of our approach.

4.4. Experimental Results

Anomaly detection. Deep learning methods are generally considered superior to classical machine learning methods, although classical methods may perform better on some specific datasets. To show the overall performance of CAT-IADEF, we therefore compare it with four classical methods and four deep learning methods. To ensure a fair comparison, we add dynamic threshold adjustment to all methods, and each experiment is run 5 times, with the average value reported.

Table 2 shows the accuracy, precision, recall, and F1 scores of CAT-IADEF and the other compared models on seven datasets.

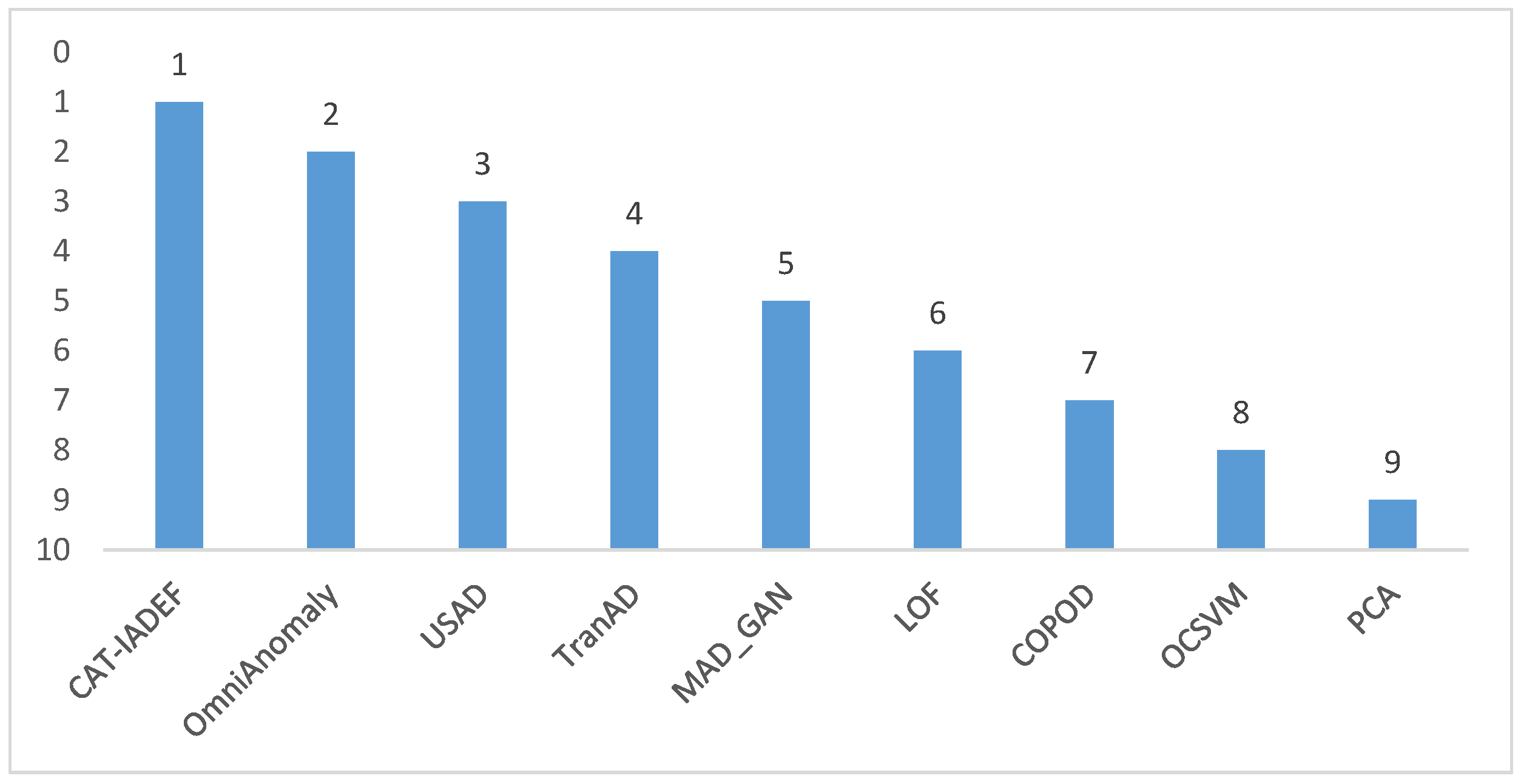
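As a minimal sketch of how the four reported metrics and the averaging over repeated runs could be computed, assuming binary point-wise labels (1 = anomaly) are already available after thresholding. The function names `detection_metrics` and `average_over_runs` are hypothetical and are not taken from the CAT-IADEF implementation.

```python
from statistics import mean


def detection_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for binary labels (1 = anomaly)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)

    accuracy = (tp + tn) / len(pairs)
    # Guard against empty denominators (no predicted or no true anomalies).
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


def average_over_runs(runs):
    """Average each metric over repeated runs.

    `runs` is a list of (y_true, y_pred) pairs, one per run.
    """
    per_run = [detection_metrics(t, p) for t, p in runs]
    return {name: mean(r[name] for r in per_run) for name in per_run[0]}
```

Passing a list of five `(y_true, y_pred)` pairs to `average_over_runs` would mirror the five repetitions described above.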

It can be seen from Table 2 that CAT-IADEF achieved the best F1 values on the data subsets T4 of MSL, Valve1-4 and Valve1-14 of SKAB, and D15 of MSL; these values were, respectively, 0.747, 0.929, 0.987, and 0.993. On the P1 subset of SMAP, OmniAnomaly’s method achieved the best F1 value of 0.924, and CAT-IADEF’s F1 value of 0.923 ranked second. On the data subset of SWaT, the TranAD method achieved the best F1 value of 0.814, and CAT-IADEF’s F1 value of 0.811 ranked fourth. On the data subset T1 of SMAP, the LOF method performed the best, with an F1 value of 0.971, and the F1 value of CAT-IADEF was 0.960, ranking second. We rank the F1 of the models comprehensively on all datasets, as shown in Figure 2. CAT-IADEF has a combined ranking of about 1.7, ranking first among all the compared models.
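One plausible way to obtain such a combined ranking is to rank the models by F1 on each dataset and then average the ranks. The sketch below assumes exactly that; the paper does not state how ties are handled, so sharing the average rank among tied models is an assumption. The function name `average_ranks` and the toy scores are hypothetical and are not values from Table 2.

```python
def average_ranks(f1_by_dataset):
    """Mean rank of each model across datasets (rank 1 = highest F1).

    Models with equal F1 on a dataset share the average of the ranks
    they span (an assumption; the tie rule is not given in the text).
    """
    ranks = {}
    for scores in f1_by_dataset.values():
        ordered = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
        i = 0
        while i < len(ordered):
            # Extend j to cover every model tied with ordered[i].
            j = i
            while j + 1 < len(ordered) and ordered[j + 1][1] == ordered[i][1]:
                j += 1
            shared_rank = ((i + 1) + (j + 1)) / 2
            for model, _ in ordered[i:j + 1]:
                ranks.setdefault(model, []).append(shared_rank)
            i = j + 1
    return {model: sum(r) / len(r) for model, r in ranks.items()}


# Toy scores for illustration only; they are NOT taken from Table 2.
toy_f1 = {
    "dataset_a": {"model_x": 0.90, "model_y": 0.85, "model_z": 0.80},
    "dataset_b": {"model_x": 0.70, "model_y": 0.75, "model_z": 0.70},
}
print(average_ranks(toy_f1))
# {'model_x': 1.75, 'model_y': 1.5, 'model_z': 2.75}
```

A lower average rank is better, which is how a value of about 1.7 can place a model first overall even when it is not the best on every subset.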

We first analyze the four classical machine learning methods. For PCA, the number of retained dimensions, that is, the number of latent variables, cannot be estimated well; therefore, its performance is not outstanding on the seven datasets. COPOD needs neither parameter tuning nor distance computation between samples, so its overhead is negligible. COPOD and OCSVM perform well on the T1 dataset and poorly on the rest, which we attribute to their difficulty with non-stationary, imbalanced time series in which the scene changes. LOF is not outstanding on the other datasets, but it obtains the best F1 value on T1, presumably because the local distribution of the time series in this subset suits the k-neighborhood computation in LOF.

Among the deep learning methods, USAD uses only the simplest AE model and considers only linear transformations, which limits its effectiveness, whereas CAT-IADEF adopts a CNN and considers the relationships between different features. TranAD and MAD_GAN use an attention mechanism when detecting anomalies. TranAD uses positional encoding in the transformer structure to help capture temporal order, and it performs best on the SWaT dataset. OmniAnomaly is not the best performer on any dataset except P1: it takes its input sequentially, preserves essential information, and reconstructs all inputs regardless of the input data, which prevents it from detecting anomalies that are close to normal trends. The adversarial training in CAT-IADEF magnifies “slight” anomalies and helps the model detect them, whereas other models treat such anomalies as normal data.

Anomaly explanation. The results of the anomaly explanation, which we tested on the P1 dataset, are shown in Table 3.

In Table 3, Dimension 1 is the dimension with the highest number of anomalies, and Dimension 2 is the dimension with the second highest number of anomalies. The number listed for each is the index of that dimension. On the P1 dataset, for example, the dimension containing the most anomalies is Dimension 5, and the dimension with the second-most anomalies is Dimension 6.
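The ranking of dimensions by anomaly count can be sketched as follows, assuming per-dimension anomaly flags are already available for every time step. How CAT-IADEF produces those flags is not shown here; the function name `top_anomalous_dimensions` and the input layout are hypothetical.

```python
from collections import Counter


def top_anomalous_dimensions(flags, k=2):
    """Return the indices of the k dimensions with the most flagged points.

    `flags` is a list of time steps; each time step is a list of 0/1
    values, one per dimension (1 = that dimension was flagged).
    Ties are broken by the lower dimension index (an assumption).
    """
    counts = Counter()
    for step in flags:
        for dim, flagged in enumerate(step):
            if flagged:
                counts[dim] += 1
    ranked = sorted(counts, key=lambda d: (-counts[d], d))
    return ranked[:k]


# Toy flags for three dimensions over five time steps (illustrative only).
toy_flags = [
    [0, 1, 0],
    [0, 1, 1],
    [1, 1, 1],
    [0, 0, 1],
    [0, 1, 0],
]
print(top_anomalous_dimensions(toy_flags))  # [1, 2]
```

With `k=2`, the first returned index plays the role of "Dimension 1" in Table 3 and the second that of "Dimension 2".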

To test the validity of the anomaly explanation, we tested five deep learning models, including CAT-IADEF, on the experimental datasets. Table 4 shows that only TranAD attributes anomalies to Dimensions 0 and 3 on the P1 dataset, while the remaining methods attribute anomalies to Dimensions 5 and 6. In the SKAB dataset, the percentage of anomalies is up to 35.4%. However, we can still see that the anomalies in the Valve1-4 dataset are mainly concentrated in Dimensions 7 and 1, and those in the Valve1-14 dataset are concentrated in Dimensions 7, 4, and 2. The results on the remaining datasets are similar and support the validity of the anomaly explanation module.

4.5. Ablation Analysis

To investigate the importance of each model component to the overall model performance, we remove each major component in turn and observe how its removal affects model performance on the experimental datasets.

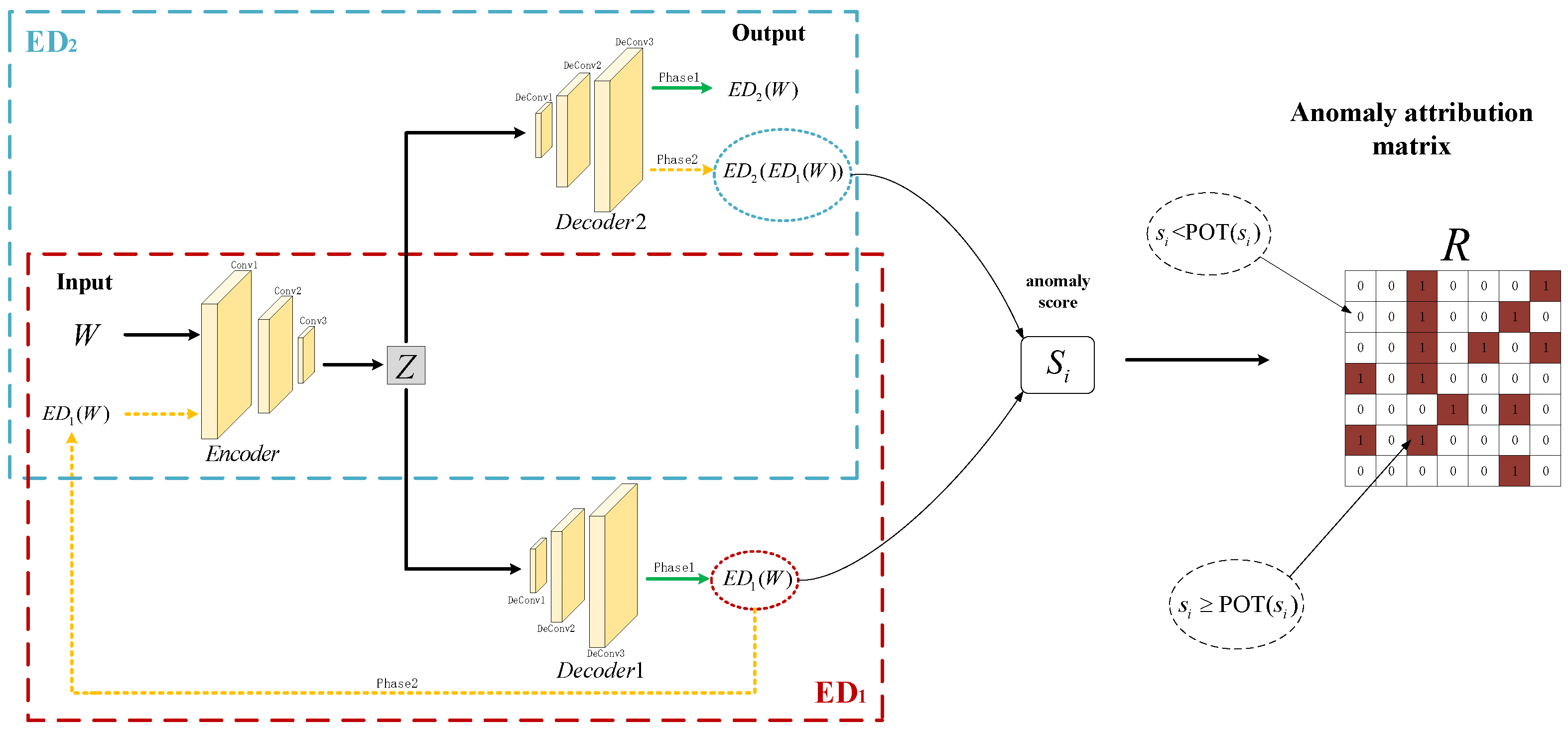

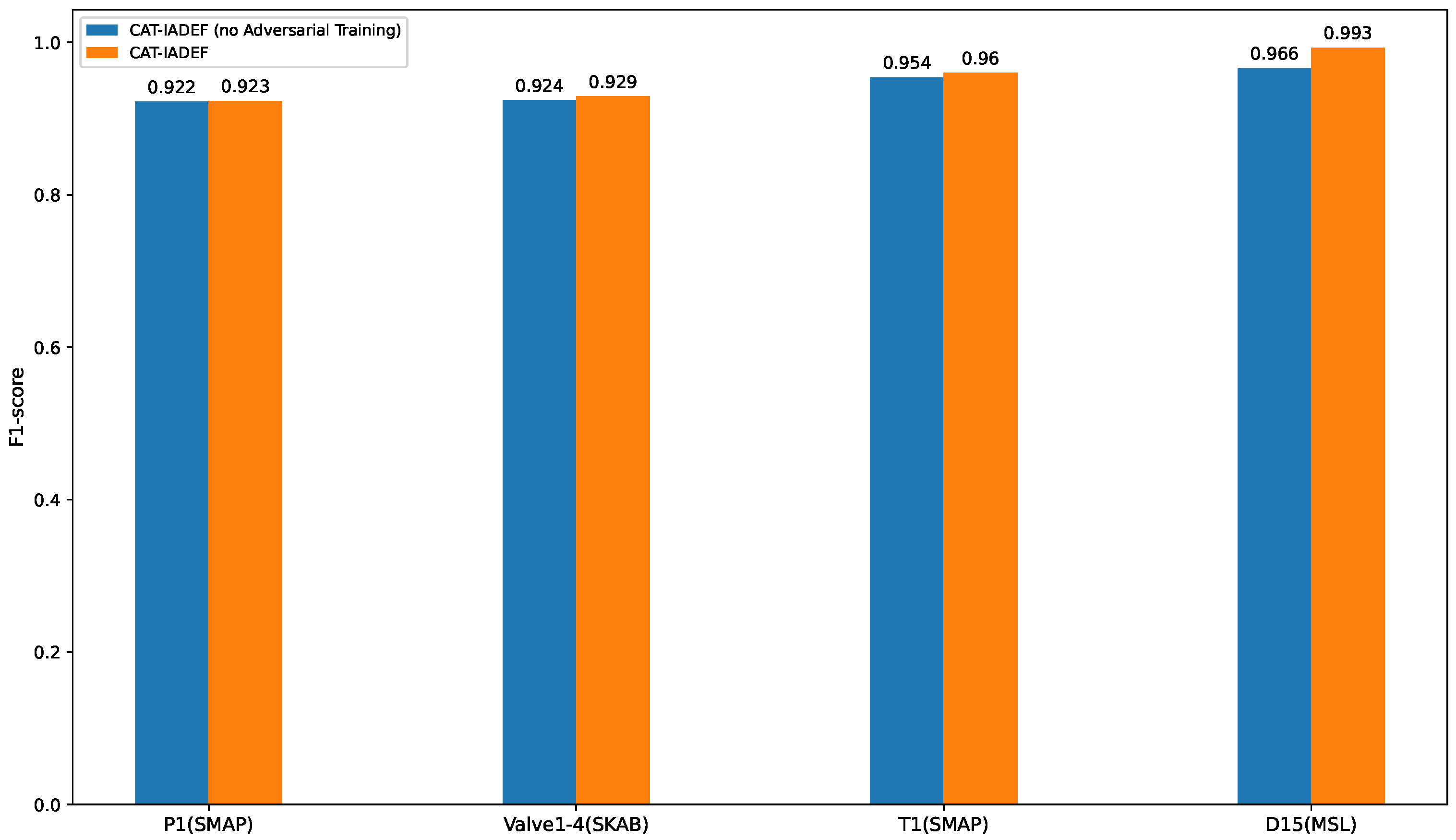

Adversarial training. On the experimental datasets, we compared the full model with a variant trained without adversarial training. Figure 3 shows the F1 scores of the CAT-IADEF and CAT-IADEF (no adversarial training) models on four experimental datasets.

As shown in Figure 3, the performance of CAT-IADEF (no adversarial training) declines on all four datasets, with a minimum drop of 0.11% and a maximum drop of 2.72%; this suggests that adversarial training enables the model to identify “slight” anomalies by magnifying errors.

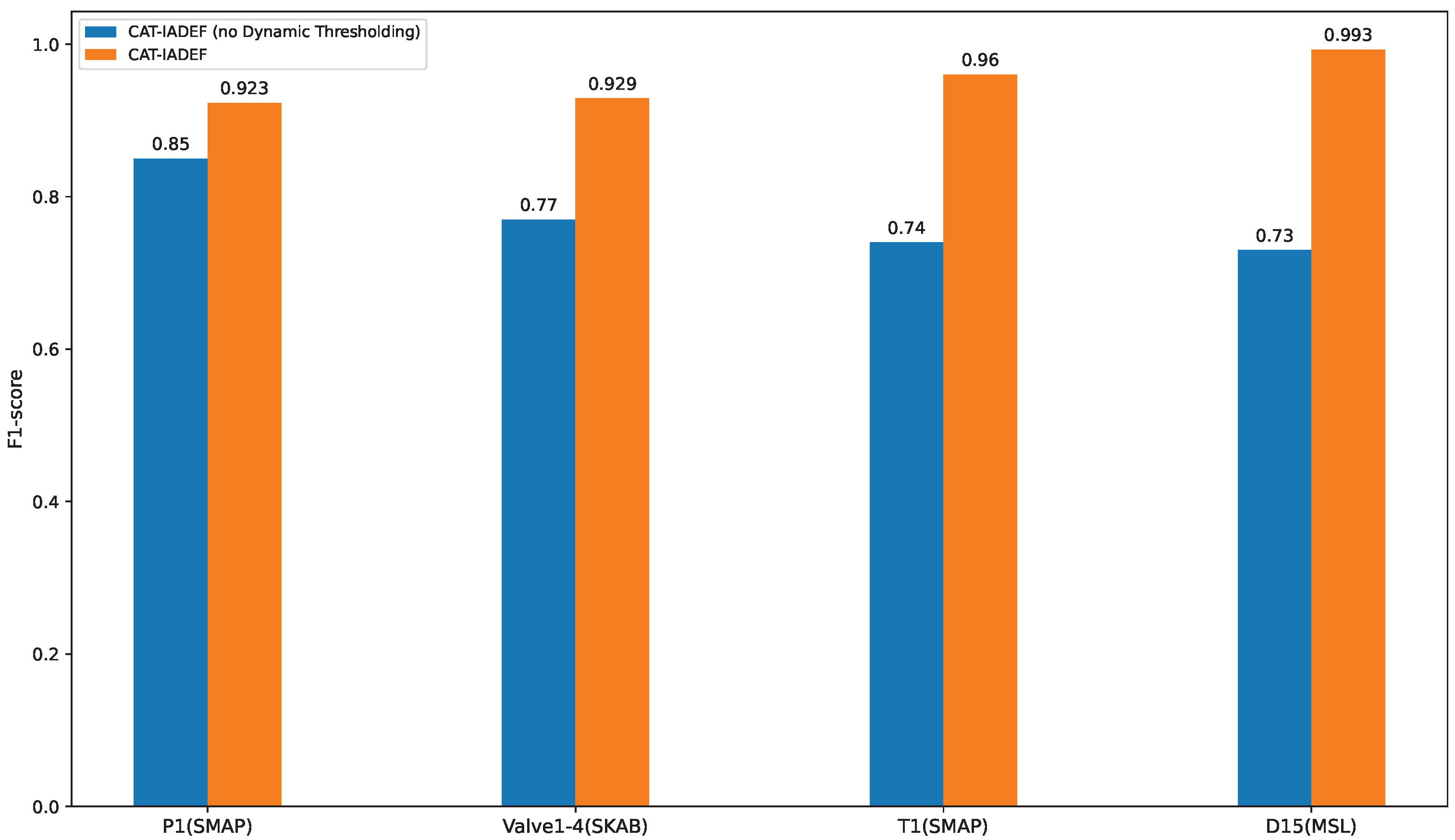

Dynamic threshold. We remove the dynamic threshold module from CAT-IADEF and train it on the experimental datasets. Figure 4 shows the F1 scores of CAT-IADEF and CAT-IADEF (no dynamic threshold) on our four experimental datasets.

As shown in Figure 4, selecting the threshold dynamically with POT improves the model. The largest improvement is on the D15 dataset, from 0.73 to 0.993, a relative gain of 36.0%. The smallest improvement is on the P1 dataset, from 0.85 to 0.923, a relative gain of 8.6%.
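The two percentages quoted for D15 and P1 are consistent with a relative improvement computed against the F1 value obtained without the dynamic threshold. A short check, with `relative_gain` as a hypothetical helper name:

```python
def relative_gain(before, after):
    """Relative improvement of `after` over `before`, in percent."""
    return (after - before) / before * 100.0


# F1 without vs. with the dynamic threshold, as quoted in the text.
print(round(relative_gain(0.73, 0.993), 1))  # D15 -> 36.0
print(round(relative_gain(0.85, 0.923), 1))  # P1  -> 8.6
```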

4.6. Sensitivity Analysis of Parameters

The loss function uses two weights, which sum to 1, to form a weighted average of two loss terms. When the first weight is 0 and the second is 1, the loss function only considers the reconstruction loss from decoder1; when the first weight is 1 and the second is 0, the model only considers the reconstruction loss on the generated data. As shown in Table 5, we conducted experiments on the P1 dataset and considered 7 cases to illustrate the impact of this weight pair on model performance.
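The weighting scheme described here, two weights that sum to 1 and blend two loss terms, can be sketched as below. This is an illustration under stated assumptions rather than the authors' loss: the names `combined_loss`, `loss_decoder1`, `loss_generated`, and `w_generated` are hypothetical, and the two loss values are treated as already-computed scalars.

```python
def combined_loss(loss_decoder1, loss_generated, w_generated):
    """Weighted average of two loss terms whose weights sum to 1.

    `w_generated` weights the reconstruction loss on generated data;
    the decoder1 reconstruction loss receives 1 - w_generated.
    All names are hypothetical placeholders.
    """
    if not 0.0 <= w_generated <= 1.0:
        raise ValueError("w_generated must lie in [0, 1]")
    w_decoder1 = 1.0 - w_generated
    return w_decoder1 * loss_decoder1 + w_generated * loss_generated


# The two boundary cases described in the text:
print(combined_loss(2.0, 4.0, 0.0))  # only the decoder1 loss -> 2.0
print(combined_loss(2.0, 4.0, 1.0))  # only the generated-data loss -> 4.0
```

Parameterizing by a single weight enforces the sum-to-1 constraint by construction, so a sensitivity sweep only needs to vary one value.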

It can be seen from Table 5 that, as the two weights vary between 0 and 1, the F1 value tends to decrease as more weight is placed on the real data, and F1 takes its maximum value when more weight is placed on the reconstruction loss of the generated data, that is, when the anomalies in the simulated data tend toward the normal data. In reality, the training data is not necessarily normal, and the sensitivity study of the two weights can make the model more adaptable to different experimental environments.