Abstract

The job shop scheduling problem (JSSP) is a fundamental operations research topic with numerous real-world applications. Since the JSSP is NP-hard (nondeterministic polynomial-time hard), approximation approaches are frequently used to solve it. This study proposes a novel approach that combines the biologically inspired metaheuristic Coral Reef Optimization (CRO) with two local search techniques, Simulated Annealing (SA) and Variable Neighborhood Search (VNS), to improve both solution quality and search speed. The performance of the two hybrid algorithms is evaluated on JSSP instances of various sizes. The findings demonstrate that local search strategies significantly enhance the search efficiency of the two hybrid algorithms compared to the original algorithm. Furthermore, comparisons with two other metaheuristic algorithms that also use local search and with five state-of-the-art algorithms from the literature reveal the superior search capability of the two proposed hybrid algorithms.

1. Introduction

Scheduling production is crucial in product manufacturing since it directly influences system performance and overall manufacturing process efficiency [1]. In various enterprises, production scheduling has become a significant issue. Dozens of innovative techniques are being scrutinized to increase manufacturing efficiency, emphasizing schedule optimization [2]. Accordingly, optimizing production scheduling problems is attracting attention in both research and manufacturing realms [3].

Production scheduling has been regarded as a crucial industrial issue since the 1950s, and the job shop scheduling problem (JSSP) is a quintessential production scheduling model [4]. Since Johnson’s (1954) first methodology for scheduling with two machines [5], the complexity of the JSSP has grown with the number of machines and jobs. Due to its immense complexity, the JSSP is categorized as NP-hard (nondeterministic polynomial-time hard) [6]. Solving large-scale JSSP in a reasonable time is a challenge that has been researched for decades. In addition to growing in size, the JSSP is taking on numerous new forms with distinct properties and characteristics, and diverse approaches have been developed to solve these variations of the fundamental JSSP [7]. JSSP is a typical resource allocation problem in manufacturing production scheduling. It has many applications in industrial fields requiring high levels of automation and mass production. Among the most successful applications of JSSP are semiconductor and electronic component manufacturing, which need mass production and increased automation to improve production efficiency, reduce production cycle time, and optimize resources. According to a survey by Xiong et al. [8], in the five years from 2016 to 2021, hundreds of studies and optimization models covering various aspects of JSSP and its applications in these fields were conducted and presented.

Due to its significant repercussions on production-line productivity, the JSSP has remained a central problem in combinatorial optimization. The JSSP is categorized as a multi-stage, static, deterministic job scheduling problem in computer science and operations research, and its solving strategies evolve at each stage of research development [8]. The fundamental JSSP includes:

- A set of n jobs J = {J1, J2, …, Jn}, where Ji denotes the ith job.

- A set of m machines M = {M1, M2, …, Mm}, where Mj denotes the jth machine.

- Each job Ji has a specific set of operations Oi = {Oi1, Oi2, …, Oik}, where k is the total number of operations in job Ji. Note that operation Oi(j+1) will be processed only once operation Oij has been completed in job Ji.

The primary goal of scheduling is to assign shared resources to concurrent tasks as efficiently as possible throughout the processing period. The scheme necessitates allocating and organizing limited resources pursuant to the problem’s constraints, such as the order of activities and processing time, and providing a plan to achieve the optimization objectives. The following conditions are the fundamental JSSP requirements [9]:

- Each operation is performed independently of the others.

- One job operation cannot begin until all previous operations have been completed.

- Once a processing operation has begun, it will not be interrupted until the procedure is completed.

- It is impossible to handle multiple operations of the same job simultaneously.

- Job operations must wait in line until the next suitable machine is available.

- One machine can only perform one operation at a time.

- During the unallocated period, the machine will remain idle.

Notably, the set of constraints in real-world problems is more complex. Examples include job shops with multiple scheduling objectives, processing times that may be deterministic (constant) or probabilistic (variable), idle time limited to no more than two consecutive machines, or no idle time at all. Any change to the problem’s constraints can create a new variation of the problem. Consequently, JSSP solving approaches evolve with each research development phase [10].

Metaheuristic optimization is one of the practical approaches to JSSP with the ability to provide a satisfactory optimization solution in a reasonable time [11]. Metaheuristic algorithms employ innovative search strategies to explore the solution space and avoid getting stuck in local optima by steering the feasible solution with a bias, enabling the rapid generation of high-quality solutions. Contemporary metaheuristic algorithms also combine with different mathematical models [12] and analytical operating procedures [13] to enhance performance.

The coral reef optimization (CRO) approach is a bio-inspired computation method used to solve engineering and science problems by simulating the “formation” and “reproduction” of corals in coral reefs. The approach was first proposed by Salcedo-Sanz et al. in 2014 [14]. Since then, it has been applied to various topics, including optimal mobile network deployment [15], enhanced battery scheduling of microgrids [16], and wind speed prediction systems, with success in renewable energy applications such as “Offshore Wind Farm Design” [17]. This article contributes modified coral reef optimization methods with local search techniques for the JSSP. Two hybrid algorithms have been developed based on the original CRO method: CROLS1 integrates CRO with the Simulated Annealing (SA) strategy, whereas CROLS2 combines CRO with the Variable Neighborhood Search (VNS) technique. This article focuses on the optimizing effect of local search techniques on the CRO algorithm. The experiments demonstrate that local search strategies significantly enhance the search efficiency of the two hybrid algorithms compared to the original algorithm. Furthermore, comparisons with two other metaheuristic algorithms that also use local search and with five state-of-the-art algorithms from the literature reveal the superior search capability of the two proposed hybrid algorithms.

2. Related Work

Since its inception, operations research has focused on exact algorithms for solving combinatorial problems involving multiple variables. Exact algorithms are defined as those guaranteed to return an optimal solution to an optimization problem. In principle, an exact algorithm can find the best solution to almost any bounded combinatorial optimization problem by systematically exploring the space of possible solutions [18]. However, when exact algorithms are applied to combinatorial optimization problems, the time required to identify the best solution grows exponentially with the size of the problem. Branch and bound algorithms and mixed integer programming are the most frequently adopted exact algorithms for solving the JSSP [19]. Small-scale JSSP instances rarely represent actual production environments, so it is crucial to evaluate more complex cases involving many jobs and resources. Nevertheless, exact methods are rarely applied to large-scale instances due to resource limits and lengthy execution times.

Because exact algorithms cannot meet the requirements of large-scale optimization problems, numerous methods based on artificial intelligence were proposed, opening a new direction in the research of problem-solving strategies [20]. Approximation algorithms are among the most explored solutions to large-scale combinatorial optimization problems. Although approximation algorithms are not guaranteed to locate an optimal solution, they aim to identify a near-optimal solution in a realistic amount of computation time. As a result, they have become a research subject in their own right for resolving complex and large-scale problems. Approximation algorithms may be divided into two categories: heuristic algorithms and metaheuristic algorithms [21].

Heuristic approaches can be divided into two groups: constructive search methods and local search methods [22]. Typical constructive algorithms build a solution piece by piece, guided by the problem’s initial constraints or predetermined priorities, until it is complete; in scheduling problems, solutions are frequently built one operation at a time. For example, these algorithms may use “Dispatching Rules” to construct a feasible solution within the constraints of a priority hierarchy. As a result, solutions are developed quickly while maintaining reasonable solution quality. Local search methods, in contrast, start from initial solutions, generated either randomly or by a constructive algorithm, and gradually replace them with better ones found among their neighboring solutions [23]. These methods allow neighboring solutions to be investigated efficiently in the solution space. Their disadvantage is that they have no view of the global solution space. Consequently, they may become trapped in a local optimum.

Metaheuristics combine heuristic techniques commonly adopted to handle combinatorial optimization problems. Metaheuristic algorithms employ innovative search strategies to approach the global optimum and avoid becoming trapped in local optima by steering the search with a bias, so that better feasible solutions are reached more quickly. Bias mechanisms include bias derived from the objective function, bias based on previous decisions, and bias of experience [21]. In this study, both diversification and intensification search tactics are employed. The diversification strategy’s fundamental objective is to efficiently explore all potential neighborhoods of the solution space by utilizing a metaheuristic method. The intensification technique, on the other hand, exploits previously gained search knowledge and explores a more local solution subspace. Metaheuristic algorithms are classified into two categories: population-based algorithms and single-point search algorithms. Nature-inspired optimization algorithms are ubiquitous owing to their ease of implementation and strong search ability [24]. For instance, the population-based GA (genetic algorithm, inspired by evolution) [25,26] and PSO (particle swarm optimization, influenced by swarm intelligence) [27], and the single-point SA (simulated annealing, inspired by metal cooling behavior) [28], are among the most reliable and effective algorithms available.

Significantly, metaheuristic algorithms differ from blind random search algorithms in that randomness is used intelligently and with bias, making them the current research trend for solving difficult and complex problems. In addition, hybrid metaheuristic approaches have been established to leverage the strengths of each method and obtain more robust and thorough optimization strategies. Modern metaheuristic algorithms also incorporate various mathematical models and analytical operational techniques to deliver greater performance. Guzman et al. propose a metaheuristic algorithm that combines GA with a disjunctive mathematical model and employs the open-source solver COIN-OR Branch and Cut to optimize the JSSP [12]. By combining an open-source solver with a genetic algorithm, this approach enables the development of efficient solutions and reduces computation time. Viana et al. suggested a guidance operator that modifies ill-adapted individuals using genetic material from well-adapted individuals to enhance the GA population [13]. The results indicate that the new algorithm achieves a result 45.88% better than the old approach. Wang et al. introduce a novel metaheuristic algorithm, called search economics for the job shop scheduling problem (SEJSP), which guides the search toward promising regions based on an expected value that depends on the performance of sampled candidates and the growth rate of the candidate solution region [29]. SEJSP also produced positive experimental findings on the JSSP.

In recent years, work on a fundamental problem such as the JSSP has moved toward enhancing classical metaheuristic algorithms or combining them with other methodologies. Yu et al. [30] improve PSO to NGPSO by incorporating nonlinear inertia weight and Gaussian mutation to handle JSSP. Mohamed Kurdi proposed the GA-CPG-GT method, which pairs a GA using uniform crossover with the Giffler and Thompson algorithm, and obtained positive results on the JSSP [31]. T. Jiang and C. Zang utilized the Gray Wolf Optimization (GWO) algorithm, inspired by the gray wolves’ social hierarchy and hunting behaviors, to solve JSSP [32], and Feng Wang et al. built on this idea with some modifications to establish the Discrete Wolf Pack Algorithm (DWPA), achieving promising results on the JSSP [33]. In another direction, Alper Hamzaday et al. deployed a novel metaheuristic technique called Single Seekers Society (SSS) to manage JSSP effectively [34].

As outlined in the Introduction, the CRO approach of Salcedo-Sanz et al. [14] simulates the “formation” and “reproduction” of corals in coral reefs and has been applied to optimal mobile network deployment [15], enhanced battery scheduling of microgrids [16], and wind speed prediction systems, with success in renewable energy applications such as “Offshore Wind Farm Design” [17]. Various hybrid algorithms based on the original version have been developed to improve performance while reducing computing time. For example, in 2016, a combination of CRO and a variable neighborhood search method was applied to unequal area facility layout problems [35]. Another hybrid CRO technique takes advantage of Spark’s MapReduce programming paradigm to reduce the system’s overall response time, among other applications [36].

3. Materials and Methods

To properly depict the problem and make encoding and decoding efficient, it is necessary to select the most suitable solution representation. This choice substantially impacts the success or failure of problem-solving.

3.1. Representation of Job-Shop Scheduling Problem

When the coral reef optimization (CRO) technique is used to solve JSSP issues, the operation solutions are encoded as sequences of decimal integers. Several other approaches describe the resolution of JSSP based on the problem’s specific characteristics, such as operation-based representation, rule-based priority representation, machine-based representation, etc. [37,38]. Direct and indirect encoding techniques are the two fundamental divisions of these representations.

Our strategy for describing the solution is based on “random keys.” This technique has the advantage of providing a compact yet complete description of a schedule. Each number in the sequence is the number of an individual job, and the number of times a job number occurs in the sequence equals the number of machines the job must pass through before completion. In this research, a “random key” represents a solution that satisfies the criteria below:

- Each element appearing in a solution represents the job to be processed;

- The number of times a job appears equals the number of machines it must pass through;

- The order in which a job’s occurrences appear in the solution follows the machine sequence that the job must pass through.

Figure 1 depicts a “random key” in the case of two machines and three jobs:

Figure 1.

An example JSSP solution created by the random key technique.
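The decoding implied by this representation can be sketched in a few lines. The following is a minimal illustration, not the authors’ implementation: the three-job, two-machine instance, the 0-based job numbering, and the function name `decode` are all assumptions made for the example. The k-th occurrence of a job number is read as that job’s k-th operation, and each operation starts as soon as both its job and its machine are free.

```python
# Hypothetical instance (not from the paper): 3 jobs, 2 machines.
# jobs[j] lists (machine, processing_time) in the order job j must visit them.
jobs = [
    [(0, 3), (1, 2)],  # job 0
    [(1, 4), (0, 1)],  # job 1
    [(0, 2), (1, 3)],  # job 2
]

def decode(sequence, jobs):
    """Turn a job-repetition sequence into (job, machine, start, end) tuples
    and return them together with the makespan."""
    next_op = [0] * len(jobs)        # index of the next unscheduled operation per job
    job_ready = [0] * len(jobs)      # time at which each job's previous operation ends
    n_machines = 1 + max(m for job in jobs for m, _ in job)
    machine_ready = [0] * n_machines # time at which each machine becomes free
    schedule = []
    for j in sequence:
        machine, duration = jobs[j][next_op[j]]
        # An operation waits for both its predecessor in the job and its machine.
        start = max(job_ready[j], machine_ready[machine])
        end = start + duration
        job_ready[j] = machine_ready[machine] = end
        next_op[j] += 1
        schedule.append((j, machine, start, end))
    return schedule, max(machine_ready)

schedule, makespan = decode([0, 1, 2, 0, 1, 2], jobs)
print(makespan)  # 9 for this hypothetical instance
```

Because every job appears exactly as many times as it has operations, any permutation of the sequence decodes to a feasible schedule, which is what makes this encoding convenient for crossover and mutation.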

The CRO algorithm divides into two main stages: “reef formation” and “coral reproduction”:

Initially, a “reef” is constructed as a grid of size M × N. Individual corals are selected from a population and placed randomly in empty squares of the reef according to the free/occupied ratio r0 (a value of zero representing no occupation), and the remaining squares are left free. Each coral represents a different solution in the solution space and is assigned a health value; the higher the health value, the greater the likelihood that the coral survives into later generations of the algorithm. The health value is calculated using the fitness function, which depends on the objective function of the problem.

During the second stage, the CRO performs coral reproduction, repeating five main mechanisms to produce each new coral generation (called larvae): external sexual “Broadcast Spawning”, internal sexual “Brooding”, “Larvae setting”, asexual “Budding”, and the “Depredation” phase. These are described in detail below:

External sexual Broadcast Spawning: in nature, this process is also known as “cross-reproduction”. Two individuals produce every larva in a population model’s evolution by simulating natural selection. This process combines the attributes of each parent to create generations of offspring that inherit their positive characteristics to develop better individuals.

Internal sexual Brooding: this process simulates an individual’s mutation in a population during evolution. Each coral in the selected population can change its genetic code to create a new individual with the original individual’s characteristics and unique “mutation” attributes.

Larvae setting: this process simulates the “fighting of the coral” for space in a finite space environment. Only stronger individuals can survive and reproduce to create new generations, while weaker individuals are removed from the reef. The larvae are generated by the above sexual reproduction process and have repeatedly fought with other reef corals. Individuals with higher health values will be given more opportunities to develop in the reef.

Asexual budding: this process simulates the asexual reproduction of corals. When corals grow to a particular stage, they can separate into new individuals and disperse throughout the reef. Usually, healthy corals can produce better, more viable offspring, thus gaining preference in this spawning process. So, a select number of individuals with excellent health statistics are permitted to reproduce and spread over the reef, but only in limited numbers.

Depredation phase: this process simulates the elimination of corals. To create free space for the next generation of corals but without losing the diversity of the population, this mechanism only eliminates a part of the weak corals. After the maximum number of allowed corals is reached, any remaining similar corals in the reef are eliminated. It keeps the reef from growing too many identical corals at once and makes room for the next generation of corals. It also enhances population diversity to prevent the process from falling into a local optimum.

Figure 2 illustrates the operation of the CRO algorithm on a reef with a random key implementation for a JSSP with two machines and three jobs, with one individual tracked by a red circle.

Figure 2.

The phases of the CRO algorithm with random key implementation and an individual coral tracked by the red circle.

The implementation of the CRO algorithm is described in Algorithm 1 as below:

| Algorithm 1: Coral Reef Optimization (CRO). |

| Input: reef size, occupation rate, fraction of broadcast spawners, fraction of asexual reproduction, fraction of the worse-fitness corals, depredation probability of the worse-fitness corals. Output: reasonable solution with best fitness #Initialization (reef formation phase):

|
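The five mechanisms described above can be sketched as a compact loop. This is a minimal illustration under stated assumptions rather than the authors’ Algorithm 1: the parameter names (`rho0`, `fb`, `fa`, `fd`, `pd`), the flat list used in place of an M × N grid, the prefix-fill crossover, the swap mutation, and the three-job instance are all hypothetical choices made for the example.

```python
import random

# Hypothetical 3-job, 2-machine instance: JOBS[j] = [(machine, time), ...] in visiting order.
JOBS = [[(0, 3), (1, 2)], [(1, 4), (0, 1)], [(0, 2), (1, 3)]]

def makespan(seq):
    """Decode a job-repetition sequence and return its makespan (lower is healthier)."""
    nxt, job_t, mach_t = [0] * len(JOBS), [0] * len(JOBS), [0, 0]
    for j in seq:
        m, p = JOBS[j][nxt[j]]
        job_t[j] = mach_t[m] = max(job_t[j], mach_t[m]) + p
        nxt[j] += 1
    return max(mach_t)

def random_coral(rng):
    seq = [j for j, ops in enumerate(JOBS) for _ in ops]
    rng.shuffle(seq)
    return seq

def crossover(a, b, rng):
    """Broadcast spawning stand-in: keep a prefix of a, fill the rest in b's order."""
    cut = rng.randrange(1, len(a))
    child = a[:cut]
    need = {j: len(ops) - child.count(j) for j, ops in enumerate(JOBS)}
    for j in b:
        if need[j] > 0:
            child.append(j)
            need[j] -= 1
    return child

def mutate(a, rng):
    """Brooding stand-in: swap two positions of a copy."""
    c = a[:]
    i, k = rng.sample(range(len(c)), 2)
    c[i], c[k] = c[k], c[i]
    return c

def settle(reef, larva, rng, attempts=3):
    """Larvae setting: occupy a free cell or displace a strictly weaker coral."""
    for _ in range(attempts):
        i = rng.randrange(len(reef))
        if reef[i] is None or makespan(larva) < makespan(reef[i]):
            reef[i] = larva
            return

def cro(cells=16, rho0=0.6, fb=0.8, fa=0.1, fd=0.1, pd=0.1, generations=30, seed=0):
    rng = random.Random(seed)
    # Reef formation: each cell is occupied with probability rho0.
    reef = [random_coral(rng) if rng.random() < rho0 else None for _ in range(cells)]
    if all(c is None for c in reef):
        reef[0] = random_coral(rng)
    for _ in range(generations):
        corals = [c for c in reef if c is not None]
        rng.shuffle(corals)
        n_spawn = int(fb * len(corals))
        spawners, brooders = corals[:n_spawn], corals[n_spawn:]
        larvae = [crossover(spawners[i], spawners[i + 1], rng)
                  for i in range(0, len(spawners) - 1, 2)]   # broadcast spawning
        larvae += [mutate(c, rng) for c in brooders]          # brooding
        for larva in larvae:
            settle(reef, larva, rng)
        ranked = sorted((c for c in reef if c is not None), key=makespan)
        for c in ranked[:max(1, int(fa * len(ranked)))]:      # budding of the healthiest
            settle(reef, c[:], rng)
        # Depredation: each of the worst fd fraction is removed with probability pd.
        occupied = sorted((i for i, c in enumerate(reef) if c is not None),
                          key=lambda i: makespan(reef[i]), reverse=True)
        for i in occupied[:int(fd * len(occupied))]:
            if rng.random() < pd:
                reef[i] = None
    return min((c for c in reef if c is not None), key=makespan)

best = cro()
print(best, makespan(best))
```

The sketch omits the duplicate-elimination step mentioned in the depredation phase; adding it would mean counting identical sequences before removal.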

3.2. Objective Function

In this study, we use “minimize the makespan” as the objective function for solving the JSSP. The makespan is the time between starting the first job and completing the last one. For a fundamental JSSP with a set of n jobs and a set of m machines, Oij is the operation of the jth job executed on the ith machine, pij is the processing time of that operation, and rij is its starting time. The time required to complete operation Oij can then be calculated as follows:

Because machines and jobs have specific and different completion times, cin and cjm are defined as the completion time of the last (nth) operation on ith machine and the completion time of the last (mth) operation of jth job, respectively. The starting time rij can be calculated as below:

Finally, makespan can be calculated as the time to complete the last operation on the last machine:

The scheduler’s scheduling efficiency can be evaluated by comparing the entire idle time spent by the machine to the total processing time spent by the system:

where the idle-time term denotes the idle time of machine i; C denotes the makespan; m denotes the total number of machines; and pjk denotes the processing time of job j on machine k.
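Read together, the definitions in this subsection suggest the relations below. This is a sketch reconstructed from the prose, not a copy of the paper’s numbered equations: the symbols $c_{ij}$ (completion time of $O_{ij}$), $C_{\max}$ (makespan) and $l_i$ (idle time of machine $i$) are assumed names, and $c_{in}$ and $c_{jm}$ are read as the latest completion times on machine $i$ and of job $j$ at the moment $O_{ij}$ is scheduled.

```latex
\[
\begin{aligned}
c_{ij}   &= r_{ij} + p_{ij}, \\
r_{ij}   &= \max\left(c_{in},\; c_{jm}\right), \\
C_{\max} &= \max_{1 \le i \le m} c_{in}, \\
\sum_{i=1}^{m} l_i &= m\,C_{\max} - \sum_{j}\sum_{k} p_{jk}.
\end{aligned}
\]
```

The last line holds because, over the horizon $[0, C_{\max}]$, every machine is either processing an operation or idle, so total idle time is the total machine time minus the total processing time.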

When applied to the JSSP, the algorithm evaluates solution quality using the Objective and Fitness functions given in Equations (5) and (6). The Objective function indicates how good the solution is in terms of the quantity being optimized, whereas the Fitness function directs the optimization process by expressing how closely the proposed solution meets the defined goal.

where the objective value (or makespan) is the time between starting the first job and completing the last one, the fitness function is as given in Equation (6), and n is the population size.

3.3. Local Search: Simulated Annealing (SA)

The first of two local search algorithms mentioned is the SA approach [39]. It is a method used to simulate the cooling behavior of metals when exposed to extreme heat. The metal is rapidly heated to a high temperature and then progressively cooled according to a “cooling schedule” to obtain the ideal crystal structure with the lowest possible internal energy. High temperature causes the crystal grains to have a high energy level, which allows them to “jump” freely and quickly to their proper locations in the crystal structure. During the cooling procedure, the temperature steadily decreases, and the crystals are anticipated to be in their optimal locations once the temperature has been appropriately dropped [28].

The ability to escape local optima is one of the primary differences between SA and conventional gradient-based approaches. At each step, the algorithm uses a probability to decide whether to move the system from its current state to an adjacent state s* (which can be better or worse). When this probability is high, the system can easily switch to another state regardless of whether that state is better or worse than the previous one. When this probability is low, the current state is kept unless a better state is found. This probability gradually decreases with each loop as the system temperature falls under the control of the “cooling schedule.” This process is repeated until the system reaches a state that is acceptable to the application or until the given computation budget has been exhausted [40].

The SA is described by Algorithm 2 as follows:

| Algorithm 2: Simulated Annealing |

| Input: temperature, min temperature, cooling rate, fitness function, solution, maximum iteration Output: Best_solution #Initialization:

|
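The acceptance rule and cooling schedule described above can be sketched as follows. This is a minimal illustration, assuming a geometric cooling schedule, a swap move as the neighborhood, the Metropolis acceptance probability exp(-delta / T), and a cost that is minimized; none of these choices is confirmed by the source, and the toy cost function is purely hypothetical.

```python
import math
import random

def simulated_annealing(solution, fitness, temperature=100.0, min_temperature=1e-3,
                        cooling_rate=0.95, max_iteration=1000, seed=0):
    """Minimise `fitness` over reorderings of `solution` using random swap moves."""
    rng = random.Random(seed)
    current, current_cost = list(solution), fitness(solution)
    best, best_cost = current[:], current_cost
    iteration = 0
    while temperature > min_temperature and iteration < max_iteration:
        # Neighbour state s*: swap two positions of the current sequence.
        i, k = rng.sample(range(len(current)), 2)
        neighbor = current[:]
        neighbor[i], neighbor[k] = neighbor[k], neighbor[i]
        cost = fitness(neighbor)
        delta = cost - current_cost
        # Always accept improvements; accept worse states with a probability
        # that shrinks as the temperature drops.
        if delta <= 0 or rng.random() < math.exp(-delta / temperature):
            current, current_cost = neighbor, cost
            if cost < best_cost:
                best, best_cost = neighbor[:], cost
        temperature *= cooling_rate  # geometric cooling schedule (assumed)
        iteration += 1
    return best, best_cost

def toy_cost(seq):
    """Hypothetical stand-in for the makespan: distance of each value from its index."""
    return sum(abs(v - i) for i, v in enumerate(seq))

best, cost = simulated_annealing([3, 1, 0, 2], toy_cost)
print(best, cost)
```

In a JSSP setting, `fitness` would be the makespan of the decoded sequence; swap moves keep the job counts intact, so every neighbor remains a valid encoding.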

3.4. Local Search: Variable Neighborhood Search (VNS)

Variable Neighborhood Search (VNS) is the second local search technique [41]. It searches by systematically changing the solution’s neighborhood structure (NS), using two nested loops: shake and local search. The fundamental design of VNS is simple and sometimes needs no additional parameters. Solutions are transformed from one state to another across the whole solution space by means of the neighborhood structures. Each neighborhood is considered a subset of the overall solution space, so a solution trapped in one structure can be released by moving it to another. Inside the algorithm, the shaking loop moves the search to a different NS, while the local search loop is responsible for finding the best solution in the current neighborhood structure. The local search cycle is repeated until a more acceptable solution is discovered, and the shaking loop is bounded by the allowed number of loops. As a result, the algorithm expands the search space and improves the ability to locate the optimal solution [42]. Algorithm 3 describes the VNS as follows:

| Algorithm 3: Variable Neighborhood Search |

| Input: index denoting the neighborhood structure, total number of neighborhood structures, solution, fitness function, solution set in each neighborhood structure, computation time Output: Best_solution #Initialization:

|
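The shake and local search loops can be sketched as below. This is an illustrative outline only: the three neighborhood structures (swap, insert, reverse), the `max_rounds` budget used in place of a computation-time limit, and the toy cost function are assumptions, and the cost is minimized.

```python
import random

def swap(seq, rng):
    s = seq[:]
    i, k = rng.sample(range(len(s)), 2)
    s[i], s[k] = s[k], s[i]
    return s

def insert(seq, rng):
    s = seq[:]
    i, k = rng.sample(range(len(s)), 2)
    s.insert(k, s.pop(i))  # move the element at i to position k
    return s

def reverse(seq, rng):
    i, k = sorted(rng.sample(range(len(seq)), 2))
    return seq[:i] + seq[i:k + 1][::-1] + seq[k + 1:]

# Assumed neighborhood structures, ordered from smallest to largest change.
NEIGHBORHOODS = [swap, insert, reverse]

def local_search(seq, fitness, move, rng, tries=20):
    """Sample `tries` neighbors with one move type, keeping each improvement."""
    best, best_cost = seq, fitness(seq)
    for _ in range(tries):
        cand = move(best, rng)
        cost = fitness(cand)
        if cost < best_cost:
            best, best_cost = cand, cost
    return best, best_cost

def vns(solution, fitness, max_rounds=50, seed=0):
    rng = random.Random(seed)
    best, best_cost = list(solution), fitness(solution)
    for _ in range(max_rounds):
        k = 0
        while k < len(NEIGHBORHOODS):
            shaken = NEIGHBORHOODS[k](best, rng)  # shake: jump within structure k
            cand, cost = local_search(shaken, fitness, NEIGHBORHOODS[k], rng)
            if cost < best_cost:
                best, best_cost = cand, cost
                k = 0        # improvement found: restart from the first structure
            else:
                k += 1       # no improvement: try the next structure
    return best, best_cost

def toy_cost(seq):
    """Hypothetical stand-in for the makespan."""
    return sum(abs(v - i) for i, v in enumerate(seq))

best, cost = vns([4, 3, 2, 1, 0], toy_cost)
print(best, cost)
```

All three moves only reorder elements, so a job-repetition sequence stays valid after any of them.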

3.5. Proposed Approaches

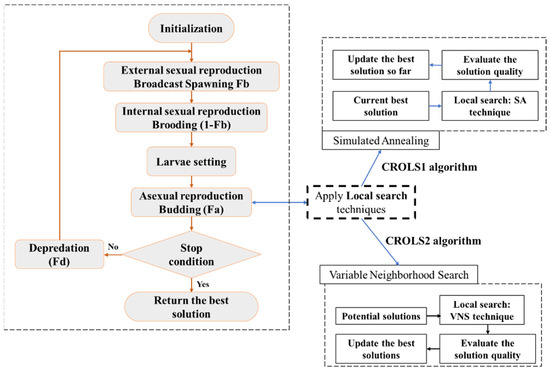

Using the two local search techniques mentioned above, we propose two hybrid algorithms based on the CRO algorithm, named CROLS1 and CROLS2, which are described in the flow chart in Figure 3:

Figure 3.

CRO applied local search techniques.

Typically, algorithms that search for the optimal solution from a random initial solution, such as the CRO, take significant time to obtain an optimal result. Occasionally, the search process becomes trapped in a local optimum and cannot converge to the optimal answer. Combining the algorithm with local search is recommended to reduce the convergence time, avoid needless local optima, and enhance search efficiency. Local search works in two ways: it searches the neighborhood of the input solution, modifying its structure to locate better solutions in the solution space; and, when the search is already close to the optimum, it finds the optimal solution while preventing other mechanisms from altering the solution’s structure. We found that applying local search to every current solution is time-consuming and unnecessary. Therefore, two distinct search strategies were employed to optimize computation time and enhance algorithm performance:

With CROLS1, local search is applied only to the current best solution, with a probability that is small at the beginning and increases toward the end of the search. The idea is to intensify the local search when the solution found is close to the optimal solution.

With CROLS2, local search is applied to several of the best solutions selected through the budding process above. The idea here is to find the optimal solution by exploring the neighborhoods of all these promising solutions.
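The two selection strategies can be sketched as small helper functions. The source does not state how the CROLS1 probability grows, so the linear ramp and its end points `p_start` and `p_end` are assumptions, as are the function names and the budding fraction `fa`; lower cost is treated as better.

```python
import random

def ls_probability(generation, max_generations, p_start=0.05, p_end=0.9):
    """CROLS1-style schedule: probability of running local search in a generation.
    A linear ramp is assumed here; the paper only says it starts small and grows."""
    progress = generation / max(1, max_generations - 1)
    return p_start + (p_end - p_start) * progress

def crols1_targets(population, cost, generation, max_generations, rng):
    """Return [current best] with a probability that rises over time, else []."""
    if rng.random() < ls_probability(generation, max_generations):
        return [min(population, key=cost)]
    return []

def crols2_targets(population, cost, fa=0.1):
    """Return the best fraction `fa` of the population, mirroring the budding selection."""
    ranked = sorted(population, key=cost)
    return ranked[:max(1, int(fa * len(ranked)))]

# Usage with placeholder "solutions" whose cost is their own value.
rng = random.Random(0)
population = [5, 3, 9, 1]
print(crols1_targets(population, lambda x: x, generation=9, max_generations=10, rng=rng))
print(crols2_targets(population, lambda x: x, fa=0.5))
```

Each returned solution would then be passed to the SA routine (CROLS1) or the VNS routine (CROLS2) before the next generation begins.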

Algorithm 4 describes the CROLS1 and CROLS2 algorithms as follows:

| Algorithm 4: Hybrid Coral Reef Optimization Algorithms (CROLS1 and CROLS2) |

| Input: reef size, occupation rate, fraction of broadcast spawners, fraction of asexual reproduction, fraction of the worse-fitness corals, depredation probability of the worse-fitness corals. Output: Reasonable solution with best fitness #Initialization (reef formation phase):

|

3.6. Time Complexity

Without any loss of generality, let be the optimization problem by CRO, and be the local search problems by SA and VNS approach, respectively. Assume that the computational time complexity of evaluating the problem’s function value is . Accordingly, the computational time complexity of CRO is defined as and the computational time complexity of two hybrid algorithms CROLS1 and CROLS2 are and , respectively, where is the maximum number of iterations, is the number of corals (population size), is the number of corals that performed SA technique on and is the number of corals that performed VNS technique on.

4. Experiment Results and Discussion

This section provides a brief and accurate description of the experimental data, their interpretation, and the discussion derived from the investigation.

4.1. Dataset

In this study, we employ a subset of the JSSP instances from Lawrence (1984) (LA) [43], a typical benchmark for the JSSP, to evaluate the algorithms’ efficiency. The two primary components of the input data are the machine sequence and the processing time: the machine sequence gives the order in which each job visits the machines, and the processing time gives the duration of each of those operations. The most significant difference between LA instances is their complexity and the number of possible solutions, which grows rapidly with the number of jobs and machines: a problem with n jobs and m machines has up to (n!)^m possible solutions. LA is also a well-known instance group that is commonly used as a standardized JSSP benchmark. LA contains groups of sizes 10 × 5, 15 × 5, 20 × 5, 10 × 10, 15 × 10, 20 × 10, 30 × 10 and 15 × 15. Because the instances within each size group are similar, we chose two instances from each size group for the experiments and related comparisons, which keeps the experimental scope focused.
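To give a sense of scale, the (n!)^m count can be evaluated for each LA size group. The snippet below is a small illustration; the function name `sequence_count` is hypothetical, and the figure counts every way of ordering the n jobs independently on each of the m machines, so it is an upper bound that includes orderings violating the precedence constraints.

```python
import math

# Size groups (jobs, machines) listed for the LA benchmark.
LA_SIZES = [(10, 5), (15, 5), (20, 5), (10, 10), (15, 10), (20, 10), (30, 10), (15, 15)]

def sequence_count(n_jobs, n_machines):
    """Number of ways to order n jobs independently on each of m machines: (n!)^m."""
    return math.factorial(n_jobs) ** n_machines

for n, m in LA_SIZES:
    digits = len(str(sequence_count(n, m)))
    print(f"{n} x {m}: a {digits}-digit number of machine orderings")
```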

4.2. Parameters Used in the Algorithm

Most metaheuristic optimization algorithms rely on parameters that govern their randomized search for the optimal solution. Consequently, setting these parameters is among the most critical steps. The settings determine the algorithm’s exploration and exploitation capabilities and substantially impact its performance. We chose suitable parameters for the hybrid algorithms based on the theory of general evolutionary algorithms and on JSSP experiments. These parameters are shown in Table 1 below:

Table 1.

Parameters used in the hybrid algorithms.

Based on the experimental findings, we believe that suitable parameter values vary with the complexity and size of the problem. It should be emphasized that identical parameter settings do not produce equivalent outcomes on different instances. It is therefore necessary to experiment with alternative settings to find the ideal solution in a reasonable time for problems of varying sizes. We tuned the parameters by trial and error through a series of experiments to obtain a suitable parameter set. Table 2 summarizes the recommended parameter settings for the two proposed hybrid algorithms at different problem sizes.

Table 2.

Suggested parameters set for different sizes of problem.

4.3. Experiment Results

The experiments are conducted in two steps. First, we evaluate the impact of local search on CRO via an aggregated results table for CRO and the two hybrid algorithms. Friedman’s test and Wilcoxon’s signed-rank test are then performed on this table to evaluate the results statistically. Second, we evaluate the effectiveness of the local search approaches applied to CRO by comparing the best search results of CROLS1 and CROLS2 with those of two algorithms that also employ local search techniques: HGA [44] (a hybrid genetic algorithm integrated with local search and some novel genetic operators) and MA [45] (a memetic algorithm that combines global search and local search, with exchange and insertion moves based on the critical path). The proposed algorithms are coded in Python and run on a computer with a 3.1 GHz Intel(R) Core i5 CPU and 24 GB of RAM.

4.3.1. Search Performance on CRO-Based Algorithm with Different Reef Sizes

We first evaluate the impact of local search on CRO using an aggregated results table for CRO and the two hybrid algorithms. Table 3 reports the best, worst, and mean makespan and the standard deviation (SD) obtained from 30 independent runs of each method on 16 JSSP instances.

Table 3.

CRO-based algorithms statistics for 16 LA instances.

Table 3 shows that the local search strategies make the CRO algorithm more stable: the gap between the best and worst values and the standard deviation improve in all cases. CROLS2 is the most stable, with smaller standard deviations than CRO and CROLS1 in most instances. Local search also improves the worst-case results of CROLS1 and CROLS2 relative to CRO. Likewise, for the best value obtained by each hybrid algorithm, local search helps CROLS1 and CROLS2 reach the best-known values for the instances examined. In most instances, CROLS1 and CROLS2 reach the best-known results with reef sizes of 10 × 10 and 20 × 20. However, for instances of high complexity, such as LA39 and LA40, the best results are reached only with a reef size of 30 × 30.
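The four summary statistics reported per instance can be computed from the list of makespans returned by repeated runs. The following is a minimal Python sketch; the function name `summarize_runs` and the sample values are hypothetical, and the use of the sample standard deviation (n − 1 denominator) is an assumption, since the text does not say which variant was used.

```python
import statistics

def summarize_runs(makespans):
    """Return best, worst, mean, and sample SD of a list of makespans."""
    return {
        "best": min(makespans),    # lower makespan is better
        "worst": max(makespans),
        "mean": statistics.mean(makespans),
        "sd": statistics.stdev(makespans),  # assumption: sample SD (n - 1)
    }

# Hypothetical makespans from five runs on one instance (illustrative only).
runs = [666, 666, 670, 672, 666]
summary = summarize_runs(runs)
print(summary)
```

A smaller gap between `best` and `worst` and a smaller `sd` correspond to the stability discussed above.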

CROLS1 and CROLS2 significantly reduce the search time compared to the original method. The reduction is more noticeable as instance complexity grows; the computational time is reduced by up to 30% for LA40. In addition, for complex instances such as LA39 and LA40, CROLS2 surpasses CROLS1: the results are similar, but its execution time is more than 10% shorter. Technically, CROLS1 and CROLS2 make 20% and 100% more fitness function calls than the original algorithm, respectively, because they employ local search techniques. Although this increases the computational work, it helps the algorithms converge quickly, and the experiments show that CROLS1 and CROLS2 still search faster than the original CRO.

To evaluate the improvement of the two hybrid algorithms over CRO statistically, we performed Friedman’s test on the data in Table 3. Friedman’s test, which is based on ranks, is used to determine the difference between the three CRO-based algorithms using the experimental findings collected from 16 LA instances and grouped by three reef sizes: 10 × 10, 20 × 20, and 30 × 30. The mean ranks and Friedman’s test statistics of the three algorithms are given in Table 4 and Table 5, respectively.

Table 4.

Mean rank of three CRO-based algorithms.

Table 5.

Friedman’s test statistic of three CRO-based algorithms.

According to Table 4, there is little difference in the mean rank of the CRO algorithm between reef sizes. The mean ranks of CROLS1 and CROLS2 differ significantly from that of CRO, indicating that local search techniques substantially affect these two algorithms. Table 5 provides the test statistics for Friedman’s test at a significance level of α = 0.05. All test statistics exceed the critical value and all p values are below 0.05, demonstrating statistically significant differences in the performance of the three CRO-based algorithms.
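To illustrate how the mean ranks and the Friedman statistic are obtained, the sketch below ranks three algorithms within each instance and applies the standard chi-square form of the test without a tie correction. It is a minimal pure-Python sketch under stated assumptions: the function names and the four rows of data are hypothetical, and the closed-form p value is valid only for three algorithms (two degrees of freedom).

```python
import math

def average_ranks(values):
    """Rank values ascending (1 = smallest); ties share the average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # average of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks

def friedman(rows):
    """rows[i][j] = result of algorithm j on instance i (lower is better).

    Returns (mean_ranks, chi_square); no tie correction is applied.
    """
    n, k = len(rows), len(rows[0])
    rank_sums = [0.0] * k
    for row in rows:
        for j, r in enumerate(average_ranks(row)):
            rank_sums[j] += r
    chi2 = 12.0 / (n * k * (k + 1)) * sum(s * s for s in rank_sums) - 3.0 * n * (k + 1)
    return [s / n for s in rank_sums], chi2

# Hypothetical mean makespans for (CRO, CROLS1, CROLS2) on four instances.
data = [
    [700, 670, 668],
    [950, 930, 935],
    [1300, 1250, 1240],
    [1010, 990, 985],
]
mean_ranks, chi2 = friedman(data)
# Chi-square survival function for 2 degrees of freedom (three algorithms).
p_value = math.exp(-chi2 / 2)
print(mean_ranks, chi2, p_value)
```

With this toy data the statistic is 6.5, which exceeds the chi-square critical value of 5.991 for two degrees of freedom at α = 0.05.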

Because Friedman’s test gave a significant result, we performed Wilcoxon’s signed-rank test as a post hoc analysis to determine the pairwise differences between the three CRO-based algorithms. The results for the three reef sizes were averaged before being passed to Wilcoxon’s test, with a significance level of 0.05. Table 6 provides the results of Wilcoxon’s test.

Table 6.

Wilcoxon’s test statistic of three CRO-based algorithms.

Using the Z distribution table, the critical value of Z is 1.96 at a significance level of 0.05. According to Table 6, the results for the two pairs CRO-CROLS1 and CRO-CROLS2 are nearly identical, with Z = −3.516 (|Z| > 1.96) and p = 0.000438 < 0.05. The null hypothesis is therefore rejected at the 0.05 level; the advantage of the local search approaches in CROLS1 and CROLS2 over the original CRO algorithm is statistically significant. In contrast, Z = −1.533 (|Z| < 1.96) and p = 0.125153 > 0.05 indicate no significant performance difference between the CROLS1 and CROLS2 hybrid algorithms.
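The Z values above can be related to the data through the normal approximation of the Wilcoxon signed-rank statistic. The following is a minimal pure-Python sketch, assuming zero differences are dropped and no tie or continuity correction is applied; the function name `wilcoxon_z` and the sample data are hypothetical, and the text does not state which software or corrections the authors used.

```python
import math

def wilcoxon_z(x, y):
    """Wilcoxon signed-rank test with the normal approximation.

    Zero differences are dropped; tied absolute differences share the
    average rank; no tie or continuity correction is applied.
    Returns (Z, two-sided p value).
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    t = min(w_plus, w_minus)  # smaller of the two signed-rank sums
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (t - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail of the standard normal
    return z, p

# Hypothetical data: 16 instances where the hybrid is always better.
hybrid = [600 + 10 * i for i in range(16)]
cro = [h + i + 1 for i, h in enumerate(hybrid)]  # differences 1..16, all positive
z, p = wilcoxon_z(cro, hybrid)
print(round(z, 3), p < 0.05)  # prints: -3.516 True
```

When all 16 non-zero differences favor the same algorithm, the smaller rank sum is 0 and this approximation gives Z ≈ −3.516, which is consistent with the value quoted for the CRO-CROLS1 and CRO-CROLS2 pairs.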

4.3.2. Comparison of the Computational Result of CRO-Based Algorithms with Other Implemented Local Search Technique Algorithms

This experiment tests the algorithms’ efficiency in finding the optimal value of the problem. We chose MA and HGA, two algorithms that employ local search strategies, for comparison. Table 7 covers five algorithms: MA, HGA, the original CRO, and the two proposed hybrid algorithms CROLS1 and CROLS2. Each CRO-based algorithm was executed 30 times with three different reef sizes, and the best value obtained is reported in Table 7. The results of HGA and MA are taken from Y. Wang et al. [44] and L. Gao et al. [45], respectively.

Table 7.

Experiment results of five metaheuristic algorithms employing local search techniques.

The two hybrid algorithms, CROLS1 and CROLS2, find the optimal solution in the majority of instances with the reef size set to 20 × 20 or 30 × 30, whereas the original CRO finds it only in a few cases with a small number of jobs and machines. Compared with the other two metaheuristics, the two proposed hybrid algorithms perform better in some instances when the reef size is 20 × 20 or 30 × 30.

4.3.3. Comparison of the Computational Result of CRO-Based Algorithms with Other Contemporary Algorithms

To further verify the effectiveness of CRO and the two proposed hybrid algorithms, we performed extensive experiments on the 12 LA instances mentioned before, together with the Fisher and Thompson instances [46] FT06, FT10, and FT20; the Applegate and Cook instances [47] ORB01–ORB09; and five of the Adams et al. instances [48], ABZ05 to ABZ09. The three CRO-based algorithms were compared with five state-of-the-art algorithms from the literature: the Multi-Crossover Local Search Genetic Algorithm (mXLSGA) [49], a hybrid PSO enhanced with nonlinear inertia weight and Gaussian mutation (NGPSO) [30], the single seekers society (SSS) algorithm [34], a genetic algorithm with a critical-path-guided Giffler and Thompson crossover operator (GA-CPG-GT) [31], and the discrete wolf pack algorithm (DWPA) [33]. The best makespan produced by the CRO-based algorithms with reef size 30 × 30 over 10 independent runs was used as the performance criterion. Table 8 presents the experimental results for the 34 instances, listing the instance name, problem size (number of jobs × number of machines), best-known solution (BKS), and best solution achieved by each of the compared algorithms.

Table 8.

Comparison of experimental results between CRO-based algorithms and other state-of-the-art algorithms for 34 instances. The symbol “-” means “not evaluated in that instance”.

The original CRO algorithm finds the optimal value only in a few basic cases, such as LA01, LA02, LA06, LA07, LA11, LA12, and FT06, but its remaining results are reasonable and comparable to those of GA-CPG-GT. Both hybrid algorithms proved efficient: CROLS1 obtained the best value among the compared algorithms in 11/12 LA instances, 2/3 FT instances, 3/5 ABZ instances, and 5/10 ORB instances. CROLS2 produced comparable results, with 11/12 LA instances, 2/3 FT instances, 3/5 ABZ instances, and 5/10 ORB instances better than or equal to the results of the five state-of-the-art algorithms. CROLS1 and CROLS2 outperform the SSS, GA-CPG-GT, and DWPA algorithms and are competitive with mXLSGA and NGPSO, even outperforming NGPSO on the ABZ instances and on some ORB instances such as ORB01, ORB03, ORB08, and ORB10.

4.3.4. Improvement of Search Efficiency

To evaluate the performance enhancement of the two hybrid algorithms, CROLS1 and CROLS2, over the original CRO in terms of search performance, we use the mean deviation from the optimal makespan. The improvement in search effectiveness is calculated using the following formula:

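$$PI = \frac{M_{CRO} - M_{CROLS}}{M_{min}} \times 100\%$$

where $PI$ is the percentage of improvement, $M_{CROLS}$ is the minimum makespan obtained by CROLS, $M_{CRO}$ is the minimum makespan obtained by CRO, and $M_{min}$ is the smaller of the two makespans $M_{CROLS}$ and $M_{CRO}$.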
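As a worked illustration, the sketch below computes a percentage of improvement under the assumption that it is the gap between the CRO and CROLS makespans expressed as a percentage of the smaller of the two. This reading is inferred from the variable definitions above, not confirmed by the source; the function name and the sample values are hypothetical.

```python
def percentage_improvement(makespan_crols, makespan_cro):
    """Assumed PI: makespan gap relative to the smaller makespan, in percent."""
    smallest = min(makespan_crols, makespan_cro)
    return (makespan_cro - makespan_crols) / smallest * 100.0

# Hypothetical makespans, not taken from Table 9.
pi = percentage_improvement(makespan_crols=1250, makespan_cro=1300)
print(f"{pi:.1f}%")  # prints: 4.0%
```

Under this reading, a positive value means the hybrid algorithm found a shorter schedule than the original CRO.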

Table 9 presents the percentage performance improvement of CROLS1 and CROLS2 over the original CRO.

Table 9.

Performance improvement of the two hybrid algorithms.

Table 9 shows that the local search approaches enhance the original CRO algorithm’s search performance. The improvement is modest in the simple instances (LA01–LA22), where most PI values are below 3%. As problem complexity increases, however, both CROLS1 and CROLS2 perform markedly better than the original CRO. In LA26 and LA27, CROLS1 and CROLS2 outperform CRO at small reef sizes such as 10 × 10 and 20 × 20, with a PI of approximately 5%. At reef size 30 × 30, CRO is nearly as efficient as the two hybrid algorithms, with a PI of less than 2%: as the population size increases, the search ability of CRO improves, and even the original CRO can find a good solution. In LA40, where CRO struggles to find the best-known solution, the PI peaks at approximately 10% for all reef sizes. This demonstrates the positive impact of local search on search performance.

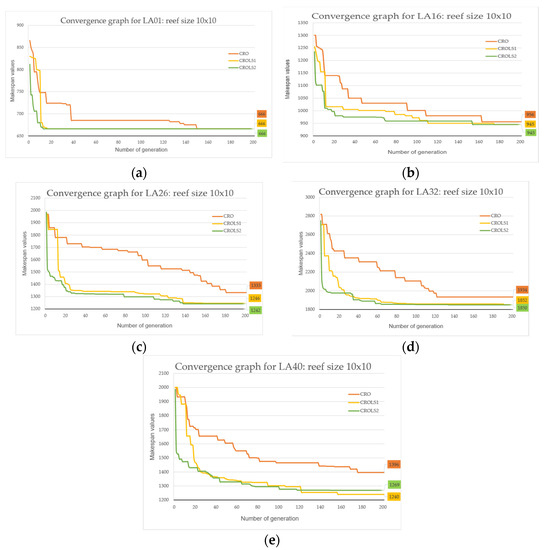

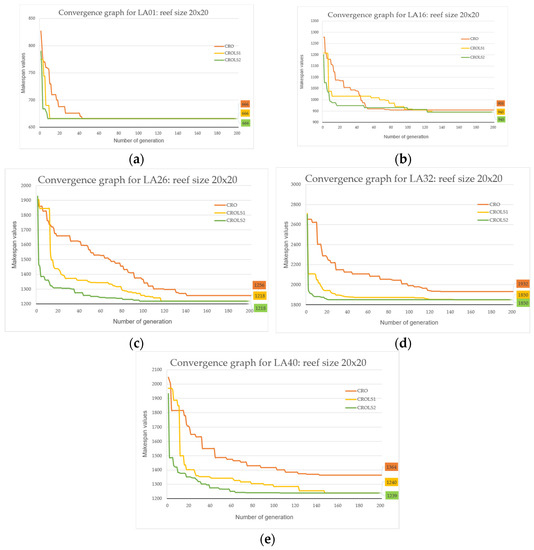

4.3.5. Convergence Ability

The ability to converge to the optimal solution is a second criterion for comparison. The data are drawn from the experimental results on the LA01 instance. Table 10 displays the average number of generations required to find the optimal solution for the three CRO-based algorithms with three different reef sizes.

Table 10.

Average number of objective function evaluations needed to find the optimal solution.

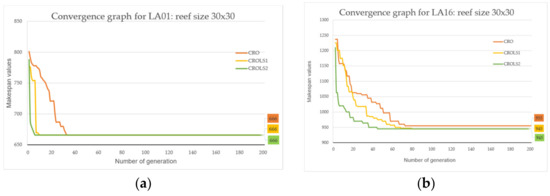

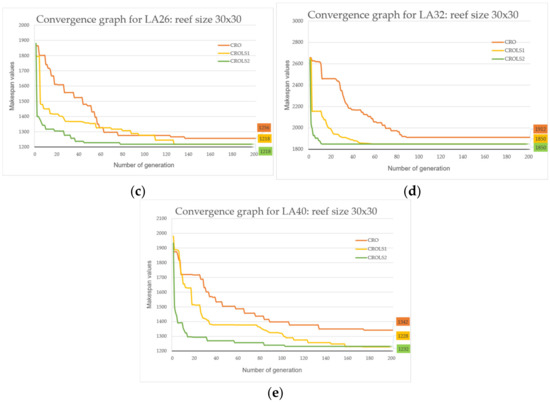

First, as the reef size increases, the number of generations needed to find the optimal solution decreases for all three algorithms, most noticeably for the original CRO. Second, the two hybrid algorithms need significantly fewer generations than the original. In this scenario, CROLS2 converges fastest. The experimental results show that local search strategies significantly enhance the search process and speed up finding optimal solutions. For best performance, local search is better applied later in the run, because it has little impact in the early stages and wastes time there. Figure 4, Figure 5 and Figure 6 depict the convergence graphs of CRO, CROLS1, and CROLS2 for three reef sizes (10 × 10, 20 × 20, and 30 × 30) on five instances: LA01, LA16, LA26, LA32, and LA40.

Figure 4.

Convergence graphs of the CRO-based algorithms with reef size 10 × 10: (a) LA01, (b) LA16, (c) LA26, (d) LA32, (e) LA40.

Figure 5.

Convergence graphs of the CRO-based algorithms with reef size 20 × 20: (a) LA01, (b) LA16, (c) LA26, (d) LA32, (e) LA40.

Figure 6.

Convergence graphs of the CRO-based algorithms with reef size 30 × 30: (a) LA01, (b) LA16, (c) LA26, (d) LA32, (e) LA40.

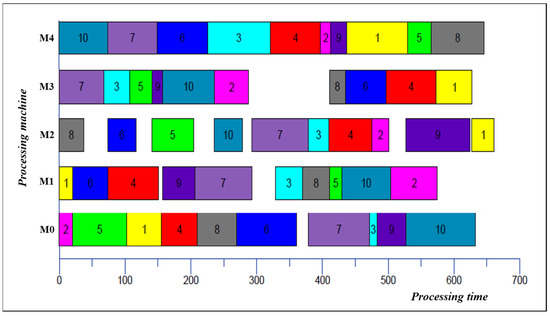

The optimal solution of the LA01 instance (makespan 666) is shown in Figure 7 as a Gantt chart with ten jobs (J1–J10) and five machines (M0–M4), where each color represents a job.

Figure 7.

Gantt chart of the optimal solution of the LA01 instance.
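To make the link between a schedule, its Gantt chart bars, and its makespan concrete, the following minimal Python sketch decodes a job sequence into start and end times. It assumes an operation-based encoding (the k-th occurrence of a job in the sequence schedules that job's k-th operation), which is a common choice for JSSP but is an assumption here; the function name `decode_schedule` and the toy instance are hypothetical and unrelated to LA01.

```python
def decode_schedule(jobs, sequence):
    """Build a semi-active schedule from an operation-based job sequence.

    jobs[j] is the ordered list of (machine, duration) operations of job j.
    The k-th occurrence of j in `sequence` schedules job j's k-th operation.
    Returns (schedule, makespan), where schedule holds
    (job, machine, start, end) tuples usable as Gantt chart bars.
    """
    next_op = [0] * len(jobs)     # index of each job's next unscheduled operation
    job_ready = [0] * len(jobs)   # time at which each job's previous operation ends
    machine_ready = {}            # time at which each machine becomes free
    schedule = []
    for j in sequence:
        machine, duration = jobs[j][next_op[j]]
        start = max(job_ready[j], machine_ready.get(machine, 0))
        end = start + duration
        schedule.append((j, machine, start, end))
        job_ready[j] = end
        machine_ready[machine] = end
        next_op[j] += 1
    return schedule, max(end for _, _, _, end in schedule)

# Hypothetical 3-job, 2-machine toy instance (not LA01).
jobs = [
    [(0, 3), (1, 2)],  # job 0: machine 0 for 3 time units, then machine 1 for 2
    [(1, 2), (0, 4)],  # job 1: machine 1 for 2, then machine 0 for 4
    [(0, 2), (1, 3)],  # job 2: machine 0 for 2, then machine 1 for 3
]
schedule, makespan = decode_schedule(jobs, [0, 1, 2, 0, 1, 2])
print(makespan)  # prints: 9
```

Each tuple in `schedule` corresponds to one colored bar on a machine row of a Gantt chart, and the makespan is the largest end time.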

5. Conclusions

This paper presents two novel hybrid algorithms, CROLS1 and CROLS2, that use distinct local search strategies. CROLS1 employs simulated annealing (SA) to search for the global optimum while escaping local optima, which increases the likelihood of obtaining the optimal solution while decreasing execution time. In the second model, CROLS2, VNS is applied to the largest possible number of candidate solutions. This strategy aims to create new, superior individuals by exploiting the potential of the whole reef and speeding up its convergence.

The experiments showed that local search makes the CRO algorithm more stable, as the gap between the best and worst values and the standard deviation both improve. Local search also improves the worst-case results of CROLS1 and CROLS2 compared to CRO and lets them reach the best-known values for each instance more readily. CROLS1 and CROLS2 considerably shorten search time compared to the original CRO, saving up to 30% of the computational time on the LA40 instance. For complex instances such as LA39 and LA40, CROLS2 surpasses CROLS1: the results are similar, but its execution time is 10% shorter. Friedman’s and Wilcoxon’s tests confirm that the improvement of CROLS1 and CROLS2 over the original algorithm is statistically significant. Compared with the best results of MA and HGA, two metaheuristic algorithms that employ other local search strategies, CROLS1 and CROLS2 demonstrate their superiority on complex instances. To further verify the effectiveness of CRO and the two proposed hybrid algorithms, we conducted extensive experiments on 34 instances of varying complexity from the LA, FT, ABZ, and ORB sets. The results were compared with five state-of-the-art algorithms from related work: mXLSGA, NGPSO, SSS, GA-CPG-GT, and DWPA. From this analysis, we conclude that the two proposed hybrid algorithms achieve competitive makespan results on JSSP instances.

This research investigates how local search improves the CRO algorithm, and the experimental evidence is positive. The efficiency of the two hybrid algorithms is further supported by the fact that their best search results are comparable to the best-known results. Numerous multi-objective optimization techniques and JSSP variants have been proposed for more challenging problems. In future work, we aim to extend the algorithm to multiple objectives and to optimize the processing time. Developing algorithms for multi-objective optimization problems is an exciting research path that would increase the relevance of JSSP in manufacturing.

Author Contributions

Conceptualization, C.-S.S.; methodology, D.-C.N.; software, D.-C.N.; validation, T.-T.N.; writing—original draft preparation, T.-T.N.; writing—review and editing, C.-S.S. and W.-W.L.; visualization, D.-C.N. and W.-W.L.; supervision, C.-S.S.; project administration, C.-S.S. and M.-F.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partly supported by the National Science and Technology Council, Taiwan, under grant numbers 111-2221-E-992-066 and 109-2221-E-992-073-MY3.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data supporting the reported results are available upon request.

Acknowledgments

The authors would also like to thank the anonymous reviewers for their constructive comments which led to improvements in the manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Baptista, M. How Important Is Production Scheduling Today? April 2020. Available online: https://blogs.sw.siemens.com/opcenter/how-important-is-production-scheduling-today/ (accessed on 31 August 2022).

- Ben Hmida, J.; Lee, J.; Wang, X.; Boukadi, F. Production scheduling for continuous manufacturing systems with quality constraints. Prod. Manuf. Res. 2014, 2, 95–111.

- Jiang, Z.; Yuan, S.; Ma, J.; Wang, Q. The evolution of production scheduling from Industry 3.0 through Industry 4.0. Int. J. Prod. Res. 2021, 60, 3534–3554.

- Graves, S.C. A Review of Production Scheduling. Oper. Res. 1981, 29, 646–675.

- Johnson, S.M. Optimal two- and three-stage production schedules with setup times included. Nav. Res. Logist. Q. 1954, 1, 61–68.

- Garey, M.R.; Johnson, D.S.; Sethi, R. The Complexity of Flowshop and Jobshop Scheduling. Math. Oper. Res. 1976, 1, 117–129.

- Zhang, J.; Ding, G.; Zou, Y.; Qin, S.; Fu, J. Review of job shop scheduling research and its new perspectives under Industry 4.0. J. Intell. Manuf. 2017, 30, 1809–1830.

- Xiong, H.; Shi, S.; Ren, D.; Hu, J. A survey of job shop scheduling problem: The types and models. Comput. Oper. Res. 2022, 142, 105731.

- Xhafa, F.; Abraham, A. Metaheuristics for Scheduling in Industrial and Manufacturing Applications. Available online: https://link.springer.com/book/10.1007/978-3-540-78985-7 (accessed on 1 July 2022).

- Pinedo, M.L. Planning and Scheduling in Manufacturing and Services. 2009. Available online: https://link.springer.com/book/10.1007/978-1-4419-0910-7 (accessed on 3 July 2022).

- Türkyılmaz, A.; Şenvar, Ö.; Ünal, I.; Bulkan, S. A research survey: Heuristic approaches for solving multi objective flexible job shop problems. J. Intell. Manuf. 2020, 31, 1949–1983.

- Guzman, E.; Andres, B.; Poler, R. Matheuristic Algorithm for Job-Shop Scheduling Problem Using a Disjunctive Mathematical Model. Computers 2021, 11, 1.

- Viana, M.S.; Contreras, R.C.; Junior, O.M. A New Frequency Analysis Operator for Population Improvement in Genetic Algorithms to Solve the Job Shop Scheduling Problem. Sensors 2022, 22, 4561.

- Salcedo-Sanz, S.; Del Ser, J.; Landa-Torres, I.; Gil-López, S.; Portilla-Figueras, J.A. The Coral Reefs Optimization Algorithm: A Novel Metaheuristic for Efficiently Solving Optimization Problems. Sci. World J. 2014, 2014, 739768.

- Salcedo-Sanz, S.; García-Díaz, P.; Portilla-Figueras, J.; Del Ser, J.; Gil-López, S. A Coral Reefs Optimization algorithm for optimal mobile network deployment with electromagnetic pollution control criterion. Appl. Soft Comput. 2014, 24, 239–248.

- Salcedo-Sanz, S.; Camacho-Gómez, C.; Mallol-Poyato, R.; Jiménez-Fernández, S.; Del Ser, J. A novel Coral Reefs Optimization algorithm with substrate layers for optimal battery scheduling optimization in micro-grids. Soft Comput. 2016, 20, 4287–4300.

- Salcedo-Sanz, S.; Gallo-Marazuela, D.; Pastor-Sánchez, A.; Carro-Calvo, L.; Portilla-Figueras, A.; Prieto, L. Offshore wind farm design with the Coral Reefs Optimization algorithm. Renew. Energy 2014, 63, 109–115.

- Bedoya-Valencia, L. Exact and Heuristic Algorithms for the Job Shop Scheduling Problem with Earliness and Tardiness over a Common Due Date. Ph.D. Thesis, Old Dominion University, Norfolk, VA, USA, 2007.

- Brucker, P.; Jurisch, B.; Sievers, B. A branch and bound algorithm for the job-shop scheduling problem. Discret. Appl. Math. 1994, 49, 107–127.

- Çaliş, B.; Bulkan, S. A research survey: Review of AI solution strategies of job shop scheduling problem. J. Intell. Manuf. 2013, 26, 961–973.

- Muthuraman, S.; Venkatesan, V.P. A Comprehensive Study on Hybrid Meta-Heuristic Approaches Used for Solving Combinatorial Optimization Problems. In Proceedings of the 2017 World Congress on Computing and Communication Technologies (WCCCT), Tiruchirappalli, India, 2–4 February 2017; pp. 185–190.

- Aarts, E.; Aarts, E.H.; Lenstra, J.K. Local Search in Combinatorial Optimization. 2003. Available online: https://press.princeton.edu/books/paperback/9780691115221/local-search-in-combinatorial-optimization (accessed on 3 July 2022).

- Gendreau, M.; Potvin, J.-Y. Metaheuristics in Combinatorial Optimization. Ann. Oper. Res. 2005, 140, 189–213.

- Yang, X.-S. (Ed.) Nature-Inspired Optimization Algorithms; Elsevier: Oxford, UK, 2014.

- Davis, L. Job Shop Scheduling with Genetic Algorithms. In Proceedings of the 1st International Conference on Genetic Algorithms, Pittsburgh, PA, USA, 24–26 July 1985; pp. 136–140.

- Holland, J.H. Adaptation in Natural and Artificial Systems: An Introductory Analysis with Applications to Biology, Control, and Artificial Intelligence. MIT Press eBooks. IEEE Xplore. Available online: https://ieeexplore.ieee.org/book/6267401 (accessed on 3 July 2022).

- Kennedy, J.; Eberhart, R. Particle Swarm Optimization. In Proceedings of the ICNN’95—International Conference on Neural Networks, Perth, Australia, 27 November–1 December 1995; Volume 4, pp. 1942–1948.

- Kirkpatrick, S.; Gelatt, C.D., Jr.; Vecchi, M.P. Optimization by Simulated Annealing. Science 1983, 220, 671–680.

- Wang, S.-J.; Tsai, C.-W.; Chiang, M.-C. A High Performance Search Algorithm for Job-Shop Scheduling Problem. Procedia Comput. Sci. 2018, 141, 119–126.

- Yu, H.; Gao, Y.; Wang, L.; Meng, J. A Hybrid Particle Swarm Optimization Algorithm Enhanced with Nonlinear Inertial Weight and Gaussian Mutation for Job Shop Scheduling Problems. Mathematics 2020, 8, 1355.

- Kurdi, M. An effective genetic algorithm with a critical-path-guided Giffler and Thompson crossover operator for job shop scheduling problem. Int. J. Intell. Syst. Appl. Eng. 2019, 7, 13–18.

- Jiang, T.; Zhang, C. Application of Grey Wolf Optimization for Solving Combinatorial Problems: Job Shop and Flexible Job Shop Scheduling Cases. IEEE Access 2018, 6, 26231–26240.

- Wang, F.; Tian, Y.; Wang, X. A Discrete Wolf Pack Algorithm for Job Shop Scheduling Problem. In Proceedings of the 2019 5th International Conference on Control, Automation and Robotics (ICCAR), Beijing, China, 19–22 April 2019; pp. 581–585.

- Hamzadayı, A.; Baykasoğlu, A.; Akpınar, Ş. Solving combinatorial optimization problems with single seekers society algorithm. Knowl.-Based Syst. 2020, 201–202, 106036.

- Garcia-Hernandez, L.; Salas-Morera, L.; Carmona-Munoz, C.; Abraham, A.; Salcedo-Sanz, S. A Hybrid Coral Reefs Optimization—Variable Neighborhood Search Approach for the Unequal Area Facility Layout Problem. IEEE Access 2020, 8, 134042–134050.

- Tsai, C.-W.; Chang, H.-C.; Hu, K.-C.; Chiang, M.-C. Parallel coral reef algorithm for solving JSP on Spark. In Proceedings of the 2016 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Budapest, Hungary, 9–12 October 2016; pp. 001872–001877.

- Cheng, R.; Gen, M.; Tsujimura, Y. A tutorial survey of job-shop scheduling problems using genetic algorithms—I. Representation. Comput. Ind. Eng. 1996, 30, 983–997.

- Cheng, R.; Gen, M.; Tsujimura, Y. A tutorial survey of job-shop scheduling problems using genetic algorithms, Part II: Hybrid genetic search strategies. Comput. Ind. Eng. 1999, 36, 343–364.

- Lee, Y.S.; Graham, E.; Jackson, G.; Galindo, A.; Adjiman, C.S. A comparison of the performance of multi-objective optimization methodologies for solvent design. In Computer Aided Chemical Engineering; Kiss, A.A., Zondervan, E., Lakerveld, R., Özkan, L., Eds.; Elsevier: Amsterdam, The Netherlands, 2019; Volume 46, pp. 37–42.

- Ruiz, J.; Garcia, G. Simulated Annealing Evolution; IntechOpen: London, UK, 2012.

- Mladenović, N.; Hansen, P. Variable neighborhood search. Comput. Oper. Res. 1997, 24, 1097–1100.

- Hansen, P.; Mladenović, N.; Urošević, D. Variable neighborhood search for the maximum clique. Discret. Appl. Math. 2004, 145, 117–125.

- Lawrence, S. Resource constrained project scheduling: An experimental investigation of heuristic scheduling techniques (Supplement). Master’s Thesis, Graduate School of Industrial Administration, Pittsburgh, PA, USA, 1984.

- Qing-Dao-Er-Ji, R.; Wang, Y. A new hybrid genetic algorithm for job shop scheduling problem. Comput. Oper. Res. 2012, 39, 2291–2299.

- Gao, L.; Zhang, G.; Zhang, L.; Li, X. An efficient memetic algorithm for solving the job shop scheduling problem. Comput. Ind. Eng. 2011, 60, 699–705.

- Fisher, C.; Thompson, G. Probabilistic Learning Combinations of Local Job-shop Scheduling Rules; Industrial Scheduling: Englewood Cliffs, NJ, USA, 1963; pp. 225–251.

- Applegate, D.; Cook, W. A Computational Study of the Job-Shop Scheduling Problem. Informs J. Comput. 1991, 3, 149–156.

- Adams, J.; Balas, E.; Zawack, D. The Shifting Bottleneck Procedure for Job Shop Scheduling. Manag. Sci. 1988, 34, 391–401.

- Viana, M.S.; Junior, O.M.; Contreras, R.C. An Improved Local Search Genetic Algorithm with Multi-crossover for Job Shop Scheduling Problem. In Artificial Intelligence and Soft Computing; Springer: Cham, Switzerland, 2020; pp. 464–479.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).