1. Introduction

Deepfake is a category of synthetic media [1] in which fake content is generated from existing content, usually a person’s media. The term “deepfake” was coined in 2017 by a Reddit user named “deepfakes.” Deepfake content is based on video, audio, and face-swapping technology. Artificial intelligence-based generative adversarial networks [2] are used to generate deepfakes. Cybercrimes involving deepfakes [3] include identity theft, cyber extortion, impostor scams, fake news, incitement to violence, financial fraud, cyberbullying, fake obscene celebrity videos [4] used for blackmail, interference in democratic elections, and many more. Detecting deepfake media is therefore a major challenge and is in high demand in digital forensics.

Deepfakes are mainly used to threaten organizations and individuals, and deepfake-related cybercrimes are rising steadily. Creating deepfakes for such purposes is unethical and can constitute a severe crime. According to a Sensity report [5], over 96% of deepfakes contain obscene content. Deepfake victims have been reported in the United Kingdom, the United States, Canada, India, and South Korea. In 2019, cybercriminals used fake audio content to scam a chief executive officer into transferring $243,000 to their bank account [6]. The rise in deepfake-related crime must be controlled, which requires advanced detection tools.

Face recognition technology verifies an individual’s identity by matching a face against a face database [7]. Face recognition is a category of biometric security, and face recognition systems are primarily used in law enforcement and security to control cybercrime. Both two-dimensional (pixel-based) and three-dimensional face images are used for face detection. The critical patterns for distinguishing faces include the chin, ears, contour of the lips, shape of the cheekbones, depth of the eye sockets, and distance between the eyes.
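
To make the last of these patterns concrete, a minimal Python sketch follows. It assumes the eye centers and the left and right face edges are already available as 2D landmark coordinates (in practice they would come from a facial landmark detector). The function names and coordinates are hypothetical; dividing the inter-eye distance by the face width is one simple way to obtain a measurement that does not change with image scale.

```python
import math

# Hypothetical 2D landmark coordinates (x, y) in pixels; a real system
# would obtain these from a facial landmark detector.
Point = tuple[float, float]


def distance(p: Point, q: Point) -> float:
    """Euclidean distance between two landmark points."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def normalized_eye_distance(left_eye: Point, right_eye: Point,
                            left_face_edge: Point, right_face_edge: Point) -> float:
    """Inter-eye distance divided by face width, giving a scale-invariant ratio."""
    face_width = distance(left_face_edge, right_face_edge)
    if face_width == 0:
        raise ValueError("face width must be non-zero")
    return distance(left_eye, right_eye) / face_width


# The same (hypothetical) face at two image scales yields the same ratio.
small = normalized_eye_distance((30, 40), (70, 40), (10, 50), (90, 50))
large = normalized_eye_distance((60, 80), (140, 80), (20, 100), (180, 100))
print(small, large)  # 0.5 0.5
```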

Face recognition technology uses deep learning-based networks [8] to identify and learn specific face patterns, converting face-related data into a mathematical representation. Deep learning methods are best known for this kind of representation learning. Deep neural networks consist of multiple layers of connected neurons. Deep learning methods learn prominent facial biometric patterns [9] and recognize faces with high accuracy. Databases of faces are used to train these deep learning-based models, which can outperform the face recognition capabilities of humans.
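
The idea of turning a face into a mathematical representation and matching it against a face database can be sketched as follows. This is a minimal illustration, not the method of this study: it assumes a trained network has already mapped each face to a fixed-length embedding vector, uses cosine similarity as the comparison measure, and picks a hypothetical acceptance threshold of 0.8. The three-dimensional vectors and the names in the database are made up for readability; real embeddings typically have far more dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two face embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("embeddings must be non-zero vectors")
    return dot / (norm_a * norm_b)


def identify(probe: list[float], database: dict[str, list[float]],
             threshold: float = 0.8):
    """Return the best-matching enrolled identity, or None if no stored
    embedding is similar enough to the probe (hypothetical threshold)."""
    best_name, best_score = None, -1.0
    for name, embedding in database.items():
        score = cosine_similarity(probe, embedding)
        if score > best_score:
            best_name, best_score = name, score
    return best_name if best_score >= threshold else None


# Hypothetical face database of precomputed embeddings.
database = {"alice": [0.9, 0.1, 0.2], "bob": [0.1, 0.8, 0.5]}
print(identify([0.88, 0.12, 0.25], database))  # alice
print(identify([0.0, 0.0, 1.0], database))     # None
```

Euclidean distance between normalized embeddings is an equally common choice; cosine similarity is used here only because it is insensitive to the overall length of the vectors.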

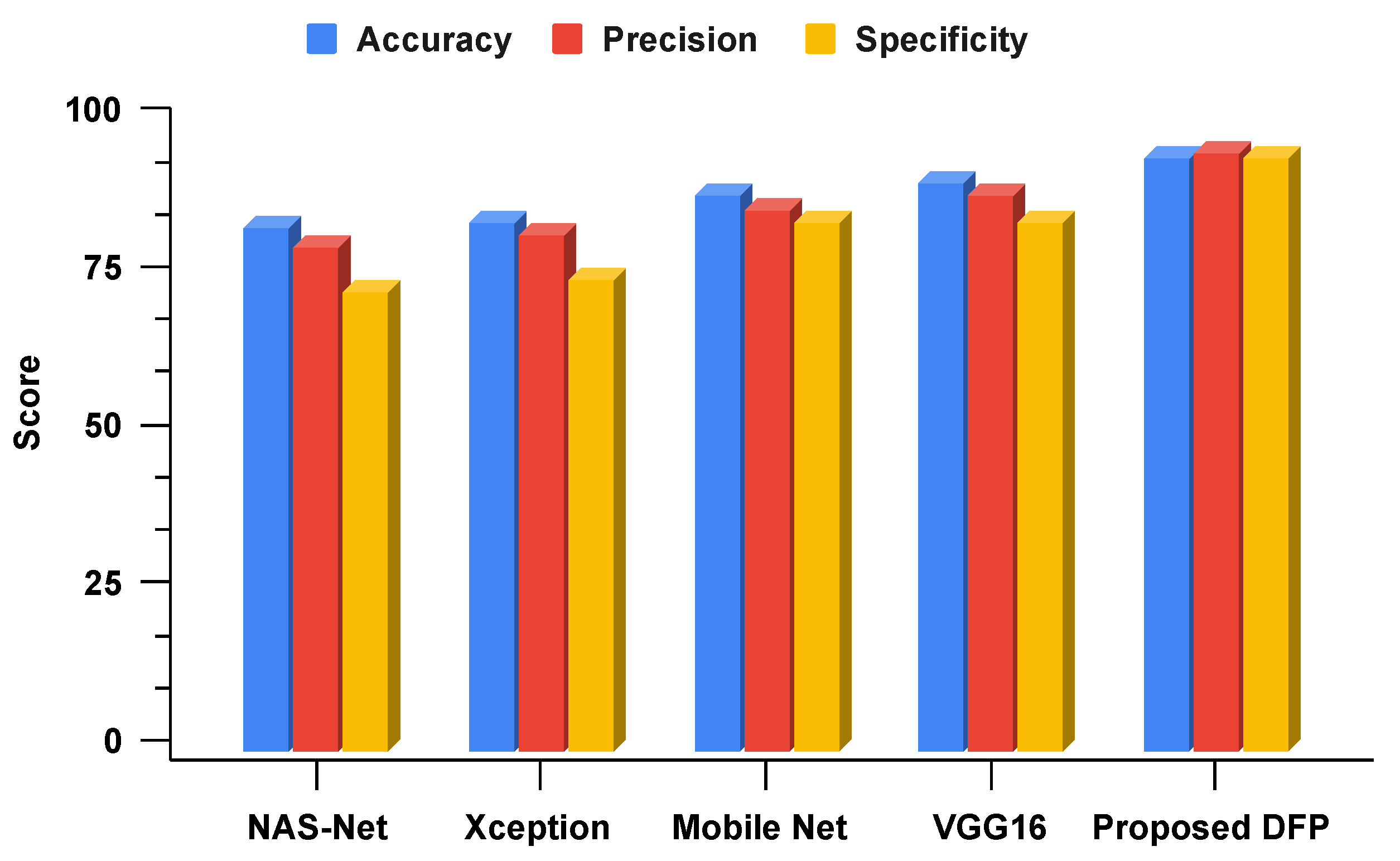

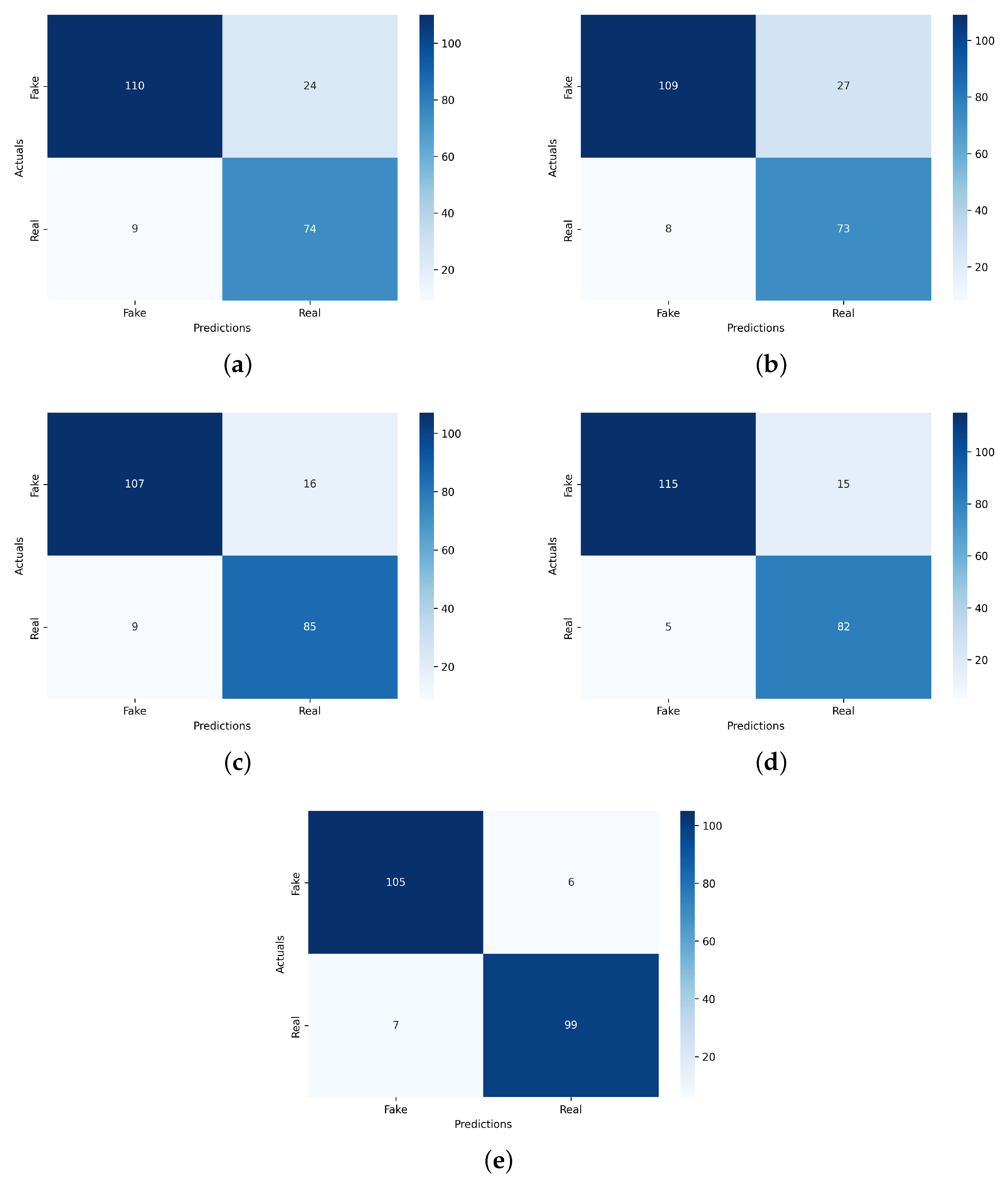

The primary contributions of this study to deepfake detection are as follows. Transfer learning and deep learning-based neural network techniques are compared. The transfer learning techniques employed are Xception, NASNet, MobileNet, and VGG16. A novel DFP approach based on a hybrid of VGG16 and a convolutional neural network architecture is proposed for deepfake detection. The proposed DFP outperformed other state-of-the-art studies and the transfer learning techniques. Hyperparameter tuning is applied to determine the best-fit parameters for the neural network techniques and thereby maximize their accuracy scores. The training and validation behavior of the employed neural network techniques is examined in time-series graphs. A confusion matrix analysis of the employed neural network techniques is conducted to validate the performance metrics.
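
The performance metrics validated through confusion matrix analysis can all be derived from the four confusion-matrix counts. The sketch below assumes binary labels with 1 for a deepfake and 0 for a real sample; the label lists are hypothetical and are not results from this study.

```python
def confusion_matrix(y_true, y_pred):
    """Count (TP, FP, FN, TN) for binary labels, where 1 = fake and 0 = real."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp, fp, fn, tn


def metrics(tp, fp, fn, tn):
    """Accuracy, precision, recall, and F1 score from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical ground truth and predictions for ten images.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 0]
counts = confusion_matrix(y_true, y_pred)
print(counts)            # (3, 1, 1, 5)
print(metrics(*counts))  # {'accuracy': 0.8, 'precision': 0.75, 'recall': 0.75, 'f1': 0.75}
```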

The remainder of this study is organized as follows. Section 2 examines the related literature on deepfakes. Section 3 analyzes the research workflow underlying the study methodology. Section 4 describes the deepfake dataset used. Section 5 examines the transfer learning-based neural network techniques. Section 6 describes the novel proposed deep learning-based approach. Section 7 presents the hyperparameter tuning of the employed neural network techniques. Section 8 discusses the results and the scientific validation of our research. Section 9 concludes the study.

2. Related Literature

This section examines the deepfake-related literature and describes recent state-of-the-art studies on deepfake detection. The analysis focuses on the datasets used and the reported accuracy scores. The deepfake detection literature is summarized in Table 1.

A deep learning-based methodology for video deepfake detection was proposed in [10], with XGBoost as the proposed approach. Face regions were extracted from video frames using a YOLO face detector [11], and features were extracted from the faces using CNN and Inception-ResNet techniques. The Celeb-DF and FaceForensics++ datasets were used for model building and training. The features extracted from the faces were fed to XGBoost for detection. The proposed approach achieved an accuracy of 90% for video deepfake detection.

Automatic deepfake video classification using deep learning was proposed in [12]. MobileNet and Xception were the deep learning techniques applied, and the FaceForensics++ dataset was used for model training and testing. The accuracy scores of the applied techniques ranged from 91% to 98% for deepfake video classification.

Deepfake recognition based on human eye-blinking patterns using deep learning was proposed in [13], with DeepVision as the proposed approach. A static dataset of deepfake eye-blinking images based on eight video frames was used to train and test the proposed model, which achieved an accuracy of 87% for deepfake detection.

Deepfake detection based on spectral, spatial, and temporal inconsistencies using multimodal deep learning techniques was proposed in [14]. The Facebook Deepfake Detection Challenge dataset was used for model building. The proposed multimodal network was based on Long Short-Term Memory (LSTM) networks and achieved an accuracy of 61% for deepfake detection.

Deepfake image detection using pairwise deep learning was proposed in [15]. All experiments were carried out using the CelebA dataset, and generative adversarial networks were used to generate the fake and real image pairs. DenseNet and a fake feature network were proposed for deepfake image detection. The proposed approach achieved an accuracy of 90% for deepfake detection.

Deepfake detection in manipulated videos using ensemble-based learning approaches was proposed in [17]. DeepfakeStack was the proposed approach, and it was trained and tested using the FaceForensics++ dataset.

Medical deepfake image detection based on machine learning and deep learning was proposed in [16]. The annotated CT-GAN dataset was used to build the learning techniques. The proposed DenseNet achieved an accuracy of 80% on multiclass delocalized medical deepfake images.

The literature review demonstrates that no previous study has used an optimized hybrid model architecture like the one proposed in our study. Our proposed approach achieved higher metric scores for deepfake detection than previous studies. The key finding of our study is a novel optimized hybrid model for deepfake detection. The model architecture used in our study is less complex, and the proposed optimized model processes data efficiently. In conclusion, our research has many valuable applications in cybersecurity for deepfake detection.

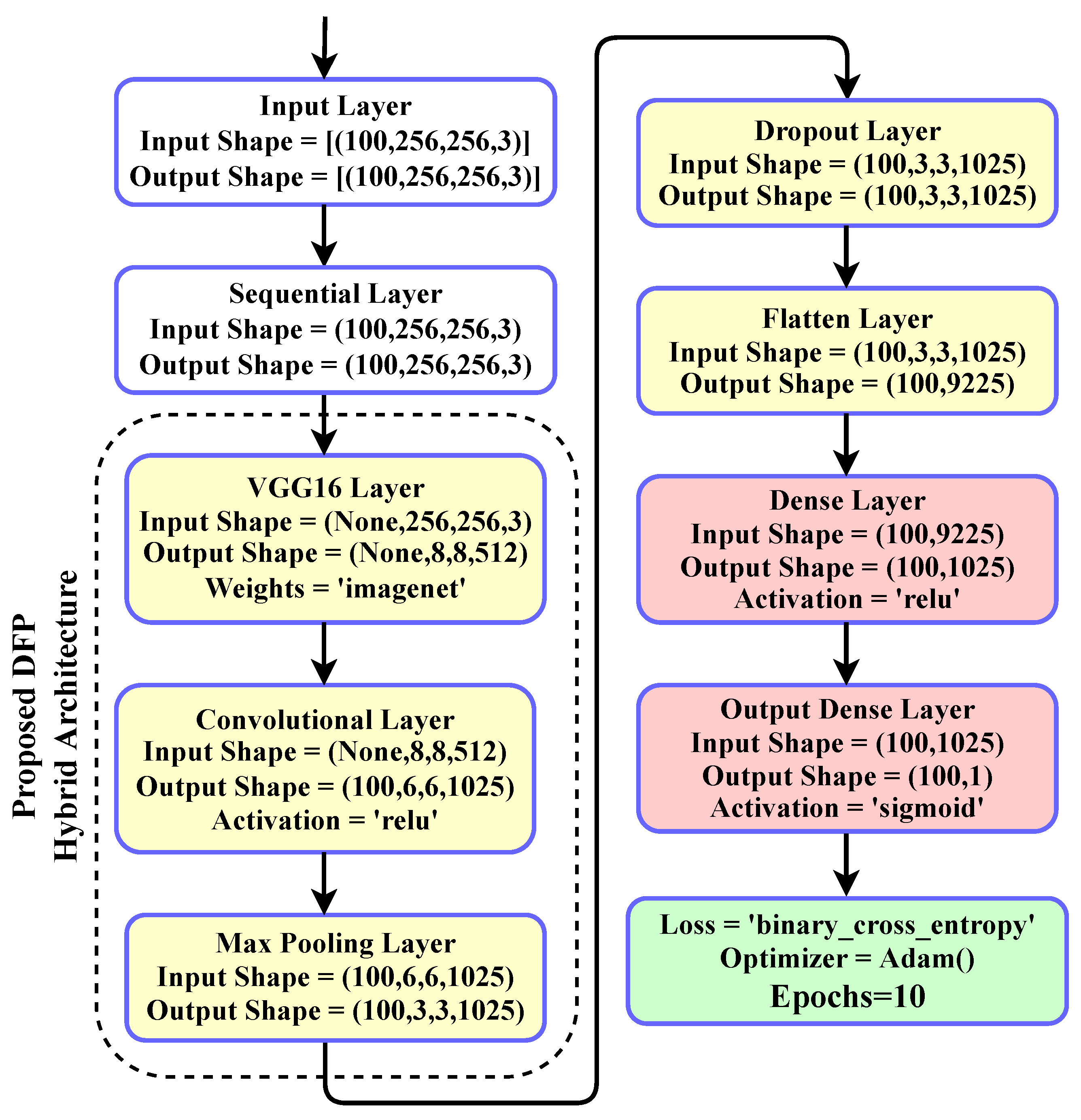

6. Novel Proposed Approach

A novel DFP approach is proposed based on a hybrid of VGG16 and a convolutional neural network architecture. To the best of our knowledge, this study is the first to use a hybrid of transfer learning and deep learning-based neural network architectures for deepfake detection. The VGG16 and convolutional neural network layers were combined to form the proposed model architecture. Convolutional neural networks are a family of artificial neural networks used primarily in image recognition applications. They are specifically designed to process pixel data, which they represent as multidimensional arrays. The primary aim of an artificial neural network is to learn patterns from historical data and make predictions on unseen data.
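
To illustrate how a convolutional layer processes pixel data stored as a multidimensional array, the following minimal sketch slides a small kernel over a single-channel image and applies a ReLU activation. The image and kernel values are hypothetical and hand-picked so the kernel responds to a dark-to-bright vertical edge; in a trained CNN the kernel values are learned from data, and real inputs also carry a channel dimension. As in common deep learning libraries, the sketch computes cross-correlation (the kernel is not flipped), with no padding and a stride of 1.

```python
def conv2d(image, kernel):
    """Valid (no padding), stride-1 2D cross-correlation of one channel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = [[0.0] * out_w for _ in range(out_h)]
    for i in range(out_h):
        for j in range(out_w):
            # Weighted sum of the kernel-sized window starting at (i, j).
            output[i][j] = sum(
                image[i + m][j + n] * kernel[m][n]
                for m in range(kh)
                for n in range(kw)
            )
    return output


def relu(feature_map):
    """Element-wise ReLU: negative responses are set to zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Hypothetical 4 x 5 grayscale image with a bright vertical band.
image = [[0.0, 0.0, 9.0, 9.0, 0.0] for _ in range(4)]
# Hand-picked 2 x 2 kernel that responds to dark-to-bright vertical edges.
kernel = [[-1.0, 1.0],
          [-1.0, 1.0]]

for row in relu(conv2d(image, kernel)):
    print(row)  # [0.0, 18.0, 0.0, 0.0] on each of the three rows
```

The bright-to-dark edge produces a response of -18.0 before the activation, which ReLU sets to zero, so the resulting feature map marks only the dark-to-bright transition.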

The layer architecture and configuration parameters of the proposed model are analyzed here. The configuration parameters of the novel proposed approach are listed in Table 6, which describes the units and parameters used to build the proposed model. The architecture is visualized in Figure 3, which shows how deepfake image data flows from the input layer to the prediction layer in the proposed technique. The architecture is built from a hybrid of VGG16 and convolutional neural network layers, combined with pooling, dropout, flatten, and fully connected layers.
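
The data flow from input to prediction layers can be sketched as a shape trace. The configuration below is hypothetical and is not taken from Table 6. It assumes that the convolutional layers sit on top of a VGG16 convolutional base whose output, for a 224 × 224 × 3 input, is a 7 × 7 × 512 feature map. It also assumes the filter counts, 3 × 3 'same'-padded convolutions, 2 × 2 non-overlapping max pooling, and a single output unit for the real/fake decision. The layer encoding used by `trace_shapes` is invented for this illustration.

```python
def trace_shapes(input_shape, layers):
    """Propagate a (height, width, channels) shape through a list of layers.

    Hypothetical layer encoding:
      ("conv", filters)  3 x 3 convolution with 'same' padding
      ("pool", size)     non-overlapping max pooling, 'valid' padding
      ("dropout", rate)  dropout (shape unchanged)
      ("flatten",)       flatten to a vector
      ("dense", units)   fully connected layer
    """
    shape = input_shape
    trace = [("input", shape)]
    for layer in layers:
        kind = layer[0]
        if kind == "conv":
            h, w, _ = shape
            shape = (h, w, layer[1])  # 'same' padding keeps height and width
        elif kind == "pool":
            h, w, c = shape
            shape = (h // layer[1], w // layer[1], c)
        elif kind == "dropout":
            pass  # dropout does not change the shape
        elif kind == "flatten":
            size = 1
            for dim in shape:
                size *= dim
            shape = (size,)
        elif kind == "dense":
            shape = (layer[1],)
        else:
            raise ValueError(f"unknown layer type: {kind}")
        trace.append((kind, shape))
    return trace


# Hypothetical head on top of the VGG16 convolutional base output (7, 7, 512).
head = [
    ("conv", 256), ("conv", 128), ("conv", 64),  # three convolutional stacks
    ("pool", 2),
    ("dropout", 0.5),
    ("flatten",),
    ("dense", 128),
    ("dense", 1),  # single real/fake output unit
]

for name, shape in trace_shapes((7, 7, 512), head):
    print(f"{name:8s} {shape}")
```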

The novel proposed DFP model has several advantages over state-of-the-art models. The proposed DFP network architecture is fully optimized, and the comparative architectural analysis demonstrates that the proposed model is less complex and processes data efficiently. High performance metric scores were obtained for deepfake detection using the proposed three stacks of convolutional neural network layers.
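
One common way to quantify model complexity is to count trainable parameters per layer. For a convolutional layer with $k_h \times k_w$ kernels, $C_{\text{in}}$ input channels, and $C_{\text{out}}$ filters (one bias per filter), and for a fully connected layer with $n_{\text{in}}$ inputs and $n_{\text{out}}$ units, the standard counts are given below. Pooling, dropout, and flatten layers add no trainable parameters. The worked numbers use hypothetical layer sizes for illustration only and do not describe the DFP configuration in Table 6.

$$
P_{\text{conv}} = (k_h k_w C_{\text{in}} + 1)\,C_{\text{out}}, \qquad
P_{\text{dense}} = (n_{\text{in}} + 1)\,n_{\text{out}}
$$

$$
(3 \cdot 3 \cdot 512 + 1) \cdot 256 = 4609 \cdot 256 = 1{,}179{,}904, \qquad
(576 + 1) \cdot 128 = 577 \cdot 128 = 73{,}856
$$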