Lightweight and Parameter-Optimized Real-Time Food Calorie Estimation from Images Using CNN-Based Approach

Abstract

1. Introduction

- Developing a parameter-optimized, lightweight CNN model that automatically analyzes food images and estimates their calorie content by detecting the distinct food items in them;

- Training the model and optimizing its performance to achieve an accuracy of 85%;

- Undertaking a comparative assessment of different configurations of the CNN-based approach in terms of accuracy, speed, and complexity.

2. Literature Review

| Studies | Year | Food Calorie Estimation | Real Time | Optimize Time Complexity | Optimize Space Complexity | Satisfactory Score |
|---|---|---|---|---|---|---|
| Hoashi et al. [14] | 2010 | − | − | − | − | ✓ |
| Pouladzadeh et al. [15] | 2014 | ✓ | − | ✓ | ✓ | − |
| Liang & Li [16] | 2017 | ✓ | − | − | − | ✓ |
| Raikwar et al. [17] | 2018 | ✓ | − | ✓ | ✓ | − |
| De Menezes et al. [18] | 2019 | − | − | − | − | ✓ |
| Zaman et al. [31] | 2019 | − | ✓ | ✓ | ✓ | − |
| Poply [13] | 2020 | ✓ | − | − | − | ✓ |
| Latif et al. [19] | 2020 | ✓ | − | − | − | ✓ |
| Shen et al. [20] | 2020 | ✓ | − | − | − | ✓ |
| Ruede et al. [25] | 2020 | ✓ | − | − | − | ✓ |
| Kasyap & Jayapandian [22] | 2021 | ✓ | − | − | − | − |
| Ayon et al. [23] | 2021 | ✓ | − | − | − | − |
| Darapaneni et al. [21] | 2021 | ✓ | − | − | − | ✓ |
| Okamoto et al. [24] | 2021 | ✓ | − | − | − | − |
| Naritomi & Yanai [26] | 2021 | ✓ | − | − | − | − |
| Jelodar & Sun [27] | 2021 | ✓ | − | − | − | − |
| Naritomi & Yanai [28] | 2021 | ✓ | ✓ | − | − | ✓ |
| Siemon et al. [30] | 2021 | − | − | − | − | ✓ |
| Subaran et al. [29] | 2022 | ✓ | − | − | − | ✓ |
| Proposed system | 2022 | ✓ | ✓ | ✓ | ✓ | ✓ |

3. Preliminaries

3.1. Real-Time System

3.2. Deep Learning and CNN

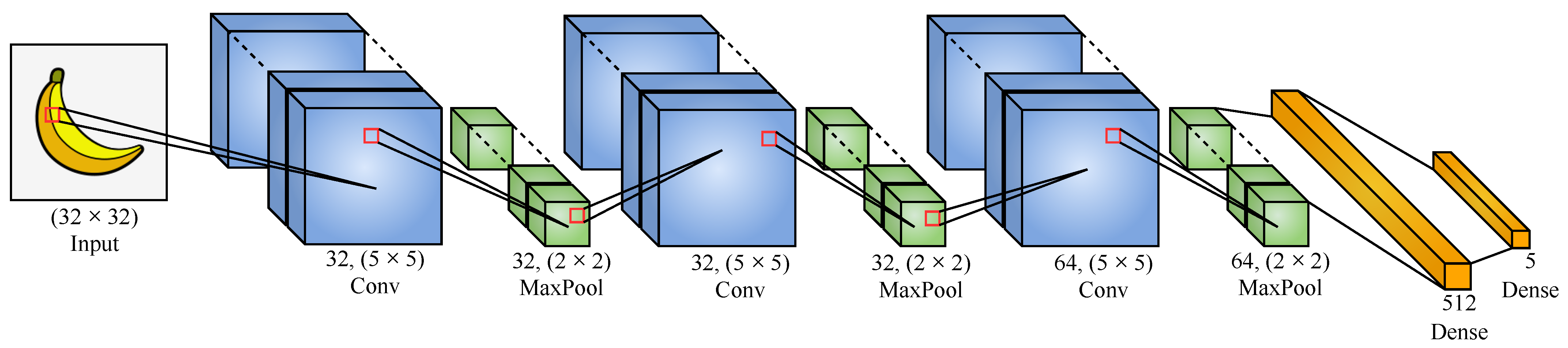

- Convolution Layer: A computer stores an image as a matrix that holds the value of every pixel. In this layer, a set of filters plays the central role. A filter is also a matrix, but smaller than the image matrix. Within a convolution layer, all filters have the same dimensions, but their values differ. Each filter slides over the image matrix; at every position, it computes the dot product between the filter and the overlapping region of the image (multiplying corresponding values and summing the products). The resulting values form a new matrix, the feature map, which is the output of this layer.

- Max Pooling Layer: A max-pooling layer is commonly placed after one or more convolution layers. It slides a small window over the matrix generated by the convolution layer and keeps only the maximum value in each window, retaining the dominant features while discarding the less important ones. Because this downsampling shrinks the feature maps, it reduces the computation required by the subsequent layers and makes the deep learning model more efficient.

- Dense Layer: The dense layer is a fully connected layer: every neuron of the dense layer is connected to every output node of the previous layer. It effectively acts as a small traditional neural network inside the CNN [36]. Each neuron receives all outputs of the previous layer and provides one output to the next layer.

- ReLU (Rectified Linear Unit) Activation: ReLU, defined as $f(x) = \max(0, x)$, increases the nonlinear properties of the decision function and of the overall network without affecting the receptive fields of the convolution layer. ReLU is often preferred over other nonlinear functions used in CNNs (such as the hyperbolic tangent, the absolute value of the hyperbolic tangent, and the sigmoid) because it trains the neural network several times faster without a significant penalty to generalization accuracy.

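- ADAM Optimizer: Adam is a stochastic gradient-based optimization method that can be used in place of classical stochastic gradient descent to iteratively update network weights based on the training data [37]. Like AdaDelta and RMSprop, it keeps an exponentially decaying average of past squared gradients, $v_t$; in addition, it keeps an exponentially decaying average of past gradients, $m_t$, i.e., $m_t = \beta_1 m_{t-1} + (1 - \beta_1) g_t$ and $v_t = \beta_2 v_{t-1} + (1 - \beta_2) g_t^2$, where $g_t$ is the gradient at step $t$ and $\beta_1, \beta_2 \in [0, 1)$ are the decay rates.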

- SoftMax Function: This function transforms a vector of K real values into a vector of K values that sum to one. Although the input values may be positive, negative, zero, or greater than one, SoftMax maps them to values between 0 and 1 that can be interpreted as probabilities: $\sigma(\mathbf{z})_i = \frac{e^{z_i}}{\sum_{j=1}^{K} e^{z_j}}$ for $i = 1, \dots, K$. Here, the $z_i$ values are the elements of the input vector and may take any real value. The normalizing term in the denominator guarantees that the outputs of the function sum to one.
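
The layer operations listed above can be traced on a toy input. The following minimal pure-Python sketch runs a single-channel forward pass: a valid (no padding, stride 1) convolution, ReLU, non-overlapping 2 × 2 max pooling, flattening, a dense layer, and SoftMax. The function names, the 5 × 5 image, the filter, and the dense weights are illustrative assumptions rather than the configuration used in this study; as in most deep learning libraries, the convolution is computed without flipping the filter (strictly, a cross-correlation).

```python
import math

def conv2d_valid(image, kernel):
    """Slide the kernel over the image (stride 1, no padding); dot product at each position."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def relu(matrix):
    """Element-wise max(0, x)."""
    return [[max(0.0, x) for x in row] for row in matrix]

def max_pool2d(matrix, size=2):
    """Keep the largest value in each non-overlapping size x size window."""
    return [
        [
            max(matrix[i + a][j + b] for a in range(size) for b in range(size))
            for j in range(0, len(matrix[0]) - size + 1, size)
        ]
        for i in range(0, len(matrix) - size + 1, size)
    ]

def dense(inputs, weights, biases):
    """Fully connected layer: every output neuron sees every input value."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]

def softmax(z):
    """Map K real values to K values in (0, 1) that sum to one."""
    m = max(z)  # subtracting the max avoids overflow and does not change the result
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical 5x5 single-channel "image" and 2x2 filter.
image = [
    [1, 2, 0, 1, 3],
    [0, 1, 3, 2, 1],
    [2, 0, 1, 0, 2],
    [1, 3, 2, 1, 0],
    [0, 1, 0, 2, 1],
]
kernel = [[1, 0], [0, -1]]

features = max_pool2d(relu(conv2d_valid(image, kernel)))  # 5x5 -> 4x4 -> 2x2
flat = [x for row in features for x in row]                 # flatten to 4 values
logits = dense(flat, weights=[[0.5, -0.2, 0.1, 0.3], [-0.4, 0.6, 0.2, -0.1]], biases=[0.0, 0.1])
probs = softmax(logits)                                     # two class probabilities
```

In the actual model, each convolution works on multi-channel inputs and uses many filters; the single-channel version keeps the arithmetic easy to follow by hand.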

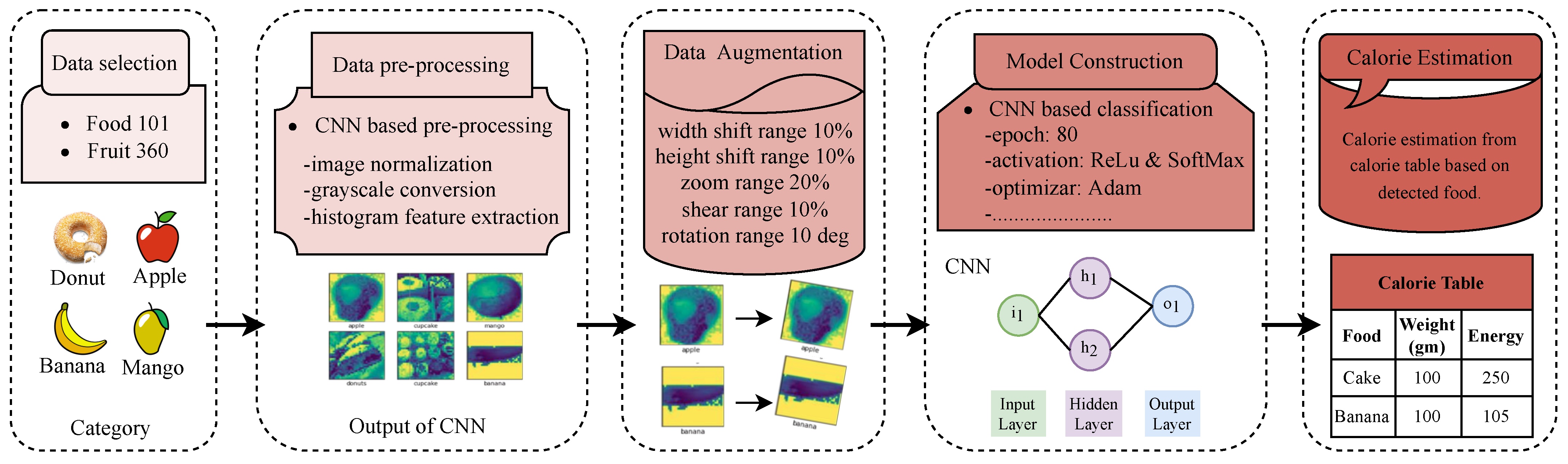
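
The Adam optimizer described in this section can be sketched for a single scalar parameter. This minimal version is an illustrative assumption rather than the training code of this study: it minimizes the toy loss $(w - 3)^2$ using the decay rates recommended by Kingma and Ba [37] ($\beta_1 = 0.9$, $\beta_2 = 0.999$, $\epsilon = 10^{-8}$) together with the bias-corrected estimates $\hat{m}_t$ and $\hat{v}_t$ from that paper. The learning rate of 0.1 and the number of steps are chosen only so that the toy example converges quickly.

```python
import math

def adam_minimize(grad, w0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    """Minimize a 1-D function, given its gradient, with the Adam update rule."""
    w, m, v = w0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta1 * m + (1 - beta1) * g        # decaying average of past gradients
        v = beta2 * v + (1 - beta2) * g * g    # decaying average of past squared gradients
        m_hat = m / (1 - beta1 ** t)           # bias correction for the zero initialization
        v_hat = v / (1 - beta2 ** t)
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return w

# Toy loss f(w) = (w - 3)^2 with gradient 2(w - 3); its minimum is at w = 3.
w_star = adam_minimize(lambda w: 2 * (w - 3), w0=0.0)
```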

4. Methodology

4.1. Data Set Selection

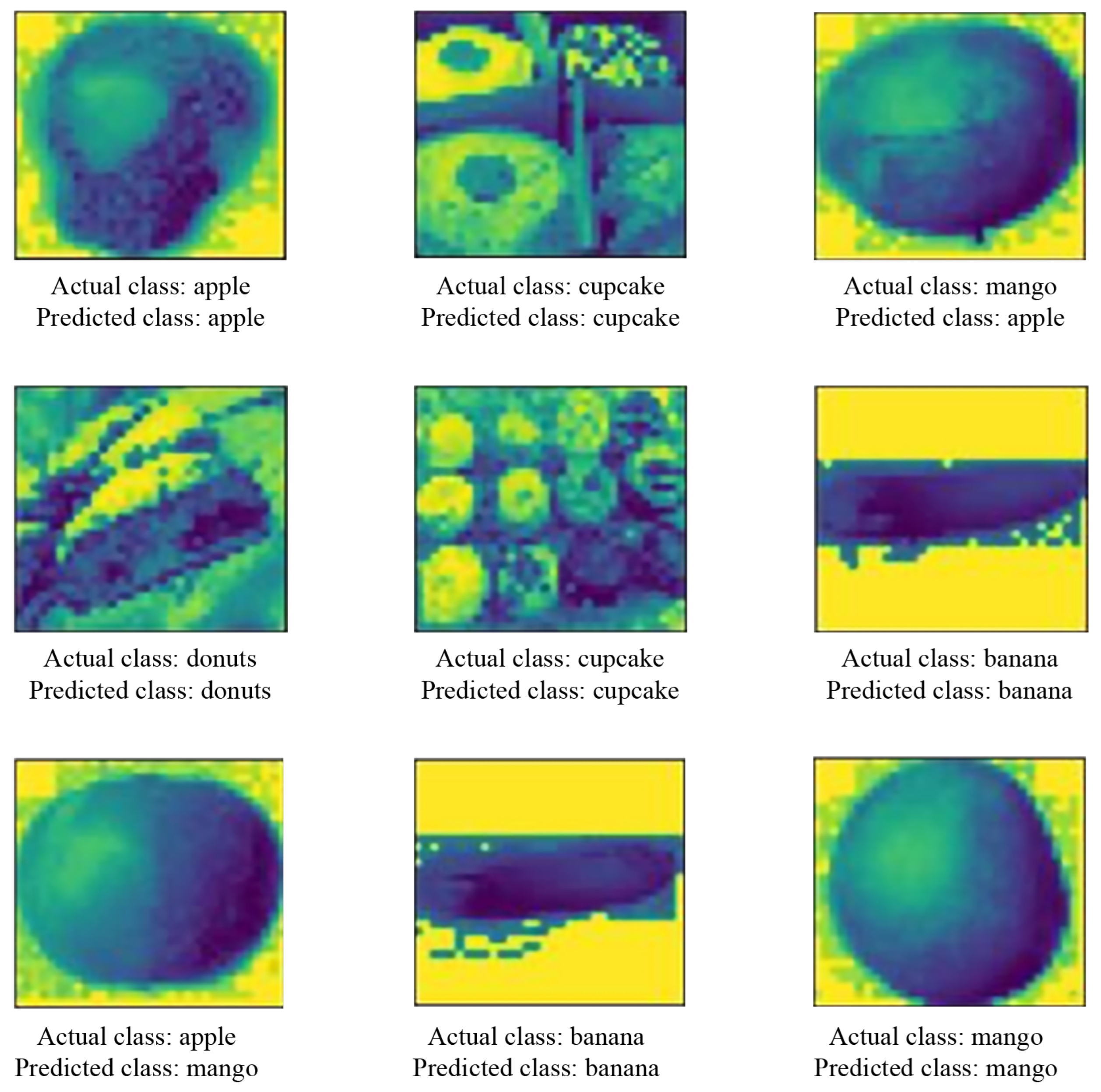

4.2. Data Set Preprocessing

4.3. Data Augmentation

4.4. Model Construction

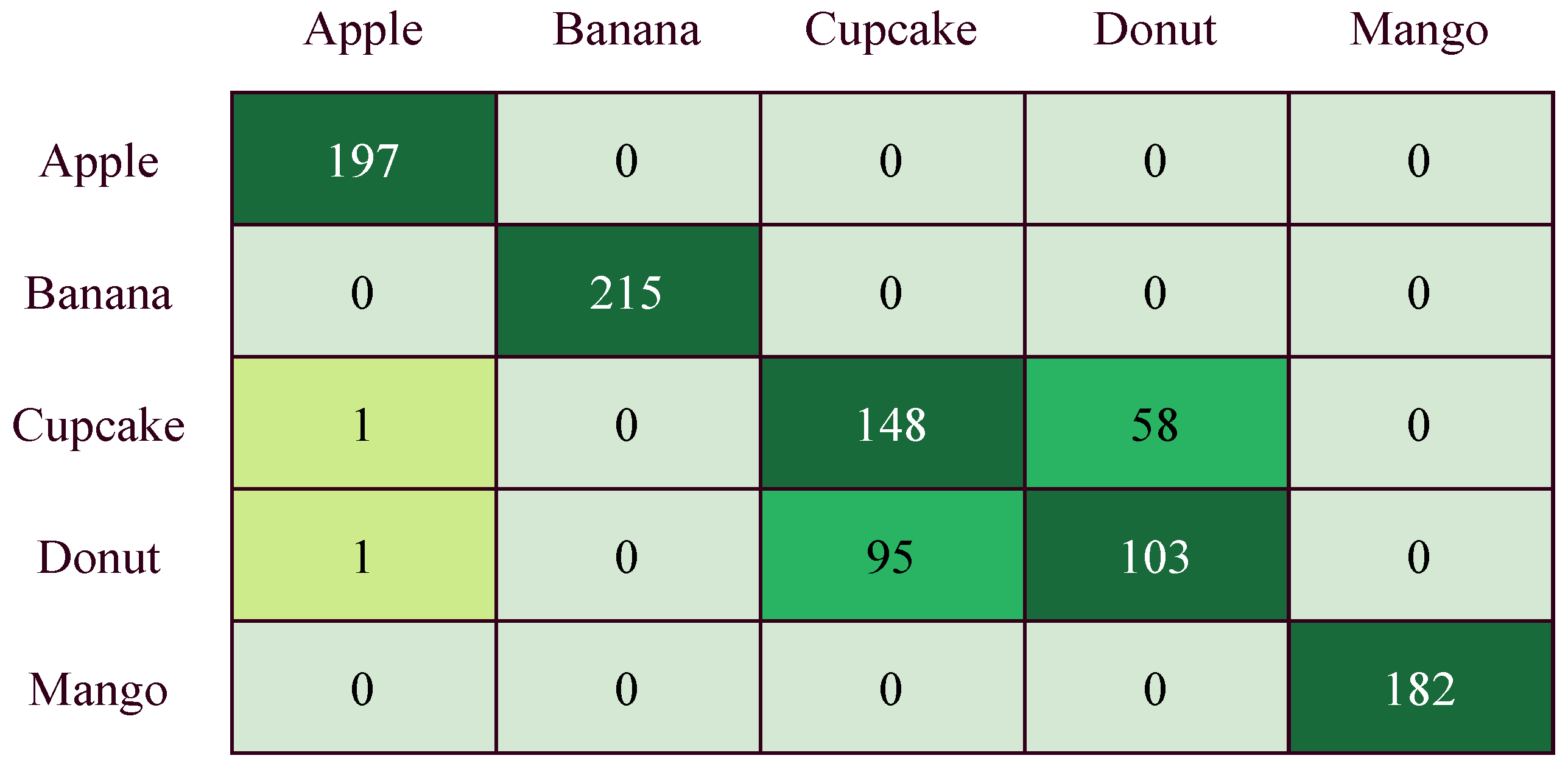

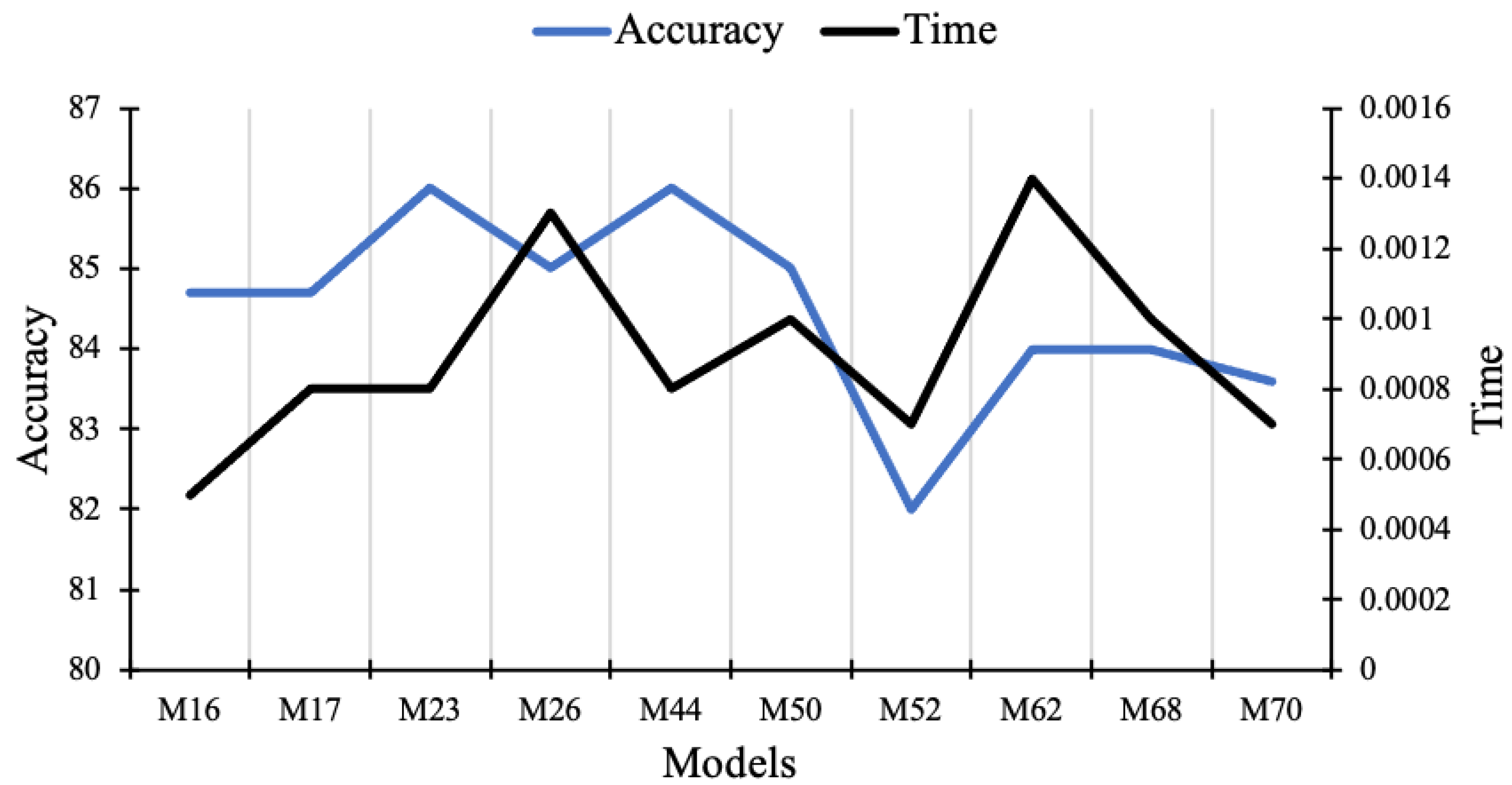

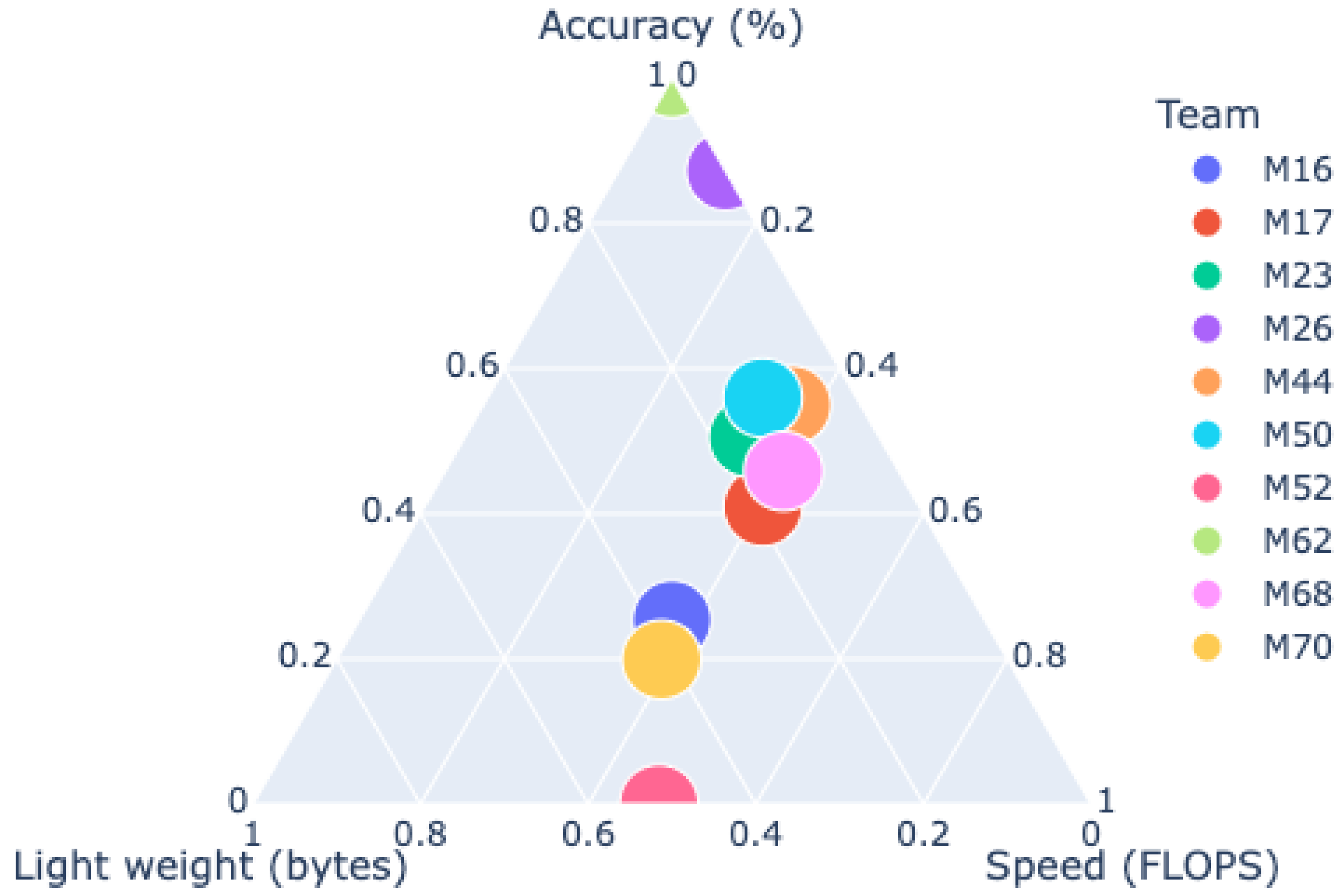

5. Results and Findings

- FLOPs: To estimate the inference time of a model, we calculated the total number of computations it performs, measured in floating point operations (FLOPs). A floating point operation is any arithmetic operation, such as an addition, subtraction, multiplication, or division, that involves floating point values. The FLOP count therefore reflects the computational complexity of the model.

- FLOPS: The second term, floating point operations per second (FLOPS), describes the processing speed of the hardware. For this study, the hardware is assumed to perform 1,000,000,000 floating point operations per second (1 GFLOPS).
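
As a rough illustration of how a FLOP count and the assumed hardware throughput combine into an inference-time estimate, the sketch below counts the FLOPs of a single Conv2D layer and divides by 10⁹ FLOPS. It assumes a stride-1 convolution, counts each multiply-accumulate as two FLOPs, and ignores bias additions, activations, and pooling; these conventions vary between tools, and the layer sizes in the example are hypothetical rather than those of the models in this study.

```python
HARDWARE_FLOPS = 1_000_000_000  # assumed throughput of 1 GFLOPS

def conv2d_flops(out_h, out_w, kernel, c_in, c_out):
    """FLOPs of one Conv2D layer: each output value needs kernel*kernel*c_in multiply-adds."""
    macs = out_h * out_w * c_out * kernel * kernel * c_in
    return 2 * macs  # one multiplication plus one addition per multiply-accumulate

def inference_time_seconds(total_flops, flops_per_second=HARDWARE_FLOPS):
    """Estimated time = work (FLOPs) / hardware speed (FLOPS)."""
    return total_flops / flops_per_second

# Hypothetical layer: 3x3 filters, 3 -> 16 channels, 32x32 output feature map.
flops = conv2d_flops(32, 32, 3, 3, 16)
print(flops, inference_time_seconds(flops))  # 884736 0.000884736
```

For a whole model, the per-layer counts are summed before dividing by the throughput.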

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Bray, G.; Bouchard, C. (Eds.) Handbook of Obesity-Volume 2: Clinical Applications; CRC Press: Boca Raton, FL, USA, 2014.

2. Prentice, A.M.; Jebb, S.A. Beyond body mass index. Obes. Rev. 2001, 2, 141–147.

3. Petimar, J.; Ramirez, M.; Rifas-Shiman, S.L.; Linakis, S.; Mullen, J.; Roberto, C.A.; Block, J.P. Evaluation of the impact of calorie labeling on McDonald’s restaurant menus: A natural experiment. Int. J. Behav. Nutr. Phys. Act. 2019, 16, 99.

4. Health Canada. Health Canada Nutrient Values. November 2011. Available online: https://www.canada.ca/en/health-canada/services/food-nutrition/healthy-eating/nutrient-data/nutrient-value-some-common-foods-booklet.html (accessed on 31 August 2022).

5. Kasar, M.M.; Bhattacharyya, D.; Kim, T.H. Face recognition using neural network: A review. Int. J. Secur. Its Appl. 2016, 10, 81–100.

6. Li, G.Z.; Bu, H.L.; Yang, M.Q.; Zeng, X.Q.; Yang, J.Y. Selecting subsets of newly extracted features from PCA and PLS in microarray data analysis. BMC Genom. 2008, 9, S24.

7. Ciocca, G.; Micali, G.; Napoletano, P. State recognition of food images using deep features. IEEE Access 2020, 8, 32003–32017.

8. Park, S.J.; Palvanov, A.; Lee, C.H.; Jeong, N.; Cho, Y.I.; Lee, H.J. The development of food image detection and recognition model of Korean food for mobile dietary management. Nutr. Res. Pract. 2019, 13, 521–528.

9. Mezgec, S.; Seljak, B.K. Using deep learning for food and beverage image recognition. In Proceedings of the 2019 IEEE International Conference on Big Data (Big Data), Los Angeles, CA, USA, 9–12 December 2019; pp. 5149–5151.

10. Mezgec, S.; Eftimov, T.; Bucher, T.; Seljak, B.K. Mixed deep learning and natural language processing method for fake-food image recognition and standardization to help automated dietary assessment. Public Health Nutr. 2019, 22, 1193–1202.

11. Moolchandani, D.; Kumar, A.; Sarangi, S.R. Accelerating CNN inference on ASICs: A survey. J. Syst. Archit. 2021, 113, 101887.

12. Liang, H.; Gao, Y.; Sun, Y.; Sun, X. CEP: Calories estimation from food photos. Int. J. Comput. Appl. 2020, 42, 569–577.

13. Poply, P. An Instance Segmentation approach to Food Calorie Estimation using Mask R-CNN. In Proceedings of the 2020 3rd International Conference on Signal Processing and Machine Learning, Beijing, China, 22–24 October 2020; pp. 73–78.

14. Hoashi, H.; Joutou, T.; Yanai, K. Image recognition of 85 food categories by feature fusion. In Proceedings of the 2010 IEEE International Symposium on Multimedia, Taichung, Taiwan, 13–15 December 2010; pp. 296–301.

15. Pouladzadeh, P.; Shirmohammadi, S.; Al-Maghrabi, R. Measuring calorie and nutrition from food image. IEEE Trans. Instrum. Meas. 2014, 63, 1947–1956.

16. Liang, Y.; Li, J. Computer vision-based food calorie estimation: Data set, method, and experiment. arXiv 2017, arXiv:1705.07632.

17. Raikwar, H.; Jain, H.; Baghel, A. Calorie Estimation from Fast Food Images Using Support Vector Machine. Int. J. Future Revolut. Comput. Sci. Commun. Eng. 2018, 4, 98–102.

18. De Menezes, R.S.T.; Magalhaes, R.M.; Maia, H. Object recognition using convolutional neural networks. In Recent Trends in Artificial Neural Networks-from Training to Prediction; IntechOpen: London, UK, 2019.

19. Latif, G.; Alsalem, B.; Mubarky, W.; Mohammad, N.; Alghazo, J. Automatic Fruits Calories Estimation through Convolutional Neural Networks. In Proceedings of the 2020 6th International Conference on Computer and Technology Applications, Antalya, Turkey, 14–16 April 2020; pp. 17–21.

20. Shen, Z.; Shehzad, A.; Chen, S.; Sun, H.; Liu, J. Machine learning based approach on food recognition and nutrition estimation. Procedia Comput. Sci. 2020, 174, 448–453.

21. Darapaneni, N.; Singh, V.; Tarkar, Y.S.; Kataria, S.; Bansal, N.; Kharade, A.; Paduri, A.R. Food Image Recognition and Calorie Prediction. In Proceedings of the 2021 IEEE International IOT, Electronics and Mechatronics Conference (IEMTRONICS), Toronto, ON, Canada, 21–24 April 2021; pp. 1–6.

22. Kasyap, V.B.; Jayapandian, N. Food Calorie Estimation using Convolutional Neural Network. In Proceedings of the 2021 3rd International Conference on Signal Processing and Communication (ICPSC), Coimbatore, India, 13–14 May 2021; pp. 666–670.

23. Ayon, S.A.; Mashrafi, C.Z.; Yousuf, A.B.; Hossain, F.; Hossain, M.I. FoodieCal: A Convolutional Neural Network Based Food Detection and Calorie Estimation System. In Proceedings of the 2021 National Computing Colleges Conference (NCCC), Taif, Saudi Arabia, 27–28 March 2021; pp. 1–6.

24. Okamoto, K.; Adachi, K.; Yanai, K. Region-Based Food Calorie Estimation for Multiple-Dish Meals. In Proceedings of the 13th International Workshop on Multimedia for Cooking and Eating Activities, Taipei, Taiwan, 16–19 November 2021; pp. 17–24.

25. Ruede, R.; Heusser, V.; Frank, L.; Roitberg, A.; Haurilet, M.; Stiefelhagen, R. Multi-task learning for calorie prediction on a novel large-scale recipe data set enriched with nutritional information. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 4001–4008.

26. Naritomi, S.; Yanai, K. CalorieCaptorGlass: Food calorie estimation based on actual size using hololens and deep learning. In Proceedings of the 2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Atlanta, GA, USA, 22–26 March 2020; pp. 818–819.

27. Jelodar, A.B.; Sun, Y. Calorie Aware Automatic Meal Kit Generation from an Image. arXiv 2021, arXiv:2112.09839.

28. Naritomi, S.; Yanai, K. Pop’n Food: 3D Food Model Estimation System from a Single Image. In Proceedings of the 2021 IEEE 4th International Conference on Multimedia Information Processing and Retrieval (MIPR), Tokyo, Japan, 8–10 September 2021; pp. 223–226.

29. Subaran, T.L.; Semiawan, T.; Syakrani, N. Mask R-CNN and GrabCut Algorithm for an Image-based Calorie Estimation System. J. Inf. Syst. Eng. Bus. Intell. 2022, 8, 1–10.

30. Siemon, M.S.; Shihavuddin, A.S.M.; Ravn-Haren, G. Sequential transfer learning based on hierarchical clustering for improved performance in deep learning based food segmentation. Sci. Rep. 2021, 11, 813.

31. Zaman, D.M.S.; Maruf, M.H.; Rahman, M.A.; Ferdousy, J.; Shihavuddin, A.S.M. Food Depth Estimation Using Low-Cost Mobile-Based System for Real-Time Dietary Assessment. GUB J. Sci. Eng. 2019, 6, 1–11.

32. Buttazzo, G.; Lipari, G.; Abeni, L.; Caccamo, M. Soft Real-Time Systems; Springer: Berlin/Heidelberg, Germany, 2005; Volume 283.

33. Heenaye-Mamode Khan, M.; Boodoo-Jahangeer, N.; Dullull, W.; Nathire, S.; Gao, X.; Sinha, G.R.; Nagwanshi, K.K. Multi-class classification of breast cancer abnormalities using Deep Convolutional Neural Network (CNN). PLoS ONE 2021, 16, e0256500.

34. Jaiswal, S.; Nandi, G.C. Robust real-time emotion detection system using CNN architecture. Neural Comput. Appl. 2020, 32, 11253–11262.

35. Albawi, S.; Mohammed, T.A.; Al-Zawi, S. Understanding of a convolutional neural network. In Proceedings of the 2017 International Conference on Engineering and Technology (ICET), Antalya, Turkey, 21–23 August 2017; pp. 1–6.

36. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

37. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

38. Kaggle Data Set: Food-101. Available online: https://www.kaggle.com/datasets/dansbecker/food-101 (accessed on 1 July 2022).

39. Kaggle Data Set: Fruit-360. Available online: https://www.kaggle.com/datasets/moltean/fruits (accessed on 1 July 2022).

40. Jabbar, H.; Khan, R.Z. Methods to avoid over-fitting and under-fitting in supervised machine learning (comparative study). Comput. Sci. Commun. Instrum. Devices 2015, 70, 163–172.

41. TensorFlow v2.10.0. 2021. Available online: https://www.tensorflow.org/api_docs/python/tf/keras/preprocessing/image/ImageDataGenerator (accessed on 26 April 2021).

| Source | Data Set Name | Types | Number of Instances | Weight (g) | Energy (kcal) |
|---|---|---|---|---|---|
| Kaggle | FOOD-101 and Fruit-360 | Apple | 1000 | 133 | 72 |
| Kaggle | FOOD-101 and Fruit-360 | Banana | 1000 | 118 | 105 |
| Kaggle | FOOD-101 and Fruit-360 | Donut | 1000 | 64 | 269 |
| Kaggle | FOOD-101 and Fruit-360 | Cupcake | 1000 | 72 | 262 |
| Kaggle | FOOD-101 and Fruit-360 | Mango | 1000 | 133 | 68 |
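
One simple way to turn a classifier's output into a calorie estimate is a lookup against the per-item values in the table above. The sketch below assumes that each detected item corresponds to one item of the tabulated weight and simply sums the energies; the function name `estimate_calories` and the lowercase labels are hypothetical, and this is not necessarily how the proposed system maps predictions to calories.

```python
# Per-item values from the data set table: (weight in grams, energy in kcal).
FOOD_TABLE = {
    "apple": (133, 72),
    "banana": (118, 105),
    "donut": (64, 269),
    "cupcake": (72, 262),
    "mango": (133, 68),
}

def estimate_calories(detected_labels, table=FOOD_TABLE):
    """Hypothetical helper: sum the tabulated energy of every detected item."""
    unknown = [label for label in detected_labels if label not in table]
    if unknown:
        raise KeyError(f"no calorie entry for: {unknown}")
    return sum(table[label][1] for label in detected_labels)

print(estimate_calories(["apple", "banana"]))  # 177
```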

| Parameters | Values |
|---|---|
| Width shift range (%) | 10 |
| Height shift range (%) | 10 |
| Zoom range (%) | 20 |
| Shear range (%) | 10 |
| Rotation range (deg) | 10 |
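
To show what these ranges mean for a single training image, the sketch below draws one random set of transformation parameters, each uniformly within its tabulated range: shifts as a fraction of the image size, zoom factors within 1 ± 0.2 (drawn independently per axis), and rotation within ±10°. This follows the sampling scheme of Keras' `ImageDataGenerator` [41], where the shift, zoom, and rotation settings would correspond to `width_shift_range=0.1`, `height_shift_range=0.1`, `zoom_range=0.2`, and `rotation_range=10`. How the 10% shear maps to a concrete value is an assumption here, since Keras specifies `shear_range` in degrees. The function name and the 224 × 224 image size are hypothetical, and the sketch only samples parameters without warping any pixels.

```python
import random

# Ranges from the augmentation table (percent, except rotation in degrees).
AUGMENTATION_RANGES = {
    "width_shift_pct": 10,
    "height_shift_pct": 10,
    "zoom_pct": 20,
    "shear_pct": 10,
    "rotation_deg": 10,
}

def sample_augmentation(width, height, ranges=AUGMENTATION_RANGES, rng=random):
    """Draw one random set of transform parameters for a width x height image."""
    wsr = ranges["width_shift_pct"] / 100
    hsr = ranges["height_shift_pct"] / 100
    zr = ranges["zoom_pct"] / 100
    sr = ranges["shear_pct"] / 100  # assumption: shear kept as a unitless intensity
    rot = ranges["rotation_deg"]
    return {
        "shift_x_px": rng.uniform(-wsr, wsr) * width,   # horizontal shift in pixels
        "shift_y_px": rng.uniform(-hsr, hsr) * height,  # vertical shift in pixels
        "zoom_x": rng.uniform(1 - zr, 1 + zr),          # independent zoom per axis
        "zoom_y": rng.uniform(1 - zr, 1 + zr),
        "shear": rng.uniform(-sr, sr),
        "rotation_deg": rng.uniform(-rot, rot),
    }

params = sample_augmentation(224, 224, rng=random.Random(42))
```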

| Groups | Layers |
|---|---|
| Group 1 (tunable) | Conv2D, Conv2D, and MaxPooling2D |
| Group 2 (tunable) | Conv2D, Conv2D, and MaxPooling2D |
| Group 3 (tunable) | Conv2D, Conv2D, and MaxPooling2D |
| Group 4 (tunable) | DropOut, Flatten, Dense Layer, and DropOut |
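
The tunable groups can be illustrated by counting the trainable parameters of the convolutional part of a configuration. This sketch assumes that both Conv2D layers in a group use that group's filter number, that the input has three (RGB) channels, and that every convolution has a bias term; Dropout, Flatten, and MaxPooling2D layers have no trainable parameters, and the Dense layer is omitted because its size is not given here. The helper names are hypothetical, and the count is not intended to reproduce the Space column of the results table, whose computation is not described in this section.

```python
def conv2d_params(kernel, c_in, c_out):
    """Each output channel has kernel*kernel*c_in weights plus one bias."""
    return (kernel * kernel * c_in + 1) * c_out

def conv_group_params(filters, kernel, in_channels=3):
    """Parameters of Groups 1-3, each assumed to be Conv2D -> Conv2D -> MaxPooling2D."""
    total = 0
    c_in = in_channels
    for f in filters:
        total += conv2d_params(kernel, c_in, f)  # first Conv2D of the group
        total += conv2d_params(kernel, f, f)     # second Conv2D of the group
        c_in = f  # MaxPooling2D shrinks height/width but keeps the channel count
    return total

# Filter numbers of "model 16" in the results table, with 3x3 filters.
print(conv_group_params((16, 32, 64), kernel=3))  # 72080
```

Because the parameter count grows with the square of the filter size, switching from (3,3) to (5,5) filters roughly multiplies the convolutional parameters by 25/9.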

| Model Name | Group-1 Filters | Group-2 Filters | Group-3 Filters | Filter Size | Training (Acc. / Loss) | Validation (Acc. / Loss) | Test (Acc. / Loss) | Space | Time (s) |
|---|---|---|---|---|---|---|---|---|---|
| model 16 | 16 | 32 | 64 | (3,3) | 0.795 / 0.38 | 0.847 / 0.29 | 0.836 / 0.3 | 30,500 | 0.0005 |
| model 17 | 16 | 32 | 64 | (5,5) | 0.809 / 0.34 | 0.847 / 0.27 | 0.829 / 0.28 | 66,340 | 0.0008 |
| model 23 | 16 | 64 | 32 | (5,5) | 0.80 / 0.37 | 0.86 / 0.27 | 0.85 / 0.27 | 66,340 | 0.0008 |
| model 26 | 16 | 64 | 64 | (5,5) | 0.818 / 0.34 | 0.85 / 0.26 | 0.84 / 0.27 | 82,340 | 0.0013 |
| model 44 | 32 | 32 | 64 | (5,5) | 0.84 / 0.31 | 0.86 / 0.25 | 0.848 / 0.26 | 74,340 | 0.0008 |
| model 50 | 32 | 64 | 32 | (5,5) | 0.81 / 0.36 | 0.85 / 0.26 | 0.829 / 0.28 | 74,340 | 0.0010 |
| model 52 | 32 | 64 | 64 | (3,3) | 0.82 / 0.32 | 0.82 / 0.31 | 0.79 / 0.34 | 39,140 | 0.0007 |
| model 62 | 64 | 16 | 64 | (5,5) | 0.80 / 0.35 | 0.84 / 0.27 | 0.836 / 0.28 | 82,340 | 0.0014 |
| model 68 | 64 | 32 | 32 | (5,5) | 0.81 / 0.35 | 0.84 / 0.28 | 0.836 / 0.29 | 74,340 | 0.0010 |
| model 70 | 64 | 32 | 64 | (3,3) | 0.83 / 0.34 | 0.836 / 0.32 | 0.837 / 0.31 | 39,140 | 0.0007 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Haque, R.U.; Khan, R.H.; Shihavuddin, A.S.M.; Syeed, M.M.M.; Uddin, M.F. Lightweight and Parameter-Optimized Real-Time Food Calorie Estimation from Images Using CNN-Based Approach. Appl. Sci. 2022, 12, 9733. https://doi.org/10.3390/app12199733
