A Multiple-Choice Maze-like Spatial Navigation Task for Humans Implemented in a Real-Space, Multipurpose Circular Arena

Abstract

1. Introduction

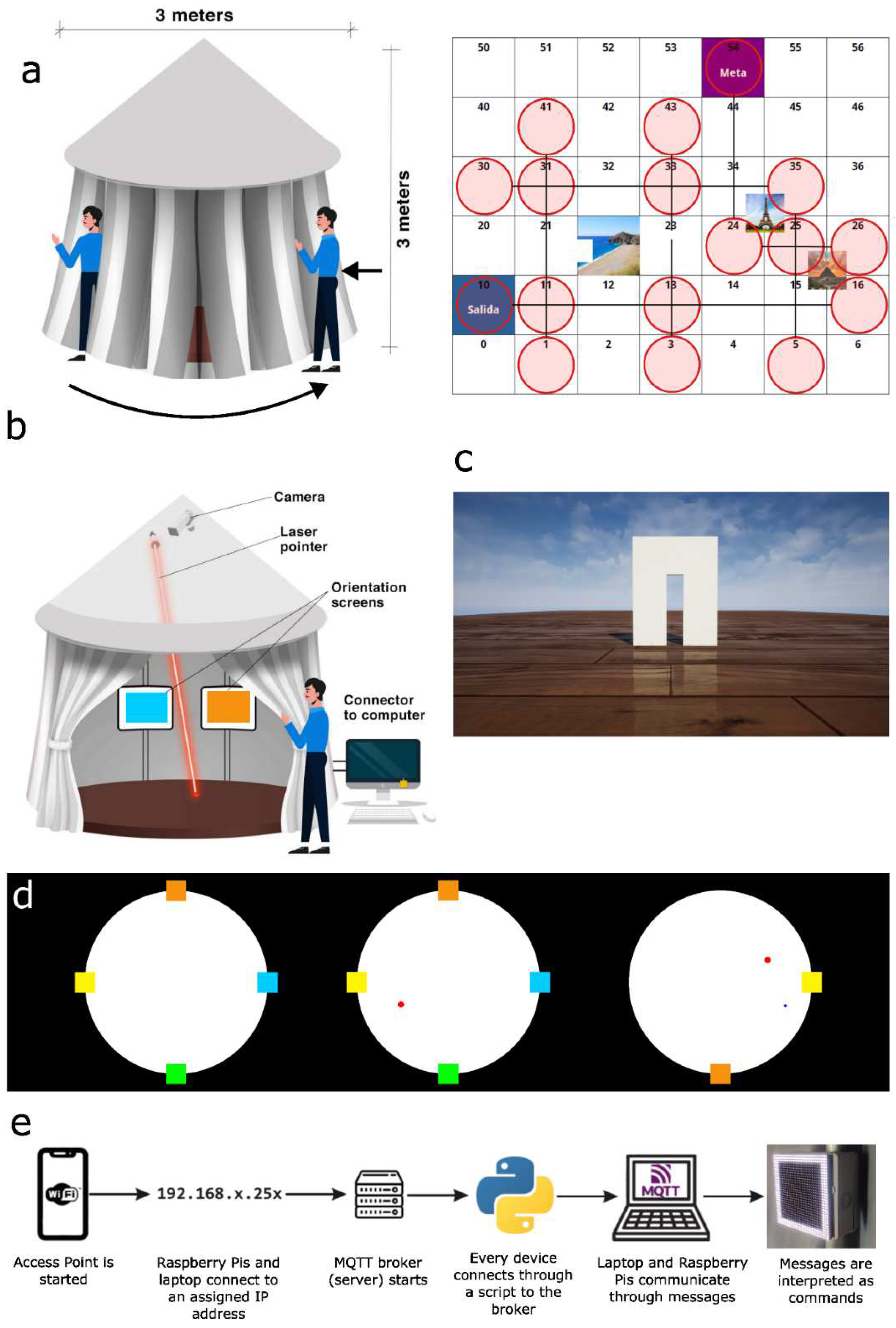

2. Materials and Methods

2.1. Subjects

2.2. Tests

2.3. Technical Section

2.4. Functional Section

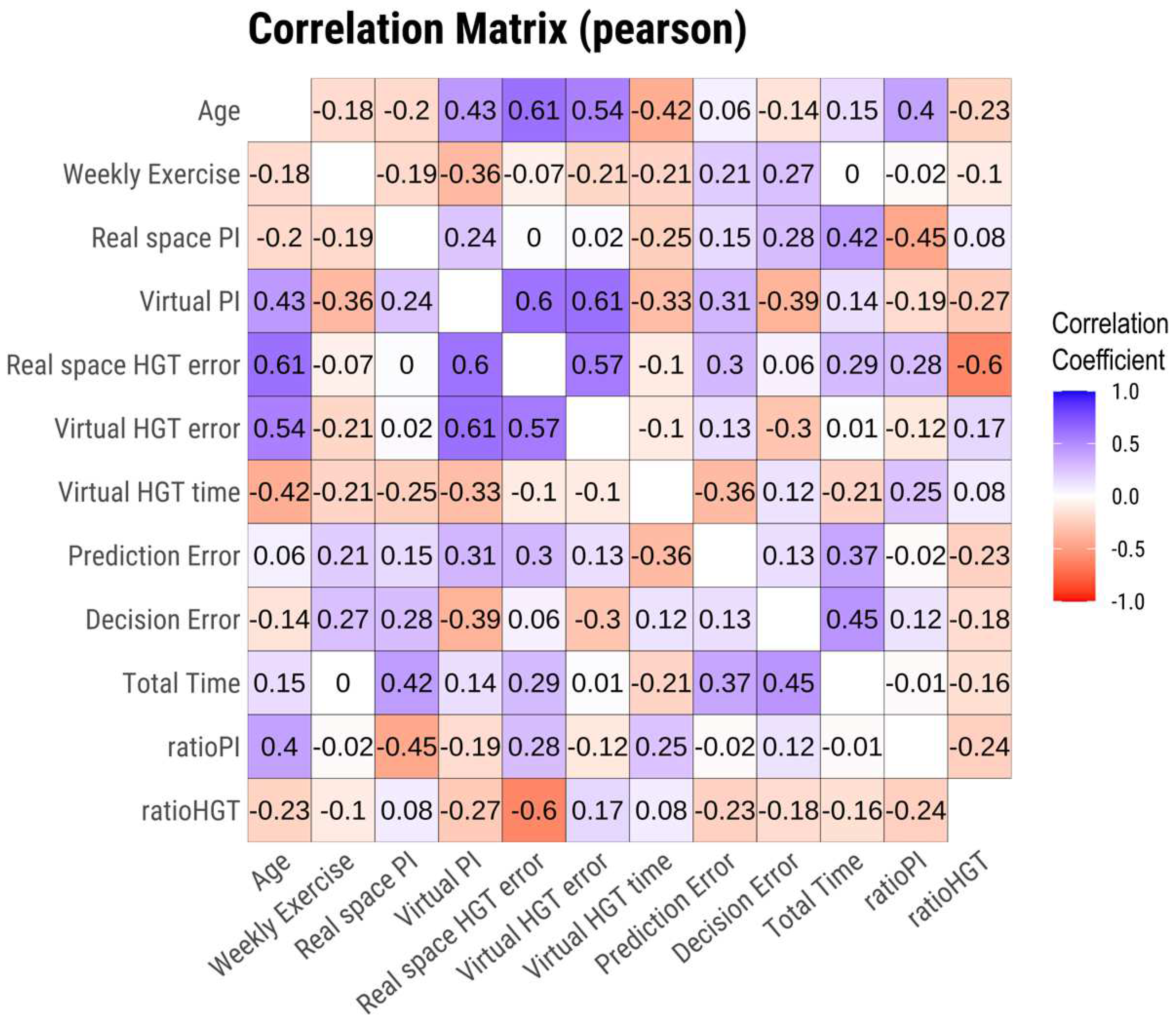

2.5. Factor and Level Analysis

2.6. Statistical Analysis

- Kruskal–Wallis test for non-parametric variables with more than two grouping levels.

- Wilcoxon test for pairwise comparisons following significant Kruskal–Wallis results (with p-values adjusted by the Benjamini–Hochberg method), and for non-parametric variables with two grouping levels.
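The test sequence above (an omnibus Kruskal–Wallis test for factors with more than two levels, then Benjamini–Hochberg-adjusted pairwise Wilcoxon comparisons only when the omnibus test is significant) can be sketched in pure Python. This is a minimal illustration under stated assumptions, not the authors' code; the source does not say which software was used. The helper names `kruskal_wallis`, `rank_sum_test`, `benjamini_hochberg`, and `kw_then_pairwise` are hypothetical. Because the groups being compared (for example, men vs. women) are independent, the Wilcoxon test is assumed to be the rank-sum form, with its p-value taken from a tie-corrected normal approximation without continuity correction. The threshold `alpha = 0.05` is also an assumption. Both p-values are large-sample approximations, so for groups as small as the four participants aged ≤25, an exact test may be preferable.

```python
import math
from itertools import combinations


def rank_with_ties(values):
    """Return 1-based ranks; tied values share the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1  # mean of 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    return ranks


def tie_correction(values):
    """1 - sum(t^3 - t) / (N^3 - N); undefined if every value is tied."""
    n = len(values)
    counts = {}
    for v in values:
        counts[v] = counts.get(v, 0) + 1
    tied = sum(t ** 3 - t for t in counts.values())
    return 1 - tied / (n ** 3 - n)


def chi2_sf(x, df):
    """Upper-tail chi-square probability for integer df (closed forms)."""
    half = x / 2
    if df % 2 == 0:
        return math.exp(-half) * sum(half ** i / math.factorial(i) for i in range(df // 2))
    tail = math.erfc(math.sqrt(half))
    for i in range(1, (df - 1) // 2 + 1):
        tail += math.exp(-half) * half ** (i - 0.5) / math.gamma(i + 0.5)
    return tail


def kruskal_wallis(*groups):
    """Tie-corrected Kruskal-Wallis H and its chi-square p-value (df = k - 1)."""
    pooled = [v for g in groups for v in g]
    ranks = rank_with_ties(pooled)
    n = len(pooled)
    total, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        total += rank_sum ** 2 / len(g)
        start += len(g)
    h = (12 / (n * (n + 1)) * total - 3 * (n + 1)) / tie_correction(pooled)
    h = max(h, 0.0)  # clip tiny negative values caused by rounding
    return h, chi2_sf(h, len(groups) - 1)


def rank_sum_test(a, b):
    """Two-sided Wilcoxon rank-sum p-value (normal approximation, no continuity correction)."""
    pooled = list(a) + list(b)
    ranks = rank_with_ties(pooled)
    n1, n2 = len(a), len(b)
    w = sum(ranks[:n1])
    mean = n1 * (n1 + n2 + 1) / 2
    var = n1 * n2 * (n1 + n2 + 1) / 12 * tie_correction(pooled)
    z = (w - mean) / math.sqrt(var)
    return math.erfc(abs(z) / math.sqrt(2))


def benjamini_hochberg(pvalues):
    """Benjamini-Hochberg adjusted p-values, returned in input order."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i], reverse=True)
    adjusted = [0.0] * m
    running_min = 1.0
    for position, i in enumerate(order):
        rank = m - position  # ascending 1-based rank of pvalues[i]
        running_min = min(running_min, pvalues[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


def kw_then_pairwise(groups, alpha=0.05):
    """Kruskal-Wallis over all groups; BH-adjusted pairwise rank-sum tests only if significant."""
    h, p = kruskal_wallis(*groups.values())
    if p >= alpha:
        return h, p, {}
    pairs = list(combinations(groups, 2))
    raw = [rank_sum_test(groups[a], groups[b]) for a, b in pairs]
    return h, p, dict(zip(pairs, benjamini_hochberg(raw)))
```

As a check with toy values (not study data), the three fully separated groups `[1, 2, 3]`, `[4, 5, 6]`, and `[7, 8, 9]` give H = 7.2 with two degrees of freedom, so p = exp(-3.6) ≈ 0.027. The pairwise step runs only when this omnibus p-value falls below `alpha`. This mirrors the order of the tests described in the list above.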

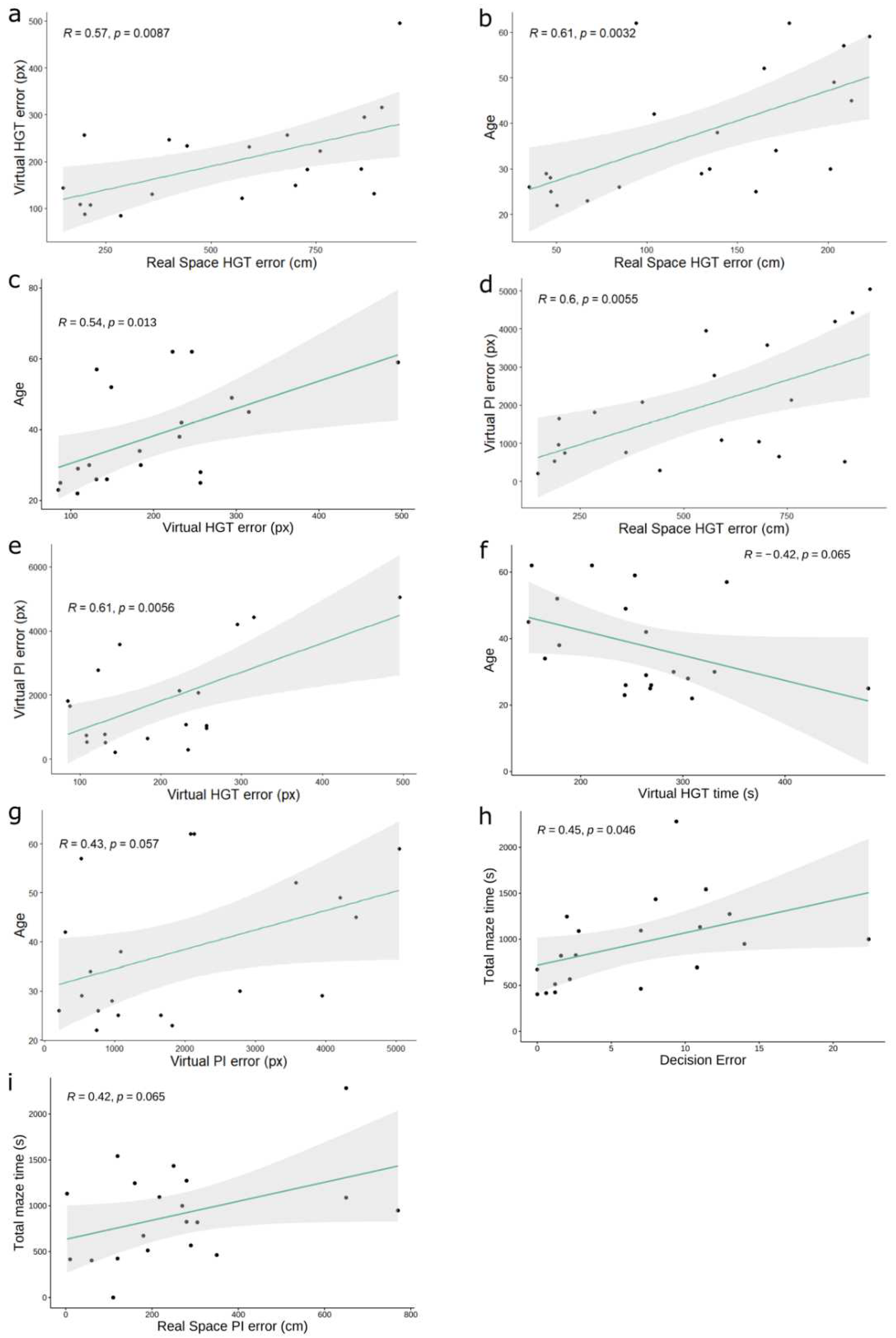

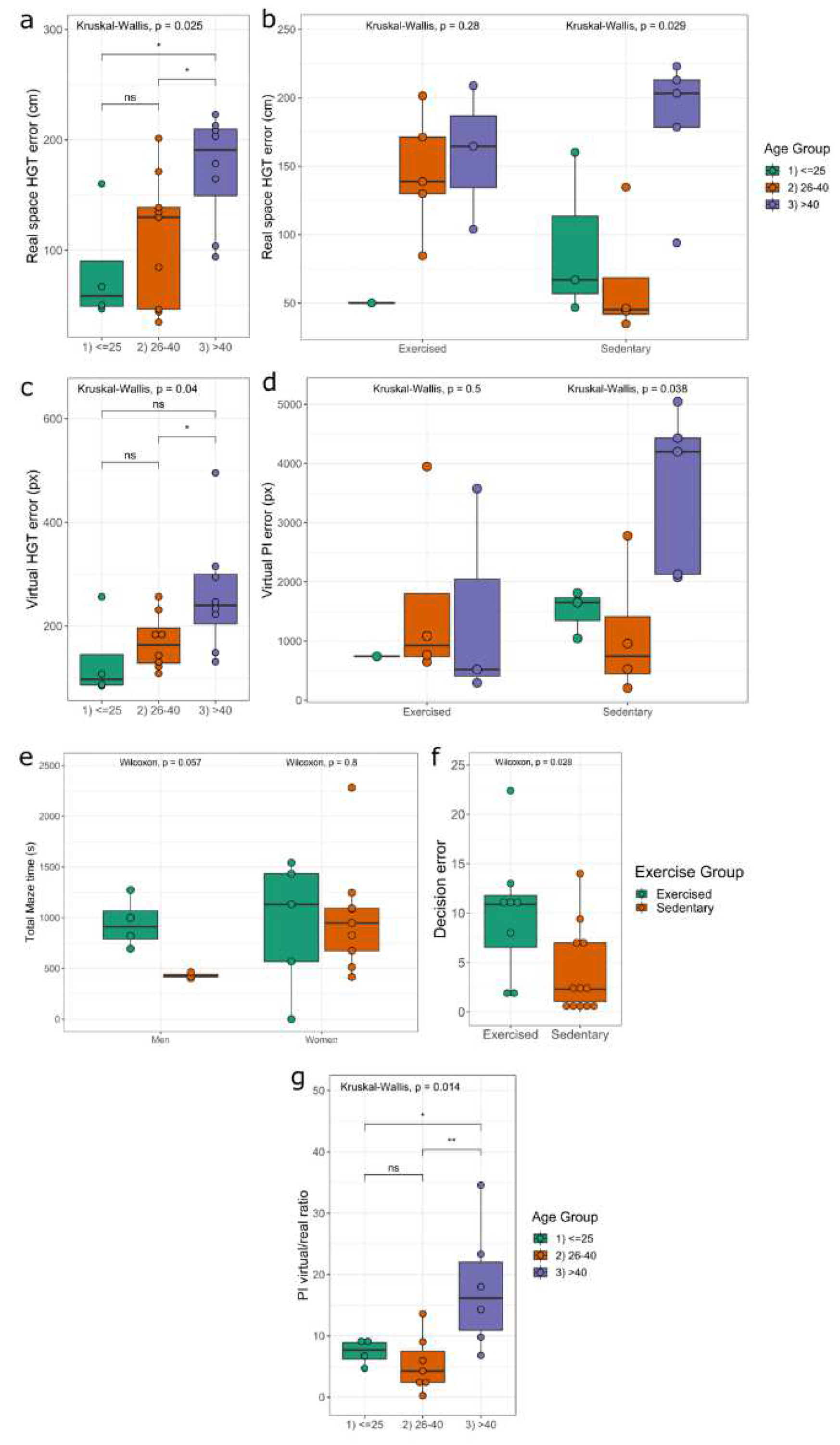

3. Results

4. Discussion

4.1. Limitations

4.2. Future Directions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Variable | Group | n | Men | Women |
| --- | --- | --- | --- | --- |
| Total number | | 21 | | |
| Sex | Men | 7 | | |
| | Women | 14 | | |
| Age (years) | ≤25 | 4 | 2 | 2 |
| | 26 < x < 40 | 9 | 3 | 6 |
| | >40 | 8 | 2 | 6 |
| Exercise | Sedentary | 14 | 4 | 10 |
| | Exercised | 7 | 4 | 3 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Muela, P.; Cintado, E.; Tezanos, P.; Fernández-García, B.; Tomás-Zapico, C.; Iglesias-Gutiérrez, E.; Díaz Martínez, A.E.; Butler, R.G.; Cuadrado-Peñafiel, V.; De la Vega, R.; et al. A Multiple-Choice Maze-like Spatial Navigation Task for Humans Implemented in a Real-Space, Multipurpose Circular Arena. Appl. Sci. 2022, 12, 9707. https://doi.org/10.3390/app12199707

APA Style: Muela, P., Cintado, E., Tezanos, P., Fernández-García, B., Tomás-Zapico, C., Iglesias-Gutiérrez, E., Díaz Martínez, A. E., Butler, R. G., Cuadrado-Peñafiel, V., De la Vega, R., Soto-León, V., Oliviero, A., López-Mascaraque, L., & Trejo, J. L. (2022). A Multiple-Choice Maze-like Spatial Navigation Task for Humans Implemented in a Real-Space, Multipurpose Circular Arena. Applied Sciences, 12(19), 9707. https://doi.org/10.3390/app12199707