Abstract

In hazardous environments where direct human operation of machinery is not possible, such as in a nuclear power plant (NPP), teleoperation may be utilized to complete tasks safely. However, because teleoperation tasks are time consuming, complex, highly difficult, and need to be performed with incomplete information, they may increase the human operator’s cognitive load, which can affect the efficiency of the human operator as well as the completeness of the task. In this study, we propose a teleoperation system using a hybrid teleoperation controller that can increase operator efficiency for specific teleoperation tasks in complex sequences. First, we decomposed the task into a sequence of unit subtasks. For each subtask, the input space was allocated, and either position control or rate control by the hybrid controller was determined. Teleoperation experiments were conducted to verify the controller. To evaluate the efficiency improvement of the teleoperators, the completion time and NASA task-load index (NASA-TLX) were measured. Using the hybrid controller reduced the completion time and the NASA-TLX score by 17.23% and 34.02%, respectively, compared to the conventional position controller.

1. Introduction

Teleoperation is typically used in hazardous or unstructured environments where direct human operation or automatic control is not available, such as nuclear power plants (NPPs). Consequently, many studies have applied teleoperation in NPPs, including power plant dismantling [1,2,3,4], object handling [5,6,7], pipe cutting [8], and rescue [9]. In general, the operator performs teleoperation while viewing only the camera images displayed on a monitor, which makes it difficult to judge the distance or direction of the target object accurately.

Many techniques for performing sophisticated teleoperation tasks despite this limitation are being studied. Force-rendering techniques are widely used in teleoperation to assist operators in controlling haptic devices. In [10], a method was developed to formulate the desired behavior in any number of task frames associated with the task in order to optimize motion control in a limited workspace. Furthermore, additional linear constraints could be applied to each task frame.

In [11], the effects of force feedback on a blunt dissection task were examined. It was argued that force feedback is useful in this context when there is a large contrast in the mechanical properties along the dissection plane between adjacent areas of the tissue. A parallel robot-assisted minimally invasive surgery system with a force-feedback function was employed to study the practical effects of force feedback on artificial tissue samples [12]. In [13], force feedback was generated using pre-programmed rules and delivered to the user through the joystick to prevent the robot from falling into an unstable state or coming into contact with an obstacle. In [14,15], interactive virtual fixture generation with shared control was proposed to harness the cognitive abilities of teleoperators.

A retargeting function was used to deal with complex kinematic constraints owing to the difference in topology, anthropometry, joint limits, and degrees of freedom between the human demonstrator and the robot [16,17,18]. In [19], a Cartesian-to-Cartesian mapping retargeting function using a data glove and wrist-tracking sensors to control the slave robot was proposed.

Overcoming the conspicuous workspace disparity between the master device and the slave robots is also a major problem in teleoperation. To solve this problem, some researchers have implemented hybrid control for teleoperation [20,21,22,23,24]. In [20], a control method for teleoperation by integrating hybrid position and velocity control with an interoperability protocol was proposed. The proposed method can overcome the difference in the workspace between the master device and the slave robot. Most of the hybrid position–velocity controllers are switched on manually with a specific button at the operator site [20,21].

The bubble technique, which is based on hybrid position–rate control, was also implemented to overcome the disparity in workspace between the master and slave [25,26,27]. The control method applied depends on whether the haptic device is inside or outside the bubble. When the haptic device is inside the bubble, position control is used for fine positioning, and when it is outside the bubble, rate control is applied for coarse positioning. The motion-scaling technique [27,28] is another well-known technique for dealing with the disparity between the master and slave workspaces. The motion-scaling factor is set to the ratio of the maximum workspace of the haptic device to the largest workspace of the virtual environment.

Another issue in teleoperation is its difficulty, as it is necessary to simultaneously manipulate the slave robot’s multiple degrees of freedom while viewing only two-dimensional (2D) camera images that do not give an accurate perspective of the machinery installed at a remote site. Neuroscience studies have shown that when a human operates an object in three-dimensional (3D) space, only two to three degrees of freedom are adjusted consciously; the remaining degrees of freedom are driven by unconscious subordinate commands, which can be acquired through human experience or training [29].

The teleoperation task that we deal with is called MADMOFEM (operating a manual drive mechanism of a fuel exchange machine) and is covered in Section 2. This task requires a complex sequence of clutch and shaft operations performed with a dual-arm manipulator. Prolonged telemanipulation can cause a heavy workload, and interpreting the 2D camera images of the remote site can increase the cognitive load experienced by the operator. Therefore, we focus on increasing the efficiency of the operator by reducing the work time and the level of difficulty of the task. To do this, we first divided our objective task into specific subtasks and applied a position–rate hybrid controller that adjusts the input space and control mode of the slave robot according to the current subtask index.

The remainder of this paper is organized as follows. Section 2 describes our objective dual-arm teleoperation task, called MADMOFEM, and presents the position–rate hybrid controller that adjusts the input space and control mode of the slave according to the corresponding subtask index. The experimental setup and scenarios are explained in Section 3. Section 4 presents the experimental results, and Section 5 presents the conclusions.

2. Control Method

This section describes a dual-arm manipulation task called MADMOFEM. The task consists of clutch and shaft operations, which must be performed in a fixed sequence. In addition, both agile and fine movements of the robot are required. To meet these requirements, the control method is changed according to the subtask, and the difficulty of the operation is lowered by appropriately constraining the degrees of freedom of the master device.

2.1. MADMOFEM Task

In a fuel-handling machine, one of the main components of a pressurized heavy water reactor (PHWR) nuclear power plant (NPP), the machinery can become stuck in the pressure tube in front of the PHWR calandria. Although the machine has a manual drive mechanism as a means of troubleshooting, accessing the manual drive mechanism remains a difficult problem. If the machine is stuck, the NPP becomes radioactive, and the machine can be located at heights of up to 9 m. Therefore, Shin et al. developed a robot system for operating the manual drive mechanism of a fuel exchange machine (MADMOFEM) so that the machine can be handled remotely in an NPP that is not accessible to humans [30].

Owing to the complexity of the MADMOFEM task and the limited cognitive abilities of the human operator, a suitable control scheme is required. There are two objective operations: the clutch operation and the shaft operation. Each operation consists of a fixed sequence of manipulation subtasks. The sequence starts with the insertion of the tool into the clutch with the right robot. Then, the shaft operation is executed with the left robot. After the shaft operation is completed, the tool held by the right robot is removed from the clutch, as shown in Figure 1.

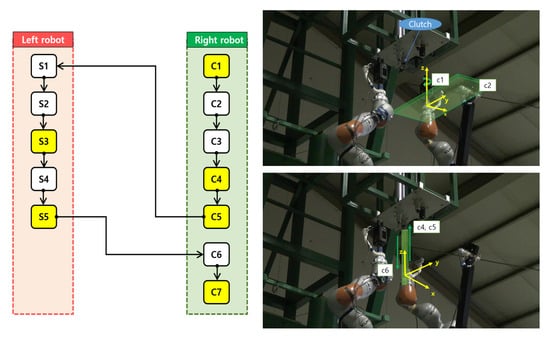

Figure 1.

Sequence diagram of the MADMOFEM task with the dual-arm teleoperation system (left picture); when the hybrid controller is used, position control is applied in the states shown as white boxes, and rate control is applied in the states shown as yellow boxes. State description of the clutch operations in a real-world task environment (right picture).

In Figure 1, the right part, whose states start with “C”, represents the task of inserting the tool into the clutch with the right slave manipulator, and the left part, whose states start with “S”, represents the task of inserting the tool into the shaft with the left slave manipulator. The MADMOFEM task is complex because it requires sequential operation of the clutch and shaft. Each subtask is described below.

- C1: Align the rotation axis of the right robot and the clutch.

- C2: Align the x- and y-axes of the right robot and the clutch.

- C3: Move the right robot in the z-axis direction to approach the clutch.

- C4: Insert the tool into the clutch through fine manipulation of the right robot.

- C5: Standby.

- S1: Align the x- and y-axes of the left robot and the shaft.

- S2: Move the left robot in the z-axis direction to approach the shaft.

- S3: Insert the tool into the shaft through fine manipulation of the left robot.

- S4: Remove the tool from the shaft with the left robot.

- S5: Standby.

- C6: Remove the tool from the clutch with the right robot.

- C7: Task finished.

During teleoperation, the slave robot is generally controlled by position or rate control. In position control, a scaling factor is usually incorporated to compensate for the different workspaces of the master and slave. A low scaling factor gives high-resolution movement, which is advantageous where precise control is required, such as inserting a peg into a hole, but it requires a considerable amount of indexing to approach the target. With a high scaling factor, the slave can approach the target at high speed; however, the resulting low-resolution movement might lead to dangerous situations. Rate control makes it convenient to approach the vicinity of the target; however, unlike position control, it has poor transparency, which can cause risky situations near the target.

2.2. Position Control Mode

With position control, the motion of the slave robot follows the motion of the master device with superior transparency. The scaling factor introduces a trade-off between the safety of the operation and the ability to overcome the workspace disparity, so it must be chosen with care. In our system, because the task environment is at a height of 9 m, a relatively low scaling factor was chosen in consideration of safety. The displacement moved by the master became the input of the slave (1).

Equation (1) defines the master velocity used when the position control mode is applied, in terms of the position of the master at time t and a scaling matrix. The velocity of the master is obtained as the displacement of the master during one sampling interval.
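One form of the position-mode input that is consistent with the description above can be written as follows. This is a sketch under stated assumptions, not necessarily the authors' exact expression: the symbols $\dot{\mathbf{x}}_{m}^{\mathrm{pos}}$ (position-mode master velocity), $\mathbf{x}_{m}(t)$ (master position at time $t$), $\mathbf{K}_{p}$ (scaling matrix), and $\Delta t$ (sampling interval) are hypothetical names introduced here for illustration.

```latex
\dot{\mathbf{x}}_{m}^{\mathrm{pos}}(t)
  = \mathbf{K}_{p}\,
    \frac{\mathbf{x}_{m}(t) - \mathbf{x}_{m}(t - \Delta t)}{\Delta t}
```

If the slave input is taken as the per-cycle displacement rather than a velocity, the same expression applies without the division by $\Delta t$; the two forms differ only by a constant factor.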

2.3. Rate-Control Mode

When the slave is operated in the rate-control mode, significant stability and transparency issues arise when contact is made with the environment; however, rate control has been shown to be suitable when the slave is operated in free motion [24]. In our system, therefore, rate control is applied in the initial condition, where the slave is far from the target. The master velocity in the rate-control mode is a vector whose magnitude grows with the distance of the master from its origin position (2). The master’s origin position is set when the button on the master device is initially pressed.

Equation (2) defines the value input to the slave when the rate-control mode is applied, in terms of the current position of the master, the position of the master when its gripper is pressed for the first time, and a scaling matrix. The velocity of the master is determined by its displacement from this origin: the farther the master is from the origin, the higher the velocity obtained as the output of the master.
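The two control modes can be contrasted with a minimal Python sketch. It assumes a diagonal scaling matrix (represented as a list of per-axis gains) and uses hypothetical function and parameter names; it illustrates the idea described above rather than reproducing the authors' implementation.

```python
from typing import List, Sequence


def position_mode_velocity(
    x_now: Sequence[float],
    x_prev: Sequence[float],
    dt: float,
    gains: Sequence[float],
) -> List[float]:
    """Position control: scaled master displacement over one sample period.

    `gains` stands in for a diagonal scaling matrix (an assumption).
    """
    return [k * (a - b) / dt for k, a, b in zip(gains, x_now, x_prev)]


def rate_mode_velocity(
    x_now: Sequence[float],
    x_origin: Sequence[float],
    gains: Sequence[float],
) -> List[float]:
    """Rate control: velocity proportional to the offset from the origin.

    `x_origin` is the master position latched when the button is first
    pressed, as described in the text.
    """
    return [k * (a - o) for k, a, o in zip(gains, x_now, x_origin)]


if __name__ == "__main__":
    # Hypothetical numbers, for illustration only.
    print(position_mode_velocity([0.02, 0.0, 0.0], [0.01, 0.0, 0.0], 0.01, [0.5] * 3))
    print(rate_mode_velocity([0.03, 0.0, 0.0], [0.0, 0.0, 0.0], [2.0] * 3))
```

In position mode the command returns to zero as soon as the master stops moving, whereas in rate mode a constant offset from the origin keeps the slave moving, which is why rate control needs less indexing over long distances.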

2.4. Position-Rate Hybrid Teleoperation Control

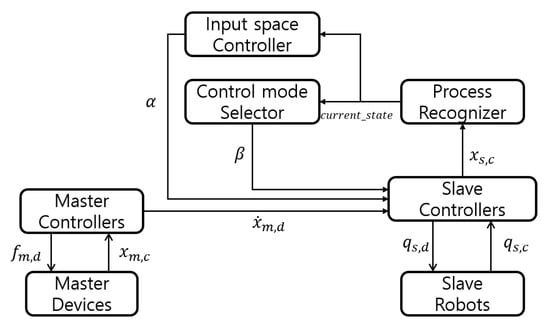

Figure 2 is a schematic of the proposed position–rate hybrid teleoperation control. The subscripts m, s, c, and d in Figure 2 represent the master device, slave robot, current, and desired, respectively. The position-based process recognizer outputs the corresponding subtask index based on the distance between the slave robot position and the desired position. With this result, the input space controller determines the input space, and the control-mode selector chooses the control mode, either position or rate control.

Figure 2.

Schematic showing position–rate hybrid teleoperation control.

The schematic contains two controller blocks, one for position control and one for rate control. The active block is determined by the scalar control-mode value listed in the tables below, which selects between position and rate control for each subtask. The resulting command is mapped through the inverse of the Jacobian to obtain the desired joint velocity. Our teleoperation system assigned the position and rate-control modes based on the corresponding subtask index using these predetermined values.

For each subtask index, we selected the more advantageous of the two control methods to complete the MADMOFEM task. For example, because the target position (the position of the shaft or driver) is far from the initial position, we used rate control to approach the target quickly and switched to position control when the target was close, allowing the task to be completed accurately and safely.

The predetermined input space vector and control mode for each subtask are listed in Table 1 and Table 2. The vector determines the input space for the slave robot and is also used for haptic rendering. If a component of the vector is zero, input along the corresponding direction is ignored; otherwise, input along that direction is allowed. For example, in the C1 state, the operator must handle the master device in the yaw direction. Therefore, only the yaw component of the vector is set to 1.
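The table lookup described above can be sketched in Python as follows. Because the table bodies are not reproduced here, the entries below are hypothetical stand-ins: only the C1 yaw-only mask, the C2 (x, y) and C3 (z) masks, the use of rate control in C2 and C3, and the use of position control for fine insertion follow from the text. The six-axis ordering, the C1 mode, and the C4 mask are assumptions.

```python
from enum import Enum
from typing import Dict, List, Sequence, Tuple


class Mode(Enum):
    POSITION = "position"
    RATE = "rate"


# Assumed axis order for the input space vector.
AXES = ("x", "y", "z", "roll", "pitch", "yaw")

# Hypothetical stand-in for the right-robot table: subtask -> (mask, mode).
SUBTASK_TABLE: Dict[str, Tuple[Tuple[int, ...], Mode]] = {
    "C1": ((0, 0, 0, 0, 0, 1), Mode.RATE),      # yaw only (stated); mode assumed
    "C2": ((1, 1, 0, 0, 0, 0), Mode.RATE),      # align x and y with rate control
    "C3": ((0, 0, 1, 0, 0, 0), Mode.RATE),      # approach along z with rate control
    "C4": ((1, 1, 1, 0, 0, 0), Mode.POSITION),  # fine insertion; mask assumed
}


def hybrid_command(
    subtask: str,
    v_position: Sequence[float],
    v_rate: Sequence[float],
) -> List[float]:
    """Pick the command of the active mode and zero the disallowed axes."""
    mask, mode = SUBTASK_TABLE[subtask]
    source = v_position if mode is Mode.POSITION else v_rate
    return [m * v for m, v in zip(mask, source)]


if __name__ == "__main__":
    # In C2 only the x and y components of the rate command pass through.
    print(hybrid_command("C2", [1.0] * 6, [2.0] * 6))
```

Keeping the mask and the mode in one table means a single subtask index drives both the input space controller and the control-mode selector.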

Table 1.

The predefined parameters related to the left robot.

Table 2.

The predefined parameters related to the right robot.

When the Euclidean distance between the robot and the target, computed only along the directions in which input is allowed, falls below a threshold, the state advances to the next state. For example, only the yaw direction is allowed in the C1 state, so when the yaw difference between the target and the robot falls below the threshold, the state changes to C2. In the C2 state, the x and y components are equal to 1; therefore, when the Euclidean distance in those directions falls below the threshold, the state switches to C3. Switching continues in this way until the final state is reached.
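The state-transition rule can be sketched as a small Python function. The masks, the single shared target pose, and the threshold value are hypothetical; a real system would presumably use a separate target and threshold per subtask, and the standby states that wait for the other arm are omitted.

```python
import math
from typing import Dict, List, Sequence

# Hypothetical masks in the assumed order (x, y, z, roll, pitch, yaw).
MASKS: Dict[str, Sequence[int]] = {
    "C1": (0, 0, 0, 0, 0, 1),
    "C2": (1, 1, 0, 0, 0, 0),
    "C3": (0, 0, 1, 0, 0, 0),
}
SEQUENCE: List[str] = ["C1", "C2", "C3", "C4"]


def masked_distance(
    pose: Sequence[float], target: Sequence[float], mask: Sequence[int]
) -> float:
    """Euclidean distance over the allowed directions only."""
    return math.sqrt(sum(m * (p - t) ** 2 for m, p, t in zip(mask, pose, target)))


def next_subtask(
    current: str,
    pose: Sequence[float],
    target: Sequence[float],
    threshold: float = 0.01,  # hypothetical value
) -> str:
    """Advance to the next subtask once the masked distance is small enough."""
    idx = SEQUENCE.index(current)
    if idx + 1 < len(SEQUENCE) and masked_distance(pose, target, MASKS[current]) < threshold:
        return SEQUENCE[idx + 1]
    return current


if __name__ == "__main__":
    pose = (0.3, 0.2, 0.5, 0.0, 0.0, 0.005)
    target = (0.0, 0.0, 0.0, 0.0, 0.0, 0.0)
    print(next_subtask("C1", pose, target))  # yaw is aligned, x and y are not
```

Because the distance ignores masked-out directions, a large x or y error does not block the C1 to C2 transition; only the yaw error matters in C1.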

As the corresponding state is determined based on the slave and target positions, the algorithm has the disadvantage of not being available in an unstructured environment because it assumes that we know the location of the target. Therefore, in future work, we plan to improve the state conversion automatically by using vision-based deep learning that takes the egocentric view mounted on the end-effector as an input.

2.5. Minimizing the Operating Degree of Freedom

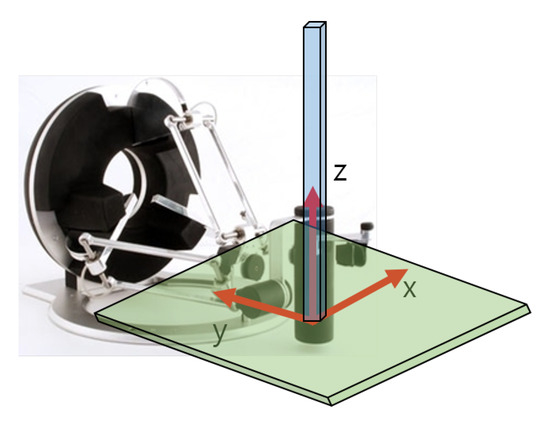

Haptic rendering was applied to the master device so that the operator could intuitively recognize the minimized input space. We constrained the degrees of freedom of the master device according to each subtask. If the subtask aligns the x- and y-axes, a reaction force is generated at offsets in both directions along the z-axis, thus constraining the master device to move only on the x–y plane (Figure 3). If the subtask inserts the tool along the z-axis, a reaction force prevents movement along the x- and y-axes, so that the master device is constrained to move only along the z-axis.
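A common way to render such virtual walls is a spring force that acts only on the constrained axes once the device moves past a small offset. The Python sketch below illustrates that idea; the spring model, the stiffness, and the offset value are assumptions, since the text does not specify how the reaction force is computed.

```python
import math
from typing import List, Sequence


def virtual_wall_force(
    position: Sequence[float],
    anchor: Sequence[float],
    mask: Sequence[int],
    stiffness: float,
    offset: float,
) -> List[float]:
    """Reaction force for axes whose mask entry is 0 (constrained axes).

    Walls sit at `anchor - offset` and `anchor + offset` on each constrained
    axis; beyond a wall, a linear spring pushes the device back.
    """
    force = []
    for p, a, m in zip(position, anchor, mask):
        error = p - a
        if m or abs(error) <= offset:
            # Free axis, or still between the two walls: no force.
            force.append(0.0)
        else:
            penetration = error - math.copysign(offset, error)
            force.append(-stiffness * penetration)
    return force


if __name__ == "__main__":
    # x-y plane free, z constrained; hypothetical stiffness (N/m) and offset (m).
    print(virtual_wall_force((0.01, 0.02, 0.004), (0.0, 0.0, 0.0), (1, 1, 0), 1000.0, 0.002))
```

Reusing the same mask for command filtering and for haptic rendering keeps what the operator feels consistent with what the slave is allowed to do.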

Figure 3.

Virtual walls generated so that the master device can be manipulated only in the x–y plane (green cuboid) or along the z-axis (blue cuboid).

3. Experiments

3.1. Experimental Setup

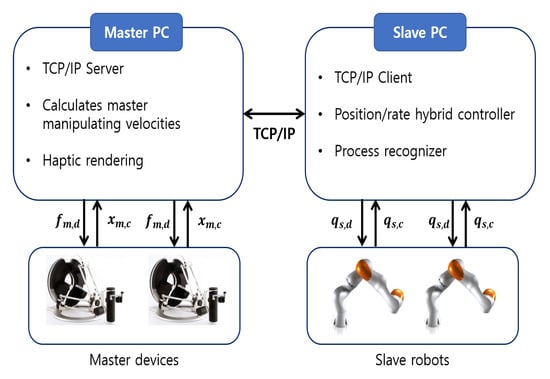

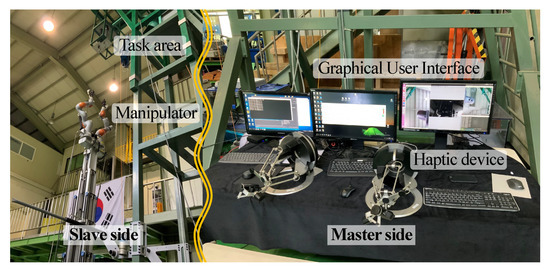

Two Force Dimension Omega7 devices (https://www.forcedimension.com/, accessed on 29 August 2022) and two KUKA LBR iiwa R820 manipulators (https://www.kuka.com/, accessed on 29 August 2022) were used as the master devices and slave robots, respectively. The master PC acted as a TCP/IP server and performed the calculations for the master’s operation velocity and haptic rendering. The slave PC ran the position–rate hybrid controller and the process recognizer.

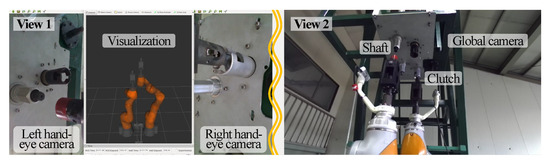

The process recognizer output the corresponding subtask index for each slave robot and sent the results back to the master PC. All communication between the PCs was based on the TCP/IP protocol (Figure 4). A total of seven healthy subjects participated in the teleoperation experiments. As shown in Figure 5, the subjects manipulated two slave manipulators (left arm and right arm) positioned at a height of approximately 9 m with two master devices (left-handed and right-handed) while viewing only the hand-eye camera images (left side in Figure 6) and the global camera view (right side in Figure 6). Sufficient practice time was given for the subjects to become accustomed to teleoperation.

Figure 4.

Teleoperation system with the process-recognizer-based hybrid controller.

Figure 5.

Dual-arm master–slave teleoperation experimental environment. The teleoperator operates two master devices (left-handed and right-handed) to manipulate dual-arm slave robots. The dual-arm manipulator can be raised to a height of 9 m using the telescopic mast below the robotic system.

Figure 6.

Teleoperation experiments were performed while viewing the hand-eye camera images (view 1 on the left), attached to the left and right robots, and global camera images (view 2 on the right).

3.2. Scenario

In the scenario, the objective was to remotely perform the MADMOFEM task in a nuclear power plant with a dual-arm robot (Figure 6). The robot system consists of a mobile robot at the bottom, a mast in the middle that can extend up to a height of 9 m, and two slave robots at the top. The experiment is assumed to begin after the mast reaches a height of 9 m. The sequence of the tasks is shown in Figure 1.

Minimizing the input space and constraining the degrees of freedom of the masters are applied automatically for each subtask. In the C2 state, for example, the subtask is to align the x-axis and y-axis of the right robot and the clutch. Therefore, inputs other than those in the x and y directions are ignored, and rate control is used. Through haptic rendering, the haptic device generates reaction forces so that it can be manipulated only in the x–y plane (Figure 3).

In the C3 state, in which the right robot moves toward the clutch in the z-axis direction, inputs other than those in the z-axis direction are ignored, and rate control is used as in the C2 state. Haptic rendering is used so that the slave can be manipulated only in the z-axis direction. In the C4 state, because the right manipulator is close to the clutch, the control mode is switched to position control, allowing precise insertion of the tool into the clutch. In this way, the subjects performed the experiment according to the sequence shown in Figure 1.

4. Results

To confirm the efficiency improvement of the operator, we measured the distance over which the master was manipulated, the task completion time, and the operator workload, the last of which was assessed using a NASA-TLX questionnaire.

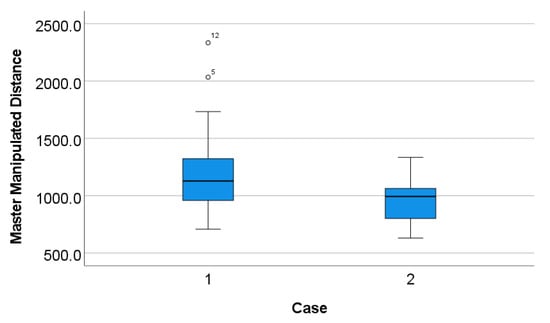

4.1. Manipulated Distance

In the position control mode, the operator needed to index repeatedly to approach the goal position. In contrast, in the rate-control mode, the operator needed less indexing to approach the desired position. For this reason, the operator moved the master device less when the hybrid controller was used. The manipulated distance was reduced by 22.69% (** p < 0.01), from 1226.2 to 948.02 mm, as shown in Figure 7. This result indicates that, when the hybrid controller is applied, the workspace mismatch between the master and slave can be overcome by applying rate control far from the target and position control near the target.

Figure 7.

Manipulated distance of the master device (Case 1: Position control and Case 2: Hybrid control).

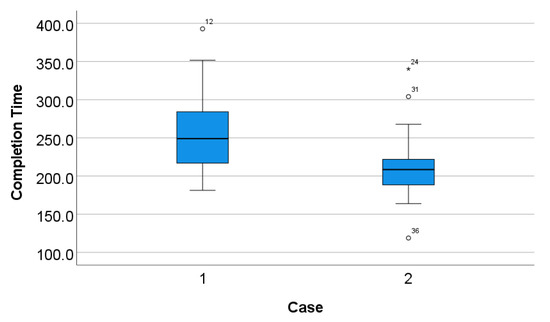

4.2. Completion Time

The completion times were 212.78 s with the hybrid control and 257.08 s with conventional position control (Figure 8). Thus, the completion time decreased by 17.23% (** p < 0.01) when the hybrid control was used. This indicates that, when the slave is far from the target, rate control allows the slave to approach the target quickly.

Figure 8.

Decrease in completion time owing to use of the hybrid controller (Case 1: Position control and Case 2: Hybrid control).

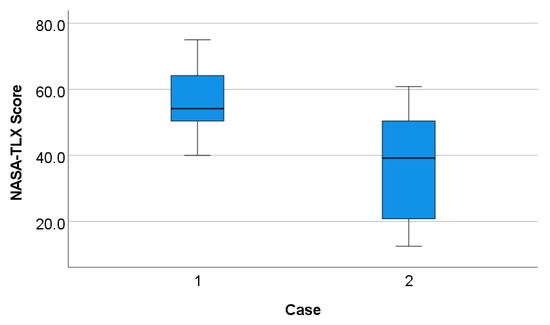

4.3. NASA-TLX

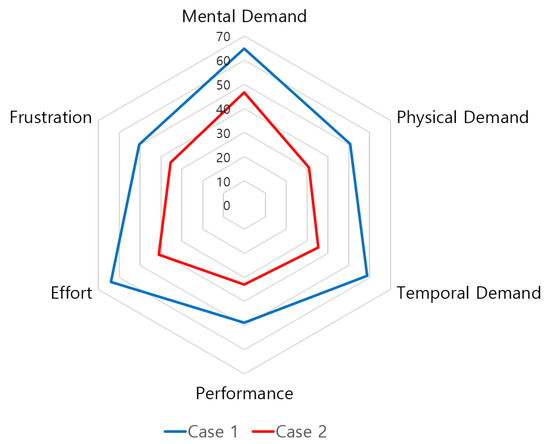

The NASA-TLX was measured to evaluate the workload of the subjects during the teleoperation experiments. Subjects gave a score between 0 and 100 for each of six questionnaire items (mental demand, physical demand, temporal demand, performance, effort, and frustration). The questionnaire was completed after each experiment. As shown in Figure 9, the average NASA-TLX score decreased from 56.17 to 37.06; that is, with the hybrid control, the average NASA-TLX score was 34.02% (*** p < 0.001) lower than that obtained with conventional position control. Furthermore, we confirmed that the score for every item was lower with the hybrid control than with position control (Figure 10). These results suggest that minimizing the input space and constraining the degrees of freedom of the master with haptic rendering had a significant influence on the NASA-TLX score.
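The percentage reductions reported in this section follow directly from the reported means. The short Python check below recomputes them from the values given in the text; the function name is a hypothetical helper introduced for illustration.

```python
def percent_reduction(baseline: float, treated: float) -> float:
    """Relative reduction of `treated` with respect to `baseline`, in percent."""
    return 100.0 * (baseline - treated) / baseline


# (position control, hybrid control) means as reported in Sections 4.1 to 4.3.
RESULTS = {
    "manipulated distance (mm)": (1226.2, 948.02),
    "completion time (s)": (257.08, 212.78),
    "NASA-TLX score": (56.17, 37.06),
}

if __name__ == "__main__":
    for name, (position, hybrid) in RESULTS.items():
        print(f"{name}: {percent_reduction(position, hybrid):.2f}% lower with hybrid control")
```

Rounded to two decimals, the three values agree with the 22.69%, 17.23%, and 34.02% stated in the text.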

Figure 9.

Averaged weight of the NASA-TLX ratings (Case 1: Position control and Case 2: Hybrid control).

Figure 10.

NASA-TLX measurement scores (Case 1: Position control and Case 2: Hybrid control).

5. Conclusions and Future Work

We introduced a position–rate hybrid control that can increase the efficiency with which an operator completes a specific dual-arm teleoperation task called MADMOFEM, which is time-consuming, complex, and highly difficult. Furthermore, the operator is required to complete the task with incomplete information. To reduce the cognitive load and the workload, we first decomposed the task into a sequence of subtasks. The process recognizer, based on the relative distance between the slave and the target, identifies the subtask index that each arm currently corresponds to.

Depending on the subtask index, the degrees of freedom for human manipulation are minimized by constraining the input space using the input-space controller, and either position or rate control is selected by the control-mode selector. Experiments were conducted to compare conventional position control with the hybrid controller, including the constraints on the degrees of freedom of the master devices, and to verify the extent to which the proposed system increased the operator’s efficiency. Because the hybrid controller assigns a control mode suited to each situation, the working time and the distance moved by the master were reduced, which lowered the workload. Furthermore, we confirmed that the cognitive load of the operator was reduced by constraining the degrees of freedom of the master device to suit each subtask.

In our current teleoperation system, the process recognizer outputs the subtask index based on the positions of the slave and the target. If the mobile robot at the bottom of the system moves or the position of the target changes, the relative position of the target changes; as a result, the target position stored in the system needs to be updated. Therefore, in future work, a robust process recognizer that uses a deep-learning model whose inputs are camera images taken at the remote site will be developed so that the recognizer can be used even when changes occur in an unstructured environment.

Author Contributions

Supervision and Funding Acquisition, G.-H.Y.; Writing-original draft Preparation, J.H.; Writing-Review & Editing, G.-H.Y.; Conceptualization, J.H. and G.-H.Y.; Data acquisition and analysis, J.H. and G.-H.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Korea Institute of Industrial Technology (KITECH) through the In-House Research Program (Development of Core Technologies for a Working Partner Robot in the Manufacturing Field, Grant Number: EO220009).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Gelhaus, F.E.; Roman, H.T. Robot applications in nuclear power plants. Prog. Nucl. Energy 1990, 23, 1–33.

2. Khan, H.; Abbasi, S.J.; Lee, M.C. DPSO and inverse Jacobian-based real-time inverse kinematics with trajectory tracking using integral SMC for teleoperation. IEEE Access 2020, 8, 159622–159638.

3. Bloss, R. How do you decommission a nuclear installation? Call in the robots. Ind. Robot. 2010, 37, 133–136.

4. Burrell, T.; Montazeri, A.; Monk, S.; Taylor, C.J. Feedback control-based inverse kinematics solvers for a nuclear decommissioning robot. IFAC-PapersOnLine 2016, 49, 177–184.

5. Álvarez, B.; Iborra, A.; Alonso, A.; de la Puente, J.A. Reference architecture for robot teleoperation: Development details and practical use. Control Eng. Pract. 2001, 9, 395–402.

6. Sun, L.; Zhao, C.; Yan, Z.; Liu, P.; Duckett, T.; Stolkin, R. A novel weakly-supervised approach for RGB-D-based nuclear waste object detection. IEEE Sens. J. 2018, 19, 3487–3500.

7. Kim, K.; Park, J.; Lee, H.; Song, K. Teleoperated cleaning robots for use in a highly radioactive environment of the DFDF. In Proceedings of the 2006 SICE-ICASE International Joint Conference, Busan, Korea, 18–21 October 2006; pp. 3094–3099.

8. Bandala, M.; West, C.; Monk, S.; Montazeri, A.; Taylor, C.J. Vision-based assisted tele-operation of a dual-arm hydraulically actuated robot for pipe cutting and grasping in nuclear environments. Robotics 2019, 8, 42.

9. Nagatani, K.; Kiribayashi, S.; Okada, Y.; Otake, K.; Yoshida, K.; Tadokoro, S.; Nishimura, T.; Yoshida, T.; Koyanagi, E.; Fukushima, M.; et al. Emergency response to the nuclear accident at the Fukushima Daiichi Nuclear Power Plants using mobile rescue robots. J. Field Robot. 2013, 30, 44–63.

10. Funda, J.; Taylor, R.H.; Eldridge, B.; Gomory, S.; Gruben, K.G. Constrained Cartesian motion control for teleoperated surgical robots. IEEE Trans. Robot. Autom. 1996, 12, 453–465.

11. Wagner, C.R.; Stylopoulos, N.; Jackson, P.G.; Howe, R.D. The benefit of force feedback in surgery: Examination of blunt dissection. Presence Teleoper. Virtual Environ. 2007, 16, 252–262.

12. Moradi Dalvand, M.; Shirinzadeh, B.; Nahavandi, S.; Smith, J. Effects of realistic force feedback in a robotic assisted minimally invasive surgery system. Minim. Invasive Ther. Allied Technol. 2014, 23, 127–135.

13. Tang, X.; Zhao, D.; Yamada, H.; Ni, T. Haptic interaction in tele-operation control system of construction robot based on virtual reality. In Proceedings of the 2009 International Conference on Mechatronics and Automation, Changchun, China, 9–12 August 2009; pp. 78–83.

14. Lee, K.H.; Pruks, V.; Ryu, J.H. Development of shared autonomy and virtual guidance generation system for human interactive teleoperation. In Proceedings of the 2017 14th International Conference on Ubiquitous Robots and Ambient Intelligence (URAI), Jeju, Korea, 28 June–1 July 2017; pp. 457–461.

15. Pruks, V.; Ryu, J.H. A Framework for Interactive Virtual Fixture Generation for Shared Teleoperation in Unstructured Environments. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 10234–10241.

16. Dariush, B.; Gienger, M.; Arumbakkam, A.; Goerick, C.; Zhu, Y.; Fujimura, K. Online and markerless motion retargeting with kinematic constraints. In Proceedings of the 2008 IEEE/RSJ International Conference on Intelligent Robots and Systems, Nice, France, 22–26 September 2008; pp. 191–198.

17. Rakita, D.; Mutlu, B.; Gleicher, M. A motion retargeting method for effective mimicry-based teleoperation of robot arms. In Proceedings of the 2017 ACM/IEEE International Conference on Human-Robot Interaction, Vienna, Austria, 6–9 March 2017; pp. 361–370.

18. Lu, W.; Liu, Y.; Sun, J.; Sun, L. A motion retargeting method for topologically different characters. In Proceedings of the 2009 Sixth International Conference on Computer Graphics, Imaging and Visualization, Tianjin, China, 11–14 August 2009; pp. 96–100.

19. Yang, G.H.; Won, J.; Ryu, S. Online Retargeting for Multi-lateral teleoperation. In Proceedings of the 2013 10th International Conference on Ubiquitous Robots and Ambient Intelligence (URAI), Jeju, Korea, 30 October–2 November 2013; pp. 238–240.

20. Omarali, B.; Palermo, F.; Valle, M.; Poslad, S.; Althoefer, K.; Farkhatdinov, I. Position and velocity control for telemanipulation with interoperability protocol. In TAROS 2019: Towards Autonomous Robotic Systems; Springer: Berlin/Heidelberg, Germany, 2019; pp. 316–324.

21. Wrock, M.; Nokleby, S. Haptic teleoperation of a manipulator using virtual fixtures and hybrid position-velocity control. In Proceedings of the IFToMM World Congress in Mechanism and Machine Science, Guanajuato, Mexico, 19–23 June 2011.

22. Farkhatdinov, I.; Ryu, J.H. Switching of control signals in teleoperation systems: Formalization and application. In Proceedings of the 2008 IEEE/ASME International Conference on Advanced Intelligent Mechatronics, Xi’an, China, 2–5 July 2008; pp. 353–358.

23. Teather, R.J.; MacKenzie, I.S. Position vs. velocity control for tilt-based interaction. In Graphics Interface; CRC Press: Boca Raton, FL, USA, 2014; pp. 51–58.

24. Mokogwu, C.N.; Hashtrudi-Zaad, K. A hybrid position-rate teleoperation system. Robot. Auton. Syst. 2021, 141, 103781.

25. Dominjon, L.; Lécuyer, A.; Burkhardt, J.M.; Andrade-Barroso, G.; Richir, S. The “bubble” technique: Interacting with large virtual environments using haptic devices with limited workspace. In Proceedings of the First Joint Eurohaptics Conference and Symposium on Haptic Interfaces for Virtual Environment and Teleoperator Systems, World Haptics Conference, Pisa, Italy, 18–20 March 2005; pp. 639–640.

26. Filippeschi, A.; Brizzi, F.; Ruffaldi, E.; Jacinto Villegas, J.M.; Landolfi, L.; Avizzano, C.A. Evaluation of diagnostician user interface aspects in a virtual reality-based tele-ultrasonography simulation. Adv. Robot. 2019, 33, 840–852.

27. Dominjon, L.; Lécuyer, A.; Burkhardt, J.M.; Richir, S. A comparison of three techniques to interact in large virtual environments using haptic devices with limited workspace. In CGI 2006: Advances in Computer Graphics; Springer: Berlin/Heidelberg, Germany, 2006; pp. 288–299.

28. Fischer, A.; Vance, J.M. PHANToM haptic device implemented in a projection screen virtual environment. In Proceedings of the IPT/EGVE03: Immersive Projection Technologies/Eurographics Virtual Environments, Zurich, Switzerland, 22–23 May 2003; pp. 225–229.

29. Lee, H.G.; Hyung, H.J.; Lee, D.W. Egocentric teleoperation approach. Int. J. Control Autom. Syst. 2017, 15, 2744–2753.

30. Shin, H.; Jung, S.H.; Choi, Y.R.; Kim, C. Development of a shared remote control robot for aerial work in nuclear power plants. Nucl. Eng. Technol. 2018, 50, 613–618.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).