Diverse Pose Lip-Reading Framework

Abstract

1. Introduction

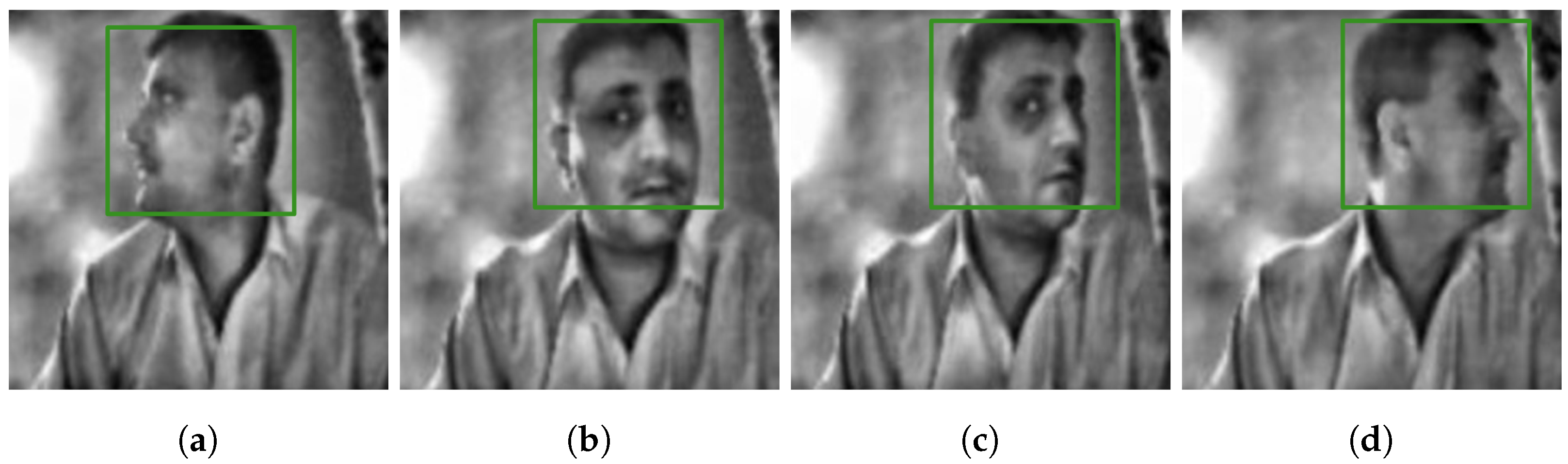

- Achieving high lip-reading accuracy in low-quality videos where the mouth region is not clearly visible;

- Using FF-GAN to support lip reading at extreme pose angles, from frontal to profile.

2. Literature Review

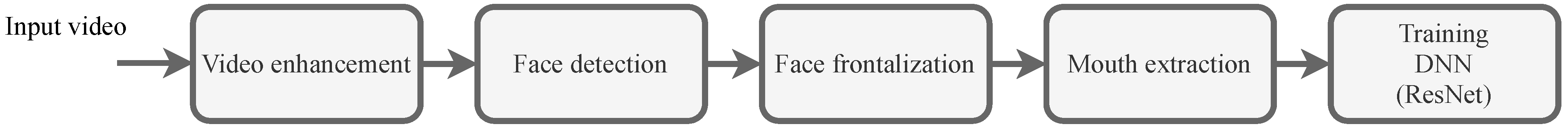

3. Proposed Lip-Reading Framework

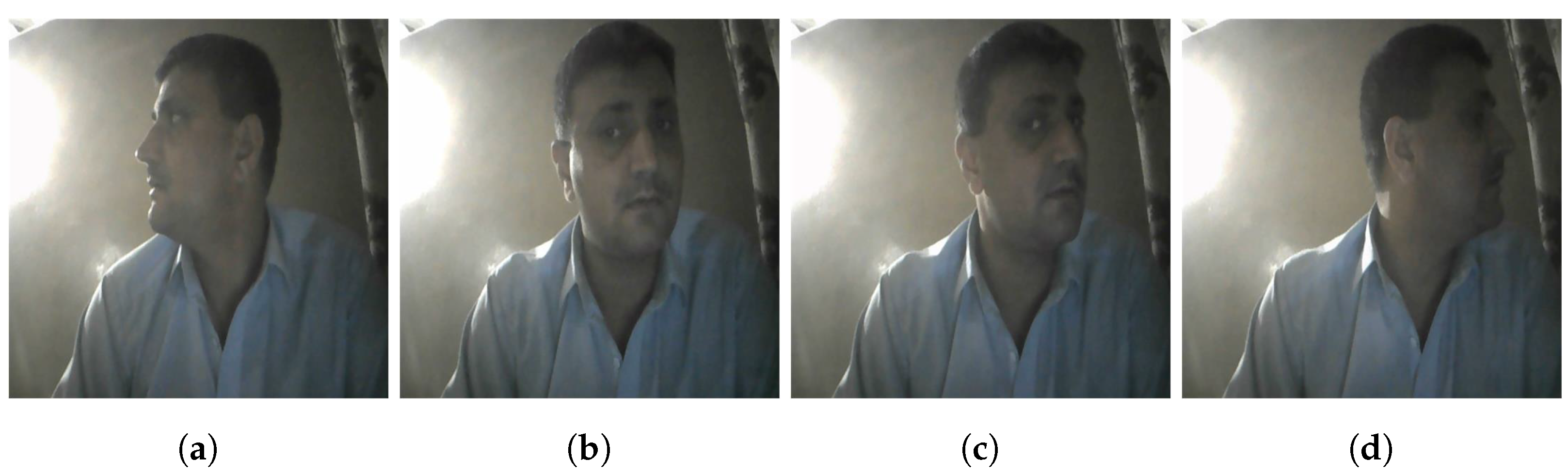

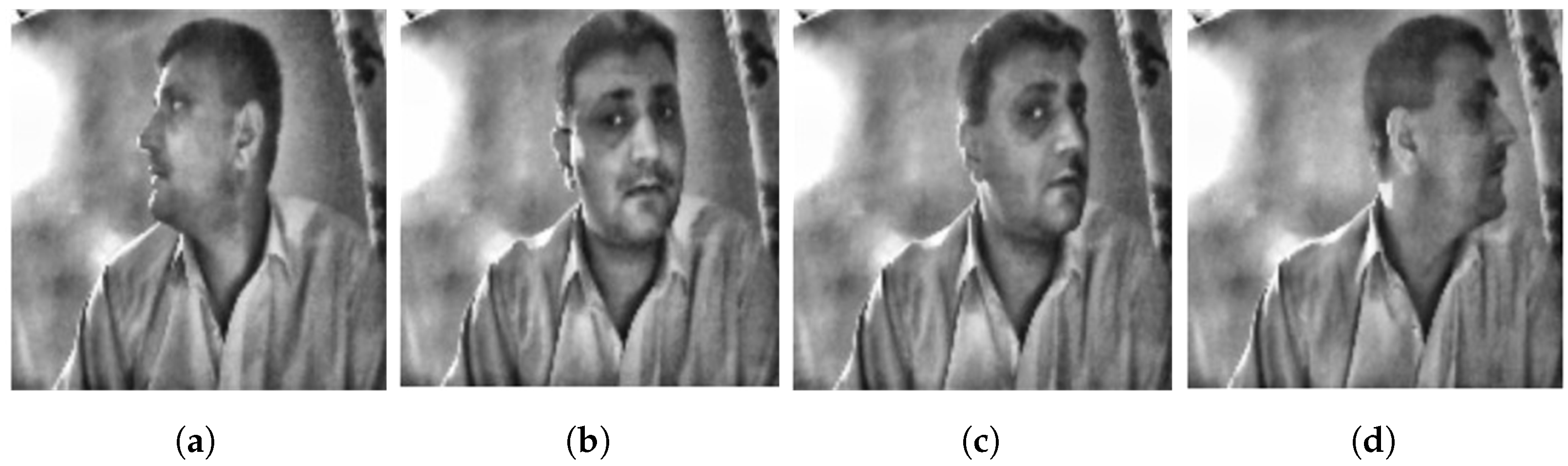

3.1. Video Enhancement

3.2. Face Detection

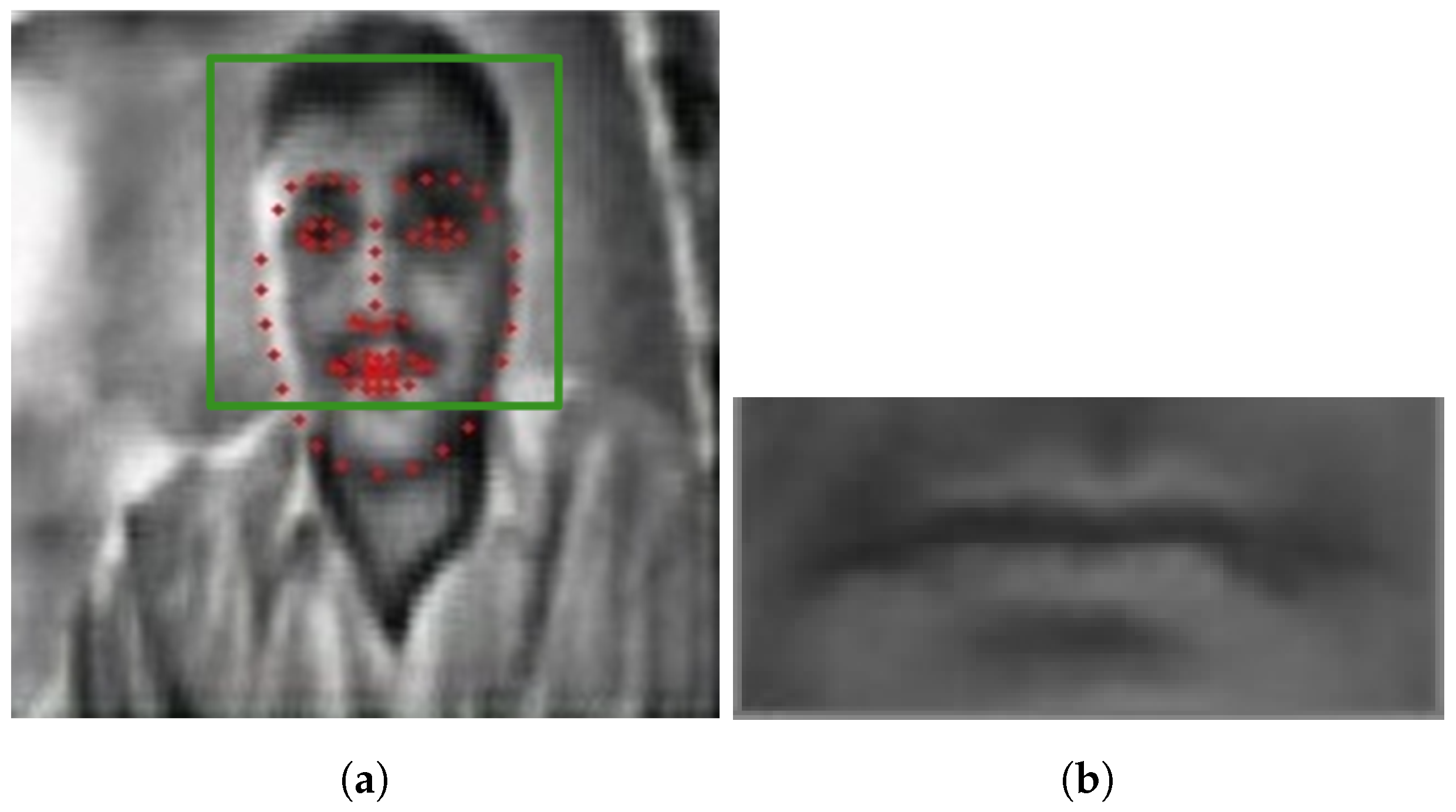

3.3. Face Frontalization (FF)

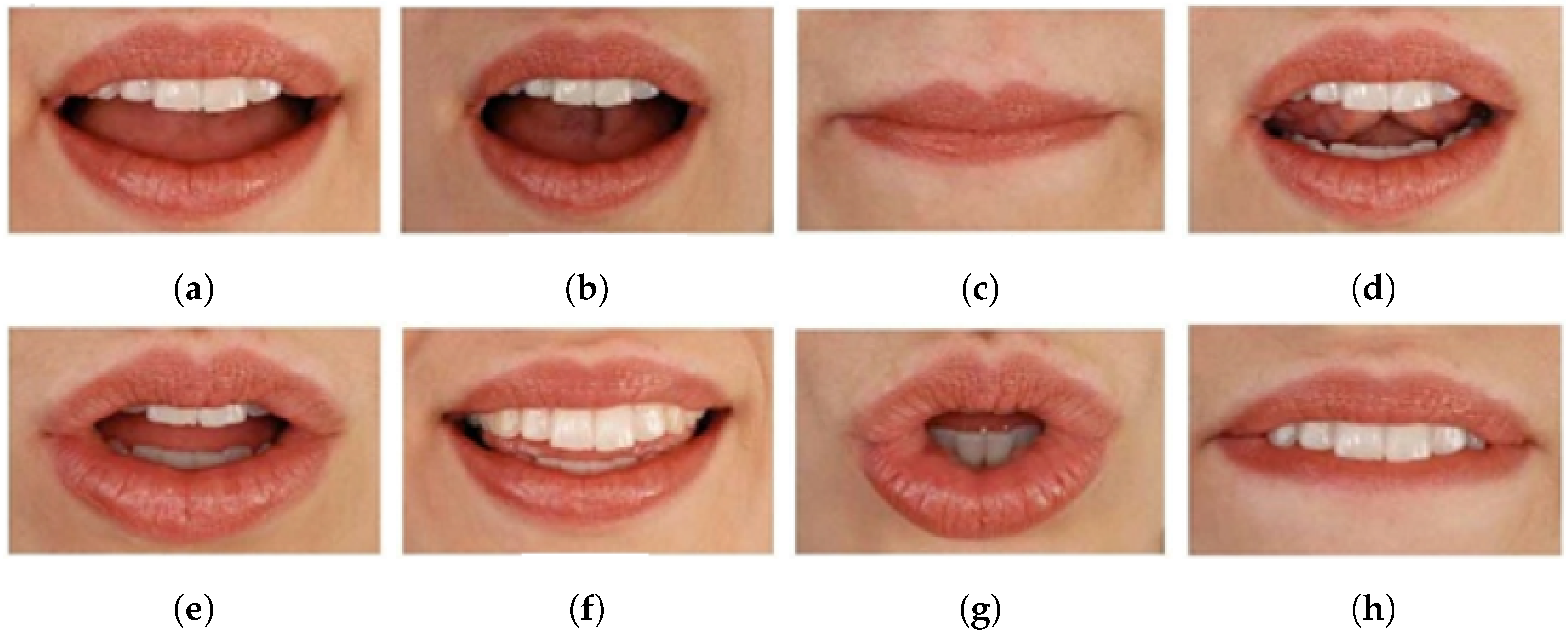

3.4. Mouth Extraction

4. Deep Neural Network (DNN) for the Lip-Reading

Training

5. Experimental Results

5.1. Dataset

5.2. Evaluation Metrics
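
The results tables in this article report character error rate (CER), word error rate (WER), and BLEU. As a minimal sketch of the first two, assuming the standard edit-distance definitions (the article's exact scoring script is not shown here), WER and CER can be computed as the Levenshtein distance between reference and hypothesis, divided by the reference length. The function names and the hypothesis string below are hypothetical, and counting spaces as characters in CER is an assumption.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences (strings or lists)."""
    prev = list(range(len(hyp) + 1))  # distances from the empty reference prefix
    for i, r in enumerate(ref, start=1):
        curr = [i]  # deleting i reference items yields the empty hypothesis
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def wer(reference, hypothesis):
    """Word error rate: word-level edit distance / number of reference words."""
    ref_words = reference.split()
    if not ref_words:
        raise ValueError("reference must contain at least one word")
    return edit_distance(ref_words, hypothesis.split()) / len(ref_words)


def cer(reference, hypothesis):
    """Character error rate: character-level edit distance / reference length.

    Assumption: spaces are counted as characters.
    """
    if not reference:
        raise ValueError("reference must not be empty")
    return edit_distance(reference, hypothesis) / len(reference)


# Reference taken from the example-sentence table; the hypothesis is made up.
reference = "I DO LIKE MAGIC"
hypothesis = "I DO LIKE MUSIC"
print(f"WER = {wer(reference, hypothesis):.3f}")
print(f"CER = {cer(reference, hypothesis):.3f}")
```

Both metrics are error rates, so lower is better, and either can exceed 100% when the hypothesis contains many insertions.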

5.3. Results

5.4. Comparison

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. McGurk, H.; MacDonald, J. Hearing lips and seeing voices. Nature 1976, 264, 746–748.

2. Scanlon, P.; Reilly, R. Feature analysis for automatic speechreading. In Proceedings of the 2001 IEEE Fourth Workshop on Multimedia Signal Processing (Cat. No. 01TH8564), Cannes, France, 3–5 October 2001; pp. 625–630.

3. Matthews, I.; Potamianos, G.; Neti, C.; Luettin, J. A comparison of model and transform-based visual features for audio-visual LVCSR. In Proceedings of the IEEE International Conference on Multimedia and Expo, 2001 (ICME 2001), Tokyo, Japan, 22–25 August 2001; IEEE Computer Society: Washington, DC, USA, 2001.

4. Aleksic, P.S.; Katsaggelos, A.K. Comparison of low-and high-level visual features for audio-visual continuous automatic speech recognition. In Proceedings of the 2004 IEEE International Conference on Acoustics, Speech, and Signal Processing, Montreal, QC, Canada, 17–21 May 2004; Volume 5, pp. 4–917.

5. Shaikh, A.A.; Kumar, D.K.; Yau, W.C.; Azemin, M.C.; Gubbi, J. Lip reading using optical flow and support vector machines. In Proceedings of the 2010 3rd International Congress on Image and Signal Processing, Yantai, China, 16–18 October 2010; Volume 1, pp. 327–330.

6. Puviarasan, N.; Palanivel, S. Lip reading of hearing impaired persons using HMM. Expert Syst. Appl. 2011, 38, 4477–4481.

7. Bear, H.L.; Harvey, R.W.; Lan, Y. Finding phonemes: Improving machine lip-reading. arXiv 2017, arXiv:1710.01142.

8. Chung, J.S.; Zisserman, A. Learning to lip read words by watching videos. Comput. Vis. Image Underst. 2018, 173, 76–85.

9. Paleček, K. Experimenting with lipreading for large vocabulary continuous speech recognition. J. Multimodal User Interfaces 2018, 12, 309–318.

10. Thangthai, K.; Bear, H.L.; Harvey, R. Comparing phonemes and visemes with DNN-based lipreading. arXiv 2018, arXiv:1805.02924.

11. Bear, H.L. Decoding Visemes: Improving Machine Lip-Reading. Ph.D. Thesis, University of East Anglia, Norwich, UK, 2016.

12. Bear, H.L.; Cox, S.J.; Harvey, R.W. Speaker-independent machine lip-reading with speaker-dependent viseme classifiers. arXiv 2017, arXiv:1710.01122.

13. Chung, J.S.; Senior, A.; Vinyals, O.; Zisserman, A. Lip reading sentences in the wild. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 3444–3453.

14. Chung, J.S.; Zisserman, A. Lip reading in the wild. In Proceedings of the Asian Conference on Computer Vision, Taipei, Taiwan, 20–24 November 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 87–103.

15. Hassanat, A.B. Visual speech recognition. Speech Lang. Technol. 2011, 1, 279–303.

16. Almajai, I.; Cox, S.; Harvey, R.; Lan, Y. Improved speaker independent lip reading using speaker adaptive training and deep neural networks. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 2722–2726.

17. Bear, H.L.; Harvey, R. Phoneme-to-viseme mappings: The good, the bad, and the ugly. Speech Commun. 2017, 95, 40–67.

18. Assael, Y.M.; Shillingford, B.; Whiteson, S.; De Freitas, N. LipNet: End-to-end sentence-level lipreading. arXiv 2016, arXiv:1611.01599.

19. Koller, O.; Ney, H.; Bowden, R. Deep learning of mouth shapes for sign language. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Santiago, Chile, 7–13 December 2015; pp. 85–91.

20. Lu, Y.; Li, H. Automatic Lip-Reading System Based on Deep Convolutional Neural Network and Attention-Based Long Short-Term Memory. Appl. Sci. 2019, 9, 1599.

21. Lan, Y.; Theobald, B.J.; Harvey, R. View independent computer lip-reading. In Proceedings of the 2012 IEEE International Conference on Multimedia and Expo, Melbourne, VIC, Australia, 9–13 July 2012; pp. 432–437.

22. Lucey, P.J.; Potamianos, G.; Sridharan, S. A unified approach to multi-pose audio-visual ASR. In Proceedings of the INTERSPEECH 2007, 8th Annual Conference of the International Speech Communication Association, Antwerp, Belgium, 27–31 August 2007.

23. Marxer, R.; Barker, J.; Alghamdi, N.; Maddock, S. The impact of the Lombard effect on audio and visual speech recognition systems. Speech Commun. 2018, 100, 58–68.

24. Noda, K.; Yamaguchi, Y.; Nakadai, K.; Okuno, H.G.; Ogata, T. Audio-visual speech recognition using deep learning. Appl. Intell. 2015, 42, 722–737.

25. Castiglione, A.; Nappi, M.; Ricciardi, S. Trustworthy Method for Person Identification in IIoT Environments by Means of Facial Dynamics. IEEE Trans. Ind. Inform. 2020, 17, 766–774.

26. Chung, J.S.; Zisserman, A. Lip reading in profile. In Proceedings of the British Machine Vision Conference (BMVC), London, UK, 4–7 September 2017; BMVA Press: Surrey, UK, 2017.

27. Yadav, G.; Maheshwari, S.; Agarwal, A. Contrast limited adaptive histogram equalization based enhancement for real time video system. In Proceedings of the 2014 International Conference on Advances in Computing, Communications and Informatics (ICACCI), Delhi, India, 24–27 September 2014; pp. 2392–2397.

28. Zhu, Y.; Huang, C. An adaptive histogram equalization algorithm on the image gray level mapping. Phys. Procedia 2012, 25, 601–608.

29. Kazemi, V.; Sullivan, J. One millisecond face alignment with an ensemble of regression trees. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1867–1874.

30. Yin, X.; Yu, X.; Sohn, K.; Liu, X.; Chandraker, M. Towards large-pose face frontalization in the wild. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 3990–3999.

31. Paszke, A.; Gross, S.; Chintala, S.; Chanan, G.; Yang, E.; DeVito, Z.; Lin, Z.; Desmaison, A.; Antiga, L.; Lerer, A. Automatic differentiation in PyTorch. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

32. Cooke, M.; Barker, J.; Cunningham, S.; Shao, X. An audio-visual corpus for speech perception and automatic speech recognition. J. Acoust. Soc. Am. 2006, 120, 2421–2424.

| Sets | Dates | No. of Utterances | Vocab |
|---|---|---|---|
| Train | 01/2018–11/2018 | 8880 | 14,708 |
| Val | 01/2019–04/2019 | 4540 | 3401 |
| Test | 05/2019–11/2019 | 3890 | 3401 |
| All | | 17,310 | 21,510 |

| Datasets | Types | Vocab | No. of Utterances | Views |
|---|---|---|---|---|
| GRID [32] | Phrases | 51 | 33,000 | |
| LRS [13] | Sentences | 807,375 | 118,116 | – |
| MV-LRS [13] | Sentences | 14,960 | 74,564 | – |
| MP dataset | Sentences | 17,500 | 135,442 | Frontal to profile |

| Poses | MP dataset CER | MP dataset WER | MP dataset BLEU | MV-LRS CER | MV-LRS WER | MV-LRS BLEU |
|---|---|---|---|---|---|---|
| Frontal | 42.1% | 51.0% | 48.4 | 46.5% | 56.4% | 49.3 |
| | 45.5% | 53.7% | 50.2 | 49% | 56% | 47 |
| | 49.7% | 57% | 45.8 | 50.4% | 59.2% | 46.1 |
| | 46% | 55.6% | 45.8 | 50.4% | 59.2% | 46.1 |
| | 48% | 55% | 46.1 | 50.4% | 59.2% | 46.1 |
| | 53.7% | 60.1% | 46.1 | 53.2% | 62.3% | 45.8 |
| | 53% | 59.3% | 41.4 | 54.4% | 62.8% | 42.5 |

| S. No. | Sentences |
|---|---|
| 1 | YOU JUST HAVE TO FIND ANOTHER TERM AND LOOK THAT UP |
| 2 | I DO LIKE MAGIC |
| 3 | WE REALLY DON’T WALK ANYMORE |
| 4 | AT SOME POINT I’M GOING TO GET OUT |
| 5 | WHEN I GET OUT OF THIS AM I GOING TO BE REJECTED |
| 6 | WE’D LOVE TO HELP |

| Methods | Frontal | | | | | | |
|---|---|---|---|---|---|---|---|
| Y. Lan [21] | 70.01% | 69.04% | 65.50% | 62.98% | 61.73% | 60.09% | 58.85% |
| MV-WAS [26] | 90.1% | 89.07% | 87.8% | 85.0% | 82.0% | 80.10% | 78.9% |
| DNN and LSTM [20] | 60.00% | 55.05% | 49.01% | 35.00% | 32.00% | 30.00% | 28.06% |
| DNN(ResNet) | 92.05% | 92.05% | 91.08% | 91.08% | 91.00% | 90.08% | 90.00% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Akhter, N.; Ali, M.; Hussain, L.; Shah, M.; Mahmood, T.; Ali, A.; Al-Fuqaha, A. Diverse Pose Lip-Reading Framework. Appl. Sci. 2022, 12, 9532. https://doi.org/10.3390/app12199532
