Abstract

Developments in multimedia and mobile communication technologies, together with mobile and personalized information security, have benefited many sectors of society, because traditional identification technologies are often cumbersome. In response to the sharing economy and the increasing intelligence of automotive electronics, major automobile companies are using biometric recognition to enhance the safety, uniqueness, and convenience of their vehicles. This study develops a deep learning-based finger-vein identification system for carputer systems. The proposed enhancement edge detection method compensates for rotational and translational movements of the detected fingers and for interference from external light and other environmental factors. This study also evaluates the effect of preprocessing methods on the system’s effectiveness. Experimental results on the public FV-USM and SDUMLA-HMT datasets show that the proposed system achieves identification accuracies of 99.1% and 98.1%, respectively, under various conditions. Its high accuracy and stability in sanitary, contactless applications make the system well suited for use during the COVID-19 pandemic.

1. Introduction

Artificial intelligence (AI), the Internet of Things (IoT), big data, mobile networks (MN), and cloud computing (CC) have been advancing rapidly. The growth of autonomous vehicles (AVs) and the Internet of Vehicles (IoV) means that these technologies are increasingly integrated into people’s lives. However, consumers who do not drive often find automobiles too costly. Costs include a high purchase price, depreciation, insurance, maintenance, taxes, and various other fees during and after the purchase of an automobile. The sharing economy can address this issue. The sharing economy business model redistributes idle automobiles through carsharing, changing the traditional business model by providing reciprocal benefits for both the sharer and the user. This economic structure allows consumers to share automobiles instead of bearing the greater economic burden of individual ownership.

Most carsharing platforms identify users through independently developed applications (apps), which require a registration process and the submission of an identity card, a driver’s license, or other documents to verify identity and driving qualifications. These documents must be verified before users can rent a vehicle. Vehicles can be unlocked using virtual keys, but logging into the app requires traditional account and password verification. This identification process has numerous flaws: if an account is compromised, it is impossible to determine whether the driver is the registered user. Automobiles can be unlocked using a physical key or a keyless entry system. Physical keys can be replicated from photographs or by molding, whereas keyless entry systems are more secure. However, keyless entry systems unlock automobiles using near-field communication (NFC) [1] or Bluetooth [2] technology. Bluetooth connections are unstable and media access control (MAC) addresses can be replicated, so security breaches are possible. NFC is more stable and safer than Bluetooth, but it is also easily replicated.

To address the security flaws of traditional identification documents, passwords, and key and keyless entry systems, high-end automobiles use biometric authentication. As the demand for privacy, security, and convenience increases, biometrics have become increasingly popular as a fast and simple identification process that offers security, reliability, and accuracy. Biometric systems also facilitate secure monitoring, system management, and integration [3,4]. Biometric characteristics are universal, permanent, distinctive, and collectable [5,6,7]. Biometrics are more secure than traditional identification methods because they do not require keys or ID cards. The physiological characteristics that biometrics detect comprise external and internal characteristics. External characteristics include fingerprints, faces, and palmprints; internal characteristics include finger veins and palm veins.

Each of these characteristics is used for biometric analysis, but internal characteristics allow more accurate and secure identification. Internal characteristics such as the finger veins beneath the skin are not prone to wear or to interference from external staining, water, light, or other environmental variables that undermine identification [3,4]. External characteristics are more easily counterfeited and affected by the external environment, so they are less suited to biometrics. Internal vein textures do not change significantly over time, and even the veins of twins differ enough to allow identification [5]. Vein imaging exploits the absorption of near-infrared light by hemoglobin in the blood, and images are captured using near-infrared (NIR) and visible-light (RGB) detectors. Blood does not flow in deceased humans, so finger-vein textures can only be acquired from living bodies [5,6].

Finger-vein identification systems operate in either 1 to 1 verification or 1 to N recognition mode. A 1 to 1 verification system begins by requiring the user to claim an identity using a card or password. The system then captures an image of the user’s finger veins, calculates its similarity to the registered samples for that identity, and confirms the identity and allows entry if the similarity exceeds a specific threshold. A 1 to N recognition system compares the captured vein texture with all samples in the registered dataset; if a match is found, the system identifies the user without a card or password. A 1 to N recognition system is therefore better suited to identifying many people. This study uses a 1 to N recognition system for renting shared automobiles to improve customer convenience.
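As a rough illustration of the two modes, the Python sketch below assumes that each registered finger is summarized by a fixed-length feature vector (for example, an embedding taken from a CNN) and that vectors are compared by cosine similarity. The gallery layout, the helper names `verify` and `identify`, and the threshold are hypothetical; the proposed system itself classifies ROI images with AlexNet rather than matching stored vectors this way.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def verify(probe, claimed_id, gallery, threshold):
    """1 to 1 verification: score the probe only against the claimed user's template."""
    return cosine_similarity(probe, gallery[claimed_id]) >= threshold

def identify(probe, gallery, threshold):
    """1 to N identification: score every template and return the best user id,
    or None if even the best score is below the threshold."""
    best_id, best_score = None, -1.0
    for user_id, template in gallery.items():
        score = cosine_similarity(probe, template)
        if score > best_score:
            best_id, best_score = user_id, score
    return best_id if best_score >= threshold else None

# Hypothetical gallery: one feature vector per registered user.
gallery = {"alice": [1.0, 0.0, 0.0], "bob": [0.0, 1.0, 0.0]}
print(identify([0.9, 0.1, 0.0], gallery, threshold=0.8))  # alice
```

In 1 to 1 mode only the claimed template is scored, whereas 1 to N mode scores every template, so its cost grows with the number of registered users.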

2. Review of Literature

Finger-vein biometrics use statistical texture descriptors such as local binary histograms (LBH) [8], local derivative patterns (LDP) [9], and local line binary patterns (LLBP) [10] to analyze images and compare the similarity of vein textures. However, these methods are easily affected by interference from external light sources and by rotational and translational displacement of the user’s fingers, which decreases identification accuracy.
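LBH, LDP, and LLBP all build on the local binary pattern (LBP) idea of thresholding a pixel's neighbors against the center pixel. The sketch below implements only the basic 3 × 3 LBP, not any of the cited variants, and shows why finger rotation perturbs such descriptors: rotating the patch by 90° circularly shifts the code bits, so a different histogram bin is incremented. The function names are ours.

```python
# Neighbor offsets (row, col) in a 3x3 patch, clockwise from the top-left corner.
NEIGHBORS = [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 1), (2, 0), (1, 0)]

def lbp_code(patch):
    """Basic 8-neighbor LBP code of the center pixel of a 3x3 gray-level patch."""
    center = patch[1][1]
    code = 0
    for bit, (r, c) in enumerate(NEIGHBORS):
        if patch[r][c] >= center:  # neighbor at least as bright: set this bit
            code |= 1 << bit
    return code

def lbp_histogram(img):
    """256-bin histogram of LBP codes over all interior pixels of a 2D list."""
    hist = [0] * 256
    for y in range(1, len(img) - 1):
        for x in range(1, len(img[0]) - 1):
            patch = [row[x - 1:x + 2] for row in img[y - 1:y + 2]]
            hist[lbp_code(patch)] += 1
    return hist

patch = [[10, 20, 30], [5, 15, 40], [0, 1, 50]]
rotated = [list(row) for row in zip(*patch[::-1])]  # patch rotated 90 degrees clockwise
print(lbp_code(patch), lbp_code(rotated))  # 30 120
```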

A previous study [5] and Miura et al. [11] proposed algorithms based on vein textures. These algorithms locate the darker vein pixels in grayscale images and track them to recover the vein texture. The method in [6] mathematically transforms the extracted features to correct errors caused by rotational and translational finger displacement, but it cannot counter interference from external light sources. External light affects image brightness and prevents the capture of vein features, so the identification rate decreases. Liu et al. [12] used bicubic interpolation to reduce the spatial resolution and obtain a more lightweight system, but this approach loses vein texture information, so identification accuracy decreases. Previous studies also proposed finger-vein recognition using tri-branch feature points [5,13], but unstable light sources reduce the number of identifiable tri-branch points, which causes errors. These algorithms also require significant computational power, so response times are slow. As CPU and GPU computing power increases and costs decrease, identification algorithms increasingly use deep learning (DL) to learn from large image datasets, which makes biometric identification more accurate. Other studies use a convolutional neural network (CNN) to extract and classify texture features for finger-vein biometrics. CNNs are highly effective, but the identification processes proposed in [3,4] are slower because DL requires the system to process more samples.

All of these recognition technologies have flaws. Traditional image algorithms have low error rates for specific equipment and illumination conditions, but when these conditions change, their recognition effectiveness varies. Traditional image algorithms are also evaluated on only a small portion of the available data, and the error rate changes when the dataset is expanded. Because traditional image algorithms store users’ vein features, they are insufficiently secure to guarantee information safety for financial businesses and government agencies. DL-based algorithms require large datasets, so they are prone to overfitting when finger-vein training data are limited.

Current shared automobiles verify user identities using traditional ID documents. The registration process requires manual checking of driver’s licenses and ID documents. After registration, users rent cars by logging in with an account and password. Studies show that traditional identification involves numerous risks and information security weaknesses. Because a registered carsharing service can be accessed with only an account and password, anyone who has the password, including minors and people without a driver’s license, can use shared automobiles. The traditional method is also vulnerable to invalid registrations using fabricated documents, and personal data can be breached, so user information is not adequately protected. To increase information security for carsharing systems, this study deploys finger-vein biometric algorithms in the carputers of shared automobiles and uses an enhanced edge detection method to correct for rotational and translational finger movements and for interference from external light sources and other environmental factors. The system provides stable recognition even under interference from external light sources and in complex environments. In the context of the COVID-19 pandemic, the proposed system verifies users’ identities through contactless interaction, which is a growing trend in the development of human-computer interaction (HCI) systems.

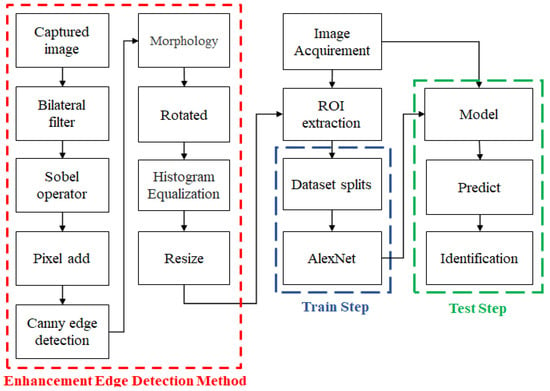

3. Proposed Finger-Vein Identification System

Figure 1 shows the conceptual flowchart for the proposed method. The identification process consists of region of interest (ROI) extraction and CNN training. An ROI detection algorithm locates the area to be identified. Because image brightness varies with the device and the environment, the captured ROI image is adjusted using parametric-oriented histogram equalization (POHE) and contrast limited adaptive histogram equalization (CLAHE) to enhance and stabilize the vein features. The system then uses DL to match the user’s (driver’s) vein images against those of registered users in the dataset and outputs the identification result.

Figure 1.

Flowchart for the proposed system.

3.1. Enhancement Edge Detection Method

When the user places a finger in the biometric scanner, any rotation or translation of the finger affects the identification process. Extracting an ROI preserves the information relevant to identification. Both contact-based and contactless biometric devices are on the market; the hardware design of contact-based devices restricts finger displacement, so rotation and translation have little effect on identification.

Contactless devices have become popular during the COVID-19 pandemic, but they capture more noise, which complicates image processing. Because the finger position varies from one capture to the next, contactless biometrics are susceptible to finger rotation and translation, so a series of algorithms [5,6] is required to ensure that the extracted images are sufficiently clear for analysis. The components of the proposed enhancement edge detection method are detailed below.

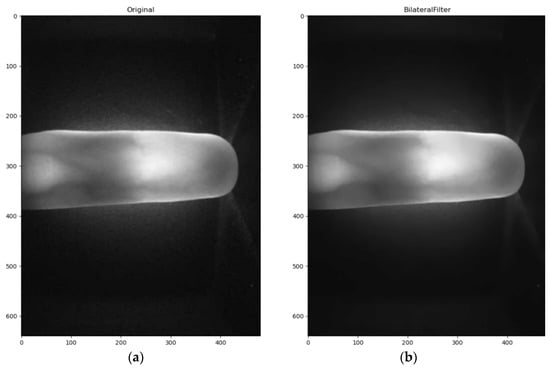

Bilateral Filter

Many denoising methods blur image edges, so high-frequency information is lost (Figure 2a). A bilateral filter is a nonlinear, edge-preserving filter that weights neighboring pixels according to both their spatial distance and their gray-level difference, as shown in Equation (1). Each weight is the product of two factors: a spatial nearness factor and a luminance similarity factor. The spatial nearness factor is a Gaussian filter coefficient, so the weight decreases as the distance between pixels increases; increasing δr increases image smoothing, as described by Equation (2). Equation (3) describes the luminance similarity factor as a function of the gray-level difference between pixels: the greater the difference, the lower the weight. Increasing δd widens the range of gray levels that are treated as similar, so more smoothing occurs and less edge information is preserved. The bilateral filter is used together with a Sobel operator to improve edge detection, as shown in Figure 2b.
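The weighting described above can be sketched directly in pure Python. This is a minimal illustration assuming the common Gaussian form for both factors; the names `sigma_spatial` and `sigma_range` are ours, and which of them corresponds to δr or δd should be checked against Equations (2) and (3). The example applies the filter to a small step edge: with a small range parameter the edge survives, whereas a very large one makes the filter behave like a plain Gaussian blur.

```python
import math

def bilateral_filter(img, radius=1, sigma_spatial=1.0, sigma_range=10.0):
    """Bilateral filter on a 2D list of gray levels (pure Python, for illustration)."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            num = den = 0.0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    ny, nx = y + dy, x + dx
                    if not (0 <= ny < h and 0 <= nx < w):
                        continue  # ignore neighbors outside the image
                    # Spatial nearness factor: Gaussian in pixel distance.
                    spatial = math.exp(-(dx * dx + dy * dy) / (2 * sigma_spatial ** 2))
                    # Luminance similarity factor: Gaussian in gray-level difference.
                    diff = img[ny][nx] - img[y][x]
                    similarity = math.exp(-(diff * diff) / (2 * sigma_range ** 2))
                    weight = spatial * similarity
                    num += weight * img[ny][nx]
                    den += weight
            out[y][x] = num / den  # den > 0 because the center pixel has weight 1
    return out

# A vertical step edge from 0 to 100.
step = [[0, 0, 100, 100] for _ in range(3)]
sharp = bilateral_filter(step, sigma_range=10.0)   # edge preserved
blurred = bilateral_filter(step, sigma_range=1e9)  # behaves like a Gaussian blur
```

For full-size images, OpenCV provides `cv2.bilateralFilter(src, d, sigmaColor, sigmaSpace)`, which implements the same idea far more efficiently; the parameter values used in this study are not reported.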

Figure 2.

Bilateral filter processing for a finger-vein image: (a) Without the filter; (b) With the filter.

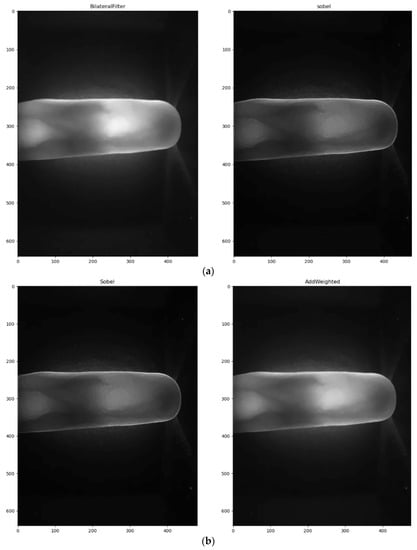

3.2. Multi-Layer Edge Detection

The Sobel operator is a common edge detector in image processing. It computes a weighted gray-level difference over the surrounding pixels and locates edges where this gradient is largest [14]. The operator uses two 3 × 3 masks, a horizontal operator Sx and a vertical operator Sy, which are convolved with the image as in Equation (5).

The calculated values for Gx and Gy are combined using Equation (6) to complete the Sobel edge detection process.
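A minimal pure-Python sketch of Equations (5) and (6), assuming the standard Sobel masks and the Euclidean gradient magnitude G = sqrt(Gx² + Gy²); some implementations use |Gx| + |Gy| instead, so the exact form should be taken from Equation (6) itself. The loop applies the masks without flipping them (correlation), which only changes the sign of Gx and Gy and leaves the magnitude unchanged. Border pixels are left at zero for simplicity.

```python
import math

# Standard 3x3 Sobel masks (horizontal gradient Sx, vertical gradient Sy).
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]

def sobel_magnitude(img):
    """Gradient magnitude sqrt(Gx^2 + Gy^2) of a 2D list; border pixels stay 0."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0
            for j in range(3):
                for i in range(3):
                    p = img[y + j - 1][x + i - 1]
                    gx += SOBEL_X[j][i] * p
                    gy += SOBEL_Y[j][i] * p
            out[y][x] = math.hypot(gx, gy)
    return out

step = [[0, 0, 0, 100, 100] for _ in range(3)]
print(sobel_magnitude(step)[1])  # [0.0, 0.0, 400.0, 400.0, 0.0]
```

On a vertical step the response appears only at the two columns adjacent to the step, which is the edge location that the later Canny stage thins to a single line. In OpenCV the two gradients are available through `cv2.Sobel`.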

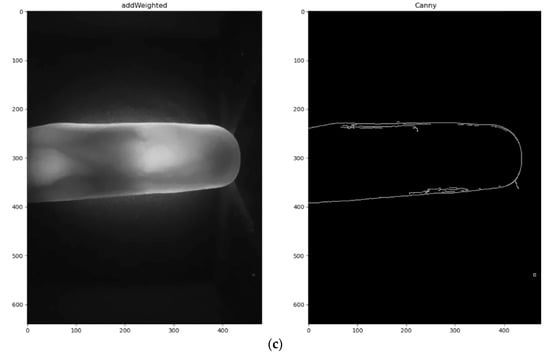

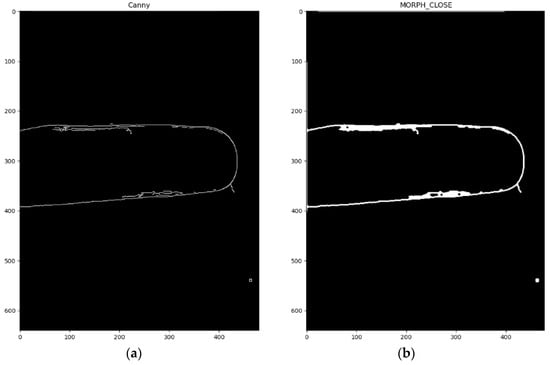

Figure 3a shows the bilateral-filtered image after Sobel processing. Figure 3b shows the pixel-wise weighted sum of the Sobel-processed and bilateral-filtered images. This step enhances the contrast of the finger outline and improves the subsequent Canny edge detection. Denoising and accurate edge detection are difficult to achieve simultaneously: smoothing blurs edges and reduces accuracy, whereas increasing edge detection sensitivity also increases the detected noise. To address this issue, a Canny edge detector [15] is used to balance denoising and detection accuracy. This algorithm gives precise detection and a low error rate and produces detailed edge outlines, so the Sobel results are processed using Canny edge detection. The multi-layer edge detection method gives stable finger outline information, as shown in Figure 3c. An image processed using Canny edge detection still contains small cracks and unevenness, so a morphological closing operator is used to complete the finger edge outline while maintaining the finger position and shape, as shown in Figure 4.
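The closing step can be sketched on a binary edge map as a dilation followed by an erosion with a 3 × 3 square structuring element. The element size and the border handling (positions outside the image are ignored) are our assumptions; the paper does not state them. In the example, a one-pixel crack in a horizontal edge line is filled while the line keeps its position and width.

```python
def _window(img, y, x):
    """Values in the 3x3 window around (y, x); positions outside the image are ignored."""
    h, w = len(img), len(img[0])
    return [img[y + dy][x + dx]
            for dy in (-1, 0, 1) for dx in (-1, 0, 1)
            if 0 <= y + dy < h and 0 <= x + dx < w]

def dilate(img):
    """Binary dilation: a pixel becomes 1 if any pixel in its window is 1."""
    return [[int(any(_window(img, y, x))) for x in range(len(img[0]))]
            for y in range(len(img))]

def erode(img):
    """Binary erosion: a pixel stays 1 only if every pixel in its window is 1."""
    return [[int(all(_window(img, y, x))) for x in range(len(img[0]))]
            for y in range(len(img))]

def closing(img):
    """Morphological closing: dilation followed by erosion."""
    return erode(dilate(img))

# A horizontal edge line with a one-pixel crack at column 3.
edges = [[0] * 7 for _ in range(5)]
edges[2] = [1, 1, 1, 0, 1, 1, 1]
closed = closing(edges)
print(closed[2])  # [1, 1, 1, 1, 1, 1, 1]
```

With OpenCV, the equivalent operation is `cv2.morphologyEx(edges, cv2.MORPH_CLOSE, kernel)`.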

Figure 3.

The multi-layer edge detection method: (a) Bilateral-filtered image after Sobel processing; (b) Pixel-wise weighted sum of (a) and the bilateral-filtered image; (c) Result of applying the Canny operator to (b).

Figure 4.

Morphological processing: (a) Result of Canny edge detection; (b) After the closing operator.

3.3. Region of Interest Detection

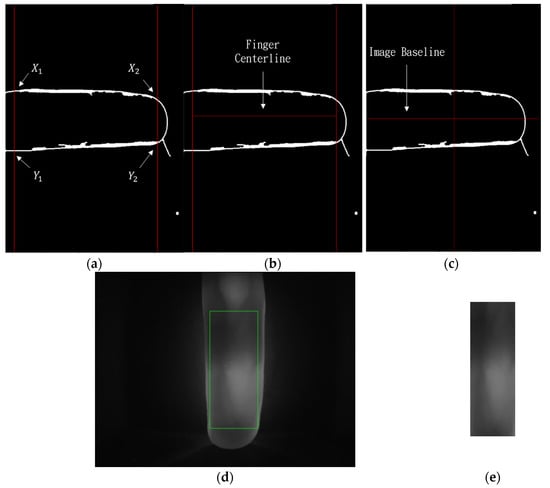

A user’s finger is displaced when it is inserted into the biometric device, so this study proposes a region of interest (ROI) detection method to acquire stable and usable vein feature information. First, points X1 and Y1 on the left half and X2 and Y2 on the right half of the finger are located on the Canny edge map, as shown in Figure 5a. These points locate the centers of the left and right halves of the finger, and the two center points define the finger’s calculated center baseline, which is compared with the finger’s inherent center line (Figure 5b,c). Skew and shift are corrected by the corresponding rotation and translation. This normalization decreases the chance of incorrect identification. The green bounding box (ROI) is then derived from the normalized image, as shown in Figure 5d, and the resulting normalized image is shown in Figure 5e.
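The geometry of the normalization step can be sketched as follows. The sketch assumes that the finger lies roughly horizontally in image coordinates (y pointing down) and that the Canny edge map has already given the top and bottom finger-edge rows at one column in each half of the finger; how X1, Y1, X2, and Y2 are chosen in the paper is not specified, and the function names are hypothetical. The midpoints of the two columns define the calculated center baseline; rotating by the negative of its angle and shifting it onto a reference center line corrects skew and shift.

```python
import math

def center_baseline(left_x, left_top, left_bottom, right_x, right_top, right_bottom):
    """Midpoints of the finger at a left-half and a right-half column, plus the
    baseline angle in degrees (0 means the finger is already horizontal)."""
    left_mid = (left_x, (left_top + left_bottom) / 2)
    right_mid = (right_x, (right_top + right_bottom) / 2)
    angle = math.degrees(math.atan2(right_mid[1] - left_mid[1],
                                    right_mid[0] - left_mid[0]))
    return left_mid, right_mid, angle

def normalize_point(pt, pivot, angle_deg, target_y):
    """Rotate pt about pivot by -angle_deg, then shift vertically so that the
    pivot lands on the (hypothetical) reference center line y = target_y."""
    theta = math.radians(-angle_deg)
    dx, dy = pt[0] - pivot[0], pt[1] - pivot[1]
    x = pivot[0] + dx * math.cos(theta) - dy * math.sin(theta)
    y = pivot[1] + dx * math.sin(theta) + dy * math.cos(theta)
    return x, y + (target_y - pivot[1])

left_mid, right_mid, angle = center_baseline(10, 30, 70, 110, 40, 80)
print(round(angle, 2))  # 5.71
print(normalize_point(right_mid, left_mid, angle, target_y=60))
```

To transform a whole image rather than single points, the same angle and pivot can be used with OpenCV's `cv2.getRotationMatrix2D` and `cv2.warpAffine`, taking care with OpenCV's sign convention for the angle.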

Figure 5.

ROI detection: (a) Left and right half coordinates; (b) Inherent finger center baseline; (c) Calculated center baseline; (d) Extracted ROI image; (e) Normalized image.

3.4. Image Enhancement and AlexNet

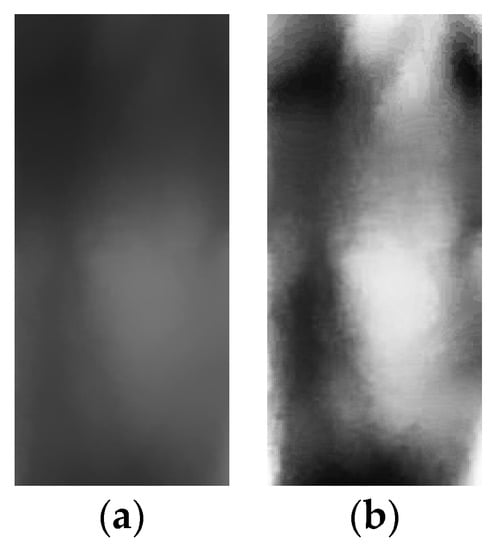

Current finger-vein biometrics are easily affected by external light sources, so image contrast varies. CLAHE [16] denoises an image and enhances its contrast, but the noise filtering removes some vein feature information, which degrades image quality. Therefore, this study uses POHE [17] to enhance image contrast. POHE uses Gaussian parameters to model the local histogram and applies regional histogram equalization (HE) based on this model to enhance the image. An image processed using POHE shows more pronounced vein enhancement than the original, as shown in Figure 6. The enhanced images are then used by the CNN to extract vein features.
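For reference, plain global histogram equalization (HE), one of the enhancement methods compared in the experiments, maps each gray level through the normalized cumulative histogram. The sketch below implements only this global HE on a flat list of 8-bit pixel values; it is not POHE, which instead approximates local histograms with a Gaussian model, and it omits CLAHE's tiling and clip limit.

```python
def equalize_histogram(pixels, levels=256):
    """Global histogram equalization of a flat list of integer gray levels."""
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    # Cumulative histogram (CDF in pixel counts).
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    n = len(pixels)
    cdf_min = next(c for c in cdf if c > 0)  # count at the darkest occupied level
    if n == cdf_min:
        return list(pixels)  # a single gray level: nothing to stretch
    # Map each level so the occupied range spreads over 0..levels-1.
    lut = [max(0, round((c - cdf_min) / (n - cdf_min) * (levels - 1))) for c in cdf]
    return [lut[p] for p in pixels]

print(equalize_histogram([50, 50, 51, 51, 52, 52, 53, 53]))
# [0, 0, 85, 85, 170, 170, 255, 255]
```

A narrow band of four gray levels is stretched over the full 0 to 255 range, which is why HE raises vein contrast; OpenCV's `cv2.equalizeHist` and `cv2.createCLAHE` provide production implementations of HE and CLAHE.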

Figure 6.

Enhancing the contrast in a vein image: (a) Original image; (b) After processing using POHE.

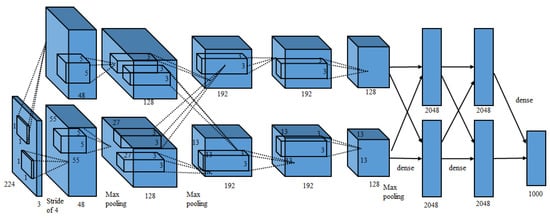

This study uses the AlexNet CNN because of its strong classification performance. Unlike earlier neural networks (NNs), AlexNet uses the ReLU activation function to accelerate convergence and dropout to prevent overfitting. The network has 60 million parameters and 650,000 neurons, and its architecture contains 12 layers: 1 input layer, 5 convolutional layers, 3 pooling layers, and 3 fully connected layers, as shown in Figure 7.
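The spatial sizes of AlexNet's feature maps follow from the usual formula out = ⌊(in + 2p − k)/s⌋ + 1 for kernel size k, stride s, and padding p. The input size used in this study is not reported; the sketch assumes the common 227 × 227 input and the standard kernel, stride, and padding values, which reduce the final pooled map to 6 × 6. With the standard 256 channels of the fifth convolutional layer, this gives the 9,216 values fed to the first fully connected layer.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1

# (name, kernel, stride, padding): assumed standard AlexNet configuration.
LAYERS = [
    ("conv1", 11, 4, 0), ("pool1", 3, 2, 0),
    ("conv2", 5, 1, 2), ("pool2", 3, 2, 0),
    ("conv3", 3, 1, 1), ("conv4", 3, 1, 1), ("conv5", 3, 1, 1),
    ("pool3", 3, 2, 0),
]

def feature_map_sizes(input_size=227):
    """Return (layer name, output height/width) for each layer in LAYERS."""
    sizes, size = [], input_size
    for name, kernel, stride, padding in LAYERS:
        size = conv_out(size, kernel, stride, padding)
        sizes.append((name, size))
    return sizes

sizes = feature_map_sizes()
print(sizes[-1], sizes[-1][1] ** 2 * 256)  # ('pool3', 6) 9216
```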

Figure 7.

The flowchart for AlexNet.

4. Experimental Results and Analysis

4.1. Experimental Environment and Datasets

The hardware environment for this study consists of an Intel Core i7-8700K (3.7 GHz) central processing unit (CPU), 32 GB of DDR4 memory, and an NVIDIA GeForce GTX 1080 Ti graphics processing unit (GPU). The software is developed in Python and C++ using the TensorFlow 1.15.0, Keras 2.3.1, and OpenCV 2.4.9 libraries. To measure the effectiveness of the proposed enhancement edge detection method, this study uses two public finger-vein datasets: the contact-based FV-USM [18] and the contactless SDUMLA-HMT [19].

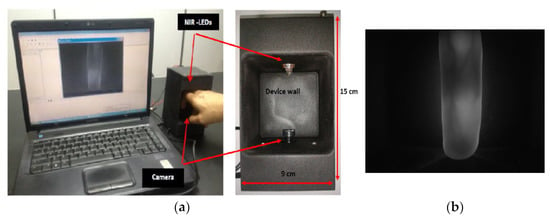

The FV-USM dataset was created by Universiti Sains Malaysia. It contains vein images of the left and right index and middle fingers of 123 subjects (83 males and 40 females) aged between 20 and 52 years. During imaging, the subjects’ fingertips contact the device base, as shown in Figure 8a. Each subject provides six images of each of these fingers. The light sources in the acquisition environment are unstable, but the fingers are stably positioned. The image resolution is 640 × 480 pixels, as shown in Figure 8b.

Figure 8.

FV-USM dataset [18]: (a) Vein imaging device; (b) Example of a vein image.

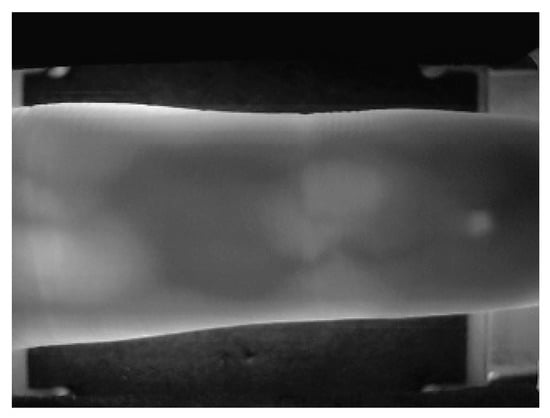

The SDUMLA-HMT dataset was created by Shandong University. It contains finger-vein images of 106 subjects: six images each of every subject’s left and right index, middle, and ring fingers, giving 636 fingers and 3,816 images in total. Both the light sources and the finger positioning in the acquisition environment are unstable. The image resolution is 320 × 240 pixels, as shown in Figure 9.

Figure 9.

Vein image from the SDUMLA-HMT dataset [19].

4.2. Setting and Evaluation

In DL systems, unsuitable parameters hinder model performance, and even minor details can affect the final result. This study divides the FV-USM and SDUMLA-HMT datasets into training and testing sets. The training set is used to train and validate the model, and the testing set is used for final verification. AlexNet is optimized using the Adam optimizer with a learning rate of 0.001, a batch size of 64, and 600 epochs.

In recent years, the security of biometric systems has attracted much interest. Analysis of variance (ANOVA) and t-tests measure the degree of difference between groups of samples [20,21]; they are not suitable for finger-vein identification, in which each biometric sample corresponds to an individual person. Instead, a critical safety index for 1:N identification systems is the equal error rate (EER). Identification systems incur two types of error: the false rejection rate (FRR), the rate at which the system identifies a registered user as unregistered, as shown in Equation (7), and the false acceptance rate (FAR), the rate at which the system mistakes an unregistered user for a registered one, as shown in Equation (8). The security of the device is adjusted from most to least secure by tuning the decision threshold: as the threshold is relaxed, the FAR increases toward 1 while the FRR decreases toward 0. The EER is the error rate at the intersection of the FRR and FAR curves. The threshold is adjusted for different applications and scenarios, but setting it at the EER point gives the most balanced performance. A lower EER therefore indicates a more secure system.
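The relationship between the threshold, the FAR, the FRR, and the EER can be sketched with a simple threshold sweep. The sketch assumes similarity scores in which a higher score means a more likely match, so a probe is accepted when its score is at least the threshold; the score lists are invented for illustration, and the EER is approximated at the tested threshold where the FAR and FRR are closest, a common discrete estimate. It does not reproduce Equations (7) and (8) verbatim.

```python
def far_frr(genuine, impostor, threshold):
    """FAR: share of impostor scores accepted; FRR: share of genuine scores rejected."""
    far = sum(s >= threshold for s in impostor) / len(impostor)
    frr = sum(s < threshold for s in genuine) / len(genuine)
    return far, frr

def equal_error_rate(genuine, impostor):
    """Sweep every observed score as a threshold; return (EER estimate, threshold)
    at the threshold where FAR and FRR are closest."""
    best = None
    for t in sorted(set(genuine) | set(impostor)):
        far, frr = far_frr(genuine, impostor, t)
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            best = (gap, t, (far + frr) / 2)
    _, threshold, eer = best
    return eer, threshold

# Invented similarity scores, for illustration only.
genuine = [0.9, 0.8, 0.7, 0.6]
impostor = [0.1, 0.2, 0.3, 0.65]
print(equal_error_rate(genuine, impostor))  # (0.25, 0.65)
```

Lowering the threshold below 0.65 accepts more impostors (higher FAR), while raising it rejects more genuine users (higher FRR); the EER marks the balance point between the two.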

This study evaluates DL training combined with edge detection and various image enhancement methods. Three experimental setups are used to cover a range of future applications. In the 3T/3T setup, three images of each finger are randomly selected for training and the remaining three are used for testing. The 4T/2T and 5T/1T setups use four and five training images, respectively, and the remaining images for testing. Image enhancement using HE and using a wavelet transform (WT) [22] is evaluated for these setups, as shown in Table 1.
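The 3T/3T, 4T/2T, and 5T/1T protocols amount to a per-finger random split of the six images of each finger. A minimal sketch follows; the dictionary layout, the seed, and the function name are hypothetical, and the paper does not state whether the random selection is repeated across runs.

```python
import random

def split_per_finger(images_by_finger, n_train, seed=0):
    """Randomly assign n_train images of each finger to training, the rest to testing."""
    rng = random.Random(seed)  # fixed seed for a reproducible split
    train, test = [], []
    for finger_id in sorted(images_by_finger):
        images = list(images_by_finger[finger_id])
        rng.shuffle(images)
        train += [(finger_id, img) for img in images[:n_train]]
        test += [(finger_id, img) for img in images[n_train:]]
    return train, test

# Hypothetical data: two fingers with six image identifiers each.
data = {"user1_left_index": [f"img{i}" for i in range(6)],
        "user1_left_middle": [f"img{i}" for i in range(6)]}
train, test = split_per_finger(data, n_train=5)  # 5T/1T setup
```

Setting `n_train` to 3, 4, or 5 gives the three setups; every finger appears in both sets, as closed-set 1 to N identification requires.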

Table 1.

Stability of identification for three vein image analysis scenarios using the FV-USM dataset.

For the contact-based FV-USM dataset, 3T/3T analysis using HE and DL gives the lowest EER of 0.003 and the highest accuracy of 97.9%, because HE gives more stable luminance contrast than WT-based image processing. For 4T/2T analysis, the lowest EER is 0.002 and the highest accuracy is 99.0%. For 5T/1T analysis, the lowest EER is 0.002 and the highest accuracy is 99.1%. The experiments show that the system is stable in the 4T/2T and 5T/1T trials, as shown in Table 1.

To measure the effectiveness of the proposed enhancement edge detection method for contactless biometrics, this study uses the contactless SDUMLA-HMT public dataset. For 3T/3T testing using HE and DL training, the EER is 0.010 and the accuracy is 96.0% because WT increases the brightness of excessively dark regions through the low-low (LL) band. However, 5T/1T is the most common training and testing setup in practical applications, and it also gives a stable accuracy in this study, so the performance trade-off is acceptable. For 4T/2T analysis, the lowest EER is 0.005 and the highest accuracy is 98.1%. The experiments show that the system is stable in the 4T/2T and 5T/1T trials, as shown in Table 2. The EER values for the FV-USM and SDUMLA-HMT datasets are also compared with those of other recent studies. The SDUMLA-HMT dataset is newer and contains more finger displacement, but Table 3 shows that the proposed method’s EER values are lower than those of other recent studies.

Table 2.

Identification stability for three vein image analysis setups using the SDUMLA-HMT dataset.

Table 3.

Comparison with the results for other recent studies.

5. Conclusions

This study applies 1 to N finger-vein biometrics to carputer systems and proposes an enhancement edge detection method that corrects for finger translation and rotation, interference from external light sources, and other environmental factors. The effect of preprocessing methods on the developed finger-vein system is also studied using the public FV-USM and SDUMLA-HMT datasets. The best EER values obtained on these datasets are 0.002 and 0.003. These EER values show that the proposed method outperforms the state-of-the-art systems compared in Table 3. The result is a stable biometric system for carputers that operates through contactless interaction, providing superior convenience and sanitation for the user.

Author Contributions

C.-H.H. and H.-J.W. carried out the studies and drafted the manuscript. Z.-H.Y. and K.-K.L. participated in its design and helped to draft the manuscript. C.-H.H. and Z.-H.Y. conducted the experiments and performed the statistical analysis. All authors have read and agreed to the published version of the manuscript.

Funding

This work was partially supported by the National Science and Technology Council, Taiwan, under Grant No. MOST 111-2221-E-197-020-MY3.

Institutional Review Board Statement

Not applicable. This study did not involve humans or animals.

Informed Consent Statement

Not applicable. This study did not involve humans.

Data Availability Statement

This study did not report any data.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Steffen, R.; Preißinger, J.; Schöllermann, T.; Müller, A.; Schnabel, I. Near field communication (NFC) in an automotive environment. In Proceedings of the IEEE International Workshop on Near Field Communication, Monaco, 20 April 2010; pp. 15–20.

2. Xia, K.; Wang, H.; Wang, N.; Yu, W.; Zhou, T. Design of automobile intelligence control platform based on bluetooth low energy. In Proceedings of the IEEE Region 10 Conference, Singapore, 22–25 November 2016; pp. 2801–2805.

3. Chen, Y.-Y.; Hsia, C.-H.; Chen, P.-H. Contactless multispectral palm-vein recognition system with lightweight convolutional neural network. IEEE Access 2021, 9, 149796–149806.

4. Chen, Y.-Y.; Jhong, S.-Y.; Hsia, C.-H.; Hua, K.-L. Explainable AI: A multispectral palm vein identification system with new augmentation features. ACM Trans. Multimed. Comput. Commun. Appl. 2021, 17, 1–21.

5. Hsia, C.-H. Improved finger-vein pattern method using wavelet-based for real-time personal identification system. J. Imaging Sci. Technol. 2018, 26, 30402-1–30402-8.

6. Hsia, C.-H. New verification strategy for finger-vein recognition system. IEEE Sens. J. 2018, 18, 790–797.

7. Menotti, D.; Chiachia, G.; Pinto, A.; Schwartz, W.R.; Pedrini, H.; Falcão, A.X.; Rocha, A. Deep representations for iris, face, and fingerprint spoofing detection. IEEE Trans. Inf. Forensics Secur. 2015, 10, 864–879.

8. Rosdi, B.A.; Shing, C.W.; Suandi, S.A. Finger-vein recognition using local line binary pattern. Sensors 2011, 11, 11357–11371.

9. Piciucco, E.; Maiorana, E.; Campisi, P. Palm vein recognition using a high dynamic range approach. IET Biom. 2018, 7, 439–446.

10. Kang, W.; Wu, Q. Contactless palm vein recognition using a mutual foreground-based local binary pattern. IEEE Trans. Inf. Forensics Secur. 2014, 9, 1974–1985.

11. Miura, N.; Nagasaka, A.; Miyatake, T. Feature extraction of finger-vein patterns based on repeated line tracking and its application to personal identification. Mach. Vis. Appl. 2004, 15, 194–203.

12. Liu, Z.; Song, S. An embedded real-time finger-vein recognition system for mobile devices. IEEE Trans. Consum. Electron. 2012, 58, 522–527.

13. Yang, L.; Yang, G.; Xi, X.; Meng, X.; Zhang, C.; Yin, Y. Tri-branch vein structure assisted finger-vein recognition. IEEE Access 2017, 5, 21020–21028.

14. Wulandari, M.; Basari; Gunawan, D. On the performance of pretrained CNN aimed at palm vein recognition application. In Proceedings of the IEEE International Conference on Information Technology and Electrical Engineering, Pattaya, Thailand, 10–11 October 2019; pp. 1–6.

15. Canny, J. A computational approach to edge detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, PAMI-8, 679–698.

16. Alhussein, M.; Aurangzeb, K.; Haider, S.I. An unsupervised retinal vessel segmentation using hessian and intensity based approach. IEEE Access 2020, 8, 165056–165070.

17. Liu, Y.-F.; Guo, J.-M.; Lai, B.-S.; Lee, J.-D. High efficient contrast enhancement using parametric approximation. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013; pp. 2444–2448.

18. Asaari, M.S.M.; Suandi, S.A.; Rosdi, B.A. Fusion of band limited phase only correlation and width centroid contour distance for finger based biometrics. Expert Syst. Appl. 2014, 41, 3367–3382.

19. Yin, Y.; Liu, L.; Sun, X. SDUMLA-HMT: A multimodal biometric dataset. Lect. Notes Comput. Sci. 2011, 7098, 2444–2448.

20. El-Kenawy, E.-S.M.; Mirjalili, S.; Abdelhamid, A.A.; Ibrahim, A.; Khodadadi, N.; Eid, M.M. Meta-heuristic optimization and keystroke dynamics for authentication of smartphone users. Mathematics 2022, 10, 2912.

21. El-Kenawy, E.-S.M.; Albalawi, F.; Ward, S.A.; Ghoneim, S.S.M.; Eid, M.M.; Abdelhamid, A.A.; Bailek, N.; Ibrahim, A. Feature selection and classification of transformer faults based on novel meta-heuristic algorithm. Mathematics 2022, 10, 3144.

22. Hsia, C.-H.; Guo, J.-M.; Chiang, J.-S. Improved low-complexity algorithm for 2-D integer lifting-based discrete wavelet transform using symmetric mask-based scheme. IEEE Trans. Circuits Syst. Video Technol. 2009, 19, 1201–1208.

23. Kono, M.; Ueki, H.; Umemura, S. Near-infrared finger-vein patterns for personal identification. Appl. Opt. 2002, 41, 7429–7436.

24. Yu, C.; Qin, H.; Zhang, L.; Cui, Y. Finger-vein image recognition combining modified hausdorff distance with minutiae feature matching. J. Biomed. Sci. Eng. 2009, 2, 261–272.

25. Chen, L.; Zheng, H. Personal identification by finger-vein image based on tri value template fuzzy matching. WSEAS Trans. Comput. 2009, 8, 1165–1174.

26. Wang, D.; Li, J.; Memik, G. User identification based on finger-vein patterns for consumer electronics devices. IEEE Trans. Consum. Electron. 2010, 56, 799–804.

27. Mulyono, D.; Hong, S.-J. A study of finger-vein biometric for personal identification. In Proceedings of the IEEE International Symposium on Biometrics and Security Technologies, Islamabad, Pakistan, 23–24 April 2008; pp. 1–8.

28. Qin, H.; Qin, L.; Yu, C. Region growth-based feature extraction method for finger-vein recognition. Opt. Eng. 2011, 50, 57281–57288.

29. Peng, J.; Wang, N.; El-Latif, A.A.A.; Li, Q.; Niu, X. Finger-vein verification using Gabor filter and SIFT feature matching. In Proceedings of the IEEE International Conference on Intelligent Information Hiding and Multimedia Signal Processing, Piraeus-Athens, Greece, 18–20 July 2012; pp. 45–48.

30. Hong, H.; Lee, M.; Park, K. Convolutional neural network-based finger-vein recognition using NIR image sensors. Sensors 2017, 17, 1297.

31. Tao, Z.; Wang, H.; Hu, Y.; Han, Y.; Lin, S.; Liu, Y. DGLFV: Deep generalized label algorithm for finger-vein recognition. IEEE Access 2021, 9, 78594–78606.

32. Hsia, C.-H.; Liu, C.-H. New hierarchical finger-vein feature extraction method for iVehicles. IEEE Sens. J. 2022, 22, 13612–13621.

33. Shen, J.; Liu, N.; Xu, C.; Sun, H.; Xiao, Y.; Li, D.; Zhang, Y. Finger-vein recognition algorithm based on lightweight deep convolutional neural network. IEEE Trans. Instrum. Meas. 2022, 71, 5000413.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).