Abstract

To achieve successful investments, in addition to financial expertise and knowledge of market information, a further critical factor is an individual’s personality. Decisive people tend to be able to quickly judge when to invest, while calm people can analyze the current situation more carefully and make appropriate decisions. Therefore, in this study, we developed a multimodal personality-recognition system to understand investors’ personality traits. The system analyzes the personality traits of investors when they share their investment experiences and plans, allowing them to understand their own personality traits before investing. To perform system functions, we collected digital human behavior data through video-recording devices and extracted human behavior features using video, speech, and text data. We then used data fusion to fuse human behavior features from heterogeneous data to address the problem of learning only one-sided information from a single modality. Through several experiments, we demonstrated that multimodal (i.e., three different signal inputs) personality trait analysis is more accurate than unimodal models. We also used statistical methods and questionnaires to evaluate the correlation between the investor’s personality traits and risk tolerance. It was found that investors with higher openness, extraversion, and lower neuroticism personality traits took higher risks, which is similar to research findings in the field of behavioral finance. Experimental results show that, in a case study, our multimodal personality prediction system exhibits high performance with highly accurate prediction scores in various metrics.

1. Introduction

Automated analysis of human affective behavior is attracting increasing attention from researchers in psychology, computer science, linguistics, neuroscience, and other related disciplines. With the help of advanced artificial intelligence techniques, multimodal sensing systems using pattern recognition methods for human behavior analysis can operate the fusion of measurements from different sensor modalities [1]. Most of the research work focuses on the application of emotion recognition, such as [2]. This advance implies that the application of human–computer interaction (HCI) relies on knowledge about human emotional experience and about the relationship between emotional experience and emotional expression [3].

More recently, several multimodal sensing systems were directed at developing applications for personality trait recognition, such as for job interviews [4,5], work stress tests [6], public speaking [7,8], consumer behavior [9], and verbal rating systems [10]. These applications achieved good results in various fields in terms of analyzing personality traits using individual behavioral characteristics.

Another application area that could benefit from an automatic understanding of an individual’s personality traits is the wealth management industry. The wealth management industry faces a shift in the role of financial advisors. At the same time, automation technology has spawned tools to make this transition more seamless. Using personality trait prediction, financial advisors can provide quality service to customers while using reasonable resources. An increasing amount of research suggests that emotional changes influence the investment process [11,12], and that deeper personality traits also play an important role in the investment decision-making process [13]. Interestingly, most of the past research in finance used questionnaires to understand people’s personality traits and used statistical methods to discover which specific personality traits are associated with, and influence, an individual’s investment behavior [14,15,16,17,18]. However, few studies have directly analyzed changes in customer behavior in the financial sector.

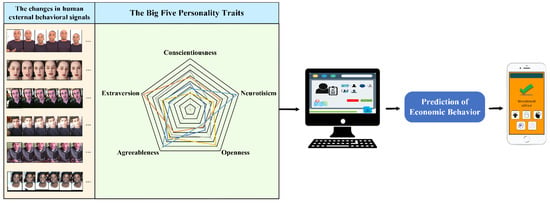

The personality characteristics of bank customers can be analyzed through personality computing. Therefore, in addition to the traditional use of questionnaires provided by banks to investigate customer risk tolerance, we can also use the developed system to collect additional information about customer interaction behavior, financial knowledge, consumption habits, and personality. An example of an automatic personality-recognition system workflow for prediction of a bank client’s economic behavior is illustrated in Figure 1. As shown in Figure 1, by collecting the features of human external behavior signals, it is possible to quantify the performance of human behavior, analyze the personality characteristics of individuals, help financial advisors understand the real risk tolerance of customers, and then provide appropriate investment planning and direction. Therefore, in this study, we propose a personalized multimodal affective sensing system to understand the investment attributes of financial customers in wealth management. The goals of this study are listed as follows:

Figure 1.

An example automatic personality-recognition system workflow for prediction of bank client economic behavior.

- To digitize the behavioral characteristics of individuals and explore how to develop appropriate investment plans according to the analyzed clients’ personalities.

- To assist financial advisors in helping clients with wealth management, we developed a multimodal personality-recognition system to collect subtle variations in client-generated conversational data. By analyzing the information on customer interaction behavior, we can help them adjust their investment plans in a timely manner.

In this study, we, therefore, worked with financial advisors to design questions related to financial management in customers’ daily life, such as daily consumption, work status, household expenses, personal financial management experience, and knowledge of financial products, in order to understand the customer’s recent living situation and financial risk tolerance. In addition, in the course of the customer conversations, we used a camera to record customer behavioral changes as they described their life situation and discussed issues. We then used the multi-modal personality-recognition system to analyze the personality characteristics of customers during the interaction process, and to analyze the investment attributes of customer financial management.

The remainder of the paper is organized as follows. In Section 2, we summarize the recent work related to our research. In Section 3, we describe our proposed system model and system framework, and report our approach to extracting behavioral features. The effectiveness of our proposed system was tested by customizing the dataset, as described in Section 4. In Section 5, we test and evaluate the system’s effectiveness with the behavioral data of financial advisors and clients. In Section 6, the experimental procedure and results are discussed in detail; future work is also discussed, and we conclude the paper and present our views on this emerging area of research.

2. Related Work

Personality is a characteristic by which we identify differences between people and which also has a significant impact on a person’s behavior and thinking. In the field of psychology, there are various personality modeling methods, such as the Big Five Model [19], the Myers–Briggs Type Indicator (MBTI) [20], Cattell’s 16 Personality Factor Model [21], the PEN Model [22] and the HEXACO Model [23]. It is worth mentioning that the HEXACO model is similar to the Big Five model, the main difference being the added honesty–humility dimension of the HEXACO model. Table 1 shows a list of common personality trait models. Within each personality model, different human personalities are distinguished according to psychological theories, the most popular measure in automatic personality detection being the use of the Big Five model [24]. Traditionally, personality traits were mostly determined by questionnaire analysis and self-assessment to determine an individual’s personality score: these can effectively and directly present an individual’s true personality; however, there are still limitations that make it difficult to explore more complex behavioral patterns in depth [25,26]. As a result, research on human behavioral signals for analyzing personality is increasing, most notably due to the growing availability of high-dimensional and fine-grained data on human behaviors such as social interactions and daily life, which allows machine learning models to incorporate personality psychology for personality assessment [27,28]. In previous studies, personality detection techniques were increasingly used to analyze human personality differences. We observed that personality changes can be analyzed from human behavioral signals and applied to health, work, school, mental disorders, human resources employment, management and customer behavior, and many other applications [27].
In healthcare, wearable sensors were used to collect data on the physical activity, face-to-face interaction, and physical proximity of post-anesthesia care unit (PACU) staff to identify personality traits and measure team performance during interviews [29]. Suen [30] developed an end-to-end AI interview system to extract subjects’ facial expression features for automatic personality recognition through asynchronous video interviews. The experimental results showed that although the machine learning model was trained without large-scale data, the automatic personality-recognition (APR) system still maintained 90.9–97.4% accuracy. Hsiao [31] argues that in public speaking, leadership and emotional appeal can better reflect an individual’s communicative behavior. The authors collected speeches by former principals and extracted behavioral features using k-means bag-of-words and Fisher vector encoding; their results showed that word usage (lexicality) was important in motivational and emotionally infectious speeches. In other applications, Balakrishnan [32] detected the psychological characteristics (personalities, sentiment, and emotion) of Twitter users through automatic cyberbullying detection mechanisms, e.g., random forest, naive Bayes, and other models, and suggested that extraversion, agreeableness, and neuroticism were more relevant to cyberbullying perpetration.

Table 1.

The major personality traits models.

In Figure 1, we organize changes in human behavior exemplified by changes in facial expressions. These photos are from the first impression dataset provided by ChaLearn Look at People (LAP). Various studies showed that personality differences can be identified from facial recognition [33,34]. Xu [35] proposed a new method for predicting the personality characteristics of university students using face images, which was performed effectively in the Soft Threshold Based Neural Network for Personality Prediction deep neural network, and which showed an accuracy of over 90% for neuroticism and extraversion on classification tasks. Sudiana [36] combined the Active Appearance Model (AAM) and Convolutional Neural Network (CNN) algorithms in the FFHQ dataset for a personality classification task on facial features, and the experimental results showed an average accuracy of 87.9%. As an expression of human behavior signals, audio data also have many excellent applications for personality prediction. Common feature extraction methods are the use of low-level descriptors or prosodic features as input signals for automatic personality trait recognition [37,38]. These methods suggested that audio information has a significant impact on the ability to recognize personality. Some studies used information from social media to identify user personalities, while others used common methods for detecting personalities in texts, such as LIWC [39] to extract various psychologically related words as features to predict the personality of the text authors [40,41]. There are also document-level detection and classification applications: Sun [42] proposed a 2CLSTM model (CNN + BiLSTM) and extracted latent sentence groups (LSG) from text as input features, in two different types of datasets (long text and short texts), achieving good results. 
At the same time, we found that most previous studies focused on analyzing the influence and association of behavioral signals and emotions, so there are numerous open-source databases available to researchers; however, there are relatively few publicly available datasets on personality and human behavioral signals, especially multimedia datasets. For this reason, many researchers created databases for their research. We compiled a list (Table 2) of recent studies on the analysis of personality traits through human behavioral signals.

Table 2.

Recent studies on the analysis of personality traits through human behavioral signals.

The relationship between the Big Five personality traits and financial behavior is well documented in behavioral finance research. For investment risk management, Dhiman [48] believes that risk is an important factor to consider when making investment decisions; she used a multivariate regression analysis model to determine from investors that people with agreeableness, extroversion, and openness to experience are related to risk tolerance. Aren [49] analyzed the effects of the Big Five personality traits and emotions on investor risk aversion, investment intentions, and investment choices. They validated them with statistical methods such as ANOVA and t-tests. Finally, they concluded that neuroticism and openness, as well as fear and sadness, were predictors of risk aversion in the personality traits. Aumeboosuke [50] concluded that positive thinking can contribute to risk-averse behaviors and collected 100 subjects for a Big Five personality trait measure, which was statistically analyzed using Partial Least Squares (PLS) structural equation modeling. The results demonstrated that personality traits influenced an individual’s risk-averse attitudes; it was found that affinity and emotional stability had negative effects on risk-averse ability, while conscientiousness and openness had significant positive effects. Rai [51] collected quantitative scores from 599 investors through questionnaires and analyzed the influence of five personality traits on risk tolerance through correlation and regression tests. The results confirmed that agreeableness, conscientiousness, and openness were significantly correlated with risk tolerance. Chhabra [52] used statistical methods such as correlation analysis and utilized the Kruskal–Wallis H Test to verify that impulsive personality traits had some influence on investors when they made investment decisions. 
Vanwalleghem [53] used the Proactive Personality Inventory to measure the proactivity of the subjects and to construct a regression model for analysis. The experimental results showed that investors maintained proactive traits so they could continue their investment behavior, while the opposite affected their willingness to invest. Chitra [54] used Systematic Random Sampling (SRS) to analyze the personality traits and investment patterns of 97 investors and to confirm the association between the majority of investors who had extraversion and emotional stability traits and investment behavior. In addition, other financial behavior researchers based their work on the Big Five personality traits; for example, Cabrera [55] proposed an adaptive intelligent system (AAS) for stock investment, designing five modules, named Investment Profile Manager, Market Data Manager, Market Analyzer, Portfolio Analyzer, and Decision Engine. The system was adjusted for five personality traits and investment market fluctuations. The results of the experiments on the official data from the New York Stock Exchange showed that Extraversion and Agreeableness accumulated the most wealth in the system. Using an ANOVA/Kruskal–Wallis model, Chen [56] analyzed the influence of investors’ personalities on short-term and long-term trading performance, analyzing the personalities through machine learning models, such as logistic, multilevel perceptron, and random forest. The experimental results showed that people with agreeableness, extraversion, and openness were able to achieve better long-term returns than people with highly neurotic traits. Thomas [57] proposed that features of handwriting could be mapped to five personality trait dimensions to identify financial behavior. 
Accordingly, the authors collected texts written by ten subjects using a CNN model and logistic regression, and selected seven features, such as spacing and ascending lines, for the classification task, achieving an accuracy of 63.97%.

To sum up, there are a number of successful examples, such as education and job interviews, where personality trait analysis using human behavioral signals was applied. Although analyzing investment behavior based on five personality traits is a common method in investment applications, few people use behavioral signals to analyze investment behavior. Therefore, in this work, we develop a multimodal system for analyzing personality traits via behavioral signals. The system will help human financial advisors provide appropriate financial planning advice based on the deep personalities detected in people’s investments.

3. System Model

3.1. System Framework

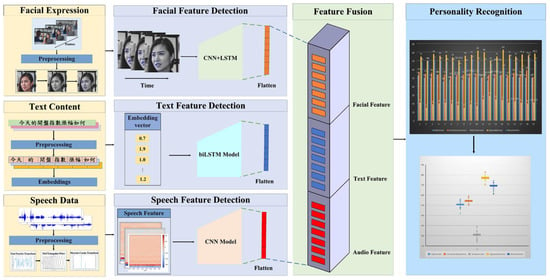

In this study, we used cameras to record clients’ thoughts and reactions as they talked about their investments and wealth management. In order to digitize the behavioral signals of customers, their facial expressions, speech tones, and words used while chatting were collected during the interview, as the basis for judging the behavior of financial customers. In addition, we combined multimodal affective sensing methods, natural language processing techniques, and deep learning models to deeply analyze the external behavior expression process of financial customers. Further, through the analysis of multimodal personality traits, the system is able to predict the scores of five personality trait indicators by integrating collected behavioral information, thereby helping financial advisors to understand customers more deeply, so as to provide customized financial services. The above-mentioned process and system framework are illustrated in Figure 2.

Figure 2.

System Framework.

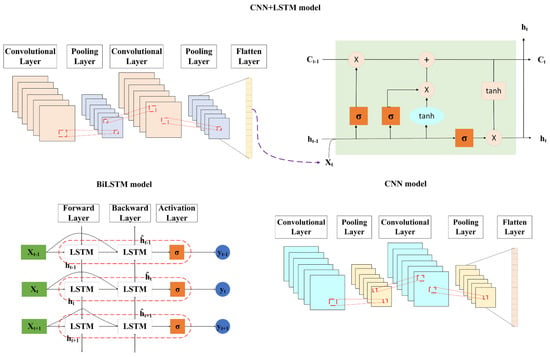

The CNN and LSTM models are well known for feature extraction in deep learning; we used the CNN model to extract speech features and facial expressions. For facial expressions, we extracted spatial features using CNN and input them to LSTM to extract temporal features. For speech features, we extracted MFCC, ZCR, and spectral centroid features before learning by the CNN model. For text, we used the BiLSTM model, which is trained on each sequence in both the forward and backward time directions. The model architectures are shown in Figure 3.

Figure 3.

Illustration of CNN + LSTM, BiLSTM, and CNN model architectures.

3.2. Data Collection from Financial Clients

In wealth management, a very important task is to conduct “know your client” surveys (e.g., using the Attitudes to Risk Questionnaire, ATRQ), in which financial advisors typically interact face-to-face with clients using risk attribute questionnaires. The ATRQ is used to determine the risk tolerance of financial clients so that appropriate investment recommendations can be made. In this study, we designed a dialogue process with financial advisors and recruited 32 participants for an interactive dialogue in which participants shared their investment and financial knowledge, investment behavior motivation, investment experience, and investment–risk solution.

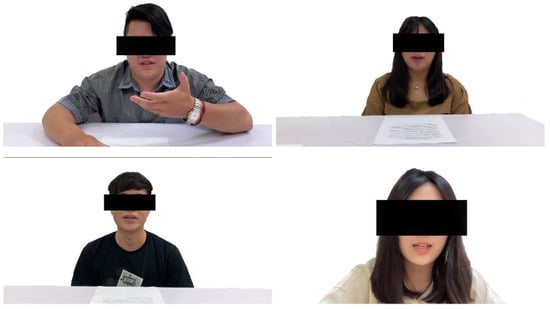

Before talking to each customer, we asked each individual to complete the Big Five personality trait questionnaire, and obtained each customer’s Big Five personality trait score. Using a video camera, we then recorded a total of 32 customers, including 22 males and 10 females, aged 20–69. Each participant spoke in Chinese for almost 10 min, during which time cameras were used to capture clear images of the client’s body. During the training of the model, we split the collected data into an 80% training set and a 20% test set, and then held out 20% of the training set as the validation set. Figure 4 shows the sample photos we collected during the experiment.

Figure 4.

Sample video photos collected during the experiment.
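The data partition described in this section can be sketched as follows. This is a minimal illustration, assuming a random split over individual samples; the text does not state whether the split was made per clip or per participant, and the function name, the seed, and the toy sample count are hypothetical.

```python
import random

def split_indices(n_samples, test_frac=0.2, val_frac=0.2, seed=0):
    """Return (train, val, test) index lists.

    test_frac is taken from all samples; val_frac is then taken from
    the remaining training portion, as described in the text.
    """
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # seed is an illustrative assumption

    n_test = int(n_samples * test_frac)
    test = indices[:n_test]
    train_full = indices[n_test:]

    n_val = int(len(train_full) * val_frac)
    val = train_full[:n_val]
    train = train_full[n_val:]
    return train, val, test

# Toy example with 100 samples (not the size of the study's dataset).
train_idx, val_idx, test_idx = split_indices(100)
print(len(train_idx), len(val_idx), len(test_idx))  # 64 16 20
```

Under this reading, the effective proportions are 64% training, 16% validation, and 20% test.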

3.3. Personality Feature Extraction

We utilized the developed multimodal affective sensing system to detect the user’s facial expressions, the fluctuations in the tone of speech, and the text data appearing in the chat throughout the interaction with the user, as the basis for judging the user’s behavior. After collecting the customer’s video data, we manually edited each customer’s speech content (for each conversation) to generate 3452 video clips, including images, speech, and transcribed texts. Finally, we performed behavioral analysis on all face, speech, and text data.

3.3.1. Facial Expression Analysis and Feature Extraction

The video was preprocessed using OpenCV, a widely used video-data-processing tool. We first sampled the video, recorded at 60 fps, at intervals of 1000 ms (i.e., one image every 60 frames), and then took three image frames for each conversation. To emphasize the five facial features in each image, we converted the image data to grayscale and set the image size to 128 × 128; we then used the Facial Landmark method to detect the location of the facial features and crop the image. This method was shown to outperform other neural networks when extracting face-related features from images and aligning them [58,59], which made it a good choice for the subsequent training.
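One plausible reading of the frame-sampling step is that one image is taken per 1000 ms of 60 fps video and that three such images are kept per conversation. The sketch below computes the corresponding frame indices under that assumption; the function name and the choice of keeping the first three sampled frames are hypothetical, since the text does not say which three frames were retained.

```python
def sample_frame_indices(n_frames, fps=60, interval_ms=1000, max_frames=3):
    """Indices of frames taken every `interval_ms`, capped at `max_frames`."""
    step = max(1, round(fps * interval_ms / 1000))  # frames between samples
    return list(range(0, n_frames, step))[:max_frames]

# A hypothetical 5-second clip recorded at 60 fps has 300 frames.
print(sample_frame_indices(300))  # [0, 60, 120]
```

The selected frames would then be converted to grayscale, resized to 128 × 128, and cropped around the detected landmarks as described above.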

3.3.2. Speech Analysis and Feature Extraction

Time-domain features, such as zero-crossing rate, maximum amplitude, or RMS energy, can be obtained directly from the original audio waveform, while frequency-domain features, such as spectral centroid and spectral flux, are typically obtained by transforming the signal from the time domain to the frequency domain using a Fourier transform. To extract personality-related features from the audio files, we used Librosa. We extracted MFCC features, a representation that simulates the auditory response of the human ear by paying attention only to sounds of certain frequencies [60,61], and added zero-crossing rate (ZCR) and spectral centroid as input features to the model. Speech features were extracted by setting a sliding window of 50 ms.
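To make the time-domain features concrete, the following sketch frames a waveform into 50 ms windows and computes the zero-crossing rate and RMS energy of each window in plain Python. It is illustrative only: the study used Librosa, the hop length is not stated in the text (non-overlapping windows are assumed here), and the toy signal and function names are hypothetical.

```python
import math

def frame_signal(samples, sample_rate, window_ms=50, hop_ms=None):
    """Split samples into windows of window_ms; hop defaults to the window length."""
    win = int(sample_rate * window_ms / 1000)
    hop = win if hop_ms is None else int(sample_rate * hop_ms / 1000)
    return [samples[i:i + win] for i in range(0, len(samples) - win + 1, hop)]

def zero_crossing_rate(frame):
    """Fraction of consecutive sample pairs whose signs differ."""
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    return crossings / (len(frame) - 1)

def rms_energy(frame):
    """Root-mean-square amplitude of the frame."""
    return math.sqrt(sum(x * x for x in frame) / len(frame))

# Toy signal: 0.1 s of an alternating +1/-1 sequence at 8 kHz (illustrative only).
sr = 8000
signal = [1.0 if i % 2 == 0 else -1.0 for i in range(800)]
frames = frame_signal(signal, sr)
features = [(zero_crossing_rate(f), rms_energy(f)) for f in frames]
print(len(frames), features[0])  # 2 (1.0, 1.0)
```

Librosa provides comparable per-frame features, for example `librosa.feature.zero_crossing_rate`, `librosa.feature.spectral_centroid`, and `librosa.feature.mfcc`.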

3.3.3. Text Analysis and Feature Extraction

In the application of personality trait analysis, we found that many researchers used Linguistic Inquiry and Word Count (LIWC) [39], Medical Research Council (MRC), or N-grams as a way of extracting textual features. In this work, we used Jieba for preprocessing of text data, such as word segmentation; Jieba is a widely used word segmentation tool in Chinese NLP development and enables the computer to effectively recognize the character features in the dialogues. Considering that different personalities use different words, we did not use any stop-word list to remove auxiliary words, but left each paragraph completely intact for the model to learn the semantic meaning expressed by the clients in each conversation. Finally, we set the maximum sentence length to 150 and mapped each word into the vector space using word embedding; this provided us with 200-dimensional feature vectors as the input to our neural network. In this study, word embedding was performed by tencent-ailab-embedding-zh, which uses the Directional Skip-Gram (DSG) algorithm [62] as a framework; in addition to word co-occurrence relationships, DSG considers the relative positions of words, which can increase semantic accuracy.
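The resulting input shape (150 positions, each a 200-dimensional vector) can be illustrated with a short sketch. It assumes the text has already been segmented into tokens, that sequences are truncated or zero-padded at the end, and that out-of-vocabulary tokens map to zero vectors; none of these choices is stated in the text, and the toy embedding table is hypothetical.

```python
def encode_tokens(tokens, embeddings, max_len=150, dim=200):
    """Map tokens to vectors, then truncate or zero-pad to max_len rows."""
    zero = [0.0] * dim
    rows = [list(embeddings.get(tok, zero)) for tok in tokens[:max_len]]
    rows += [list(zero) for _ in range(max_len - len(rows))]
    return rows

# Hypothetical toy embedding table; real vectors would come from a pretrained model.
# The tokens mean "investment" and "risk"; the third token is out of vocabulary.
toy_embeddings = {"投资": [0.1] * 200, "风险": [0.2] * 200}
matrix = encode_tokens(["投资", "风险", "未知词"], toy_embeddings)
print(len(matrix), len(matrix[0]))  # 150 200
```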

3.3.4. Feature Fusion

In financial applications, it is difficult to understand a person’s entire personality from unilateral behavioral data, which makes it impossible for us to fully understand a customer’s potential personality and usual consumption behavior. We, therefore, conducted a multi-faceted personality analysis of our customers through images, speech, and text. In general, there are three methods for processing features extracted from different modalities: early fusion (feature-level fusion) [63], late fusion (decision-level fusion) [64], and hybrid fusion [65]. In this study, we used feature fusion for system implementation. The advantage of using feature fusion is that associations between various multimodal features provide a better fusion approach for classification or prediction tasks.
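The difference between the first two fusion strategies can be sketched in a few lines. This is a simplified illustration with hypothetical function names and toy values: early fusion concatenates the modality features before any prediction is made, whereas late fusion combines predictions that each modality has already produced (an equal-weight average is assumed here).

```python
def early_fusion(face_feat, speech_feat, text_feat):
    """Feature-level fusion: concatenate modality features into one vector."""
    return list(face_feat) + list(speech_feat) + list(text_feat)

def late_fusion(face_pred, speech_pred, text_pred, weights=(1/3, 1/3, 1/3)):
    """Decision-level fusion: weighted average of per-modality predictions."""
    preds = (face_pred, speech_pred, text_pred)
    return sum(w * p for w, p in zip(weights, preds))

# Toy feature vectors and toy per-modality trait scores (illustrative only).
fused = early_fusion([0.2, 0.5], [0.1], [0.9, 0.3, 0.4])
score = late_fusion(3.0, 4.0, 5.0)
print(fused)  # [0.2, 0.5, 0.1, 0.9, 0.3, 0.4]
```

With early fusion, a single downstream model sees all modalities at once and can learn associations between them, which is the advantage noted above.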

4. Experiments and Results

In this work, we designed two sets of processes to conduct experiments to build a multimodal system to analyze customers’ financial risk tolerance. In order to understand the various personality characteristics of customers, we quantified human behavior data; the five personality trait scores obtained were used as labels to train the deep learning models, and we adjusted the parameters to achieve the best performance for each model. In the first stage, we set the same parameters for each model, such as the number of neurons, layers, learning rate, and batch size. We then used the Early Stopping method: when the loss on the validation data stopped improving and the accuracy rate could no longer be improved, we stopped the training to reduce overfitting and avoid wasting computing resources. Finally, we used $R^2$, MAE, MSE, and other regression scores to evaluate the performance of the system and to verify its generalization, as shown in the following equations:

$$R^2 = 1 - \frac{SS_{res}}{SS_{tot}} \tag{3}$$

$$\mathrm{MAE} = \frac{1}{N}\sum_{i=1}^{N}\left|y_i - \hat{y}_i\right| \tag{4}$$

$$\mathrm{MSE} = \frac{1}{N}\sum_{i=1}^{N}\left(y_i - \hat{y}_i\right)^2 \tag{5}$$

In Formula (3), $SS_{res}$ denotes the residual sum of squares, and $SS_{tot}$ denotes the total sum of squares. In Formulae (4) and (5), $N$ denotes the number of samples, $y_i$ is the true personality trait score, and $\hat{y}_i$ is the prediction score.
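As a worked example of the regression scores, the sketch below computes the coefficient of determination, the mean absolute error, and the mean squared error for a small set of toy values. It assumes that these are the scores intended here (MAE and MSE are named in Section 4.1.2); the function name and the numbers are hypothetical.

```python
def regression_scores(y_true, y_pred):
    """Return (R^2, MAE, MSE) for paired true and predicted scores."""
    n = len(y_true)
    mean_true = sum(y_true) / n
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))  # residual sum of squares
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)          # total sum of squares
    r2 = 1.0 - ss_res / ss_tot
    mae = sum(abs(t - p) for t, p in zip(y_true, y_pred)) / n
    mse = ss_res / n
    return r2, mae, mse

# Toy scores (illustrative only): one of three predictions is off by 1.
r2, mae, mse = regression_scores([1.0, 2.0, 3.0], [1.0, 2.0, 4.0])
print(r2)  # 0.5
```

Here the residual sum of squares is 1 and the total sum of squares is 2, so the coefficient of determination is 0.5, while MAE and MSE both equal 1/3.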

4.1. Deep Feature Learning from a Single Modality of an Individual for Personality Prediction

4.1.1. Personality Trait Analysis Based on Facial Expression Features

In this work, CNN + LSTM were used to learn the properties of the five facial features. We built four pairs of convolutional and pooling layers, and one LSTM layer followed by two fully connected layers. The first convolutional layer used 64 filters with a kernel size of 3 × 3, padding to keep the output size equal to the input size, and ReLU as the activation function. The following pooling layer used max pooling with a pooling window size of 2 × 2. Each subsequent convolutional and pooling pair followed the same settings, except that the number of filters doubled with each convolutional layer and was set to 128, 256, and 512, in that order.

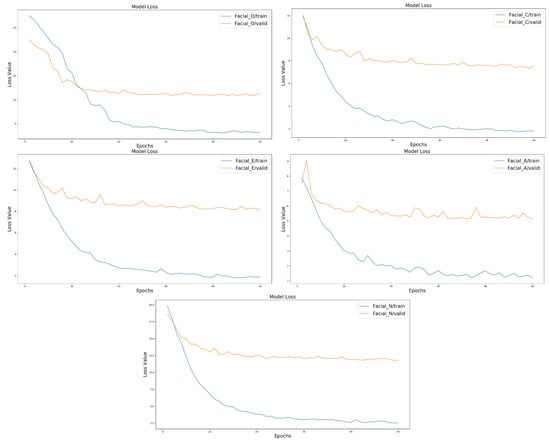

In order to turn the three-dimensional tensors into a one-dimensional tensor as input to the fully connected layer, we inserted a flatten layer before the final output score, and the first fully connected layer had 128 neurons. We set the dropout to 0.3 in training to limit the risk of overfitting during the learning process, and we set the initial learning rate to 0.01 and adjusted it dynamically during training to improve the model’s learning capabilities. In Figure 5, we present the loss results for predicting the personality trait scores based on the CNN + LSTM model. As illustrated in this figure, the loss values of the five personality traits converged effectively during the model learning process. The results of the model evaluation based on facial-expression feature learning are listed in Table 3.

Figure 5.

Comparison of loss values for facial expression features using CNN + LSTM model.

Table 3.

Evaluation results of the CNN + LSTM model based on facial features.
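The effect of the four convolution and pooling pairs on the feature-map size can be traced with simple arithmetic. The sketch below assumes a 128 × 128 grayscale input (Section 3.3.1), stride-1 convolutions whose padding preserves height and width, and 2 × 2 max pooling with stride 2; the strides are assumptions, and how the LSTM layer consumes these maps is not specified in the text.

```python
def conv_pool_shapes(height, width, filters, pool=2):
    """Trace (H, W, C) after each size-preserving conv + pool x pool max-pool pair."""
    shapes = []
    for n_filters in filters:
        # The padded convolution keeps H and W unchanged;
        # the pooling layer then divides each by `pool`.
        height, width = height // pool, width // pool
        shapes.append((height, width, n_filters))
    return shapes

shapes = conv_pool_shapes(128, 128, filters=[64, 128, 256, 512])
flat = shapes[-1][0] * shapes[-1][1] * shapes[-1][2]
print(shapes)  # [(64, 64, 64), (32, 32, 128), (16, 16, 256), (8, 8, 512)]
print(flat)    # 32768
```

Under these assumptions, flattening the output of the last pooling layer for one frame yields a 32,768-dimensional vector.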

4.1.2. Personality Trait Analysis Based on Speech Features

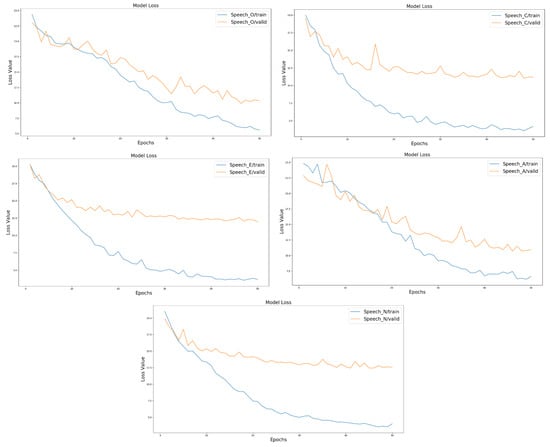

For the speech feature, we used CNN as the model for acoustic feature extraction. In developing the CNN architecture, we constructed four pairs of convolutional and pooling layers, following the structure of the above feature extraction model, and then used two fully connected layers. In the first convolutional layer, we chose 32 filters with a kernel size of 3 × 3, used padding to keep the output size equal to the input size, and used ReLU as the activation function. The following pooling layer used max pooling with a pooling window size of 2 × 2. We used 64 filters for the second and third convolutional layers and 128 filters for the fourth, with all other parameters the same as in the facial feature model. Again, before the final output score, we added a flatten layer in front of the fully connected layers, with 128 neurons in the first fully connected layer. We set the number of neurons in the last layer to one for the score prediction task and used the linear activation function for the prediction. Table 4 reports the performance of the model, evaluated using MAE, MSE, and other metrics. Figure 6 demonstrates that although the initial loss value is higher, the loss value of the model tends to decrease gradually as the number of epochs increases.

Table 4.

Evaluation results for the CNN model based on speech features.

Figure 6.

Comparison of loss values for speech features using CNN model.

4.1.3. Personality Trait Analysis Based on Text Features

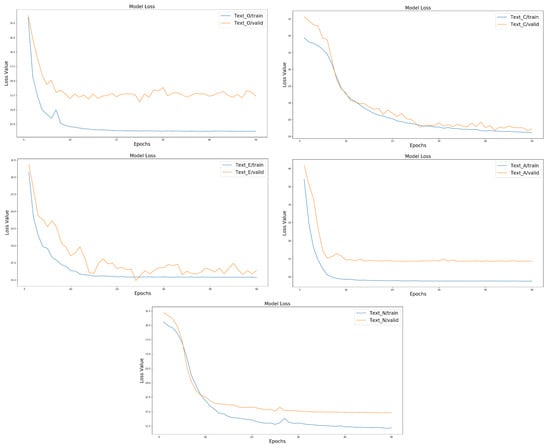

Text analysis is an effective method for collecting personality information. Most personality trait analysis literature in text-feature learning uses RNN and LSTM models as feature extraction approaches. However, over long text sequences, a unidirectional LSTM struggles to convey information from the whole sequence to the current timestep. We therefore used a bidirectional LSTM neural network for text feature extraction. We used 200-dimensional feature vectors as the input shape and five bidirectional LSTM layers with ReLU activation functions in each layer, with 256, 128, 64, 32, and 16 neurons, respectively. We set the number of neurons in the last fully connected layer to one and used the linear activation function to predict the personality trait scores. Table 5 presents the evaluation results. We found that the error values of the model are slightly high, probably because the transcribed words in the dataset are too similar, which limits how effectively the model can learn. Nevertheless, Figure 7, which compares the loss values of text features using the Bidirectional LSTM model, shows that the loss maintains a continuously decreasing trend despite remaining high.

Table 5.

Evaluation results for the Bidirectional LSTM model based on text features.

Figure 7.

Comparison of loss values of text features using Bidirectional LSTM model.
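The size of the five-layer bidirectional stack can be estimated with the standard LSTM parameter count. The sketch below assumes that the forward and backward outputs of each layer are concatenated (so each layer outputs twice its unit count) and that each gate has a single bias vector; both are assumptions, since the text does not state the merge mode or the framework.

```python
def lstm_params(input_dim, units):
    """Weights of one LSTM direction: 4 gates, each with input, recurrent, and bias terms."""
    return 4 * (units * (input_dim + units) + units)

def bilstm_stack(input_dim, units_per_layer):
    """Return (output_width, parameter_count) for each bidirectional layer."""
    summary = []
    for units in units_per_layer:
        params = 2 * lstm_params(input_dim, units)  # forward + backward direction
        input_dim = 2 * units                       # outputs concatenated (assumed)
        summary.append((input_dim, params))
    return summary

summary = bilstm_stack(200, [256, 128, 64, 32, 16])
print([width for width, _ in summary])  # [512, 256, 128, 64, 32]
print(summary[0][1])                    # 935936
```

Under these assumptions, the first layer alone holds roughly 0.94 million weights, which is large relative to a dataset of a few thousand clips.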

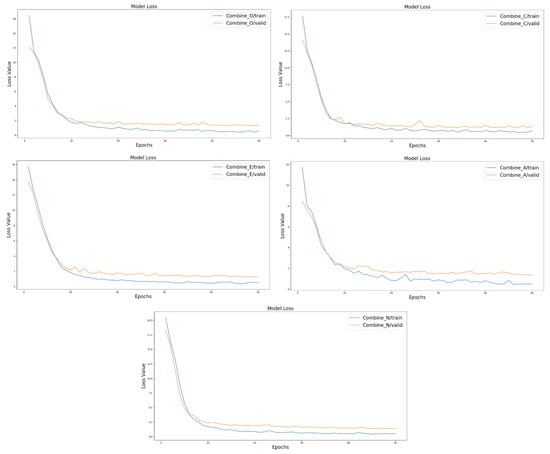

4.2. Personality Trait Analysis Based on Multimodal Feature Fusion

After the first phase of the experiments, we found that deep learning models are effective in predicting the scores of the Big Five personality traits; however, the margin of error in the text and speech experiments was significant. We therefore extracted the features learned by each deep learning model, using a flatten layer to convert the arrays into one-dimensional features. The facial expression features, acoustic features, and text features were then fused and mapped to the Big Five personality traits by nonlinear transformations in the fully connected layer. Table 6 presents the multimodal assessment of the five personality trait scores based on the feature fusion approach. It is noticeable that, for each personality trait, every evaluation metric outperforms the results predicted by a single modality, which supports our belief that different aspects of personality need to be considered in determining personality. From Figure 8, we can observe that the performance of the multimodal approach based on personality trait prediction is significantly better than that of the unimodal approach in terms of loss value.

Table 6.

Evaluation results for the multimodal model based on feature fusion approach.

Figure 8.

Comparison of loss values of the combined three-features using the multimodal model.

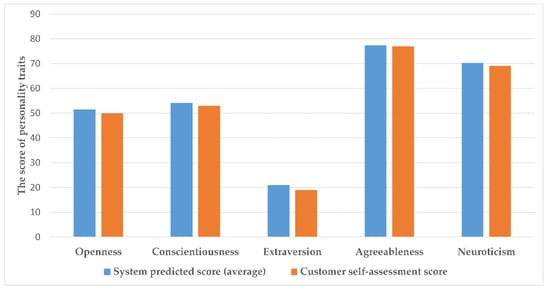

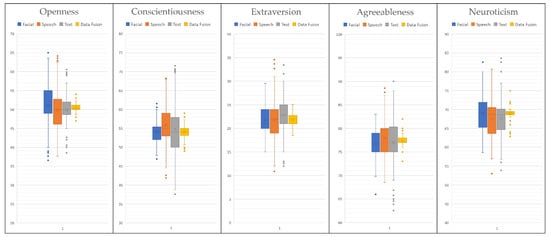
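The fusion step described in this section (flatten each modality, concatenate, then map to five trait scores through a fully connected layer) can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' network: the hidden width of 8, the random untrained weights, the toy feature values, and all function names are hypothetical, and a real system would learn the weights from data.

```python
import random
from typing import List

TRAITS = ["openness", "conscientiousness", "extraversion",
          "agreeableness", "neuroticism"]


def flatten(array) -> List[float]:
    """Flatten a nested list into a one-dimensional feature vector."""
    if isinstance(array, (int, float)):
        return [float(array)]
    flat: List[float] = []
    for item in array:
        flat.extend(flatten(item))
    return flat


def init_layer(rng: random.Random, n_in: int, n_out: int):
    """Random (untrained) weights and zero biases for one dense layer."""
    scale = 1.0 / n_in ** 0.5
    weights = [[rng.uniform(-scale, scale) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out


def dense(x, weights, bias, relu: bool) -> List[float]:
    """Fully connected layer; ReLU supplies the nonlinear transformation."""
    out = []
    for w_row, b in zip(weights, bias):
        z = sum(w * v for w, v in zip(w_row, x)) + b
        out.append(max(0.0, z) if relu else z)
    return out


def fuse_and_predict(face, speech, text, hidden: int = 8, seed: int = 0):
    # Feature-level fusion: flatten each modality, then concatenate.
    fused = flatten(face) + flatten(speech) + flatten(text)
    rng = random.Random(seed)
    w1, b1 = init_layer(rng, len(fused), hidden)
    w2, b2 = init_layer(rng, hidden, len(TRAITS))
    hidden_out = dense(fused, w1, b1, relu=True)      # nonlinear mapping
    scores = dense(hidden_out, w2, b2, relu=False)    # one linear score per trait
    return fused, dict(zip(TRAITS, scores))


# Toy feature maps standing in for the outputs of the three unimodal models.
face_features = [[0.1, 0.2], [0.3, 0.4]]
speech_features = [0.5, 0.6, 0.7]
text_features = [[0.8], [0.9]]

fused_vector, trait_scores = fuse_and_predict(
    face_features, speech_features, text_features)
print(len(fused_vector), trait_scores)
```

Concatenating at the feature level lets the fully connected layer weigh evidence across modalities jointly, rather than averaging three separate predictions after the fact.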

4.3. Correlation Assessment

We also conducted a correlation assessment experiment to analyze the relationship between investor personality traits and risk tolerance in this case study. First, we compared the Big Five personality trait scores predicted by the developed multimodal system with the self-assessed personality trait scores, as shown in Figure 9. The system predictions in Figure 9 were obtained by averaging the scores over dialogues, and they were similar to the self-assessment results. Figure 10 shows the actual prediction results of our personality prediction system for the five customer personality traits. We then analyzed the results of the investor personality traits questionnaire and the risk tolerance questionnaire using the Pearson correlation method. Table 7 shows that openness, extraversion, and neuroticism are correlated with risk tolerance: openness and extraversion positively, and neuroticism negatively. The other two personality traits (i.e., agreeableness and conscientiousness) are not directly reflected in risk tolerance.

Figure 9.

An example of a personality trait assessment (system predicted score vs. customer self-assessment score).

Figure 10.

Sample predicted scores for customer personality traits.

Table 7.

Case study correlation analysis of investor personality traits questionnaire and risk tolerance questionnaire results.
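The two computations used in this section, averaging per-dialogue predictions and Pearson's correlation coefficient, can be sketched as follows. The data values and function names are hypothetical and chosen only for illustration. They are not the study's measurements.

```python
from math import sqrt
from typing import Dict, List, Sequence


def average_dialogue_scores(per_dialogue: List[Dict[str, float]]) -> Dict[str, float]:
    """Average each trait score over all dialogues of one participant."""
    traits = per_dialogue[0].keys()
    return {t: sum(d[t] for d in per_dialogue) / len(per_dialogue) for t in traits}


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson's correlation coefficient between two equal-length samples."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two samples of equal length >= 2")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return cov / (spread_x * spread_y)


# Hypothetical per-dialogue predictions for one participant.
dialogues = [{"openness": 0.6, "neuroticism": 0.4},
             {"openness": 0.8, "neuroticism": 0.2}]
avg = average_dialogue_scores(dialogues)

# Hypothetical questionnaire scores for five participants.
openness = [3.0, 3.5, 4.0, 4.5, 5.0]
neuroticism = [4.5, 4.0, 3.0, 2.5, 2.0]
risk_tolerance = [2.0, 2.5, 3.5, 4.0, 4.5]

r_open = pearson_r(openness, risk_tolerance)
r_neuro = pearson_r(neuroticism, risk_tolerance)
print(avg, round(r_open, 3), round(r_neuro, 3))
```

In this toy data, openness rises with risk tolerance and neuroticism falls, so the two coefficients have opposite signs, mirroring the direction of the relationships reported in Table 7.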

5. Discussion

In this study, we collected customers’ personalized behavioral data by quantifying human behavior signals, and then extracted features from the different data modalities using various deep learning methods to analyze customer personality traits. The resulting personality trait scores can be used as a reference for predicting an individual’s investment risk tolerance. The experimental results are discussed in detail as follows:

- In past research work, it was recognized that personality traits can profoundly affect people’s habits, behaviors, and even decision-making. In behavioral finance theory, it is believed that investors are easily affected by psychological and behavioral factors that affect investment judgment, leading to irrational investment behavior. Therefore, in this research work, we explored the impact of many personality traits on investment and utilized deep learning techniques to extract deep features of human behavioral signals as client personality traits. We used multimodal data fusion techniques to address the biggest problem associated with unimodal techniques, i.e., that only one-sided personality traits can be learned.

- The experimental results of this work are shown in Table 3, Table 4, Table 5 and Table 6. In this case study, we found that facial expression features performed relatively well on unimodal measurement tasks. In non-verbal communication, changes in facial expressions can be clearly observed, and the degree of emotion, thought, and attention they convey is more obvious, whereas the influence of text features is the least obvious. This is mainly because the information content of each person’s reply may be relatively similar, so the model cannot accurately judge the change in each personality trait.

- As discussed earlier, previous research showed that investor risk taking is highly correlated with personality traits [66]. For example, according to Pak [66], conscientious people are determined, methodical, dependable, persistent, and punctual, and do not take higher risks impulsively. People with high openness to experience generally tend to conduct new experiments and take higher risks [67]. Extraverted people are more optimistic about life and events. Their positive attitude towards life and events may increase the overvaluation of the market and the undervaluation of possible risks. On the other hand, a negative attitude and narrow focus can lead to an overestimation of risk and may lead to the loss of profitable investment opportunities [68]. People with low agreeableness are generally skeptical and curious, consider more information than people with high agreeableness, and ultimately take fewer risks and make more calculated decisions [54]. People with high neuroticism feel greater anxiety when making risk-taking decisions [69,70]. Similarly, in our case study, we used questionnaires and Pearson’s correlation coefficient to analyze the correlation between the personality trait scores and risk tolerance of 32 subjects. The results showed that openness, extraversion, and neuroticism were highly correlated with risk tolerance in investing. People who scored higher in openness and extraversion and lower in neuroticism were able to take higher risks. These results are similar to the research findings in [66].

- Although we used the Big Five personality traits as the basis of the client’s personality in this research work to judge client risk tolerance in financial investment behavior, we still need to confront the complexity of the factors that influence individual investment behavior. For example, the age, occupation, and education level of clients are also influential. In this work, we did not include these factors. We took personality as the main factor to explore the impact of personality traits on investment behavior. The results are consistent with those obtained using traditional questionnaires in behavioral finance research.

- Moreover, the study has several limitations. First, it was conducted in only one city, Kaohsiung City (a city in southern Taiwan), so generalizations of the findings require careful consideration. Second, our research ignores social and cultural dimensions that may have some influence on investors’ economic behavior.
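For the Pearson analysis of 32 subjects discussed above, one common way to judge whether a coefficient $r$ differs from zero is the $t$-test for a correlation coefficient. The text does not state which significance test, if any, was applied, so the following is an illustrative calculation under the assumption of a two-sided test at the 5% level:

$$
t = \frac{r\sqrt{n-2}}{\sqrt{1-r^{2}}}, \qquad \mathrm{df} = n - 2 = 30 .
$$

With a critical value of $t_{0.975,\,30} \approx 2.042$, the smallest coefficient that reaches significance is

$$
|r| > \frac{t}{\sqrt{t^{2} + \mathrm{df}}} = \frac{2.042}{\sqrt{2.042^{2} + 30}} \approx 0.349 .
$$

Under this assumption, a coefficient with an absolute value below roughly 0.35 would not be distinguishable from zero in a sample of this size.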

6. Conclusions

Developing effective methods to advance machine interpretation of human behavior at a deeper level is a challenging task. By collecting and digitizing the characteristics of external human behavioral signals, the expression of human behavior can be quantified for personality recognition. However, the most difficult part of quantifying individual behavior is the interpretation of interactions. When various human behaviors are transformed into data for analysis, the amount of data that can be captured is large but inconsistent, which can eventually lead to wrong decisions. In this study, we demonstrated the development of automated tools and methods to address this problem by presenting an application in the wealth management industry. Automatic understanding of customer personality traits for wealth management has great potential to drive the development of Financial Technology (FinTech) applications. We developed a multimodal personality prediction system that analyzes the personality traits of investors as they share their investment experiences and plans, enabling them to understand their own personality traits before investing. To test the capacity and robustness of the model, we designed a financial dialogue scenario and invited professional financial advisors to design the dialogue content. Automatic personality recognition using unimodal detection approaches has made great strides in various applications; however, there is still much room for improvement in applications using multimodal techniques. This work is one of the few applied studies that apply multimodal personality analysis to the analysis of individual investment behavior. The experimental results confirm that multimodal approaches based on feature fusion techniques achieve better results than unimodal approaches. The work also demonstrates the influence of personality traits in investment and wealth management.

Much work remains to be done. First, numerous studies in the field of affective computing have confirmed that physiological signals such as electroencephalography (EEG) and heart rate are more accurate in analyzing behavioral characteristics, and that combining them with other behavioral signals is more in line with people’s real-world behavioral motivations. Second, the application in this study is at the initial development stage, and the number of clients from whom data were collected was limited. We hope to add more samples and datasets in the future so that we can continue to investigate more diverse research topics in multimodal emotion analysis techniques. Third, in addition to the five personality traits, the field of behavioral finance is beginning to take into account additional factors such as age, gender, and personal financial ability. Integrating these factors to better match people’s current situations and motivations will open many research directions in the field of affective computing, such as developing human–computer interaction techniques to better understand more complex contextual perceptions in daily life.

Author Contributions

Conceptualization, C.-H.L. and Y.-X.T.; methodology, C.-H.L.; software, Y.-X.T.; validation, H.-C.Y. and X.-Q.S.; formal analysis, H.-C.Y.; investigation, C.-H.L.; resources, X.-Q.S.; data curation, H.-C.Y.; writing—original draft preparation, C.-H.L.; writing—review and editing, C.-H.L.; visualization, H.-C.Y.; supervision, C.-H.L.; project administration, C.-H.L.; funding acquisition, C.-H.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partially funded by National Science and Technology Council (109-2221-E-992-068-) (in Taiwan).

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by Human Research Ethics Committee at National Cheng Kung University (NCKU HREC-E-109-328-2, 1 August 2020) for studies involving humans.

Informed Consent Statement

Not applicable.

Data Availability Statement

The study did not report any data.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zeng, Z.; Pantic, M.; Roisman, G.I.; Huang, T.S. A survey of affect recognition methods: Audio, visual and spontaneous expressions. In Proceedings of the 9th International Conference on Multimodal Interfaces, Nagoya Aichi, Japan, 12–15 November 2007; pp. 126–133.

- Lee, C.M.; Narayanan, S.S. Toward detecting emotions in spoken dialogs. IEEE Trans. Speech Audio Process. 2005, 13, 293–303.

- Soleymani, M.; Lichtenauer, J.; Pun, T.; Pantic, M. A multimodal database for affect recognition and implicit tagging. IEEE Trans. Affect. Comput. 2011, 3, 42–55.

- Anglekar, S.; Chaudhari, U.; Chitanvis, A.; Shankarmani, R. A Deep Learning based Self-Assessment Tool for Personality Traits and Interview Preparations. In Proceedings of the 2021 International Conference on Communication information and Computing Technology (ICCICT), Mumbai, India, 25–27 June 2021; pp. 1–3.

- Nguyen, L.S.; Frauendorfer, D.; Mast, M.S.; Gatica-Perez, D. Hire me: Computational inference of hirability in employment interviews based on nonverbal behavior. IEEE Trans. Multimed. 2014, 16, 1018–1031.

- Radzi, N.H.M.; Mazlan, M.S.; Mustaffa, N.H.; Sallehuddin, R. Police personality classification using principle component analysis-artificial neural network. In Proceedings of the 2017 6th ICT International Student Project Conference (ICT-ISPC), Johor, Malaysia, 23–24 May 2017; pp. 1–4.

- Butt, A.R.; Arsalan, A.; Majid, M. Multimodal personality trait recognition using wearable sensors in response to public speaking. IEEE Sens. J. 2020, 20, 6532–6541.

- Chen, L.; Leong, C.W.; Feng, G.; Lee, C.M.; Somasundaran, S. Utilizing multimodal cues to automatically evaluate public speaking performance. In Proceedings of the 2015 International Conference on Affective Computing and Intelligent Interaction (ACII), Xi’an, China, 21–24 September 2015; pp. 394–400.

- Generosi, A.; Ceccacci, S.; Mengoni, M. A deep learning-based system to track and analyze customer behavior in retail store. In Proceedings of the 2018 IEEE 8th International Conference on Consumer Electronics-Berlin (ICCE-Berlin), Berlin, Germany, 2–5 September 2018; pp. 1–6.

- Saeki, M.; Matsuyama, Y.; Kobashikawa, S.; Ogawa, T.; Kobayashi, T. Analysis of Multimodal Features for Speaking Proficiency Scoring in an Interview Dialogue. In Proceedings of the 2021 IEEE Spoken Language Technology Workshop (SLT), Shenzhen, China, 19–22 January 2021; pp. 629–635.

- Hu, J.; Sui, Y.; Ma, F. The measurement method of investor sentiment and its relationship with stock market. Comput. Intell. Neurosci. 2021, 2021, 6672677.

- Brooks, C.; Sangiorgi, I.; Saraeva, A.; Hillenbrand, C.; Money, K. The importance of staying positive: The impact of emotions on attitude to risk. Int. J. Finance Econ. 2022, 1–30.

- Brooks, C.; Williams, L. The impact of personality traits on attitude to financial risk. Res. Int. Bus. Financ. 2021, 58, 101501.

- Gambetti, E.; Zucchelli, M.M.; Nori, R.; Giusberti, F. Default rules in investment decision-making: Trait anxiety and decision-making styles. Financ. Innov. 2022, 8, 23.

- Gambetti, E.; Giusberti, F. Personality, decision-making styles and investments. J. Behav. Exp. Econ. 2019, 80, 14–24.

- Rajasekar, A.; Pillai, A.R.; Elangovan, R.; Parayitam, S. Risk capacity and investment priority as moderators in the relationship between big-five personality factors and investment behavior: A conditional moderated moderated-mediation model. Qual. Quant. 2022, 56, 1–33.

- Tharp, D.T.; Seay, M.C.; Carswell, A.T.; MacDonald, M. Big Five personality traits, dispositional affect, and financial satisfaction among older adults. Personal. Individ. Differ. 2020, 166, 110211.

- Nandan, T.; Saurabh, K. Big-five personality traits, financial risk attitude and investment intentions: Study on Generation Y. Int. J. Bus. Forecast. Mark. Intell. 2016, 2, 128–150.

- Digman, J.M. Personality structure: Emergence of the five-factor model. Annu. Rev. Psychol. 1990, 41, 417–440.

- Carlyn, M. An assessment of the Myers-Briggs type indicator. J. Personal. Assess. 1977, 41, 461–473.

- Cattell, H.E.; Mead, A.D. The sixteen personality factor questionnaire (16PF). In The SAGE Handbook of Personality Theory and Assessment; Boyle, G.J., Matthews, G., Saklofske, D.H., Eds.; Sage: Thousand Oaks, CA, USA, 2008; Volume 2, pp. 135–159.

- Gray, J.A. The psychophysiological basis of introversion-extraversion. Behav. Res. Ther. 1970, 8, 249–266.

- Ashton, M.C.; Lee, K. Empirical, theoretical, and practical advantages of the HEXACO model of personality structure. Personal. Soc. Psychol. Rev. 2007, 11, 150–166.

- Remaida, A.; Abdellaoui, B.; Moumen, A.; El Idrissi, Y.E.B. Personality traits analysis using Artificial Neural Networks: A literature survey. In Proceedings of the 2020 1st International Conference on Innovative Research in Applied Science, Engineering and Technology (IRASET), Meknes, Morocco, 16–19 April 2020; pp. 1–6.

- Rammstedt, B.; John, O.P. Measuring personality in one minute or less: A 10-item short version of the Big Five Inventory in English and German. J. Res. Personal. 2007, 41, 203–212.

- Phan, L.V.; Rauthmann, J.F. Personality computing: New frontiers in personality assessment. Soc. Personal. Psychol. Compass 2021, 15, e12624.

- Mehta, Y.; Majumder, N.; Gelbukh, A.; Cambria, E. Recent trends in deep learning based personality detection. Artif. Intell. Rev. 2020, 53, 2313–2339.

- Stachl, C.; Pargent, F.; Hilbert, S.; Harari, G.M.; Schoedel, R.; Vaid, S.; Gosling, S.D.; Bühner, M. Personality research and assessment in the era of machine learning. Eur. J. Personal. 2020, 34, 613–631.

- Olguin, D.O.; Gloor, P.A.; Pentland, A. Wearable sensors for pervasive healthcare management. In Proceedings of the 2009 3rd International Conference on Pervasive Computing Technologies for Healthcare, London, UK, 1–3 April 2009; pp. 1–4.

- Suen, H.Y.; Hung, K.E.; Lin, C.L. TensorFlow-based automatic personality recognition used in asynchronous video interviews. IEEE Access 2019, 7, 61018–61023.

- Hsiao, S.W.; Sun, H.C.; Hsieh, M.C.; Tsai, M.H.; Tsao, Y.; Lee, C.C. Toward automating oral presentation scoring during principal certification program using audio-video low-level behavior profiles. IEEE Trans. Affect. Comput. 2017, 10, 552–567.

- Balakrishnan, V.; Khan, S.; Arabnia, H.R. Improving cyberbullying detection using Twitter users’ psychological features and machine learning. Comput. Secur. 2020, 90, 101710–101720.

- Kachur, A.; Osin, E.; Davydov, D.; Shutilov, K.; Novokshonov, A. Assessing the Big Five personality traits using real-life static facial images. Sci. Rep. 2020, 10, 8487.

- Borkenau, P.; Brecke, S.; Möttig, C.; Paelecke, M. Extraversion is accurately perceived after a 50-ms exposure to a face. J. Res. Personal. 2009, 43, 703–706.

- Xu, J.; Tian, W.; Lv, G.; Liu, S.; Fan, Y. Prediction of the Big Five Personality Traits Using Static Facial Images of College Students with Different Academic Backgrounds. IEEE Access 2021, 9, 76822–76832.

- Sudiana, D.; Rizkinia, M.; Rafid, I.M. Automatic Physiognomy System using Active Appearance Model and Convolutional Neural Network. In Proceedings of the 2021 17th International Conference on Quality in Research (QIR): International Symposium on Electrical and Computer Engineering, Depok, Indonesia, 13–15 October 2021; pp. 104–109.

- Polzehl, T.; Möller, S.; Metze, F. Automatically assessing personality from speech. In Proceedings of the 2010 IEEE Fourth International Conference on Semantic Computing, Pittsburgh, PA, USA, 22–24 September 2010; pp. 134–140.

- Guidi, A.; Gentili, C.; Scilingo, E.P.; Vanello, N. Analysis of speech features and personality traits. Biomed. Signal Process. Control 2019, 51, 1–7.

- Pennebaker, J.W.; Francis, M.E.; Booth, R.J. Linguistic inquiry and word count: LIWC 2001. Mahway Lawrence Erlbaum Assoc. 2001, 71, 2001.

- Dos Santos, W.R.; Ramos, R.M.; Paraboni, I. Computational personality recognition from Facebook text: Psycholinguistic features, words and facets. New Rev. Hypermedia Multimed. 2019, 25, 268–287.

- Salsabila, G.D.; Setiawan, E.B. Semantic Approach for Big Five Personality Prediction on Twitter. J. RESTI 2021, 5, 680–687.

- Sun, X.; Liu, B.; Cao, J.; Luo, J.; Shen, X. Who am I? Personality detection based on deep learning for texts. In Proceedings of the 2018 IEEE International Conference on Communications (ICC), Kansas City, MO, USA, 20–24 May 2018; pp. 1–6.

- Wörtwein, T.; Chollet, M.; Schauerte, B.; Morency, L.P.; Stiefelhagen, R.; Scherer, S. Multimodal public speaking performance assessment. In Proceedings of the 2015 ACM on International Conference on Multimodal Interaction, New York, NY, USA, 9 November 2015; pp. 43–50.

- Ramanarayanan, V.; Chen, L.; Leong, C.W.; Feng, G.; Suendermann-Oeft, D. An analysis of time-aggregated and time-series features for scoring different aspects of multimodal presentation data. In Proceedings of the Sixteenth Annual Conference of the International Speech Communication Association, Dresden, Germany, 6–10 September 2015; pp. 1373–1377.

- Rasipuram, S.; Jayagopi, D.B. Asynchronous video interviews vs. face-to-face interviews for communication skill measurement: A systematic study. In Proceedings of the 18th ACM International Conference on Multimodal Interaction, ACM, Tokyo, Japan, 31 October 2016; pp. 370–377.

- Gavrilescu, M.; Vizireanu, N. Predicting the Big Five personality traits from handwriting. EURASIP J. Image Video Process. 2018, 2018, 57.

- Giritlioğlu, D.; Mandira, B.; Yilmaz, S.F.; Ertenli, C.U.; Akgür, B.F.; Kınıklıoğlu, M.; Kurt, A.G.; Mutlu, E.; Gürel, Ş.C.; Dibeklioğlu, H. Multi-modal analysis of personality traits on videos of self-presentation and induced behavior. J. Multimodal User Interfaces 2021, 15, 337–358.

- Dhiman, B.; Raheja, S. Do personality traits and emotional intelligence of investors determine their risk tolerance? Manag. Labour Stud. 2018, 43, 88–99.

- Aren, S.; Hamamci, H.N. Relationship between risk aversion, risky investment intention, investment choices: Impact of personality traits and emotion. Kybernetes 2020, 49, 2651–2682.

- Aumeboonsuke, V.; Caplanova, A. An analysis of impact of personality traits and mindfulness on risk aversion of individual investors. Curr. Psychol. 2021, 40, 1–18.

- Rai, K.; Gupta, A.; Tyagi, A. Personality traits leads to investor’s financial risk tolerance: A structural equation modelling approach. Manag. Labour Stud. 2021, 46, 422–437.

- Chhabra, L. Exploring Relationship between Impulsive Personality Traits and Financial Risk Behavior of Individual Investors. Int. J. Creat. Res. Thoughts 2018, 6, 1294–1304.

- Vanwalleghem, D.; Mirowska, A. The investor that could and would: The effect of proactive personality on sustainable investment choice. J. Behav. Exp. Financ. 2020, 26, 100313–100320.

- Chitra, K.; Sreedevi, V.R. Does personality traits influence the choice of investment? IUP J. Behav. Financ. 2011, 8, 47–57.

- Cabrera-Paniagua, D.; Rubilar-Torrealba, R. A novel artificial autonomous system for supporting investment decisions using a Big Five model approach. Eng. Appl. Artif. Intell. 2021, 98, 104107–104116.

- Chen, T.H.; Ho, R.J.; Liu, Y.W. Investor personality predicts investment performance? A statistics and machine learning model investigation. Comput. Hum. Behav. 2019, 101, 409–416.

- Thomas, S.; Goel, M.; Agrawal, D. A framework for analyzing financial behavior using machine learning classification of personality through handwriting analysis. J. Behav. Exp. Financ. 2020, 26, 100315–100331.

- Zhang, D.; Li, J.; Shan, Z. Implementation of Dlib Deep Learning Face Recognition Technology. In Proceedings of the 2020 International Conference on Robots & Intelligent System (ICRIS), Sanya, China, 7–8 November 2020; pp. 88–91.

- Niu, G.; Chen, Q. Learning an video frame-based face detection system for security fields. J. Vis. Commun. Image Represent. 2018, 55, 457–463.

- Ganchev, T.; Fakotakis, N.; Kokkinakis, G. Comparative Evaluation of Various MFCC Implementations on the Speaker Verification Task. In Proceedings of the 10th International Conference on Speech and Computer (SPECOM 2005), Rion-Patras, Greece, 17–19 October 2005; Volume 1, pp. 191–194.

- Peng, P.; He, Z.; Wang, L. Automatic Classification of Microseismic Signals Based on MFCC and GMM-HMM in Underground Mines. Shock. Vib. 2019, 2019, 5803184.

- Song, Y.; Shi, S.; Li, J.; Zhang, H. Directional skip-gram: Explicitly distinguishing left and right context for word embeddings. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, New Orleans, LA, USA, 1–6 June 2018; Volume 2, pp. 175–180.

- Zhang, K.; Geng, Y.; Zhao, J.; Liu, J.; Li, W. Sentiment analysis of social media via multimodal feature fusion. Symmetry 2020, 12, 2010.

- Zhang, S.; Li, B.; Yin, C. Cross-Modal Sentiment Sensing with Visual-Augmented Representation and Diverse Decision Fusion. Sensors 2021, 22, 74.

- Nemati, S.; Rohani, R.; Basiri, M.E.; Abdar, M.; Yen, N.Y.; Makarenkov, V. A hybrid latent space data fusion method for multimodal emotion recognition. IEEE Access 2019, 7, 172948–172964.

- Pak, O.; Mahmood, M. Impact of personality on risk tolerance and investment decisions: A study on potential investors of Kazakhstan. Int. J. Commer. Manag. 2015, 25, 370–384.

- Mayfield, C.; Perdue, G.; Wooten, K. Investment management and personality type. Financ. Serv. Rev. 2008, 17, 219–236.

- Lo, A.W.; Repin, D.V.; Steenbarger, B.N. Fear and greed in financial markets: A clinical study of day-traders. Am. Econ. Rev. 2005, 95, 352–359.

- Vigil-Colet, A. Impulsivity and decision making in the balloon analogue risk-taking task. Personal. Individ. Differ. 2007, 43, 37–45.

- Young, S.; Gudjonsson, G.H.; Carter, P.; Terry, R.; Morris, R. Simulation of risk-taking and it relationship with personality. Personal. Individ. Differ. 2012, 53, 294–299.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).