Target-Oriented Teaching Path Planning with Deep Reinforcement Learning for Cloud Computing-Assisted Instructions

Abstract

:1. Introduction

- To the best of our knowledge, we are the first to propose a teaching path planning problem to help teachers achieve multiple teaching objectives based on refined feedback from students;

- To more efficiently obtain the solution, we present a new DRL scheme which is capable of processing the huge state space of the learning of the entire class and the complex strategies caused by the interrelationship between knowledge points.

- Finally, we compare our method with baselines on various student models, and the results show that our method is effective and has better performance.

2. Related Work

2.1. Student Model

2.2. Learning Path Planning

2.3. Cloud Computing

3. Methodology

3.1. Background

- The teacher and all students have smartphones or pads with an especially designed APP, which supports in-class question–answer and can access cloud computing services.

- All teaching contents, including in-class questions, homework, exams, are converted into some knowledge points, or some combinations of knowledge points.

- All the responses of students, including in-class answers, homework, exam answers, are recorded by cloud computing services for teaching path optimization.

- All teaching targets, including the passing rate, average score, and so on, can be defined as the mastering of knowledge points by students, e.g., the results of teaching path optimization.

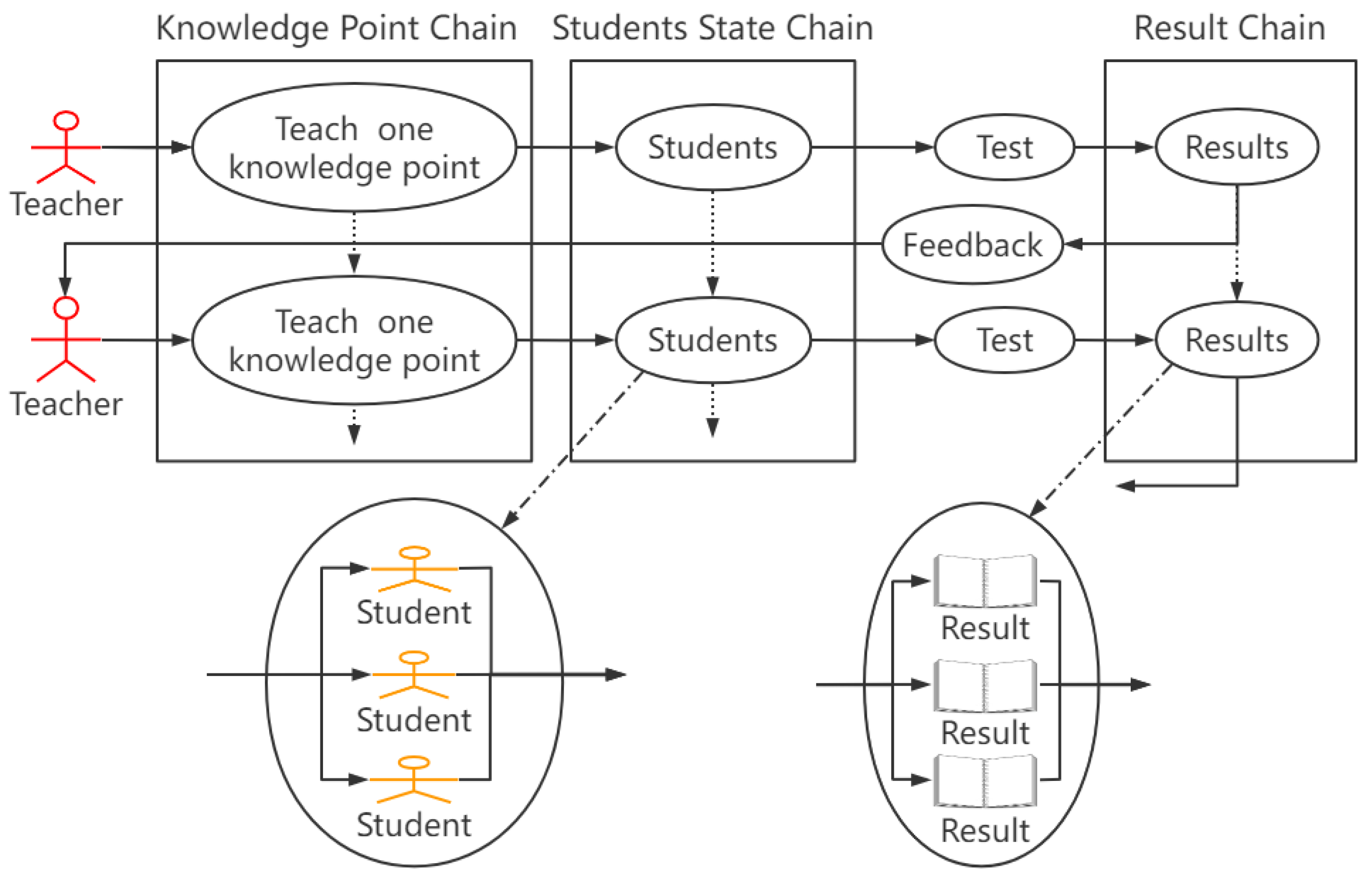

3.2. Problem Formulation

- The total class time is limited, that is, the teacher can only have a limited number of classes for teaching, and each class can only teach one knowledge point;

- There is only one teacher, and one or more students, and all students participate in the course at the same time;

- There are three teaching objectives as the optimization target of teaching path planning: the ratio of students passing the final exam; the ratio of students achieving excellent results in the final exam; and the average score of students in the final exam. This poses an exam-oriented optimization target for our teaching path planning scheme;

- The knowledge points are directly related to each other and the learning of the current knowledge point will be affected by the learning of its anterior knowledge points.

3.3. Student Model

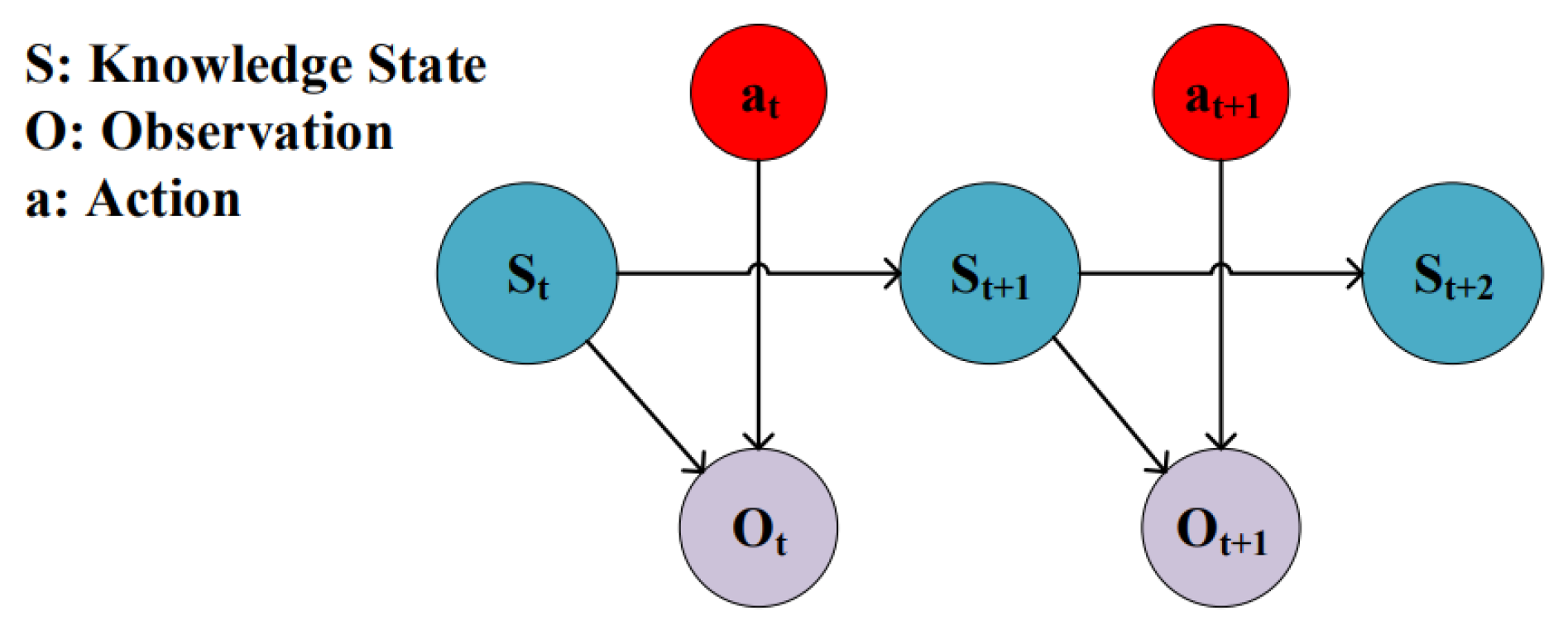

3.4. Deep Reinforcement Learning

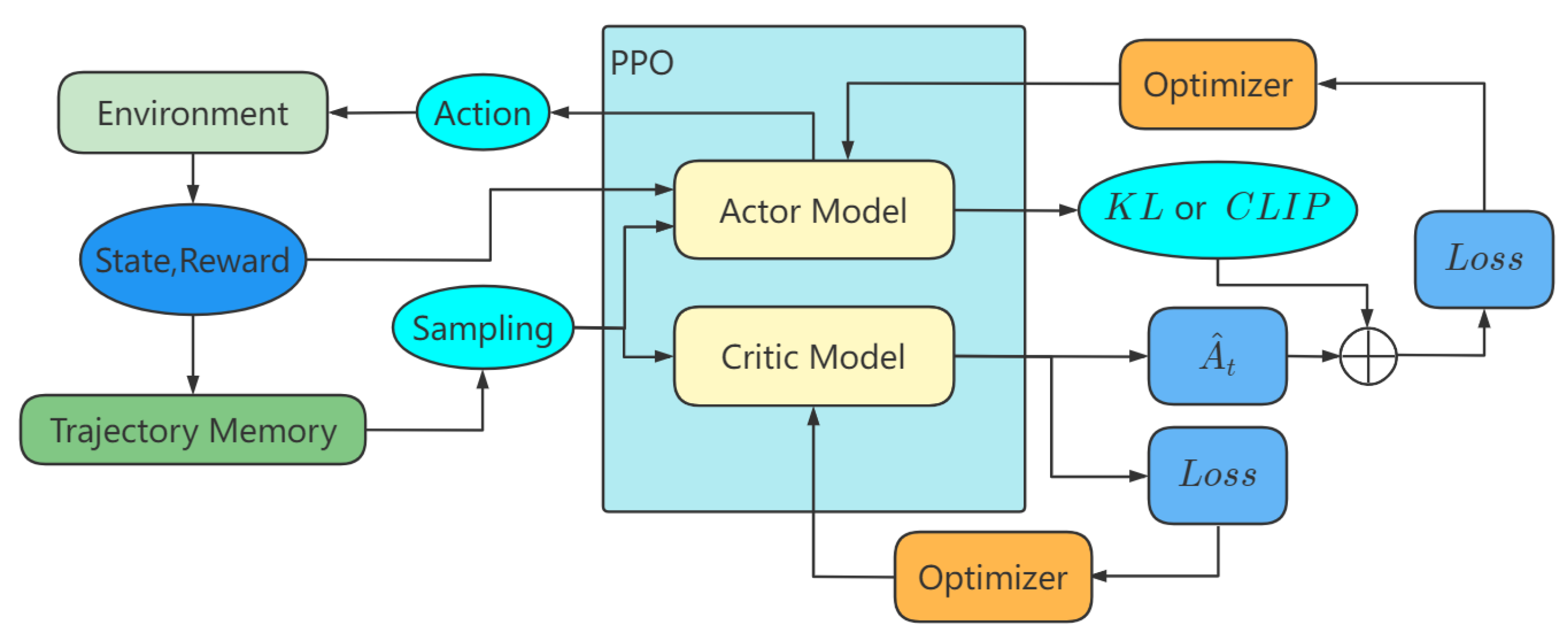

3.5. Proximal Policy Optimization

- when using the dual Lagrangian method, a dynamically changing value is used to constrain the update speed. The objective function is as follows:where means the newest actor stochastic, means the newest strategy when taking samples, and is the estimator of the advantage function of time t based on the current critic value function . During the training process, the value of is changed depending on the expected value of the KL divergence. The formula is as follows:Let present the target value. If d is less than , then is reduced to a half. If d is greater than , then is doubled. Other conditions remain unchanged.

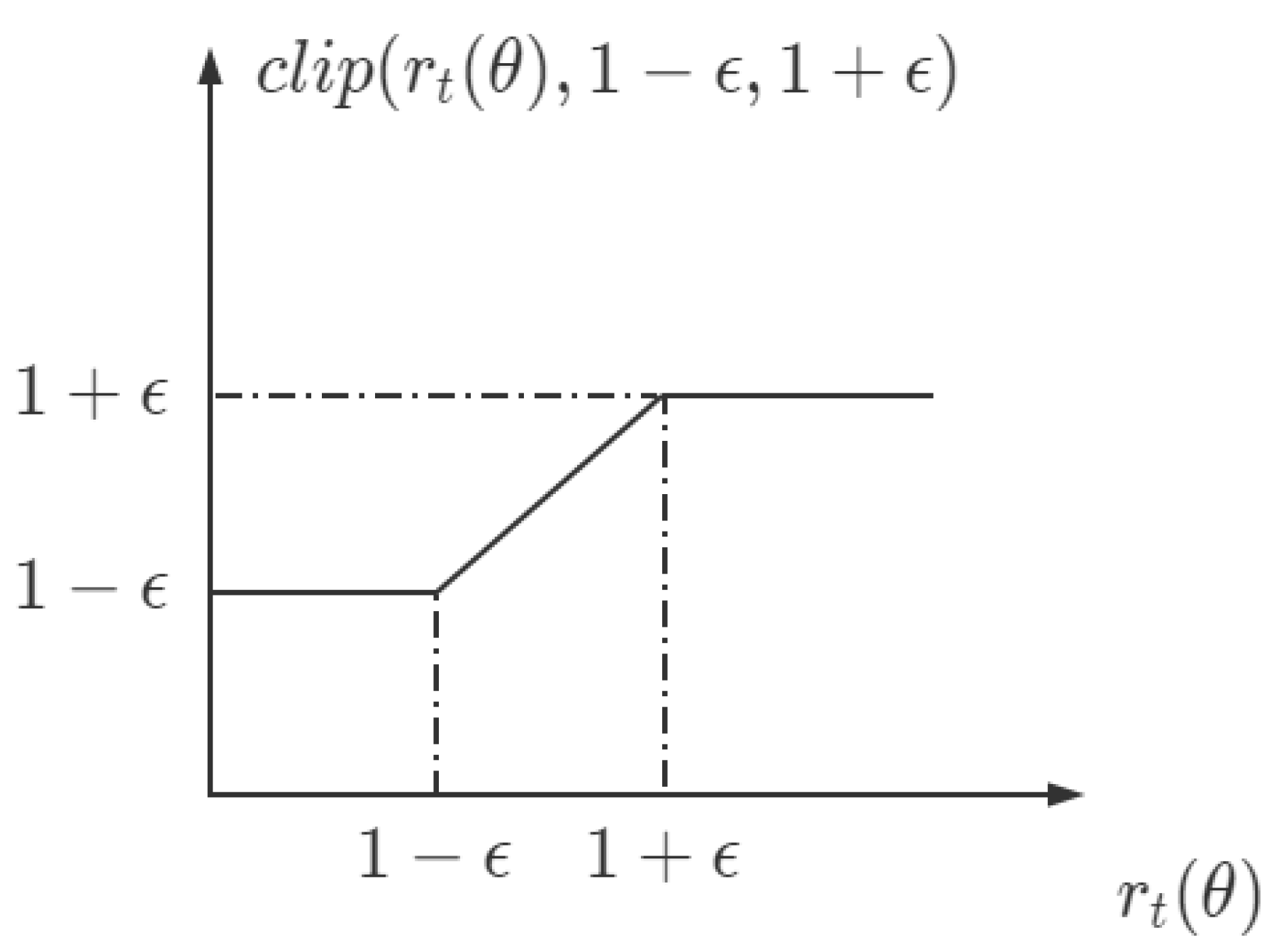

- Use the clip function in Figure 3 to directly limit the difference between the output actions of the latest actor and the older actor. The probability ratio is as follows:where denotes the current parameters of the actor neural network; denotes the parameters at the time of sampling; and denotes the action probability of the actor output after inputting the observed states in which the parameters are. It is worth mentioning that due to the use of the LSTM neural network, the current actor output is influenced by the previously inputted observation states in the same input sequence, corresponding to the learning environment as the learning trajectory and feedback from all previous times during the complete learning process of a course. Therefore, the closer is to 1, the better. The reconstruction objective function is as follows:where is a custom constant, and the function limits the upper and lower bounds of the input.

3.6. Teaching Path Planning with DRL

- State Space: State Space S is all the possible states of all students in the class, that is, the possibilities for mastering a knowledge point. The difference is that for the EFC model, there are knowledge point difficulties, the times of learning, and the time after the last learning of each knowledge point. Contrary to the EFC model, the HLR model has no knowledge point difficulties and has a feature matrix. The feature matrix contains the times of the learned knowledge points, the times that knowledge points can be memorized, and the times that knowledge points cannot be recalled.

- Observation Space: Because the teaching process is a POMDP, the real state cannot be obtained, and there is only one observation result, that is, the partial state. All possible states of this part constitute the Observation Space O, which is specifically all current real-time feedback, that is, whether the knowledge point is mastered. In actual teaching, real students obtain results by answering questions, etc. The model tests the samples through the following formula:

- Action Space: The action space A is a collection of actions that can be acted upon, that is, knowledge points that teachers can choose to teach.

- Transfer Function: The transfer function refers to the process of transforming state into state through action A. Specifically, after the teacher teaches, the class as whole changes state. In the EFC model, it changes the times of learning the knowledge point and the interval time between two times of learning. In the HLR model, it is similar to EFC, except that the feature matrix is additionally changed. The BKT model completes the state transition through Formula (9).

- Reward Function: We define the player’s reward as the expected value of the test. The formula is as follows:In constraint 3, it can be seen that the overall reward is composed of three parts, as shown in the following formula:where represents the pass rate reward, represents the excellent rate reward, represents the average score reward, represents the number of students who passed the test, and represents the number of students with excellent test scores. We consider the logarithmic reward, for which the formula is as follows:

- Discount factor: The discount factor determines whether to pay more attention to current returns or long-term returns. In the teaching process, we usually pay more attention to the teaching income at any time, so a large discount factor is better.

| Algorithm 1 PPO-Clip |

|

- First, we initialize various parameters and then train through K iterations. In a training iteration, collecting samples is the primary activity, which uses an actor neural network to generate a strategy to capture a period of an environmental change trajectory. In our algorithm, the actor neural network used to generate the strategy and the critic neural network used to calculate the value are both LSTM. The input of the actor is the sequence of the observation space of the whole class, the knowledge points of the previous teaching, and the time interval from the previous teaching. The output is the currently selected teaching knowledge point sequence. After receiving the knowledge that it is about to be taught, the environment model returns the teaching observation and reward. At the time of sample collection, the specific data of the sample are the current observation, the knowledge point for teaching, the observation after teaching, and the reward obtained.

- According to the collected data, the advantage value is calculated through a critic neural network. The input of the critic is the same as the actor, and the output value is used to calculate the advantage. Then, we train the actor neural network based on Line 8.

- Then we use Line 9 to train the critic neural network.

- Looping the above steps k times is a complete training process.

4. Experiments

4.1. Experimental Setup

- Random method: Random method is completely random when selecting a recommended knowledge point, and is the most common.

- Linear method: Linear method seeks to evenly allocate time to each knowledge point and then teach in the order of knowledge points. This is the most widespread method in traditional classrooms.

- Cyclic method: Cyclic method means that after learning all the knowledge points one by one, if there is still time, re-learn the first knowledge point and repeat.

- Threshold method: The threshold method is a cheating method. It directly reads the explicit content of the student model and selects the knowledge point with the lowest average mastery.

4.2. Results

- The average value of the average mastery of knowledge points of each student in the class;

- The number of students whose average mastery of knowledge points exceeds 0.6;

- The number of students whose average mastery of knowledge points exceeds 0.8;

- The standard deviation of the average mastery of knowledge points in the class.

4.3. Discussion

5. Cloud Computing Assisted

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| IOT | Internet of Things |

| DRL | Deep Reinforcement Learning |

| DDPG | Deep Deterministic Policy Gradient |

| AAL | Ambient-assisted living |

| BS | Base station |

References

- Stowell, J.R. Use of clickers vs. mobile devices for classroom polling. Comput. Educ. 2015, 82, 329–334. [Google Scholar] [CrossRef]

- Ceven McNally, J. Learning from one’s own teaching: New science teachers analyzing their practice through classroom observation cycles. J. Res. Sci. Teach. 2016, 53, 473–501. [Google Scholar] [CrossRef]

- Shou, Z.; Lu, X.; Wu, Z.; Yuan, H.; Zhang, H.; Lai, J. On learning path planning algorithm based on collaborative analysis of learning behavior. IEEE Access 2020, 8, 119863–119879. [Google Scholar] [CrossRef]

- Xie, H.; Zou, D.; Wang, F.L.; Wong, T.L.; Rao, Y.; Wang, S.H. Discover learning path for group users: A profile-based approach. Neurocomputing 2017, 254, 59–70. [Google Scholar] [CrossRef]

- Ebbinghaus, H. Memory: A contribution to experimental psychology. Ann. Neurosci. 2013, 20, 155. [Google Scholar] [CrossRef]

- Settles, B.; Meeder, B. A trainable spaced repetition model for language learning. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Berlin, Germany, 7–12 August 2016; pp. 1848–1858. [Google Scholar]

- Zaidi, A.; Caines, A.; Moore, R.; Buttery, P.; Rice, A. Adaptive forgetting curves for spaced repetition language learning. In Proceedings of the International Conference on Artificial Intelligence in Education, Ifrane, Morocco, 6–10 July 2020; pp. 358–363. [Google Scholar]

- Corbett, A.T.; Anderson, J.R. Knowledge tracing: Modeling the acquisition of procedural knowledge. User Model. User-Adapt. Interact. 1994, 4, 253–278. [Google Scholar] [CrossRef]

- Van De Sande, B. Properties of the Bayesian Knowledge Tracing Model. J. Educ. Data Min. 2013, 5, 1–10. [Google Scholar]

- Piech, C.; Spencer, J.; Huang, J.; Ganguli, S.; Sahami, M.; Guibas, L.; Sohl-Dickstein, J. Deep knowledge tracing. arXiv 2015, arXiv:1506.05908. [Google Scholar]

- Ding, X.; Larson, E.C. Incorporating uncertainties in student response modeling by loss function regularization. Neurocomputing 2020, 409, 74–82. [Google Scholar] [CrossRef]

- Lu, X.; Zhu, Y.; Xu, Y.; Yu, J. Learning from multiple dynamic graphs of student and course interactions for student grade predictions. Neurocomputing 2021, 431, 23–33. [Google Scholar] [CrossRef]

- Rafferty, A.N.; Brunskill, E.; Griffiths, T.L.; Shafto, P. Faster teaching via pomdp planning. Cogn. Sci. 2016, 40, 1290–1332. [Google Scholar] [CrossRef]

- Elshani, L.; Nuçi, K.P. Constructing a personalized learning path using genetic algorithms approach. arXiv 2021, arXiv:2104.11276. [Google Scholar]

- Niknam, M.; Thulasiraman, P. LPR: A bio-inspired intelligent learning path recommendation system based on meaningful learning theory. Educ. Inf. Technol. 2020, 25, 3797–3819. [Google Scholar] [CrossRef]

- Reddy, S.; Labutov, I.; Banerjee, S.; Joachims, T. Unbounded human learning: Optimal scheduling for spaced repetition. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 1815–1824. [Google Scholar]

- Hoi, S.C.; Sahoo, D.; Lu, J.; Zhao, P. Online learning: A comprehensive survey. arXiv 2018, arXiv:1802.02871. [Google Scholar] [CrossRef]

- Wang, S.; Xu, Y.; Li, Q.; Chen, Y. Learning Path Planning Algorithm Based on Learner Behavior Analysis. In Proceedings of the 2021 4th International Conference on Big Data and Education, London, UK, 3–5 February 2021; pp. 26–33. [Google Scholar]

- Shi, D.; Wang, T.; Xing, H.; Xu, H. A learning path recommendation model based on a multidimensional knowledge graph framework for e-learning. Knowl.-Based Syst. 2020, 195, 105618. [Google Scholar] [CrossRef]

- Reddy, S.; Levine, S.; Dragan, A. Accelerating human learning with deep reinforcement learning. In Proceedings of the NIPS’17 Workshop: Teaching Machines, Robots, and Humans, Long Beach, CA, USA, 9 December 2017; pp. 5–9. [Google Scholar]

- Sinha, S. Using Deep Reinforcement Learning for Personalizing Review Sessions on e-Learning Platforms with Spaced Repetition. 2019. Available online: https://www.semanticscholar.org/paper/Using-deep-reinforcement-learning-for-personalizing-Sinha/3f73a776916f491f18a24576ac352c63bd533040 (accessed on 2 August 2022).

- Ghiani, G.; Manni, E.; Romano, A. Training offer selection and course timetabling for remedial education. Comput. Ind. Eng. 2017, 111, 282–288. [Google Scholar] [CrossRef]

- Muhammad, I.; Azhar, I.M.; Muhammad, A.; Arshad, I.M. SIM-Cumulus: An Academic Cloud for the Provisioning of Network-Simulation-as-a-Service (NSaaS). IEEE Access 2018, 6, 27313–27323. [Google Scholar]

- Ibrahim, M.; Nabi, S.; Baz, A.; Naveed, N.; Alhakami, H. Toward a Task and Resource Aware Task Scheduling in Cloud Computing: An Experimental Comparative Evaluation. Int. J. Netw. Distrib. Comput. 2020, 8, 131–138. [Google Scholar] [CrossRef]

- Wang, Z.; Wan, Y.; Liang, H. The Impact of Cloud Computing-Based Big Data Platform on IE Education. Wirel. Commun. Mob. Comput. 2022, 2022, 1–13. [Google Scholar] [CrossRef]

- Tai, B.; Li, X.; Yang, L.; Miao, Y.; Lin, W.; Yan, C. Cloud Computing-aided Multi-type Data Fusion with Correlation for Education. Wirel. Netw. 2022, 1–12. [Google Scholar] [CrossRef]

- Zhao, J. Construction of College Chinese Mobile Learning Environment Based on Intelligent Reinforcement Learning Technology in Wireless Network Environment. Wirel. Commun. Mob. Comput. 2022, 2022, 5164430. [Google Scholar] [CrossRef]

- Siemens, G.; Gašević, D.; Dawson, S. Preparing for the Digital University: A Review of the History and Current State of Distance, Blended, and Online Learning; Athabasca University Press: Athabasca, AB, Canada, 2015. [Google Scholar]

- Nikou, S.A.; Economides, A.A. Mobile-based assessment: Investigating the factors that influence behavioral intention to use. Comput. Educ. 2017, 109, 56–73. [Google Scholar] [CrossRef]

- Anshari, M.; Almunawar, M.N.; Shahrill, M.; Wicaksono, D.K.; Huda, M. Smartphones usage in the classrooms: Learning aid or interference? Educ. Inf. Technol. 2017, 22, 3063–3079. [Google Scholar] [CrossRef]

- Han, J.H.; Finkelstein, A. Understanding the effects of professors’ pedagogical development with Clicker Assessment and Feedback technologies and the impact on students’ engagement and learning in higher education. Comput. Educ. 2013, 65, 64–76. [Google Scholar] [CrossRef]

- Kim, I.; Kim, R.; Kim, H.; Kim, D.; Han, K.; Lee, P.H.; Mark, G.; Lee, U. Understanding smartphone usage in college classrooms: A long-term measurement study. Comput. Educ. 2019, 141, 103611. [Google Scholar] [CrossRef]

- Sung, Y.T.; Chang, K.E.; Liu, T.C. The effects of integrating mobile devices with teaching and learning on students’ learning performance: A meta-analysis and research synthesis. Comput. Educ. 2016, 94, 252–275. [Google Scholar] [CrossRef]

- Burden, K.; Kearney, M.; Schuck, S.; Hall, T. Investigating the use of innovative mobile pedagogies for school-aged students: A systematic literature review. Comput. Educ. 2019, 138, 83–100. [Google Scholar] [CrossRef]

- Chung, C.J.; Hwang, G.J.; Lai, C.L. A review of experimental mobile learning research in 2010–2016 based on the activity theory framework. Comput. Educ. 2019, 129, 1–13. [Google Scholar] [CrossRef]

- Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal policy optimization algorithms. arXiv 2017, arXiv:1707.06347. [Google Scholar]

- Xie, T.; Cheng, X.; Wang, X.; Liu, M.; Deng, J.; Zhou, T.; Liu, M. Cut-Thumbnail: A Novel Data Augmentation for Convolutional Neural Network. In Proceedings of the 29th ACM International Conference on Multimedia, Virtual Event, 20–24 October 2021; pp. 1627–1635. [Google Scholar]

| Method | Average | Pass | Excellent | SD | Average (log) | Pass (log) | Excellent (log) | SD (log) |

|---|---|---|---|---|---|---|---|---|

| Random | 0.319 | 3 | 0 | 0.222 | 0.353 | 5 | 1 | 0.255 |

| Linear | 0.229 | 2 | 0 | 0.213 | 0.308 | 3 | 1 | 0.265 |

| Cyclic | 0.443 | 6 | 3 | 0.255 | 0.522 | 8 | 3 | 0.276 |

| Threshold | 0.428 | 6 | 3 | 0.258 | 0.489 | 7 | 3 | 0.289 |

| DRL | 0.438 | 6 | 3 | 0.247 | 0.500 | 9 | 3 | 0.241 |

| Method | Average | Pass | Excellent | SD | Average (log) | Pass (log) | Excellent (log) | SD (log) |

|---|---|---|---|---|---|---|---|---|

| Random | 0.462 | 5 | 2 | 0.201 | 0.366 | 3 | 2 | 0.208 |

| Linear | 0.413 | 4 | 2 | 0.205 | 0.309 | 3 | 1 | 0.218 |

| Cyclic | 0.557 | 8 | 3 | 0.212 | 0.451 | 4 | 2 | 0.220 |

| Threshold | 0.556 | 7 | 3 | 0.213 | 0.451 | 4 | 2 | 0.224 |

| DRL | 0.485 | 8 | 2 | 0.204 | 0.396 | 5 | 2 | 0.225 |

| Method | Average | Pass | Excellent | SD | Average (log) | Pass (log) | Excellent (log) | SD (log) |

|---|---|---|---|---|---|---|---|---|

| Random | 0.271 | 4 | 1 | 0.252 | 0.209 | 1 | 0 | 0.173 |

| Linear | 0.412 | 5 | 4 | 0.300 | 0.377 | 5 | 2 | 0.284 |

| Cyclic | 0.422 | 5 | 4 | 0.288 | 0.373 | 5 | 3 | 0.269 |

| Threshold | 0.347 | 4 | 3 | 0.299 | 0.312 | 5 | 1 | 0.280 |

| DRL | 0.503 | 7 | 4 | 0.237 | 0.564 | 6 | 4 | 0.285 |

| Method | Average | Pass | Excellent | SD | Average (log) | Pass (log) | Excellent (log) | SD (log) |

|---|---|---|---|---|---|---|---|---|

| Random | 0.525 | 6 | 4 | 0.207 | 0.490 | 6 | 1 | 0.170 |

| Linear | 0.680 | 12 | 5 | 0.173 | 0.645 | 8 | 6 | 0.169 |

| Cyclic | 0.586 | 8 | 4 | 0.223 | 0.514 | 6 | 5 | 0.239 |

| Threshold | 0.582 | 8 | 4 | 0.233 | 0.514 | 6 | 5 | 0.236 |

| DRL | 0.625 | 12 | 4 | 0.212 | 0.540 | 8 | 5 | 0.227 |

| Method | Average | Pass | Excellent | SD | Average (log) | Pass (log) | Excellent (log) | SD (log) |

|---|---|---|---|---|---|---|---|---|

| Random | 0.470 | 3 | 0 | 0.111 | 0.461 | 4 | 0 | 0.119 |

| Linear | 0.630 | 10 | 4 | 0.147 | 0.536 | 8 | 0 | 0.151 |

| Cyclic | 0.620 | 9 | 4 | 0.144 | 0.560 | 8 | 2 | 0.156 |

| Threshold | 0.599 | 8 | 2 | 0.144 | 0.595 | 8 | 2 | 0.157 |

| DRL | 0.643 | 12 | 4 | 0.150 | 0.592 | 10 | 3 | 0.152 |

| Method | Average | Pass | Excellent | SD | Average (log) | Pass (log) | Excellent (log) | SD (log) |

|---|---|---|---|---|---|---|---|---|

| Random | 0.553 | 7 | 0 | 0.096 | 0.528 | 6 | 0 | 0.110 |

| Linear | 0.624 | 12 | 3 | 0.120 | 0.575 | 7 | 1 | 0.121 |

| Cyclic | 0.633 | 13 | 3 | 0.123 | 0.580 | 8 | 1 | 0.126 |

| Threshold | 0.633 | 13 | 3 | 0.124 | 0.583 | 8 | 1 | 0.127 |

| DRL | 0.633 | 12 | 3 | 0.123 | 0.581 | 8 | 1 | 0.126 |

| Num | 1 | 2 | 3 |

|---|---|---|---|

| Name |

| Method | Average | Pass | Excellent |

|---|---|---|---|

| 12 | 0.405 | 5 | 3 |

| 13 | 0.406 | 6 | 0 |

| 23 | 0.429 | 6 | 2 |

| DRL(123) | 0.437 | 6 | 3 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yang, T.; Zuo, L.; Yang, X.; Liu, N. Target-Oriented Teaching Path Planning with Deep Reinforcement Learning for Cloud Computing-Assisted Instructions. Appl. Sci. 2022, 12, 9376. https://doi.org/10.3390/app12189376

Yang T, Zuo L, Yang X, Liu N. Target-Oriented Teaching Path Planning with Deep Reinforcement Learning for Cloud Computing-Assisted Instructions. Applied Sciences. 2022; 12(18):9376. https://doi.org/10.3390/app12189376

Chicago/Turabian StyleYang, Tengjie, Lin Zuo, Xinduoji Yang, and Nianbo Liu. 2022. "Target-Oriented Teaching Path Planning with Deep Reinforcement Learning for Cloud Computing-Assisted Instructions" Applied Sciences 12, no. 18: 9376. https://doi.org/10.3390/app12189376

APA StyleYang, T., Zuo, L., Yang, X., & Liu, N. (2022). Target-Oriented Teaching Path Planning with Deep Reinforcement Learning for Cloud Computing-Assisted Instructions. Applied Sciences, 12(18), 9376. https://doi.org/10.3390/app12189376