Cognitive Artificial Intelligence Using Bayesian Computing Based on Hybrid Monte Carlo Algorithm

Abstract

1. Introduction

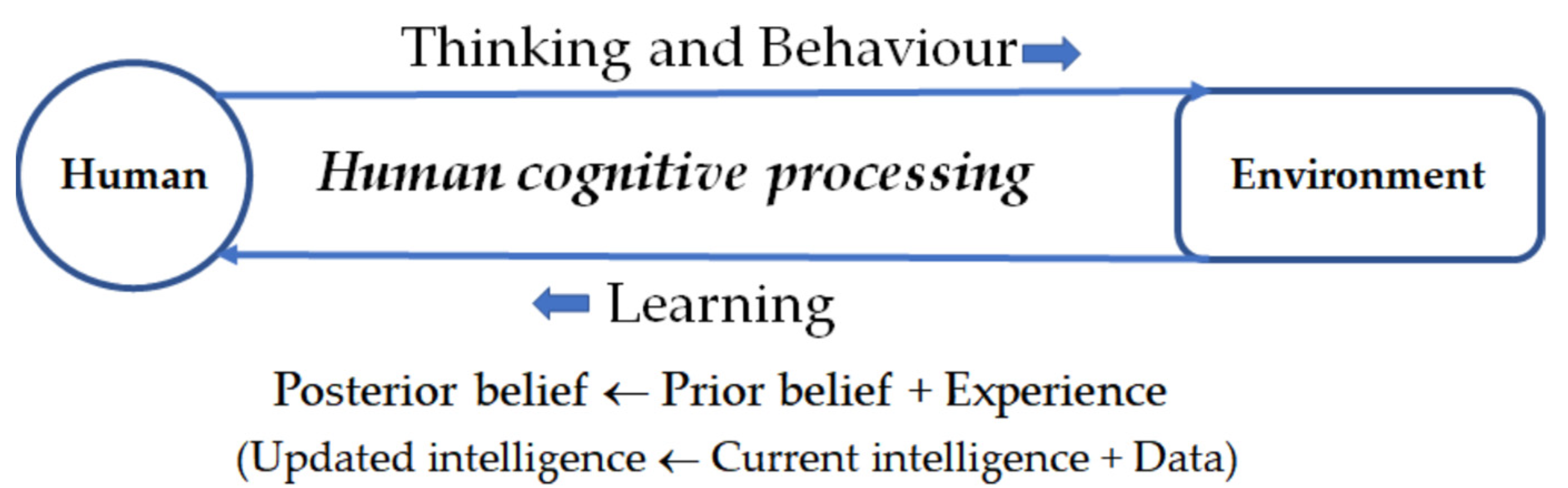

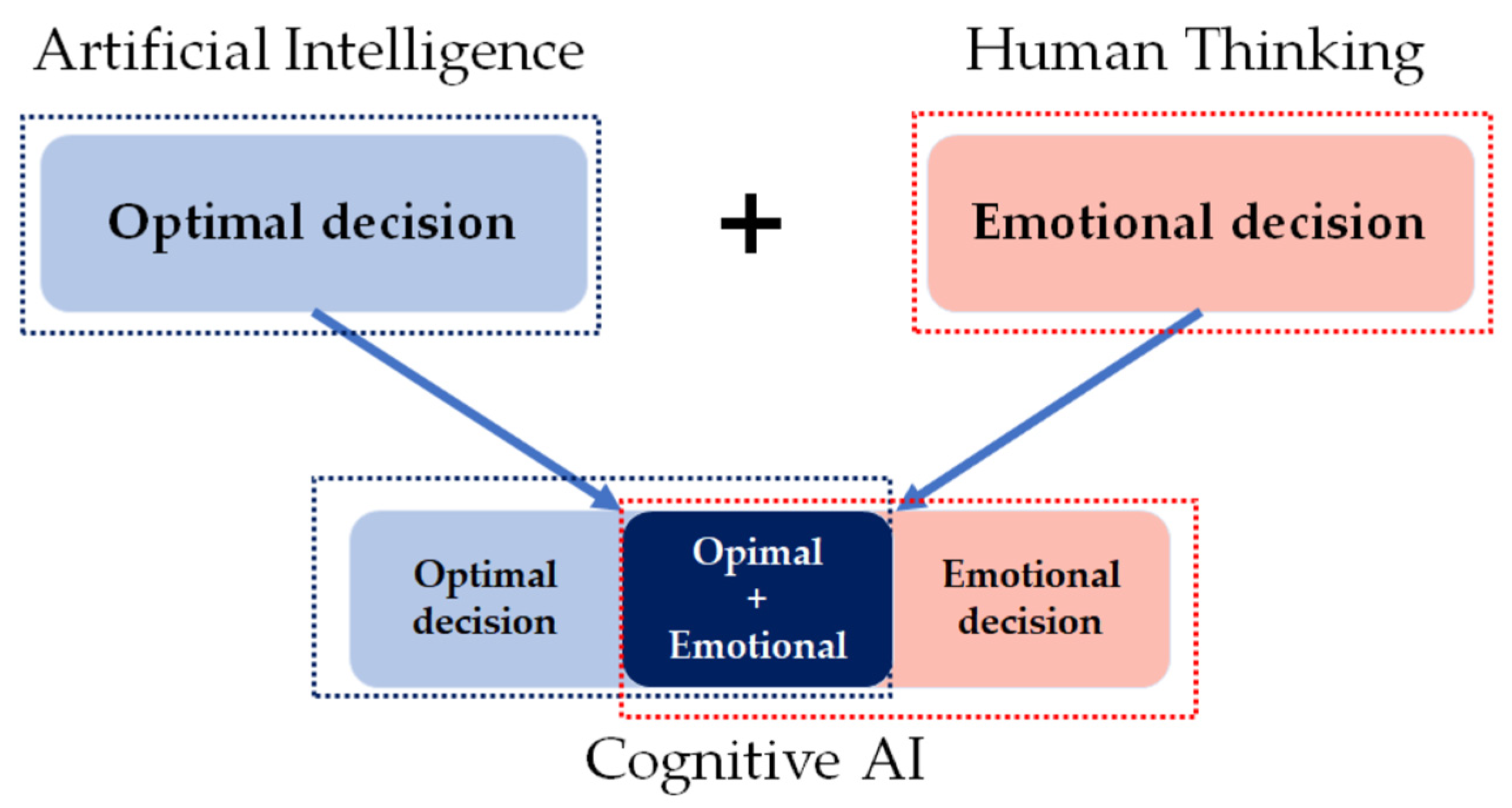

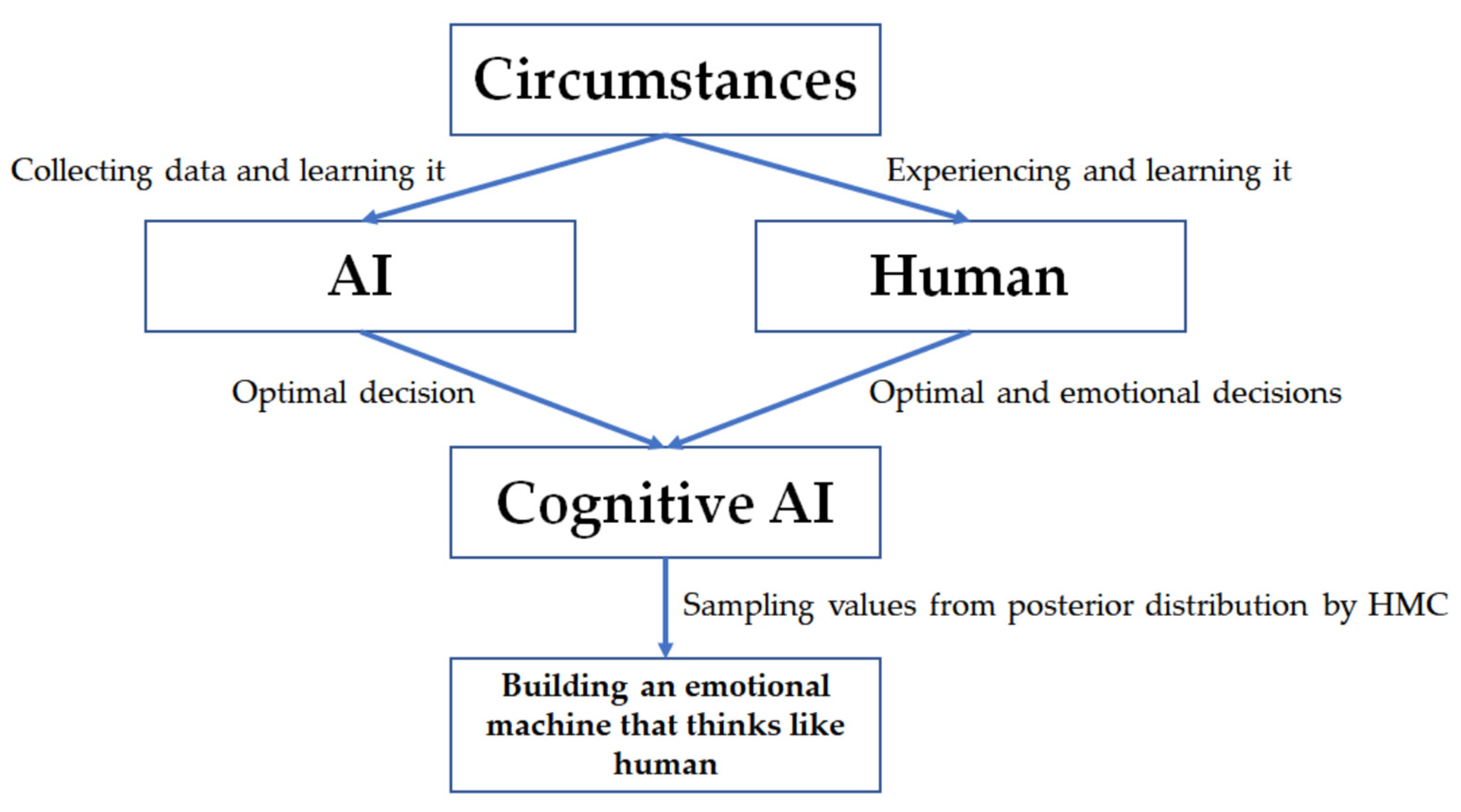

2. Research Background

2.1. Cognitive Systems for Artificial Intelligence

2.2. Markov Chain Monte Carlo Algorithms

- (Step 1) Drawing an initial value from a starting distribution.

- (Step 2) Sampling a new parameter value from a proposal distribution.

- (Step 3) Calculating the acceptance probability of the new parameter value using Equation (1).

- (Step 4) Accepting the new parameter value if its acceptance probability exceeds a value drawn from the uniform distribution on [0, 1]; otherwise, the chain stays at the current value.

- (Step 5) Repeating Steps 2 through 4 until the desired number of samples is obtained.
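The five steps above can be sketched as a random-walk Metropolis sampler. This is a minimal illustration under stated assumptions, not the authors' implementation: Equation (1) is not reproduced in this text, so the sketch assumes a symmetric Gaussian proposal, under which the acceptance probability reduces to the ratio of target densities. The standard-normal target, the step size, and the function names are hypothetical.

```python
import numpy as np


def log_target(theta):
    """Hypothetical target: standard normal log density, up to a constant."""
    return -0.5 * theta**2


def metropolis(log_density, theta0, n_samples, step=1.0, seed=0):
    """Random-walk Metropolis sampler (assumed symmetric Gaussian proposal).

    Because the proposal is symmetric, the proposal densities cancel and the
    acceptance probability is min(1, p(theta*) / p(theta)).
    """
    rng = np.random.default_rng(seed)
    samples = np.empty(n_samples)
    theta = theta0                                   # Step 1: initial value
    log_p = log_density(theta)
    accepted = 0
    for i in range(n_samples):
        proposal = theta + step * rng.normal()       # Step 2: propose a new value
        log_p_new = log_density(proposal)
        log_alpha = min(0.0, log_p_new - log_p)      # Step 3: log acceptance probability
        if np.log(rng.uniform()) < log_alpha:        # Step 4: compare with a U(0, 1) draw
            theta, log_p = proposal, log_p_new
            accepted += 1
        samples[i] = theta                           # on rejection the current value is kept
    return samples, accepted / n_samples             # Step 5: loop until n_samples draws


samples, rate = metropolis(log_target, theta0=0.0, n_samples=20000)
burned = samples[2000:]                              # discard warm-up draws
print(f"mean={burned.mean():.3f} sd={burned.std():.3f} acceptance={rate:.2f}")
```

Working on the log scale avoids underflow when densities are tiny; comparing log(u) with log(α) is equivalent to the comparison described in Step 4.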

3. Proposed Method

- (Step 1) Initializing
  - (1-1) Setting the initial values of the parameters;
  - (1-2) Setting the starting time, t = 1;
  - (1-3) Computing the initial log posterior density;
  - (1-4) Generating the momentum q from its distribution.

- (Step 2) Sampling
  - (2-1) Setting the starting states for the leapfrog algorithm;
  - (2-2) Applying the leapfrog update L times;
  - (2-3) Producing the HMC proposals for the parameters and the momentum.

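- (Step 3) Accepting or rejecting
  - (3-1) Determining the acceptance probability, α;
  - (3-2) If the proposal is accepted, setting the parameters to the proposed values;
  - (3-3) If the proposal is rejected, keeping the current parameter values.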

- (Step 4) Repeating Steps 2 and 3 until N samples are obtained.
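The HMC procedure in Steps 1 to 4 can be sketched as follows. This is a hedged illustration, not the authors' code: it assumes a standard-normal momentum (identity mass matrix), a fixed step size `eps` and a fixed number of leapfrog steps `L`, and it redraws the momentum at the start of every iteration, as is standard for HMC. The Hamiltonian is taken as H(θ, q) = −log p(θ | data) + q·q/2, so α = min{1, exp(H(θ, q) − H(θ*, q*))}. The Gaussian example posterior and all function names are hypothetical.

```python
import numpy as np


def hmc(log_post, grad_log_post, theta0, n_samples, eps=0.1, L=20, seed=0):
    """Basic Hamiltonian (hybrid) Monte Carlo with a leapfrog integrator."""
    rng = np.random.default_rng(seed)
    theta = np.atleast_1d(np.asarray(theta0, dtype=float))   # (1-1) initial parameters
    log_p = log_post(theta)                                  # (1-3) initial log posterior
    samples = np.empty((n_samples, theta.size))
    accepted = 0
    for t in range(n_samples):
        q = rng.standard_normal(theta.size)                  # momentum ~ N(0, I) (assumed)
        theta_new, q_new = theta.copy(), q.copy()            # (2-1) leapfrog start states
        # (2-2) leapfrog: half momentum step, L position steps, final half step
        q_new += 0.5 * eps * grad_log_post(theta_new)
        for step in range(L):
            theta_new += eps * q_new
            if step < L - 1:
                q_new += eps * grad_log_post(theta_new)
        q_new += 0.5 * eps * grad_log_post(theta_new)
        log_p_new = log_post(theta_new)                      # (2-3) proposal (theta_new, q_new)
        # (3-1) log acceptance probability = -H(new) + H(current)
        log_alpha = min(0.0, (log_p_new - 0.5 * q_new @ q_new)
                        - (log_p - 0.5 * q @ q))
        if np.log(rng.uniform()) < log_alpha:                # (3-2) accept
            theta, log_p = theta_new, log_p_new
            accepted += 1
        samples[t] = theta                                   # (3-3) on rejection, keep theta
    return samples, accepted / n_samples                     # Step 4: N samples


MU = np.array([1.0, -2.0])


def log_post(theta):
    """Hypothetical posterior: independent unit-variance normals centred at MU."""
    return -0.5 * np.sum((theta - MU) ** 2)


def grad_log_post(theta):
    return -(theta - MU)


samples, rate = hmc(log_post, grad_log_post, np.zeros(2), n_samples=5000)
print(samples[500:].mean(axis=0), rate)
```

The gradient lets each proposal travel far along the posterior while keeping the acceptance rate high, which is the main practical difference from the random-walk proposal in Section 2.2.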

4. Experiments and Results

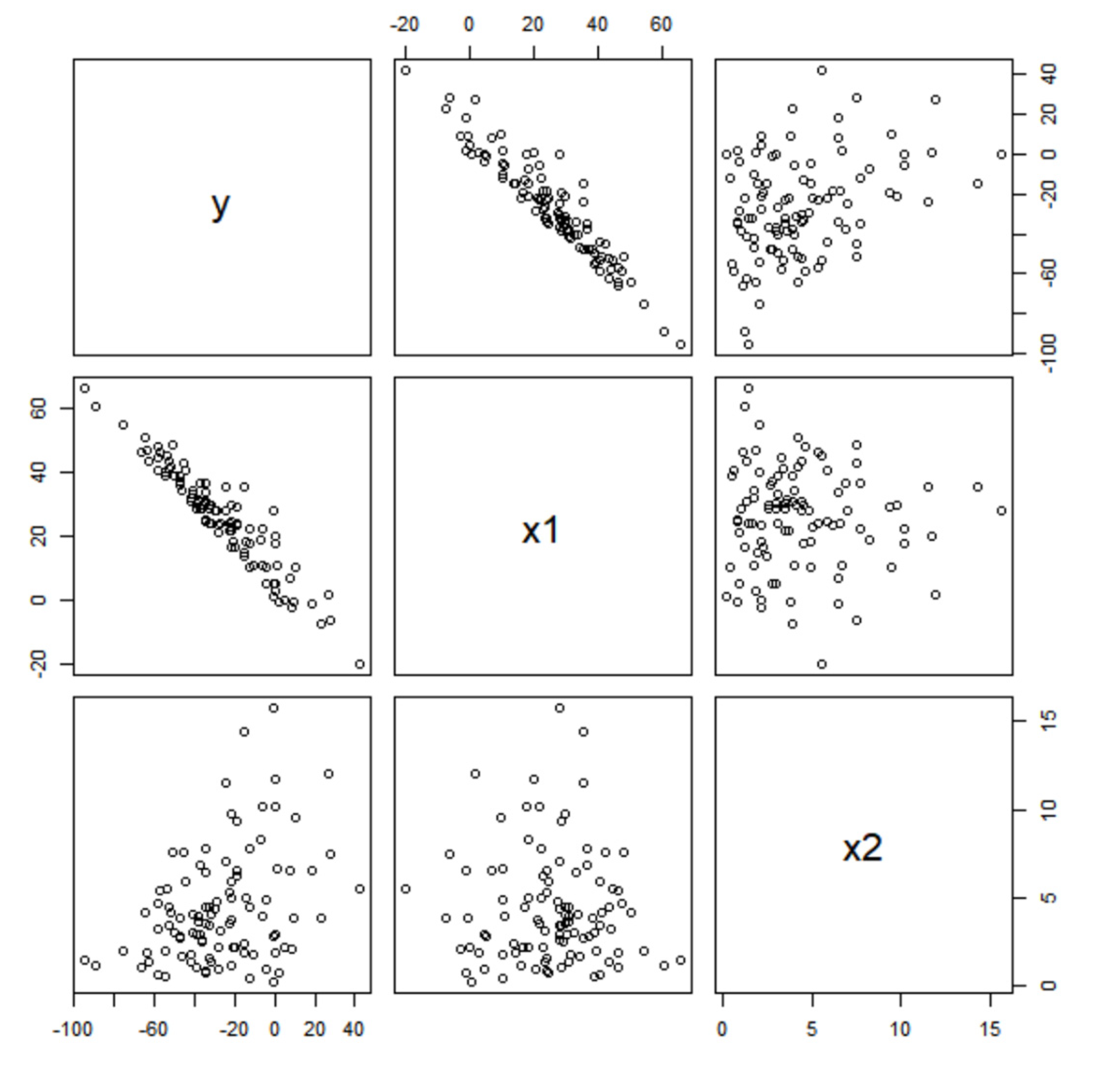

4.1. Simulation Data

4.2. Car Data Set

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep Learning. Nature 2015, 521, 436–444.

- Russell, S.; Norvig, P. Artificial Intelligence: A Modern Approach, 3rd ed.; Pearson: Essex, UK, 2014.

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.

- Korb, K.B.; Nicholson, A.E. Bayesian Artificial Intelligence, 2nd ed.; CRC Press: London, UK, 2011.

- Lake, B.M.; Ullman, T.D.; Tenenbaum, J.B.; Gershman, S.J. Building machines that learn and think like people. Behav. Brain Sci. 2017, 40, e253.

- Sumari, A.D.W.; Syamsiana, I.N. A Simple Introduction to Cognitive Artificial Intelligence’s Knowledge Growing System. In Proceedings of the 2021 International Conference on Data Science, Artificial Intelligence, and Business Analytics, Medan, Indonesia, 11–12 November 2021; pp. 170–175.

- Silver, D.; Huang, A.; Maddison, C.J.; Guez, A.; Sifre, L.; van den Driessche, G.; Schrittwieser, J.; Antonoglou, I.; Panneershelvam, V.; Lanctot, M.; et al. Mastering the game of Go with deep neural networks and tree search. Nature 2016, 529, 484–489.

- Jun, S. Machines Imitating Human Thinking Using Bayesian Learning and Bootstrap. Symmetry 2021, 13, 389.

- Hurwitz, J.S.; Kaufman, M.; Bowles, A. Cognitive Computing and Big Data Analysis; Wiley: Indianapolis, IN, USA, 2015.

- Sreedevi, A.; Harshitha, T.N.; Sugumaran, V.; Shankar, P. Application of cognitive computing in healthcare, cybersecurity, big data and IoT: A literature review. Inf. Process. Manag. 2022, 59, 102888.

- Behera, R.K.; Bala, P.K.; Dhir, A. The emerging role of cognitive computing in healthcare: A systematic literature review. Int. J. Med. Inform. 2019, 129, 154–166.

- Wan, S.; Gu, Z.; Ni, Q. Cognitive computing and wireless communications on the edge for healthcare service robots. Comput. Commun. 2020, 149, 99–106.

- Müller, S.; Bergande, B.; Brune, P. Robot tutoring: On the feasibility of using cognitive systems as tutors in introductory programming education: A teaching experiment. In Proceedings of the 3rd European Conference of Software Engineering Education, Bavaria, Germany, 14–15 June 2018; pp. 45–49.

- Coccoli, M.; Maresca, P.; Stanganelli, L. Cognitive computing in education. J. E-Learn. Knowl. Soc. 2016, 12, 55–69.

- Sumari, A.D.W.; Asmara, E.A.; Putra, D.R.H.; Syamsiana, I.N. Prediction Using Knowledge Growing System: A Cognitive Artificial Intelligence Approach. In Proceedings of the 2021 International Conference on Electrical and Information Technology, Malang, Indonesia, 14–15 September 2021; pp. 15–20.

- Thomas, S.; Tu, W. Learning Hamiltonian Monte Carlo in R. Am. Stat. 2021, 75, 403–413.

- Neal, R.M. Bayesian Learning for Neural Networks; Springer: New York, NY, USA, 1996.

- Hoffman, M.D.; Gelman, A. The No-U-Turn Sampler: Adaptively Setting Path Lengths in Hamiltonian Monte Carlo. J. Mach. Learn. Res. 2014, 15, 1593–1623.

- Xu, D.; Fekri, F. Improving Actor-Critic Reinforcement Learning via Hamiltonian Monte Carlo Method. In Proceedings of the 2022 IEEE International Conference on Acoustics, Speech and Signal Processing, Singapore, 22–27 May 2022; pp. 4018–4022.

- Wang, H.; Li, G.; Liu, X.; Lin, L. A Hamiltonian Monte Carlo Method for Probabilistic Adversarial Attack and Learning. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 1725–1737.

- Xu, X.; Liu, C.; Yang, H. Robust Inference Based on the Complementary Hamiltonian Monte Carlo. IEEE Trans. Reliab. 2022, 71, 111–126.

- Matsumura, K.; Hagiwara, J.; Nishimura, T.; Ohgane, T.; Ogawa, Y.; Sato, T. A Novel MIMO Signal Detection Method Using Hamiltonian Monte Carlo Approach. In Proceedings of the 24th International Symposium on Wireless Personal Multimedia Communications, Okayama, Japan, 14–16 December 2021; pp. 1–6.

- Xu, L. Finite Element Mesh Based Hybrid Monte Carlo Micromagnetics. In Proceedings of the 23rd International Conference on the Computation of Electromagnetic Fields, Malang, Indonesia, 16–20 January 2022; pp. 1–4.

- Murphy, K.P. Machine Learning: A Probabilistic Perspective; MIT Press: Cambridge, MA, USA, 2012.

- Ahmed, I.; Jeon, G.; Piccialli, F. From Artificial Intelligence to Explainable Artificial Intelligence in Industry 4.0: A Survey on What, How, and Where. IEEE Trans. Ind. Inform. 2022, 18, 5031–5042.

- Abdar, M.; Khosravi, A.; Islam, S.M.S.; Acharya, U.R.; Vasilakos, A.V. The need for quantification of uncertainty in artificial intelligence for clinical data analysis: Increasing the level of trust in the decision-making process. IEEE Syst. Man Cybern. Mag. 2022, 8, 28–40.

- Rowe, N.C. Algorithms for Artificial Intelligence. Computer 2022, 55, 97–102.

- Minsky, M. The Emotion Machine; Simon & Schuster Paperbacks: New York, NY, USA, 2006.

- Economides, M.; Kurth-Nelson, Z.; Lübbert, A.; Masip, M.G.; Dolan, R.J. Model-Based Reasoning in Humans Becomes Automatic with Training. PLoS Comput. Biol. 2015, 11, e1004463.

- Gershman, S.J.; Horvitz, E.J.; Tenenbaum, J.B. Computational rationality: A converging paradigm for intelligence in brains, minds, and machines. Science 2015, 349, 273–278.

- Ghahramani, Z. Probabilistic machine learning and artificial intelligence. Nature 2015, 521, 452–459.

- Griffiths, T.L.; Vul, E.; Sanborn, A. Bridging Levels of Analysis for Probabilistic Models of Cognition. Curr. Dir. Psychol. Sci. 2012, 21, 263–268.

- Lake, B.M.; Salakhutdinov, R.; Tenenbaum, J.B. Human-level concept learning through probabilistic program induction. Science 2015, 350, 1332–1338.

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G.; et al. Human-level control through deep reinforcement learning. Nature 2015, 518, 529–533.

- Tenenbaum, J.B.; Kemp, C.; Griffiths, T.L.; Goodman, N.D. How to Grow a Mind: Statistics, Structure, and Abstraction. Science 2011, 331, 1279–1285.

- Ellis, K.; Albright, A.; Solar-Lezama, A.; Tenenbaum, J.B.; O’Donnell, T.J. Synthesizing theories of human language with Bayesian program induction. Nat. Commun. 2022, 13, 5024.

- Kryven, M.; Ullman, T.D.; Cowan, W.; Tenenbaum, J.B. Plans or Outcomes: How Do We Attribute Intelligence to Others? Cogn. Sci. 2021, 45, e13041.

- Krafft, P.M.; Shmueli, E.; Griffiths, T.L.; Tenenbaum, J.B. Bayesian collective learning emerges from heuristic social learning. Cognition 2021, 212, 104469.

- Donovan, T.M.; Mickey, R.M. Bayesian Statistics for Beginners; Oxford University Press: Oxford, UK, 2019.

- Koduvely, H.M. Learning Bayesian Models with R; Packt: Birmingham, UK, 2015.

- Martin, O. Bayesian Analysis with Python, 2nd ed.; Packt: Birmingham, UK, 2018.

- Hogg, R.V.; McKean, J.W.; Craig, A.T. Introduction to Mathematical Statistics, 8th ed.; Pearson: Essex, UK, 2020.

- Gelman, A.; Carlin, J.B.; Stern, H.S.; Dunson, D.B.; Vehtari, A.; Rubin, D.B. Bayesian Data Analysis, 3rd ed.; Chapman & Hall/CRC Press: Boca Raton, FL, USA, 2013.

- Thomas, C. Package ‘hmclearn’ Version 0.0.5, Fit Statistical Models Using Hamiltonian Monte Carlo, CRAN of R project. 2020. Available online: https://search.r-project.org/CRAN/refmans/hmclearn/html/00Index.html (accessed on 12 August 2022).

- R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2013. Available online: https://www.R-project.org/ (accessed on 19 April 2022).

| CAI | Optimal AI | Emotional AI |
|---|---|---|
| Computer science, data science, statistics | Silver et al. (2016) [7]; Russell and Norvig (2014) [2]; Goodfellow et al. (2016) [3]; Neal (1996) [17]; Ghahramani (2015) [31] | Sumari and Syamsiana (2021) [6]; Jun (2021) [8] |
| Cognitive science, psychology, cognitive psychology | Griffiths et al. (2012) [32]; Mnih et al. (2015) [34]; Tenenbaum et al. (2011) [35]; Ellis et al. (2022) [36]; Krafft et al. (2021) [38] | Lake et al. (2017) [5]; Economides et al. (2015) [29]; Gershman et al. (2015) [30]; Lake et al. (2015) [33]; Kryven et al. (2021) [37] |

| Variable | Distribution | Parameter | Expectation | Variance |
|---|---|---|---|---|
|  | Gaussian | Mean = 24, standard deviation = 16 |  |  |
|  | Gamma | Shape = 2, inverse scale = 0.5 | 4 |  |
|  | Gaussian | Mean = 0, standard deviation = 1 |  |  |

| Estimate: GLM | Estimate: HMC | Confidence interval (HMC): 50% | Confidence interval (HMC): 90% |
|---|---|---|---|
| 0.1987 | 0.6866 | (0.6866, 0.6866) | (0.6866, 1.0489) |
| −1.4923 | −1.4878 | (−1.4971, −1.4878) | (−3.6898, −1.4878) |
| 2.5389 | 2.4011 | (2.4011, 2.4011) | (1.7923, 2.5970) |

| Input 1 | Input 2 | Optimal: GLM | Optimal: HMC | Emotional (HMC): 0.5 | Emotional (HMC): 0.9 |
|---|---|---|---|---|---|
| 2 | 2 | 2.2919 | 2.5132 | (2.4987, 2.4989, 2.5021) | (2.1376, −0.3294, −1.0978) |
| 2 | 4 | 7.3697 | 7.3154 | (7.3009, 7.3011, 7.3043) | (6.7160, 3.3936, 3.7315) |
| 2 | 6 | 12.4475 | 12.1176 | (12.1031, 12.1033, 12.1065) | (11.2944, 7.1167, 8.5608) |
| 4 | 2 | −0.6927 | −0.4624 | (−0.4914, −0.4911, −0.4847) | (−1.0696, −5.1515, −7.8567) |
| 4 | 4 | 4.3851 | 4.3398 | (4.3108, 4.3111, 4.3175) | (3.5088, −1.4284, −3.0273) |
| 4 | 6 | 9.4629 | 9.1420 | (9.1130, 9.1133, 9.1197) | (8.0873, 2.2946, 1.8020) |
| 6 | 2 | −3.6773 | −3.4380 | (−3.4815, −3.4810, −3.4714) | (−4.2768, −9.9735, −14.6155) |
| 6 | 4 | 1.4005 | 1.3642 | (1.3207, 0.3212, 1.3308) | (0.3016, −6.2505, −9.7862) |
| 6 | 6 | 6.4783 | 6.1664 | (6.1229, 6.1234, 6.1330) | (4.8801, −2.5275, −4.9569) |
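As a consistency check on the simulation-data tables, the "Optimal" predictions can be recomputed from the point estimates. This sketch assumes a linear predictor b0 + b1·x1 + b2·x2, with the estimate rows read in order as the intercept, the coefficient of the first input, and the coefficient of the second input; that reading is inferred from the numbers rather than stated in the text. Under this assumption, the sketch reproduces both the GLM and the HMC "Optimal" columns to four decimal places. The names `COEFS` and `linear_predictor` are hypothetical.

```python
import itertools

# Point estimates from the simulation-data estimate table, read (by assumption)
# as (intercept, coefficient of input 1, coefficient of input 2).
COEFS = {
    "GLM": (0.1987, -1.4923, 2.5389),
    "HMC": (0.6866, -1.4878, 2.4011),
}


def linear_predictor(coefs, x1, x2):
    """Return b0 + b1 * x1 + b2 * x2 for the given coefficient triple."""
    b0, b1, b2 = coefs
    return b0 + b1 * x1 + b2 * x2


# Evaluate every (input 1, input 2) combination used in the prediction table.
for x1, x2 in itertools.product((2, 4, 6), repeat=2):
    glm = linear_predictor(COEFS["GLM"], x1, x2)
    hmc = linear_predictor(COEFS["HMC"], x1, x2)
    print(f"{x1} {x2}  GLM {glm:8.4f}  HMC {hmc:8.4f}")
```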

| Estimate: GLM | Estimate: HMC | Confidence interval (HMC): 50% | Confidence interval (HMC): 90% |
|---|---|---|---|
| −17.5791 | −2.2399 | (−3.0122, −1.7071) | (−3.4520, −0.7613) |
| 3.9324 | 3.1163 | (2.9993, 3.2496) | (2.8191, 7.2629) |

| Speed | Optimal: GLM | Optimal: HMC | Emotional (HMC): 0.5 | Emotional (HMC): 0.9 |
|---|---|---|---|---|
| 17 | 49.2717 | 50.7372 | (52.2944, 50.7462, 49.0986) | (116.6369, 88.9605, 56.1986) |
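The "Optimal" predictions for the car data at speed 17 can likewise be recovered from the point estimates. This sketch assumes the two estimate rows are the intercept and the slope on speed, a reading inferred from the numbers; the names `ESTIMATES` and `predict` are hypothetical.

```python
# Point estimates from the car-data estimate table, read (by assumption)
# as (intercept, slope on speed).
ESTIMATES = {"GLM": (-17.5791, 3.9324), "HMC": (-2.2399, 3.1163)}


def predict(coefs, speed):
    """Return intercept + slope * speed."""
    intercept, slope = coefs
    return intercept + slope * speed


for name, coefs in ESTIMATES.items():
    print(f"{name}: {predict(coefs, 17):.4f}")
# GLM: 49.2717
# HMC: 50.7372
```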

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Park, S.; Jun, S. Cognitive Artificial Intelligence Using Bayesian Computing Based on Hybrid Monte Carlo Algorithm. Appl. Sci. 2022, 12, 9270. https://doi.org/10.3390/app12189270
