An Unsupervised Depth-Estimation Model for Monocular Images Based on Perceptual Image Error Assessment

Abstract

1. Introduction

2. Related Work

2.1. Supervised Learning Models for Monocular Depth Estimation

2.2. Unsupervised Learning Models for Monocular Depth Estimation Based on Video Sequence (UVS Models)

2.3. Unsupervised Learning Models for Monocular Depth Estimation Based on Stereo Images (USI Models)

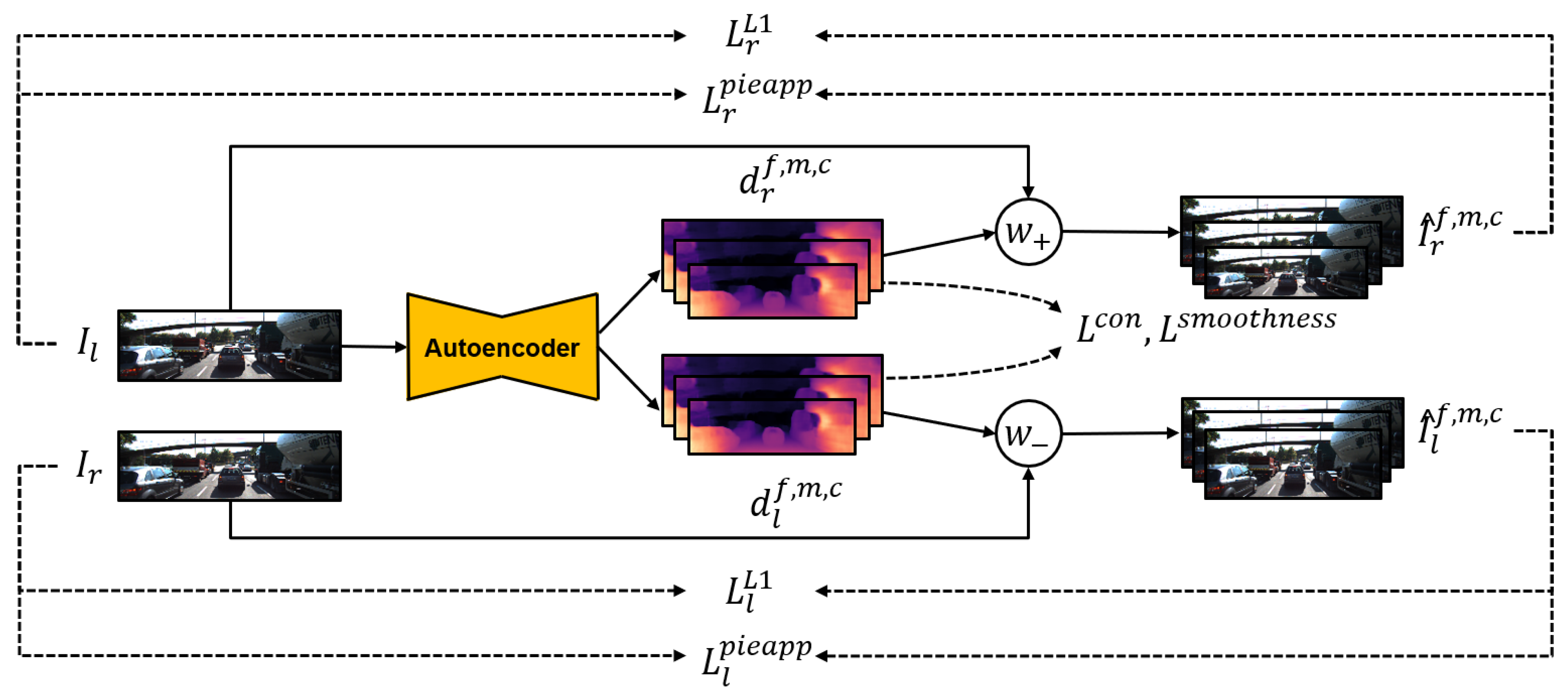

3. The Proposed Model

3.1. Depth Prediction Network

3.2. Training Loss

4. Experiments

4.1. Experimental Setup

4.1.1. Datasets

4.1.2. Implementation Details and Parameter Settings

4.2. Evaluation on KITTI Dataset

4.2.1. Quantitative Results

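- Absolute relative error (Abs Rel): $\frac{1}{N}\sum_{i=1}^{N}\frac{|d_i - d_i^{*}|}{d_i^{*}}$. (1)

- Squared relative error (Sq Rel): $\frac{1}{N}\sum_{i=1}^{N}\frac{|d_i - d_i^{*}|^{2}}{d_i^{*}}$. (2)

- Root-mean-squared error (RMSE): $\sqrt{\frac{1}{N}\sum_{i=1}^{N}(d_i - d_i^{*})^{2}}$. (3)

- Root-mean-squared logarithmic error (RMSE log): $\sqrt{\frac{1}{N}\sum_{i=1}^{N}(\log d_i - \log d_i^{*})^{2}}$. (4)

- Accuracy with threshold $t$, that is, the percentage of $d_i$ such that $\delta = \max\left(\frac{d_i}{d_i^{*}}, \frac{d_i^{*}}{d_i}\right) < t$, where $t \in \{1.25, 1.25^{2}, 1.25^{3}\}$. (5)

Here, $d_i$ is the predicted depth of pixel $i$, $d_i^{*}$ is the corresponding ground-truth depth, and $N$ is the number of pixels with valid ground truth.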
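
The five metrics listed above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' evaluation code: the function name `depth_metrics`, the dictionary keys, and the convention that a ground-truth value of zero marks a missing measurement are all assumptions made for the example. Predictions are assumed to be strictly positive so that the logarithm is defined.

```python
import numpy as np


def depth_metrics(pred, gt):
    """Compute Abs Rel, Sq Rel, RMSE, RMSE log and threshold accuracies.

    Assumption: gt == 0 marks pixels without ground truth; they are skipped.
    Assumption: pred > 0 on all remaining pixels.
    """
    pred = np.asarray(pred, dtype=np.float64).ravel()
    gt = np.asarray(gt, dtype=np.float64).ravel()

    # Keep only pixels that have a ground-truth depth.
    valid = gt > 0
    pred, gt = pred[valid], gt[valid]

    diff = pred - gt
    abs_rel = np.mean(np.abs(diff) / gt)
    sq_rel = np.mean(diff ** 2 / gt)
    rmse = np.sqrt(np.mean(diff ** 2))
    rmse_log = np.sqrt(np.mean((np.log(pred) - np.log(gt)) ** 2))

    # delta = max(d / d*, d* / d); accuracy is the fraction below each threshold.
    ratio = np.maximum(pred / gt, gt / pred)
    result = {
        "abs_rel": float(abs_rel),
        "sq_rel": float(sq_rel),
        "rmse": float(rmse),
        "rmse_log": float(rmse_log),
    }
    for k in (1, 2, 3):
        result[f"delta_{k}"] = float(np.mean(ratio < 1.25 ** k))
    return result


if __name__ == "__main__":
    # Toy example: the last pixel has no ground truth and is ignored.
    print(depth_metrics([1.5, 2.0, 4.0, 9.0], [1.0, 2.0, 4.0, 0.0]))
```

For the error metrics lower is better, while for the threshold accuracies higher is better, which is how the columns of the results tables should be read.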

4.2.2. Qualitative Results

4.2.3. Ablation Study

4.3. Evaluation on CityScapes Dataset

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| IQA | Image Quality Assessment |
| PieAPP | Perceptual Image-Error Assessment Through Pairwise Preference |

References

1. Godard, C.; Aodha, O.M.; Firman, M.; Brostow, G.J. Digging Into Self-Supervised Monocular Depth Estimation. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 3828–3838.
2. Sak, H.; Senior, A.; Rao, K.; Beaufays, F. Fast and accurate recurrent neural network acoustic models for speech recognition. arXiv 2015, arXiv:1507.06947.
3. Couprie, C.; Farabet, C.; Najman, L.; LeCun, Y. Indoor semantic segmentation using depth information. arXiv 2013, arXiv:1301.3572.
4. Hazirbas, C.; Ma, L.; Domokos, C.; Cremers, D. FuseNet: Incorporating depth into semantic segmentation via fusion-based CNN architecture. In Computer Vision–ACCV 2016; Lecture Notes in Computer Science; Springer: Taiwan, China, 2016; pp. 213–228.
5. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Proc. 2004, 13, 600–612.
6. Godard, C.; Aodha, O.M.; Brostow, G.J. Unsupervised Monocular Depth Estimation With left–right Consistency. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 270–279.
7. Park, H.; Park, S.; Joo, Y. Relativistic Approach for Training Self-Supervised Adversarial Depth Prediction Model Using Symmetric Consistency. IEEE Access 2020, 8, 206835–206847.
8. Ding, K.; Ma, K.; Wang, S.; Simoncelli, E.P. Image Quality Assessment: Unifying Structure and Texture Similarity. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 2567–2581.
9. Zbontar, J.; LeCun, Y. Stereo matching by training a convolutional neural network to compare image patches. J. Mach. Learn. Res. 2016, 17, 2287–2318.
10. Zhang, R.; Isola, P.; Efros, A.A.; Shechtman, E.; Wang, O. The Unreasonable Effectiveness of Deep Features as a Perceptual Metric. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 586–595.
11. Prashnani, E.; Cai, H.; Mostofi, Y.; Sen, P. PieAPP: Perceptual Image-Error Assessment Through Pairwise Preference. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 1808–1817.
12. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.
13. Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? The KITTI vision benchmark suite. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3354–3361.
14. Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The Cityscapes Dataset for Semantic Urban Scene Understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 3213–3223.
15. Eigen, D.; Puhrsch, C.; Fergus, R. Depth map prediction from a single image using a multi-scale deep network. In Proceedings of the 27th International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 2366–2374.
16. Liu, M.; Salzmann, M.; He, X. Discrete-Continuous Depth Estimation from a Single Image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 716–723.
17. Saxena, A.; Sun, M.; Ng, A.Y. Make3D: Learning 3D Scene Structure from a Single Still Image. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 31, 824–840.
18. Silberman, N.; Hoiem, D.; Kohli, P.; Fergus, R. Indoor segmentation and support inference from rgbd images. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2012.
19. Fu, H.; Gong, M.; Wang, C.; Batmanghelich, K.; Tao, D. Deep Ordinal Regression Network for Monocular Depth Estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 2002–2011.
20. Zhou, T.; Brown, M.; Snavely, N.; Lowe, D.G. Unsupervised Learning of Depth and Ego-Motion From Video. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1851–1858.
21. Mayer, N.; Ilg, E.; Häusser, P.; Fischer, P.; Cremers, D.; Dosovitskiy, A.; Brox, T. A Large Dataset to Train Convolutional Networks for Disparity, Optical Flow, and Scene Flow Estimation. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 4040–4048.
22. Yang, Z.; Wang, P.; Xu, W.; Zhao, L.; Nevatia, R. Unsupervised learning of geometry with edge-aware depth-normal consistency. arXiv 2018, arXiv:1711.03665.
23. Mahjourian, R.; Wicke, M.; Angelova, A. Unsupervised Learning of Depth and Ego-Motion From Monocular Video Using 3D Geometric Constraints. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 5667–5675.
24. Yin, Z.; Shi, J. GeoNet: Unsupervised Learning of Dense Depth, Optical Flow and Camera Pose. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 1983–1992.
25. Wang, C.; Buenaposada, J.M.; Zhu, R.; Lucey, S. Learning Depth From Monocular Videos Using Direct Methods. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 2022–2030.
26. Engel, J.; Schöps, T.; Cremers, D. LSD-SLAM: Large-scale direct monocular SLAM. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2014.
27. Luo, C.; Yang, Z.; Wang, P.; Wang, Y.; Xu, W.; Nevatia, R.; Yuille, A. Every Pixel Counts ++: Joint Learning of Geometry and Motion with 3D Holistic Understanding. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 42, 2624–2641.
28. Garg, R.; Kumar, V.; Gustavo, B.G.; Reid, C. Unsupervised CNN for Single View Depth Estimation: Geometry to the Rescue. In European Conference on Computer Vision (ECCV); Springer: Berlin/Heidelberg, Germany, 2016; Volume 9912, pp. 740–756.
29. Pillai, S.; Ambruş, R.; Gaidon, A. SuperDepth: Self-Supervised, Super-Resolved Monocular Depth Estimation. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 9250–9256.
30. Xue, W.; Zhang, L.; Mou, X.; Bovik, A.C. Gradient Magnitude Similarity Deviation: A Highly Efficient Perceptual Image Quality Index. IEEE Trans. Image Proc. 2014, 23, 684–695.
31. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Nets. In Proceedings of the Advances in Neural Information Processing Systems 27 (NIPS 2014), Montreal, QC, Canada, 8–13 December 2014.
32. Jolicoeur-Martineau, A. The relativistic discriminator: A key element missing from standard GAN. In Proceedings of the International Conference on Learning Representations (ICLR) 2019, New Orleans, LA, USA, 6–9 May 2019.
33. Kastryulin, S.; Zakirov, D.; Prokopenko, D. PyTorch Image Quality: Metrics and Measure for Image Quality Assessment. Available online: https://github.com/photosynthesis-team/piq/ (accessed on 3 March 2022).
34. Paszke, A.; Gross, S.; Chintala, S.; Chanan, G.; Yang, E.; DeVito, Z.; Lin, Z.; Desmaison, A.; Antiga, L.; Lerer, A. Automatic differentiation in PyTorch. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.
35. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980.

| Type | Method | Image-Reconstruction Loss |
|---|---|---|
| UVS (M) | Zhou [20] | L1 pixel-wise photometric loss |
| | Yang [22] | L1 pixel-wise photometric loss |
| | Mahjourian [23] | L1 pixel-wise photometric loss |
| | GeoNet [24] | A linear combination of L1 photometric loss and single-scale SSIM loss |
| | DDVO [25] | A linear combination of L1 photometric loss and single-scale SSIM loss |
| | EPC++ [27] | L1 pixel-wise photometric loss |
| USI (S) | Garg [28] | L2 pixel-wise photometric loss |
| | Godard [6] | A linear combination of L1 photometric loss and single-scale SSIM loss |
| | SuperDepth [29] | A linear combination of L1 photometric loss and single-scale SSIM loss |
| | Monodepth2 [1] | A linear combination of L1 photometric loss and single-scale SSIM loss |
| | Park+pp [7] | A linear combination of L1 photometric loss and single-scale GMSD loss |
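
The "linear combination of L1 photometric loss and single-scale SSIM loss" that the table lists for several prior methods can be sketched as follows. This is a hedged NumPy illustration of that baseline only, not the loss of the proposed model and not any cited author's code. The function names, the 3×3 mean-filter window, the SSIM constants, and the weight `alpha = 0.85` are assumptions chosen for the example; inputs are assumed to be 2D grayscale images scaled to [0, 1].

```python
import numpy as np


def _box3(x):
    """3x3 mean filter over the valid region (no padding)."""
    h, w = x.shape
    out = np.zeros((h - 2, w - 2), dtype=np.float64)
    for dy in range(3):
        for dx in range(3):
            out += x[dy:dy + h - 2, dx:dx + w - 2]
    return out / 9.0


def ssim_map(x, y, c1=0.01 ** 2, c2=0.03 ** 2):
    """Single-scale SSIM computed with local 3x3 statistics."""
    mu_x, mu_y = _box3(x), _box3(y)
    var_x = _box3(x * x) - mu_x ** 2
    var_y = _box3(y * y) - mu_y ** 2
    cov = _box3(x * y) - mu_x * mu_y
    num = (2 * mu_x * mu_y + c1) * (2 * cov + c2)
    den = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return num / den


def photometric_loss(target, recon, alpha=0.85):
    """alpha * mean((1 - SSIM) / 2) + (1 - alpha) * mean(|target - recon|).

    alpha is a hypothetical weight for illustration; it is not taken
    from this document.
    """
    target = np.asarray(target, dtype=np.float64)
    recon = np.asarray(recon, dtype=np.float64)
    ssim_term = np.mean(np.clip((1.0 - ssim_map(target, recon)) / 2.0, 0.0, 1.0))
    l1_term = np.mean(np.abs(target - recon))
    return float(alpha * ssim_term + (1.0 - alpha) * l1_term)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    img = rng.random((16, 16))
    noisy = np.clip(img + 0.1 * rng.standard_normal(img.shape), 0.0, 1.0)
    print(photometric_loss(img, img), photometric_loss(img, noisy))
```

Swapping the SSIM term for another image-quality measure changes only the `ssim_term` line, which is the design point the table's last column compares across methods.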

| Type | Method | Abs Rel | Sq Rel | RMSE | RMSE log | δ < 1.25 | δ < 1.25² | δ < 1.25³ |
|---|---|---|---|---|---|---|---|---|
| M | Zhou [20] | 0.183 | 1.595 | 6.709 | 0.270 | 0.734 | 0.902 | 0.959 |
| | Yang [22] | 0.182 | 1.481 | 6.501 | 0.267 | 0.725 | 0.906 | 0.963 |
| | Mahjourian [23] | 0.163 | 1.240 | 6.220 | 0.250 | 0.762 | 0.916 | 0.968 |
| | GeoNet [24] | 0.149 | 1.060 | 5.567 | 0.226 | 0.796 | 0.935 | 0.975 |
| | DDVO [25] | 0.151 | 1.257 | 5.583 | 0.228 | 0.810 | 0.933 | 0.974 |
| | EPC++ [27] | 0.141 | 1.029 | 5.350 | 0.216 | 0.816 | 0.941 | 0.979 |
| S | Garg [28] | 0.152 | 1.226 | 5.849 | 0.246 | 0.784 | 0.921 | 0.967 |
| | Godard [6] | 0.148 | 1.344 | 5.927 | 0.247 | 0.803 | 0.922 | 0.964 |
| | SuperDepth+pp [29] () | 0.112 | 0.875 | 4.958 | 0.207 | 0.852 | 0.947 | 0.977 |
| | Monodepth2 [1] () | 0.109 | 0.873 | 4.960 | 0.209 | 0.864 | 0.948 | 0.975 |
| | Park+pp [7] | 0.121 | 0.836 | 4.808 | 0.194 | 0.859 | 0.957 | 0.982 |
| | Ours | 0.116 | 0.873 | 4.805 | 0.198 | 0.869 | 0.953 | 0.977 |
| | Ours+pp | 0.112 | 0.832 | 4.741 | 0.192 | 0.876 | 0.957 | 0.980 |
| MS | EPC++ [27] | 0.128 | 0.935 | 5.011 | 0.209 | 0.831 | 0.945 | 0.979 |
| | Monodepth2 [1] | 0.106 | 0.818 | 4.750 | 0.196 | 0.874 | 0.957 | 0.979 |

| Method | L1 | PieAPP | Left–Right Consistency | Smooth | Abs Rel | Sq Rel | RMSE | RMSE log | δ < 1.25 | δ < 1.25² | δ < 1.25³ |
|---|---|---|---|---|---|---|---|---|---|---|---|
| (a) Base (L1) | ✓ | | | | 0.197 | 1.849 | 6.104 | 0.278 | 0.722 | 0.887 | 0.944 |
| (b) Base (PieAPP) | | ✓ | | | 0.190 | 1.678 | 6.224 | 0.300 | 0.729 | 0.892 | 0.947 |
| (c) Ours without PieAPP | ✓ | | ✓ | ✓ | 0.149 | 1.134 | 5.559 | 0.227 | 0.799 | 0.937 | 0.973 |
| (d) Ours without L1 | | ✓ | ✓ | ✓ | 0.137 | 1.014 | 5.212 | 0.211 | 0.835 | 0.950 | 0.975 |
| (e) Ours (full) | ✓ | ✓ | ✓ | ✓ | 0.116 | 0.873 | 4.805 | 0.198 | 0.869 | 0.953 | 0.977 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Park, H.; Park, S. An Unsupervised Depth-Estimation Model for Monocular Images Based on Perceptual Image Error Assessment. Appl. Sci. 2022, 12, 8829. https://doi.org/10.3390/app12178829