A Kernel Extreme Learning Machine-Grey Wolf Optimizer (KELM-GWO) Model to Predict Uniaxial Compressive Strength of Rock

Abstract

1. Introduction

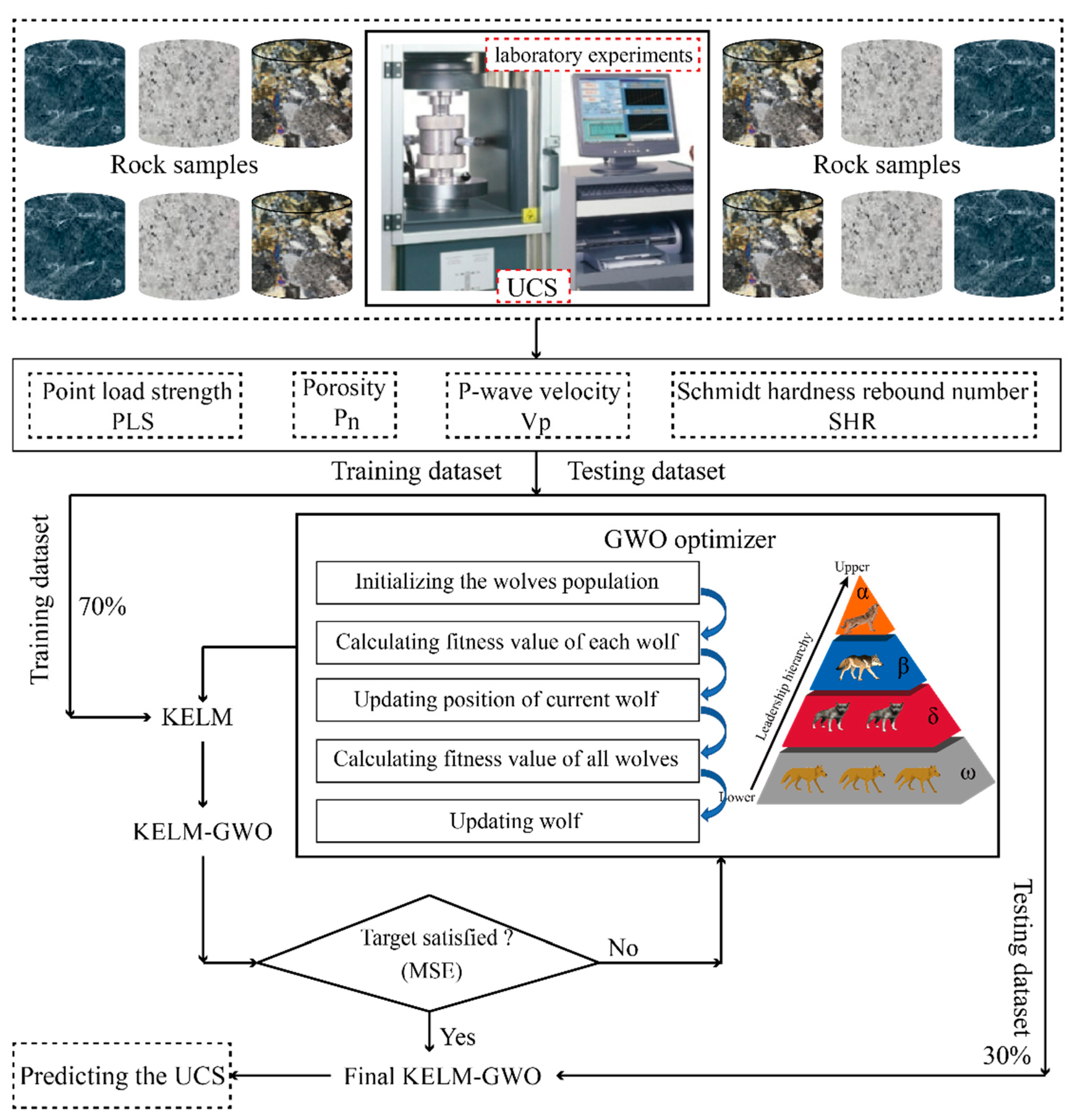

2. The Novel KELM-GWO Model for Estimating the Uniaxial Compressive Strength

2.1. Kernel Extreme Learning Machine (KELM)

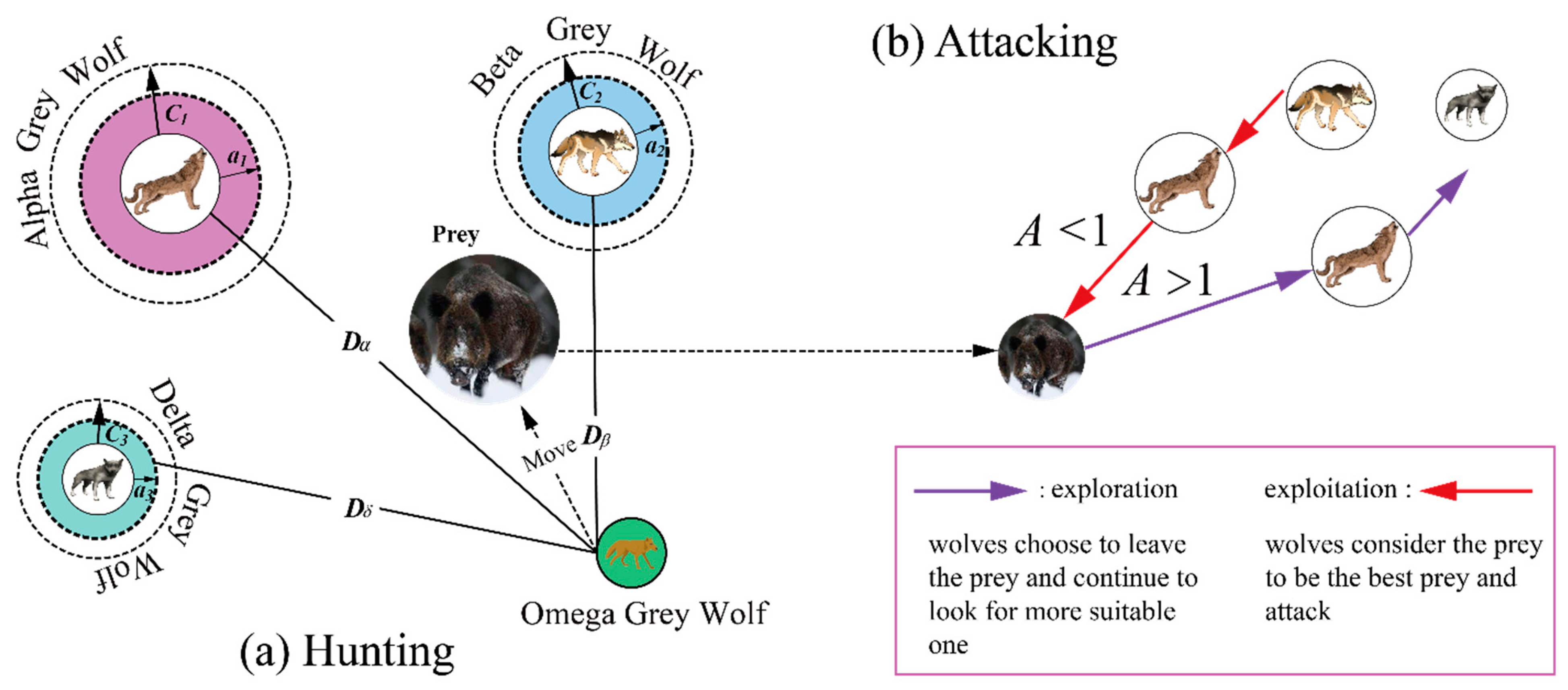
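A minimal sketch of a kernel ELM regressor, assuming the standard closed-form formulation of Huang et al. [52]. ELM's random hidden layer is replaced by a kernel matrix Ω with Ω_ij = K(x_i, x_j), the output weights solve (I/C + Ω)α = T, and a prediction is f(x) = Σ_i α_i K(x, x_i). The Gaussian kernel written as exp(−γ‖u − v‖²), the default C and γ values, and the names `rbf_kernel`, `solve`, and `KELM` are illustrative assumptions, not the authors' implementation. The code is dependency-free Python.

```python
import math


def rbf_kernel(u, v, gamma):
    """Gaussian kernel K(u, v) = exp(-gamma * ||u - v||^2) (assumed parameterization)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(u, v)))


def solve(a, b):
    """Solve a @ x = b for square a by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


class KELM:
    """Kernel ELM regressor: f(x) = [K(x, x_1) ... K(x, x_N)] (I / C + Omega)^-1 T."""

    def __init__(self, c=100.0, gamma=1.0):
        self.c = c          # regularization coefficient C (default is an assumption)
        self.gamma = gamma  # kernel parameter (default is an assumption)

    def fit(self, x, t):
        n = len(x)
        # Omega_ij = K(x_i, x_j); I / C on the diagonal regularizes the linear system
        omega = [[rbf_kernel(x[i], x[j], self.gamma) + (1.0 / self.c if i == j else 0.0)
                  for j in range(n)] for i in range(n)]
        self.alpha = solve(omega, list(t))  # output weights in kernel space
        self.x_train = [list(row) for row in x]
        return self

    def predict(self, x_query):
        return [sum(a * rbf_kernel(q, xi, self.gamma) for a, xi in zip(self.alpha, self.x_train))
                for q in x_query]
```

Fitting `KELM(c=1e4, gamma=2.0)` to sin(x) sampled every 0.3 on [0, 6] should track the curve closely between samples. The direct solve costs O(N³) in the number of training samples, which is manageable for a few hundred samples (this study uses 271) but not for very large datasets.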

2.2. Grey Wolf Optimizer (GWO)
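The GWO of Mirjalili et al. [76] ranks the pack and lets the three best wolves (α, β, δ) guide all others. For each leader and dimension, A = 2a·r₁ − a and C = 2·r₂ with r₁, r₂ uniform in [0, 1]; with D = |C·X_leader − X|, each leader proposes X_leader − A·D, and the new position is the mean of the three proposals. The coefficient a decreases linearly from 2 to 0. The minimal sketch below assumes box bounds, clips positions to them, and keeps the best three positions found so far as the leaders; the function name and defaults are illustrative.

```python
import random


def gwo(objective, lower, upper, n_wolves=20, n_iter=100, seed=0):
    """Minimize objective over the box [lower, upper] with a basic grey wolf optimizer.

    Returns (best_position, best_fitness). Requires n_wolves >= 3.
    """
    rng = random.Random(seed)
    pack = [[rng.uniform(lo, hi) for lo, hi in zip(lower, upper)] for _ in range(n_wolves)]
    # assumption: leaders are the best three (fitness, position) pairs found so far
    leaders = []
    for it in range(n_iter):
        scored = [(objective(w), w[:]) for w in pack]
        leaders = sorted(leaders + scored, key=lambda s: s[0])[:3]
        a = 2.0 * (1.0 - it / n_iter)  # decreases linearly from 2 toward 0
        for w in pack:
            for d in range(len(w)):
                x = 0.0
                for _, lead in leaders:
                    big_a = 2.0 * a * rng.random() - a  # A = 2a*r1 - a
                    big_c = 2.0 * rng.random()          # C = 2*r2
                    dist = abs(big_c * lead[d] - w[d])  # D = |C*X_leader - X|
                    x += lead[d] - big_a * dist         # proposal X_leader - A*D
                # new position: mean of the three proposals, clipped to the bounds
                w[d] = min(max(x / len(leaders), lower[d]), upper[d])
    scored = [(objective(w), w[:]) for w in pack]
    best_fit, best_pos = min(leaders + scored, key=lambda s: s[0])
    return best_pos, best_fit
```

Early in a run a > 1, so |A| can exceed 1 and push wolves away from the leaders (exploration); as a shrinks, |A| < 1 pulls them in (exploitation). On a shifted sphere such as `gwo(lambda v: (v[0] - 1) ** 2 + (v[1] + 2) ** 2, [-5, -5], [5, 5])`, the returned position should land close to (1, −2).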

2.3. Novel Hybrid KELM-GWO

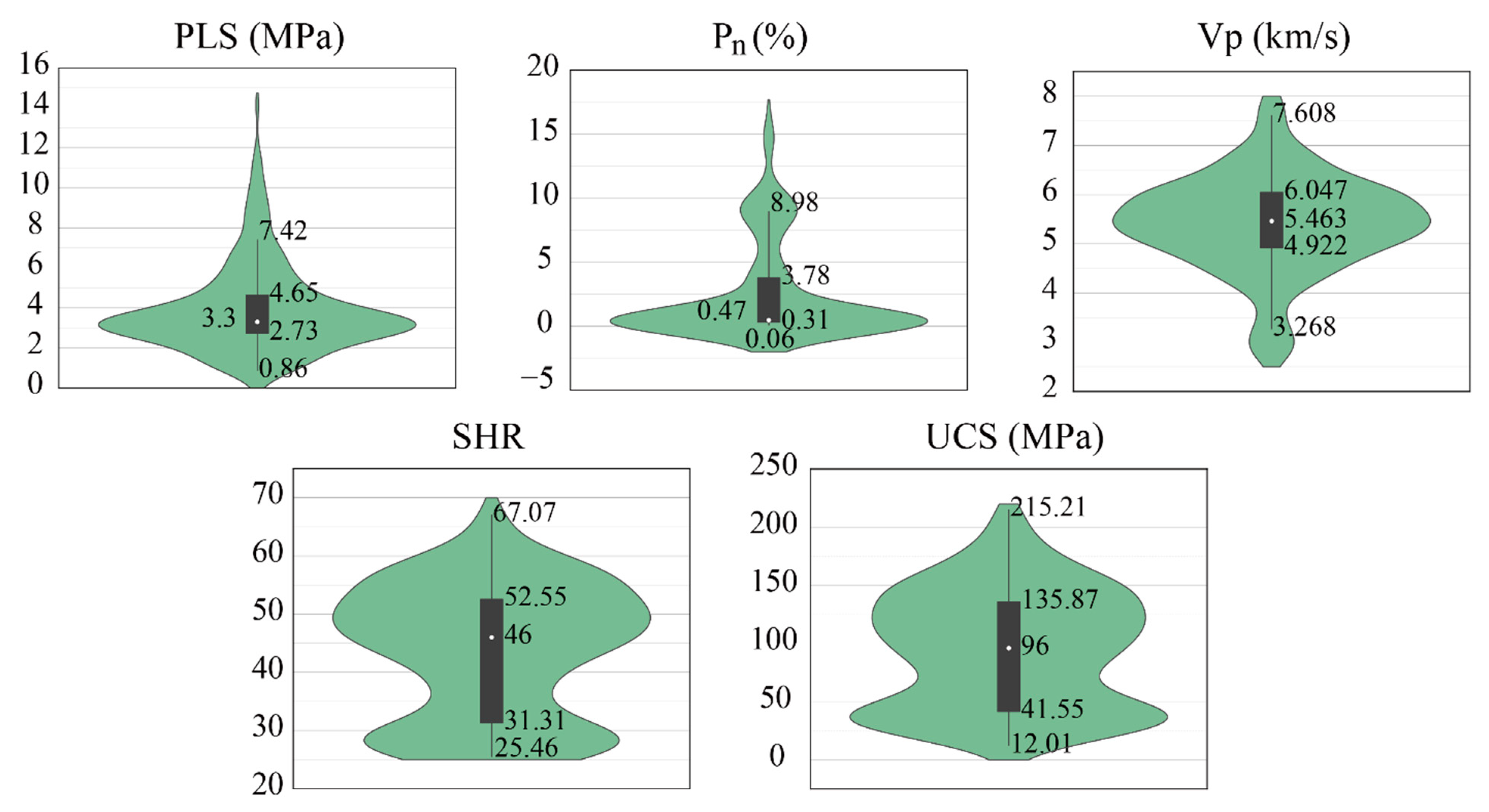

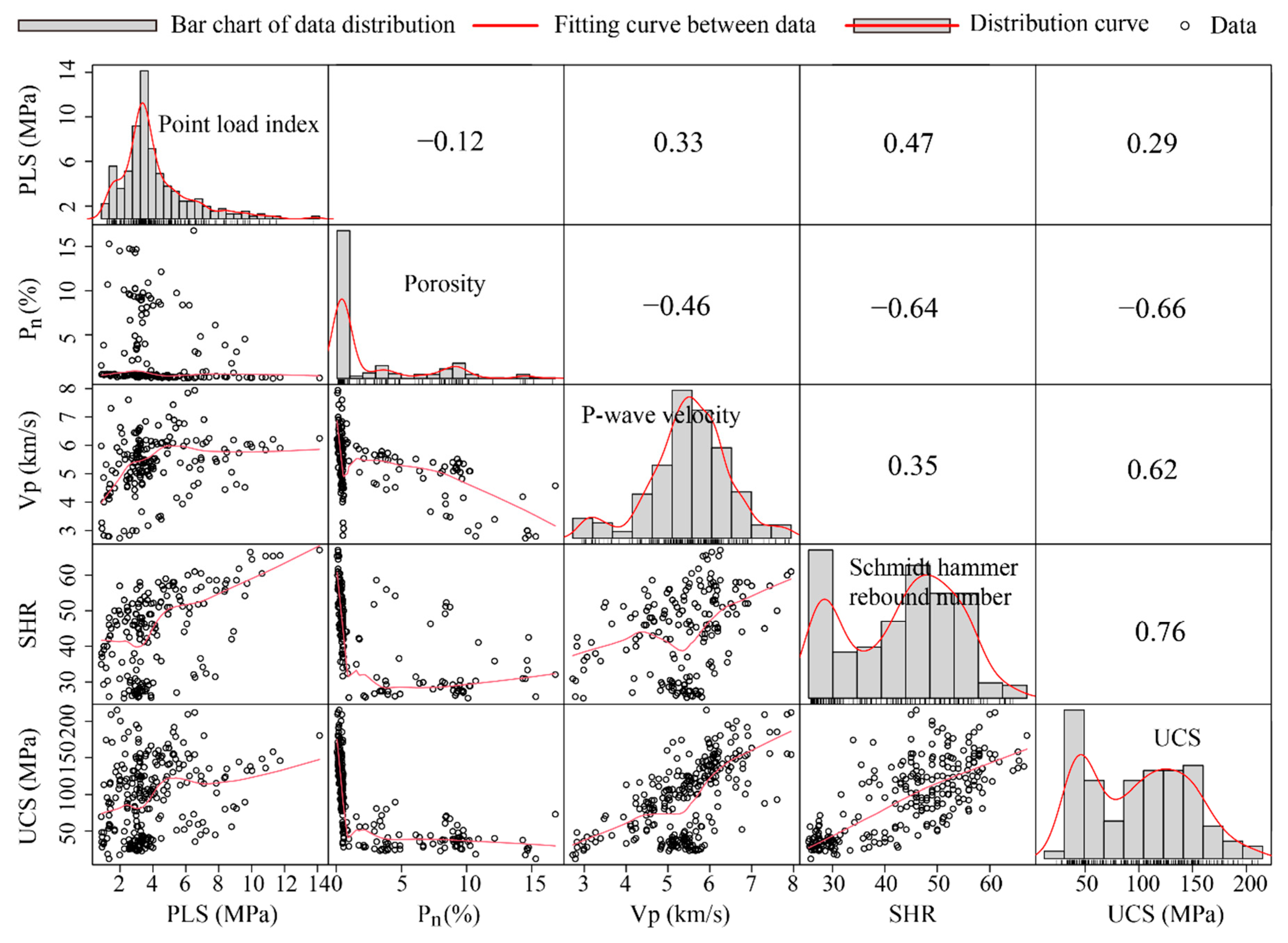
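In the hybrid, GWO searches the two KELM hyperparameters (the regularization coefficient C and the kernel parameter) and a prediction error serves as the fitness. The sketch below is one possible arrangement under stated assumptions: each wolf encodes (log10 C, log10 γ) within hypothetical bounds [−2, 4] × [−3, 1], the fitness is the RMSE on a held-out validation set, and the KELM and GWO parts are compact copies of the standard formulations. The paper's actual search ranges, fitness definition (for example, cross-validation rather than a single split), and population settings are not reproduced here.

```python
import math
import random


def rbf(u, v, gamma):
    """Gaussian kernel exp(-gamma * ||u - v||^2) (assumed parameterization)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(u, v)))


def solve(a, b):
    """Gaussian elimination with partial pivoting for a square system a @ x = b."""
    n = len(a)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def kelm_fit_predict(x_tr, t_tr, x_query, c, gamma):
    """Train a KELM by solving (I / C + Omega) alpha = T, then predict x_query."""
    n = len(x_tr)
    omega = [[rbf(x_tr[i], x_tr[j], gamma) + (1.0 / c if i == j else 0.0) for j in range(n)]
             for i in range(n)]
    alpha = solve(omega, t_tr)
    return [sum(a * rbf(q, xi, gamma) for a, xi in zip(alpha, x_tr)) for q in x_query]


def gwo(objective, lower, upper, n_wolves=10, n_iter=20, seed=0):
    """Compact grey wolf optimizer (minimization) with box bounds."""
    rng = random.Random(seed)
    pack = [[rng.uniform(lo, hi) for lo, hi in zip(lower, upper)] for _ in range(n_wolves)]
    leaders = []  # best three (fitness, position) pairs so far: alpha, beta, delta
    for it in range(n_iter):
        scored = [(objective(w), w[:]) for w in pack]
        leaders = sorted(leaders + scored, key=lambda s: s[0])[:3]
        a = 2.0 * (1.0 - it / n_iter)
        for w in pack:
            for d in range(len(w)):
                x = 0.0
                for _, lead in leaders:
                    big_a = 2.0 * a * rng.random() - a
                    big_c = 2.0 * rng.random()
                    x += lead[d] - big_a * abs(big_c * lead[d] - w[d])
                w[d] = min(max(x / len(leaders), lower[d]), upper[d])
    scored = [(objective(w), w[:]) for w in pack]
    best_fit, best_pos = min(leaders + scored, key=lambda s: s[0])
    return best_pos, best_fit


def tune_kelm(x_tr, t_tr, x_val, t_val, n_wolves=10, n_iter=20):
    """Return (C, gamma, validation RMSE) chosen by GWO; a wolf encodes (log10 C, log10 gamma)."""
    def val_rmse(p):
        pred = kelm_fit_predict(x_tr, t_tr, x_val, 10.0 ** p[0], 10.0 ** p[1])
        return math.sqrt(sum((y - t) ** 2 for y, t in zip(pred, t_val)) / len(t_val))

    # hypothetical search bounds: C in [1e-2, 1e4], gamma in [1e-3, 1e1]
    pos, rmse = gwo(val_rmse, [-2.0, -3.0], [4.0, 1.0], n_wolves, n_iter)
    return 10.0 ** pos[0], 10.0 ** pos[1], rmse
```

Searching in log space is a design choice of this sketch: C and γ act multiplicatively, so equal steps in log10 cover small and large values evenly.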

3. Dataset

4. Performance Indices for the Assessment of Models
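The sketch below computes RMSE, MAE, R², Theil's inequality coefficient U1, and VAF using common textbook definitions, which are assumptions here: R² is the coefficient of determination 1 − SS_res/SS_tot, U1 = RMSE/(√(mean y²) + √(mean ŷ²)), and VAF = (1 − var(y − ŷ)/var(y)) × 100. U2 is left out because several versions of Theil's U2 are in use and the exact formula is not stated in this section.

```python
import math


def performance_indices(y_true, y_pred):
    """RMSE, MAE, R^2, Theil's U1 and VAF (%) for paired observations and predictions."""
    n = len(y_true)
    err = [t - p for t, p in zip(y_true, y_pred)]
    mean_t = sum(y_true) / n
    ss_res = sum(e * e for e in err)
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)  # must be non-zero
    rmse = math.sqrt(ss_res / n)
    mean_e = sum(err) / n
    var_err = sum((e - mean_e) ** 2 for e in err) / n
    return {
        "RMSE": rmse,
        "MAE": sum(abs(e) for e in err) / n,
        # coefficient of determination (assumed definition of R^2)
        "R2": 1.0 - ss_res / ss_tot,
        # Theil's inequality coefficient: 0 for a perfect fit, never above 1
        "U1": rmse / (math.sqrt(sum(t * t for t in y_true) / n)
                      + math.sqrt(sum(p * p for p in y_pred) / n)),
        # variance accounted for, in percent
        "VAF": (1.0 - var_err / (ss_tot / n)) * 100.0,
    }
```

A constant offset shows why VAF should be read alongside RMSE: predictions of [2, 3, 4, 5] for targets [1, 2, 3, 4] give VAF = 100% yet RMSE = 1 and R² = 0.2, because VAF ignores a systematic bias.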

5. Developing the Models for Predicting UCS

5.1. ELM

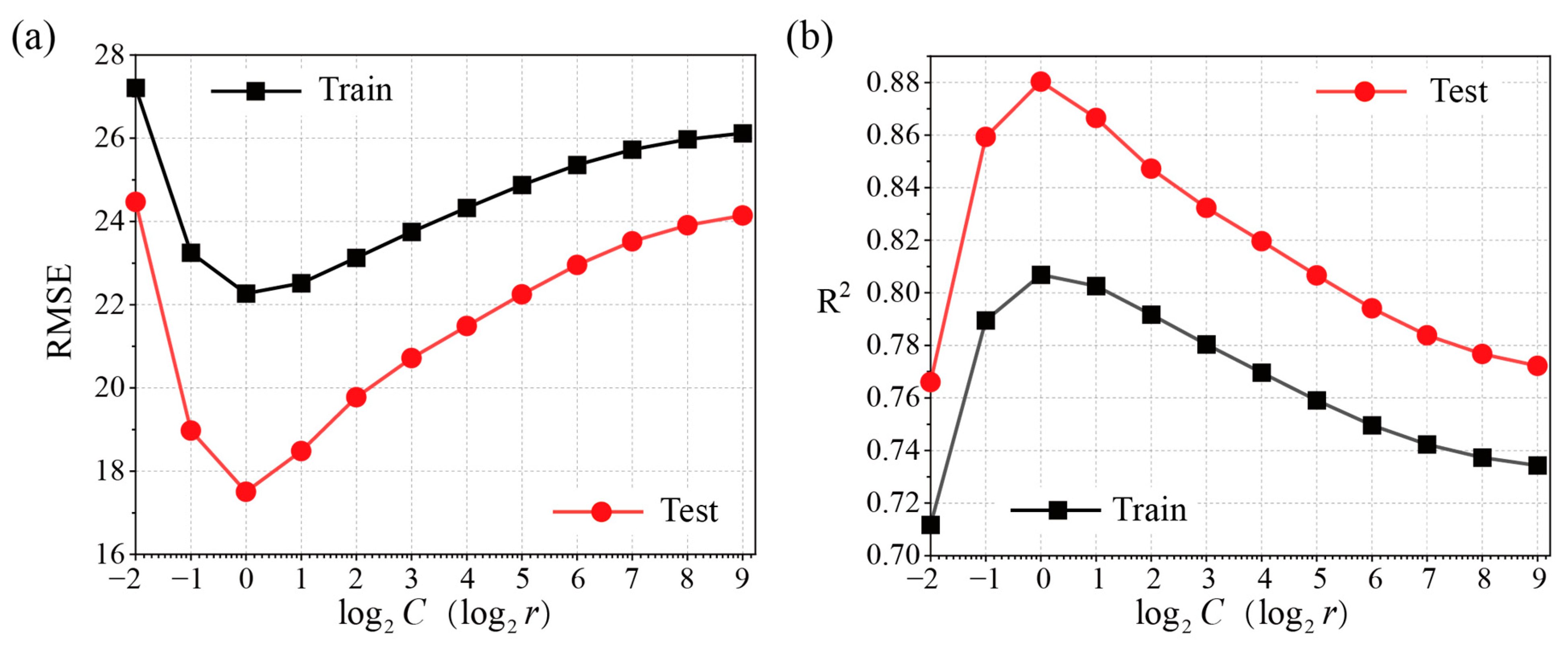
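For reference, the basic ELM of Huang et al. [51] draws input weights and biases at random, keeps them fixed, and fits only the output weights β on the hidden-layer output matrix H. This sketch assumes sigmoid activations, weights and biases drawn uniformly from [−1, 1], and the ridge-regularized solution β = (HᵀH + I/C)⁻¹HᵀT in place of the plain Moore–Penrose pseudo-inverse. The hidden-layer size `n_hidden` is the structural choice varied in the ELM neuron sweep (20 to 150 neurons).

```python
import math
import random


def solve(a, b):
    """Solve a @ x = b for square a by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


class ELM:
    """Single-hidden-layer ELM: random sigmoid features, ridge-regularized output layer."""

    def __init__(self, n_hidden=60, c=1e4, seed=0):
        self.n_hidden = n_hidden  # number of hidden neurons L
        self.c = c                # assumption: beta = (H^T H + I / C)^-1 H^T T
        self.seed = seed

    def _hidden(self, x):
        # H_ij = sigmoid(w_j . x_i + b_j); w and b stay fixed after initialization
        return [[1.0 / (1.0 + math.exp(-(sum(w * v for w, v in zip(wj, xi)) + bj)))
                 for wj, bj in zip(self.w, self.b)] for xi in x]

    def fit(self, x, t):
        rng = random.Random(self.seed)
        d = len(x[0])
        # assumption: input weights and biases uniform in [-1, 1]
        self.w = [[rng.uniform(-1.0, 1.0) for _ in range(d)] for _ in range(self.n_hidden)]
        self.b = [rng.uniform(-1.0, 1.0) for _ in range(self.n_hidden)]
        h = self._hidden(x)
        n_h = self.n_hidden
        hth = [[sum(row[i] * row[j] for row in h) + (1.0 / self.c if i == j else 0.0)
                for j in range(n_h)] for i in range(n_h)]
        htt = [sum(row[i] * ti for row, ti in zip(h, t)) for i in range(n_h)]
        self.beta = solve(hth, htt)  # only the output weights are trained
        return self

    def predict(self, x):
        return [sum(bj * hj for bj, hj in zip(self.beta, row)) for row in self._hidden(x)]
```

Re-fitting with different `n_hidden` values and comparing training and testing errors mirrors the neuron sweep: training error tends to keep falling as neurons are added, while testing error eventually rises.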

5.2. KELM

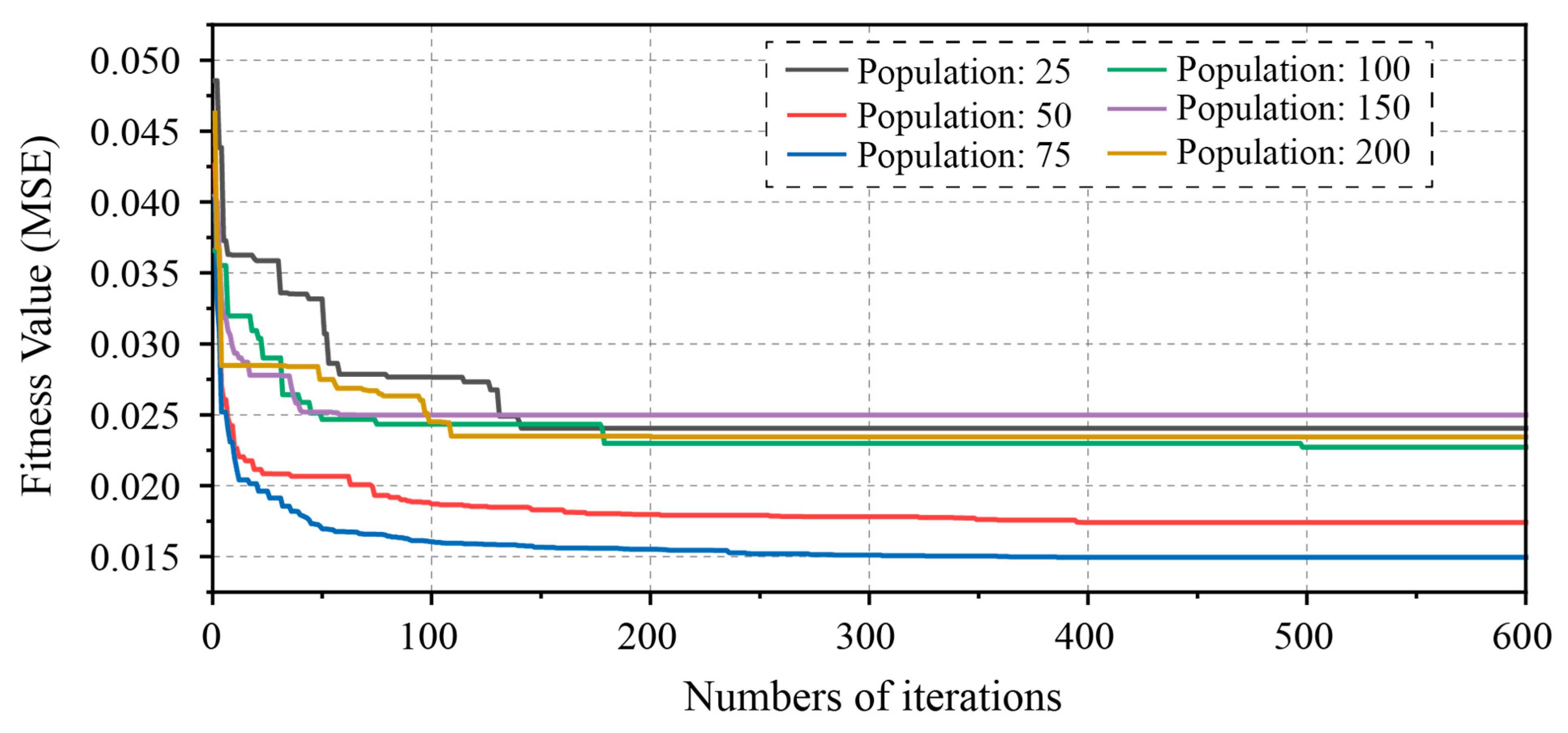

5.3. KELM-GWO

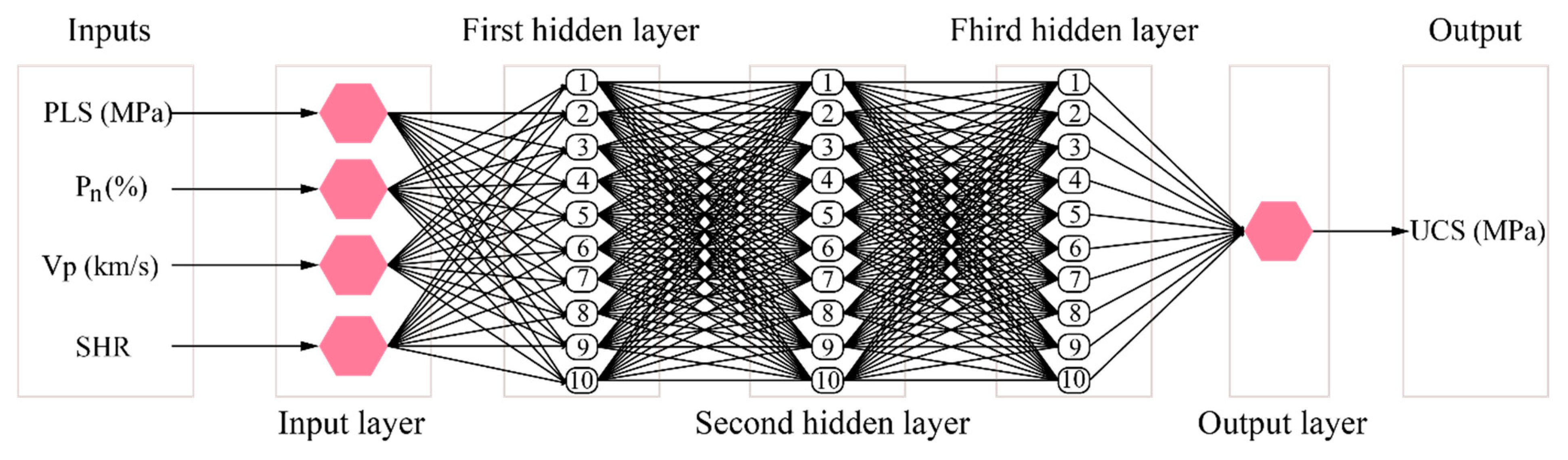

5.4. DELM

5.5. BPNN

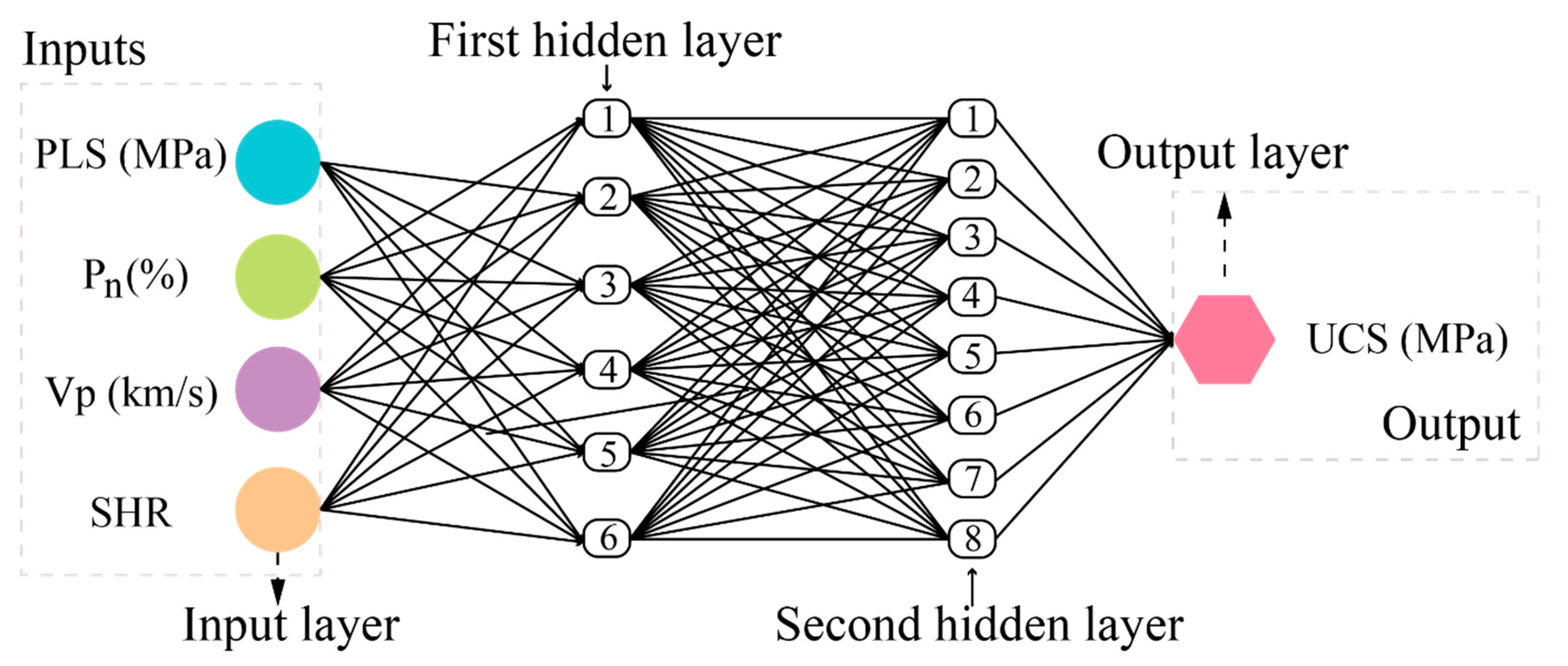
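A back-propagation neural network propagates the output error backwards through the layers and takes a gradient step on every weight. The sketch assumes tanh hidden layers, a linear output neuron, squared-error loss, and plain per-sample gradient descent. The default sizes (4, 6, 8, 1) echo the two-hidden-layer configuration with 6 and 8 neurons that gives the BPNN results, with four inputs for SH, Pn, Vp, and PLS. The learning rate, initialization, and schedule are illustrative, not the authors' settings, and inputs are assumed to be scaled to roughly [−1, 1].

```python
import math
import random


class BPNN:
    """Fully connected regression network: tanh hidden layers, linear output, backpropagation."""

    def __init__(self, sizes=(4, 6, 8, 1), lr=0.05, seed=0):
        rng = random.Random(seed)
        self.lr = lr  # learning rate (assumed value)
        # weights[l][j][i] connects neuron i of layer l to neuron j of layer l + 1
        self.weights = [
            [[rng.gauss(0.0, 1.0 / math.sqrt(n_in)) for _ in range(n_in)] for _ in range(n_out)]
            for n_in, n_out in zip(sizes[:-1], sizes[1:])
        ]
        self.biases = [[0.0] * n_out for n_out in sizes[1:]]

    def _forward(self, x):
        """Return the activations of every layer; the output layer stays linear."""
        acts = [list(x)]
        last = len(self.weights) - 1
        for l, (w, b) in enumerate(zip(self.weights, self.biases)):
            z = [sum(wi * ai for wi, ai in zip(row, acts[-1])) + bj for row, bj in zip(w, b)]
            acts.append(z if l == last else [math.tanh(v) for v in z])
        return acts

    def predict(self, xs):
        return [self._forward(x)[-1][0] for x in xs]

    def train_epoch(self, xs, ts):
        """One pass of per-sample gradient descent on E = 0.5 * (y - t)^2.

        Returns the mean squared error observed during the pass (before each update).
        """
        sse = 0.0
        for x, t in zip(xs, ts):
            acts = self._forward(x)
            delta = [acts[-1][0] - t]  # dE/dz of the linear output neuron
            sse += delta[0] ** 2
            for l in range(len(self.weights) - 1, -1, -1):
                w, a_in = self.weights[l], acts[l]
                if l > 0:
                    # dE/dz one layer down, using the weights before they change
                    prev = [sum(w[j][i] * delta[j] for j in range(len(w))) * (1.0 - a_in[i] ** 2)
                            for i in range(len(a_in))]
                for j, row in enumerate(w):
                    for i in range(len(a_in)):
                        row[i] -= self.lr * delta[j] * a_in[i]
                    self.biases[l][j] -= self.lr * delta[j]
                if l > 0:
                    delta = prev
        return sse / len(xs)
```

Unlike ELM and KELM, every weight here is learned iteratively, so results depend on the initialization, learning rate, and number of epochs as well as on the layer sizes.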

5.6. Empirical Model

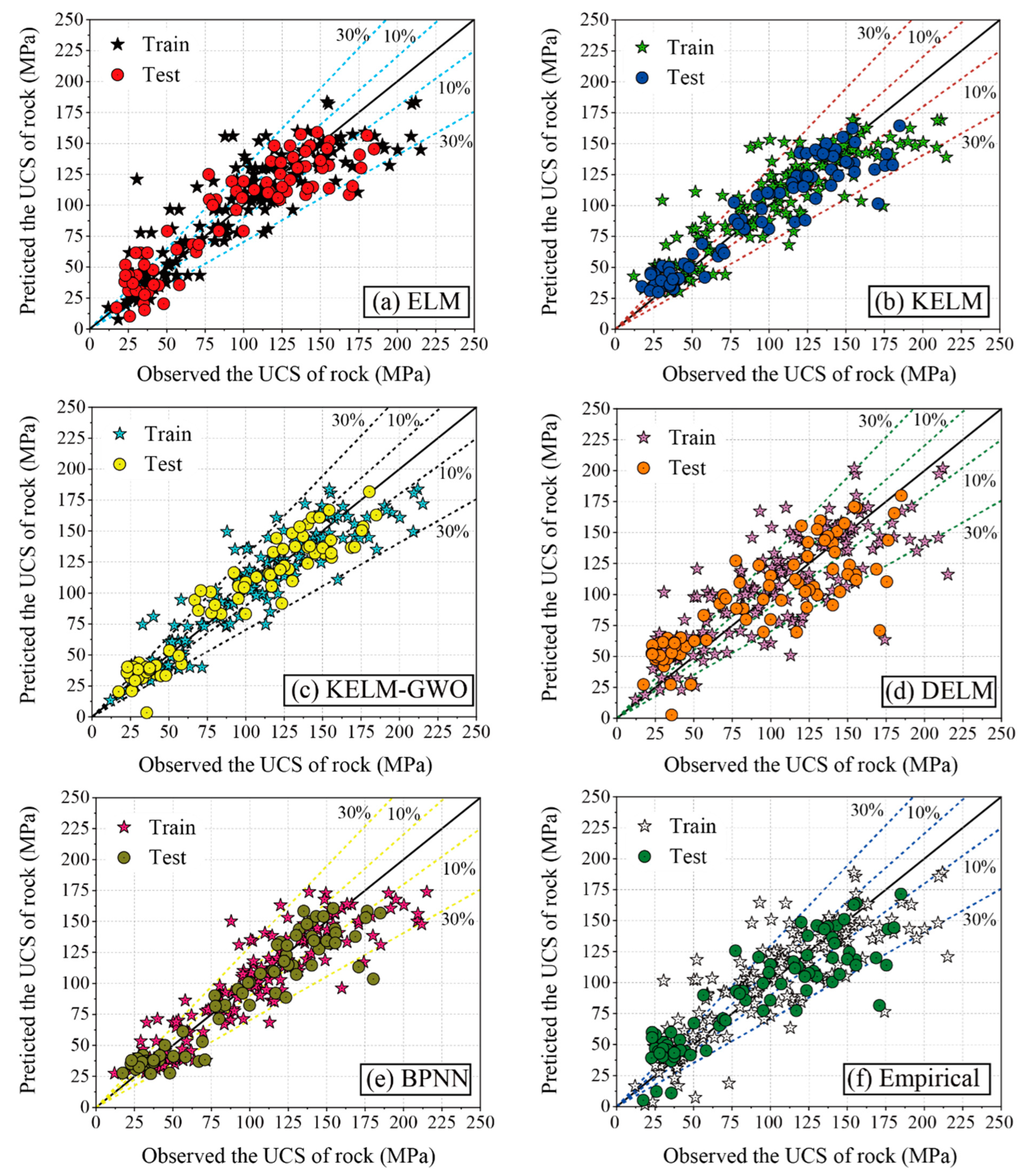

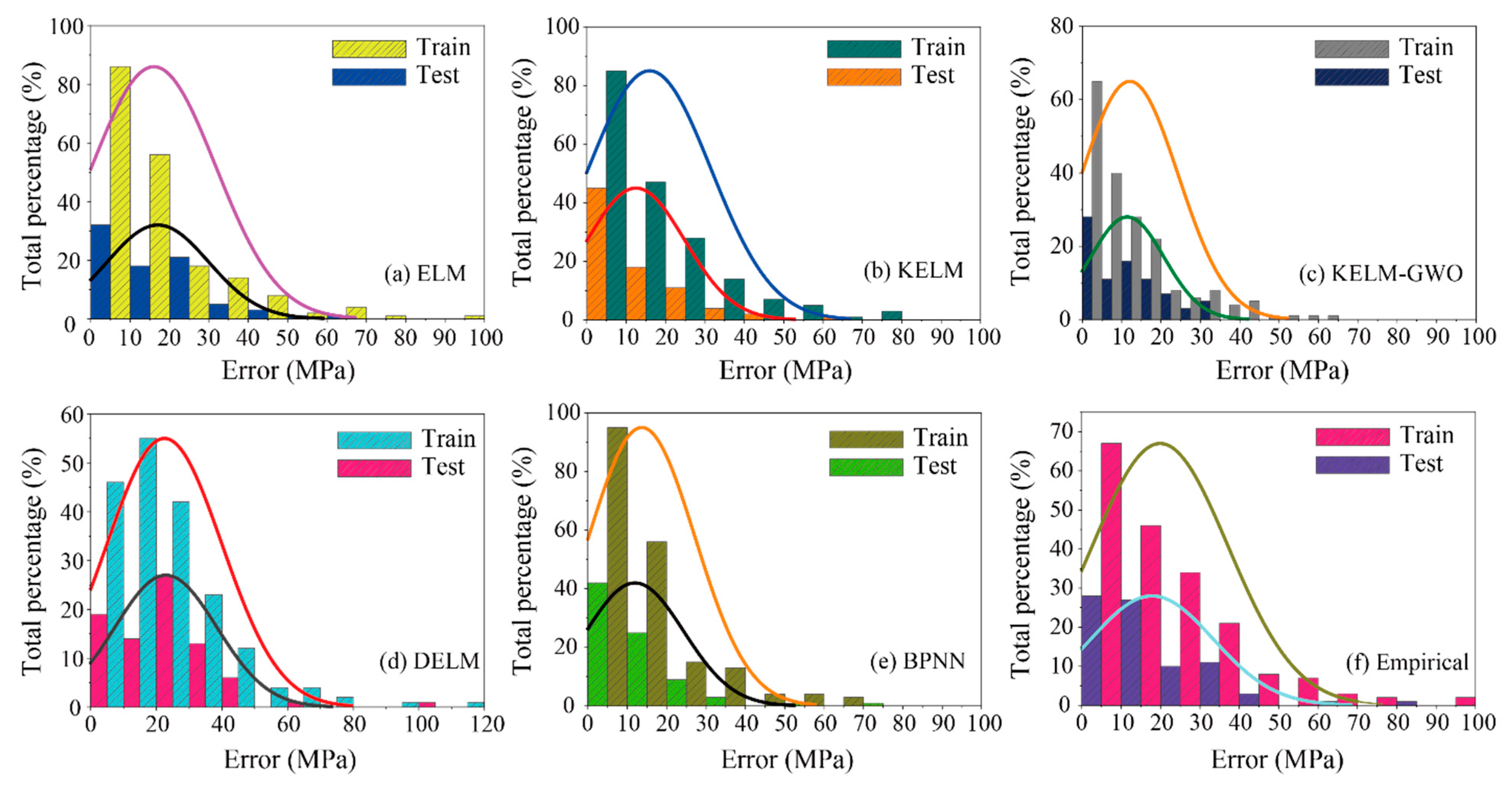

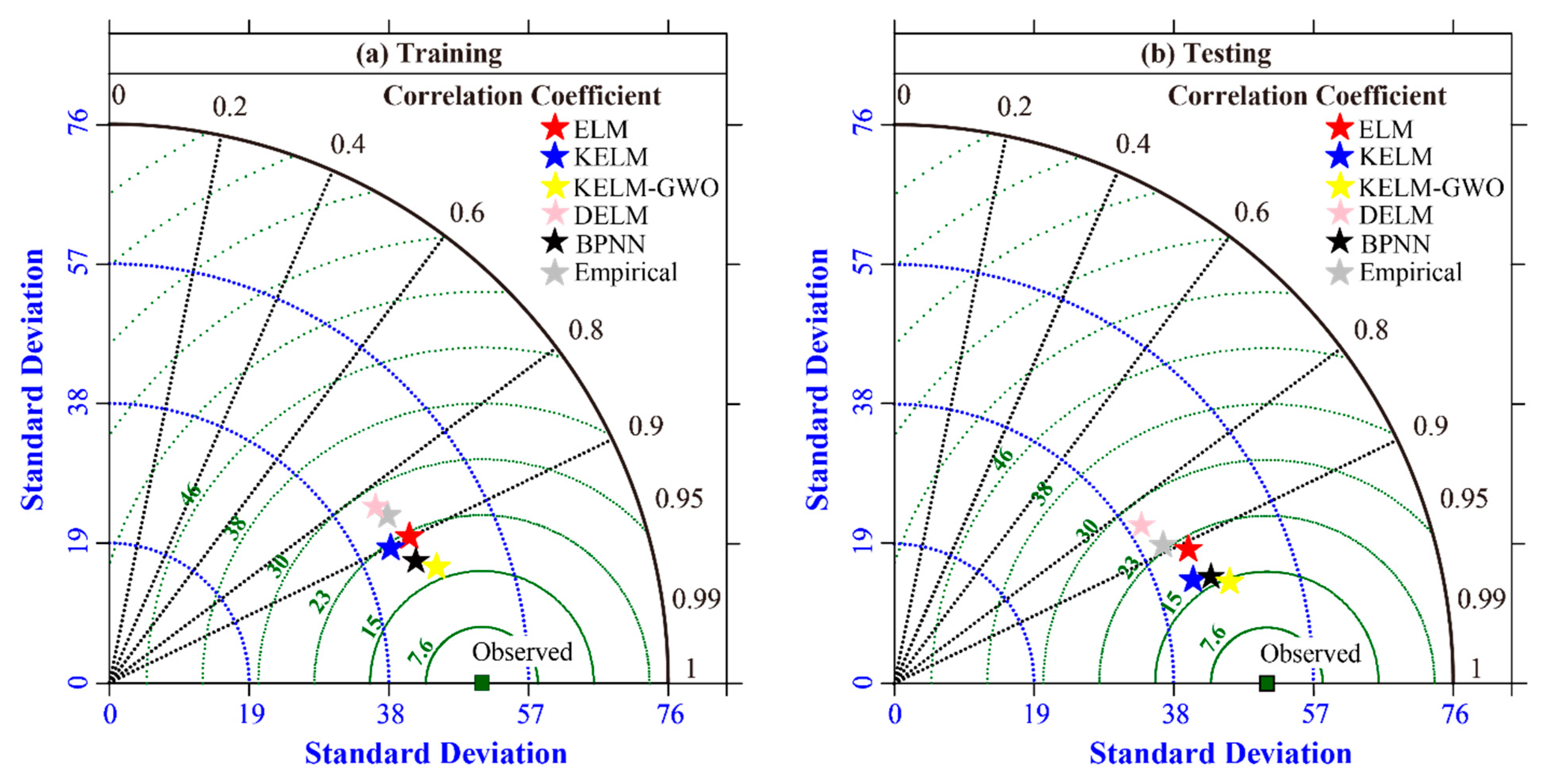

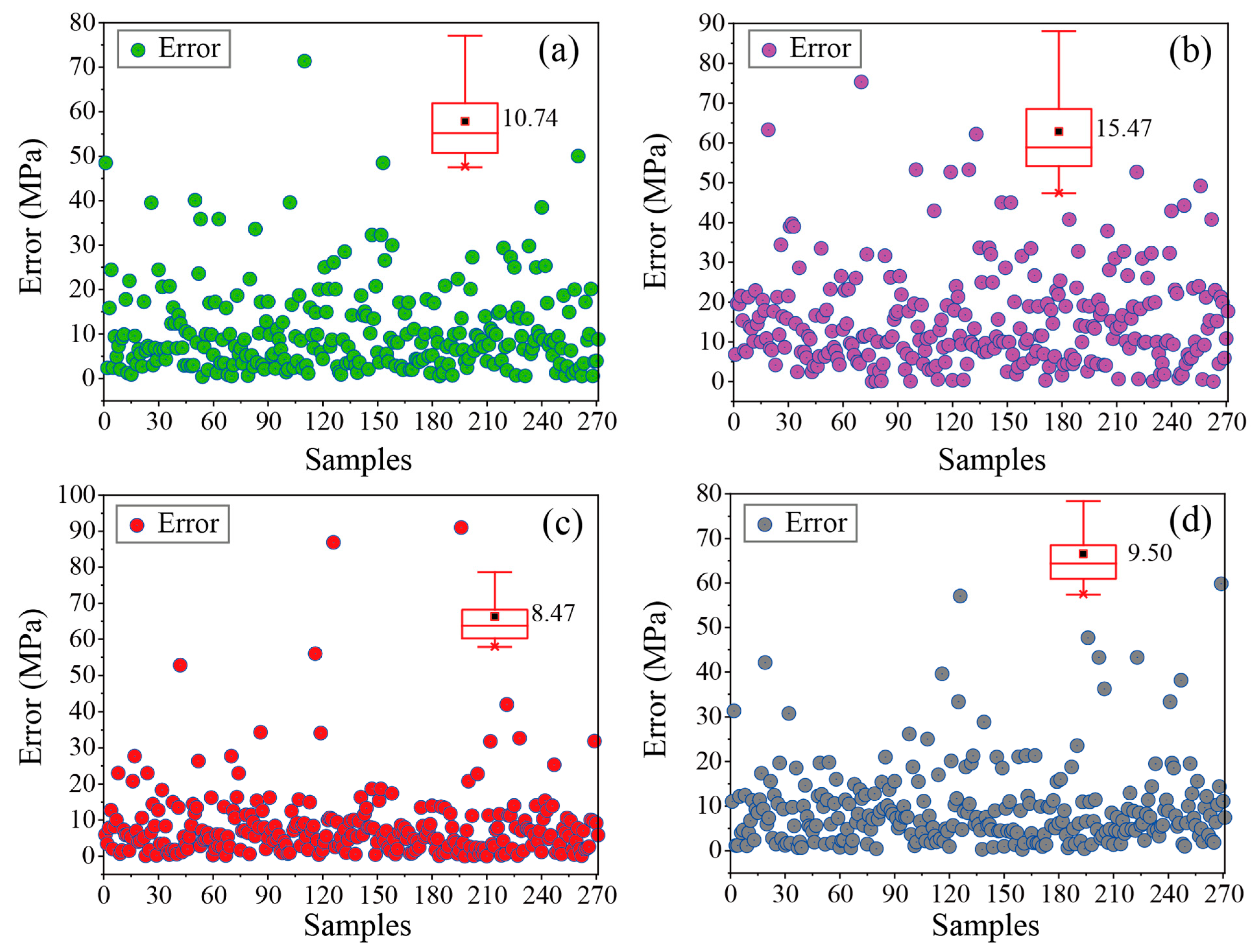
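Most of the empirical equations compiled for comparison are multiple linear regressions of UCS on index properties. Assuming an empirical model of the same linear form in this study's four inputs (SH, Pn, Vp, PLS), its coefficients can be estimated by ordinary least squares. The sketch solves the normal equations (XᵀX)b = Xᵀy with an intercept column. The helper `synthetic_dataset`, its value ranges, and the coefficients used to generate the check data are made up and carry no physical meaning.

```python
import random


def solve(a, b):
    """Solve a @ x = b for square a by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_mlr(features, target):
    """Return [b0, b1, ..., bk] minimizing the squared error of b0 + sum(bi * xi)."""
    rows = [[1.0] + list(f) for f in features]  # prepend the intercept column
    p = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * y for r, y in zip(rows, target)) for i in range(p)]
    return solve(xtx, xty)  # normal equations (X^T X) b = X^T y


def predict_mlr(coef, features):
    return [coef[0] + sum(c * v for c, v in zip(coef[1:], f)) for f in features]


def synthetic_dataset(coef, n=50, seed=42):
    """Hypothetical noise-free (SH, Pn, Vp, PLS) samples; ranges are made up."""
    rng = random.Random(seed)
    feats = [[rng.uniform(20, 60), rng.uniform(0.5, 15), rng.uniform(2.0, 6.5),
              rng.uniform(1.0, 10.0)] for _ in range(n)]
    return feats, predict_mlr(coef, feats)
```

With noise-free synthetic data the fitted coefficients should reproduce the generating ones; with real measurements the residuals are what the performance indices summarize.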

6. Results and Discussion

7. Conclusions and Summary

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Bieniawski, Z.T. Estimating the strength of rock materials. J. S. Afr. Inst. Min. Metall. 1974, 74, 312–320.

2. Gokceoglu, C.; Zorlu, K. A fuzzy model to predict the uniaxial compressive strength and the modulus of elasticity of a problematic rock. Eng. Appl. Artif. Intell. 2004, 17, 61–72.

3. Luo, Y. Influence of water on mechanical behavior of surrounding rock in hard-rock tunnels: An experimental simulation. Eng. Geol. 2020, 277, 105816.

4. Elmo, D.; Donati, D.; Stead, D. Challenges in the characterisation of intact rock bridges in rock slopes. Eng. Geol. 2018, 245, 81–96.

5. Ghasemi, E.; Kalhori, H.; Bagherpour, R.; Yagiz, S. Model tree approach for predicting uniaxial compressive strength and Young’s modulus of carbonate rocks. Bull. Eng. Geol. Environ. 2018, 77, 331–343.

6. Mahmoodzadeh, A.; Mohammadi, M.; Daraei, A.; Faraj, R.H.; Omer, R.; Sherwani, A.F.H. Decision-making in tunneling using artificial intelligence tools. Tunn. Undergr. Space Technol. 2020, 103, 103514.

7. Armaghani, D.J.; Safari, V.; Fahimifar, A.; Amin, M.F.M.; Monjezi, M.; Mohammadi, M.A. Uniaxial compressive strength prediction through a new technique based on gene expression programming. Neural Comput. Appl. 2018, 30, 3523–3532.

8. Ying, J.; Han, Z.; Shen, L.; Li, W. Influence of Parent Concrete Properties on Compressive Strength and Chloride Diffusion Coefficient of Concrete with Strengthened Recycled Aggregates. Materials 2020, 13, 4631.

9. Parsajoo, M.; Armaghani, D.J.; Mohammed, A.S.; Khari, M.; Jahandari, S. Tensile strength prediction of rock material using non-destructive tests: A comparative intelligent study. Transp. Geotech. 2021, 31, 100652.

10. Koopialipoor, M.; Asteris, P.G.; Mohammed, A.S.; Alexakis, D.E.; Mamou, A.; Armaghani, D.J. Introducing stacking machine learning approaches for the prediction of rock deformation. Transp. Geotech. 2022, 34, 100756.

11. Kahraman, S. Evaluation of simple methods for assessing the uniaxial compressive strength of rock. Int. J. Rock Mech. Min. Sci. 2001, 38, 981–994.

12. Mahdiabadi, N.; Khanlari, G. Prediction of Uniaxial Compressive Strength and Modulus of Elasticity in Calcareous Mudstones Using Neural Networks, Fuzzy Systems, and Regression Analysis. Period. Polytech. Civ. Eng. 2019, 63, 104–114.

13. Baykasoğlu, A.; Güllü, H.; Canakci, H.; Özbakır, L. Prediction of compressive and tensile strength of limestone via genetic programming. Expert Syst. Appl. 2008, 35, 111–123.

14. Yılmaz, I.; Sendır, H. Correlation of Schmidt hardness with unconfined compressive strength and Young’s modulus in gypsum from Sivas (Turkey). Eng. Geol. 2002, 66, 211–219.

15. Çobanoğlu, İ.; Çelik, S.B. Estimation of uniaxial compressive strength from point load strength, Schmidt hardness and P-wave velocity. Bull. Eng. Geol. Environ. 2008, 67, 491–498.

16. Sharma, P.K.; Singh, T.N. A correlation between P-wave velocity, impact strength index, slake durability index and uniaxial compressive strength. Bull. Eng. Geol. Environ. 2008, 67, 17–22.

17. Khandelwal, M.; Singh, T. Correlating static properties of coal measures rocks with P-wave velocity. Int. J. Coal Geol. 2009, 79, 55–60.

18. Kahraman, S. The determination of uniaxial compressive strength from point load strength for pyroclastic rocks. Eng. Geol. 2014, 170, 33–42.

19. Mohamad, E.T.; Armaghani, D.J.; Momeni, E.; Abad, S.V.A.N.K. Prediction of the unconfined compressive strength of soft rocks: A PSO-based ANN approach. Bull. Eng. Geol. Environ. 2015, 74, 745–757.

20. Dehghan, S.; Sattari, G.; Chelgani, S.C.; Aliabadi, M. Prediction of uniaxial compressive strength and modulus of elasticity for Travertine samples using regression and artificial neural networks. Min. Sci. Technol. 2010, 20, 41–46.

21. Beiki, M.; Majdi, A.; Givshad, A.D. Application of genetic programming to predict the uniaxial compressive strength and elastic modulus of carbonate rocks. Int. J. Rock Mech. Min. Sci. 2013, 63, 159–169.

22. Grima, M.A.; Babuška, R. Fuzzy model for the prediction of unconfined compressive strength of rock samples. Int. J. Rock Mech. Min. Sci. 1999, 36, 339–349.

23. Tiryaki, B. Predicting intact rock strength for mechanical excavation using multivariate statistics, artificial neural networks, and regression trees. Eng. Geol. 2008, 99, 51–60.

24. Zorlu, K.; Gokceoglu, C.; Ocakoglu, F.; Nefeslioglu, H.; Acikalin, S. Prediction of uniaxial compressive strength of sandstones using petrography-based models. Eng. Geol. 2008, 96, 141–158.

25. Yılmaz, I.; Yuksek, A.G. An Example of Artificial Neural Network (ANN) Application for Indirect Estimation of Rock Parameters. Rock Mech. Rock Eng. 2008, 41, 781–795.

26. Sarkar, K.; Tiwary, A.; Singh, T.N. Estimation of strength parameters of rock using artificial neural networks. Bull. Eng. Geol. Environ. 2010, 69, 599–606.

27. Sonmez, H.; Tuncay, E.; Gokceoglu, C. Models to predict the uniaxial compressive strength and the modulus of elasticity for Ankara Agglomerate. Int. J. Rock Mech. Min. Sci. 2004, 41, 717–729.

28. Gokceoglu, C.; Sonmez, H.; Zorlu, K. Estimating the uniaxial compressive strength of some clay-bearing rocks selected from Turkey by nonlinear multivariable regression and rule-based fuzzy models. Expert Syst. 2009, 26, 176–190.

29. Mishra, D.; Basu, A. Estimation of uniaxial compressive strength of rock materials by index tests using regression analysis and fuzzy inference system. Eng. Geol. 2013, 160, 54–68.

30. Rezaei, M.; Majdi, A.; Monjezi, M. An intelligent approach to predict unconfined compressive strength of rock surrounding access tunnels in longwall coal mining. Neural Comput. Appl. 2014, 24, 233–241.

31. Ceryan, N. Application of support vector machines and relevance vector machines in predicting uniaxial compressive strength of volcanic rocks. J. Afr. Earth Sci. 2014, 100, 634–644.

32. Barzegar, R.; Sattarpour, M.; Nikudel, M.R.; Moghaddam, A.A. Comparative evaluation of artificial intelligence models for prediction of uniaxial compressive strength of travertine rocks, Case study: Azarshahr area, NW Iran. Model. Earth Syst. Environ. 2016, 2, 76.

33. Çelik, S.B. Prediction of uniaxial compressive strength of carbonate rocks from nondestructive tests using multivariate regression and LS-SVM methods. Arab. J. Geosci. 2019, 12, 193.

34. Mahmoodzadeh, A.; Mohammadi, M.; Ibrahim, H.H.; Abdulhamid, S.N.; Salim, S.G.; Ali, H.F.H.; Majeed, M.K. Artificial intelligence forecasting models of uniaxial compressive strength. Transp. Geotech. 2021, 27, 100499.

35. Matin, S.; Farahzadi, L.; Makaremi, S.; Chelgani, S.C.; Sattari, G. Variable selection and prediction of uniaxial compressive strength and modulus of elasticity by random forest. Appl. Soft Comput. 2018, 70, 980–987.

36. Barzegar, R.; Sattarpour, M.; Deo, R.; Fijani, E.; Adamowski, J. An ensemble tree-based machine learning model for predicting the uniaxial compressive strength of travertine rocks. Neural Comput. Appl. 2020, 32, 9065–9080.

37. Yilmaz, I.; Yuksek, G. Prediction of the strength and elasticity modulus of gypsum using multiple regression, ANN, and ANFIS models. Int. J. Rock Mech. Min. Sci. 2009, 46, 803–810.

38. Yesiloglu-Gultekin, N.; Sezer, E.A.; Gokceoglu, C.; Bayhan, H. An application of adaptive neuro fuzzy inference system for estimating the uniaxial compressive strength of certain granitic rocks from their mineral contents. Expert Syst. Appl. 2013, 40, 921–928.

39. Chentout, M.; Alloul, B.; Rezouk, A.; Belhai, D. Experimental study to evaluate the effect of travertine structure on the physical and mechanical properties of the material. Arab. J. Geosci. 2015, 8, 8975–8985.

40. Asheghi, R.; Shahri, A.A.; Zak, M.K. Prediction of Uniaxial Compressive Strength of Different Quarried Rocks Using Metaheuristic Algorithm. Arab. J. Sci. Eng. 2019, 44, 8645–8659.

41. Çanakcı, H.; Baykasoğlu, A.; Güllü, H. Prediction of compressive and tensile strength of Gaziantep basalts via neural networks and gene expression programming. Neural Comput. Appl. 2009, 18, 1031–1041.

42. Ozbek, A.; Unsal, M.; Dikec, A. Estimating uniaxial compressive strength of rocks using genetic expression programming. J. Rock Mech. Geotech. Eng. 2013, 5, 325–329.

43. Manouchehrian, A.; Sharifzadeh, M.; Moghadam, R.H.; Nouri, T. Selection of regression models for predicting strength and deformability properties of rocks using GA. Int. J. Min. Sci. Technol. 2013, 23, 495–501.

44. Liu, Z.; Shao, J.; Xu, W.; Wu, Q. Indirect estimation of unconfined compressive strength of carbonate rocks using extreme learning machine. Acta Geotech. 2015, 10, 651–663.

45. Zeng, J.; Roy, B.; Kumar, D.; Mohammed, A.S.; Armaghani, D.J.; Zhou, J.; Mohamad, E.T. Proposing several hybrid PSO-extreme learning machine techniques to predict TBM performance. Eng. Comput. 2021, 1–17.

46. Lu, X.; Hasanipanah, M.; Brindhadevi, K.; Amnieh, H.B.; Khalafi, S. ORELM: A Novel Machine Learning Approach for Prediction of Flyrock in Mine Blasting. Nonrenew. Resour. 2020, 29, 641–654.

47. Murlidhar, B.R.; Kumar, D.; Armaghani, D.J.; Mohamad, E.T.; Roy, B.; Pham, B.T. A Novel Intelligent ELM-BBO Technique for Predicting Distance of Mine Blasting-Induced Flyrock. Nonrenew. Resour. 2020, 29, 4103–4120.

48. Li, C.; Zhou, J.; Khandelwal, M.; Zhang, X.; Monjezi, M.; Qiu, Y. Six Novel Hybrid Extreme Learning Machine–Swarm Intelligence Optimization (ELM–SIO) Models for Predicting Backbreak in Open-Pit Blasting. Nonrenew. Resour. 2022, 1–23.

49. Armaghani, D.J.; Kumar, D.; Samui, P.; Hasanipanah, M.; Roy, B. A novel approach for forecasting of ground vibrations resulting from blasting: Modified particle swarm optimization coupled extreme learning machine. Eng. Comput. 2021, 37, 3221–3235.

50. Bardhan, A.; Kardani, N.; GuhaRay, A.; Burman, A.; Samui, P.; Zhang, Y. Hybrid ensemble soft computing approach for predicting penetration rate of tunnel boring machine in a rock environment. J. Rock Mech. Geotech. Eng. 2021, 13, 1398–1412.

51. Huang, G.-B.; Zhu, Q.-Y.; Siew, C.-K. Extreme learning machine: Theory and applications. Neurocomputing 2006, 70, 489–501.

52. Huang, G.-B.; Zhou, H.; Ding, X.; Zhang, R. Extreme Learning Machine for Regression and Multiclass Classification. IEEE Trans. Syst. Man Cybern. Part B Cybern. 2011, 42, 513–529.

53. Wang, X.; Tang, Z.; Tamura, H.; Ishii, M.; Sun, W. An improved backpropagation algorithm to avoid the local minima problem. Neurocomputing 2004, 56, 455–460.

54. Moayedi, H.; Armaghani, D.J. Optimizing an ANN model with ICA for estimating bearing capacity of driven pile in cohesionless soil. Eng. Comput. 2018, 34, 347–356.

55. Ghaleini, E.N.; Koopialipoor, M.; Momenzadeh, M.; Sarafraz, M.E.; Mohamad, E.T.; Gordan, B. A combination of artificial bee colony and neural network for approximating the safety factor of retaining walls. Eng. Comput. 2019, 35, 647–658.

56. Mohamad, E.T.; Armaghani, D.J.; Momeni, E.; Yazdavar, A.H.; Ebrahimi, M. Rock strength estimation: A PSO-based BP approach. Neural Comput. Appl. 2018, 30, 1635–1646.

57. Momeni, E.; Armaghani, D.J.; Hajihassani, M.; Amin, M.F.M. Prediction of uniaxial compressive strength of rock samples using hybrid particle swarm optimization-based artificial neural networks. Measurement 2015, 60, 50–63.

58. Jing, H.; Rad, H.N.; Hasanipanah, M.; Armaghani, D.J.; Qasem, S.N. Design and implementation of a new tuned hybrid intelligent model to predict the uniaxial compressive strength of the rock using SFS-ANFIS. Eng. Comput. 2021, 37, 2717–2734.

59. Abdi, Y.; Momeni, E.; Khabir, R.R. A Reliable PSO-based ANN Approach for Predicting Unconfined Compressive Strength of Sandstones. Open Constr. Build. Technol. J. 2020, 14, 237–249.

60. Al-Bared, M.A.M.; Mustaffa, Z.; Armaghani, D.J.; Marto, A.; Yunus, N.Z.M.; Hasanipanah, M. Application of hybrid intelligent systems in predicting the unconfined compressive strength of clay material mixed with recycled additive. Transp. Geotech. 2021, 30, 100627.

61. Cao, J.; Gao, J.; Rad, H.N.; Mohammed, A.S.; Hasanipanah, M.; Zhou, J. A novel systematic and evolved approach based on XGBoost-firefly algorithm to predict Young’s modulus and unconfined compressive strength of rock. Eng. Comput. 2021, 1–17.

62. Zhou, J.; Huang, S.; Wang, M.; Qiu, Y. Performance evaluation of hybrid GA–SVM and GWO–SVM models to predict earthquake-induced liquefaction potential of soil: A multi-dataset investigation. Eng. Comput. 2021, 1–19.

63. Zhou, J.; Qiu, Y.; Zhu, S.; Armaghani, D.J.; Li, C.; Nguyen, H.; Yagiz, S. Optimization of support vector machine through the use of metaheuristic algorithms in forecasting TBM advance rate. Eng. Appl. Artif. Intell. 2021, 97, 104015.

64. Zhou, J.; Qiu, Y.; Armaghani, D.J.; Zhang, W.; Li, C.; Zhu, S.; Tarinejad, R. Predicting TBM penetration rate in hard rock condition: A comparative study among six XGB-based metaheuristic techniques. Geosci. Front. 2021, 12, 101091.

65. Meulenkamp, F.; Grima, M. Application of neural networks for the prediction of the unconfined compressive strength (UCS) from Equotip hardness. Int. J. Rock Mech. Min. Sci. 1999, 36, 29–39.

66. Karakus, M.; Tutmez, B. Fuzzy and Multiple Regression Modelling for Evaluation of Intact Rock Strength Based on Point Load, Schmidt Hammer and Sonic Velocity. Rock Mech. Rock Eng. 2006, 39, 45–57.

67. Altindag, R. Correlation between P-wave velocity and some mechanical properties for sedimentary rocks. J. S. Afr. Inst. Min. Metall. 2012, 112, 229–237.

68. Madhubabu, N.; Singh, P.; Kainthola, A.; Mahanta, B.; Tripathy, A.; Singh, T. Prediction of compressive strength and elastic modulus of carbonate rocks. Measurement 2016, 88, 202–213.

69. Armaghani, D.J.; Mohamad, E.T.; Momeni, E.; Monjezi, M.; Narayanasamy, M.S. Prediction of the strength and elasticity modulus of granite through an expert artificial neural network. Arab. J. Geosci. 2016, 9, 48.

70. Heidari, M.; Mohseni, H.; Jalali, S.H. Prediction of Uniaxial Compressive Strength of Some Sedimentary Rocks by Fuzzy and Regression Models. Geotech. Geol. Eng. 2018, 36, 401–412.

71. Rezaei, M.; Asadizadeh, M. Predicting Unconfined Compressive Strength of Intact Rock Using New Hybrid Intelligent Models. J. Min. Environ. 2020, 11, 231–246.

72. Moosavi, S.A.; Mohammadi, M. Development of a new empirical model and adaptive neuro-fuzzy inference systems in predicting unconfined compressive strength of weathered granite grade III. Bull. Eng. Geol. Environ. 2021, 80, 2399–2413.

73. Wang, Z.; Li, W.; Chen, J. Application of Various Nonlinear Models to Predict the Uniaxial Compressive Strength of Weakly Cemented Jurassic Rocks. Nonrenew. Resour. 2022, 31, 371–384.

74. Wang, M.; Chen, H.; Li, H.; Cai, Z.; Zhao, X.; Tong, C.; Li, J.; Xu, X. Grey wolf optimization evolving kernel extreme learning machine: Application to bankruptcy prediction. Eng. Appl. Artif. Intell. 2017, 63, 54–68.

75. Huang, G.-B.; Siew, C.-K. Extreme learning machine: RBF network case. IEEE 2004, 2, 1029–1036.

76. Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

77. Shariati, M.; Mafipour, M.S.; Ghahremani, B.; Azarhomayun, F.; Ahmadi, M.; Trung, N.T.; Shariati, A. A novel hybrid extreme learning machine–grey wolf optimizer (ELM-GWO) model to predict compressive strength of concrete with partial replacements for cement. Eng. Comput. 2020, 38, 757–779.

78. Muro, C.; Escobedo, R.; Spector, L.; Coppinger, R. Wolf-pack (Canis lupus) hunting strategies emerge from simple rules in computational simulations. Behav. Process. 2011, 88, 192–197.

79. Zhu, L.; Zhang, C.; Zhang, C.; Zhou, X.; Wang, J.; Wang, X. Application of Multiboost-KELM algorithm to alleviate the collinearity of log curves for evaluating the abundance of organic matter in marine mud shale reservoirs: A case study in Sichuan Basin, China. Acta Geophys. 2018, 66, 983–1000.

80. Li, C.; Zhou, J.; Armaghani, D.J.; Li, X. Stability analysis of underground mine hard rock pillars via combination of finite difference methods, neural networks, and Monte Carlo simulation techniques. Undergr. Space 2021, 6, 379–395.

81. Li, C.; Zhou, J.; Armaghani, D.J.; Cao, W.; Yagiz, S. Stochastic assessment of hard rock pillar stability based on the geological strength index system. Geomech. Geophys. Geo-Energy Geo-Resour. 2021, 7, 47.

82. Sun, Y.; Zhang, J.; Li, G.; Wang, Y.; Sun, J.; Jiang, C. Optimized neural network using beetle antennae search for predicting the unconfined compressive strength of jet grouting coalcretes. Int. J. Numer. Anal. Methods Geomech. 2019, 43, 801–813.

83. Liu, B.; Wang, R.; Zhao, G.; Guo, X.; Wang, Y.; Li, J.; Wang, S. Prediction of rock mass parameters in the TBM tunnel based on BP neural network integrated simulated annealing algorithm. Tunn. Undergr. Space Technol. 2020, 95, 103103.

84. Zhang, J.; Huang, Y.; Wang, Y.; Ma, G. Multi-objective optimization of concrete mixture proportions using machine learning and metaheuristic algorithms. Constr. Build. Mater. 2020, 253, 119208.

85. Abbas, S.; Khan, M.A.; Falcon-Morales, L.E.; Rehman, A.; Saeed, Y.; Zareei, M.; Zeb, A.; Mohamed, E.M. Modeling, Simulation and Optimization of Power Plant Energy Sustainability for IoT Enabled Smart Cities Empowered with Deep Extreme Learning Machine. IEEE Access 2020, 8, 39982–39997.

86. Zhang, W.; Ching, J.; Goh, A.T.C.; Leung, A.Y. Big data and machine learning in geoscience and geoengineering: Introduction. Geosci. Front. 2021, 12, 327–329.

87. Yong, W.; Zhou, J.; Armaghani, D.J.; Tahir, M.M.; Tarinejad, R.; Pham, B.T.; Van Huynh, V. A new hybrid simulated annealing-based genetic programming technique to predict the ultimate bearing capacity of piles. Eng. Comput. 2021, 37, 2111–2127.

88. Jamei, M.; Hasanipanah, M.; Karbasi, M.; Ahmadianfar, I.; Taherifar, S. Prediction of flyrock induced by mine blasting using a novel kernel-based extreme learning machine. J. Rock Mech. Geotech. Eng. 2021, 13, 1438–1451.

89. Xie, C.; Nguyen, H.; Bui, X.-N.; Choi, Y.; Zhou, J.; Nguyen-Trang, T. Predicting rock size distribution in mine blasting using various novel soft computing models based on meta-heuristics and machine learning algorithms. Geosci. Front. 2021, 12, 101108.

90. Xie, C.; Nguyen, H.; Bui, X.-N.; Nguyen, V.-T.; Zhou, J. Predicting roof displacement of roadways in underground coal mines using adaptive neuro-fuzzy inference system optimized by various physics-based optimization algorithms. J. Rock Mech. Geotech. Eng. 2021, 13, 1452–1465.

91. Armaghani, D.J.; Harandizadeh, H.; Momeni, E.; Maizir, H.; Zhou, J. An optimized system of GMDH-ANFIS predictive model by ICA for estimating pile bearing capacity. Artif. Intell. Rev. 2021, 55, 2313–2350.

92. Zhou, J.; Li, E.; Yang, S.; Wang, M.; Shi, X.; Yao, S.; Mitri, H.S. Slope stability prediction for circular mode failure using gradient boosting machine approach based on an updated database of case histories. Saf. Sci. 2019, 118, 505–518.

93. Zhou, J.; Aghili, N.; Ghaleini, E.N.; Bui, D.T.; Tahir, M.M.; Koopialipoor, M. A Monte Carlo simulation approach for effective assessment of flyrock based on intelligent system of neural network. Eng. Comput. 2020, 36, 713–723.

94. Zhou, J.; Koopialipoor, M.; Murlidhar, B.R.; Fatemi, S.A.; Tahir, M.M.; Armaghani, D.J.; Li, C. Use of Intelligent Methods to Design Effective Pattern Parameters of Mine Blasting to Minimize Flyrock Distance. Nonrenew. Resour. 2020, 29, 625–639.

95. MolaAbasi, H.; Khajeh, A.; Chenari, R.J.; Payan, M. A framework to predict the load-settlement behavior of shallow foundations in a range of soils from silty clays to sands using CPT records. Soft Comput. 2022, 26, 3545–3560.

96. Baliarsingh, S.K.; Vipsita, S.; Muhammad, K.; Dash, B.; Bakshi, S. Analysis of high-dimensional genomic data employing a novel bio-inspired algorithm. Appl. Soft Comput. 2019, 77, 520–532.

97. Armaghani, D.J.; Hajihassani, M.; Sohaei, H.; Mohamad, E.T.; Marto, A.; Motaghedi, H.; Moghaddam, M.R. Neuro-fuzzy technique to predict air-overpressure induced by blasting. Arab. J. Geosci. 2015, 8, 10937–10950.

98. Jiang, J.-L.; Su, X.; Zhang, H.; Zhang, X.-H.; Yuan, Y.-J. A Novel Approach to Active Compounds Identification Based on Support Vector Regression Model and Mean Impact Value. Chem. Biol. Drug Des. 2013, 81, 650–657.

99. Zeng, Y.R.; Zeng, Y.; Choi, B.; Wang, L. Multifactor-influenced energy consumption forecasting using enhanced back-propagation neural network. Energy 2017, 127, 381–396.

100. Gu, Y.; Zhang, Z.; Zhang, D.; Zhu, Y.; Bao, Z.; Zhang, D. Complex lithology prediction using mean impact value, particle swarm optimization, and probabilistic neural network techniques. Acta Geophys. 2020, 68, 1727–1752.

101. Rabbani, E.; Sharif, F.; Salooki, M.K.; Moradzadeh, A. Application of neural network technique for prediction of uniaxial compressive strength using reservoir formation properties. Int. J. Rock Mech. Min. Sci. 2012, 56, 100–111.

102. Torabi-Kaveh, M.; Naseri, F.; Saneie, S.; Sarshari, B. Application of artificial neural networks and multivariate statistics to predict UCS and E using physical properties of Asmari limestones. Arab. J. Geosci. 2015, 8, 2889–2897.

| References | Equation | Rock Type | Performance |
|---|---|---|---|
| [2] | UCS = 0.0065Vp + 1.468BPI + 4.094PLS + 2.418TS − 225 | WE, FR, THR | RMSE = 15.62 |
| [12] | UCS = −6.479 + 3.425BPI + 0.639CPI + 7.889PLS | MU | R² = 0.87 |
| [20] | UCS = −595.303 − 442.363Vp + 45.338Vp^2 − 6.1Pn + 0.52Pn^2 + 28.314(PLS − 4.06PLS)^2 + 115.822SH − 2.007SH^2 | TR | R² = 0.64 |
| [21] | UCS = 0.386EH + 39.268r − 1.307Pn − 246.804 | SA, LI, DO, GR, GRA | RMSE = 2.91 |
| [23] | UCS = 0.88r^2.24 SH^0.22 CI^0.89 | IG, SR | R = 0.55 |
| [25] | UCS = 0.48SH + 1.863PLS + 248WC + 7.972Vp − 23.859 | GY | RMSE = 7.332 |
| [29] | UCS = exp(0.011BPI + 0.065PLS + 0.029SH + 0.000012Vp + 2.157) | CL, MU | R² = 0.91 |
| [33] | UCS = 15.14UW + 2.88SHR − 446.3 | MA, DO, LI, TR | R² = 0.79 |
| [35] | UCS = −120.912 − 2.036Vp + 31.064PLS | TR | RMSE = 9.43 |
| [65] | UCS = 0.25EH + 18.14r − 0.75Pn − 15.47GS − 21.55RT | SA, LI, DO, GR, GRA | R² = 0.90 |
| [66] | UCS = 0.89SH + 131.PLS − 1.68Vp − 35.9 | MA, LI, DA | RMSE = 11.38 |
| [67] | UCS = 5.734Vp + 10.876TS − 2.408PLS − 10.029 | TR, LI, DI | R = 0.90 |
| [68] | UCS = −2.572Pn + 23.665PLS + 41.654PR + 12.197r − 0.001Vp − 11.813 | PYR | RMSE = 11.40 |
| [69] | UCS = −153.61Pn + 0.010Vp + 7.111PLS | GR | RMSE = 13.81 |
| [70] | UCS = 1.277SH + 2.186BPI + 16.41PLS + 0.011Vp − 82.436 | GS, WS, BS, GY, SM | RMSE = 10.80 |
| [71] | UCS = −350.784 − 1.825Pn + 82.749r + 5.708SHR | GRA, GA | R² = 0.89 |
| [72] | UCS = −22.1 + 0.4SHR + 0.0093Vp + 3.9PLS | GR | R² = 0.79 |
| [73] | MLRA: UCS = 2.411u + 0.004Vp + 4.322TS + 2.583E − 49.700; MNRA: UCS = 0.0003u^3.099 Vp^0.172 TS^0.206 E^0.393 | WCJR | R² (MLRA) = 0.853; R² (MNRA) = 0.855 |

| Model No. | Hidden-Layer Neurons | RMSE (Training) | RMSE (Testing) | R² (Training) | R² (Testing) |
|---|---|---|---|---|---|
| 1 | 20 | 28.7283 | 27.4770 | 0.6786 | 0.7050 |
| 2 | 30 | 24.0466 | 21.9013 | 0.7480 | 0.8126 |
| 3 | 40 | 22.5454 | 21.5436 | 0.8021 | 0.8186 |
| 4 | 50 | 22.1986 | 21.7206 | 0.8081 | 0.8157 |
| 5 | 60 | 22.3844 | 21.3123 | 0.8049 | 0.8225 |
| 6 | 70 | 19.6458 | 22.7176 | 0.8497 | 0.7983 |
| 7 | 80 | 19.9072 | 25.3655 | 0.8457 | 0.7486 |
| 8 | 90 | 17.6883 | 31.2900 | 0.8782 | 0.7683 |
| 9 | 100 | 18.7275 | 25.3018 | 0.8634 | 0.7499 |
| 10 | 110 | 17.5671 | 23.6957 | 0.8798 | 0.7806 |
| 11 | 120 | 18.8266 | 27.1939 | 0.8620 | 0.7110 |
| 12 | 130 | 18.6357 | 34.9914 | 0.8648 | 0.5216 |
| 13 | 140 | 16.8519 | 34.6346 | 0.8894 | 0.5313 |
| 14 | 150 | 17.1542 | 34.7546 | 0.8714 | 0.5285 |

| Model (Neurons per Hidden Layer) | RMSE (Training) | RMSE (Testing) | R² (Training) | R² (Testing) |
|---|---|---|---|---|
| 5-5-5 | 31.1924 | 30.0702 | 0.6211 | 0.6467 |
| 10-10-10 | 28.4753 | 27.6212 | 0.6843 | 0.7019 |
| 15-15-15 | 28.5459 | 27.6791 | 0.6827 | 0.7006 |
| 5-5-5-5 | 34.0340 | 32.3176 | 0.5490 | 0.5919 |
| 10-10-10-10 | 35.5967 | 33.8719 | 0.5066 | 0.5517 |
| 15-15-15-15 | 32.3059 | 30.5482 | 0.5936 | 0.6354 |
| 5-5-5-5-5 | 32.1756 | 30.6333 | 0.5969 | 0.6333 |
| 10-10-10-10-10 | 34.0784 | 33.2670 | 0.5478 | 0.5676 |
| 15-15-15-15-15 | 40.7977 | 39.3185 | 0.3519 | 0.3959 |

| Model | Neurons in Hidden Layer 1 | Neurons in Hidden Layer 2 | RMSE (Training) | RMSE (Testing) | R² (Training) | R² (Testing) |
|---|---|---|---|---|---|---|
| 1 | 2 | 2 | 24.7580 | 20.6986 | 0.7613 | 0.8326 |
| 2 | 2 | 4 | 20.3518 | 17.7909 | 0.8387 | 0.8763 |
| 3 | 2 | 6 | 21.6890 | 24.4836 | 0.8168 | 0.7658 |
| 4 | 2 | 8 | 20.0367 | 17.5967 | 0.8437 | 0.8790 |
| 5 | 2 | 10 | 25.3568 | 21.7866 | 0.7496 | 0.8145 |
| 6 | 4 | 4 | 20.4635 | 18.0377 | 0.8369 | 0.8729 |
| 7 | 4 | 6 | 23.7747 | 21.6982 | 0.7799 | 0.8160 |
| 8 | 4 | 8 | 19.9445 | 17.2805 | 0.8602 | 0.8833 |
| 9 | 4 | 10 | 20.0098 | 17.9689 | 0.8441 | 0.8738 |
| 10 | 6 | 6 | 24.7290 | 20.3359 | 0.7619 | 0.8384 |
| 11 | 6 | 8 | 19.2109 | 17.1627 | 0.8563 | 0.8849 |
| 12 | 6 | 10 | 22.4402 | 18.1689 | 0.8039 | 0.8710 |
| 13 | 8 | 8 | 19.7370 | 17.5722 | 0.8633 | 0.8793 |
| 14 | 8 | 10 | 22.5188 | 19.8386 | 0.8025 | 0.8462 |
| 15 | 10 | 10 | 22.7941 | 19.1961 | 0.7941 | 0.8560 |

Performance (Training)

| Model | RMSE | R² | MAE | U1 | U2 | VAF (%) |
|---|---|---|---|---|---|---|
| ELM | 22.3844 | 0.8049 | 15.9951 | 0.1041 | 0.0443 | 80.4891 |
| KELM | 22.2684 | 0.8069 | 15.9380 | 0.1038 | 0.0442 | 80.7237 |
| KELM-GWO | 17.2176 | 0.8846 | 12.0577 | 0.0798 | 0.0259 | 88.4566 |
| DELM | 28.4753 | 0.6843 | 22.4743 | 0.1306 | 0.0677 | 69.1651 |
| BPNN | 19.2109 | 0.8563 | 13.6784 | 0.0905 | 0.0344 | 85.9230 |
| Empirical | 26.3309 | 0.7300 | 19.8876 | 0.1223 | 0.0610 | 73.0331 |

Performance (Testing)

| Model | RMSE | R² | MAE | U1 | U2 | VAF (%) |
|---|---|---|---|---|---|---|
| ELM | 21.3123 | 0.8225 | 17.0308 | 0.1038 | 0.0452 | 82.4108 |
| KELM | 17.5050 | 0.8803 | 12.4699 | 0.0854 | 0.0308 | 88.1894 |
| KELM-GWO | 14.7327 | 0.9152 | 11.4315 | 0.0706 | 0.0259 | 91.5207 |
| DELM | 27.6213 | 0.7019 | 22.9152 | 0.1332 | 0.0730 | 70.3401 |
| BPNN | 17.1627 | 0.8849 | 11.9449 | 0.0945 | 0.0305 | 89.4529 |
| Empirical | 24.0864 | 0.7733 | 18.2310 | 0.1181 | 0.0595 | 77.6171 |

| References | AI Models | Input Parameters | No. of Samples | Performance |
|---|---|---|---|---|
| [20] | ANN | PLS, Vp, SHR, Pn | 30 | R² = 0.93 |
| [21] | GP | Pn, r, Vp | 72 | RMSE = 12.3 |
| [24] | ANN | r, CC, QC | 138 | R² = 0.76 |
| [25] | ANN | Pn, SD, SH, PLS | 39 | R² = 0.93 |
| [28] | FIS | SDI2, SDI4, CLC | 65 | RMSE = 2.767 |
| [29] | FIS | BPI, PLS, SHR, Vp, Pn, r | 60 | R² = 0.98; RMSE = 8.21 |
| [30] | FIS | SH, r, Pn | 75 | R² = 0.9437 |
| [31] | SVM | Pn, Vp, SD | 47 | R² = 0.7712 |
| [32] | SVM | Vp, Pn, SHR | 85 | R² = 0.9516; RMSE = 2.14 |
| [33] | SVM | Vp, SHR, CSS | 90 | R² = 0.867 |
| [34] | SVM | SH, Pn, Vp, PLS | 170 | R² = 0.9363; RMSE = 1.097 |
| [35] | RF | PLS, Pn, Vp, SHR | 30 | R² = 0.93 |
| [36] | RF | Vp, SH, Pn, PLS | 93 | R² = 0.488; RMSE = 8.071 |
| [40] | MLP | RC, r, Pn, Vp, WA, PLS | 197 | R² = 0.90; RMSE = 0.289 |
| [41] | GEP | UPV, WA, Dd, Sd, Bd | 167 | R² = 0.877 |
| [43] | GA | QC, r, Pn, CI, SSH | 44 | R² = 0.63 |
| [101] | ANN | WS, r, Pn | 83 | R² = 0.96 |
| [102] | ANN | Vp, r, Pn | 105 | R² = 0.95 |
| This study | ELM, KELM, KELM-GWO, DELM, BPNN | SH, Pn, Vp, PLS | 271 | R² (testing): ELM = 0.8225; KELM = 0.8803; KELM-GWO = 0.9152; DELM = 0.7019; BPNN = 0.8849 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, C.; Zhou, J.; Dias, D.; Gui, Y. A Kernel Extreme Learning Machine-Grey Wolf Optimizer (KELM-GWO) Model to Predict Uniaxial Compressive Strength of Rock. Appl. Sci. 2022, 12, 8468. https://doi.org/10.3390/app12178468
