360-Degree Video Bandwidth Reduction: Technique and Approaches Comprehensive Review

Abstract

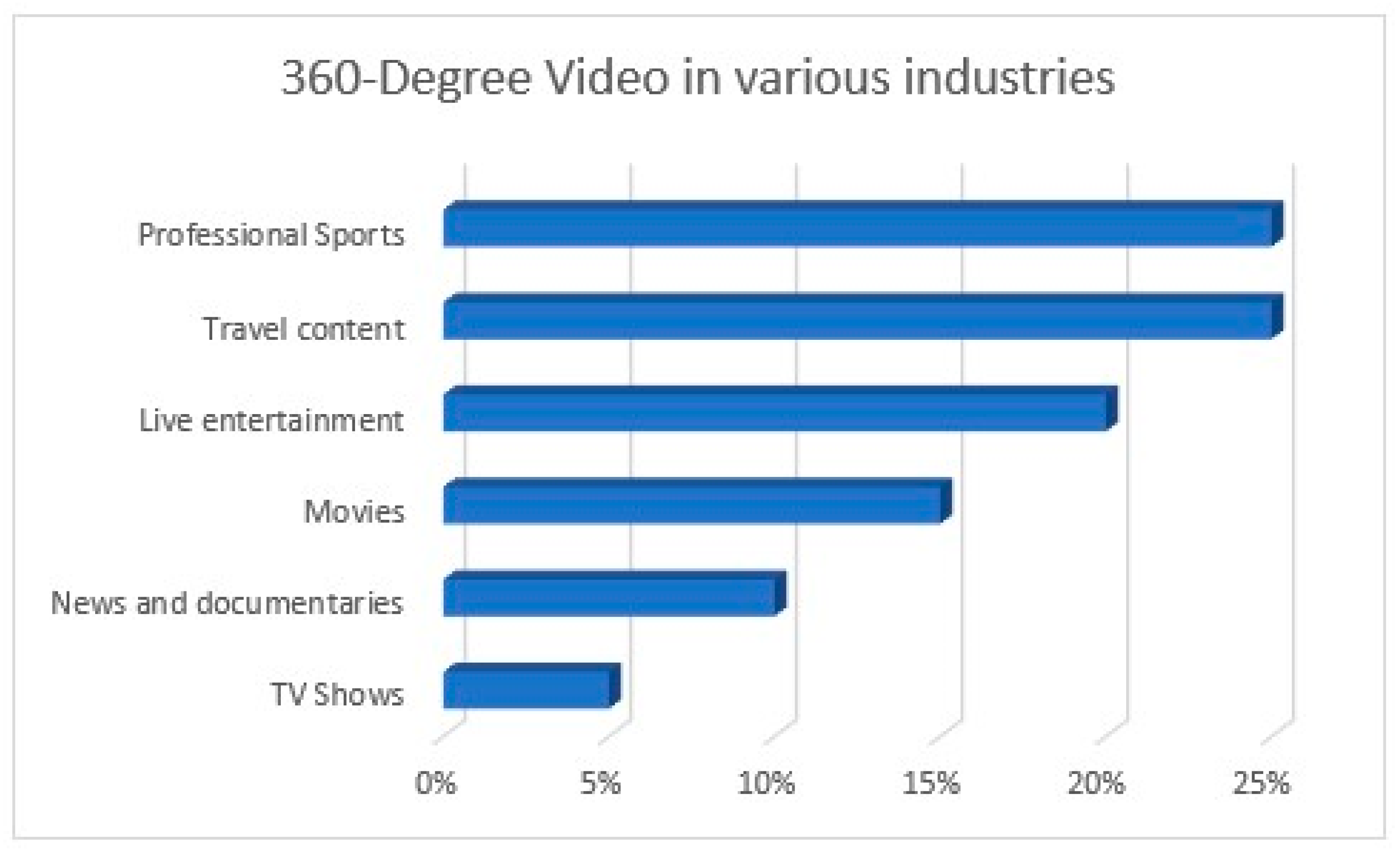

1. Introduction

- (a) Discuss the challenges faced by 360-degree video streaming;

- (b) Discuss existing approaches and techniques for reducing the bandwidth of 360-degree video;

- (c) Discuss existing network approaches for optimizing the streaming of 360-degree video;

- (d) Discuss existing Quality of Experience (QoE) measurement metrics for 360-degree video;

- (e) Discuss future work to improve existing approaches.

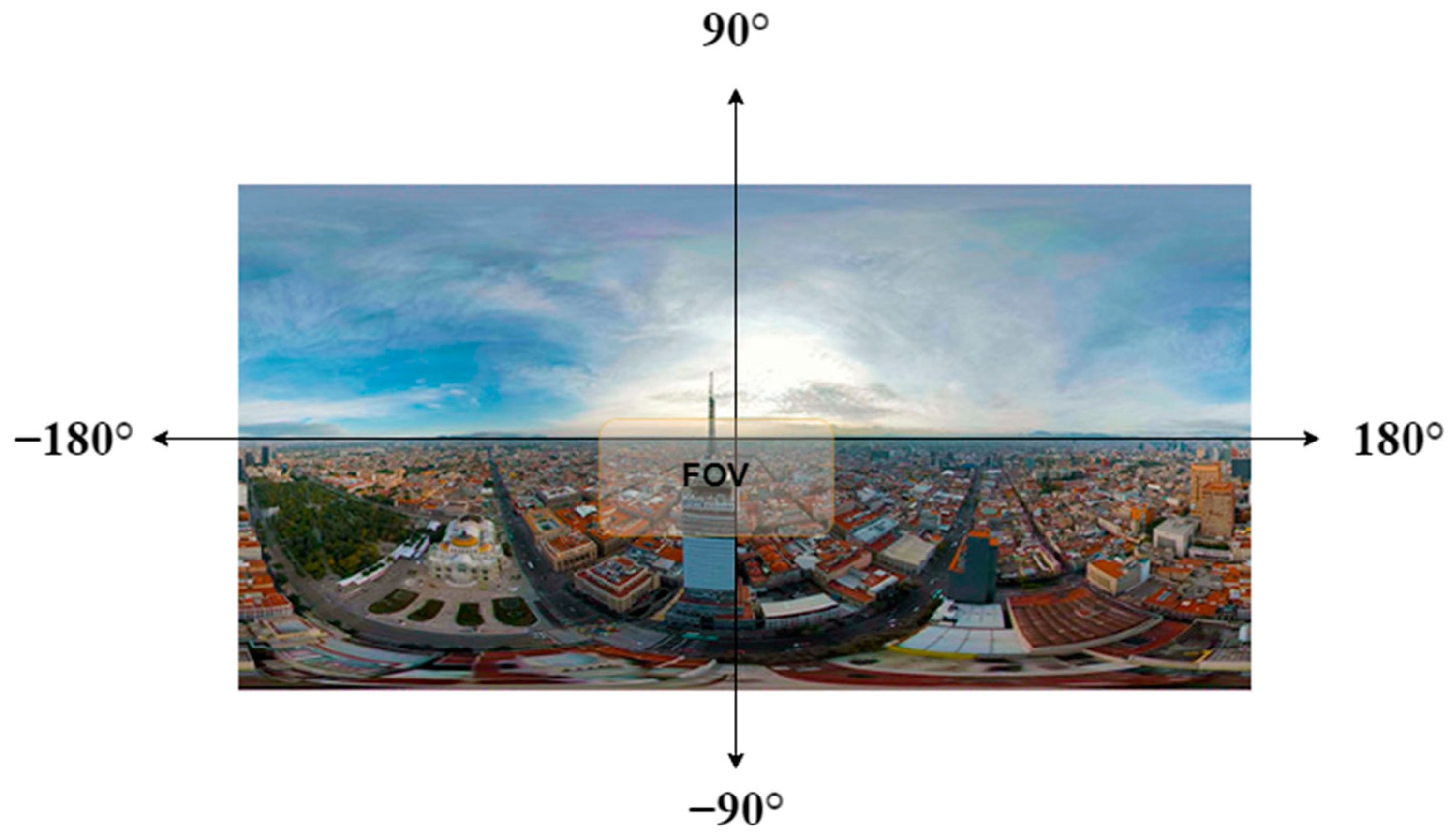

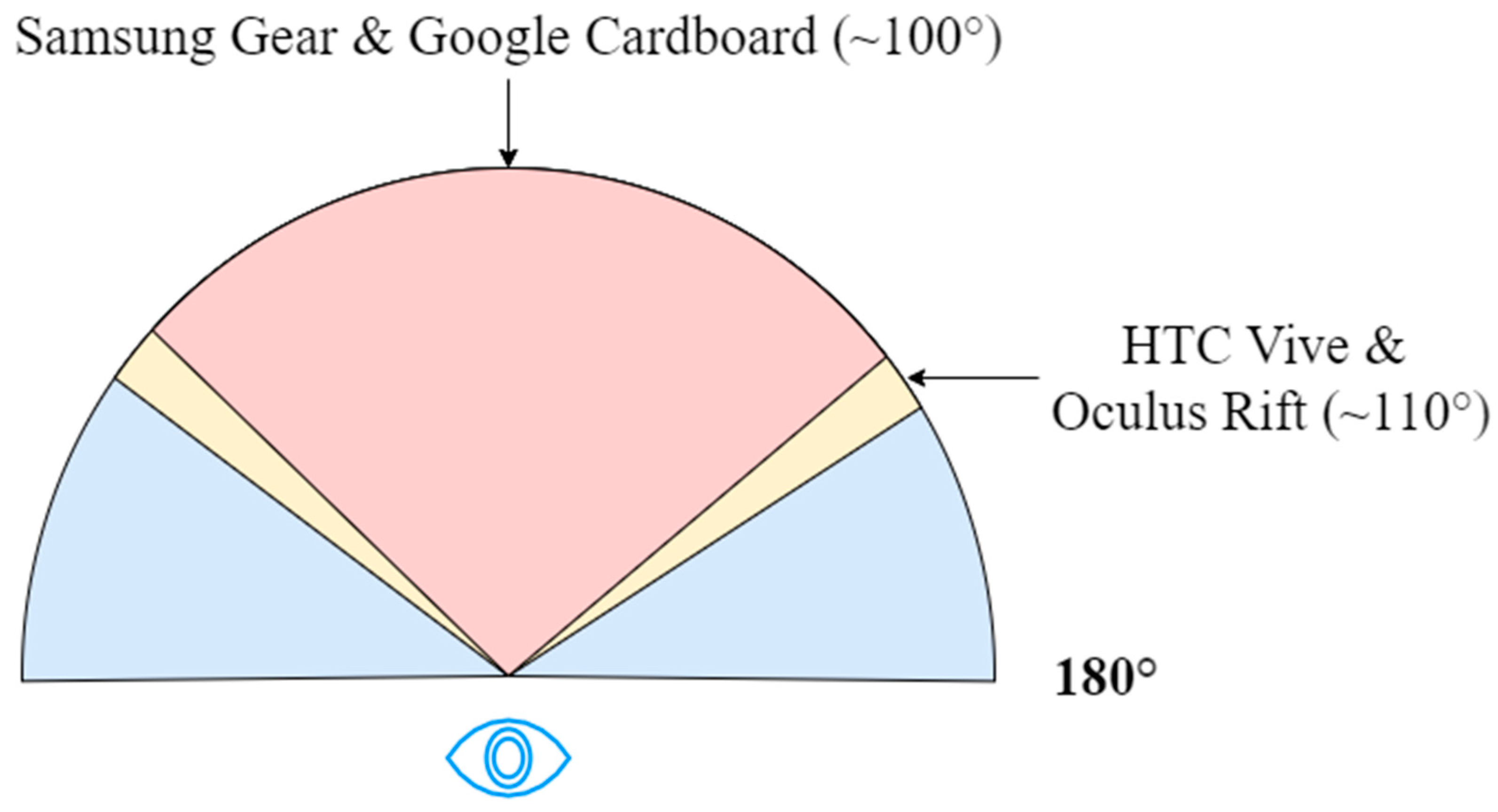

2. Challenges Faced by 360-Degree Video Streaming

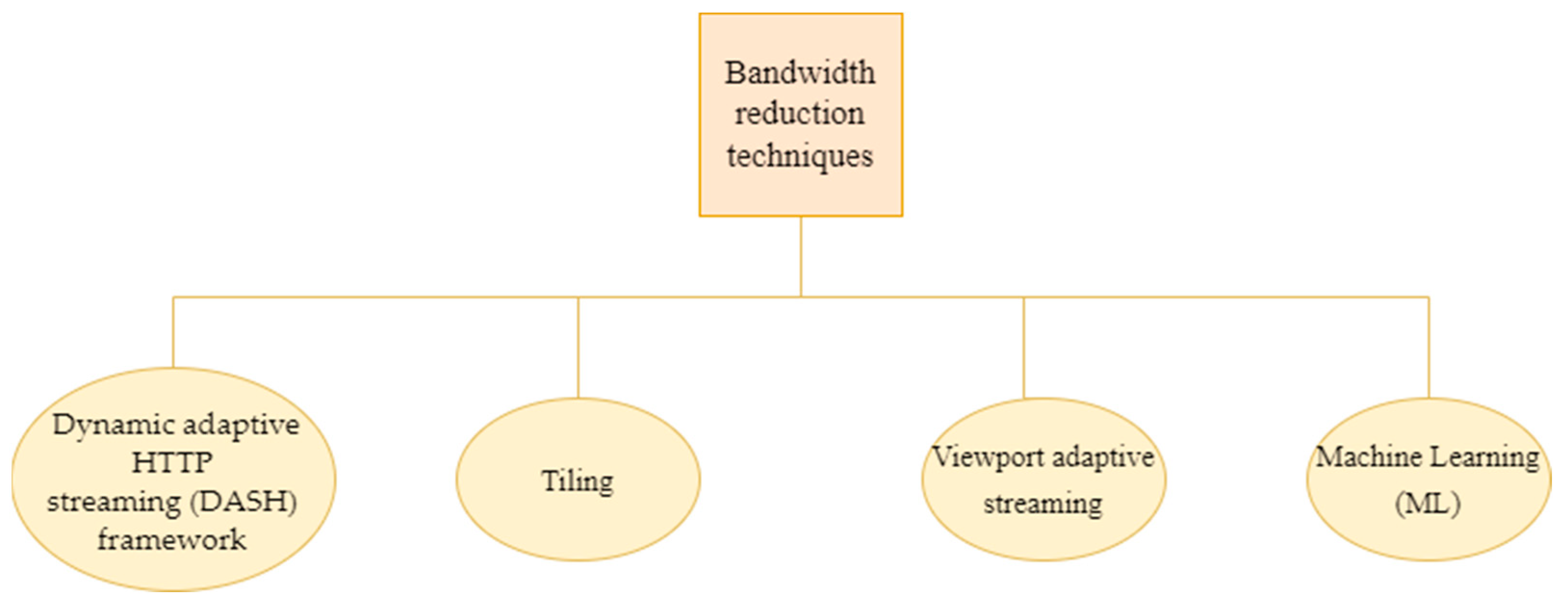

3. Available Techniques to Reduce the Bandwidth of the 360-Degree Video

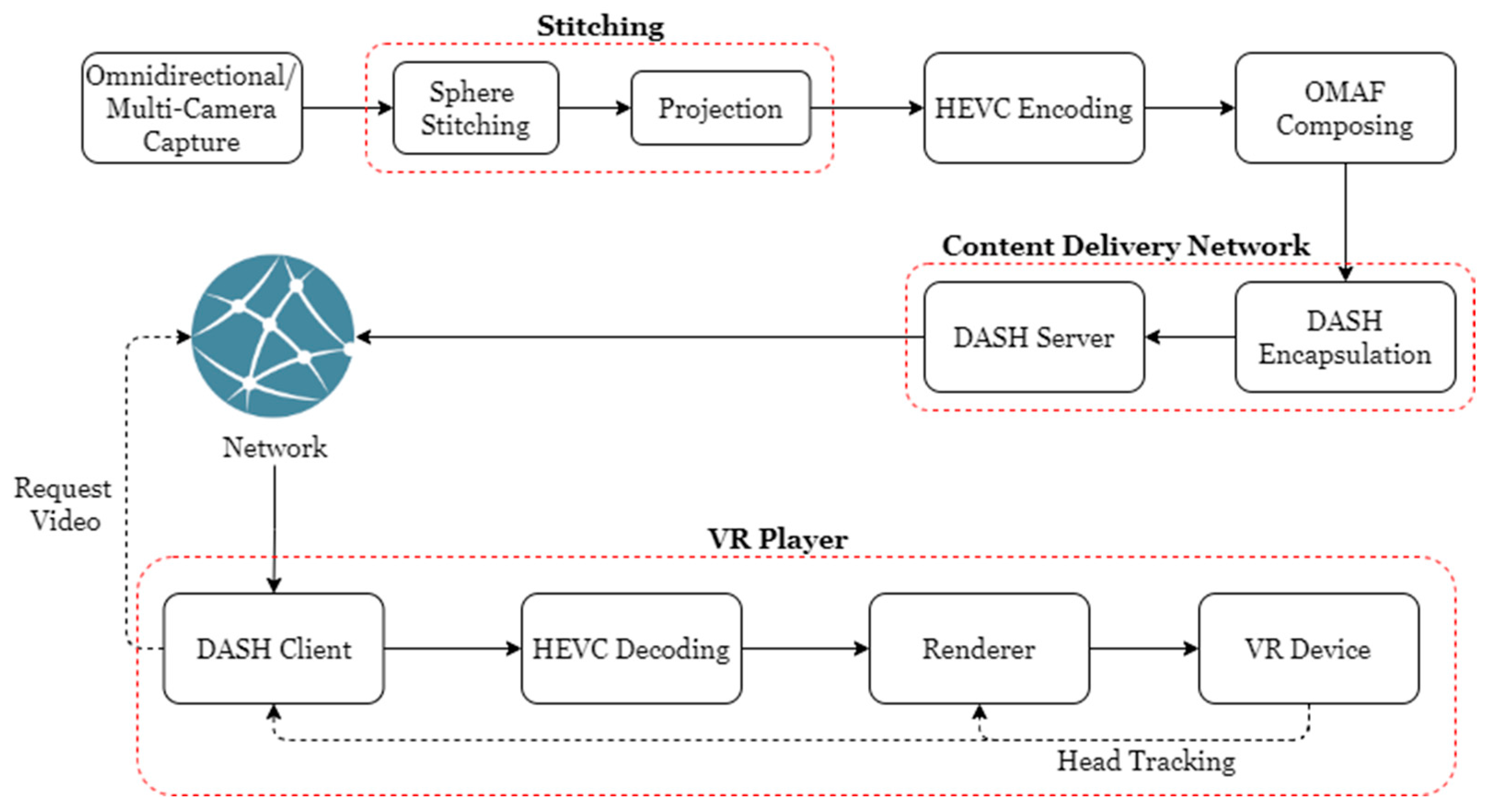

3.1. Dynamic Adaptive Streaming over HTTP (DASH) Framework

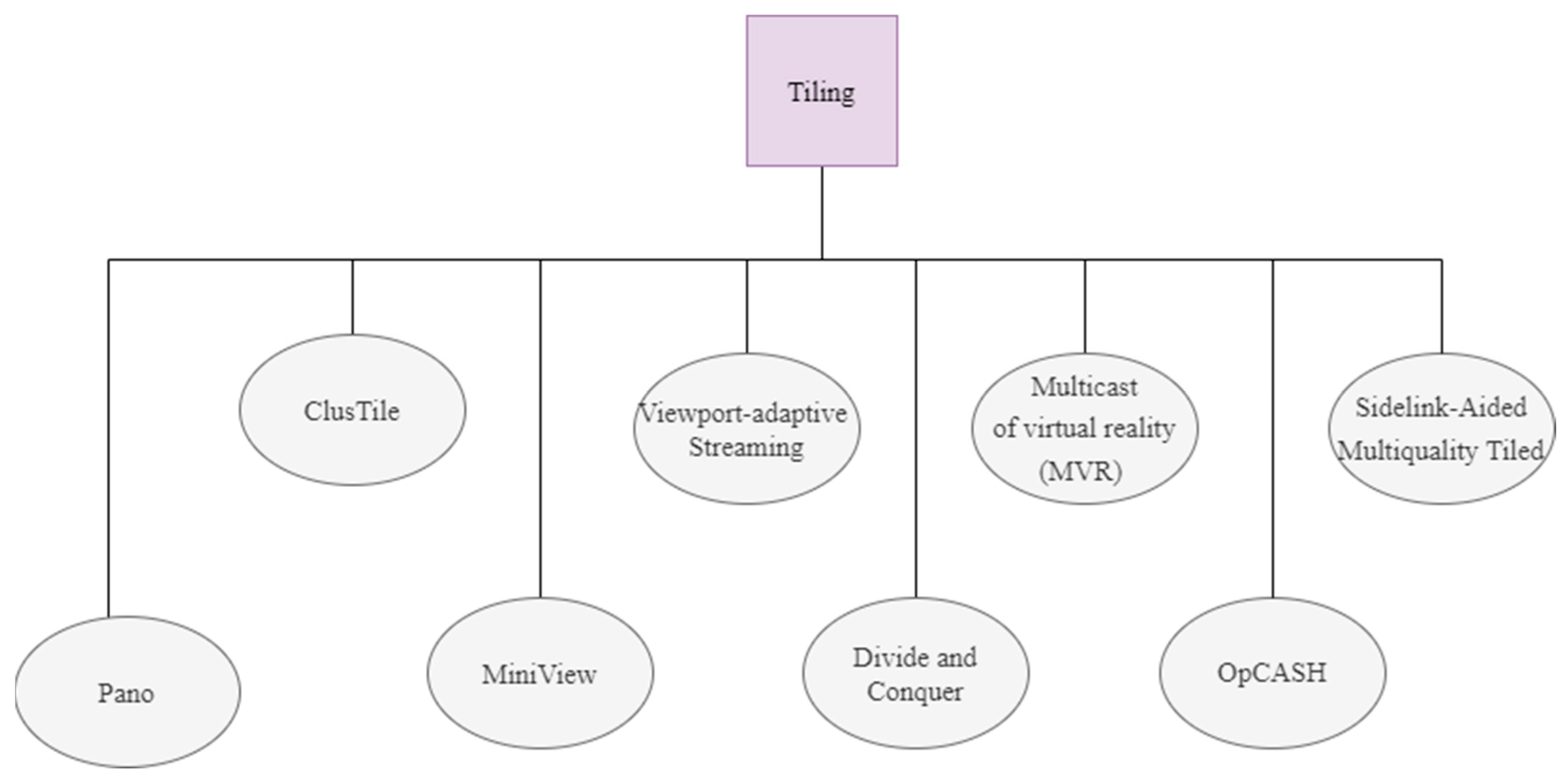

3.2. Tiling

3.2.1. ClusTile

3.2.2. PANO

3.2.3. MiniView Layout

3.2.4. Viewport Adaptive Streaming

3.2.5. Divide and Conquer

3.2.6. Multicast Virtual Reality (MVR)

3.2.7. Sidelink-Aided Multiquality Tiled

3.2.8. OpCASH

| Source | Technique | Result | Limitation |
|---|---|---|---|
| [34] | ClusTile | | A fixed tiling scheme requires tile selection algorithms. |
| [12] | Viewport Adaptive Streaming | | Improved adaptation algorithms are required to predict head movement, as well as a new video encoding approach for quality-differentiated encoding of high-resolution videos. |
| [38] | Multicast Virtual Reality (MVR) | | Requires a better data-driven probabilistic tile-weighting approach and an improved rate adaptation algorithm. |
| [37] | Divide and Conquer | 72% bandwidth savings. | Performance could be improved with an adaptive rate allocation method for tile streaming based on the available bandwidth. |
| [35] | PANO | Achieved the same PSPNR as [34] with 41–46% less bandwidth. | The 360JND model is based on a survey in which the 360° video-specific factors were varied one at a time. |
| [36] | MiniView Layout | Reduced encoded video size by up to 16% without significant quality loss. | Uses fixed tiles; each miniview could instead be encoded into individual segments that the streaming client requests as needed. |
| [39] | Sidelink-Aided Multiquality Tiled | Dai et al. [39] formulated an optimization problem over tile quality level selection, sidelink sender selection, and bandwidth allocation to optimize the overall utility of all users. | As the number of groups increases from 10 to 50, tile quality degrades because less bandwidth is available to each group. |
| [40] | OpCASH | OpCASH achieved more than 95% viewport (VP) coverage from the cache after only 24 views of the video. Compared with a baseline representing standard tile-based caching, OpCASH reduces data fetched from content servers by 85% and overall content delivery time by 74%. | Real-time tile encoding on content servers should be added by including tile quality selection in the ILP formulation and supporting variable-quality tile streaming; the system should then be evaluated with multiple edge nodes and real-world user testing in a lab setting to maximize the benefit at the edge layer. |
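All of the tile-based schemes compared above must decide which tiles the user is likely to see and how to split the available bandwidth among them ([34] notes that fixed tiling needs a tile selection algorithm, and [37] points to adaptive rate allocation based on available bandwidth). The Python sketch below illustrates that basic idea under simplifying assumptions that are not taken from the cited works: an equirectangular (ERP) frame cut into a uniform `cols × rows` grid, a viewport approximated as a yaw/pitch box (ignoring its true perspective footprint), and a greedy viewport-first allocation over a per-tile bitrate ladder. It is not the algorithm of ClusTile, PANO, or any other cited system; `Viewport`, `tiles_in_viewport`, and `allocate_bitrates` are hypothetical names.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Viewport:
    """Hypothetical viewport description, all angles in degrees."""
    yaw_deg: float           # longitude of the viewing direction, -180..180
    pitch_deg: float         # latitude of the viewing direction, -90..90
    h_fov_deg: float = 90.0  # horizontal field of view
    v_fov_deg: float = 90.0  # vertical field of view


def tiles_in_viewport(vp: Viewport, cols: int, rows: int) -> set[tuple[int, int]]:
    """Return (row, col) of every ERP grid tile overlapped by the viewport,
    approximated as a yaw/pitch box. Row 0 is at the top (pitch +90)."""
    tile_w, tile_h = 360.0 / cols, 180.0 / rows

    # Columns: shift longitude to [0, 360) and wrap around the +/-180 seam.
    if vp.h_fov_deg >= 360.0:
        col_ids = set(range(cols))
    else:
        left = vp.yaw_deg - vp.h_fov_deg / 2 + 180.0
        right = vp.yaw_deg + vp.h_fov_deg / 2 + 180.0
        c0 = math.floor(left / tile_w)
        c1 = math.ceil(right / tile_w) - 1
        col_ids = {c % cols for c in range(c0, c1 + 1)}

    # Rows: clip the pitch range to the sphere (no vertical wrap-around).
    top = min(90.0, vp.pitch_deg + vp.v_fov_deg / 2)
    bottom = max(-90.0, vp.pitch_deg - vp.v_fov_deg / 2)
    r0 = math.floor((90.0 - top) / tile_h)
    r1 = math.ceil((90.0 - bottom) / tile_h) - 1
    return {(r, c) for r in range(r0, r1 + 1) for c in col_ids}


def allocate_bitrates(cols, rows, visible, ladder_kbps, budget_kbps):
    """Greedy viewport-first allocation: every tile starts at the lowest level
    of `ladder_kbps`; visible tiles are then raised one level at a time
    while the total stays within `budget_kbps`."""
    levels = {(r, c): 0 for r in range(rows) for c in range(cols)}
    total = len(levels) * ladder_kbps[0]
    if total > budget_kbps:
        raise ValueError("budget cannot cover the lowest level for every tile")
    for level in range(1, len(ladder_kbps)):
        step = ladder_kbps[level] - ladder_kbps[level - 1]
        for tile in sorted(visible):
            if levels[tile] == level - 1 and total + step <= budget_kbps:
                levels[tile] = level
                total += step
    return levels, total


if __name__ == "__main__":
    vp = Viewport(yaw_deg=0.0, pitch_deg=0.0)
    visible = tiles_in_viewport(vp, cols=8, rows=4)
    levels, total = allocate_bitrates(8, 4, visible, [100, 300, 800], 5000)
    print(sorted(visible), total)
```

With a 90° × 90° viewport centred at yaw 0, pitch 0 on an 8 × 4 grid, four of the 32 tiles are selected; with a hypothetical 5000 kbps budget, two of them reach the top level and two the middle level, while all other tiles stay at the lowest level.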

3.3. Viewport-Based Streaming

3.4. Machine Learning (ML)

3.5. Comparison between Techniques

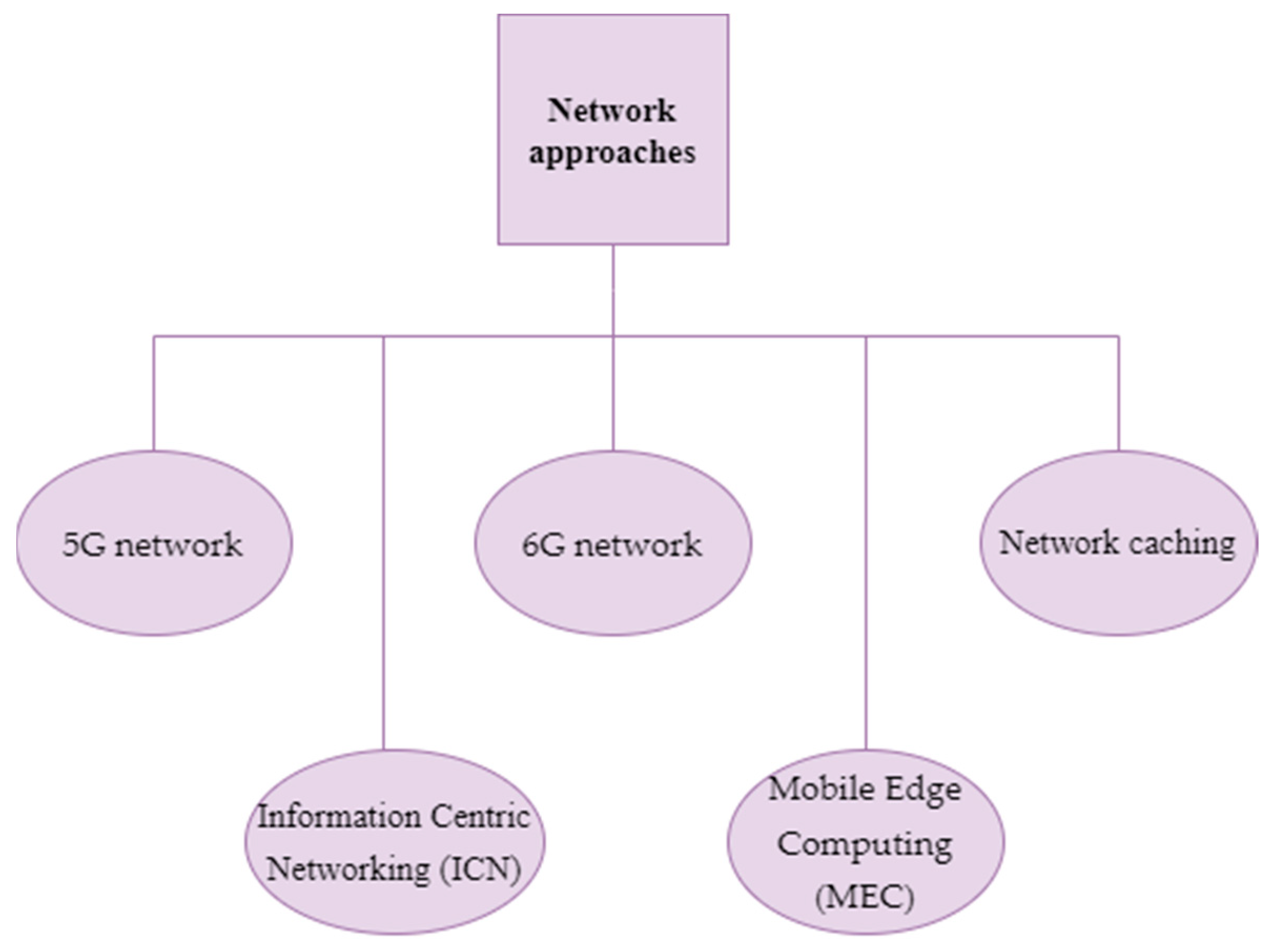

4. Network Approaches to Optimize 360-Degree Video Streaming

4.1. 5G Network

4.2. 6G Network

4.3. Network Caching

4.4. Information-Centric Networking (ICN)

4.5. Mobile Edge Computing (MEC)

4.6. Comparison between Network Approaches

5. Quality of Experience (QoE) Assessment

5.1. Objective QoE Assessment

5.2. Subjective QoE Assessment

6. Discussion and Future Works

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Brewer, J. Cisco Launches Webex Hologram, An AR Meeting Solution. 2021. Available online: https://newsroom.cisco.com/press-release-content?type=webcontent&articleId=2202545 (accessed on 28 December 2021).

- Indigo9 Digital Inc. 10 of the Best Augmented Reality (AR) Shopping Apps to Try Today. 2021. Available online: https://www.indigo9digital.com/blog/how-six-leading-retailers-use-augmented-reality-apps-to-disrupt-the-shopping-experience (accessed on 5 December 2021).

- Shafi, R.; Shuai, W.; Younus, M.U. 360-Degree Video Streaming: A Survey of the State of the Art. Symmetry 2020, 12, 1491.

- Reyna, J. The Potential of 360-Degree Videos for Teaching, Learning and Research. INTED Proc. 2018, 1448–1454.

- Lampropoulos, G.; Barkoukis, V.; Burden, K.; Anastasiadis, T. 360-degree video in education: An overview and a comparative social media data analysis of the last decade. Smart Learn. Environ. 2021, 8, 20.

- Meta. Introducing Meta: A Social Technology Company. 2021. Available online: https://about.fb.com/news/2021/10/facebook-company-is-now-meta/ (accessed on 28 December 2021).

- Sriram, S. JPMorgan Opens a Lounge in Decentraland, Sees $1 Trillion Metaverse Opportunity. 2022. Available online: https://www.benzinga.com/markets/cryptocurrency/22/02/25655613/jpmorgan-opens-a-lounge-in-decentraland-sees-1-trillion-metaverse-opportunity (accessed on 18 February 2022).

- Strickland, J.; Pollette, C. How Second Life Works. 2021. Available online: https://computer.howstuffworks.com/internet/social-networking/networks/second-life.htm (accessed on 14 December 2021).

- SM Entertainment. SM Brand Marketing Signs a Metaverse Partnership with the World’s Largest Metaverse Platform, The Sandbox. 2022. Available online: https://smentertainment.com/PressCenter/Details/7936 (accessed on 27 February 2022).

- Molina, B. Bill Gates Predicts Our Work Meetings Will Move to Metaverse in 2–3 years. 2021. Available online: https://www.usatoday.com/story/tech/2021/12/10/bill-gates-metaverse-work-meetings-predictions/6459911001/ (accessed on 15 December 2021).

- Bao, Y.; Wu, H.; Zhang, T.; Ramli, A.A.; Liu, X. Shooting a moving target: Motion-prediction-based transmission for 360-degree videos. In Proceedings of the 2016 IEEE International Conference on Big Data (Big Data), Washington, DC, USA, 5–8 December 2016; pp. 1161–1170.

- Corbillon, X.; Simon, G.; Devlic, A.; Chakareski, J. Viewport-adaptive navigable 360-degree video delivery. In Proceedings of the 2017 IEEE International Conference on Communications (ICC), Paris, France, 21–25 May 2017.

- Zhou, Y.; Sun, B.; Qi, Y.; Peng, Y.; Liu, L.; Zhang, Z.; Liu, Y.; Liu, D.; Li, Z.; Tian, L. Mobile AR/VR in 5G based on convergence of communication and computing. Telecommun. Sci. 2018, 34, 19–33.

- Ruan, J.; Xie, D. Networked VR: State of the Art, Solutions, and Challenges. Electronics 2021, 10, 166.

- Grzelka, A.; Dziembowski, A.; Mieloch, D.; Stankiewicz, O.; Stankowski, J.; Domanski, M. Impact of video streaming delay on user experience with head-mounted displays. In Proceedings of the 2019 Picture Coding Symposium (PCS), Ningbo, China, 12–15 November 2019; IEEE: New York, NY, USA, 2019.

- Mania, K.; Adelstein, B.D.; Ellis, S.R.; Hill, M.I. Perceptual sensitivity to head tracking latency in virtual environments with varying degrees of scene complexity. In Proceedings of the 1st Symposium on Applied Perception in Graphics and Visualization, Los Angeles, CA, USA, 7–8 August 2004.

- Albert, R.; Patney, A.; Luebke, D.; Kim, J. Latency Requirements for Foveated Rendering in Virtual Reality. ACM Trans. Appl. Percept. 2017, 14, 25.

- Chen, M.; Saad, W.; Yin, C. Virtual Reality Over Wireless Networks: Quality-of-Service Model and Learning-Based Resource Management. IEEE Trans. Commun. 2018, 66, 5621–5635.

- Doppler, K.; Torkildson, E.; Bouwen, J. On wireless networks for the era of mixed reality. In Proceedings of the 2017 European Conference on Networks and Communications (EuCNC), Oulu, Finland, 12–15 June 2017; IEEE: New York, NY, USA, 2017.

- Ju, R.; He, J.; Sun, F.; Li, J.; Li, F.; Zhu, J.; Han, L. Ultra wide view based panoramic VR streaming. In Proceedings of the Workshop on Virtual Reality and Augmented Reality Network, Los Angeles, CA, USA, 25 August 2017.

- Gohar, A.; Lee, S. Multipath Dynamic Adaptive Streaming over HTTP Using Scalable Video Coding in Software Defined Networking. Appl. Sci. 2020, 10, 7691.

- Hannuksela, M.M.; Wang, Y.-K.; Hourunranta, A. An overview of the OMAF standard for 360 video. In Proceedings of the 2019 Data Compression Conference (DCC), Snowbird, UT, USA, 26–29 March 2019; IEEE: New York, NY, USA, 2019.

- Monnier, R.; van Brandenburg, R.; Koenen, R. Streaming UHD-Quality VR at realistic bitrates: Mission impossible? In Proceedings of the 2017 NAB Broadcast Engineering and Information Technology Conference (BEITC), Las Vegas, NV, USA, 22–27 April 2017.

- Skupin, R.; Sanchez, Y.; Podborski, D.; Hellge, C.; Schierl, T. Viewport-dependent 360 degree video streaming based on the emerging Omnidirectional Media Format (OMAF) standard. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; IEEE: New York, NY, USA, 2017.

- Chiariotti, F. A survey on 360-degree video: Coding, quality of experience and streaming. Comput. Commun. 2021, 177, 133–155.

- Song, J.; Yang, F.; Zhang, W.; Zou, W.; Fan, Y.; Di, P. A fast FoV-switching DASH system based on tiling mechanism for practical omnidirectional video services. IEEE Trans. Multimed. 2019, 22, 2366–2381.

- D’Acunto, L.; Van den Berg, J.; Thomas, E.; Niamut, O. Using MPEG DASH SRD for zoomable and navigable video. In Proceedings of the 7th International Conference on Multimedia Systems, Klagenfurt, Austria, 10–13 May 2016.

- Xie, L.; Xu, Z.; Ban, Y.; Zhang, X.; Guo, Z. 360ProbDASH: Improving QoE of 360 video streaming using tile-based HTTP adaptive streaming. In Proceedings of the 25th ACM International Conference on Multimedia, Mountain View, CA, USA, 23–27 October 2017.

- Huang, W.; Ding, L.; Wei, H.Y.; Hwang, J.N.; Xu, Y.; Zhang, W. QoE-oriented resource allocation for 360-degree video transmission over heterogeneous networks. arXiv 2018, arXiv:1803.07789.

- Nguyen, D.; Tran, H.T.; Thang, T.C. A client-based adaptation framework for 360-degree video streaming. J. Vis. Commun. Image Represent. 2019, 59, 231–243.

- Google Cardboard. 2021. Available online: https://arvr.google.com/cardboard/ (accessed on 30 December 2021).

- Samsung Gear VR. 2021. Available online: https://www.samsung.com/global/galaxy/gear-vr/ (accessed on 30 December 2021).

- HTC Vive VR. 2021. Available online: https://www.vive.com/ (accessed on 30 December 2021).

- Zhou, C.; Xiao, M.; Liu, Y. ClusTile: Toward minimizing bandwidth in 360-degree video streaming. In Proceedings of the IEEE INFOCOM 2018 - IEEE Conference on Computer Communications, Honolulu, HI, USA, 16–19 April 2018; IEEE: New York, NY, USA, 2018.

- Guan, Y.; Zheng, C.; Zhang, X.; Guo, Z.; Jiang, J. Pano: Optimizing 360 video streaming with a better understanding of quality perception. In Proceedings of the ACM Special Interest Group on Data Communication, Beijing, China, 19–23 August 2019; pp. 394–407.

- Xiao, M.; Wang, S.; Zhou, C.; Liu, L.; Li, Z.; Liu, Y.; Chen, S. MiniView layout for bandwidth-efficient 360-degree video. In Proceedings of the 26th ACM International Conference on Multimedia, Seoul, Korea, 22–26 October 2018.

- Hosseini, M.; Swaminathan, V. Adaptive 360 VR video streaming: Divide and conquer. In Proceedings of the 2016 IEEE International Symposium on Multimedia (ISM), San Jose, CA, USA, 11–13 December 2016; IEEE: New York, NY, USA, 2016.

- Ahmadi, H.; Eltobgy, O.; Hefeeda, M. Adaptive Multicast Streaming of Virtual Reality Content to Mobile Users. In Proceedings of the Thematic Workshops of ACM Multimedia 2017, Mountain View, CA, USA, 23–27 October 2017; pp. 170–178.

- Dai, J.; Yue, G.; Mao, S.; Liu, D. Sidelink-Aided Multiquality Tiled 360° Virtual Reality Video Multicast. IEEE Internet Things J. 2022, 9, 4584–4597.

- Madarasingha, C.; Thilakarathna, K.; Zomaya, A. OpCASH: Optimized Utilization of MEC Cache for 360-Degree Video Streaming with Dynamic Tiling. In Proceedings of the 2022 IEEE International Conference on Pervasive Computing and Communications (PerCom), Pisa, Italy, 22–25 March 2022.

- Ribezzo, G.; De Cicco, L.; Palmisano, V.; Mascolo, S. A DASH 360° immersive video streaming control system. Internet Technol. Lett. 2020, 3, e175.

- Qian, F.; Han, B.; Xiao, Q.; Gopalakrishnan, V. Flare: Practical viewport-adaptive 360-degree video streaming for mobile devices. In Proceedings of the 24th Annual International Conference on Mobile Computing and Networking, New Delhi, India, 29 October–2 November 2018.

- Ban, Y.; Xie, L.; Xu, Z.; Zhang, X.; Guo, Z.; Wang, Y. CUB360: Exploiting Cross-Users Behaviors for Viewport Prediction in 360 Video Adaptive Streaming. In Proceedings of the 2018 IEEE International Conference on Multimedia and Expo (ICME), San Diego, CA, USA, 23–27 July 2018; pp. 1–6.

- Chakareski, J.; Aksu, R.; Corbillon, X.; Simon, G.; Swaminathan, V. Viewport-Driven Rate-Distortion Optimized 360° Video Streaming. In Proceedings of the IEEE International Conference on Communications (ICC), Kansas City, MO, USA, 20–24 May 2018; pp. 1–7.

- Rossi, S.; Toni, L. Navigation-Aware Adaptive Streaming Strategies for Omnidirectional Video. In Proceedings of the IEEE 19th International Workshop on Multimedia Signal Processing (MMSP), Luton, UK, 16–18 October 2017; pp. 1–6.

- Koch, C.; Rak, A.-T.; Zink, M.; Steinmetz, R.; Rizk, A. Transitions of viewport quality adaptation mechanisms in 360° video streaming. In Proceedings of the 29th ACM Workshop on Network and Operating Systems Support for Digital Audio and Video, Amherst, MA, USA, 21 June 2019; pp. 14–19.

- Fan, C.L.; Lee, J.; Lo, W.C.; Huang, C.Y.; Chen, K.T.; Hsu, C.H. Fixation Prediction for 360° Video Streaming in Head-Mounted Virtual Reality. In Proceedings of the 27th Workshop on Network and Operating Systems Support for Digital Audio and Video, Taipei, Taiwan, 20–23 June 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 67–72.

- Xu, Z.; Ban, Y.; Zhang, K.; Xie, L.; Zhang, X.; Guo, Z.; Meng, S.; Wang, Y. Tile-Based QoE-Driven HTTP/2 Streaming System for 360 Video. In Proceedings of the 2018 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), San Diego, CA, USA, 23–27 July 2018; pp. 1–4.

- Park, S.; Bhattacharya, A.; Yang, Z.; Dasari, M.; Das, S.R.; Samaras, D. Advancing User Quality of Experience in 360-degree Video Streaming. In Proceedings of the 2019 IFIP Networking Conference (IFIP Networking), Warsaw, Poland, 20–22 May 2019; pp. 1–9.

- Chopra, L.; Chakraborty, S.; Mondal, A.; Chakraborty, S. PARIMA: Viewport Adaptive 360-Degree Video Streaming. In Proceedings of the Web Conference 2021, Ljubljana, Slovenia, 19–23 April 2021.

- Yaqoob, A.; Togou, M.A.; Muntean, G.-M. Dynamic Viewport Selection-Based Prioritized Bitrate Adaptation for Tile-Based 360° Video Streaming. IEEE Access 2022, 10, 29377–29392.

- Dasari, M.; Bhattacharya, A.; Vargas, S.; Sahu, P.; Balasubramanian, A.; Das, S.R. Streaming 360-Degree Videos Using Super-Resolution. In Proceedings of the IEEE INFOCOM 2020 - IEEE Conference on Computer Communications, Toronto, ON, Canada, 6–9 July 2020.

- Yu, L.; Tillo, T.; Xiao, J. QoE-driven dynamic adaptive video streaming strategy with future information. IEEE Trans. Broadcasting 2017, 63, 523–534.

- Filho, R.I.T.D.C.; Luizelli, M.C.; Petrangeli, S.; Vega, M.T.; Van der Hooft, J.; Wauters, T.; De Turck, F.; Gaspary, L.P. Dissecting the Performance of VR Video Streaming through the VR-EXP Experimentation Platform. ACM Trans. Multimedia Comput. Commun. Appl. 2019, 15, 1–23.

- Zhang, Y.; Guan, Y.; Bian, K.; Liu, Y.; Tuo, H.; Song, L.; Li, X. EPASS360: QoE-aware 360-degree video streaming over mobile devices. IEEE Trans. Mob. Comput. 2020, 20, 2338–2353.

- Vega, M.T.; Mocanu, D.C.; Barresi, R.; Fortino, G.; Liotta, A. Cognitive streaming on Android devices. In Proceedings of the 2015 IFIP/IEEE International Symposium on Integrated Network Management (IM), Ottawa, ON, Canada, 11–15 May 2015; IEEE: New York, NY, USA, 2015.

- Li, C.; Xu, M.; Du, X.; Wang, Z. Bridge the gap between VQA and human behavior on omnidirectional video: A large-scale dataset and a deep learning model. In Proceedings of the 26th ACM International Conference on Multimedia, Seoul, Korea, 22–26 October 2018.

- Kan, N.; Zou, J.; Li, C.; Dai, W.; Xiong, H. RAPT360: Reinforcement Learning-Based Rate Adaptation for 360-Degree Video Streaming with Adaptive Prediction and Tiling. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 1607–1623.

- Younus, M.U.; Shafi, R.; Rafiq, A.; Anjum, M.R.; Afridi, S.; Jamali, A.A.; Arain, Z.A. Encoder-Decoder Based LSTM Model to Advance User QoE in 360-Degree Video. Comput. Mater. Contin. 2022, 71, 2617–2631.

- Maniotis, P.; Thomos, N. Viewport-Aware Deep Reinforcement Learning Approach for 360° Video Caching. IEEE Trans. Multimed. 2022, 24, 386–399.

- STL Partners. How 5G and Edge Computing Will Transform AR & VR Use Cases. Available online: https://stlpartners.com/articles/edge-computing/how-5g-and-edge-computing-will-transform-ar-vr-use-cases/ (accessed on 18 February 2022).

- Ganesan, E.; Liem, A.T.; Hwang, I.-S. QoS-Aware Multicast for Crowdsourced 360° Live Streaming in SDN Aided NG-EPON. IEEE Access 2022, 10, 9935–9949.

- Peltonen, E.; Bennis, M.; Capobianco, M.; Debbah, M.; Ding, A.; Gil-Castiñeira, F.; Jurmu, M.; Karvonen, T.; Kelanti, M.; Kliks, A.; et al. 6G White Paper on Edge Intelligence. arXiv 2020, arXiv:2004.14850.

- Mangiante, S.; Klas, G.; Navon, A.; GuanHua, Z.; Ran, J.; Silva, M.D. VR is on the Edge: How to Deliver 360° Videos in Mobile Networks. In Proceedings of the Workshop on Virtual Reality and Augmented Reality Network, Los Angeles, CA, USA, 25 August 2017; pp. 30–35.

- Matsuzono, K.; Asaeda, H.; Turletti, T. Low latency low loss streaming using in-network coding and caching. In Proceedings of the IEEE INFOCOM 2017 - IEEE Conference on Computer Communications, Atlanta, GA, USA, 1–4 May 2017; IEEE: New York, NY, USA, 2017.

- Chakareski, J. VR/AR immersive communication: Caching, edge computing, and transmission trade-offs. In Proceedings of the Workshop on Virtual Reality and Augmented Reality Network, Los Angeles, CA, USA, 25 August 2017.

- Westphal, C. Challenges in Networking to Support Augmented Reality and Virtual Reality. In Proceedings of the IEEE ICNC 2017, Silicon Valley, CA, USA, 26–29 January 2017.

- Westphal, C. Adaptive Video Streaming in Information-Centric Networking (ICN); IRTF RFC 7933, ICN Research Group, August 2016; IETF: Fremont, CA, USA, 2016.

- Gupta, L.; Jain, R.; Chan, H.A. Mobile Edge Computing - An Important Ingredient of 5G Networks. 2016. Available online: https://sdn.ieee.org/newsletter/march-2016/mobile-edge-computing-an-important-ingredient-of-5g-networks (accessed on 18 February 2022).

- Dai, J.; Liu, D. An MEC-enabled wireless VR transmission system with view synthesis-based caching. In Proceedings of the 2019 IEEE Wireless Communications and Networking Conference Workshop (WCNCW), Marrakech, Morocco, 15–18 April 2019; IEEE: New York, NY, USA, 2019.

- Dai, J.; Zhang, Z.; Mao, S.; Liu, D. A View Synthesis-Based 360° VR Caching System Over MEC-Enabled C-RAN. IEEE Trans. Circuits Syst. Video Technol. 2019, 30, 3843–3855.

- Liu, Y.; Liu, J.; Argyriou, A.; Ci, S. MEC-Assisted Panoramic VR Video Streaming Over Millimeter Wave Mobile Networks. IEEE Trans. Multimed. 2018, 21, 1302–1316.

- Yang, X.; Chen, Z.; Li, K.; Sun, Y.; Liu, N.; Xie, W.; Zhao, Y. Communication-Constrained Mobile Edge Computing Systems for Wireless Virtual Reality: Scheduling and Tradeoff. IEEE Access 2018, 6, 16665–16677.

- Liu, H.; Chen, Z.; Qian, L. The three primary colors of mobile systems. IEEE Commun. Mag. 2016, 54, 15–21.

- Perfecto, C.; Elbamby, M.S.; Del Ser, J.; Bennis, M. Taming the latency in multi-user VR 360°: A QoE-aware deep learning-aided multicast framework. IEEE Trans. Commun. 2020, 68, 2491–2508.

- Elbamby, M.S.; Perfecto, C.; Bennis, M.; Doppler, K. Edge computing meets millimeter-wave enabled VR: Paving the way to cutting the cord. In Proceedings of the 2018 IEEE Wireless Communications and Networking Conference (WCNC), Barcelona, Spain, 15–18 April 2018; IEEE: New York, NY, USA, 2018.

- Kumar, S.; Bhagat, L.A.; Franklin, A.A.; Jin, J. Multi-neural network based tiled 360° video caching with Mobile Edge Computing. J. Netw. Comput. Appl. 2022, 201, 103342.

- Yu, Z.; Liu, J.; Liu, S.; Yang, Q. Co-Optimizing Latency and Energy with Learning Based 360° Video Edge Caching Policy. In Proceedings of the 2022 IEEE Wireless Communications and Networking Conference (WCNC), Austin, TX, USA, 10–13 April 2022.

- Zhang, L.; Chakareski, J. UAV-Assisted Edge Computing and Streaming for Wireless Virtual Reality: Analysis, Algorithm Design, and Performance Guarantees. IEEE Trans. Veh. Technol. 2022, 71, 3267–3275.

- ITU-T. Vocabulary for Performance, Quality of Service and Quality of Experience. 2017. Available online: https://www.itu.int/rec/T-REC-P.10-201711-I/en (accessed on 1 June 2022).

- Azevedo, R.G.D.A.; Birkbeck, N.; De Simone, F.; Janatra, I.; Adsumilli, B.; Frossard, P. Visual Distortions in 360° Videos. IEEE Trans. Circuits Syst. Video Technol. 2020, 30, 2524–2537.

- Tran, H.T.; Ngoc, N.P.; Pham, C.T.; Jung, Y.J.; Thang, T.C. A subjective study on QoE of 360 video for VR communication. In Proceedings of the 2017 IEEE 19th International Workshop on Multimedia Signal Processing (MMSP), Luton, UK, 16–18 October 2017; IEEE: New York, NY, USA, 2017.

- Cummings, J.J.; Bailenson, J.N. How Immersive Is Enough? A Meta-Analysis of the Effect of Immersive Technology on User Presence. Media Psychol. 2014, 19, 272–309.

- Zou, W.; Yang, F.; Zhang, W.; Li, Y.; Yu, H. A Framework for Assessing Spatial Presence of Omnidirectional Video on Virtual Reality Device. IEEE Access 2018, 6, 44676–44684.

- Salomoni, P.; Prandi, C.; Roccetti, M.; Casanova, L.; Marchetti, L.; Marfia, G. Diegetic user interfaces for virtual environments with HMDs: A user experience study with Oculus Rift. J. Multimodal User Interfaces 2017, 11, 173–184.

- Van Kasteren, A.; Brunnström, K.; Hedlund, J.; Snijders, C. Quality of experience of 360 video—Subjective and eye-tracking assessment of encoding and freezing distortions. Multimed. Tools Appl. 2022, 81, 9771–9802.

- Sweller, J. Cognitive load theory. In Psychology of Learning and Motivation; Elsevier: Amsterdam, The Netherlands, 2011; pp. 37–76.

- Zhu, H.; Li, T.; Wang, C.; Jin, W.; Murali, S.; Xiao, M.; Ye, D.; Li, M. EyeQoE: A Novel QoE Assessment Model for 360-degree Videos Using Ocular Behaviors. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2022, 6, 39.

- Wang, Y.; Liu, D.; Ma, S.; Wu, F.; Gao, W. Spherical Coordinates Transform-Based Motion Model for Panoramic Video Coding. IEEE J. Emerg. Sel. Top. Circuits Syst. 2019, 9, 98–109.

- Yu, M.; Lakshman, H.; Girod, B. A framework to evaluate omnidirectional video coding schemes. In Proceedings of the 2015 IEEE International Symposium on Mixed and Augmented Reality, Fukuoka, Japan, 29 September–3 October 2015; IEEE: New York, NY, USA, 2015.

- Alshina, E.; Boyce, J.; Abbas, A.; Ye, Y. JVET common test conditions and evaluation procedures for 360° video. JVET document, JVET-G1030. In Proceedings of the Joint Video Exploration Team (JVET) of ITU-T SG 16 WP 3 and ISO/IEC JTC 1/SC 29/WG 11 7th Meeting, Torino, Italy, 13–21 July 2017.

- Lo, W.-C.; Fan, C.-L.; Yen, S.-C.; Hsu, C.-H. Performance measurements of 360 video streaming to head-mounted displays over live 4G cellular networks. In Proceedings of the 2017 19th Asia-Pacific Network Operations and Management Symposium (APNOMS), Seoul, Korea, 27–29 September 2017; IEEE: New York, NY, USA, 2017.

- Yu, M.; Lakshman, H.; Girod, B. Content adaptive representations of omnidirectional videos for cinematic virtual reality. In Proceedings of the 3rd International Workshop on Immersive Media Experiences, Brisbane, Australia, 26–30 October 2015.

- Sun, Y.; Lu, A.; Yu, L. Weighted-to-Spherically-Uniform Quality Evaluation for Omnidirectional Video. IEEE Signal Process. Lett. 2017, 24, 1408–1412.

- Chen, S.; Zhang, Y.; Li, Y.; Chen, Z.; Wang, Z. Spherical structural similarity index for objective omnidirectional video quality assessment. In Proceedings of the 2018 IEEE International Conference on Multimedia and Expo (ICME), San Diego, CA, USA, 23–27 July 2018; IEEE: New York, NY, USA, 2018.

- Zhou, Y.; Yu, M.; Ma, H.; Shao, H.; Jiang, G. Weighted-to-spherically-uniform SSIM objective quality evaluation for panoramic video. In Proceedings of the 2018 14th IEEE International Conference on Signal Processing (ICSP), Beijing, China, 12–16 August 2018; IEEE: New York, NY, USA, 2018.

- Rai, Y.; Le Callet, P.; Guillotel, P. Which saliency weighting for omni directional image quality assessment? In Proceedings of the 2017 Ninth International Conference on Quality of Multimedia Experience (QoMEX), Erfurt, Germany, 31 May–2 June 2017; IEEE: New York, NY, USA, 2017.

- Sun, W.; Min, X.; Zhai, G.; Gu, K.; Duan, H.; Ma, S. MC360IQA: A Multi-channel CNN for Blind 360-Degree Image Quality Assessment. IEEE J. Sel. Top. Signal Process. 2019, 14, 64–77.

- Singla, A.; Fremerey, S.; Robitza, W.; Lebreton, P.; Raake, A. Comparison of subjective quality evaluation for HEVC encoded omnidirectional videos at different bit-rates for UHD and FHD resolution. In Proceedings of the Thematic Workshops of ACM Multimedia 2017, Mountain View, CA, USA, 23–27 October 2017.

- Fei, Z.; Wang, F.; Wang, J.; Xie, X. QoE Evaluation Methods for 360-Degree VR Video Transmission. IEEE J. Sel. Top. Signal Process. 2019, 14, 78–88.

- Croci, S.; Ozcinar, C.; Zerman, E.; Cabrera, J.; Smolic, A. Voronoi-based Objective Quality Metrics for Omnidirectional Video. In Proceedings of the 2019 Eleventh International Conference on Quality of Multimedia Experience (QoMEX), Berlin, Germany, 5–7 June 2019; pp. 1–6.

- Croci, S.; Ozcinar, C.; Zerman, E.; Knorr, S.; Cabrera, J.; Smolic, A. Visual attention-aware quality estimation framework for omnidirectional video using spherical Voronoi diagram. Qual. User Exp. 2020, 5, 4.

- Upenik, E.; Rerabek, M.; Ebrahimi, T. On the performance of objective metrics for omnidirectional visual content. In Proceedings of the 2017 Ninth International Conference on Quality of Multimedia Experience (QoMEX), Erfurt, Germany, 31 May–2 June 2017; IEEE: New York, NY, USA, 2017.

- Xu, M.; Li, C.; Chen, Z.; Wang, Z.; Guan, Z. Assessing Visual Quality of Omnidirectional Videos. IEEE Trans. Circuits Syst. Video Technol. 2019, 29, 3516–3530.

- Li, C.; Xu, M.; Jiang, L.; Zhang, S.; Tao, X. Viewport Proposal CNN for 360° Video Quality Assessment. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 10169–10178.

- Marandi, R.Z.; Madeleine, P.; Omland, Ø.; Vuillerme, N.; Samani, A. Eye movement characteristics reflected fatigue development in both young and elderly individuals. Sci. Rep. 2018, 8, 13148.

- O’Dwyer, J.; Murray, N.; Flynn, R. Eye-based Continuous Affect Prediction. In Proceedings of the 2019 8th International Conference on Affective Computing and Intelligent Interaction (ACII), Cambridge, UK, 3–6 September 2019; IEEE: New York, NY, USA, 2019.

- Egan, D.; Brennan, S.; Barrett, J.; Qiao, Y.; Timmerer, C.; Murray, N. An evaluation of Heart Rate and ElectroDermal Activity as an objective QoE evaluation method for immersive virtual reality environments. In Proceedings of the 2016 Eighth International Conference on Quality of Multimedia Experience (QoMEX), Lisbon, Portugal, 6–8 June 2016; IEEE: New York, NY, USA, 2016.

- Singla, A.; Göring, S.; Raake, A.; Meixner, B.; Koenen, R.; Buchholz, T. Subjective quality evaluation of tile-based streaming for omnidirectional videos. In Proceedings of the 10th ACM Multimedia Systems Conference, Amherst, MA, USA, 18–21 June 2019.

- Fan, C.-L.; Hung, T.-H.; Hsu, C.-H. Modeling the User Experience of Watching 360° Videos with Head-Mounted Displays. ACM Trans. Multimedia Comput. Commun. Appl. 2022, 18, 1–23.

| VR Stage | Resolution | Equivalent TV Resolution | Bandwidth | Latency (ms) |
|---|---|---|---|---|
| Early-stage VR | 1K × 1K@visual field 2D_30fps_8bit_4K | 240p | 25 Mbps | 40 |
| Entry-level VR | 2K × 2K@visual field 2D_30fps_8bit_8K | SD | 100 Mbps | 30 |
| Advanced VR | 4K × 4K@visual field 2D_60fps_10bit_12K | HD | 400 Mbps | 20 |
| Extreme VR | 8K × 8K@visual field 3D_120fps_12bit_24K | 4K | 2.35 Gbps | 10 |
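Requirements of this size are a main motivation for viewport-based delivery, since a user sees only part of the sphere at any moment. The Python sketch below is an idealized back-of-the-envelope calculation under stated assumptions: the viewport is approximated as a yaw/pitch box, and bits are assumed to be spread evenly over the equirectangular (ERP) frame, which real encoders do not do. It also shows that a box at the equator covers a smaller share of ERP pixels than of the sphere's surface, because ERP oversamples the regions near the poles. The function names are hypothetical.

```python
import math


def erp_pixel_fraction(h_fov_deg: float, v_fov_deg: float,
                       pitch_deg: float = 0.0) -> float:
    """Share of an ERP frame's pixels inside a yaw/pitch box.
    ERP pixels are uniform in longitude and latitude."""
    lat1 = max(-90.0, pitch_deg - v_fov_deg / 2)
    lat2 = min(90.0, pitch_deg + v_fov_deg / 2)
    return (min(h_fov_deg, 360.0) / 360.0) * ((lat2 - lat1) / 180.0)


def sphere_area_fraction(h_fov_deg: float, v_fov_deg: float,
                         pitch_deg: float = 0.0) -> float:
    """Share of the sphere's surface inside the same yaw/pitch box.
    On a unit sphere, a lon/lat patch has area dlon * (sin(lat2) - sin(lat1))."""
    lat1 = math.radians(max(-90.0, pitch_deg - v_fov_deg / 2))
    lat2 = math.radians(min(90.0, pitch_deg + v_fov_deg / 2))
    dlon = math.radians(min(h_fov_deg, 360.0))
    return dlon * (math.sin(lat2) - math.sin(lat1)) / (4 * math.pi)


def viewport_only_bitrate(full_bitrate_mbps: float, h_fov_deg: float,
                          v_fov_deg: float) -> float:
    """Idealized bitrate if only the viewport's ERP pixels were sent,
    assuming bits are spread evenly over the frame (an assumption)."""
    return full_bitrate_mbps * erp_pixel_fraction(h_fov_deg, v_fov_deg)


if __name__ == "__main__":
    print(erp_pixel_fraction(90, 90))             # 0.125
    print(round(sphere_area_fraction(90, 90), 4))  # 0.1768
```

For a 90° × 90° viewport at the equator, this gives 12.5% of the ERP pixels but about 17.7% (√2/8) of the sphere's surface; the real saving depends on the codec, the tiling and how much margin around the viewport is also streamed.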

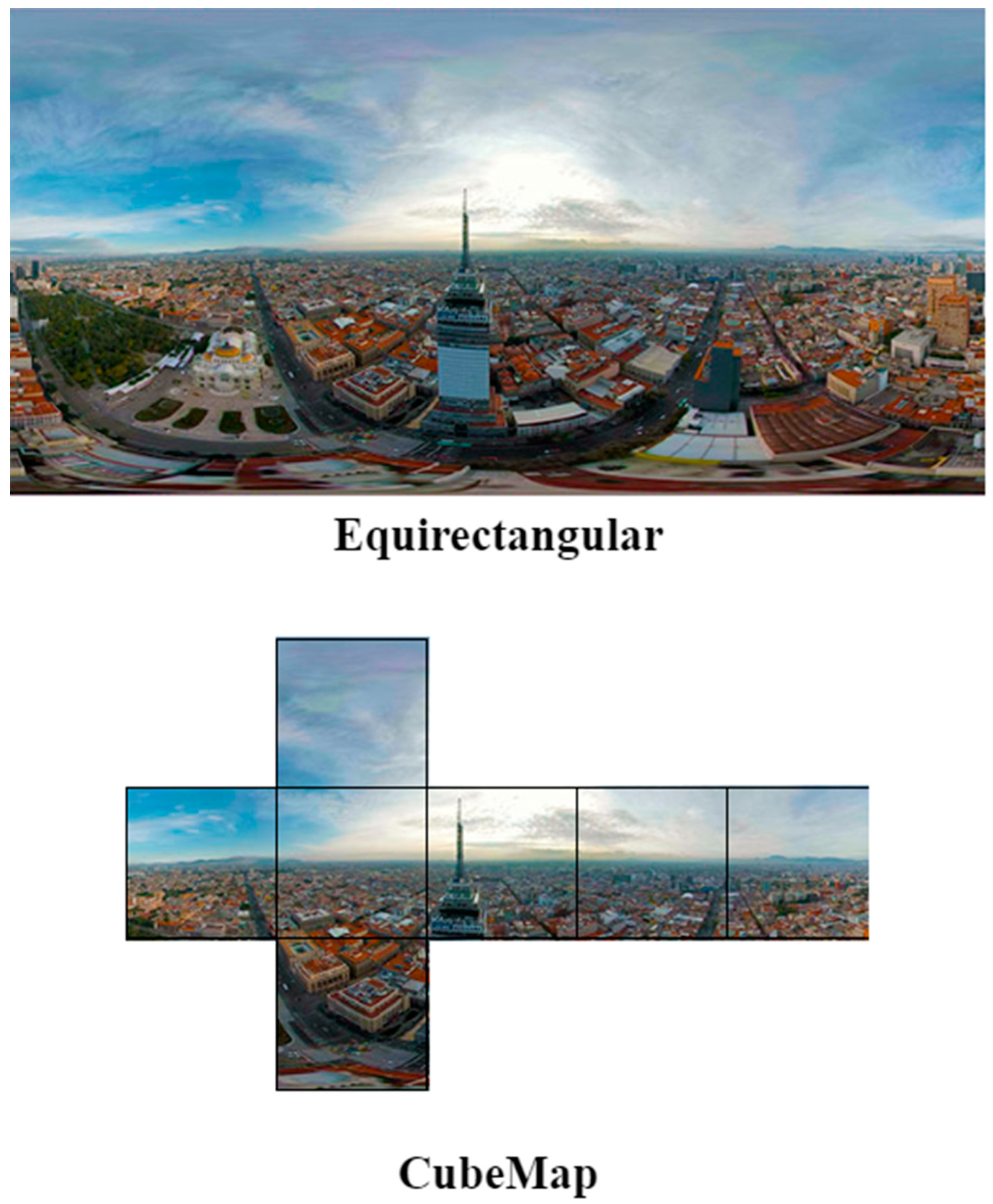

| Step | Process |
|---|---|
| Stitching | Videos captured by multiple cameras or by a single omnidirectional camera are stitched together: planar models, such as cubic and affine transformation models, match up the various camera images, and the views are merged and warped onto the surface of a sphere [3]. For coding and transmission, the 360-degree sphere is projected onto a 2D planar format such as Cubemap Projection (CMP) or Equirectangular Projection (ERP). |
| Encoding and segmentation | The origin server splits the video file into segments a few seconds long and encodes each segment in multiple bitrate (quality level) variants. |
| Delivery | The encoded video segments are delivered to client devices over a content delivery network (CDN). |
| Decoding, rendering and playback | The client device decodes the streamed data and plays the video. With adaptive bitrate streaming, it automatically adjusts the picture quality according to network conditions and the user's viewing direction. |
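The last step above, in which the client adjusts quality to network conditions, is left to the client implementation: DASH describes how the segments and their bitrate variants are advertised, not how a player chooses among them. The Python sketch below shows one simple throughput-based rule as an illustration only; the harmonic-mean throughput estimate, the five-sample window, the 0.8 safety factor and the function names are assumptions, not taken from the cited works.

```python
from statistics import harmonic_mean


def estimate_throughput(samples_mbps: list[float], window: int = 5) -> float:
    """Harmonic mean of the most recent segment-download throughputs.
    The harmonic mean is pulled towards low samples, so a single fast
    download does not inflate the estimate."""
    if not samples_mbps:
        raise ValueError("need at least one throughput sample")
    return harmonic_mean(samples_mbps[-window:])


def select_representation(ladder_mbps: list[float], throughput_mbps: float,
                          safety: float = 0.8) -> float:
    """Return the highest bitrate in the ladder that fits within
    safety * throughput; fall back to the lowest bitrate otherwise."""
    budget = safety * throughput_mbps
    fitting = [b for b in sorted(ladder_mbps) if b <= budget]
    return fitting[-1] if fitting else min(ladder_mbps)


if __name__ == "__main__":
    ladder = [5.0, 10.0, 20.0, 40.0]    # hypothetical bitrate ladder (Mbps)
    history = [50.0, 10.0]              # measured per-segment throughput (Mbps)
    est = estimate_throughput(history)  # about 16.7 Mbps
    print(select_representation(ladder, est))  # 10.0
```

A viewport-adaptive 360-degree client would apply a rule like this per tile rather than per frame, spending more of the budget on tiles inside the predicted viewport.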

| Source | Viewport Prediction Scheme | Description |
|---|---|---|
| [42] | Historical viewport movement | Predicts future viewports with Linear Regression (LR) and Ridge Regression (RR), using viewing data collected from 130 users. |
| [43] | Cross-user similarity | CUB360 (Cross-Users Behaviors) combines k-NN and LR, taking into account both the user's own information and cross-user behavior to forecast future viewports. |
| [44] | Popularity-based model | Predicts viewports from tile popularity, i.e., tiles that are visited more frequently at a given time (possibly because of interesting content in the video), together with an evaluation of the rate-distortion curve of each tile. |
| [45] | Popularity-based model | Similar to [44]; the popularity of each displayed viewport (as a heatmap) and the rate-distortion function of each tile representation for the segments of interest are periodically provided to clients during downloading. |
| [46] | Content analysis + popularity | Sensor- and content-based prediction mechanisms, similar to [47], using LR. When a transition occurs due to insufficient bandwidth, tile popularity alone determines the tile quality levels. |
| [48] | k-Nearest Neighbors (k-NN) | Improves the accuracy of traditional LR by exploiting cross-user viewing behavior: prior users' data are used to identify common scan paths, and future FoVs from those users are assigned a higher probability. |
| [47] | Deep content analysis | Jointly leverages sensor features (HMD orientation) and content-related information (image saliency maps and motion maps) in an LSTM to predict future viewer fixations. The estimated viewing probability of each equirectangular tile can then be used in probability-based quality optimization. |
| [49] | 3D-CNN (convolutional neural network) | Uses a 3D-CNN to extract spatio-temporal features (saliency, motion, and FoV information) from the videos; performs better than [47]. |
| [50] | Content analysis + cross-user similarity | PARIMA, a hybrid of Passive Aggressive (PA) Regression and Auto-Regressive Integrated Moving Average (ARIMA) time series models, predicts viewports from user behavior and applies the YOLOv3 algorithm to the stitched image to recognize objects and retrieve their bounding-box coordinates in each frame. |
| [51] | Content analysis + cross-user similarity | Two dynamic viewport selection (DVS) schemes change the streamed area according to content complexity and user head movement, ensuring viewport accessibility and delay-free viewing for VR users. For higher accuracy, DVS1 focuses on the adjusted prediction distance between two prediction mechanisms, whereas DVS2 selects the tiles for the next segment based on the modified difference between actual and predicted viewports, driven by content complexity variations. |
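
The regression-based schemes in the table above (e.g., [42]) extrapolate the recent head trajectory. A minimal sketch of that idea, not the authors' implementation: fit yaw(t) = a + b·t to the last few orientation samples by ridge regression that penalizes only the slope (with `lam = 0` it reduces to ordinary linear regression), then evaluate the line at the target time. Yaw samples are unwrapped first so that crossing the ±180° seam does not look like a 360° jump. Only yaw is shown; pitch could be handled the same way without unwrapping. Function names and parameters are illustrative.

```python
def unwrap_deg(angles):
    """Remove ±360° jumps so consecutive yaw samples differ by less than 180°."""
    out = [angles[0]]
    for a in angles[1:]:
        step = (a - out[-1] + 180.0) % 360.0 - 180.0  # shortest signed step
        out.append(out[-1] + step)
    return out


def predict_yaw(times, yaws, horizon, lam=0.0):
    """Predict yaw `horizon` seconds after the last sample.

    Fits yaw = a + b*t by ridge regression with an unpenalized intercept;
    lam = 0 gives ordinary least-squares linear regression.
    """
    ys = unwrap_deg(yaws)
    n = len(times)
    t_mean = sum(times) / n
    y_mean = sum(ys) / n
    sxy = sum((t - t_mean) * (y - y_mean) for t, y in zip(times, ys))
    sxx = sum((t - t_mean) ** 2 for t in times)
    denom = sxx + lam
    slope = sxy / denom if denom > 0 else 0.0  # a single sample gives a flat prediction
    t_target = times[-1] + horizon
    yaw = y_mean + slope * (t_target - t_mean)
    return (yaw + 180.0) % 360.0 - 180.0  # wrap back into [-180, 180)
```

With samples at 0, 0.1, 0.2 and 0.3 s moving steadily from 0° to 30°, a 0.2 s horizon predicts 50°; a large `lam` shrinks the slope and pulls the prediction toward the window mean (15°), the usual bias-variance trade-off of ridge regression.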

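Cross-user schemes such as CUB360 [43] and [48] rely on the observation that viewers of the same video tend to follow similar scan paths. The sketch below illustrates only the k-nearest-neighbour part of that idea, under simplifying assumptions that are not taken from the cited works: prior users' yaw traces are stored as lists sampled on the same time steps as the current session (a hypothetical layout), neighbours are chosen by angular distance at the current time step, and the prediction is the circular mean of the neighbours' yaw at the target time step.

```python
import math


def angular_dist(a, b):
    """Smallest absolute difference between two yaw angles, in degrees."""
    return abs((a - b + 180.0) % 360.0 - 180.0)


def circular_mean(angles):
    """Mean direction of yaw angles in degrees, robust to the ±180° seam."""
    s = sum(math.sin(math.radians(a)) for a in angles)
    c = sum(math.cos(math.radians(a)) for a in angles)
    return math.degrees(math.atan2(s, c))


def knn_predict_yaw(current_yaw, prior_traces, now_idx, future_idx, k=3):
    """Predict yaw at `future_idx` from the k prior users closest at `now_idx`.

    prior_traces: one yaw list per prior user, all sampled on the same
    time steps as the current session (hypothetical data layout).
    """
    if not prior_traces:
        raise ValueError("need at least one prior user trace")
    ranked = sorted(prior_traces,
                    key=lambda trace: angular_dist(trace[now_idx], current_yaw))
    neighbours = ranked[:k]
    return circular_mean([trace[future_idx] for trace in neighbours])
```

A circular mean is used instead of an arithmetic mean so that neighbours at 179° and −179° average to 180° rather than 0°.
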
| Source | Technique | Scope |
|---|---|---|
| [11] |  | Motion detection and prediction. |
| [52] |  | Reduce bandwidth requirements and improve video quality. |
| [53] |  | Improve variable bitrate (VBR). |
| [54] |  | Improve adaptive VR streaming. |
| [42] |  | Viewpoint prediction and bandwidth prediction. |
| [55] |  | Viewpoint prediction and bandwidth prediction. |
| [56] |  | Improve constant bitrate (CBR). |
| [58] |  | Viewpoint prediction and optimal bitrate allocation. |
| [59] |  | Viewpoint prediction and rate adaptation. |
| [60] |  | Reactive caching and viewport prediction. |
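
Several entries above pair bandwidth prediction with rate adaptation. A common, simple baseline, shown here as a hedged illustration rather than the method of any cited work, predicts the available bandwidth as the harmonic mean of recent segment-download throughputs (which damps short spikes) and requests the highest representation whose bitrate fits within a safety margin of that prediction. The 0.8 safety factor and the bitrate ladder are illustrative assumptions.

```python
def harmonic_mean(samples):
    """Harmonic mean of positive throughput samples (bits per second)."""
    if not samples:
        raise ValueError("need at least one throughput sample")
    return len(samples) / sum(1.0 / s for s in samples)


def select_bitrate(ladder_bps, throughput_samples_bps, safety=0.8):
    """Pick the highest bitrate not exceeding safety * predicted bandwidth.

    Falls back to the lowest representation when nothing fits.
    """
    budget = safety * harmonic_mean(throughput_samples_bps)
    feasible = [b for b in sorted(ladder_bps) if b <= budget]
    return feasible[-1] if feasible else min(ladder_bps)
```

For example, with a ladder of 1, 2.5, 5 and 8 Mbps and recent throughputs of 6, 4 and 6 Mbps, the harmonic mean is about 5.14 Mbps, the budget about 4.11 Mbps, and the 2.5 Mbps representation is chosen.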

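Viewport prediction is typically coupled with bitrate allocation across tiles, as in the probability-based quality optimization of [47] or the optimal bitrate allocation of [58]. The sketch below is a simple greedy heuristic, not the algorithm of either work: every tile starts at the lowest quality, then the upgrade with the largest gain in expected utility per extra bit is applied repeatedly while the bandwidth budget allows. It assumes a shared per-tile bitrate ladder and a logarithmic utility of bitrate weighted by each tile's predicted viewing probability; both are illustrative modeling choices.

```python
import math


def allocate_tiles(probs, ladder_bps, budget_bps):
    """Greedy, probability-weighted bitrate allocation across tiles.

    probs: predicted viewing probability of each tile.
    ladder_bps: per-tile bitrates, assumed identical for all tiles.
    budget_bps: total bitrate available for the segment.
    Returns the chosen ladder index for each tile.
    """
    ladder = sorted(set(ladder_bps))
    levels = [0] * len(probs)
    spent = ladder[0] * len(probs)
    if spent > budget_bps:
        raise ValueError("budget cannot cover the lowest quality for every tile")
    while True:
        best, best_score = None, 0.0
        for i, p in enumerate(probs):
            lv = levels[i]
            if lv + 1 == len(ladder):
                continue  # tile already at the top quality
            extra = ladder[lv + 1] - ladder[lv]
            if spent + extra > budget_bps:
                continue  # upgrade would exceed the budget
            # Expected utility gain per extra bit, with log-bitrate utility (assumed).
            score = p * (math.log(ladder[lv + 1]) - math.log(ladder[lv])) / extra
            if score > best_score:
                best, best_score = i, score
        if best is None:
            return levels
        spent += ladder[levels[best] + 1] - ladder[levels[best]]
        levels[best] += 1
```

With viewing probabilities 0.7, 0.2, 0.1 and 0.0 for four tiles, a ladder of 1, 2 and 4 units and a budget of 8 units, the most likely tile reaches the top level, the second tile gets one upgrade, and the remaining tiles stay at the lowest quality.
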
| Network Approach | Scope |
|---|---|
| 5G network | Edge computing and edge caching bring content and computing resources closer to the client. |
| 6G network | AI-powered 6G services for AR, VR, XR, and MR applications reduce device battery consumption, on-device computation requirements, and end-to-end latency. |
| Network caching | Caching VR content within the network reduces bandwidth consumption and latency. |
| Information Centric Networking (ICN) | A content-centric, location-independent model that allows content to be retrieved over any available network interface. |
| Mobile Edge Computing (MEC) | Reduces the heavy computing burden on VR devices: the MEC server takes over some computation and rendering tasks and delivers the results to the mobile VR device. |
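
The caching entries rest on a simple mechanism: popular tile segments are kept close to users so that repeated requests do not cross the backhaul. The sketch below uses least-recently-used (LRU) eviction, a generic policy assumed here for illustration (the cited works may use other policies); the cache keys (segment, tile, quality) and byte sizes are hypothetical. It counts hits, misses, and the bytes fetched from upstream, which is the backhaul traffic that caching aims to reduce.

```python
from collections import OrderedDict


class EdgeTileCache:
    """Byte-capacity LRU cache for tile segments at an edge node (illustrative)."""

    def __init__(self, capacity_bytes):
        self.capacity = capacity_bytes
        self.used = 0
        self.store = OrderedDict()   # key -> size in bytes, oldest first
        self.hits = 0
        self.misses = 0
        self.backhaul_bytes = 0      # bytes fetched from the origin/CDN

    def request(self, key, size_bytes):
        """Serve one request; return True on a cache hit."""
        if key in self.store:
            self.store.move_to_end(key)          # mark as most recently used
            self.hits += 1
            return True
        self.misses += 1
        self.backhaul_bytes += size_bytes        # miss: fetch from upstream
        if size_bytes > self.capacity:
            return False                         # too large to cache; pass through
        while self.used + size_bytes > self.capacity:
            _, evicted = self.store.popitem(last=False)  # evict least recently used
            self.used -= evicted
        self.store[key] = size_bytes
        self.used += size_bytes
        return False
```

Because tiles in popular viewports are requested by many users, they tend to remain cached, while rarely viewed tiles are evicted first.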

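The MEC entry describes offloading computation and rendering to an edge server. Whether offloading pays off is often reasoned about with a simple latency model: local execution costs CPU cycles divided by the device's clock rate, while offloading adds the uplink transfer of the input, execution on the faster edge server, and the downlink transfer of the rendered result. The sketch below encodes that model; it ignores queuing, energy, and propagation delay, and all numbers in the example are illustrative assumptions, not measurements.

```python
def local_latency_s(cycles, f_local_hz):
    """Time to run the task on the VR device itself."""
    return cycles / f_local_hz


def offload_latency_s(input_bits, cycles, output_bits,
                      uplink_bps, f_edge_hz, downlink_bps):
    """Upload the input, compute at the edge, download the rendered output."""
    return input_bits / uplink_bps + cycles / f_edge_hz + output_bits / downlink_bps


def should_offload(input_bits, cycles, output_bits,
                   f_local_hz, uplink_bps, f_edge_hz, downlink_bps):
    """True when the modeled offloading latency beats local execution."""
    offload = offload_latency_s(input_bits, cycles, output_bits,
                                uplink_bps, f_edge_hz, downlink_bps)
    return offload < local_latency_s(cycles, f_local_hz)
```

For instance, a per-frame task of 3 × 10^7 cycles takes 20 ms on a 1.5 GHz device, whereas sending 100 kbit of input over a 100 Mbps uplink, processing it on an edge server providing 10^10 cycles per second, and returning a 4 Mbit frame over a 1 Gbps downlink takes about 8 ms in this model, so offloading would be chosen.
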
Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wong, E.S.; Wahab, N.H.A.; Saeed, F.; Alharbi, N. 360-Degree Video Bandwidth Reduction: Technique and Approaches Comprehensive Review. Appl. Sci. 2022, 12, 7581. https://doi.org/10.3390/app12157581

Wong ES, Wahab NHA, Saeed F, Alharbi N. 360-Degree Video Bandwidth Reduction: Technique and Approaches Comprehensive Review. Applied Sciences. 2022; 12(15):7581. https://doi.org/10.3390/app12157581

Chicago/Turabian Style: Wong, En Sing, Nur Haliza Abdul Wahab, Faisal Saeed, and Nouf Alharbi. 2022. "360-Degree Video Bandwidth Reduction: Technique and Approaches Comprehensive Review" Applied Sciences 12, no. 15: 7581. https://doi.org/10.3390/app12157581

APA Style: Wong, E. S., Wahab, N. H. A., Saeed, F., & Alharbi, N. (2022). 360-Degree Video Bandwidth Reduction: Technique and Approaches Comprehensive Review. Applied Sciences, 12(15), 7581. https://doi.org/10.3390/app12157581