Analysis of Score-Level Fusion Rules for Deepfake Detection

Abstract

1. Introduction

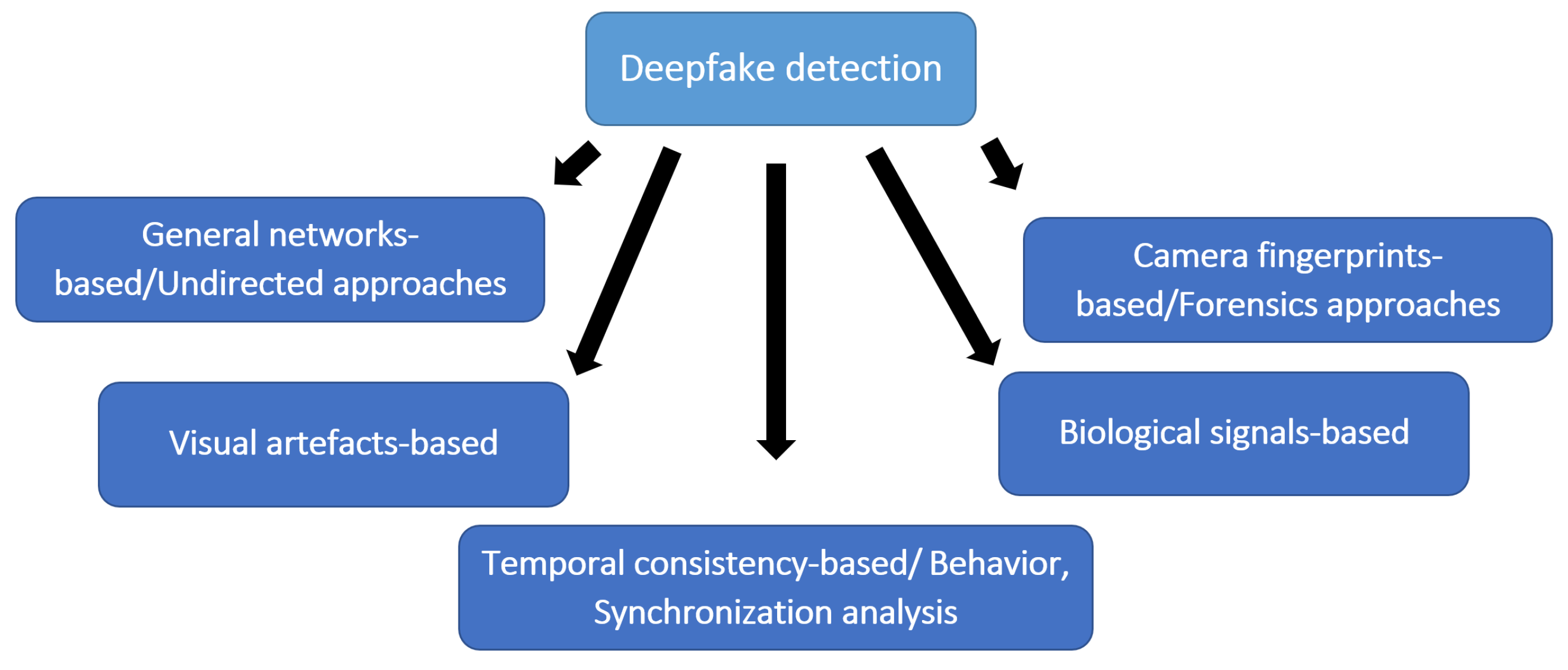

2. Related Work

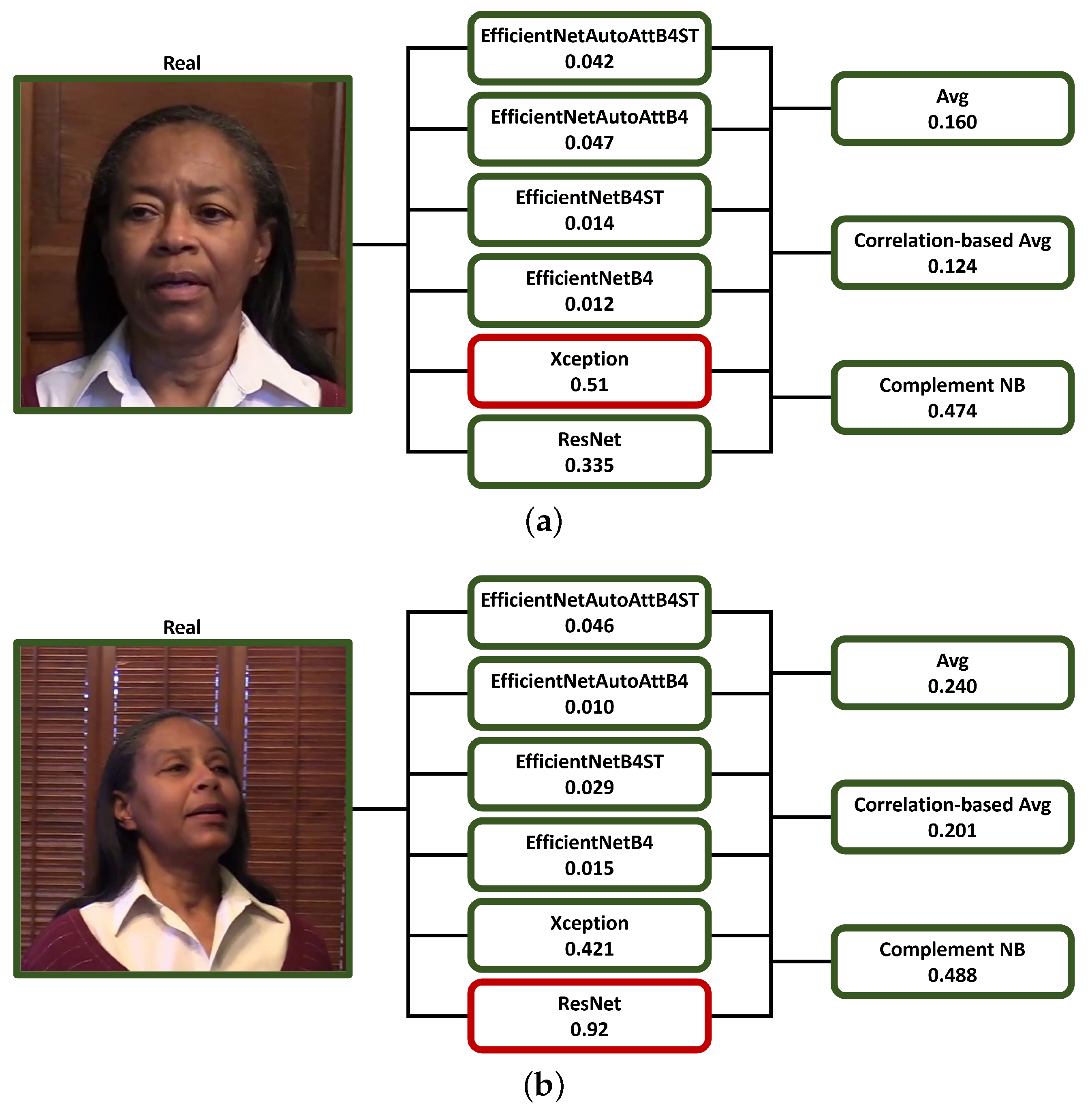

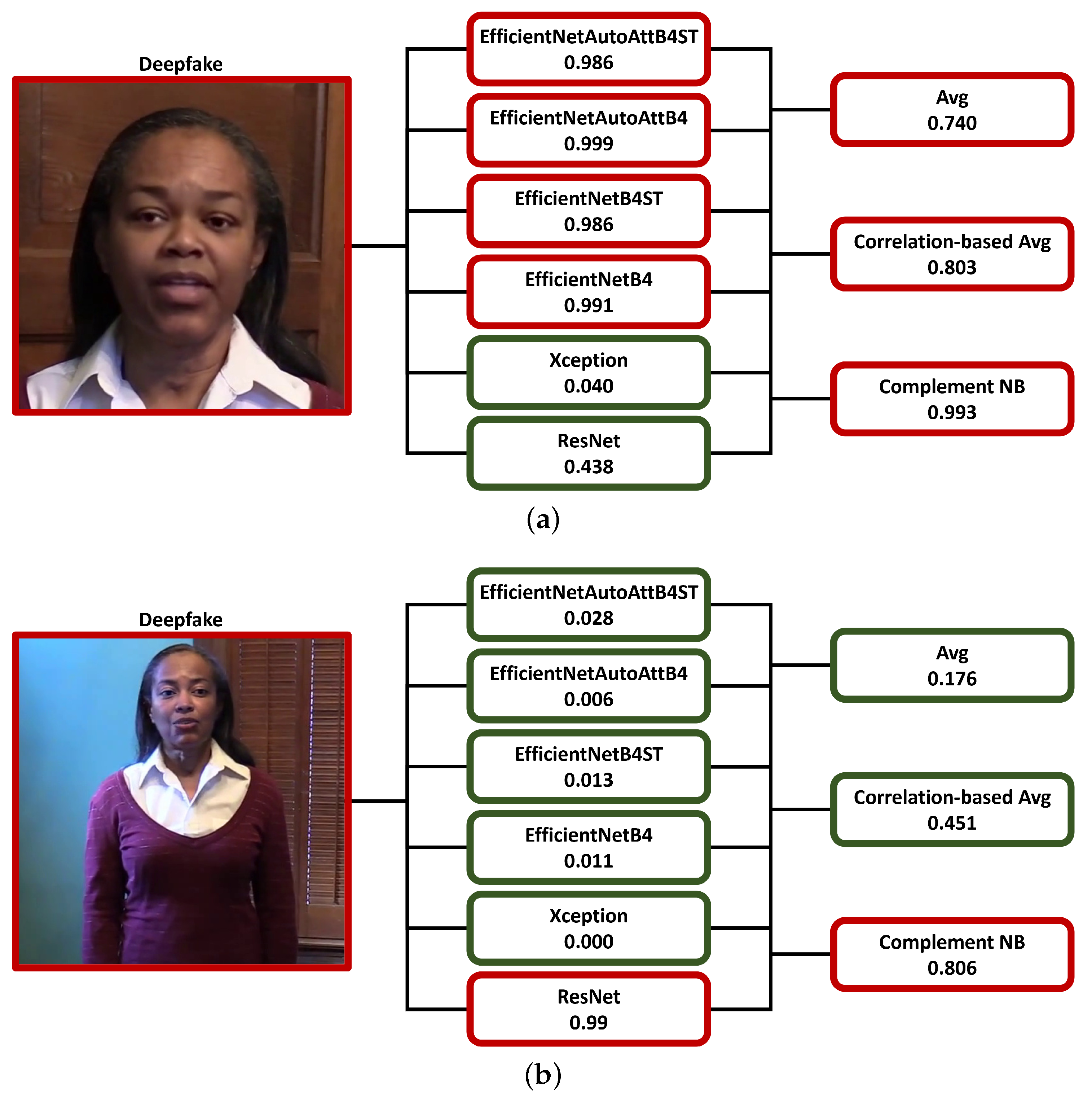

- A visual artifact-based detector, consisting of a ResNet50 model fine-tuned on facial images manipulated to simulate resolution inconsistencies [24].

- A general network-based detector, consisting of an XceptionNet model fine-tuned on deepfake data [25].

- Four EfficientNet models that exploit different attention mechanisms and training techniques [26]. In particular: (i) EfficientNetB4, a network designed through a multi-objective optimization of accuracy and FLOPS, trained with a classical end-to-end approach; (ii) EfficientNetB4ST, the same EfficientNetB4 architecture trained with a Siamese strategy based on the triplet loss function; (iii) EfficientNetAutoAttB4, a variant of the standard EfficientNetB4 architecture, trained with a classical end-to-end approach, that adds an attention mechanism allowing the network to focus on the most relevant areas of the feature maps; and (iv) EfficientNetAutoAttB4ST, the EfficientNetAutoAttB4 architecture trained with the Siamese strategy.
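The Siamese strategy mentioned above trains the network with a triplet loss. A minimal sketch of that loss for a single (anchor, positive, negative) triplet follows, assuming Euclidean distances between embedding vectors and a margin hyperparameter; these choices are illustrative and are not taken from the training setup of [26].

```python
import math
from typing import Sequence


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two embedding vectors of equal length."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(anchor: Sequence[float], positive: Sequence[float],
                 negative: Sequence[float], margin: float = 1.0) -> float:
    """Hinge-style triplet loss: max(0, d(a, p) - d(a, n) + margin).

    The anchor and the positive share a class (e.g. two real faces), while the
    negative belongs to the other class (e.g. a fake face). The loss reaches
    zero once the negative is farther from the anchor than the positive by at
    least `margin`. Some formulations use squared distances instead.
    """
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)


# Toy 2-D embeddings (illustrative values only).
print(triplet_loss([0.0, 0.0], [0.0, 1.0], [3.0, 0.0]))  # 0.0: negative already far enough
print(triplet_loss([0.0, 0.0], [0.0, 1.0], [1.0, 0.0]))  # 1.0: negative as close as positive
```

Minimizing this loss pulls same-class embeddings together and pushes the other class at least `margin` away, which is the intuition behind training a detector with triplets rather than with single labeled samples.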

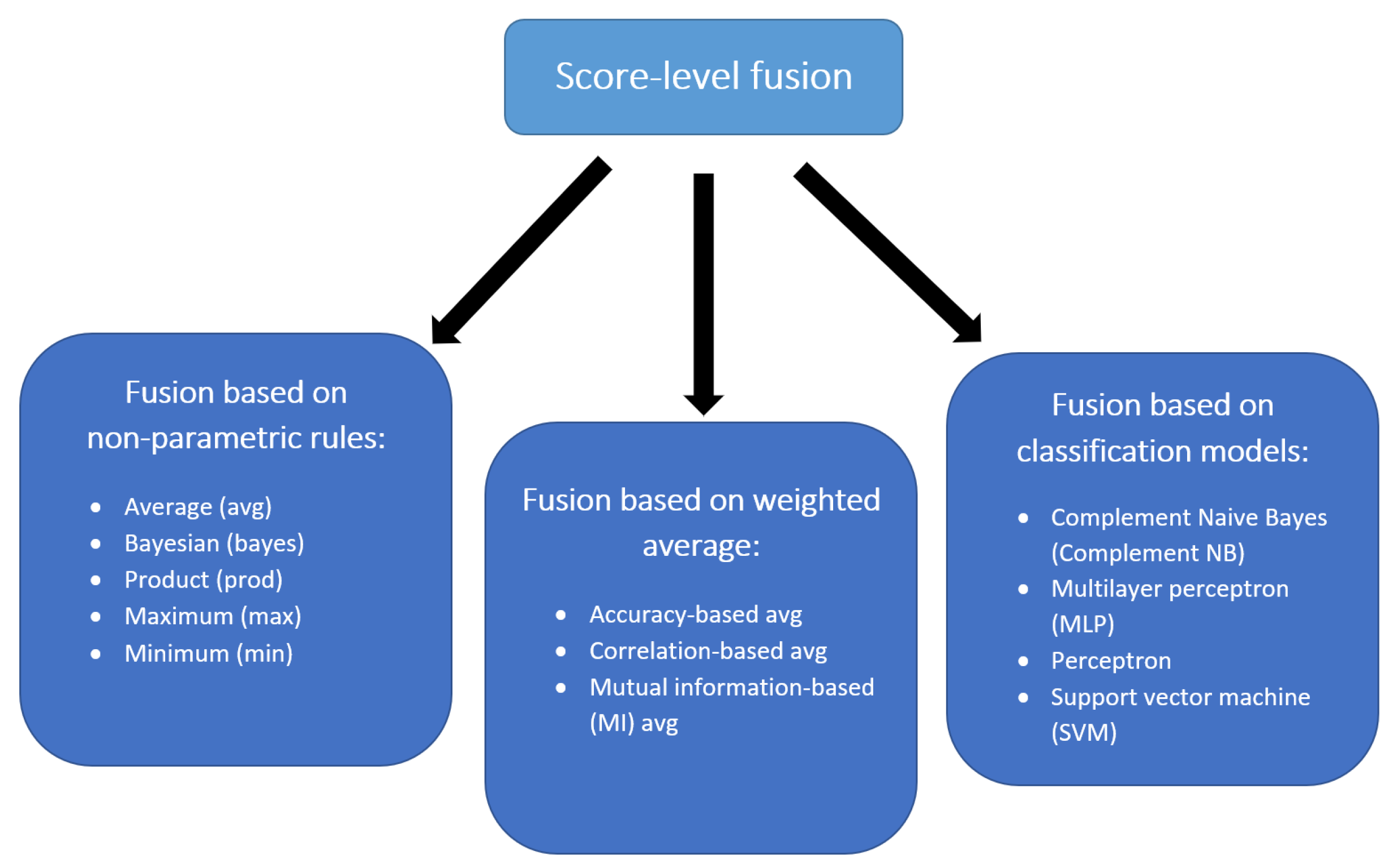

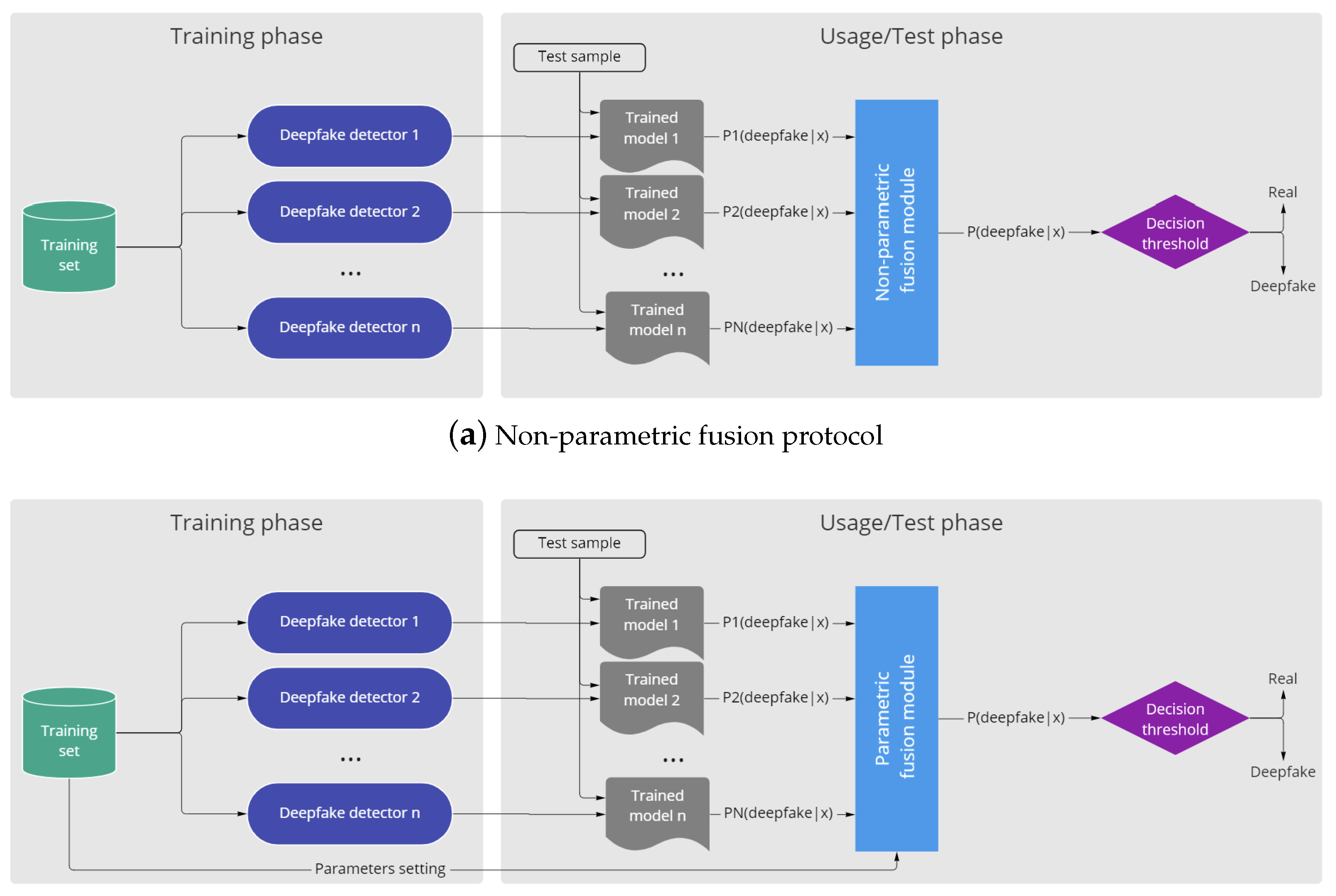

3. Ensemble Methods for Deepfake Detection

3.1. Non-Parametric Fusion Methods

3.2. Fusion Methods Based on Weighted Average

3.3. Fusion Methods Based on Classification Models

4. Experimental Results

4.1. Experimental Protocol

4.2. Results

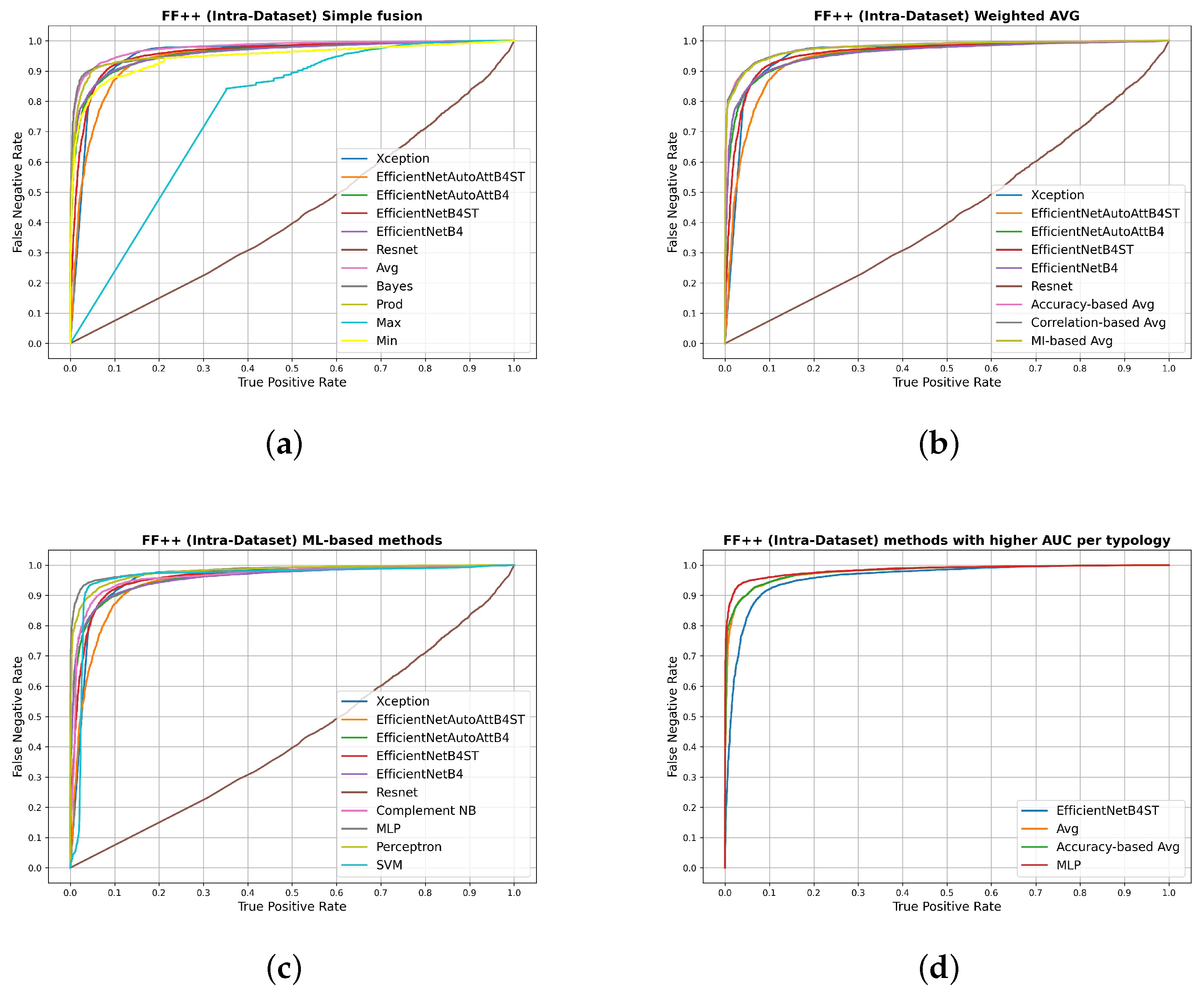

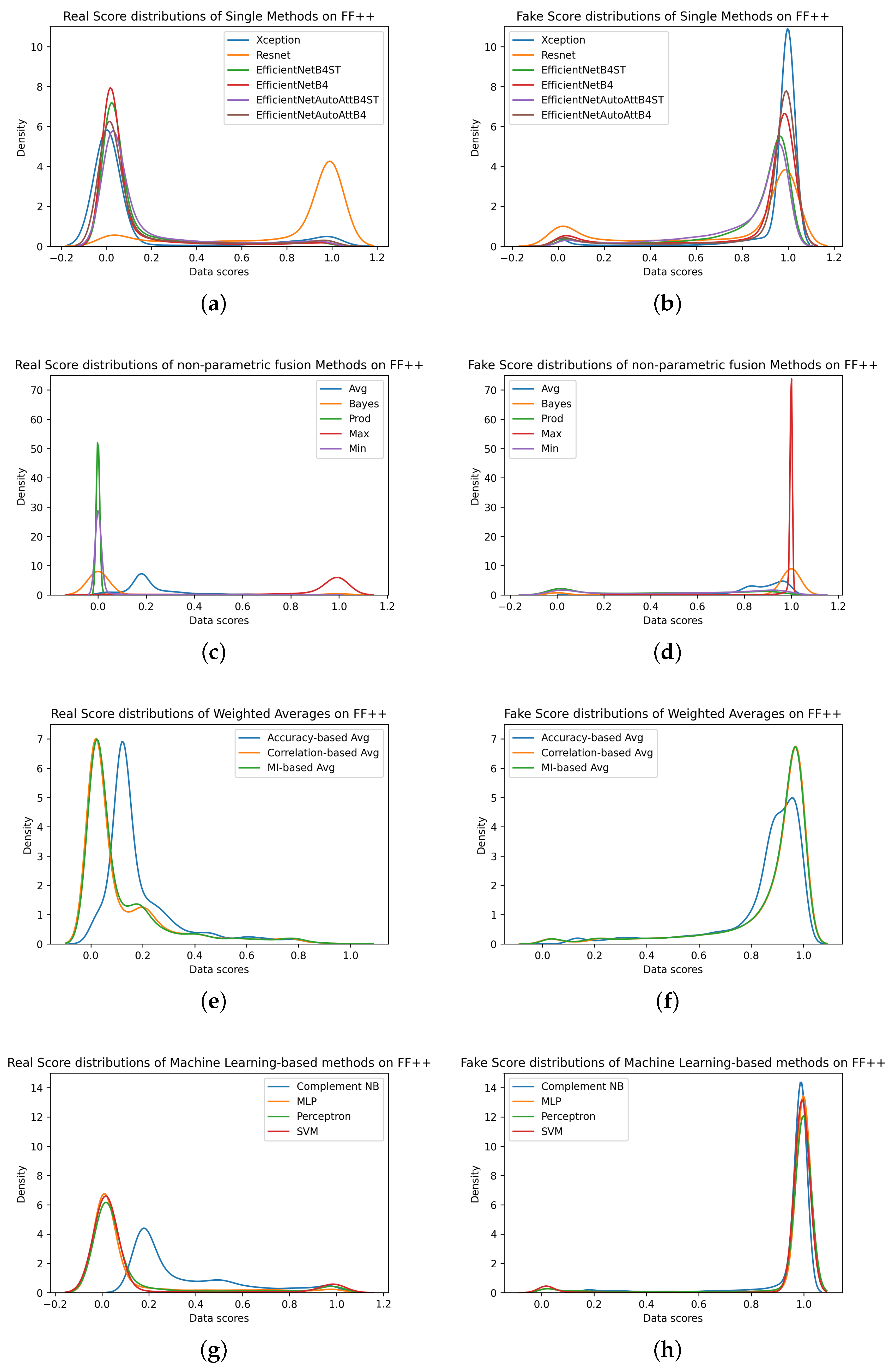

4.2.1. Intra-Dataset Scenario

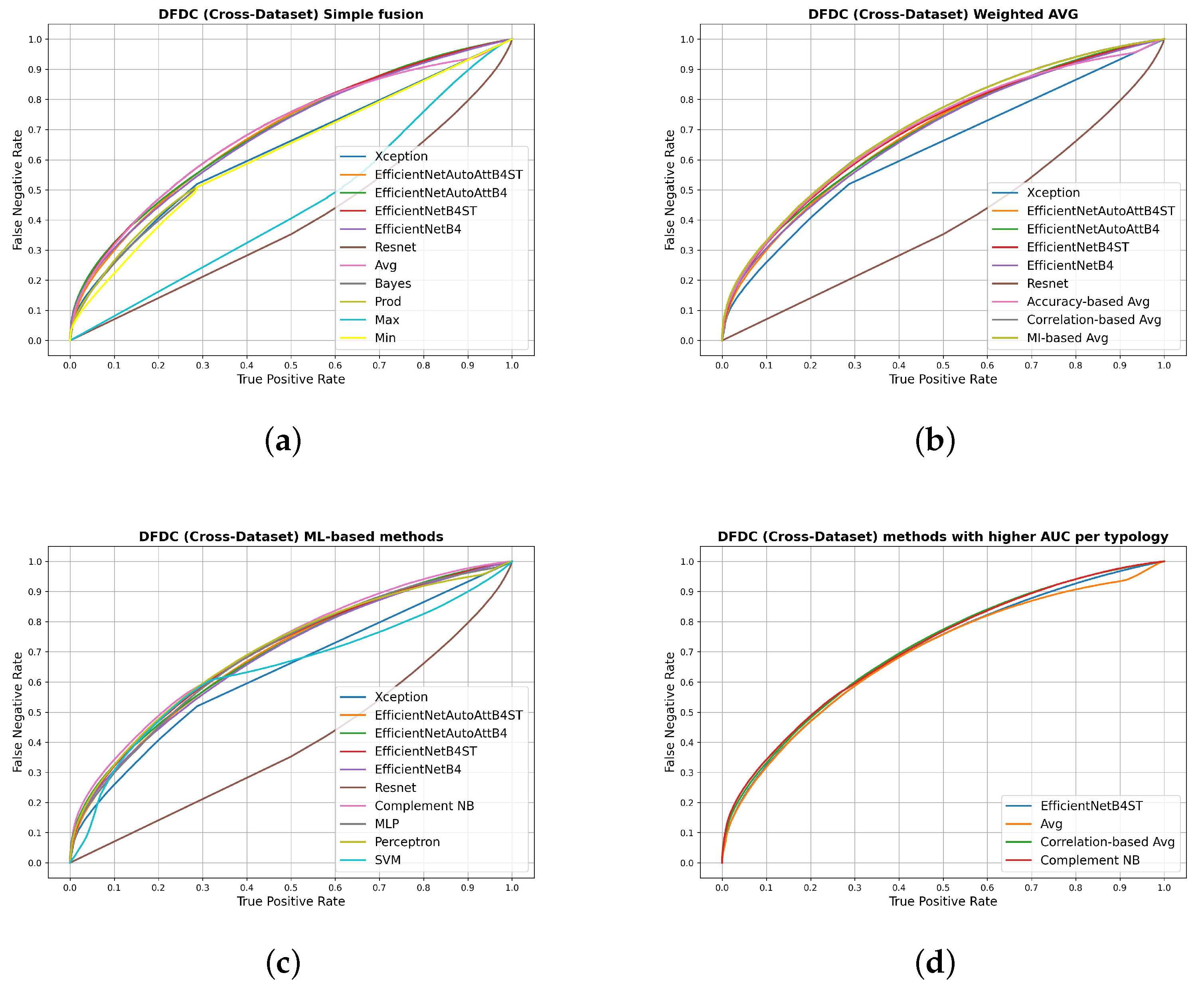

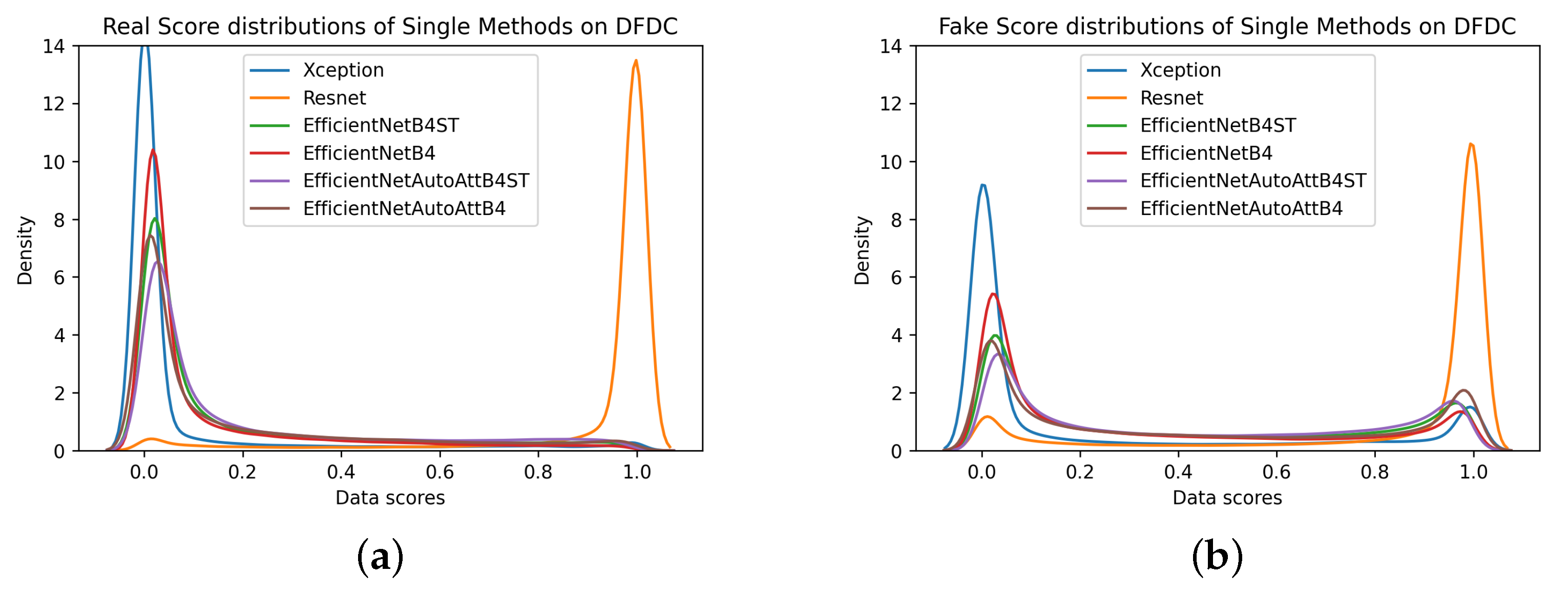

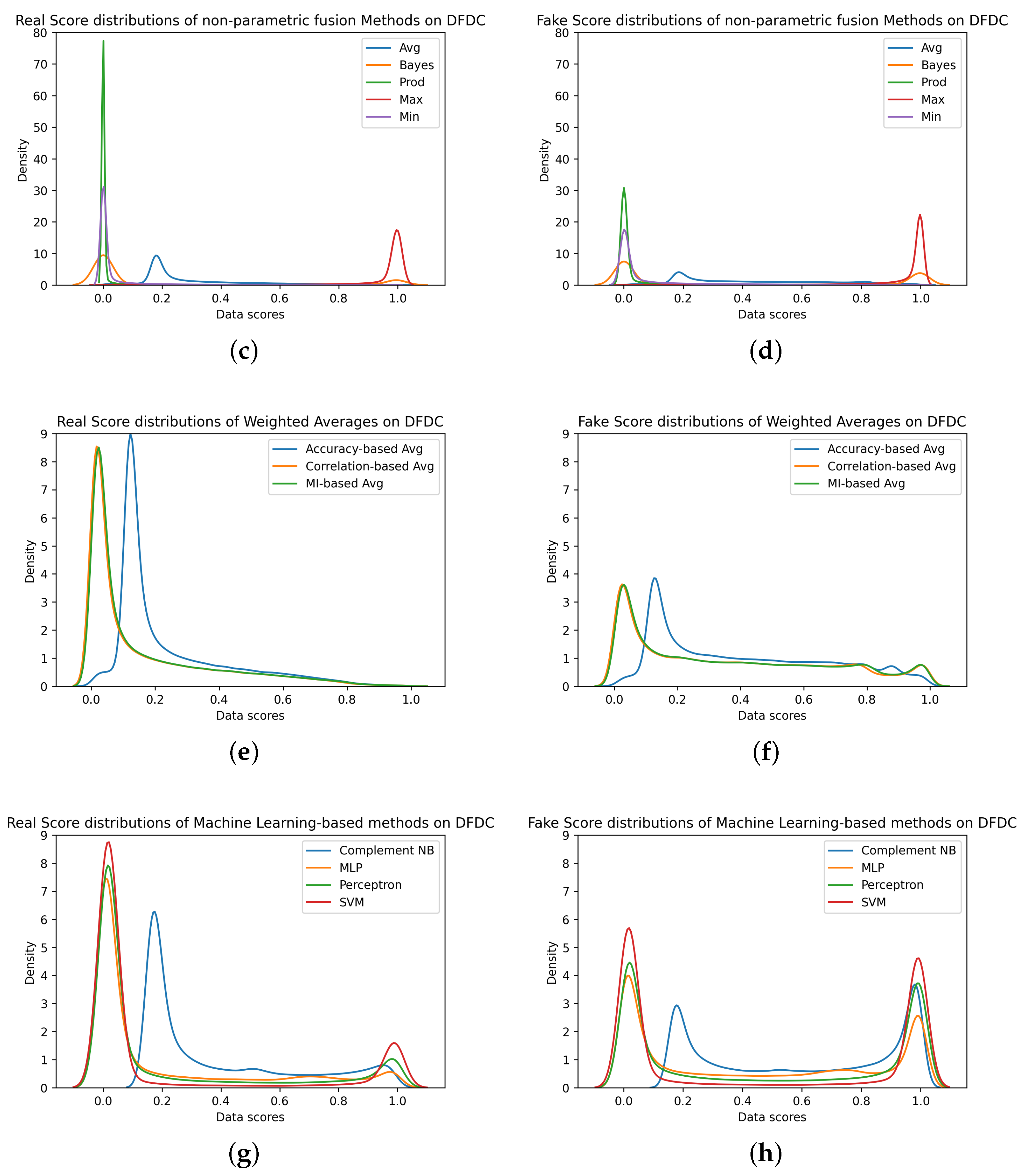

4.2.2. Cross-Dataset Scenario

- Non-parametric fusion rules fail to separate the two distributions. Because the experiments are fully controlled, we can observe that, contrary to our earlier findings [10], the Maximum rule brings no benefit in detecting never-before-seen deepfake samples. In fact, it merely shifts the score mode towards 1.

- Weighted-average methods lower the densities of both the fake modes and the real mode, resulting in distributions with high variability. This yields a slight improvement in cross-dataset performance, most noticeable when the operational point is set at the EER.

- Methods based on classification models increase the density of the fake mode centered at 1 (yielding a distribution with lower variability) but decrease the density of the real mode, increasing its variability. This yields a slight improvement in cross-dataset performance, most noticeable at operational points that are stringent in terms of FPR (e.g., a threshold at 1% FPR).
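The fixed rules discussed above (avg, prod, max, min and Bayes in the abbreviations list) and a weighted average can be sketched on a single sample's detector scores as follows. This is a minimal illustration, not the authors' implementation: the Bayes rule is written in one common form (product of posteriors normalized under conditionally independent detectors and equal class priors), and the accuracy-based weights are hypothetical values chosen for the example. The toy real sample shows how one overconfident detector drags the Maximum rule towards 1.

```python
import math
from typing import Sequence

RULES = ("avg", "prod", "max", "min", "bayes")


def fuse(scores: Sequence[float], rule: str) -> float:
    """Combine per-detector fake scores in [0, 1] with a fixed (non-parametric) rule."""
    if not scores:
        raise ValueError("need at least one score")
    if rule == "avg":
        return sum(scores) / len(scores)
    if rule == "prod":
        return math.prod(scores)
    if rule == "max":
        return max(scores)
    if rule == "min":
        return min(scores)
    if rule == "bayes":
        # Assumed form: P(fake | scores) for conditionally independent
        # detectors and equal class priors (normalized product of posteriors).
        p_fake = math.prod(scores)
        p_real = math.prod(1.0 - s for s in scores)
        total = p_fake + p_real
        return 0.5 if total == 0.0 else p_fake / total
    raise ValueError(f"unknown rule: {rule}")


def weighted_avg(scores: Sequence[float], weights: Sequence[float]) -> float:
    """Weighted average of scores; the weights are normalized to sum to 1."""
    if len(scores) != len(weights) or sum(weights) <= 0:
        raise ValueError("need one weight per score, with a positive sum")
    return sum(s * w for s, w in zip(scores, weights)) / sum(weights)


# Toy real (pristine) sample: two detectors say "real", one is overconfident
# that it is fake. Max inherits the outlier; avg and bayes dilute it.
real_sample = [0.10, 0.20, 0.90]
print({rule: round(fuse(real_sample, rule), 3) for rule in RULES})
# {'avg': 0.4, 'prod': 0.018, 'max': 0.9, 'min': 0.1, 'bayes': 0.2}

# Hypothetical per-detector validation accuracies used as weights.
print(round(weighted_avg(real_sample, [0.95, 0.90, 0.60]), 3))  # 0.333
```

Since the maximum of a set of scores is never below any of them, the Maximum rule can only push the fused scores of real samples upwards as well, which is consistent with the observation that it shifts the score mode towards 1 without improving separation.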

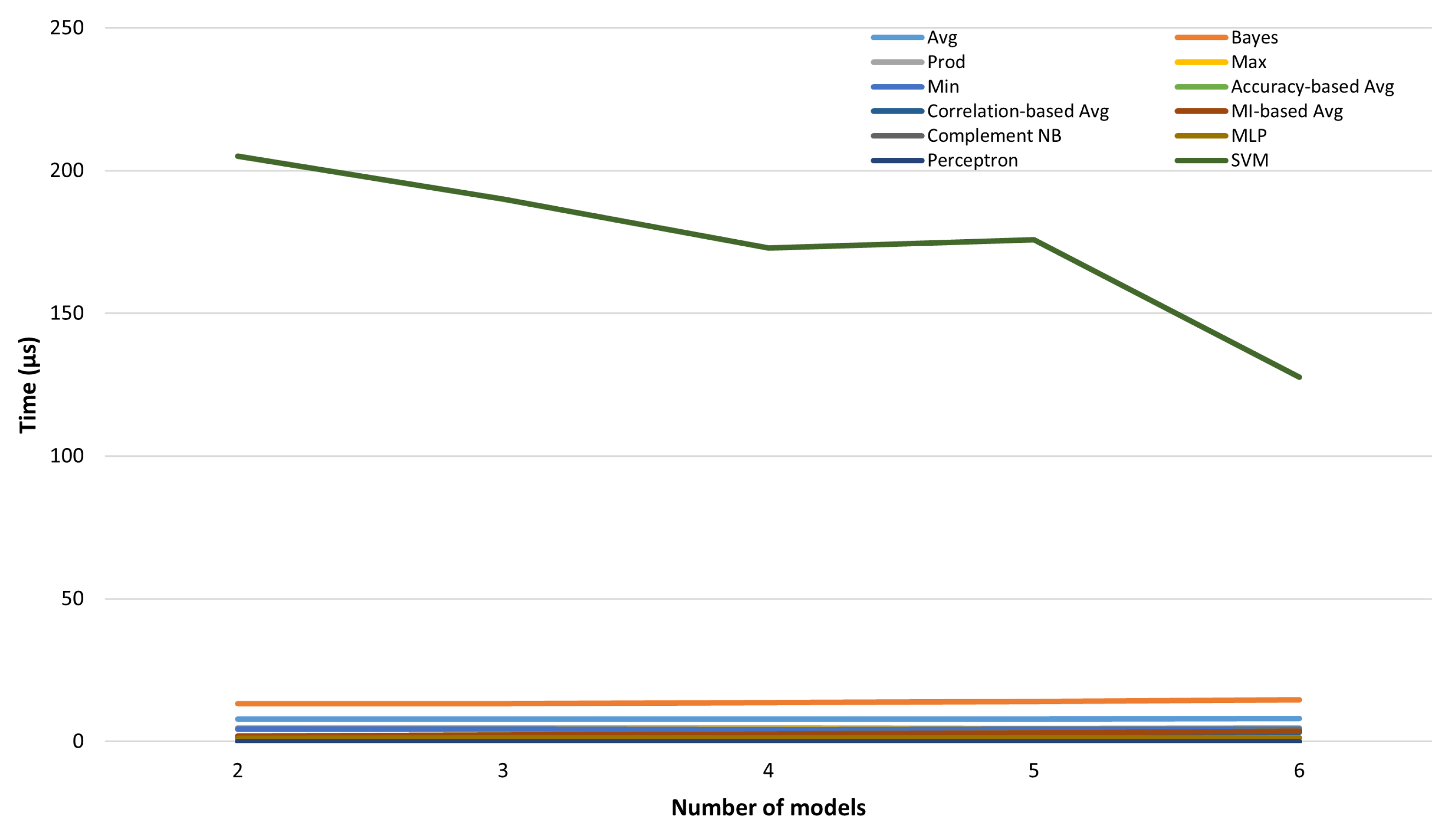

4.3. Fusion Time Analysis

5. Discussion and Conclusions

- Based on the application context, the designer should establish whether known (controlled tests) or unknown (uncontrolled or partially controlled tests) types of data and manipulations are expected.

- The designer should define the operational point at which the detection system will work, based on the relative weight assigned to type I and type II errors.

- The designer should use a validation set to analyze the score distributions and select the fusion rule that best fits their needs.

- In an ideal application context with controlled tests, the designer should select the fusion rule that makes the real and fake score distributions of the validation set unimodal, with small variability, and centered on 0 and 1, respectively.

- Methods based on the weighted average generate unimodal distributions with high variability and are therefore better suited to applications whose decision threshold is set at the system’s EER.

- Methods based on classification models generate bimodal distributions with modes at 0 and 1, small variability, and low density for the mode associated with misclassifications; they are therefore better suited to applications with a stringent decision threshold, set, for example, at 1% FPR or 1% FNR.
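The validation-based selection recommended in these guidelines can be sketched as below. This is a minimal illustration under stated assumptions: "fake" is treated as the positive class, a sample is flagged as fake when its fused score reaches the threshold, the EER is approximated at the observed threshold where FPR and FNR are closest, and the helper names (`fnr_at_fpr`, `approx_eer`, `select_rule`) and the two sets of validation scores are hypothetical toy values, not outputs from the paper.

```python
import math
from typing import Dict, List, Sequence, Tuple

# Assumed convention: "fake" is the positive class, and a sample is flagged
# as fake when its fused score is >= the decision threshold t.


def fpr(real: Sequence[float], t: float) -> float:
    """Share of real samples wrongly flagged as fake at threshold t."""
    return sum(s >= t for s in real) / len(real)


def fnr(fake: Sequence[float], t: float) -> float:
    """Share of fake samples missed at threshold t."""
    return sum(s < t for s in fake) / len(fake)


def candidate_thresholds(real: Sequence[float], fake: Sequence[float]) -> List[float]:
    """Every observed score plus +inf (flag nothing) covers all distinct operating points."""
    return sorted(set(real) | set(fake)) + [math.inf]


def fnr_at_fpr(real: Sequence[float], fake: Sequence[float], target_fpr: float) -> float:
    """FNR at the lowest threshold whose FPR does not exceed target_fpr."""
    for t in candidate_thresholds(real, fake):
        if fpr(real, t) <= target_fpr:
            return fnr(fake, t)
    return 1.0  # not reached: t = +inf always yields FPR = 0


def approx_eer(real: Sequence[float], fake: Sequence[float]) -> float:
    """Approximate EER: mean of FPR and FNR at the threshold where they are closest."""
    t = min(candidate_thresholds(real, fake),
            key=lambda c: abs(fpr(real, c) - fnr(fake, c)))
    return (fpr(real, t) + fnr(fake, t)) / 2


def select_rule(validation: Dict[str, Tuple[Sequence[float], Sequence[float]]],
                criterion: str, target_fpr: float = 0.01) -> str:
    """Name of the fusion rule with the lowest validation error for the criterion."""
    def error(rule: str) -> float:
        real, fake = validation[rule]
        if criterion == "eer":
            return approx_eer(real, fake)
        if criterion == "fnr_at_fpr":
            return fnr_at_fpr(real, fake, target_fpr)
        raise ValueError(f"unknown criterion: {criterion}")
    return min(validation, key=error)


# Toy validation scores (real, fake) for two hypothetical fused outputs.
validation = {
    "avg": ([0.1, 0.2, 0.3, 0.4, 0.7], [0.35, 0.5, 0.6, 0.65, 0.8]),
    "mlp": ([0.0, 0.0, 0.05, 0.5, 0.6], [0.1, 0.2, 0.95, 0.99, 1.0]),
}
print(select_rule(validation, "eer"))         # avg (approx. EER 0.2 vs 0.4)
print(select_rule(validation, "fnr_at_fpr"))  # mlp (FNR 0.4 vs 0.8 at FPR <= 1%)
```

With only five real samples per output, a 1% FPR budget tolerates no false positive, so the stringent criterion favors the output that keeps more fake scores above its highest real score, while the EER criterion favors the other one. On toy data only, this mirrors the dependence of the best rule on the chosen operational point.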

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| FLOPS | Floating Point Operations Per Second |
| PCC | Pearson Correlation Coefficient |
| RBF | Radial Basis Function |
| MI | Mutual Information |
| MLP | Multilayer Perceptron |
| SVM | Support Vector Machine |
| Complement NB | Complement Naive Bayes |
| FF++ | FaceForensics++ |
| DFDC | Deepfake Detection Challenge |
| GAN | Generative Adversarial Network |
| FPR | False Positive Rate |
| TPR | True Positive Rate |
| FNR | False Negative Rate |
| ROC | Receiver Operating Characteristic |
| AUC | Area Under the Curve |
| EER | Equal Error Rate |
| avg | Average fusion rule |
| Bayes | Bayesian fusion rule |
| prod | Product fusion rule |
| max | Maximum fusion rule |
| min | Minimum fusion rule |

References

1. Yu, P.; Xia, Z.; Fei, J.; Lu, Y. A survey on deepfake video detection. IET Biom. 2021, 10, 607–624.

2. Yadav, D.; Salmani, S. Deepfake: A survey on facial forgery technique using generative adversarial network. In Proceedings of the 2019 International Conference on Intelligent Computing and Control Systems (ICCS), Madurai, India, 15–17 May 2019; pp. 852–857.

3. Chesney, B.; Citron, D. Deep fakes: A looming challenge for privacy, democracy, and national security. Calif. L. Rev. 2019, 107, 1753.

4. Feldstein, S. How artificial intelligence systems could threaten democracy. Conversation 2019.

5. Delfino, R.A. Pornographic deepfakes: The case for federal criminalization of revenge porn’s next tragic act. Actual Probs. Econ. L. 2020, 105.

6. Zi, B.; Chang, M.; Chen, J.; Ma, X.; Jiang, Y.G. WildDeepfake: A Challenging Real-World Dataset for Deepfake Detection. In Proceedings of the 28th ACM International Conference on Multimedia, Virtual, Seattle, WA, USA, 12–16 October 2020; pp. 2382–2390.

7. Zhang, T. Deepfake generation and detection, a survey. Multimed. Tools Appl. 2022, 81, 6259–6276.

8. Tolosana, R.; Romero-Tapiador, S.; Vera-Rodriguez, R.; Gonzalez-Sosa, E.; Fierrez, J. DeepFakes detection across generations: Analysis of facial regions, fusion, and performance evaluation. Eng. Appl. Artif. Intell. 2022, 110, 104673.

9. Rana, M.S.; Sung, A.H. DeepfakeStack: A Deep Ensemble-based Learning Technique for Deepfake Detection. In Proceedings of the 2020 Seventh IEEE CSCloud/2020 Sixth IEEE EdgeCom, New York, NY, USA, 1–3 August 2020; pp. 70–75.

10. Concas, S.; Gao, J.; Cuccu, C.; Orrù, G.; Feng, X.; Marcialis, G.L.; Puglisi, G.; Roli, F. Experimental Results on Multi-modal Deepfake Detection. In Proceedings of the Image Analysis and Processing—ICIAP 2022; Sclaroff, S., Distante, C., Leo, M., Farinella, G.M., Tombari, F., Eds.; Springer: Cham, Switzerland, 2022; pp. 164–175.

11. Tolosana, R.; Vera-Rodriguez, R.; Fierrez, J.; Morales, A.; Ortega-Garcia, J. Deepfakes and beyond: A Survey of face manipulation and fake detection. Inf. Fusion 2020, 64, 131–148.

12. Afchar, D.; Nozick, V.; Yamagishi, J.; Echizen, I. MesoNet: A Compact Facial Video Forgery Detection Network. In Proceedings of the 2018 IEEE International Workshop on Information Forensics and Security (WIFS), Hong Kong, China, 11–13 December 2018; pp. 1–7.

13. Marra, F.; Gragnaniello, D.; Cozzolino, D.; Verdoliva, L. Detection of GAN-Generated Fake Images over Social Networks. In Proceedings of the 2018 IEEE Conference on Multimedia Information Processing and Retrieval (MIPR), Miami, FL, USA, 12 April 2018; pp. 384–389.

14. Khalid, H.; Woo, S.S. OC-FakeDect: Classifying Deepfakes Using One-class Variational Autoencoder. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 14–19 June 2020; pp. 2794–2803.

15. Li, Y.; Lyu, S. Exposing DeepFake Videos By Detecting Face Warping Artifacts. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Long Beach, CA, USA, 16–17 June 2019.

16. Li, L.; Bao, J.; Zhang, T.; Yang, H.; Chen, D.; Wen, F.; Guo, B. Face X-Ray for More General Face Forgery Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 5000–5009.

17. Korshunov, P.; Marcel, S. Speaker Inconsistency Detection in Tampered Video. In Proceedings of the 2018 26th European Signal Processing Conference (EUSIPCO), Roma, Italy, 3–7 September 2018; pp. 2375–2379.

18. Agarwal, S.; Farid, H.; Gu, Y.; He, M.; Nagano, K.; Li, H. Protecting World Leaders Against Deep Fakes. In Proceedings of the CVPR Workshops, Long Beach, CA, USA, 16–20 June 2019.

19. Mittal, T.; Bhattacharya, U.; Chandra, R.; Bera, A.; Manocha, D. Emotions Do not Lie: An Audio-Visual Deepfake Detection Method Using Affective Cues. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 2823–2832.

20. Conotter, V.; Bodnari, E.; Boato, G.; Farid, H. Physiologically-based detection of computer generated faces in video. In Proceedings of the 2014 IEEE International Conference on Image Processing (ICIP), Paris, France, 27–30 October 2014; pp. 248–252.

21. Hernandez-Ortega, J.; Tolosana, R.; Fierrez, J.; Morales, A. DeepFakes Detection Based on Heart Rate Estimation: Single- and Multi-frame. In Handbook of Digital Face Manipulation and Detection: From DeepFakes to Morphing Attacks; Rathgeb, C., Tolosana, R., Vera-Rodriguez, R., Busch, C., Eds.; Springer: Cham, Switzerland, 2022; pp. 255–273.

22. Yu, N.; Davis, L.; Fritz, M. Attributing Fake Images to GANs: Learning and Analyzing GAN Fingerprints. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 7555–7565.

23. Marra, F.; Gragnaniello, D.; Verdoliva, L.; Poggi, G. Do GANs Leave Artificial Fingerprints? In Proceedings of the 2019 IEEE Conference on Multimedia Information Processing and Retrieval (MIPR), San Jose, CA, USA, 28–30 March 2019; pp. 506–511.

24. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

25. Rössler, A.; Cozzolino, D.; Verdoliva, L.; Riess, C.; Thies, J.; Niessner, M. FaceForensics++: Learning to Detect Manipulated Facial Images. In Proceedings of the ICCV 2019, Seoul, Korea, 27 October–2 November 2019; pp. 1–11.

26. Bonettini, N.; Cannas, E.D.; Mandelli, S.; Bondi, L.; Bestagini, P.; Tubaro, S. Video Face Manipulation Detection Through Ensemble of CNNs. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 5012–5019.

27. Ross, A.; Nandakumar, K. Fusion, Score-Level. In Encyclopedia of Biometrics; Li, S.Z., Jain, A., Eds.; Springer: Boston, MA, USA, 2009; pp. 611–616.

28. Noore, A.; Singh, R.; Vatsa, M. Fusion, Sensor-Level. In Encyclopedia of Biometrics; Li, S.Z., Jain, A., Eds.; Springer: Boston, MA, USA, 2009; pp. 616–621.

29. Osadciw, L.; Veeramachaneni, K. Fusion, Decision-Level. In Encyclopedia of Biometrics; Li, S.Z., Jain, A., Eds.; Springer: Boston, MA, USA, 2009; pp. 593–597.

30. Sun, F.; Zhang, N.; Xu, P.; Song, Z. Deepfake Detection Method Based on Cross-Domain Fusion. Secur. Commun. Netw. 2021, 2021, 2482942.

31. Zhao, L.; Zhang, M.; Ding, H.; Cui, X. MFF-Net: Deepfake Detection Network Based on Multi-Feature Fusion. Entropy 2021, 23, 1692.

32. Zhao, Z.; Wang, P.; Lu, W. Multi-Layer Fusion Neural Network for Deepfake Detection. Int. J. Digit. Crime Forensics 2021, 13, 26–39.

33. Tao, Q. Face Verification for Mobile Personal Devices; University of Twente: Enschede, The Netherlands, 2009.

34. Sim, H.M.; Asmuni, H.; Hassan, R.; Othman, R.M. Multimodal biometrics: Weighted score level fusion based on non-ideal iris and face images. Expert Syst. Appl. 2014, 41, 5390–5404.

35. Peng, J.; Abd El-Latif, A.A.; Li, Q.; Niu, X. Multimodal biometric authentication based on score level fusion of finger biometrics. Optik 2014, 125, 6891–6897.

36. Dass, S.C.; Nandakumar, K.; Jain, A.K. A principled approach to score level fusion in multimodal biometric systems. In Proceedings of the International Conference on Audio- and Video-Based Biometric Person Authentication, Hilton Rye Town, NY, USA, 20–22 July 2005; Springer: Berlin/Heidelberg, Germany, 2005; pp. 1049–1058.

37. Duda, R.O.; Hart, P.E.; Stork, D.G. Pattern Classification; John Wiley & Sons: Hoboken, NJ, USA, 2006.

38. Kabir, W.; Ahmad, M.O.; Swamy, M. Score reliability based weighting technique for score-level fusion in multi-biometric systems. In Proceedings of the 2016 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Placid, NY, USA, 7–10 March 2016; pp. 1–7.

39. Snelick, R.; Uludag, U.; Mink, A.; Indovina, M.; Jain, A. Large-scale evaluation of multimodal biometric authentication using state-of-the-art systems. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 450–455.

40. Chia, C.; Sherkat, N.; Nolle, L. Towards a best linear combination for multimodal biometric fusion. In Proceedings of the 2010 20th International Conference on Pattern Recognition, Istanbul, Turkey, 23–26 August 2010; pp. 1176–1179.

41. Poh, N.; Bengio, S. A Study of the Effects of Score Normalisation Prior to Fusion in Biometric Authentication Tasks. Technical Report, IDIAP. 2004. Available online: https://infoscience.epfl.ch/record/83130 (accessed on 18 June 2022).

42. Kraskov, A.; Stögbauer, H.; Grassberger, P. Estimating mutual information. Phys. Rev. E 2004, 69, 066138.

43. Ross, B.C. Mutual information between discrete and continuous data sets. PLoS ONE 2014, 9, e87357.

44. Kozachenko, L.F.; Leonenko, N.N. Sample estimate of the entropy of a random vector. Probl. Peredachi Informatsii 1987, 23, 9–16.

45. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

46. Rennie, J.D.; Shih, L.; Teevan, J.; Karger, D.R. Tackling the poor assumptions of naive bayes text classifiers. In Proceedings of the 20th International Conference on Machine Learning (ICML-03), Washington, DC, USA, 21–24 August 2003; pp. 616–623.

47. Dolhansky, B.; Bitton, J.; Pflaum, B.; Lu, J.; Howes, R.; Wang, M.; Ferrer, C.C. The DeepFake Detection Challenge Dataset. arXiv 2020, arXiv:2006.07397.

48. Cohen, J. Statistical Power Analysis for the Behavioral Sciences, 2nd ed.; Routledge: New York, NY, USA, 1988.

49. He, M.; Horng, S.J.; Fan, P.; Run, R.S.; Chen, R.J.; Lai, J.L.; Khan, M.K.; Sentosa, K.O. Performance evaluation of score level fusion in multimodal biometric systems. Pattern Recognit. 2010, 43, 1789–1800.

50. Horng, S.J.; Chen, Y.H.; Run, R.S.; Chen, R.J.; Lai, J.L.; Sentosa, K.O. An improved score level fusion in multimodal biometric systems. In Proceedings of the 2009 International Conference on Parallel and Distributed Computing, Applications and Technologies, Higashihiroshima, Japan, 8–11 December 2009; pp. 239–246.

51. Abderrahmane, H.; Noubeil, G.; Lahcene, Z.; Akhtar, Z.; Dasgupta, D. Weighted quasi-arithmetic mean based score level fusion for multi-biometric systems. IET Biom. 2020, 9, 91–99.

52. Bianco, S.; Cadene, R.; Celona, L.; Napoletano, P. Benchmark analysis of representative deep neural network architectures. IEEE Access 2018, 6, 64270–64277.

53. Afzaal, H.; Farooque, A.A.; Schumann, A.W.; Hussain, N.; McKenzie-Gopsill, A.; Esau, T.; Abbas, F.; Acharya, B. Detection of a potato disease (early blight) using artificial intelligence. Remote Sens. 2021, 13, 411.

54. Yakkati, R.R.; Yeduri, S.R.; Cenkeramaddi, L.R. Hand Gesture Classification Using Grayscale Thermal Images and Convolutional Neural Network. In Proceedings of the 2021 IEEE International Symposium on Smart Electronic Systems (iSES) (Formerly iNiS), Jaipur, India, 18–22 December 2021; pp. 111–116.

| Dataset | Train Samples | Test Samples |
|---|---|---|
| FF++ (Intra-Dataset) | 92,160 | 17,920 |
| DFDC (Cross-Dataset) | - | 793,216 |

| Model/Fusion Method | AUC | Cohen’s d | EER | FPR@FNR1% | FNR@FPR1% |
|---|---|---|---|---|---|
| EfficientNetAutoAttB4ST | 0.943 | 2.742 | 0.111 | 0.624 | 0.750 |
| EfficientNetAutoAttB4 | 0.959 | 2.958 | 0.101 | 0.628 | 0.388 |
| EfficientNetB4ST | 0.959 | 3.037 | 0.089 | 0.564 | 0.589 |
| EfficientNetB4 | 0.958 | 2.829 | 0.099 | 0.688 | 0.343 |
| Xception | 0.954 | 3.423 | 0.095 | 1.000 | 1.000 |
| ResNet | 0.428 | 0.230 | 0.553 | 1.000 | 1.000 |
| Avg | 0.977 | 3.555 | 0.072 | 0.418 | 0.222 |
| Bayes | 0.958 | 3.210 | 0.079 | 1.000 | 0.212 |
| Prod | 0.955 | 1.413 | 0.079 | 1.000 | 0.318 |
| Max | 0.762 | 0.943 | 0.257 | 0.770 | 1.000 |
| Min | 0.945 | 1.600 | 0.118 | 1.000 | 0.381 |
| Accuracy-based Avg | 0.979 | 3.670 | 0.071 | 0.430 | 0.190 |
| Correlation-based Avg | 0.978 | 3.691 | 0.071 | 0.455 | 0.185 |
| MI-based Avg | 0.978 | 3.65 | 0.072 | 0.456 | 0.196 |
| Complement NB | 0.963 | 3.351 | 0.083 | 0.591 | 0.558 |
| MLP | 0.984 | 4.310 | 0.053 | 0.401 | 0.131 |
| Perceptron | 0.979 | 3.793 | 0.071 | 0.433 | 0.206 |
| SVM | 0.957 | 3.864 | 0.058 | 0.794 | 0.949 |
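The Cohen’s d column summarizes how far apart the real and fake score distributions are, in units of their pooled standard deviation [48]. A minimal sketch follows, assuming the common pooled-variance formulation with sample (n − 1) variances and a fake-minus-real sign convention; the exact variant used to compute the tables is not stated in this section, and `cohens_d` is a hypothetical helper name.

```python
import math
import statistics
from typing import Sequence


def cohens_d(real: Sequence[float], fake: Sequence[float]) -> float:
    """Cohen's d between fake and real score distributions (pooled SD).

    Positive values mean fake samples score higher than real ones on average.
    """
    n1, n2 = len(real), len(fake)
    if n1 < 2 or n2 < 2:
        raise ValueError("each group needs at least two scores")
    var_real = statistics.variance(real)  # sample variance, n - 1 denominator
    var_fake = statistics.variance(fake)
    pooled_sd = math.sqrt(((n1 - 1) * var_real + (n2 - 1) * var_fake) / (n1 + n2 - 2))
    return (statistics.mean(fake) - statistics.mean(real)) / pooled_sd


# Toy scores: means 0.2 and 0.8, pooled SD 0.1, so d is about 6.
print(round(cohens_d([0.1, 0.2, 0.3], [0.7, 0.8, 0.9]), 2))  # 6.0
```

Because d grows both when the two means move apart and when each distribution becomes narrower, it complements the AUC and the threshold-based error rates in the tables.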

| Model/Fusion Method | AUC | Cohen’s d | EER | FPR@FNR1% | FNR@FPR1% |
|---|---|---|---|---|---|
| EfficientNetAutoAttB4ST | 0.688 | 0.614 | 0.365 | 0.968 | 0.918 |
| EfficientNetAutoAttB4 | 0.692 | 0.625 | 0.367 | 0.963 | 0.889 |
| EfficientNetB4ST | 0.697 | 0.635 | 0.357 | 0.965 | 0.907 |
| EfficientNetB4 | 0.684 | 0.571 | 0.371 | 0.969 | 0.898 |
| Xception | 0.632 | 0.428 | 0.384 | 1.000 | 0.936 |
| ResNet | 0.398 | 0.303 | 0.579 | 1.000 | 1.000 |
| Avg | 0.690 | 0.636 | 0.357 | 0.983 | 0.908 |
| Bayes | 0.626 | 0.421 | 0.389 | 1.000 | 0.931 |
| Prod | 0.629 | 0.289 | 0.389 | 1.000 | 0.931 |
| Max | 0.448 | 0.011 | 0.554 | 0.984 | 1.000 |
| Min | 0.620 | 0.334 | 0.389 | 1.000 | 0.941 |
| Accuracy-based Avg | 0.697 | 0.660 | 0.354 | 0.982 | 0.899 |
| Correlation-based Avg | 0.711 | 0.685 | 0.350 | 0.952 | 0.890 |
| MI-based Avg | 0.710 | 0.435 | 0.351 | 0.955 | 0.886 |
| Complement NB | 0.711 | 0.709 | 0.354 | 0.949 | 0.876 |
| MLP | 0.693 | 0.611 | 0.358 | 0.979 | 0.893 |
| Perceptron | 0.698 | 0.632 | 0.353 | 0.982 | 0.901 |
| SVM | 0.637 | 0.578 | 0.376 | 0.993 | 0.979 |

| Fusion Method | Fusion Time (s), 2 Models | Fusion Time (s), 3 Models | Fusion Time (s), 4 Models | Fusion Time (s), 5 Models | Fusion Time (s), 6 Models |
|---|---|---|---|---|---|
| Avg | 7.872 | 7.891 | 7.825 | 7.853 | 8.132 |
| Bayes | 13.149 | 13.231 | 13.617 | 13.960 | 14.649 |
| Prod | 4.701 | 4.701 | 4.711 | 4.691 | 4.719 |
| Max | 4.280 | 4.290 | 4.306 | 4.576 | 4.315 |
| Min | 4.344 | 4.333 | 4.319 | 4.341 | 4.352 |
| Accuracy-based Avg | 1.823 | 2.259 | 2.672 | 3.117 | 3.622 |
| Correlation-based Avg | 1.693 | 2.056 | 2.434 | 2.810 | 3.270 |
| MI-based Avg | 1.828 | 2.255 | 2.672 | 3.103 | 3.646 |
| Complement NB | 0.528 | 0.524 | 0.525 | 0.517 | 0.534 |
| MLP | 1.180 | 1.206 | 1.221 | 1.251 | 1.292 |
| Perceptron | 0.613 | 0.625 | 0.640 | 0.658 | 0.646 |
| SVM | 204.975 | 190.043 | 172.928 | 175.817 | 127.683 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Concas, S.; La Cava, S.M.; Orrù, G.; Cuccu, C.; Gao, J.; Feng, X.; Marcialis, G.L.; Roli, F. Analysis of Score-Level Fusion Rules for Deepfake Detection. Appl. Sci. 2022, 12, 7365. https://doi.org/10.3390/app12157365
