Abstract

Aspect-based sentiment analysis (ABSA) is a fine-grained sentiment analysis task that categorizes data by aspect and identifies the sentiment attributed to each aspect. To perform fine-grained sentiment analysis accurately, three types of information are required: a sentiment word within the text, the target it modifies, and the holder who expresses the sentiment. These should be extracted in sequence, because the sentiment word is an important clue for extracting the target, which in turn is key evidence for identifying the holder. Therefore, in this paper, we propose a stepwise multi-task learning model for holder extraction with RoBERTa and Bi-LSTM. The tasks are sentiment word extraction, target extraction, and holder extraction. The proposed model was trained and evaluated on the Laptop and Restaurant datasets of SemEval 2014 through 2016. We observed that the performance of the proposed model improved when stepwised features, which are the outputs of the previous task, were used. Furthermore, a generalization effect was observed when the final output format of the model was a BIO tagging scheme: by outputting BIO tags instead of words, the model avoids overfitting to a specific domain of the review text.

1. Introduction

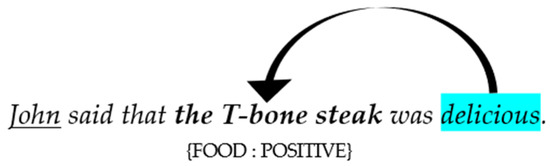

For coarse sentiment analysis at the sentence or document level, the entire content of the sentence or document is classified into one sentiment polarity, and in most methods sentiment expressions play an important role in finding the correct polarity. In contrast, aspect-based sentiment analysis (ABSA), a form of fine-grained sentiment analysis (SA), categorizes data by aspect and identifies the sentiment attributed to each aspect [1]. Figure 1 shows an example of ABSA. The sentence “John said that the T-bone steak was delicious” contains one aspect, FOOD, whose polarity is positive. As shown in Figure 1, classifying the sentiment polarity of an aspect requires three types of information: the sentiment word (“delicious”), the target (“T-bone steak”), and the holder (“John”) of the aspect (“FOOD”). The sentiment word is an expression in the text that conveys sentiment about the aspect. The target is the object within the text that the sentiment word modifies. The holder is the person who expresses sentiment about the aspect by using the sentiment word for the target.

Figure 1.

An example of ABSA on a restaurant review.

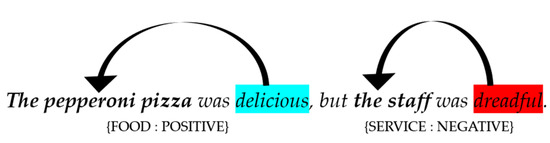

In particular, when multiple aspects are present in one sentence or document, the sentiment polarity of every aspect must be identified (see Figure 2). The sentence “The pepperoni pizza was delicious, but the staff was dreadful” contains two aspects: the FOOD aspect (colored in blue) is positive, while the SERVICE aspect (colored in red) is negative. Such cases make fine-grained sentiment analysis significantly more challenging.

Figure 2.

Another example of ABSA on a restaurant review.
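To make the three types of information concrete, the annotations of Figures 1 and 2 can be written as a small record type. This is a minimal sketch: the class name `AspectOpinion`, its field names, and the use of `None` for a holder that is not explicitly mentioned in the sentence (as we assume for Figure 2) are illustrative assumptions, not the datasets' actual schema.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class AspectOpinion:
    """One aspect-level opinion (hypothetical record type for illustration)."""
    aspect: str              # aspect category, e.g. "FOOD"
    polarity: str            # "positive", "negative", or "neutral"
    sentiment_word: str      # expression that carries the sentiment
    target: str              # object the sentiment word modifies
    holder: Optional[str]    # who expresses it; None if not mentioned (assumption)


# Figure 1: one aspect with an explicit holder.
figure1 = [AspectOpinion("FOOD", "positive", "delicious", "T-bone steak", "John")]

# Figure 2: two aspects with opposite polarities in a single sentence.
figure2 = [
    AspectOpinion("FOOD", "positive", "delicious", "pepperoni pizza", None),
    AspectOpinion("SERVICE", "negative", "dreadful", "staff", None),
]


def polarity_by_aspect(opinions: List[AspectOpinion]) -> Dict[str, str]:
    """Collapse fine-grained annotations to the aspect -> polarity view."""
    return {o.aspect: o.polarity for o in opinions}


print(polarity_by_aspect(figure2))  # {'FOOD': 'positive', 'SERVICE': 'negative'}
```

Collapsing to `polarity_by_aspect` discards exactly the sentiment word, target, and holder that the rest of this paper sets out to extract.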

Generally, ABSA tasks can be divided into aspect-entity extraction and aspect-level sentiment classification, and aspect-level sentiment classification requires aspect-entity extraction. Aspect-entity extraction has five sub-tasks: sentiment word, target, holder, aspect, and entity extraction. These are characteristic of fine-grained SA and provide the information required to analyze sentiment toward aspects accurately. In this paper, we tackle sentiment word and target extraction as well as holder extraction, but focus on holder extraction for the following three reasons. First, the aspects and entities are fixed in the Laptop and Restaurant datasets released in SemEval-2014 through 2016 [1,2,3], which are the most frequently used datasets for ABSA. Second, according to Moore [4], the sentiment word, the target, and the holder should be extracted together because they are linked to one aspect. They should be extracted in sequence, because the sentiment word is an important clue for extracting the target, which in turn is key evidence for the holder. Therefore, the holder must be extracted to obtain all of the information in ABSA. Third, aspect-level sentiment classification cannot be performed on non-annotated data, that is, on data other than the existing Laptop and Restaurant datasets. This task outputs only three classes (positive, negative, and neutral) using the sentiment word, target, and holder information provided in the data, so it can be regarded as coarse SA performed with three given pieces of information, which we call stepwised features in this paper. We thus design and evaluate a system that outputs the sentiment word, target, and holder, taking the raw text of the Laptop and Restaurant datasets as input.

For ABSA, multiple aspects can be present in one piece of data. Sentiment expressions in a text therefore affect the polarity classification of aspects, but they can also be an important clue for identifying the target they modify. Hu and Bing [5] and Popescu and Oren [6] extracted sentiment words and frequently co-occurring nouns by checking, with a sentiment lexicon, whether sentiment words are present in the text. Qui et al. [7] identified sentiment words and the target words they modify using dependency parsing, while Liu et al. [8] extracted targets using the relationship between nouns and adjectives and a translation model. Accordingly, sentiment word identification and related target extraction provide important clues for holder extraction, which is the next step. To achieve high accuracy in ABSA, correctly extracting the sentiment expression, the related target, and the holder is critical in fine-grained SA [9]. Johansson and Alessandro [10] performed holder extraction by combining binary classifiers for sentiment words and holders using a conditional random field (CRF). Yang and Claire [11] further improved performance by employing a logistic classifier that added target extraction information as a feature to the approach of Johansson and Alessandro [10]. Zhang et al. [12] performed target extraction and holder extraction simultaneously through multi-task labeling with Bi-LSTM-CRF. The advantage of this study is that the Bi-LSTM trained the model with enriched contextual information. Its disadvantages are that it used fixed GloVe embeddings [13], which were not optimized during training, that its performance was not very high, and that it did not use sentiment word information or target information, which contain key clues for target extraction and holder extraction, respectively. Consequently, although Zhang et al. [12] used Bi-LSTM, they obtained a lower F1 score than Yang and Claire [11].

To overcome these disadvantages, we use RoBERTa [14] as the language model to provide richer contextual information. In particular, to improve the accuracy of holder extraction, the three tasks of sentiment word, target, and holder extraction proceed sequentially. We adopt a hard parameter sharing model [15], a type of multi-task learning, but unlike traditional models, the input of each task-specific layer consists of the output of the previous task-specific layer and the output of the shared layer. Hence, the task-specific layer for target extraction takes sentiment word information as input, while the task-specific layer for holder extraction takes sentiment word and target information as input during training. We call this model a stepwise multi-task learning model.

The remainder of this paper is organized as follows. Section 2 introduces ABSA, holder extraction, and the configuration of multi-task learning models. Section 3 describes the proposed stepwise multi-task learning model in detail, and Section 4 evaluates and analyzes the performance of each task through experiments. In Section 5, we draw conclusions from the experiments and discuss future work.

2. Related Works

2.1. ABSA

Document- or sentence-level SA generally assumes that one topic is discussed within the scope of analysis [16]. However, a document or sentence may also contain sentiments about topics other than its main topic. To overcome this issue, ABSA finds sentiment expressions within the text and classifies the polarities of the related aspects [4]. ABSA is largely categorized into document-level ABSA and sentence-level ABSA depending on the scope of the analyzed text. The major difference between the two is the number of aspects in each unit of analysis: a document mostly covers a fixed set of aspects, whereas a sentence generally contains three or fewer aspects.

2.1.1. Document-Level ABSA

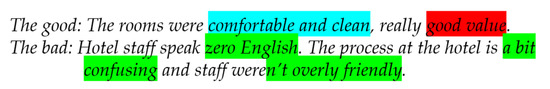

Figure 3 shows an example of a hotel review. If a review is considered as one document, document-level ABSA analyzes the latent aspects it contains.

Figure 3.

An example for document-level ABSA containing aspects: SERVICE (colored in green), CLEANESS (colored in blue), and PRICE (colored in red).

Yin et al. [17] addressed the problem of latent aspects with an attention mechanism between sentences and words that selects keywords highly associated with the latent aspects. Furthermore, Li et al. [18] discovered that the sentiment of a document is correlated with the sentiment of each aspect, and accordingly added document-level sentiment information to improve performance when the attention mechanism is applied.

2.1.2. Sentence-Level ABSA

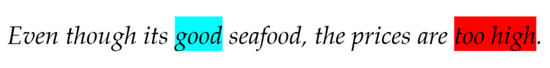

Figure 4 shows an example of a one-sentence restaurant review. Sentence-level ABSA tends to involve fewer aspects than document-level ABSA.

Figure 4.

An example for sentence-level ABSA containing aspects: FOOD (colored in blue) and PRICE (colored in red).

Sun et al. [19] constructed auxiliary sentences for the sentences in a dataset according to set rules, converting the ABSA task into a sentence-pair classification task. Jiang et al. [20] applied average pooling to the embedding vectors of all selected aspects and performed the task using training data concatenated with the context.

2.2. Holder Extraction

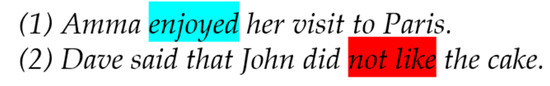

A holder extraction task involves extracting a direct or indirect holder or source of the sentiment represented in the text [21]. Figure 5 shows examples of when sentiment holders are direct and indirect.

Figure 5.

Examples of direct and indirect sentiment holders.

In Sentence (1) of Figure 5, the sentiment word “enjoyed” has the direct holder “Amma”, while in Sentence (2), the holder “John” of the sentiment expression “not like” is mentioned indirectly by “Dave”.

The holder extraction task is also referred to as opinion source identification [21], source extraction [9], opinion holder extraction [10], or opinion entity identification [11]. Mariasovic and Anette [22] approached holder extraction as a sequence labeling problem, defining a holder by the three criteria below. The third criterion distinguishes this task from named entity recognition (NER).

- (i) A holder is generally a noun phrase.
- (ii) A holder must contain semantic sentiment or be an entity capable of expression.
- (iii) A holder must be related to sentiment.
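Treating holder extraction as sequence labeling means encoding each annotated span as per-token tags. The sketch below uses the BIO scheme (also used by the model in Section 3.3) on the Figure 1 sentence. Whitespace tokenization, the helper name `spans_to_bio`, and the assumption that each information type gets its own B/I/O tag sequence are ours, for illustration only.

```python
from typing import List, Tuple


def spans_to_bio(num_tokens: int, spans: List[Tuple[int, int]]) -> List[str]:
    """Encode token spans (start inclusive, end exclusive) of ONE information
    type as per-token B/I/O tags; invalid or overlapping spans are rejected."""
    tags = ["O"] * num_tokens
    for start, end in spans:
        if not 0 <= start < end <= num_tokens:
            raise ValueError(f"invalid span {(start, end)}")
        if any(tags[i] != "O" for i in range(start, end)):
            raise ValueError(f"overlapping span {(start, end)}")
        tags[start] = "B"                  # first token of the span
        for i in range(start + 1, end):
            tags[i] = "I"                  # remaining tokens of the span
    return tags


tokens = "John said that the T-bone steak was delicious".split()
gold = {  # hypothetical token spans for the Figure 1 example
    "sentiment": [(7, 8)],   # "delicious"
    "target": [(4, 6)],      # "T-bone steak"
    "holder": [(0, 1)],      # "John"
}
for task, spans in gold.items():
    print(f"{task:9s}", spans_to_bio(len(tokens), spans))
```

Because the holder tags depend only on positions and not on the words themselves, the same tag inventory serves every domain.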

Choi et al. [9] and Yang and Claire [11] performed sentiment word, target, and holder extraction together using a CRF tagger and binary classifiers. Johansson and Alessandro [10] improved accuracy by modeling the relationship between sentiment words and holders within a text, based on the insight that one holder may have multiple sentiment words. However, these approaches require extensive feature engineering.

Accordingly, the feature engineering procedure was simplified by applying LSTM [23], a neural network model. However, this approach performed worse than traditional machine learning approaches because of insufficient training data. Zhang et al. [12] used a multi-task learning model for the target extraction and holder extraction tasks. To resolve the data insufficiency issue, the shared layer was trained with a semantic role labeling model, and the output of the semantic role labeling layer was used as input for the task-specific layers, which are the last layers of the multi-task learning model. This simplified the process by reducing the amount of input data required.

Using a multi-task learning model to perform target and holder extraction simultaneously on one text increased training efficiency and resolved the lack of data, but this method has three limitations. First, the entire model depends on errors generated in the semantic role labeling layer. Second, sentiment word information, which can be a clue for target and holder extraction, was not used. Third, the contextual information of the text was converted into semantic role information, causing information loss.

Therefore, in this paper, sentiment word and target extraction are executed together with holder extraction, so that sentiment word and target information serve as clues for holder extraction. Furthermore, to avoid information loss, the extraction task is not replaced with another task.

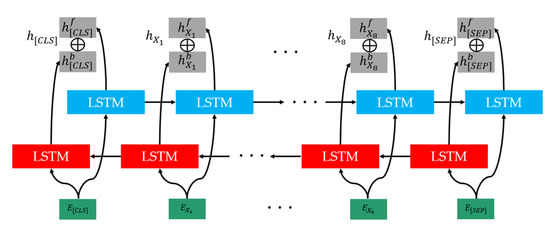

2.3. Bi-LSTM

A recurrent neural network (RNN), a type of neural network specialized for processing sequential data, has difficulty learning long-range temporal dependencies; this is called the “long-term dependency problem”. Long short-term memory (LSTM) [23] addresses this problem by adding gates, such as the “forget gate”, to an RNN.

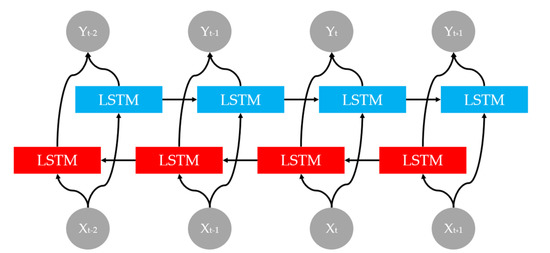

However, at each time step LSTM uses only past context and cannot reflect future context. To solve this problem, Schuster and Paliwal [24] proposed bidirectional recurrent networks, which combine a forward and a backward recurrent layer; Bi-LSTM applies this idea by combining a forward LSTM and a backward LSTM. Figure 6 shows the architecture of Bi-LSTM.

Figure 6.

The architecture of Bi-LSTM.

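At every time step, the backward LSTM reads the data in the reverse order of the forward LSTM and outputs hidden vectors. Given an input sequence $X = (x_1, x_2, \ldots, x_T)$, the forward LSTM computes $\overrightarrow{h}_t = \overrightarrow{\mathrm{LSTM}}(x_t, \overrightarrow{h}_{t-1})$ and the backward LSTM computes $\overleftarrow{h}_t = \overleftarrow{\mathrm{LSTM}}(x_t, \overleftarrow{h}_{t+1})$; $\overrightarrow{h}_t$ and $\overleftarrow{h}_t$ are concatenated into $h_t = [\overrightarrow{h}_t ; \overleftarrow{h}_t]$, giving the output sequence $H = (h_1, h_2, \ldots, h_T)$. Because the output contains both forward and backward information, Bi-LSTM can reflect rich contextual information of a text.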
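The forward/backward concatenation described above can be sketched in plain Python. This is an illustrative, untrained implementation: the class `LSTMCell`, the function `bilstm`, the random weight initialization, and the toy dimensions are assumptions chosen for readability, not the configuration used in this paper; a real model would use a deep learning framework.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


class LSTMCell:
    """Minimal, untrained LSTM cell on Python lists (illustration only)."""

    GATES = ("i", "f", "o", "g")  # input, forget, output gates and candidate

    def __init__(self, input_dim: int, hidden_dim: int, seed: int = 0):
        rng = random.Random(seed)
        self.hidden_dim = hidden_dim
        cols = input_dim + hidden_dim  # each gate acts on [x_t ; h_{t-1}]
        self.W = {k: [[rng.uniform(-0.5, 0.5) for _ in range(cols)]
                      for _ in range(hidden_dim)] for k in self.GATES}
        self.b = {k: [0.0] * hidden_dim for k in self.GATES}

    def step(self, x: Vector, h: Vector, c: Vector) -> Tuple[Vector, Vector]:
        z = x + h  # list concatenation = [x_t ; h_{t-1}]
        pre = {k: [sum(w * v for w, v in zip(row, z)) + bias
                   for row, bias in zip(self.W[k], self.b[k])] for k in self.GATES}
        i = [sigmoid(v) for v in pre["i"]]
        f = [sigmoid(v) for v in pre["f"]]  # forget gate: how much of c to keep
        o = [sigmoid(v) for v in pre["o"]]
        g = [math.tanh(v) for v in pre["g"]]
        c_new = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
        h_new = [oj * math.tanh(cj) for oj, cj in zip(o, c_new)]
        return h_new, c_new

    def run(self, xs: List[Vector]) -> List[Vector]:
        h, c = [0.0] * self.hidden_dim, [0.0] * self.hidden_dim
        outputs = []
        for x in xs:
            h, c = self.step(x, h, c)
            outputs.append(h)
        return outputs


def bilstm(xs: List[Vector], fwd: LSTMCell, bwd: LSTMCell) -> List[Vector]:
    """h_t = [forward h_t ; backward h_t] for every time step t."""
    h_fwd = fwd.run(xs)
    h_bwd = bwd.run(xs[::-1])[::-1]  # read right-to-left, then re-align to t
    return [a + b for a, b in zip(h_fwd, h_bwd)]


xs = [[0.1, -0.2], [0.3, 0.0], [-0.4, 0.5]]      # T = 3 toy input vectors
fwd, bwd = LSTMCell(2, 4, seed=1), LSTMCell(2, 4, seed=2)
H = bilstm(xs, fwd, bwd)
print(len(H), len(H[0]))  # 3 8
```

Changing only the last input leaves the forward half of $h_1$ untouched but changes its backward half, which is exactly the extra (future) context Bi-LSTM provides.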

To extract three types of information (sentiment word, target, and holder) from the same text, the data must reflect as much contextual information as possible. Accordingly, in this paper, the embedded text is fed into a Bi-LSTM so that the generated hidden vectors reflect as much of the text's context as possible.

2.4. RoBERTa

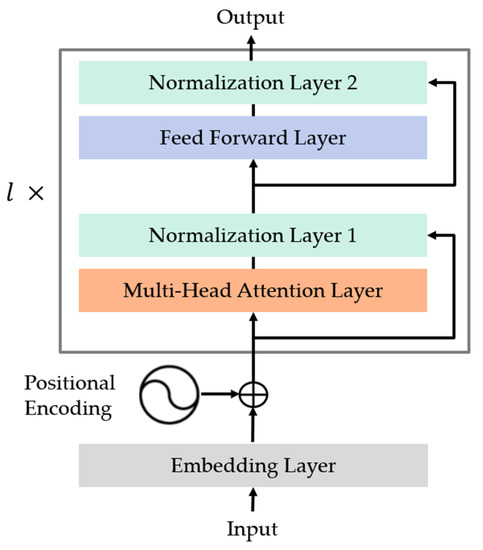

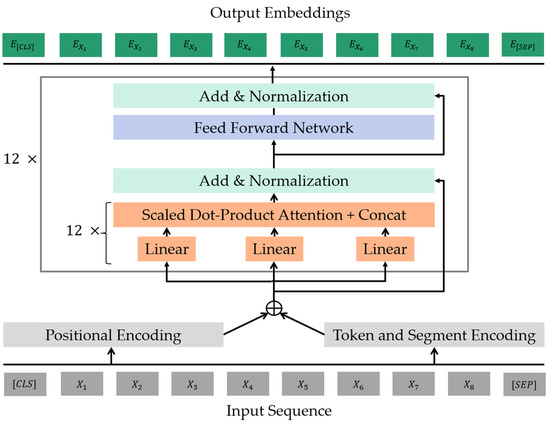

RoBERTa [14] is an improved version of BERT [26], which is based on the transformer [25]. Liu et al. [14] regarded the released BERT model as undertrained; keeping the same architecture, they significantly increased the amount of training data, the number of training epochs, and the batch size. In addition, next sentence prediction (NSP), one of the pre-training objectives of BERT, was removed, and masking patterns were generated dynamically to improve the model's performance. Figure 7 shows the architecture of RoBERTa.
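Dynamic masking means that a new masking pattern is sampled every time a sequence is fed to the model, instead of being fixed once during preprocessing. The sketch below assumes BERT's masking recipe (15% of tokens selected; of these, 80% replaced by the mask token, 10% by a random token, 10% left unchanged). The function name `dynamic_mask`, the toy token ids, and the `-100` ignore label are illustrative assumptions rather than RoBERTa's actual code.

```python
import random
from typing import List, Tuple

IGNORE = -100  # label for positions the model is not asked to predict


def dynamic_mask(token_ids: List[int], mask_id: int, vocab_size: int,
                 rng: random.Random,
                 mask_prob: float = 0.15) -> Tuple[List[int], List[int]]:
    """Sample a fresh BERT-style masking pattern on every call."""
    n = len(token_ids)
    k = max(1, round(mask_prob * n))          # number of positions to predict
    chosen = rng.sample(range(n), k)
    inputs = list(token_ids)
    labels = [IGNORE] * n
    for pos in chosen:
        labels[pos] = token_ids[pos]           # model must recover the original
        r = rng.random()
        if r < 0.8:
            inputs[pos] = mask_id              # 80%: mask token
        elif r < 0.9:
            inputs[pos] = rng.randrange(vocab_size)  # 10%: random token
        # remaining 10%: keep the original token
    return inputs, labels


sentence = list(range(10, 110))   # 100 toy token ids
rng = random.Random(0)
epoch1 = dynamic_mask(sentence, mask_id=0, vocab_size=1000, rng=rng)
epoch2 = dynamic_mask(sentence, mask_id=0, vocab_size=1000, rng=rng)
```

Calling the function again for each epoch, as above, gives the model different prediction targets for the same sentence, whereas static masking would reuse one pattern.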

Figure 7.

The architecture of RoBERTa.

Like BERT, RoBERTa has the encoder architecture of a transformer. In Figure 7, $N$ is the number of transformer layers. The output $h_l$ of the $l$-th transformer layer is calculated with Equation (1) from the output $h_{l-1}$ of the previous layer, and $h_0$ is the sum of the embedding layer output and the positional encoding. $\mathrm{LN}_1$ and $\mathrm{LN}_2$ represent the first and second normalization layers, respectively, and $\mathrm{MHA}$ and $\mathrm{FFN}$ represent the multi-head attention layer and the feed-forward layer, respectively.

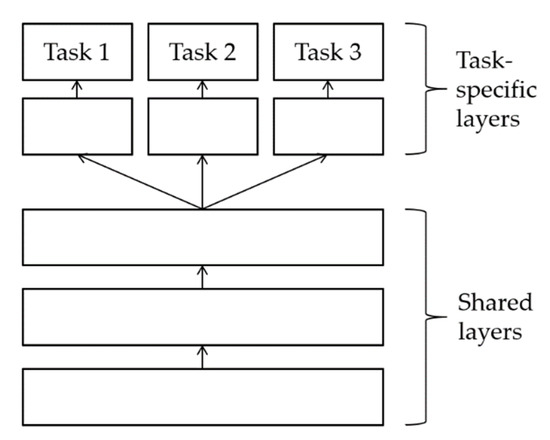
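The residual wiring of one encoder layer can be sketched as follows. We assume Equation (1) has the post-layer-normalization form used by BERT and RoBERTa, $h'_l = \mathrm{LN}_1(h_{l-1} + \mathrm{MHA}(h_{l-1}))$ and $h_l = \mathrm{LN}_2(h'_l + \mathrm{FFN}(h'_l))$. The attention and feed-forward functions below are deliberately simplified, weight-free stand-ins (`toy_self_attention`, `toy_ffn` are hypothetical names), so only the layer wiring, not the learned computation, is faithful.

```python
import math
from typing import Callable, List

Vector = List[float]
Seq = List[Vector]  # one vector per token


def layer_norm(x: Vector, eps: float = 1e-5) -> Vector:
    """Normalize to zero mean / unit variance (learned gain and bias omitted)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def add(a: Seq, b: Seq) -> Seq:
    """Token-wise residual addition."""
    return [[u + v for u, v in zip(x, y)] for x, y in zip(a, b)]


def encoder_layer(h: Seq, mha: Callable[[Seq], Seq],
                  ffn: Callable[[Vector], Vector]) -> Seq:
    """Post-LN block: h' = LN1(h + MHA(h)); out = LN2(h' + FFN(h'))."""
    h_mid = [layer_norm(v) for v in add(h, mha(h))]
    return [layer_norm(v) for v in add(h_mid, [ffn(v) for v in h_mid])]


def toy_self_attention(h: Seq) -> Seq:
    """Single-head scaled dot-product self-attention with identity
    projections: a simplified stand-in for multi-head attention."""
    d = len(h[0])
    out = []
    for q in h:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in h]
        m = max(scores)
        w = [math.exp(s - m) for s in scores]   # numerically stable softmax
        z = sum(w)
        out.append([sum(wj * v[i] for wj, v in zip(w, h)) / z for i in range(d)])
    return out


def toy_ffn(x: Vector) -> Vector:
    """Stand-in for the position-wise feed-forward network (no learned weights)."""
    return [max(0.0, 2.0 * v) for v in x]


def encoder(h0: Seq, num_layers: int) -> Seq:
    """Stack num_layers blocks; h0 plays the role of embeddings + positions."""
    h = h0
    for _ in range(num_layers):
        h = encoder_layer(h, toy_self_attention, toy_ffn)
    return h


out = encoder([[1.0, 2.0, 3.0, 4.0], [0.5, -1.0, 2.0, 0.0]], num_layers=2)
```

RoBERTa-base stacks 12 such layers, each with learned projections and 12 attention heads; the sketch keeps only the order of residual addition and normalization.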

2.5. Multi-Task Learning

Multi-task learning is a training approach in which one neural network executes multiple tasks simultaneously; it is effective when various problems share low-level features [27]. Multi-task learning can be categorized into the hard parameter sharing method [15] and the soft parameter sharing method [28]. In the hard parameter sharing method, the hidden layers of a neural network model are shared while the output layer is optimized for each task, as shown in Figure 8. This is the most basic multi-task architecture; because the shared layers must produce representations suitable for the output of every task, the risk of overfitting to a specific task is reduced [29]. The soft parameter sharing method uses a separate neural network for each task and performs multi-task learning by regularizing the difference between the parameters of the models.

Figure 8.

A structure of multi-task learning model using hard parameter sharing method.

Multi-task learning can generalize over the data by minimizing or ignoring noise while training multiple tasks, so a generalized latent representation can be learned for the tasks executed simultaneously [27].

In this paper, we use hard parameter sharing multi-task learning to execute the sentiment word, target, and holder extraction tasks sequentially, because each task provides clues for the next. Each task-specific layer takes the output of the shared layer and the output of the previous step's task-specific layer as input and learns task-specific features to extract its results.

3. Stepwise Multi-Task Learning Model for Holder Extraction

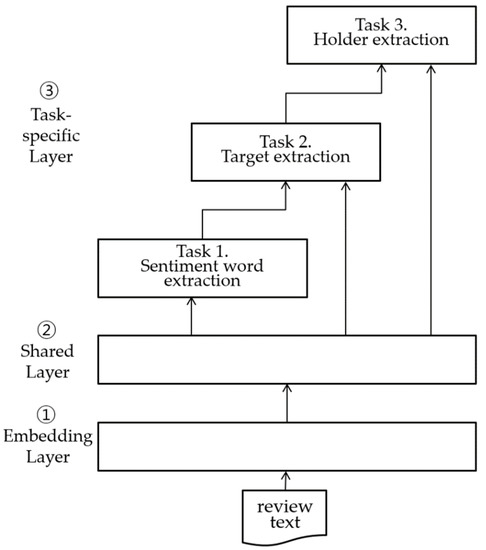

We propose a stepwise multi-task learning model which sequentially performs sentiment word, target, and holder extraction. In this section, we explain the architecture of the proposed model and the stepwise multi-task learning procedure in turn. Figure 9 shows the overview of the proposed model. It follows the basic format of the hard parameter sharing model mentioned in Section 2.5, but the task-specific outputs of sentiment word extraction and target extraction are sequentially connected to the input of the next task-specific layer.

Figure 9.

The overall structure of the proposed model.

The proposed stepwise multi-task learning model consists of three layers. The first is the embedding layer, in which review text is passed through RoBERTa and converted into embedding vectors. The second is the shared layer, which consists of one Bi-LSTM and takes the output of RoBERTa as input. The third consists of three task-specific layers for sentiment word extraction, target extraction, and holder extraction, respectively. Each task-specific layer consists of one LSTM and one softmax layer, which performs the actual extraction.

3.1. Embedding Layer

The embedding layer converts the text used to train and evaluate the stepwise multi-task learning model into embedding vectors. Figure 10 shows the conversion procedure of the RoBERTa-base embedding model. We select RoBERTa-base to generate the embedding vectors because, as explained in Section 2.4, it outperforms BERT. As described in Section 2.4, the RoBERTa-base model takes the $i$-th review text as input, generates its token, segment, and positional encodings, and passes them through 12 transformer layers. The output is the embedding matrix $E_i$ of the $i$-th review text, which is delivered to the shared layer.

Figure 10.

The framework of the embedding layer.

3.2. Shared Layer

The shared layer trains one Bi-LSTM that takes the embedding matrix $E_i$ generated by the embedding layer as input, thereby performing automatic feature extraction. Figure 11 shows the feature extraction procedure performed by the shared layer.

Figure 11.

The framework of the shared layer.

One Bi-LSTM, consisting of a forward LSTM and a backward LSTM, is used to capture linguistic features of the review text for the sentiment word, target, and holder extraction tasks. As mentioned in Section 2.3, LSTM is a type of RNN that generally performs well in generating features from sequential data. An RNN works well when the input is relatively short, but longer inputs lead to the long-term dependency problem, in which early inputs are not properly delivered to the last recurrent step. To solve this problem, LSTM adds a forget gate to the architecture of a traditional RNN. Because LSTM cannot reflect future information at each step, we use Bi-LSTM, which adds a backward LSTM. In a review text, which target a sentiment expression modifies and which holder expresses it depend on the context; Bi-LSTM is used so that such relations are adequately reflected in the features.

After the $i$-th sequence passes through the Bi-LSTM, it becomes the hidden vector matrix $H_i = [\overrightarrow{H}_i ; \overleftarrow{H}_i]$, which concatenates the hidden vectors of the forward and backward LSTMs and is the output of the shared layer. The feature representation generated by the shared layer is used in each task-specific layer to perform the extraction tasks accurately.

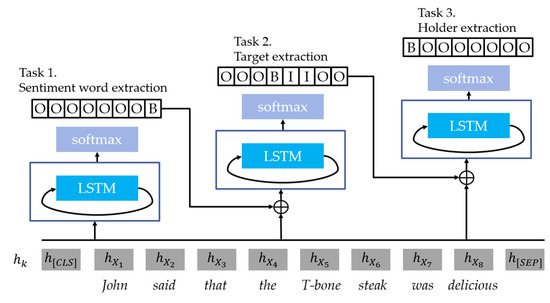

3.3. Task-Specific Layer

The task-specific layers perform the actual extraction tasks based on the context vectors delivered from the shared layer. The sentiment word extraction specific layer, target extraction specific layer, and holder extraction specific layer are arranged sequentially. Except for the sentiment word extraction specific layer, each layer takes the output of the shared layer and the output of the previous specific layer as input at the same time. Figure 12 shows the overall framework of the task-specific layer.

Figure 12.

The framework of task-specific layer.

The task-specific layer uses one LSTM instead of the Bi-LSTM used in the shared layer, because the bidirectional context of the review text has already been reflected in $H_i$ by the shared layer. The hidden vectors output by the shared layer are still sequential data. Therefore, after the hidden vectors pass through one LSTM, a softmax layer performs the BIO tag [30] classification appropriate for each task.
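The last step of each task-specific layer, turning per-token softmax scores into BIO tags and then into extracted spans, can be sketched as follows. The tag order `["B", "I", "O"]`, the logits, and the helper names are hypothetical; the decoding rule that treats an `I` without a preceding `B` as the start of a new span is one common convention, assumed here.

```python
import math
from typing import List, Tuple

TAGS = ["B", "I", "O"]  # assumed per-task tag inventory and order


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    s = sum(exps)
    return [e / s for e in exps]


def decode_tags(token_logits: List[List[float]]) -> List[str]:
    """Pick the most probable BIO tag for every token."""
    tags = []
    for logits in token_logits:
        probs = softmax(logits)
        tags.append(TAGS[max(range(len(TAGS)), key=probs.__getitem__)])
    return tags


def bio_to_spans(tags: List[str]) -> List[Tuple[int, int]]:
    """Return (start, end_exclusive) spans; an I without a preceding B/I
    starts a new span (lenient decoding)."""
    spans, start = [], None
    for i, tag in enumerate(tags):
        if tag == "B" or (tag == "I" and start is None):
            if start is not None:
                spans.append((start, i))
            start = i
        elif tag == "O":
            if start is not None:
                spans.append((start, i))
            start = None
    if start is not None:
        spans.append((start, len(tags)))
    return spans


tokens = "John said that the T-bone steak was delicious".split()
# Hypothetical target-task logits: "T-bone" scores B, "steak" scores I.
logits = [[0.1, 0.2, 2.0]] * 4 + [[3.0, 0.5, 0.1], [0.2, 2.5, 0.3]] + [[0.1, 0.2, 2.0]] * 2
tags = decode_tags(logits)
print(tags)  # ['O', 'O', 'O', 'O', 'B', 'I', 'O', 'O']
print([" ".join(tokens[s:e]) for s, e in bio_to_spans(tags)])  # ['T-bone steak']
```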

Every specific layer other than the sentiment word extraction specific layer additionally takes the output of the previous specific layer as input. For example, the target extraction specific layer takes both the output of the sentiment word extraction specific layer and the output of the shared layer as input, and the holder extraction specific layer takes both the output of the target extraction specific layer and the output of the shared layer as input. Accordingly, stepwise linguistic information is delivered in addition to the contextual information of the sequential data. The BIO-tagged result, rather than the LSTM output that feeds the softmax layer, is delivered to the next layer in order to avoid overfitting and to generalize over the data: because the hidden vectors are not passed on directly, the target extraction and holder extraction specific layers receive generalized BIO-tagged data and can perform extraction without overfitting. For these reasons, each of the three task-specific layers takes stepwised features, using a context vector appropriate for its task, as input, while gaining the advantage of a multi-task learning model in which the parameters of the shared layer are shared.
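The stepwise wiring of the task-specific layers can be sketched independently of any framework. In this sketch the task layers are arbitrary callables standing in for "LSTM + softmax", and we assume, following the explanation above, that each later layer receives the shared features concatenated with a one-hot encoding of the previous layer's BIO tags. The names `stepwise_forward`, `one_hot`, and `toy_layer` are hypothetical.

```python
from typing import Callable, List

Vector = List[float]
TAGS = ["B", "I", "O"]
TaskLayer = Callable[[List[Vector]], List[str]]  # features per token -> BIO tag per token


def one_hot(tag: str) -> Vector:
    return [1.0 if tag == t else 0.0 for t in TAGS]


def stepwise_forward(shared: List[Vector], layers: List[TaskLayer]) -> List[List[str]]:
    """Run task layers in order (sentiment word -> target -> holder).
    Layer 1 sees only the shared features H_i; every later layer sees H_i
    concatenated with the one-hot BIO tags of the previous layer (not its
    hidden vectors)."""
    outputs: List[List[str]] = []
    for layer in layers:
        if outputs:
            feats = [h + one_hot(t) for h, t in zip(shared, outputs[-1])]
        else:
            feats = shared
        outputs.append(layer(feats))
    return outputs


shared = [[0.9, 0.1, 0.0, 0.2], [0.1, 0.8, 0.3, 0.0], [0.0, 0.0, 0.7, 0.6]]  # 3 tokens, dim 4
seen_dims: List[int] = []


def toy_layer(feats: List[Vector]) -> List[str]:
    """Stand-in for LSTM + softmax: records its input width, tags by a threshold."""
    seen_dims.append(len(feats[0]))
    return ["B" if f[0] > 0.5 else "O" for f in feats]


outs = stepwise_forward(shared, [toy_layer, toy_layer, toy_layer])
print(seen_dims)  # [4, 7, 7]
```

The growing input width (4, then 4 + 3) is the only structural difference from ordinary hard parameter sharing, where every task layer would see width 4.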

Each task-specific layer is trained with cross-entropy as its loss function, as shown in Equation (2). In Equation (2), $N$ refers to the number of training reviews, over which the loss of each task is minimized. Following the characteristics of a multi-task learning model, the error propagated to the shared layer is the average of the errors of all tasks.
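A minimal sketch of the training objective, assuming Equation (2) is the token-level cross-entropy summed over the tokens of each review and averaged over the $N$ training reviews, and that the three task losses are averaged before backpropagation as described above. Function names and the toy numbers are illustrative only.

```python
import math
from typing import List


def task_cross_entropy(gold: List[List[int]], probs: List[List[List[float]]]) -> float:
    """Token-level cross-entropy summed over tokens, averaged over N reviews.
    gold[n][t]: index of the gold BIO tag of token t in review n.
    probs[n][t][c]: predicted (softmax) probability of tag c for that token."""
    n_reviews = len(gold)
    total = 0.0
    for gold_seq, prob_seq in zip(gold, probs):
        total -= sum(math.log(p[g]) for g, p in zip(gold_seq, prob_seq))
    return total / n_reviews


def multitask_loss(task_losses: List[float]) -> float:
    """Average of per-task losses; backpropagating it sends the mean of the
    task gradients into the shared layer."""
    return sum(task_losses) / len(task_losses)


# Toy batch: 2 reviews, tags indexed as 0 = B, 1 = I, 2 = O.
gold = [[0, 2], [2]]
probs = [[[0.7, 0.2, 0.1], [0.1, 0.1, 0.8]], [[0.2, 0.3, 0.5]]]
loss_sentiment = task_cross_entropy(gold, probs)
```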

4. Experiments and Evaluation

We describe experimental environments and performance evaluation of the proposed holder extraction method and discuss some findings in this section.

4.1. Environment Setup

We use two domain-specific datasets, Laptop 14 through 16 and Restaurant 14 through 16, released in SemEval 2014 through 2016. The datasets were built for ABSA and consist of review texts with fine-grained, aspect-level human annotations, for example, aspect terms and their polarities, and aspect categories and their polarities. They are widely used for training and evaluation in ABSA, including the holder extraction task [4,7,8,9,10,11,12,17,18,19,20,22]. The datasets contain sentiment word, target, and holder information as answers for each task. Table 1 presents the statistics of each dataset.

Table 1.

Statistics of the datasets.

The amount of training data can affect the performance of deep learning-based models. Therefore, we used as many datasets as possible (six in total) and adopted the BIO tag scheme mentioned in Section 3.3 to mitigate data insufficiency. For consistency in training and testing, only the six datasets in Table 1 were selected; other publicly available datasets [20,31] were excluded because they involve diverse aspects.

Review texts in the datasets were converted into embedding matrices using the RoBERTa-base language model described in Sections 2.4 and 3.1. The data were divided into training and testing sets at a ratio of 7:3.
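A 7:3 split can be made reproducible by shuffling with a fixed seed before cutting. This is a minimal sketch; the function name, the seed value, and integer rounding of the cut point are assumptions, since the paper does not specify how the split was drawn.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_7_3(items: Sequence[T], seed: int = 42) -> Tuple[List[T], List[T]]:
    """Shuffle a copy with a fixed seed, then cut at 70% (integer arithmetic)."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    cut = len(shuffled) * 7 // 10
    return shuffled[:cut], shuffled[cut:]


train, test = split_7_3(list(range(10)))
print(len(train), len(test))  # 7 3
```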

4.2. Performance Evaluation

As explained in Section 3.3, in the target extraction and holder extraction specific layers (but not in the sentiment word extraction specific layer), the output of the previous task-specific layer is used as input, so that each task benefits from the stepwised features inherent in the training data. We verified the effectiveness of this approach by comparing the accuracy of the proposed stepwise multi-task learning model with that of a model whose task-specific layers use only the output of the shared layer as input. Table 2 presents the accuracy with and without the stepwised features for each task. As above, Task 1 is sentiment word extraction, Task 2 is target extraction, and Task 3 is holder extraction.

Table 2.

Performance evaluation in accuracy for stepwised features of each task.

By the structure of the model, Task 1 does not use stepwised features, because no previous task-specific layer exists. Accuracy is higher in Tasks 2 and 3, which use stepwised features: Task 2 improved by 3.37%p and Task 3 by 1.19%p. Thus, the effect of sentiment word information on target extraction is greater than that of target information on holder extraction. We presume that this is attributable to noise or erroneous outputs from the previous tasks.

Although the final task of the proposed stepwise multi-task learning model is holder extraction, the sentiment word extraction and target extraction tasks were also evaluated, since they are trained to deliver feature information to the holder extraction specific layer.

4.3. Detailed Performance Analysis

As shown in Table 1, the datasets used in the experiments consist of review texts from the Laptop and Restaurant domains. Both contain reviews written by purchasers or users, but the domains are completely different. Because the domain difference may affect the results, both micro-accuracy and macro-accuracy are measured to evaluate extraction performance. Tables 3, 4, and 5 present the extraction performance of Tasks 1, 2, and 3, respectively.
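The two averages differ in what they weight. A minimal sketch, assuming micro-accuracy pools all test instances across datasets while macro-accuracy is the unweighted mean of per-dataset accuracies; the counts below are hypothetical and not taken from Tables 3 to 5.

```python
from typing import Dict, Tuple


def micro_macro_accuracy(counts: Dict[str, Tuple[int, int]]) -> Tuple[float, float]:
    """counts[dataset] = (correct, total).
    micro: pooled over all instances (large datasets dominate);
    macro: mean of per-dataset accuracies (every dataset counts equally)."""
    correct = sum(c for c, _ in counts.values())
    total = sum(t for _, t in counts.values())
    micro = correct / total
    macro = sum(c / t for c, t in counts.values()) / len(counts)
    return micro, macro


toy = {"Laptop": (90, 100), "Restaurant": (5, 10)}  # hypothetical counts
micro, macro = micro_macro_accuracy(toy)
print(round(micro, 4), round(macro, 4))  # 0.8636 0.7
```

A large gap between the two values would signal that performance depends on the dataset (and hence the domain); close values suggest it does not.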

Table 3.

Performance for sentiment word extraction (Task 1).

Table 4.

Performance for target extraction (Task 2).

Table 5.

Performance for holder extraction (Task 3).

As mentioned in Section 4.2, the stepwised features, which use the output of the previous task-specific layer, improved performance. Accuracy did not vary significantly across tasks, and all three tasks achieved fairly high accuracy of over 80%.

However, accuracy varies across datasets. The largest gap, 3.59%p, is between Restaurant 15 and Laptop 14 in Task 3, and this difference appears to be related to the difference in their ATT values. A higher ATT value in Table 1 indicates more triplets in the text, which makes extraction more ambiguous and introduces noise into the training of the stepwise multi-task learning model.

Despite the domain difference between the datasets, micro-accuracy and macro-accuracy do not differ remarkably in any task. This implies that the domain difference does not cause the proposed model to overfit, suggesting that the model is robust across domains. We presume that this is attributable to the BIO tag scheme used as the output format of the task-specific layers, which has a generalization effect during training.

4.4. Effectiveness Evaluation through Error Analysis

However, as shown in Tables 3 and 4, the outputs of Tasks 1 and 2 can contain errors because their performance is not perfect. That is, the stepwised features delivered to the target extraction and holder extraction specific layers include some noise, and errors generated at a previous task-specific layer are propagated to the following layers.

Therefore, we re-analyzed the experimental results of Section 4.3 to investigate the effectiveness of the proposed stepwise method. We measured the accuracy improvement from the stepwise method separately for cases in which the delivered features were correct and cases in which they contained errors. Tables 6 and 7 show the confusion matrices for the results of Tasks 2 and 3 when Task 1 is correct and incorrect, respectively.

Table 6.

Confusion matrix of tracking performance in correct case of Task 1.

Table 7.

Confusion matrix of tracking performance in incorrect case of Task 1.

Comparing the ratio of (c) to (f) in Table 6 with the ratio of (c′) to (f′) in Table 7 shows the effect of delivering correct features from Task 1 to Task 2. When correct features were delivered, Task 2 extracted correctly at a ratio of 1.76, whereas when erroneous features were delivered, the ratio was 618/498 = 1.24. These results show the effectiveness of using stepwised features and of delivering correct features.

The ratio of (a) to (b) in Table 6 is 2499/689 = 3.63, showing the value of the clues delivered when Tasks 1 and 2 are both correct; this also indicates that the proposed stepwise method is effective for holder extraction. The ratio of (a′) to (b′) in Table 7 is 467/151 = 3.09, which is slightly lower by 0.54, but the proportion of correct answers is still relatively high. In contrast, the ratio of (d) to (e) is 978/832 = 1.18: when the sentiment word is extracted correctly but the target information contains an error, holder extraction performance drops drastically. It can thus be inferred that holder extraction is affected far more by target information than by sentiment word information; in other words, the result of Task 2 has a greater impact on Task 3 than the result of Task 1. Finally, the ratio of (d′) to (e′), where both Tasks 1 and 2 contain errors, is 152/346 = 0.44, showing a significant performance degradation of the holder extraction specific layer. This indicates that Task 3 depends more on the stepwised features than on the output of the shared layer it also receives as input.
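The ratios in this section are quotients of counts from Tables 6 and 7 (for example, (a)/(b) = 2499/689). As a small arithmetic check, the snippet below recomputes them from those counts; the dictionary keys and comments only restate the conditions described in the text and are otherwise illustrative.

```python
# Counts as (numerator, denominator) for each ratio discussed in Section 4.4.
reported = {
    "(a)/(b)":   (2499, 689),   # Table 6: Tasks 1 and 2 correct
    "(a')/(b')": (467, 151),    # Table 7: Task 1 incorrect
    "(d)/(e)":   (978, 832),    # Table 6: Task 1 correct, Task 2 incorrect
    "(d')/(e')": (152, 346),    # Table 7: Tasks 1 and 2 incorrect
    "(c')/(f')": (618, 498),    # Table 7: Task 2 outcome
}
ratios = {name: round(n / d, 2) for name, (n, d) in reported.items()}
for name, r in ratios.items():
    print(f"{name:10s} {r:.2f}")
print(round(ratios["(a)/(b)"] - ratios["(a')/(b')"], 2))  # 0.54
```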

From these analyses, we observed that the stepwised features are useful for holder extraction and that there is a clear dependency between the tasks. Thus, reducing errors in the preceding tasks should improve the performance of holder extraction.

4.5. Discussion

We did not directly compare the performance of the proposed method with that of other methods, because re-implementing other models is difficult and time-consuming. In this section, we briefly discuss the differences between the proposed method and the most recent studies [12,22] mentioned in Section 2.2; Table 8 summarizes them. The proposed method is evaluated by accuracy, whereas the other studies report F1 scores. The proposed method uses the Laptop and Restaurant 14–16 datasets, whereas the other studies use MPQA 2.0 [32], which contains news articles rather than reviews, manually annotated with a scheme for opinions and other private states (i.e., beliefs, emotions, sentiments, speculations, etc.). Finally, the proposed method covers sentiment word, target, and holder extraction, whereas the other studies do not address sentiment word extraction.

Table 8.

Comparison of the proposed method and other methods.

The core concept of this paper is the stepwised features, and Section 4.2 showed that using them improves performance. Unlike our approach, which extracts the holder directly, Marasović and Frank [22] label all words in the text with semantic roles. To our knowledge, no existing study extracts the sentiment word, target, and holder simultaneously, so we compared only against the baseline in order to show the effectiveness of the stepwised features.
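The stepwised features can be sketched as follows. This is not the authors' RoBERTa/Bi-LSTM implementation: the shared-layer output is a plain list of per-token vectors, each task-specific layer is a hypothetical function returning BIO tags, and the previous task's output is represented by one-hot BIO tags rather than the layer's internal features, which is a simplifying assumption.

```python
from typing import Callable, List, Sequence

Vector = List[float]
# Hypothetical stand-in for a task-specific layer: maps per-token vectors to BIO tags.
Tagger = Callable[[List[Vector]], List[str]]

BIO_TAGS = ("O", "B", "I")


def one_hot(tag: str) -> Vector:
    """Encode one BIO tag as a one-hot vector over (O, B, I)."""
    return [1.0 if tag == t else 0.0 for t in BIO_TAGS]


def with_stepwise_features(shared: List[Vector], prev_tags: Sequence[str]) -> List[Vector]:
    """Append the previous task's predicted tag to each token's shared representation."""
    return [h + one_hot(t) for h, t in zip(shared, prev_tags)]


def run_stepwise(shared: List[Vector], taggers: Sequence[Tagger]) -> List[List[str]]:
    """Run the tasks in order (sentiment word, target, holder).

    The first task sees only the shared-layer output; every later task sees the
    shared output plus the tags predicted by the task immediately before it.
    """
    all_tags: List[List[str]] = []
    inputs = shared
    for tagger in taggers:
        tags = tagger(inputs)
        all_tags.append(tags)
        # Any error in `tags` is passed on unchanged to the next task's input.
        inputs = with_stepwise_features(shared, tags)
    return all_tags


shared = [[0.1], [0.2], [0.3]]  # toy 1-dimensional shared features for three tokens
print(with_stepwise_features(shared, ["O", "B", "O"]))
# [[0.1, 1.0, 0.0, 0.0], [0.2, 0.0, 1.0, 0.0], [0.3, 1.0, 0.0, 0.0]]
```

Because each task's input is rebuilt from the shared output plus the preceding task's prediction, a wrong tag in one task reaches the next task directly, which is how noise in the stepwised features can propagate.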

5. Conclusions

In this paper, we proposed a stepwise multi-task learning model for the holder extraction task. The proposed model also extracts sentiment words and targets for holder extraction, but it extracts them in sequence because of their dependency: the sentiment word is an important clue for extracting the target, which in turn is key evidence for the holder. Consequently, we treat the tasks of sentiment word extraction, target extraction, and holder extraction sequentially rather than simultaneously. In experiments on the Laptop 14–16 and Restaurant 14–16 datasets, the performance of the proposed model improved when the stepwised features, i.e., the outputs of the previous task-specific layer, were used in training. In addition, because the output format follows the BIO tagging scheme, the approach generalizes across domains and the model becomes less sensitive to the domain of the review text. We also observed that the performance of the proposed model was somewhat sensitive to the ATT value of each dataset. Finally, although the stepwised features help enhance performance, they may include some noise, which can propagate to subsequent tasks and degrade the performance of the final task.
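Because every task emits BIO tags rather than words, the extracted sentiment word, target, and holder are recovered by decoding the tags back into spans. A minimal sketch, assuming plain B/I/O labels per task and whitespace tokenization, applied to the example sentence from Figure 1 (the tag sequences are hypothetical gold labels, not model output):

```python
from typing import List


def bio_to_spans(tokens: List[str], tags: List[str]) -> List[str]:
    """Decode a B/I/O tag sequence into the token spans it marks."""
    spans: List[str] = []
    current: List[str] = []
    for token, tag in zip(tokens, tags):
        if tag == "B":                # a new span starts here
            if current:
                spans.append(" ".join(current))
            current = [token]
        elif tag == "I" and current:  # continue the open span
            current.append(token)
        else:                         # "O" (or a stray "I") closes any open span
            if current:
                spans.append(" ".join(current))
            current = []
    if current:                       # flush a span that ends the sentence
        spans.append(" ".join(current))
    return spans


tokens = "John said that the T-bone steak was delicious".split()
sentiment_tags = ["O", "O", "O", "O", "O", "O", "O", "B"]
target_tags = ["O", "O", "O", "O", "B", "I", "O", "O"]
holder_tags = ["B", "O", "O", "O", "O", "O", "O", "O"]

print(bio_to_spans(tokens, sentiment_tags))  # ['delicious']
print(bio_to_spans(tokens, target_tags))     # ['T-bone steak']
print(bio_to_spans(tokens, holder_tags))     # ['John']
```

The model's output vocabulary is only {B, I, O}, independent of the review domain; the domain-specific words are recovered from the input tokens at decoding time.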

In future work, we will separate the review text into sentiment expressions through semantic segmentation, in order to reduce noise and alleviate the dependency on the ATT value.

Author Contributions

Conceptualization, H.-M.P. and J.-H.K.; methodology, H.-M.P.; software, H.-M.P.; validation, H.-M.P. and J.-H.K.; resources, H.-M.P.; data curation, H.-M.P.; writing—original draft preparation, H.-M.P.; writing—review and editing, J.-H.K.; supervision, J.-H.K.; project administration, J.-H.K. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the BK21 Four program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education of Korea (Center for Creative Leaders in Maritime Convergence).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Pontiki, M.; Galanis, D.; Pavlopoulos, J.; Papageorgiou, H.; Androutsopoulos, I.; Manandhar, S. SemEval-2014 task 4: Aspect based sentiment analysis. In Proceedings of the 8th International Workshop on Semantic Evaluation, Dublin, Ireland, 23–29 August 2014; pp. 27–35.

2. Pontiki, M.; Galanis, D.; Papageorgiou, H.; Manandhar, S.; Androutsopoulos, I. SemEval-2015 task 12: Aspect based sentiment analysis. In Proceedings of the 9th International Workshop on Semantic Evaluation, Denver, CO, USA, 4–5 June 2015; pp. 486–495.

3. Pontiki, M.; Galanis, D.; Pavlopoulos, J.; Papageorgiou, H.; Androutsopoulos, I.; Manandhar, S.; Al-Smadi, M.; Al-Ayyoub, M.; Zhao, Y.; Qin, B.; et al. SemEval-2016 task 5: Aspect based sentiment analysis. In Proceedings of the 10th International Workshop on Semantic Evaluation, San Diego, CA, USA, 16–17 June 2016; pp. 19–30.

4. Moore, A. Empirical Evaluation Methodology for Target Dependent Sentiment Analysis. Ph.D. Thesis, Lancaster University, Lancaster, UK, 2021.

5. Hu, M.; Liu, B. Mining opinion features in customer reviews. In Proceedings of the 19th National Conference on Artificial Intelligence, 16th Conference on Innovative Applications of Artificial Intelligence, Orlando, FL, USA, 18–22 July 2004; pp. 755–760.

6. Popescu, A.-M.; Etzioni, O. Extracting product features and opinions from reviews. In Proceedings of the Human Language Technology Conference and Conference on Empirical Methods in Natural Language Processing, Vancouver, BC, Canada, 6–8 October 2005; pp. 339–346.

7. Qiu, G.; Liu, B.; Bu, J.; Chen, C. Opinion word expansion and target extraction through double propagation. Comput. Linguist. 2011, 37, 9–27.

8. Liu, K.; Xu, L.; Zhao, J. Opinion target extraction using word-based translation model. In Proceedings of the 2012 Joint Conference on Empirical Methods in Natural Language Processing and Computational Natural Language Learning, Jeju Island, Korea, 12–14 July 2012; pp. 1346–1356.

9. Choi, Y.; Breck, E.; Cardie, C. Joint extraction of entities and relations for opinion recognition. In Proceedings of the 2006 Conference on Empirical Methods in Natural Language Processing, Sydney, Australia, 22–23 July 2006; pp. 431–439.

10. Johansson, R.; Moschitti, A. Reranking models in fine-grained opinion analysis. In Proceedings of the 23rd International Conference on Computational Linguistics, Beijing, China, 23–27 August 2010; pp. 519–527.

11. Yang, B.; Cardie, C. Joint inference for fine-grained opinion extraction. In Proceedings of the 51st Annual Meeting of the Association for Computational Linguistics, Sofia, Bulgaria, 4–9 August 2013; pp. 1640–1649.

12. Zhang, M.; Wang, P.; Fu, G. Enhancing opinion role labeling with semantic-aware word representations from semantic role labeling. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; pp. 641–646.

13. Pennington, J.; Socher, R.; Manning, C. GloVe: Global vectors for word representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing, Doha, Qatar, 25–29 October 2014; pp. 1532–1543.

14. Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A robustly optimized BERT pretraining approach. arXiv 2019, arXiv:1907.11692.

15. Caruana, R. Multitask learning. Auton. Agents Multi-Agent Syst. 1998, 27, 95–133.

16. Zhao, J.; Liu, K.; Xu, L. Sentiment analysis: Mining opinions, sentiments, and emotions. Comput. Linguist. 2016, 42, 595–598.

17. Yin, Y.; Song, Y.; Zhang, M. Document-level multi-aspect sentiment classification as machine comprehension. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, Copenhagen, Denmark, 7–11 September 2017; pp. 2044–2054.

18. Li, J.; Yang, H.; Zong, C. Document-level multi-aspect sentiment classification by jointly modeling users, aspects, and overall ratings. In Proceedings of the 27th International Conference on Computational Linguistics, Santa Fe, NM, USA, 20–26 August 2018; pp. 925–936.

19. Sun, C.; Huang, L.; Qiu, X. Utilizing BERT for aspect-based sentiment analysis via constructing auxiliary sentence. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; pp. 380–385.

20. Jiang, Q.; Chen, L.; Xu, R.; Ao, X.; Yang, M. A challenge dataset and effective models for aspect-based sentiment analysis. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing, Hong Kong, China, 3–7 November 2019; pp. 6280–6285.

21. Choi, Y.; Cardie, C.; Riloff, E.; Patwardhan, S. Identifying sources of opinions with conditional random fields and extraction patterns. In Proceedings of the Human Language Technology Conference and Conference on Empirical Methods in Natural Language Processing, Vancouver, BC, Canada, 5–8 October 2005; pp. 355–362.

22. Marasović, A.; Frank, A. SRL4ORL: Improving opinion role labeling using multi-task learning with semantic role labeling. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, New Orleans, LA, USA, 1–6 June 2018; pp. 583–594.

23. Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

24. Schuster, M.; Paliwal, K.K. Bidirectional recurrent neural networks. IEEE Trans. Signal Process. 1997, 45, 2673–2681.

25. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 6000–6010.

26. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.

27. Ruder, S. An overview of multi-task learning in deep neural networks. arXiv 2017, arXiv:1706.05098.

28. Duong, L.; Cohn, T.; Bird, S.; Cook, P. Low resource dependency parsing: Cross-lingual parameter sharing in a neural network parser. In Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing, Beijing, China, 26–31 July 2015; pp. 845–850.

29. Baxter, J. A Bayesian/information theoretic model of learning to learn via multiple task sampling. Mach. Learn. 1997, 28, 7–39.

30. Ramshaw, L.A.; Marcus, M.P. Text chunking using transformation-based learning. In Proceedings of the 3rd Workshop on Very Large Corpora, Maryland, MD, USA, 21–22 June 1999; pp. 157–176.

31. Dong, L.; Wei, F.; Tan, C.; Tang, D.; Zhou, M.; Xu, K. Adaptive recursive neural network for target-dependent Twitter sentiment classification. In Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics, Baltimore, MD, USA, 23–24 June 2014; pp. 49–54.

32. Wilson, T. Fine-Grained Subjectivity and Sentiment Analysis: Recognizing the Intensity, Polarity, and Attitudes of Private States. Ph.D. Thesis, University of Pittsburgh, Pittsburgh, PA, USA, 2007.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).