In this section, we give an overview of the Silvera language.

Silvera is a declarative language developed for the domain of microservice software architecture (MSA) development. We call DSLs of this kind “technical DSLs” or “horizontal DSLs”. The language is designed to directly implement design patterns from the MSA domain.

3.1. Implementation Phases

There are several approaches to the implementation of DSLs. These approaches were first classified by Spinellis [47] in the form of a collection of design patterns. His work was later extended by Mernik et al. [48]. The patterns are associated with the development phases of DSLs, which results in the following classes of patterns:

Decision patterns—which provide a set of common situations for which DSLs have been successfully created in the past,

Analysis patterns—which provide a set of guidelines on how to identify the problem domain and gather the domain knowledge,

Design patterns—which provide a set of guidelines on how to design a DSL,

Implementation patterns—which provide a set of guidelines on how to choose the most suitable implementation approach.

Silvera was developed in four successive phases: Analysis, Decision, Design, and Implementation.

Analysis phase. During the analysis phase, we used the analysis patterns from Table 5.

Most of the domain knowledge was gathered by analyzing the available literature, as well as the code and documentation of available systems (Extract from Code pattern). We analyzed the domain informally (Informal pattern) and gathered the literature mostly via the snowballing approach: starting from a body of relevant papers, we searched the papers in their reference lists (Backward Snowballing [49]) and the papers that cite them (Forward Snowballing [49]). The output of this phase consisted of domain-specific terminology and semantics in more or less abstract form [48].

Decision phase. During this phase, we used the decision patterns from Table 6.

The decision to create a new DSL stemmed from two goals: to automatically generate the infrastructure code (Task Automation pattern) and to perform domain-specific analysis and evaluation of the designed microservice-based system (AVOPT pattern, i.e., analysis, verification, optimization, parallelization, and transformation).

Design phase. This phase can be characterized along two orthogonal dimensions: the relationship between the DSL and existing languages, and the formal nature of the design description [48]. During this phase, we used the patterns shown in Table 7.

The easiest way to design a DSL is to base it on an existing language. DSLs built this way are called Internal DSLs. The advantage of this approach is that no new language infrastructure has to be built; the downside is limited flexibility, since the DSL has to be expressed using concepts of the host language [50]. Another approach is to create a so-called External DSL: a completely independent language built from scratch. As external DSLs are independent of any other language, they need their own infrastructure, such as parsers, linkers, compilers, or interpreters [50]. Silvera is an external DSL with no relationship to any existing language (Language Invention pattern).

Mernik et al. [48] distinguish between informal and formal designs. In an informal design, the language specification is usually written in some form of natural language [48]. In a formal design, the syntax is usually specified via regular expressions and grammars, whereas the semantics is specified via attribute grammars, rewrite systems, or abstract state machines [48]. A formal design has several benefits [48]: it (i) brings problems to light before the DSL is actually implemented, and (ii) can be implemented automatically by language development tools, which significantly reduces the implementation effort. Silvera’s syntax is specified as a PEG (Parsing Expression Grammar) grammar (Formal pattern). Silvera’s semantics, however, is defined by its code generators (Formal pattern).

Implementation phase. In this phase, we considered multiple implementation patterns, as shown in Table 8.

We chose the Compiler/Application Generator pattern over other patterns, such as Interpreter, Embedding, Extensible Compiler/Interpreter, and the Commercial-Off-The-Shelf approach. A disadvantage of this approach is the higher cost of building the compiler from scratch. However, it also yields advantages such as a syntax closer to the notation used by domain experts, good error reporting [48], and minimal user effort to write correct programs [51]. The Compiler/Application Generator and Interpreter patterns offer similar advantages and disadvantages [48], but we chose the former because of its execution speed.

3.2. Silvera Abstract Syntax

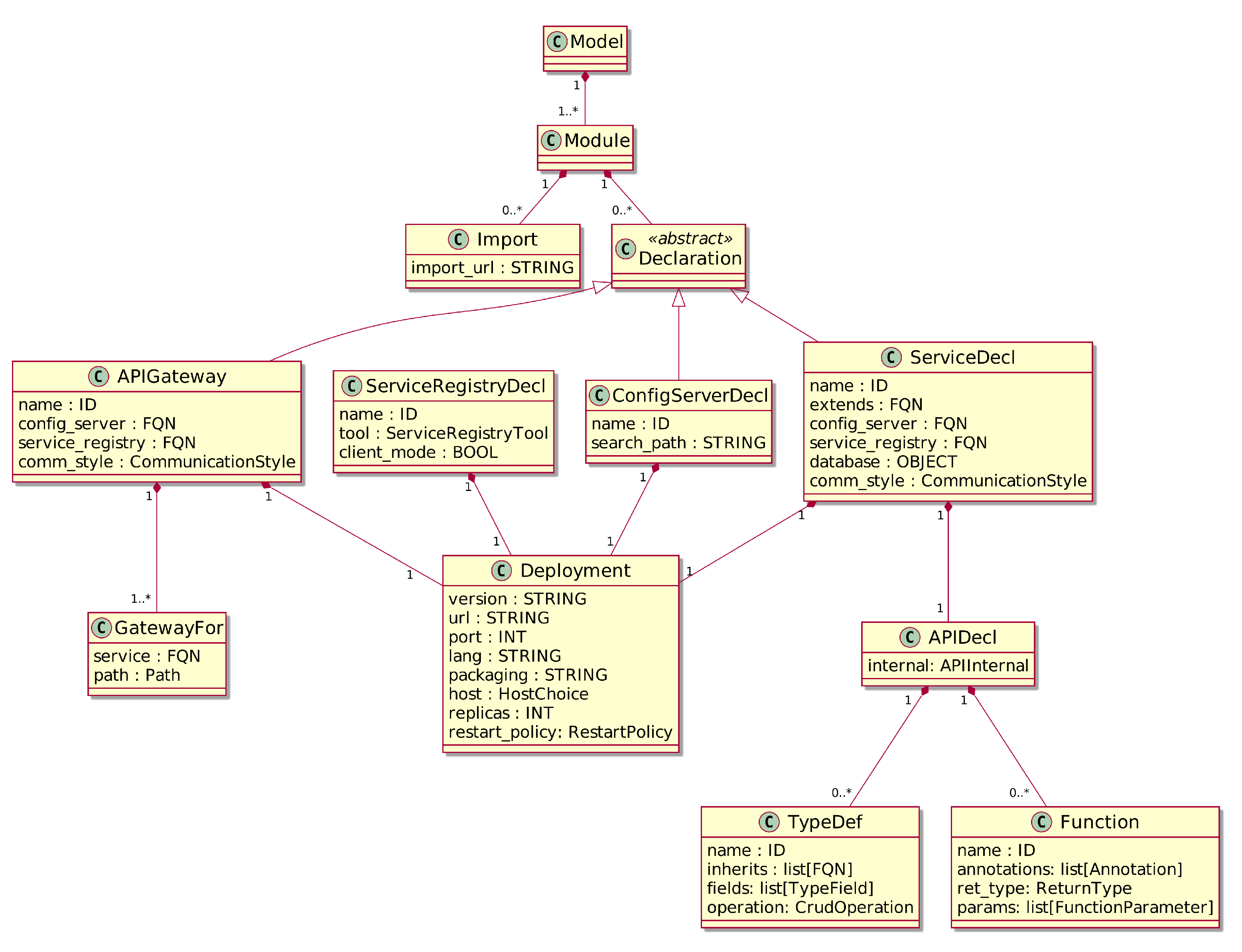

In this section, we present the abstract syntax of the Silvera language. Silvera’s abstract syntax is specified in the form of a metamodel. The simplified version of the metamodel, for brevity, is presented in

Figure 1 and

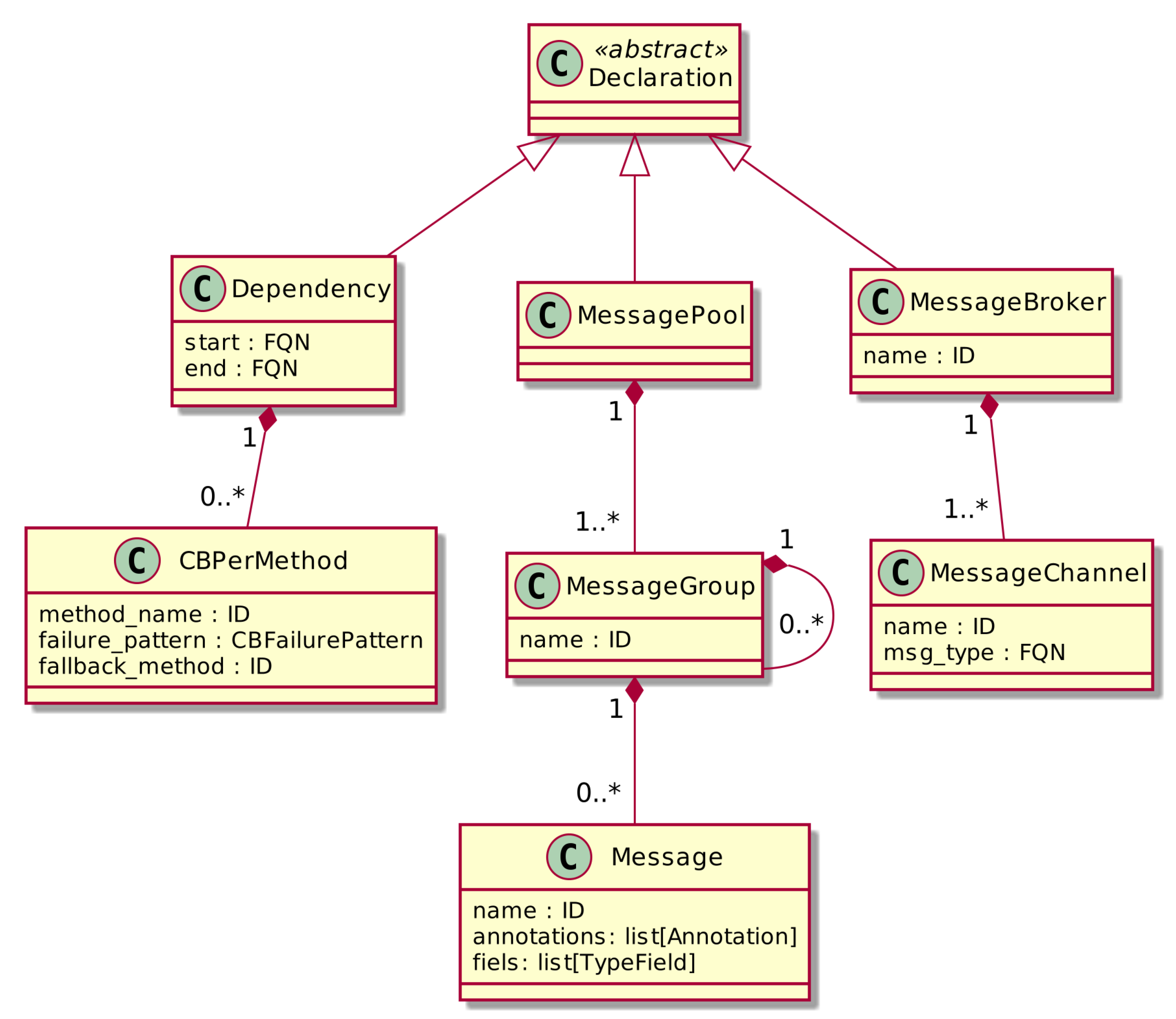

Figure 2.

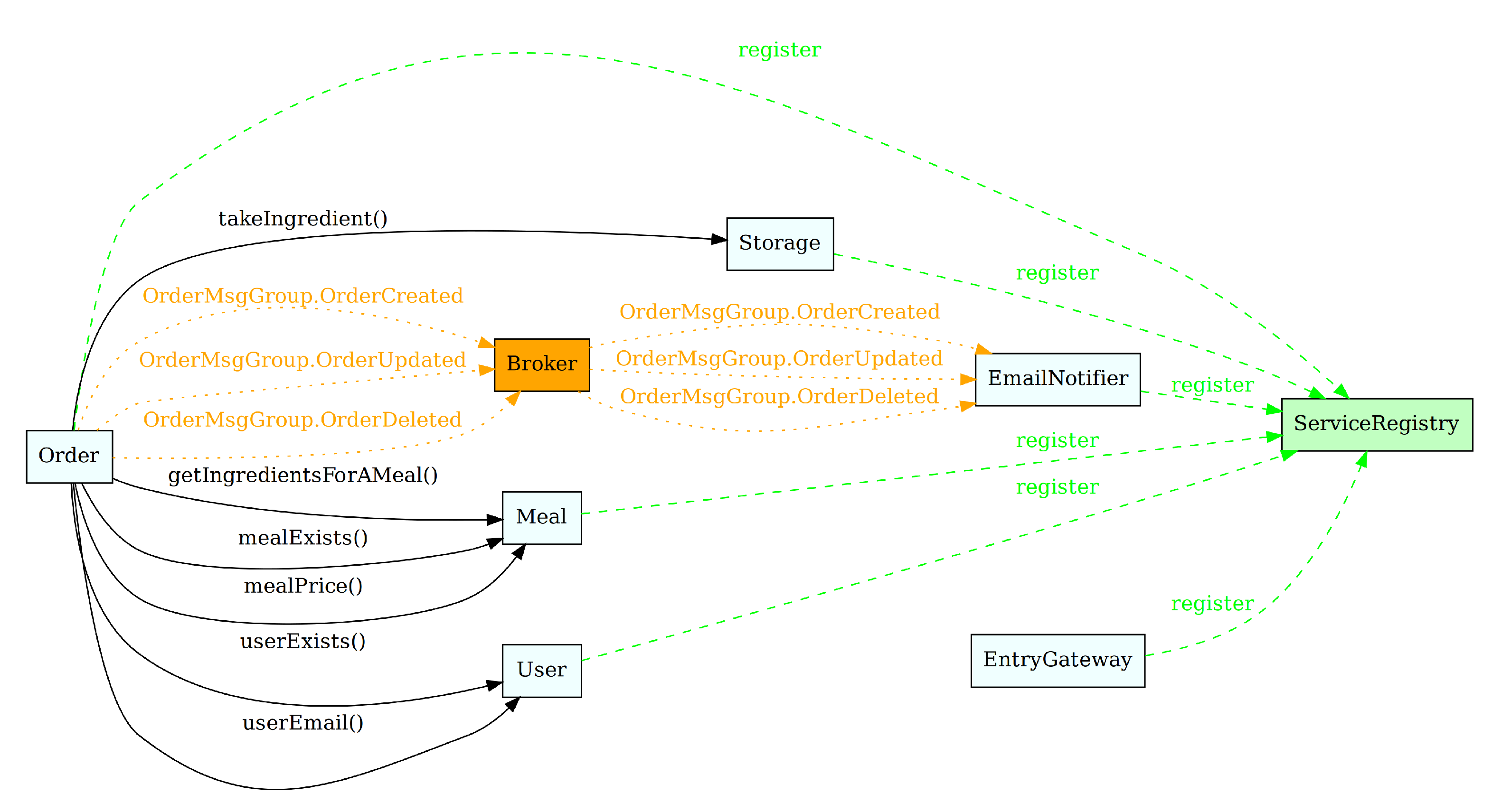

The main concept of the Silvera metamodel is the Model, which consists of one or more modules (Module). A module enables users to logically organize their Silvera code. Each module can consist of declarations of microservices (ServiceDecl), API gateways (APIGateway), service registries (ServiceRegistryDecl), configuration servers (ConfigServerDecl), dependencies (Dependency), a message pool (MessagePool), or message brokers (MessageBroker). Each declaration is identified by its name, which is unique at the module level. The unique identifier for a declaration within the model is its fully qualified name (FQN), which is calculated by the following formula:

A module can reference declarations from another module by importing it.

For each microservice, it is possible to define its name, API, deployment strategy, communication style, whether the microservice should be registered within a service registry, and whether it should draw external configuration data from a configuration server.

The API declaration (APIDecl) consists of function definitions and definitions of service-specific objects used for modeling microservice business entities. Each function definition consists of a function name, function parameters, a return type, and an optional annotation, whereas a service-specific object definition (TypeDef) is given by its name and one or more fields (TypeField). Every field has a name and a data type, and can also have a special ID attribute, which identifies the field during serialization and deserialization of messages in a binary format and ensures backward compatibility with newer versions of the API. For that reason, once assigned, an ID attribute should not be changed.
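To illustrate why an ID attribute should never change once assigned, the following Python sketch encodes fields by ID rather than by name. It is a minimal sketch under assumptions: JSON stands in for the binary format, and the schemas USER_V1 and USER_V2 and the encode/decode helpers are hypothetical, not Silvera output. Because a reader skips IDs it does not know, an old reader can decode messages from a newer API version and vice versa.

```python
import json
from typing import Dict

# Hypothetical field-ID tables for two versions of a User typedef.
# The numeric IDs, not the field names, identify fields on the wire.
USER_V1: Dict[int, str] = {1: "username", 2: "email"}
USER_V2: Dict[int, str] = {1: "username", 2: "email", 3: "age"}  # new field, old IDs kept


def encode(record: dict, schema: Dict[int, str]) -> str:
    """Serialize a record keyed by field ID (JSON stands in for a binary format)."""
    ids = {name: fid for fid, name in schema.items()}
    return json.dumps({str(ids[name]): value
                       for name, value in record.items() if name in ids})


def decode(payload: str, schema: Dict[int, str]) -> dict:
    """Deserialize, skipping field IDs unknown to the reader's schema version."""
    raw = json.loads(payload)
    return {schema[int(fid)]: value
            for fid, value in raw.items() if int(fid) in schema}


# An old producer and a new consumer (and vice versa) still understand each other.
old_msg = encode({"username": "ana", "email": "ana@example.com"}, USER_V1)
new_msg = encode({"username": "ana", "email": "ana@example.com", "age": 30}, USER_V2)
```

If USER_V2 had renumbered email to 3 and assigned 2 to age, a new reader would silently decode an old email value as age, which is exactly the breakage a fixed ID attribute prevents.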

In Silvera, each microservice has a particular communication style, which defines the protocol used to send and receive messages. Currently, it is possible to choose between RPC-based and messaging-based communication styles. The format of the messages that a microservice can send and receive is defined by its API. Microservices that use RPC to call methods of other microservices must declare those microservices as dependencies. RPC-based communication is synchronous by default, but Silvera supports asynchronous RPC communication as well.

Since microservices can fail at any time, MSAs must be designed to cope with failures [1]. The failure of one microservice should not take down the whole system. One of the design patterns that helps mitigate such problems is the Circuit Breaker pattern (see Section 2.2), which is directly supported in Silvera. A failure recovery strategy must be defined for every API function that the start microservice of a dependency calls on its end microservice.

Table 9 shows the failure recovery strategies supported by Silvera.
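The following Python sketch shows the mechanics behind the Circuit Breaker pattern together with two recovery strategies. It assumes that fallback_static returns a predefined default value and fail_silent returns an empty result (None), which mirrors the common Hystrix-style meaning of these names; the exact semantics in Table 9 may differ. CircuitBreaker, max_failures, and reset_timeout are hypothetical names, not part of Silvera or of the generated code.

```python
import time
from typing import Any, Callable, Optional


class CircuitBreaker:
    """CLOSED -> OPEN after `max_failures` consecutive failures;
    OPEN -> HALF_OPEN once `reset_timeout` seconds have passed;
    a successful call closes the circuit, a failed trial call reopens it."""

    def __init__(self, max_failures: int = 3, reset_timeout: float = 10.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures = max_failures
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    @property
    def state(self) -> str:
        if self.opened_at is None:
            return "CLOSED"
        if self.clock() - self.opened_at >= self.reset_timeout:
            return "HALF_OPEN"
        return "OPEN"

    def call(self, func: Callable[[], Any], fallback: Callable[[], Any]) -> Any:
        if self.state == "OPEN":
            return fallback()                 # fail fast, do not contact the remote service
        try:
            result = func()
        except Exception:
            self.failures += 1
            if self.state == "HALF_OPEN" or self.failures >= self.max_failures:
                self.opened_at = self.clock()  # (re)open the circuit
            return fallback()
        self.failures = 0                     # success closes the circuit
        self.opened_at = None
        return result


def fallback_static(value: Any) -> Callable[[], Any]:
    """Recovery strategy: return a predefined static value."""
    return lambda: value


def fail_silent() -> Callable[[], Any]:
    """Recovery strategy: swallow the failure and return an empty result."""
    return lambda: None
```

Injecting the clock keeps the state transitions testable without real waiting; in Listing 5 terms, Order would wrap its userExists call with fallback_static(False) and its userEmail call with fail_silent().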

When using a messaging-based communication style, microservices communicate asynchronously by passing messages. Every message type (Message) used within the system is defined in the message pool (MessagePool). The message pool is globally available and can be referenced from any module. Messages are delivered to their destinations by message brokers (MessageBroker). Every message broker contains one or more message channels (MessageChannel). The message broker creates the message channels, and each channel can have multiple consumers and/or producers. A consumer is any microservice that reads messages from a channel; analogously, a producer is a microservice that puts messages into a channel. Message channels in Silvera are typed, which means that each channel is dedicated to a specific message type. Messages can only be consumed or produced by API methods. For a microservice to send a message, one of its API methods must be registered as a producer for a channel dedicated to that kind of message. Likewise, to receive a message of a particular type, an API method must be registered as a consumer of a channel that carries that message type. A method is registered as a consumer with the @consumer annotation and as a producer with the @producer annotation, and it can be both at the same time. Message channels are logical addresses in the messaging system; how they are actually implemented depends on the messaging product in use.
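A minimal in-memory sketch of typed channels follows; it assumes nothing about the actual broker product (the generated applications use Kafka, see Section 3.4.2). Channel, Broker, and the message classes are hypothetical. The point is that a typed channel rejects any message whose type differs from the one it was declared with, and fans each accepted message out to all registered consumers.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class UserAdded:          # hypothetical Python mirror of UserMsgGroup.UserAdded
    userId: str
    userEmail: str


@dataclass(frozen=True)
class UserDeleted:        # hypothetical Python mirror of UserMsgGroup.UserDeleted
    userId: str
    userEmail: str


class Channel:
    """A typed channel: it carries messages of exactly one message type."""

    def __init__(self, name: str, msg_type: type) -> None:
        self.name = name
        self.msg_type = msg_type
        self.consumers: List[Callable[[object], None]] = []

    def subscribe(self, handler: Callable[[object], None]) -> None:
        self.consumers.append(handler)

    def publish(self, msg: object) -> None:
        if type(msg) is not self.msg_type:   # reject other message types
            raise TypeError(f"{self.name} carries {self.msg_type.__name__}, "
                            f"not {type(msg).__name__}")
        for handler in self.consumers:       # every consumer receives the message
            handler(msg)


class Broker:
    """Creates and owns the channels, as a Silvera message broker does."""

    def __init__(self) -> None:
        self.channels: Dict[str, Channel] = {}

    def channel(self, name: str, msg_type: type) -> Channel:
        self.channels[name] = Channel(name, msg_type)
        return self.channels[name]
```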

In MSA, client applications usually need to collect data from more than one microservice. If the communication is direct, the client needs to communicate with multiple microservices to collect the data. Such communication is inefficient and increases the coupling between the client and the microservices [27]. An alternative is to implement an API Gateway. An API gateway represents a single entry point for all clients, and it can handle requests in one of two ways: (a) routing them to the appropriate service, or (b) fanning them out to multiple microservices. In Silvera, an API gateway is a special service implemented in the form of an APIGateway object. Its attribute gateway_for determines which microservices are put behind the gateway. In the current implementation of Silvera, the API gateway only serves as a request router. In the future, we plan to extend this implementation by providing security features, implementing the API Composition [27] pattern, and adding the option to restrict services’ APIs to a certain set of operations. The API Composition pattern uses an API composer, or aggregator, to implement a query by invoking the individual microservices that own the data and then combining the results by performing an in-memory join [27].

In Silvera, each microservice can define its specific deployment requirements. Deployment is managed by the Deployment object, which has the following attributes: version, url, port, lang, packaging, host, replicas, and restart_policy. The version attribute defines the version of a microservice. The url and port attributes define the location of a microservice on a computer network. The lang and packaging attributes define the programming language in which the microservice will be implemented and the form in which it will be used (source or binary). The host attribute defines whether a microservice will run on a physical host, on a virtual machine, or inside a container, whereas the replicas attribute defines the number of instances of a microservice. Finally, the restart_policy attribute defines when a microservice should be restarted (after failure, always, etc.). This attribute can currently be used only if the host is a container.
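The Deployment attributes and the constraint on restart_policy can be modeled as a small Python dataclass. This is a sketch under assumptions: only the http://localhost default for url comes from this paper (see the discussion of Listing 2); the other defaults and the spellings of the host kinds are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

HOST_KINDS = {"physical", "vm", "container"}  # hypothetical spellings


@dataclass
class Deployment:
    version: str = "0.0.1"           # hypothetical default
    url: str = "http://localhost"    # default stated in the text
    port: int = 8080                 # hypothetical default
    lang: str = "java"               # hypothetical default
    packaging: str = "source"        # "source" or "binary"
    host: str = "container"          # hypothetical default
    replicas: int = 1
    restart_policy: Optional[str] = None

    def __post_init__(self) -> None:
        if self.host not in HOST_KINDS:
            raise ValueError(f"unknown host kind: {self.host!r}")
        if self.packaging not in {"source", "binary"}:
            raise ValueError(f"unknown packaging: {self.packaging!r}")
        if self.replicas < 1:
            raise ValueError("replicas must be at least 1")
        # restart_policy is currently only supported for containers
        if self.restart_policy is not None and self.host != "container":
            raise ValueError("restart_policy requires host=container")

    @property
    def address(self) -> str:
        return f"{self.url}:{self.port}"
```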

A service registry is a special service that contains information about the number and locations of the instances of each microservice in the system. In Silvera, the service registry is implemented in the form of a ServiceRegistryDecl object. This object contains the following attributes: tool, which defines which tool will be used as the service registry, and client_mode, which defines whether the service registry registers itself within another service registry. Since it is a special type of microservice, a service registry can also be deployed in various ways by using the Deployment object. A microservice is registered within the service registry by assigning a reference to a ServiceRegistryDecl object to its service_registry attribute.
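To make the role of a service registry concrete, here is a toy in-memory registry in Python. It is not the Eureka API; InMemoryRegistry, register, count, and lookup are hypothetical names. It stores the instances of each service and hands them out in round-robin order, which is one simple way a client can spread calls over replicas.

```python
import itertools
from typing import Dict, Iterator, List


class InMemoryRegistry:
    """Toy service registry: service name -> known instance addresses."""

    def __init__(self) -> None:
        self._instances: Dict[str, List[str]] = {}
        self._cursor: Dict[str, Iterator[str]] = {}

    def register(self, service: str, address: str) -> None:
        instances = self._instances.setdefault(service, [])
        if address not in instances:
            instances.append(address)
        # Restart the round-robin cycle so the new instance is included.
        self._cursor[service] = itertools.cycle(list(instances))

    def count(self, service: str) -> int:
        return len(self._instances.get(service, []))

    def lookup(self, service: str) -> str:
        """Return the next instance address in round-robin order."""
        if not self._instances.get(service):
            raise LookupError(f"no instances registered for {service!r}")
        return next(self._cursor[service])
```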

In Silvera, microservices can draw configuration files from an external configuration server. The configuration server is implemented in the form of a ConfigServerDecl object.

3.3. Silvera Concrete Syntax

In this section, we present the concrete syntax of the Silvera language. Concrete syntax defines how the abstract syntax concepts are presented to the user [12]. It is possible to create multiple concrete syntaxes (textual, graphical, etc.) for a single abstract syntax. Silvera’s concrete syntax is provided in the form of a textual notation. In Section 3.3.1, we show an excerpt from the grammar, omitting some parts of the rules for brevity. The full version of the grammar is available on GitHub (Silvera grammar: https://github.com/alensuljkanovic/silvera/blob/master/silvera/lang/silvera.tx (accessed on 1 June 2022)).

3.3.1. Microservice Declaration

In Listing 1, we show a simplified grammar rule for a microservice declaration. Some parts are omitted for brevity.

| Listing 1. An excerpt of the grammar rule for a microservice declaration. |

| 1 ServiceDecl: |

| 2 'service' name=ID '{' |

| 3 ('config_server' '=' config_server=FQN)? |

| 4 ('service_registry' '=' service_registry=FQN)? |

| 5 (deployment=Deployment)? |

| 6 'communication_style' '=' comm_style=CommunicationStyle |

| 7 (api=APIDecl)? |

| 8 '}' |

| 9 ; |

The ServiceDecl rule starts with the keyword service, followed by the name attribute matched by the textX built-in rule ID, which is in turn followed by the literal string match “{” (line 2). The body of the microservice declaration starts with two optional attributes. The first keeps a reference to the configuration server: its definition starts with the config_server keyword, followed by the literal string match “=”, after which comes the attribute config_server matched by the rule FQN (line 3). The second, defined similarly, keeps a reference to the service registry (line 4). It is followed by the optional deployment attribute, matched by the rule Deployment (line 5). Next comes the mandatory communication style, matched by the rule CommunicationStyle (line 6). Finally, the optional api attribute is matched by the rule APIDecl (line 7). The closing curly brace ends the microservice declaration (line 8).

Listing 2 shows how to define a simple User microservice in Silvera. The User microservice is registered within ServiceRegistry (line 3), and it communicates with the rest of the system by using RPC (line 4). This microservice is deployed inside a container, and it listens for HTTP requests on port 8080. Since the url attribute is not defined in the deployment section, its default value, http://localhost, will be applied. The API of this microservice consists of the User domain object and several publicly available methods. CRUD methods for the User domain object are generated automatically due to the @crud annotation. This annotation is a shortcut; the same effect can be achieved by using the @create, @read, @update, and @delete annotations. In addition to the CRUD methods, there are three methods: listUsers, userExists, and userEmail. All API methods of this microservice are exposed over REST. The URL mapping for each method is auto-generated based on the microservice URL, the method name, and the HTTP method defined by the corresponding @rest annotation. For example, the listUsers method can be accessed at the following URL: http://localhost:8080/user/listusers. It is, however, possible to set a custom URL mapping for an API method by using the mapping attribute of the annotation: @rest(method=GET, mapping=<user_defined_mapping>). This attribute is not currently available when using CRUD annotations.

| Listing 2. An example that shows how to define a service. |

| 1 service User { |

| 2 |

| 3 service_registry=ServiceRegistry |

| 4 communication_style=rpc |

| 5 |

| 6 deployment { |

| 7 version="0.1" |

| 8 port=8080 |

| 9 host=container |

| 10 } |

| 11 |

| 12 api{ |

| 13 @crud |

| 14 typedef User [ |

| 15 @id str username |

| 16 @required str password |

| 17 @required @unique str email |

| 18 |

| 19 int age //optional |

| 20 ] |

| 21 |

| 22 @rest (method=GET) |

| 23 list<User> listUsers () |

| 24 |

| 25 @rest(method=GET) |

| 26 bool userExists (str username) |

| 27 |

| 28 @rest (method=GET) |

| 29 str userEmail(str username) |

| 30 } |

| 31 } |
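The auto-generated URL mapping can be approximated by a small Python function. The rule it encodes, <url>:<port>/<service>/<method> with both names lower-cased, is inferred from the single listUsers example above and is an assumption; the HTTP method from @rest would select the request verb and is not modeled here. rest_url and its default port are hypothetical.

```python
from typing import Optional


def rest_url(service: str, method: str, url: str = "http://localhost",
             port: int = 8080, mapping: Optional[str] = None) -> str:
    """Endpoint URL for an API method.

    Assumed rule: <url>:<port>/<service>/<method>, names lower-cased.
    A custom `mapping` (as in @rest(..., mapping=...)) replaces the path.
    """
    path = mapping if mapping is not None else f"/{service.lower()}/{method.lower()}"
    if not path.startswith("/"):
        path = "/" + path
    return f"{url.rstrip('/')}:{port}{path}"
```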

3.3.2. Service Registry Declaration

Listing 3 shows how to define a service registry named ServiceRegistry. This service registry is generated as a Eureka service registry (line 2) that will not register itself within another service registry (line 3). ServiceRegistry listens for requests on HTTP port 9091 at http://registry.example.com (lines 6 and 7) and is deployed inside a container (line 8). The current version of the registry is 0.0.1 (line 5).

| Listing 3. An example that shows how to define a service registry. |

| 1 service-registry ServiceRegistry { |

| 2 tool=eureka |

| 3 client_mode=False |

| 4 deployment { |

| 5 version="0.0.1" |

| 6 port=9091 |

| 7 url="http://registry.example.com" |

| 8 host=container |

| 9 } |

| 10 } |

3.3.3. API Gateway Declaration

Listing 4 shows how to define an API gateway named EntryGateway. EntryGateway provides a single entry point to the system, so clients only need to know the URL of the gateway. For each microservice behind the gateway, a URL mapping is provided. For example, to call the listUsers method of the User microservice, a client uses the following URL: http://entry.example.com:9095/api/u/listusers. EntryGateway is also registered within ServiceRegistry.

| Listing 4. An example that shows how to define an API gateway. |

| 1 api-gateway EntryGateway { |

| 2 |

| 3 service_registry=ServiceRegistry |

| 4 |

| 5 deployment { |

| 6 version="0.0.1" |

| 7 port=9095 |

| 8 url="http://entry.example.com" |

| 9 } |

| 10 |

| 11 communication_style=rpc |

| 12 |

| 13 gateway-for { |

| 14 User as /api/u |

| 15 } |

| 16 } |
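Request routing in the gateway can be sketched as a longest-prefix match over the gateway-for entries. This Python sketch assumes that the gateway strips the matched prefix and forwards the remaining path to the target service; how the generated Zuul configuration actually rewrites paths is not shown here, and route is a hypothetical helper.

```python
from typing import Dict, Tuple


def route(path: str, routes: Dict[str, str]) -> Tuple[str, str]:
    """Match `path` against gateway-for prefixes (longest prefix wins).

    Returns (target_service, forwarded_path). Stripping the prefix is an
    assumption about the gateway's path rewriting.
    """
    best_prefix, best_service = "", None
    for prefix, service in routes.items():
        p = prefix.rstrip("/")
        if (path == p or path.startswith(p + "/")) and len(p) > len(best_prefix):
            best_prefix, best_service = p, service
    if best_service is None:
        raise LookupError(f"no gateway route for {path!r}")
    return best_service, path[len(best_prefix):] or "/"
```

Matching on whole path segments (p + "/") prevents /api/orders from being captured by a /api/o route.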

3.3.4. Declaration of Microservice Dependency

Listing 5 shows how to define a dependency between Order and User microservices. In this particular example, the Order microservice requires userExists and userEmail methods from the User microservice. For each requirement, a failure recovery strategy is defined (a fallback_static strategy for the userExists method and a fail_silent strategy for the userEmail method).

| Listing 5. Defining dependency between Order and User microservices. |

| 1 dependency Order -> User { |

| 2 userExists[fallback_static] |

| 3 userEmail[fail_silent] |

| 4 } |
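A compiler can check a dependency declaration like the one in Listing 5 against the API of the end microservice. The following Python sketch is a hypothetical validation step, not Silvera’s actual implementation: it reports required functions that the end microservice does not expose, and strategies outside a known set. Only fallback_static and fail_silent are listed because they are the ones used in Listing 5; Table 9 contains the full set.

```python
from typing import Dict, List, Set

# Strategies used in Listing 5; Table 9 lists the complete set.
KNOWN_STRATEGIES = {"fallback_static", "fail_silent"}


def check_dependency(start: str, end: str, required: Dict[str, str],
                     apis: Dict[str, Set[str]]) -> List[str]:
    """Validate `dependency start -> end { fn[strategy] ... }`.

    required: API function name -> failure recovery strategy.
    apis: service name -> names of its exposed API functions.
    """
    if end not in apis:
        return [f"{start} depends on unknown service {end}"]
    errors = []
    for fn, strategy in required.items():
        if fn not in apis[end]:
            errors.append(f"{end} has no API function {fn}")
        if strategy not in KNOWN_STRATEGIES:
            errors.append(f"unknown recovery strategy {strategy} for {end}.{fn}")
    return errors
```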

3.3.5. Switching from RPC to Messaging Communication

So far, we have shown how to define microservices that use the RPC mechanism to communicate. In the text that follows, we will show how to change communication style from RPC to messaging.

First, the message pool and a message broker need to be defined. Listing 6 shows how to define a message pool with one message group, UserMsgGroup. This message group contains three message types: UserAdded, UserUpdated, and UserDeleted. Each message has two fields: userId and userEmail.

| Listing 6. An example that shows how to define a message pool. |

| 1 msg-pool { |

| 2 group UserMsgGroup [ |

| 3 msg UserAdded [ |

| 4 str userId |

| 5 str userEmail |

| 6 ] |

| 7 ... |

| 8 ] |

| 9 } |

Listing 7 shows how to define a message broker named Broker. This message broker has three typed message channels: EV_USER_ADDED_CHANNEL channel for the UserAdded message, EV_USER_UPDATED_CHANNEL channel for the UserUpdated message, and EV_USER_DELETED_CHANNEL channel for the UserDeleted message. When instantiating a channel, the FQN of a message must be used.

| Listing 7. An example that shows how to define a message broker. |

| 1 msg-broker Broker { |

| 2 |

| 3 channel EV_USER_ADDED_CHANNEL(UserMsgGroup.UserAdded) |

| 4 channel EV_USER_UPDATED_CHANNEL(UserMsgGroup.UserUpdated) |

| 5 channel EV_USER_DELETED_CHANNEL(UserMsgGroup.UserDeleted) |

| 6 } |

Listing 8 shows how the User microservice should be changed to use the messaging communication style. In the example, the User microservice publishes a message every time a user is added, updated, or deleted. Producing and consuming messages is not limited to CRUD methods: regular API functions can use the @producer and @consumer annotations, which follow the same syntax as shown in the example.

| Listing 8. User microservice that uses the messaging communication style. |

| 1 service User { |

| 2 ... |

| 3 communication_style=messaging |

| 4 ... |

| 5 |

| 6 api { |

| 7 @create(UserMsgGroup.UserAdded -> Broker.EV_USER_ADDED_CHANNEL) |

| 8 @read |

| 9 @update(UserMsgGroup.UserUpdated -> |

| 10 Broker.EV_USER_UPDATED_CHANNEL) |

| 11 @delete(UserMsgGroup.UserDeleted -> |

| 12 Broker.EV_USER_DELETED_CHANNEL) |

| 13 typedef User [ |

| 14 ... |

| 15 ] |

| 16 ... |

| 17 } |

| 18 } |

3.4. Compiler

The Silvera compiler consists of two logically separated parts: the front-end and the back-end. The front-end further contains modules for analysis and evaluation, whereas the back-end comprises a set of language-specific code generators.

3.4.1. Front-End

The compiler’s front-end performs lexical analysis, parsing, semantic analysis, and translation to an intermediate representation. The parser is produced by textX [52] from the Silvera grammar. textX is an open-source tool for fast DSL development in Python that is IDE-agnostic and provides a fast round-trip from a grammar change to testing [52]. Since DSLs are prone to change [48], we chose textX because it makes language evolution easy. The parser created by textX parses Silvera programs and creates a graph of Python objects (the model), where each object is an instance of the corresponding class from the metamodel. This way, instead of an Abstract Syntax Tree (AST), textX returns a Model object (Section 3.2).

The front-end detects both syntax and semantic errors. Since Silvera is IDE-independent, errors are detected only at compile time. Syntax errors are detected early on, during parsing, by textX, which comes with extensive error reporting and debugging support [52].

Before the Model object is passed to the compiler’s back-end, it is processed by the communication resolving processor (CRP) and the architecture evaluation processor (AEP). Since microservices created in Silvera can use different communication styles, the primary purpose of the CRP is to validate the Silvera model according to the corresponding communication style and to enrich the model with communication-style-specific information needed by the compiler’s back-end. Each communication style comes with a specific CRP.
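As an example of the kind of validation a messaging CRP can perform, the following Python sketch checks producer annotations such as those in Listing 8 against the channels declared in Listing 7. The data layout (tuples, and a dictionary keyed by Broker.CHANNEL) is an assumption made for illustration; the real CRP works on the textX model objects, and check_producers is a hypothetical name.

```python
from typing import Dict, List, Tuple


def check_producers(annotations: List[Tuple[str, str, str]],
                    channels: Dict[str, str]) -> List[str]:
    """Check producer annotations against typed channels.

    annotations: (api_method, message_fqn, "Broker.CHANNEL") triples.
    channels: "Broker.CHANNEL" -> FQN of the message type it carries.
    """
    errors = []
    for method, msg_fqn, channel in annotations:
        if channel not in channels:
            errors.append(f"{method}: unknown channel {channel}")
        elif channels[channel] != msg_fqn:
            errors.append(f"{method}: {channel} carries {channels[channel]}, not {msg_fqn}")
    return errors


# Channels as declared in Listing 7.
CHANNELS = {
    "Broker.EV_USER_ADDED_CHANNEL": "UserMsgGroup.UserAdded",
    "Broker.EV_USER_UPDATED_CHANNEL": "UserMsgGroup.UserUpdated",
    "Broker.EV_USER_DELETED_CHANNEL": "UserMsgGroup.UserDeleted",
}
```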

The purpose of the AEP is to provide a metrics-based evaluation of the microservice-based system implemented in Silvera. Evaluation metrics applicable to microservice-based systems are defined by Bogner et al. [46]. Even though the AEP is an independent module, by utilizing it in the front-end, we implement the AVOPT pattern (see Section 3.1). This way, evaluation results can be used by the back-end to generate optimized applications.

For the evaluation, the AEP uses the following metrics: Weighted Service Interface Count (WSIC), Number of Versions per Service (NVS), Services Interdependence in the System (SIY), Absolute Importance of the Service (AIS), Absolute Dependence of the Service (ADS), and Absolute Criticality of the Service (ACS).

WSIC(S) [53] is the number of exposed API functions of microservice S. Lower WSIC values are more favorable for the maintainability of a microservice. As absolute values for this metric are not conclusive on their own [46], the system-wide average WSIC is also calculated. By comparing WSIC values with this average, the largest microservices in the system can be identified and potentially split.

NVS(S) [53] is the number of versions of microservice S currently used in the system. A large NVS value indicates high complexity and bears down on maintainability [46].

SIY [54] is the number of microservice pairs that are bi-directionally dependent on each other. According to [54], interdependent pairs should be avoided, as they attest to poor service design. If such pairs exist, merging each of them into a single microservice can be a feasible solution [46].

AIS(S) [54] is the number of consumer microservices that depend on microservice S. The AIS of every microservice is compared to the system-wide average AIS, which can be used to identify very important microservices in the system.

ADS(S) [54] is the number of microservices that microservice S depends on. Again, the system-wide average ADS is calculated and can be used for comparison.

ACS(S) combines AIS(S) and ADS(S) to find the most critical and potentially problematic parts of the system. According to [54], the most critical microservices are those that are called by many different clients as well as those that invoke many other microservices.
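The metrics above can be computed from two inputs: the number of exposed API functions per microservice and the dependency graph. The following Python sketch assumes unit weights for WSIC and computes ACS(S) as the product AIS(S) × ADS(S), which is the usual definition of this metric but is not spelled out in the text; NVS is omitted because it requires version data. evaluate and the service names are hypothetical.

```python
from itertools import combinations
from statistics import mean
from typing import Dict, Set, Tuple


def evaluate(api_size: Dict[str, int],
             deps: Dict[str, Set[str]]) -> Tuple[Dict[str, dict], dict]:
    """api_size: service -> number of exposed API functions (unit weights).
    deps: service -> services it calls, i.e. its dependencies."""
    services = sorted(api_size)
    # AIS(S): how many other services depend on S
    ais = {s: sum(1 for c in services if c != s and s in deps.get(c, set()))
           for s in services}
    # ADS(S): how many other services S depends on
    ads = {s: len(deps.get(s, set()) - {s}) for s in services}
    per_service = {s: {"WSIC": api_size[s], "AIS": ais[s], "ADS": ads[s],
                       "ACS": ais[s] * ads[s]}          # assumed: ACS = AIS * ADS
                   for s in services}
    # SIY: number of pairs that depend on each other in both directions
    siy = sum(1 for a, b in combinations(services, 2)
              if b in deps.get(a, set()) and a in deps.get(b, set()))
    system = {"SIY": siy,
              "avg_WSIC": mean(api_size.values()),
              "avg_AIS": mean(ais.values()),
              "avg_ADS": mean(ads.values())}
    return per_service, system
```

With Order and User calling each other and Notification calling User, the sketch reports SIY = 1, flagging the Order/User pair as a merge candidate.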

Evaluation is performed during model compilation or on demand with the command silvera evaluate <path_to_model_dir>. At the end of the evaluation, the AEP creates a detailed report file that contains the calculated values for each microservice.

If needed, Silvera allows developers to register custom AEPs as plugins (see Section 3.4.3).

3.4.2. Back-End

After the front-end has processed the Model object, the object is passed to the compiler’s back-end as input.

The back-end of the Silvera compiler iterates over each module in the model and passes the declarations (Decl objects) to the code generators. The number of code generators is not limited, and the Silvera compiler offers the possibility to register custom code generators as plugins.

For every REST-based microservice, the back-end generates an OpenAPI document named openapi.json. OpenAPI documents describe where to reach an API, which operations are available, what the expected inputs and outputs are, etc.
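For illustration, a minimal OpenAPI 3.0 document for the User microservice can be assembled in Python as a plain dictionary. The path rule reuses the lower-cased /<service>/<method> mapping from the listUsers example, which is an assumption; parameters, request bodies, and schemas that a real generated openapi.json would include are omitted, and openapi_doc and to_json are hypothetical helpers.

```python
import json
from typing import Dict, List, Tuple


def openapi_doc(service: str, version: str,
                methods: List[Tuple[str, str]]) -> Dict:
    """Build a minimal OpenAPI 3.0 document.

    methods: (api_method_name, http_verb) pairs, e.g. ("listUsers", "GET").
    """
    paths: Dict[str, Dict] = {}
    for name, verb in methods:
        path = f"/{service.lower()}/{name.lower()}"  # assumed mapping rule
        paths.setdefault(path, {})[verb.lower()] = {
            "operationId": name,
            # OpenAPI requires at least one response, each with a description
            "responses": {"200": {"description": "Successful response"}},
        }
    return {"openapi": "3.0.3",
            "info": {"title": service, "version": version},
            "paths": paths}


def to_json(doc: Dict) -> str:
    """Serialize the document as it would be written to openapi.json."""
    return json.dumps(doc, indent=2)
```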

The built-in code generator. The built-in code generator uses template-based model-to-text transformations to produce Java applications based on the Spring Boot framework (Spring Boot: https://spring.io/projects/spring-boot (accessed on 24 December 2021)). Template-based code generation is a synthesis technique that produces code from high-level specifications called templates [55]. A template is an abstract representation of the textual output it describes. It has a static part, i.e., text fragments that appear in the output “as is”, and a dynamic part, i.e., splices of meta-code that encode the generation logic [55]. Templates lend themselves to iterative development, as they can be easily derived from examples. Each declaration has a corresponding set of templates, chosen based on the declaration type and its target programming language. In the case of the built-in code generator, the target language is always Java 17. Once the appropriate set of templates is chosen, the code generator analyzes the declaration and extracts the relevant data, which are subsequently used to fill the dynamic parts of the templates.

The built-in code generator generates a Spring Boot application for every microservice present in the model. Most of the code is generated automatically; however, in some cases, developers must implement business logic manually. To ensure that manually added code is preserved between successive code generations, the built-in code generator implements the generation gap pattern [56]. This pattern ensures that manually written code can be added non-invasively through inheritance: the manually added classes inherit from the generated classes. A guide on adding manual changes to the generated code is part of Silvera’s documentation (Introduce manual changes to the generated code: https://alensuljkanovic.github.io/silvera/compilation/#introduce-manual-changes-to-the-generated-code (accessed on 1 June 2022)).
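The file-handling side of the generation gap pattern can be sketched in Python: generated base files are overwritten on every run, while hand-editable files are written only if they do not exist yet. The directory layout mirrors the base and impl sub-modules described in the next paragraphs, but write_generated and the file names are hypothetical; the inheritance between impl and base classes happens in the generated Java code and is not shown.

```python
from pathlib import Path


def write_generated(root: Path, rel_path: str, content: str, preserve: bool) -> bool:
    """Write one generated file and report whether it was written.

    preserve=False: generated base code, overwritten on every run.
    preserve=True: hand-editable code (e.g. an impl class), created only once
    so that manual changes survive regeneration.
    """
    target = root / rel_path
    if preserve and target.exists():
        return False                      # keep the developer's version
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_text(content, encoding="utf-8")
    return True
```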

We adhered to the best practices defined by Hofmann et al. [57], so each generated Spring Boot application has the following modules: domain, controller, repository, and service. Microservices that use the messaging communication style contain two additional modules: config and messages.

The domain module contains classes that specify the application’s domain model (business entities). These classes are derived from the type definitions (typedefs) located in the microservice’s API. The service module contains classes that specify the application’s business rules. This module contains two sub-modules: base and impl. The base module contains the definition of a Java interface with the methods defined in the API, whereas the impl module contains a class that implements the base interface. The impl module differs from the rest of the generated modules: files in the impl module preserve manual changes in the code between successive code generations, whereas the rest of the generated files are always rewritten.

The repository module contains an implementation of the Repository pattern in the form of a MongoRepository provided by Spring Data MongoDB. Currently, the built-in code generator supports only MongoDB. However, support for an arbitrary database can be added either by extending the existing code generator or by registering a new one.

All messages defined in the message pool are generated as classes in the messages module. Messages are sent through the network as JSON objects.

The config module contains classes used to define how the generated microservice application will communicate with a message broker. These classes are: (i) the KafkaConfig class that defines how the application is registered within the Kafka cluster, and (ii) the MessageDeserializer class that defines how messages received as JSON objects will be transformed into message objects defined in the messages module. MessageDeserializer is optional and will be generated only if the application consumes messages from the message broker.
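The role of MessageDeserializer can be illustrated in Python: messages travel as JSON objects and are turned back into typed message objects on the consumer side. The message registry keyed by FQN and the serialize/deserialize helpers are hypothetical; the generated Java classes are not reproduced here.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class UserAdded:                  # hypothetical mirror of UserMsgGroup.UserAdded
    userId: str
    userEmail: str


# Hypothetical registry: message FQN -> message class.
MESSAGE_TYPES = {"UserMsgGroup.UserAdded": UserAdded}


def serialize(msg) -> str:
    """Producer side: message object -> JSON text sent over the network."""
    return json.dumps(asdict(msg))


def deserialize(payload: str, msg_fqn: str):
    """Consumer side: JSON text -> typed message object."""
    return MESSAGE_TYPES[msg_fqn](**json.loads(payload))
```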

The controller module contains a class that specifies the REST API of the generated microservice application. The class contains methods that belong to both the public and internal API of the microservice. Methods from the internal API are private and cannot be accessed from the outside.

In addition to the modules mentioned above, pom.xml and bootstrap.properties files are generated for each microservice. The bootstrap.properties file is used to set up the Spring Boot application, whereas Maven uses the pom.xml file to manage dependencies.

API gateways and service registries are also generated as separate Spring Boot applications. The API gateway is generated as a Zuul Proxy server (Netflix Zuul: https://github.com/Netflix/zuul (accessed on 24 December 2021)).

Zuul is a gateway service developed by Netflix that provides dynamic routing, monitoring, resiliency, security, etc. The generated code for the API gateway is simple: it contains only one class with the main function and the application.properties file, which defines how the API gateway performs request routing and whether it contacts the service registry to retrieve the URL of the corresponding microservice. The service registry is also a simple application, with one class containing the main function and the bootstrap.properties file.

The built-in code generator produces a special run script and a Dockerfile (if the deployment host is set to container) for each microservice, API gateway, and service registry. The run script uses Maven to build and run the JAR file.

3.4.3. Customization Support

Silvera allows users to register new AEPs or code generators as plugins. Both are registered in the same way, so, for brevity, we only describe the registration process for a new code generator.

A custom code generator needs to be implemented in Python. Silvera uses the pkg_resources module from setuptools (Python’s setuptools module: https://setuptools.readthedocs.io/en/latest/ (accessed on 24 December 2021)) and its concept of entry points to declaratively specify the registration of a new code generator. Entry points are defined within the project’s setup.py module. All Python projects installed in the environment that declare the entry point are discovered dynamically.

The registration of a new code generator is performed in two steps. The first step is to create an instance of the GeneratorDesc class, which contains information about the code generator’s target language, a description, and a reference to the function that should be called to perform code generation. As shown in Listing 9, this function has three parameters: a Decl object, the path to the directory where the code will be generated, and a flag that indicates whether the code generator runs in debug mode.

| Listing 9. Implementation of a GeneratorDesc object and the prototype of the generate function. |

| 1 from silvera.generator.gen_reg import GeneratorDesc |

| 2 |

| 3 def generate(decl, output_dir, debug): |

| 4 """Entry point function for code generator. |

| 5 |

| 6 Args: |

| 7 decl(Decl): can be declaration of service registry or config |

| 8 server. |

| 9 output_dir(str): output directory. |

| 10 debug(bool): True if debug mode activated. False otherwise. |

| 11 """ |

| 12 ... |

| 13 |

| 14 python = GeneratorDesc( |

| 15 language_name="python", |

| 16 language_ver="3.7.4", |

| 17 description="Python 3.7.4 code generator", |

| 18 gen_func=generate |

| 19 ) |

The second step is to make the code generator discoverable by Silvera. To do this, we register the GeneratorDesc object under the setup.py entry point named silvera_generators, as shown in Listing 10.

| Listing 10. Making the new code generator discoverable by Silvera by using silvera_generators entry point. |

| 1 from setuptools import setup |

| 2 |

| 3 setup( |

| 4 ... |

| 5 entry_points={ |

| 6 'silvera_generators': [ |

| 7 'python = pygen.generator:python' |

| 8 ] |

| 9 } |

| 10 ) |

Silvera provides a command (silvera list-generators) that lists all registered generators in the current environment.
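Silvera discovers generators through pkg_resources, which setuptools has since deprecated. As a sketch of the same discovery mechanism, the following Python code reads an entry-point group with the standard importlib.metadata module; discover_generators is a hypothetical helper and not necessarily how silvera list-generators is implemented.

```python
from importlib.metadata import entry_points
from typing import Any, Dict


def _select(group: str):
    """Entry points of one group, across Python versions."""
    eps = entry_points()
    if hasattr(eps, "select"):          # Python 3.10+
        return eps.select(group=group)
    return eps.get(group, [])           # Python 3.8/3.9: dict of group -> entry points


def discover_generators(group: str = "silvera_generators") -> Dict[str, Any]:
    """Load every object (e.g. a GeneratorDesc) registered under `group`."""
    return {ep.name: ep.load() for ep in _select(group)}
```

With the setup.py from Listing 10 installed, discover_generators() would map the name python to the GeneratorDesc object from Listing 9; in an environment without such plugins it returns an empty dictionary.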