1. Introduction

Over the course of life, individuals regularly make a distinction between their own actions and all other external events. We draw this distinction because we have a sense of agency (SoA) [1], a specific awareness of our own actions. SoA is closely related to the sense of control [2], the sense that the future is largely determined by our momentary decisions. In order to successfully control one’s body and employ external instruments, one must feel and comprehend that one has control over the world around oneself [3]. The lack of SoA results in frustration, distraction from current activities and insecurity in one’s actions [4]. SoA research is a field devoted to investigating the conditions under which an individual perceives themselves as a source of various events or, on the contrary, rejects them.

This field of research can yield considerable practical value. In today’s world, individuals have to interact with a variety of technical devices. Some of them, such as robotic grasping arms (e.g., [5]), functional prostheses (e.g., [6]) and robotic wheelchairs (e.g., [7]), can restore patients’ ability to move around and act independently. The development of robotic wheelchairs has recently become an urgent task, as the global prevalence of spinal cord injuries has increased substantially over the last 30 years [8]. What should devices such as robotic wheelchairs be like so that a person feels comfortable working with them and sees the instrument as an extension of their own body and will? According to Rule 7 of the Eight Golden Rules of Interface Design [9], an interface has to support the internal locus of control, which is equivalent to maintaining SoA. SoA studies can aid in the development of various devices and interfaces and improve their efficiency. For example, there is ongoing research into explicit ratings of SoA and its EEG correlates during assisted driving [10,11]; in this setting, it is important that the operator maintains a sense of control over the movement of the vehicle.

1.1. Implicit Correlates of SoA

The simplest way to measure SoA would be to ask the agent whether they think they are the source of a particular action. However, the judgments people make about various events can be biased for at least three reasons. First, in everyday life, people routinely perform actions without reflecting on who their author is. The requirement to evaluate one’s own role in the surrounding events significantly distances the experience of action in an experimental environment from ordinary life. Second, contemporary theories allow for dissociation between low-level and high-level SoA [12,13], i.e., the immediate feeling of agency and conceptualized judgments of agency. Verbal reports or ratings provide evidence only for the second variety, as they are themselves generated by high-level processes. Third, in experimental research, judgments of agency are captured through questionnaires that have no standard formulations and may be understood differently by participants. Depending on the study, participants rate their agreement with some statements on a Likert scale (e.g., [14]) or choose one of the statements that describes their experience (e.g., [15]). In light of these challenges, one of the main objectives of experimental research on SoA is to find its implicit indicators. These indicators would allow the participant’s experience in action to be assessed without a direct survey.

1.2. Intentional Binding

One of the most discussed implicit correlates of the sense of agency is intentional binding (IB), originally reported by Haggard et al. [16]. IB is the phenomenon of subjective temporal convergence of a voluntary action and its sensory effect. In the experiment by Haggard et al., participants heard a tone 250 ms after pressing a key. It was found that they perceived the action as happening later than it actually occurred, and the tone as sounding earlier than it actually did. This phenomenon was not observed if they pressed the key not voluntarily but due to transcranial magnetic stimulation. The authors suggested that IB correlates with SoA. In this original study, participants watched Libet’s clock [17] to determine the moment of a particular event (either action or tone). This method requires careful observation of the clock during the activity, and also does not allow the subjective timing of both events to be recorded within a single trial. Engbert et al. [18] found that IB was reproducible when participants gave direct estimates of time intervals between actions and their effects. The intervals were estimated as shorter for voluntary actions.

IB has been repeatedly applied to determine the convenience of interfaces in human–machine interaction. For example, it has been used to evaluate various input methods [19,20] or to evaluate how the degree of automation affects the attribution of responsibility during complex tasks [21]. As an indicator, IB has the advantage of not requiring expensive equipment and being easy to implement in experiments.

However, currently there is a debate in the literature as to whether IB is a specific indicator of SoA or whether it arises from the predictability of events. The separation of the factors of agency and predictability is difficult, and it is a major limitation of many SoA studies, although attempts were made to solve this problem [22]. The predictability of sensory consequences of an action is one of the mechanisms that low-level SoA relies on [23]. Nevertheless, as agents, we do not consider all predictable events to be the results of our actions. When we act, we usually know when exactly the action and its consequences take place. It is widely accepted that as the predictability of the type and timing of feedback decreases, IB declines as well [24]. Yet, it is still unclear whether this happens because SoA is highly dependent on predictability or because IB reflects the predictability of the event for the agent rather than the perceived degree of their involvement in the action. In light of this, there is a need for studies where the predictability of the consequences of voluntary and involuntary actions is equated as much as possible. In our study, we attempted to do this, specifically in the context of spatial binding.

1.3. Spatial Binding

IB has a counterpart that works for distance estimates; however, it has been studied less extensively. From now on, we will call it spatial binding (SB). The existence of this phenomenon was first reported by Kirsch et al. [25]. In their experiment, participants estimated the distance between an effector (a hand with a stylus) and a visual stimulus accompanying the stylus movement. The participant’s hand with the stylus was concealed under an opaque surface. When the stylus was moved voluntarily, participants judged the position of their hand to be closer to the visual stimulus on the surface than it actually was. Previously, Buehner and Humphreys [26] found that participants perceived objects to be closer to each other if they causally interacted. This phenomenon is a form of causal binding, an effect close to IB. In situations where Libet’s clock is not applicable, SB may be a more practical implicit indicator than IB based on direct time interval estimation. It could be easier for humans to estimate distances than to give time estimates, since distance estimation is an everyday task everyone is familiar with.

Such a task would be particularly natural and have practical implications when an agent is themselves moving by driving a vehicle. To our knowledge, the possible emergence of spatial binding has not been studied under such conditions.

Experiment 1 of the present study was aimed at testing SB in a situation where a participant is moving in a robotic wheelchair. Our hypothesis was that the participant’s distance estimates would be significantly lower when the participant controls the wheelchair than when they do not control it.

1.4. Auditory N1 as an Indicator of SoA

In addition to behavioral variables, the electrophysiological correlates of SoA have been studied extensively in recent years [27]. SoA experiments frequently use tones or more complex sounds that serve as sensory effects of actions. Back in the 1970s, Schafer and Marcus [28] found that the N1 component of the event-related potentials (ERP) in response to a tone had a smaller amplitude if the participant controlled the moment of the tone. Bäß et al. [29] studied the difference between the amplitude of the N1 component for self-generated and external tones. They found that a reduction in the component amplitude for self-generated tones occurred even when their frequency and timing were unpredictable for the participant. By comparing responses to self-generated tones with responses to cued external tones, Lange [30] found that a more significant attenuation of the auditory N1 was caused by voluntary actions.

These results suggest that the auditory N1 amplitude is an indicator of SoA. Therefore, we used it in an EEG study (Experiment 2), in which we sought to clarify the results of Experiment 1 using a different implicit SoA indicator.

2. Experiment 1

2.1. Materials and Methods

2.1.1. Participants

Twenty-five naïve right-handed healthy volunteers (10 males and 15 females, age 23.5 ± 5.5 years (M ± SD)) participated in this study. The sample size was restricted by time constraints and organizational difficulties. All subjects were introduced to the procedure and signed informed consent. The experiment procedures were approved by the local ethics committee and were in agreement with the institutional and national guidelines for experiments with human subjects as well as with the Declaration of Helsinki.

2.1.2. Apparatus

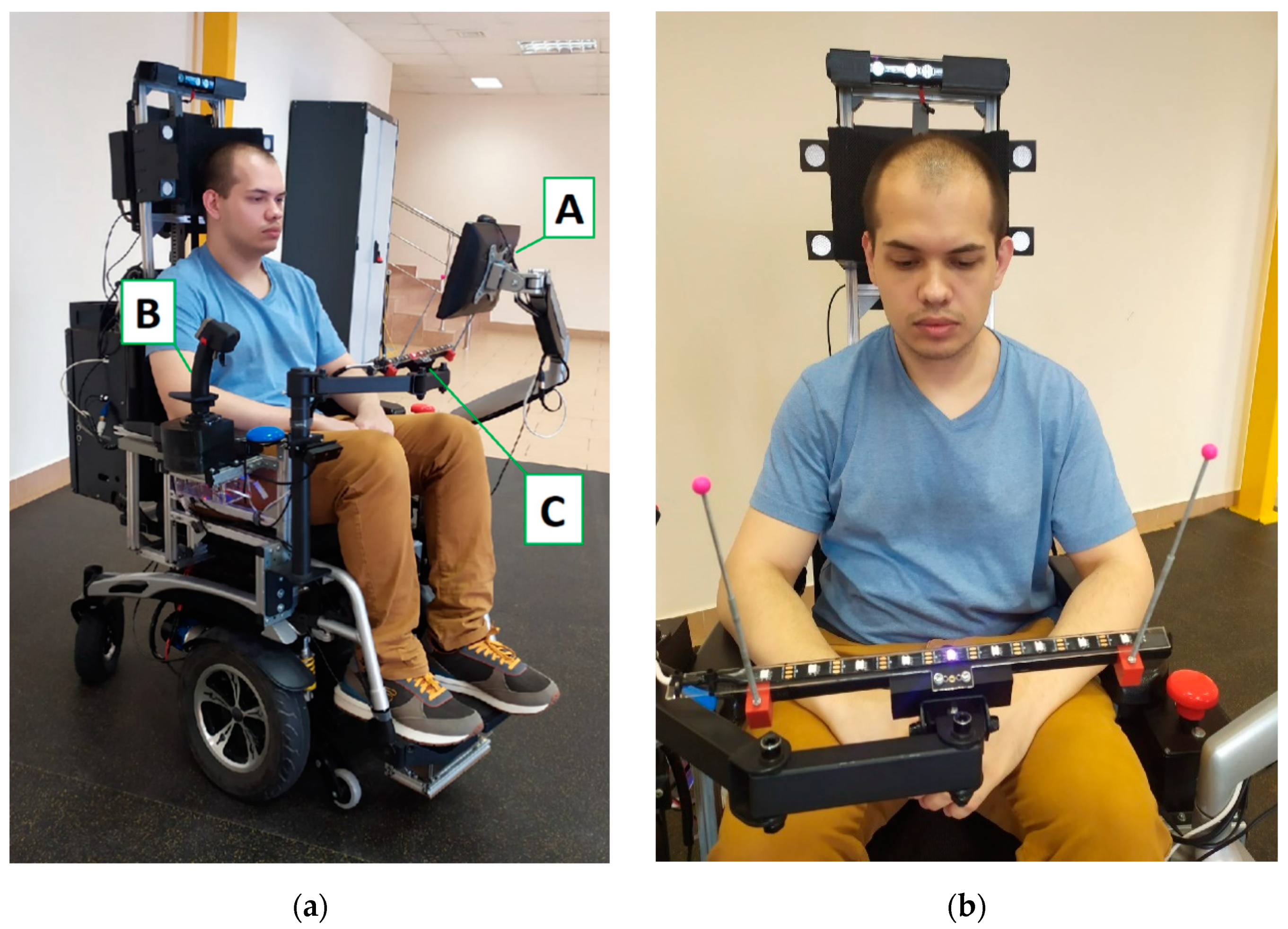

The robotic wheelchair (Figure 1) [31] was designed as both a prototype of an assistive device and an experimental platform for research in the fields of steering vehicles, robotics and human–machine interfaces. The robotic wheelchair was based on a motorized wheelchair, the Ortonica Pulse 330. The default driver was replaced with a Roboteq MDC2460 and quadrature encoders were added to the motors. The default joystick was replaced with a flight simulator joystick, which has a number of extra buttons for control. To provide extra information about the current system state, a touch monitor, speakers and an LED strip (fixed on top of an eye tracker not used in the current study) were installed. The monitor can be folded to the side so that it does not obstruct the operator’s view. The control system runs on an Intel NUC 8i (Intel Corporation, Santa Clara, CA, USA) under Kubuntu 20.04 LTS with Robot Operating System (ROS) Noetic. The steering system is able to conduct basic movements and measure traveled distance, as well as perform complex navigation tasks. Navigation relies on a number of sensors mounted on the wheelchair. Currently it has three depth cameras (Kinect, RealSense and OAK-D Lite) and a 2D range scanner, the RPLidar A3. These sensors are able to detect obstacles in the front and rear arcs of the wheelchair. If an operator gives a command that would lead to a collision, the command is automatically blocked. The wheelchair can be controlled in different ways ranging from a joystick to an eye tracker and a high-level verbal command detector [32]. To separate different sources of commands to the actuators, a special control system was developed. Each type of wheelchair control is implemented in a program module (ROS node) with a standardized interface, which has start and stop services. When start is called, the control node is able to send commands to the motors. When stop is called, it immediately stops sending any commands. The state controller node switches between control nodes in response to operator commands. As all parts of the system are designed with ROS interfaces, remote control is possible from a different PC that is connected to the same network and has the required ROS nodes and control devices such as a joystick.
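The start/stop arrangement described above can be illustrated with a small sketch. It is plain Python without any ROS dependency, and the class names `ControlNode` and `StateController`, the `switch_to` method and the node names are hypothetical, chosen only for illustration; the actual nodes and services of the wheelchair software are not specified in the text.

```python
class ControlNode:
    """Hypothetical stand-in for one control module (e.g. joystick, remote)."""

    def __init__(self, name):
        self.name = name
        self.active = False

    def start(self):
        # After start, the node is allowed to send commands to the motors.
        self.active = True

    def stop(self):
        # After stop, the node must not send any further commands.
        self.active = False

    def command(self, linear, angular):
        # Return a motor command only while active; otherwise stay silent.
        if not self.active:
            return None
        return (self.name, linear, angular)


class StateController:
    """Switches between control nodes so that at most one is active."""

    def __init__(self, nodes):
        self.nodes = {node.name: node for node in nodes}
        self.current = None

    def switch_to(self, name):
        if name not in self.nodes:
            raise KeyError(f"unknown control node: {name}")
        # Stop the running node first so two command sources never overlap.
        if self.current is not None:
            self.nodes[self.current].stop()
        self.nodes[name].start()
        self.current = name


joystick = ControlNode("joystick")
remote = ControlNode("remote")
controller = StateController([joystick, remote])
controller.switch_to("joystick")
controller.switch_to("remote")
```

In this sketch, stopping the previous node before starting the next one is what keeps different command sources separated; after the second switch only the remote node produces commands.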

2.1.3. Experiment Design

A participant was seated in the robotic wheelchair. The wheelchair was navigated along three sides of a rectangular track under the supervision of the experimenter.

The experiment included three conditions (each consisting of multiple identical trials):

- Active control (Act): a participant controls the wheelchair on their own using the joystick;

- Experimenter’s control (Exp): the experimenter walks beside the wheelchair and controls it within the visual field of the participant, while the participant sits idly;

- Remote control (Rem): the experimenter controls the wheelchair remotely from a different room.

We decided to introduce both Exp and Rem conditions since some studies (e.g., [33]) showed that actions of other human agents may induce intentional binding. The core difference between the Exp and Rem conditions is that the Exp condition included a vivid representation of action as the experimenter used the joystick manually, being visible to the participant. In contrast, when the wheelchair was controlled remotely, the participant could not see the experimenter.

All these activities were perfectly safe for participants and required minimal physical effort. Before the experiment, each condition was presented to the participant by means of 2–4 practice trials. The order of conditions was randomized for each participant.

2.1.4. Procedure

The structure of trials in different experimental conditions is shown in the flowchart (Figure 2). The participant’s task in the Act condition was to travel in the robotic wheelchair in a straight line until they heard a sound signal to stop. The distance was not known to the participant beforehand and was chosen randomly for each trial from five values: 0.5 m, 1 m, 2 m, 2.5 m and 3 m. The participant started driving by holding the button on the joystick (B in Figure 1a) and tilting the joystick forward up to the limit. The participant was not able to change the direction of the wheelchair movement during the trial. Eventually, a short signal sound alerted the participant that the distance had been covered. The sound was produced by a text-to-speech device reading the text “dzin”, a standard phonetic imitation of a bell sound in Russian; by using this text we intended to approximate a simple tone as closely as possible. The duration of the sound was approximately 200 ms.

The exact moment of the signal sounding was not known to the participant, but the sound occurred only after the wheelchair had covered the distance. Since the sound would not have occurred if the participant had not been moving forward in the wheelchair, the sound was (counterfactually [34]) the effect of the participant’s actions. According to the instructions, after the signal, the participant was to quickly release the button on the joystick and return the joystick to its initial state. If the participant did not release the button, the wheelchair would stop on its own for safety reasons, but the experimenter made sure that the participant released the button in each trial. The participant was requested to drive until the sound occurred without stopping or varying the speed of the wheelchair.

In the Exp condition, it was the experimenter who stopped the wheelchair after the sound, and in the Rem mode, the wheelchair was started by a button press from a different room and stopped on its own in a preprogrammed way. In these two conditions, the subject observed what was happening without having control over the wheelchair.

The wheelchair did not stop instantaneously, but with a braking path of (M ± SD) 1.22 ± 0.14 m in conditions with joystick control (Act, Exp) and 1.21 ± 0.13 m when the braking was preprogrammed (Rem).

After the wheelchair stopped, in every trial the participant was asked to estimate (in centimeters) the distance covered by the wheelchair from the start of movement to the sound signal onset. The participant was never informed that the distance was chosen from a set of five values. The participant was asked to avoid using spatial reference points and to make estimates based on their internal sense of motion.

During wheelchair movement, the participant was asked to look at the tablet placed in front of them on a folding arm (A in Figure 1a). When the wheelchair stopped, they used the tablet to enter the estimated distance via an on-screen input box and on-screen number buttons with backspace and enter buttons. In addition to distance estimates, in each trial we asked the participant to estimate the degree of their control over the movement of the wheelchair. The score was given on a Likert scale from 0 to 9 using the same input box. The participant was informed that a score of 9 meant that “you felt that you had full control over the movement of the wheelchair” and a score of 0 meant that “you sensed no control at all over the movement of the wheelchair”.

After the participant had entered the estimate and then the score, the wheelchair was free to drive forward again. When the wheelchair approached the boundary of the track, the experimenter put it into manual control mode and turned it away from the boundary. After that, the condition continued.

In all three conditions, the predictability of the sound was consistent, and the sound was always caused by the wheelchair moving. We consider this equal predictability to be an important feature of our design. The number of trials in every condition was as follows (M ± SD): 31.6 ± 3.1 in Act, 31.5 ± 4.2 in Exp and 32.3 ± 3.8 in Rem condition.

2.2. Results

2.2.1. Distance Estimates and the Degree of Control

On average, participants significantly underestimated the distances covered by the wheelchair before the sound for all but 50 cm distances. It should be kept in mind that after the sound, the wheelchair took a considerable braking distance. We expected that, in view of this, participants would, on the contrary, overestimate the distances. Below, we present a graph of the dependence of estimates on real distance (Figure 3). Relatively large standard deviations resulted from the fact that different participants tended to estimate the distances with individual patterns, i.e., they consistently overestimated or underestimated the distances.

In order to test the possible influence of the degree of control on the subjective distance estimates, we used repeated measures ANOVA. The analysis was carried out in Statistica 10 (Statsoft, Tulsa, OK, USA).

Having obtained a specific dependence of the estimates on real distances, we abandoned the usual comparison of differences between estimates and real distances in different conditions. Absolute differences are functions of real distances, and the accuracy of the estimates decreases with distance in a non-trivial way (if we consider distances of 0.5 m). Therefore, instead of differences, we compared the estimates. Such an analysis is justified if the estimates of the different distances are separated. Moreover, in different conditions, the wheelchair traveled, on average, almost the same distance (1.95 ± 0.14 m in Act, 1.97 ± 0.14 m in Exp, 1.92 ± 0.16 m in Rem). Direct comparison of estimates instead of differences has already been used by other authors who studied IB (e.g., [31,32]). For each participant, we determined the median of the estimates in every experimental condition for each of the five distance values. In contrast to averages, medians reduce the impact of statistical outliers.
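The per-cell medians described above can be sketched as follows. This is a minimal illustration in standard-library Python, not the analysis code used in the study (which was run in Statistica); the function name `median_estimates`, the tuple layout and the sample values are assumptions made up for the example.

```python
from collections import defaultdict
from statistics import median


def median_estimates(trials):
    """Median estimate per (participant, condition, distance) cell.

    trials: iterable of (participant, condition, distance_m, estimate_cm).
    """
    grouped = defaultdict(list)
    for participant, condition, distance_m, estimate_cm in trials:
        grouped[(participant, condition, distance_m)].append(estimate_cm)
    return {cell: median(values) for cell, values in grouped.items()}


# Made-up trials for one hypothetical participant, not data from the study.
trials = [
    ("p01", "Act", 2.0, 150),
    ("p01", "Act", 2.0, 170),
    ("p01", "Act", 2.0, 400),  # a single outlying estimate
    ("p01", "Exp", 2.0, 140),
    ("p01", "Exp", 2.0, 160),
]
cells = median_estimates(trials)
```

In the made-up Act cell, the mean would be pulled up to 240 cm by the single outlying estimate, whereas the median stays at 170 cm, which is the property the text relies on.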

According to two-way repeated measures ANOVA, the effect of the “Activity” factor was statistically significant (F(2, 44) = 4.3, p = 0.02). As expected, the effect of the “Distance” factor was also significant (F(4, 88) = 77.67, p < 0.00001), while the interaction of factors was not significant (F(8, 176) = 0.08, p = 0.65). Further comparisons were made using the Fisher LSD post-hoc criterion. Post-hoc analysis revealed differences between the Act condition and Exp condition (p = 0.02), and between the Act condition and Rem condition (p = 0.01), but not between Exp and Rem (p = 0.85). To our surprise, the estimates turned out to be greater in the Act condition, refuting our initial hypothesis.

2.2.2. Control Ratings

On average, the control rating scores were as follows (M ± SD): 8.01 ± 1.54 in Act; 2.73 ± 2.84 in Exp; 1.85 ± 2.70 in the Rem condition. These scores confirm that participants felt a high degree of control only in the Act condition. According to our observations and comments made by participants, in many cases, participants considered the degree of control to be self-evident, and they gave a score without deliberation. There was a good reason for this: the experiment did not involve any manipulation of control and the participant always clearly understood who was driving the wheelchair. A total of 10 subjects gave only 9-point scores in the Act condition or gave 9 points in all but one trial. Participants regularly asked why they were requested to enter scores if the degree of their control over the wheelchair was obvious and constant across the condition. We decided that in a large share of cases, the scores do not reflect the condition of the participant on a trial-by-trial basis, and, therefore, refrained from computing correlation between the distance estimates and control scores.

2.3. Discussion

All IB varieties reported in the literature, including spatial binding, are characterized by a spatiotemporal convergence of the action and its result, with decreased estimates in the case of voluntary actions. In our experiment, we unexpectedly observed an inverse effect: in the Act condition, where the action was voluntary, the estimates of distance were significantly greater than in the other, non-voluntary conditions. As a result, our initial hypothesis was refuted. One possible explanation is that the participants did not perceive the sound as the direct result of voluntarily steering the wheelchair, but rather as an uncontrolled external event or a command they had to follow (it was a signal to release the button on the joystick). Moreover, the perception of the sound could have been influenced by increased attention to it in the voluntary condition, as it has been reported that attention may lead to subjective time expansion in some circumstances [35].

Faced with this unusual effect, we decided to supplement these results with an auxiliary EEG study. Since we used a sound in the procedure, we were able to study the parameters of the auditory ERP in response to this sound. On the one hand, the reduced amplitude of the auditory N1 component is a well-known indicator of the sense of agency. On the other hand, it seems that a high level of attention contributes to an increase in the amplitude of the component and its latency [36,37]. Consequently, we decided to rely on the auditory N1 component to test whether the sound was perceived as a signal unrelated to action and to indirectly assess the contribution of attention to the effect.

3. Experiment 2

3.1. Methods

3.1.1. Participants

Eleven naïve right-handed healthy volunteers (7 males and 4 females, age 23.1 ± 3.5 years (M ± SD)) participated in this study. The sample size was restricted by time constraints and organizational difficulties. All subjects were introduced to the procedure and signed informed consent. The experiment procedures were approved by the local ethics committee and were in agreement with the institutional and national guidelines for experiments with human subjects as well as with the Declaration of Helsinki.

3.1.2. Apparatus

In Experiment 2, we used the same robotic wheelchair as in Experiment 1. This time, however, we utilized one of the LEDs located on the wheelchair’s eye tracker, which was held by the second folding arm under the tablet.

3.1.3. Experiment Design

As in Experiment 1, a participant was seated in the robotic wheelchair. The wheelchair was navigated along three sides of a rectangular track under the supervision of the experimenter.

The experiment included four conditions, in two of which the wheelchair was moving:

- Active control (Act): a participant controls the wheelchair on their own using the joystick;

- Experimenter’s control (Exp): the experimenter walks beside the wheelchair and controls it within the visual field of the participant, while the participant sits idly.

The Act and Exp conditions were almost identical to the conditions with the same names in Experiment 1. The difference was that in Experiment 2, participants did not have to give distance estimates. The tablet was turned away from the participant, who was asked to look ahead and to avoid shifting their gaze between objects haphazardly.

In two new conditions, the wheelchair was standing still:

- Control condition with self-generated sound (CtrlSelfgen): the participant generates a sound by pressing the button on the joystick;

- Control condition with random sounds (CtrlRand): the participant passively listens to randomly generated sounds.

These new control conditions were included in order to replicate the classic auditory N1 suppression for self-generated sounds in our setting. We also intended to compare latencies for external and self-generated sounds, as they may relate differently depending on the exact conditions of the experiment [30].

All these activities were perfectly safe for participants and required minimal physical effort. Before the experiment, each condition was presented to the participant by means of 2–4 practice trials. The control conditions always preceded the driving conditions. The order in the pair of control conditions and in the pair of main conditions was randomized independently for every participant.

3.1.4. Procedure

In the CtrlSelfgen condition, a participant was instructed to look at an LED before them (Figure 1b) and generate a sound by pressing a button on the joystick. The sound was the same as in Experiment 1. After the LED was lit, a participant pressed the button at a random point in time and heard the sound after 500 ms. After another 500 ms, the LED would turn off, and then turn on again after two seconds. Therefore, in this condition, a participant controlled the signal timing. In the CtrlRand condition, the sound was generated without the participant’s input. In this condition, the participant was required to look at the LED as it lit up and wait for the sound. For each trial, the interval between the LED lighting and the sound was random and could be 1, 1.5, 2, 2.5 or 3 s. The LED was again turned off 500 ms after the sound and lit up again after 2 s. In both conditions, the participant was asked to try not to blink while the LED was lit, in order to obtain cleaner EEG data.

3.1.5. EEG Recording

EEG was recorded from 23 locations (F3, F1, Fz, F2, F4, C3, C1, Cz, C2, C4, P1, P3, Pz, P2, P4, PO7, PO3, POz, PO4, PO8, O1, Oz, O2) with 0–200 Hz bandpass at a 500 Hz sampling rate and 24-bit voltage resolution with the NVX52 DC system (Medical Computer Systems, Moscow, Russia). The electrooculogram (EOG) was recorded with the same amplifier using electrodes placed near the outer canthi for recording the horizontal component of eye movement, and above and below the right eye for recording the vertical component. The ground electrode was placed at the Fpz position. Impedance was kept below 15 kΩ. The times related to audio feedback were recorded along with the EEG using an Arduino Nano (Arduino, Somerville, MA, USA) single-board microcontroller.

3.1.6. Data Analysis

EEG analysis was performed offline using the data collected during the experiments. Signal preprocessing, statistical analysis and visualization were performed using MATLAB (MathWorks, Natick, MA, USA). EEG data were filtered with a zero-phase bandpass 2nd order Butterworth filter with a lower cutoff frequency of 0.5 Hz and a higher cutoff frequency of 20 Hz. EEG segmentation was based on triggers in the Arduino Nano channel, which corresponded to the onsets of the audio feedback. Each trigger was considered to constitute one trial. EEG/EOG epochs −1500…+1000 ms relative to the trigger were extracted. The resulting trials were visually inspected using the EEGLAB toolbox package [38]. If an oculographic or mechanical (due to wheelchair or participant movements) artifact was present in the time range −400…+500 ms, the trial was rejected from the analysis. Mean numbers of epochs per participant for the Act, Exp, CtrlRand and CtrlSelfgen conditions were (M ± SD) 49.8 ± 8.9, 46.0 ± 8.7, 48.1 ± 1.6 and 47.6 ± 2.1, respectively. Mean numbers of deleted epochs were 13.6 ± 8.0 (Act), 19.2 ± 9.0 (Exp), 1.9 ± 1.6 (CtrlRand) and 2.4 ± 2.1 (CtrlSelfgen).
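The segmentation step can be sketched in standard-library Python as below. The sampling rate (500 Hz), the epoch limits and the −400…+500 ms inspection window come from the text; everything else is an assumption. In particular, the study rejected trials by visual inspection, so the amplitude threshold in the hypothetical `has_artifact` helper is only a stand-in, and treating the epoch as a half-open sample range is a choice made for this example.

```python
FS_HZ = 500  # sampling rate reported in the text


def ms_to_samples(ms, fs_hz=FS_HZ):
    """Convert a time offset in milliseconds to a number of samples."""
    return int(round(ms * fs_hz / 1000))


def extract_epochs(signal, triggers, start_ms=-1500, end_ms=1000):
    """Cut one epoch per trigger; skip triggers too close to the edges."""
    start, end = ms_to_samples(start_ms), ms_to_samples(end_ms)
    epochs = []
    for t in triggers:
        lo, hi = t + start, t + end
        if lo >= 0 and hi <= len(signal):
            epochs.append(signal[lo:hi])
    return epochs


def has_artifact(epoch, threshold_uv, start_ms=-1500, win_ms=(-400, 500)):
    """Hypothetical amplitude check limited to the -400...+500 ms window."""
    lo = ms_to_samples(win_ms[0] - start_ms)
    hi = ms_to_samples(win_ms[1] - start_ms)
    return any(abs(v) > threshold_uv for v in epoch[lo:hi])


# Synthetic single-channel signal: flat, with one spike 200 ms after a trigger.
signal = [0.0] * 5000
signal[2100] = 300.0
epochs = extract_epochs(signal, triggers=[200, 2000, 4800])

# A spike at -1300 ms lies outside the inspection window and is ignored.
early_spike = [0.0] * 1250
early_spike[100] = 300.0
```

Only the trigger at sample 2000 yields a complete epoch here; the spike 200 ms after it falls inside the inspection window, so the threshold check flags that epoch, while the early spike in the second example does not trigger rejection.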

Components associated with eye movements were removed from the EEG signal through independent component analysis (ICA) using the runica algorithm (based on Infomax ICA) with the default settings in the EEGLAB package. ICA was applied, for each participant separately, to concatenated segments of the EEG and monopolar EOG signals from all conditions, bandpass filtered in a narrower 1…9 Hz range, and produced 27 independent components. To determine which of them had an oculomotor origin, we separately calculated the Pearson correlation coefficient between the component activations and the vertical and horizontal EOG. Visual inspection of component projection topographic maps and activation time courses using the EEGLAB functions showed that components with a correlation coefficient larger than 0.35 with either HEOG or VEOG were indeed related to oculomotor activity. Some components with a correlation coefficient near the threshold were also removed. Three to five components per participant met this criterion and were rejected from the dataset used for the EEG analysis. To analyze the effects in the ERP data, paired samples t-tests were used. Statistical analysis was carried out using Statistica 10 (Statsoft, Tulsa, OK, USA).
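The correlation criterion for oculomotor components can be written out as a short sketch. It uses standard-library Python rather than the EEGLAB/MATLAB tools named in the text, the function names and toy signals are hypothetical, and comparing the absolute value of the correlation with the 0.35 threshold is an assumption (the sign of an independent component is arbitrary).

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def ocular_components(activations, heog, veog, threshold=0.35):
    """Indices of components correlated with either EOG channel."""
    flagged = []
    for index, component in enumerate(activations):
        r_h = abs(pearson(component, heog))
        r_v = abs(pearson(component, veog))
        if max(r_h, r_v) > threshold:
            flagged.append(index)
    return flagged


# Toy signals, not recorded data.
heog = [0, 1, 0, -1, 0, 1, 0, -1]
veog = [1, 0, -1, 0, 1, 0, -1, 0]
neural_like = [1, -1, 1, -1, 1, -1, 1, -1]  # uncorrelated with both EOGs
blink_like = [-2 * v for v in veog]         # inverted copy of the VEOG
flagged = ocular_components([neural_like, blink_like], heog, veog)
```

As the text notes, such a threshold was combined with visual inspection of topographies and time courses, so a check like this would serve only as a first screening step.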

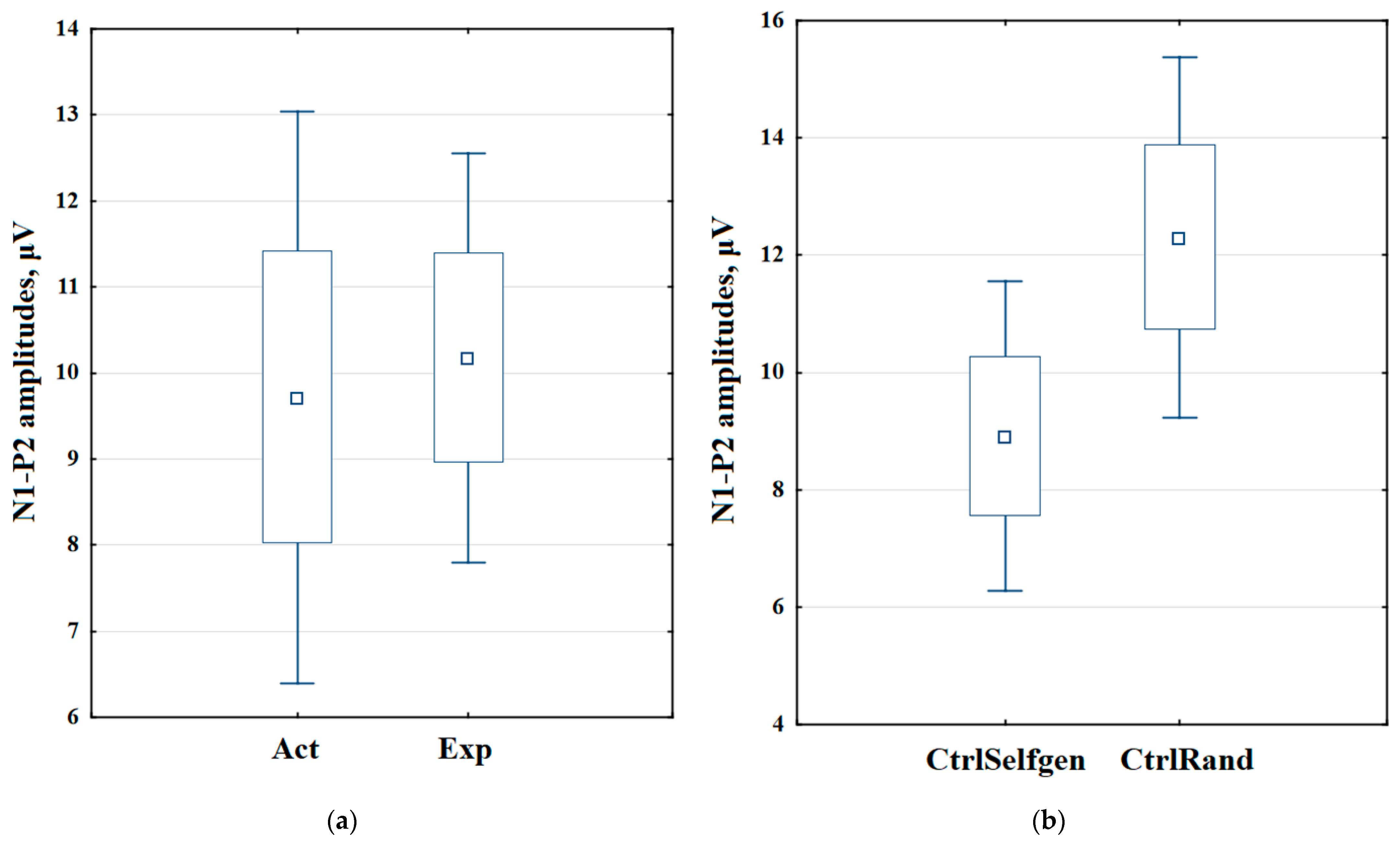

3.2. Results

The auditory N1 is a negative waveform component of the ERP, elicited by an auditory stimulus and distributed mostly over the fronto-central region of the scalp. The N1 is followed by the P2 positive component. In the current study, we examined N1-P2 inter-peak amplitudes and latencies of the auditory N1. The N1 peak latency was determined as a minimum in the interval (0, 250) ms, and the P2 peak latency was determined as the closest maximum after N1. The amplitudes and latencies were obtained after averaging over epochs. The highest amplitude of the N1 component was observed at the Cz location. For this reason, we measured the amplitudes and latencies at this location. Mean ERPs can be seen in Figure 4, and the topographical ERP maps can be seen in Figure 5. In the Act condition, the highest EEG amplitude was not observed at the Cz location; we believe that the lateralized activity located in the parietal area in this condition is likely related to the motor preparation of the button release.
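The peak definitions given above (N1 as the minimum within 0–250 ms, P2 as the closest maximum after N1) can be illustrated with the following sketch. It is a standard-library Python illustration on a made-up averaged waveform, not the authors' MATLAB code; the function name `n1_p2_peaks` and the treatment of "closest maximum" as the first local maximum after the N1 sample are assumptions.

```python
def n1_p2_peaks(times_ms, erp_uv, n1_window=(0, 250)):
    """Locate N1 and P2 on an averaged ERP and return latencies and amplitude."""
    # N1: most negative sample strictly inside the search window.
    candidates = [
        i for i, t in enumerate(times_ms) if n1_window[0] < t < n1_window[1]
    ]
    n1 = min(candidates, key=lambda i: erp_uv[i])
    # P2: first local maximum after the N1 peak.
    p2 = None
    for i in range(n1 + 1, len(erp_uv) - 1):
        if erp_uv[i] >= erp_uv[i - 1] and erp_uv[i] > erp_uv[i + 1]:
            p2 = i
            break
    if p2 is None:
        raise ValueError("no local maximum after N1")
    return {
        "n1_latency_ms": times_ms[n1],
        "p2_latency_ms": times_ms[p2],
        "n1_p2_amplitude_uv": erp_uv[p2] - erp_uv[n1],
    }


# Made-up averaged waveform sampled every 50 ms, for illustration only.
times_ms = [0, 50, 100, 150, 200, 250, 300]
erp_uv = [0.0, -1.0, -4.0, -2.0, 3.0, 1.0, 0.0]
peaks = n1_p2_peaks(times_ms, erp_uv)
```

For this toy waveform the N1 falls at 100 ms, the P2 at 200 ms, and the inter-peak amplitude is 7 µV.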

Because of the major methodological difference between the conditions with wheelchair movement and the control conditions, we performed pairwise comparisons of the conditions using paired samples t-tests. Average values of N1-P2 amplitudes for different conditions are shown in Figure 6. Comparisons of inter-peak amplitudes showed a significant difference between the CtrlSelfgen and CtrlRand conditions (t(10) = 2.87, p = 0.02), with higher amplitude in CtrlRand, but not between the Act and Exp conditions (t(10) = 0.38, p = 0.71).

Latencies of the auditory N1 were determined for the peak at the Cz location. Average values of N1 latencies for different conditions are shown in Figure 7. Paired samples t-tests showed a difference between the Act and Exp conditions (t(10) = 2.37, p = 0.039) and between the CtrlRand and CtrlSelfgen conditions (t(10) = 3.88, p = 0.003). The latencies were greater in the Exp and CtrlRand conditions, respectively.

3.3. Discussion

We conducted Experiment 2 in order to obtain more information on how participants perceived the sound in addition to the distance estimates. We expected to obtain the usual auditory N1 suppression for the Act condition relative to the Exp condition. We also hypothesized that the relation between latencies would be analogous in the Act/Exp and CtrlSelfgen/CtrlRand pairs of conditions. Normally, the N1 component of the ERP in response to a sound accompanying an action has a smaller amplitude than the response to an exogenous sound. Our first hypothesis was not confirmed, as no significant difference in the N1-P2 inter-peak amplitude was found between the Act and Exp conditions, whereas for the conditions without wheelchair movement, the classical auditory N1 suppression for self-generated sounds was reproduced.

Examining the latencies of the N1 component, we saw that they were significantly greater in the CtrlRand condition than in the CtrlSelfgen condition. In other words, in the condition where the participant caused the sound directly by their action, the latency of the component was smaller. As we expected, a similar pattern was found in the Act and Exp conditions: the latencies were significantly greater in the Exp condition, which corresponds to the CtrlRand condition, as the sound is exogenous in both instances. This result indicates that the sound was perceived as, at least partly, self-generated in the Act condition. In summary, the results of this EEG study imply that the explanation of the effect obtained in Experiment 1 should not be reduced to a high level of attention prior to the sound. We will integrate the results of both experiments in the General Discussion.

4. General Discussion

In this study, we examined possible objective indicators of the sense of agency experienced by a user of a robotic wheelchair. The main feature of the action examined in our study was the participant’s relocation during the course of action, which has not yet been considered in SoA studies. Accordingly, the participant estimated the spatial displacement of their whole body rather than that of their body parts or external objects. The action performed by the participant was time extended: the task of the participant was to drive forward in the wheelchair, and to do this, they pressed a button on the joystick and tilted it forward. Time-extended actions are more common in real-life settings and are closer to the real situation of long-term control of a device than the simple button-pushing action favored by experimenters. Finally, in this study, we tried to equalize the predictability of events in the main experimental conditions, in order to exclude variations in predictability as a probable explanation of the obtained effect. As in many other studies of effects from the intentional binding (IB) family, in our design, the action was the cause of a sound. However, sound timing was largely unpredictable, as it was bound to an initially unknown distance that the participant traveled.

In Experiment 1, we investigated a less-well-studied variety of IB called spatial binding. It should be specified that our methodology was different from the one used by other authors [25,26]. In our experiment, the participant’s position changed during the course of the action. Moreover, the participant estimated the distance traveled over a period of time, rather than the distance between two static objects. We found an unexpected effect: distance estimates were greater for the participant’s voluntary actions as compared to uncontrolled wheelchair movements. The participant drove the wheelchair on their own in the Act condition, while in the Exp condition, it was manually guided by the experimenter using the same joystick, and in the Rem condition, the wheelchair was controlled remotely. We believe the role of the sound in the experiment to be one of the likely reasons for this result. The sound served not only as a result of an action, but also as a signal to stop the wheelchair. This feature of the method sets it apart from previously known studies. Should the stopping of the wheelchair be considered only as completion of the action, or is it a separate consequent action? This question largely relates to the problem of action individuation in the continuum of behavior. Either way, the instruction to release the button on the joystick after the sound may have increased the participant’s attention to ongoing events. It is known that, under certain conditions, an increase in participant attention may lead to a subjective expansion of time.

It should be noted that the involvement of attention in the explanation does not really affect the significance of the result obtained. Rather, what matters is the reason why the participant’s attention is supposedly increased in the Act condition. If the participant is indeed more concentrated while waiting for the sound in the Act condition, why is this the case? We considered two alternative explanations. On the one hand, waiting for the sound could have nothing to do with controlling the wheelchair before the sound: the participant could simply be waiting for it in order to perform the action of releasing the joystick. On the other hand, the participant was told that the sound notified them of the completion of a task; therefore, it was a call to interrupt the action. In light of that, preparation for the sound could be coupled with constant control of the situation, implying that in this case, increased attention accompanies SoA. In order to corroborate either explanation, we decided to supplement information about the participant’s state with a minor EEG study. Importantly, our behavioral study has one serious methodological limitation. It lacked a condition where the wheelchair would move automatically and the participant would be instructed to stop it after the sound. If such a condition had been included, we would have a better understanding of whether the causes of the sound were necessary to produce the effect.

In Experiment 2, we tested a reliable indicator of SoA: the N1 component of the auditory ERP. It is known that attention to a sound affects the parameters of the component: its latency and amplitude increase. Voluntary control over the sound, on the contrary, leads to a decrease in the amplitude. We added two control conditions to the procedure, with participants listening to either random sounds (CtrlRand) or self-generated sounds (CtrlSelfgen). These conditions were included to reproduce the classical result for N1-P2 amplitude suppression and to compare the latencies of the component in these conditions to those in the Act and Exp conditions. Ultimately, the N1 suppression in the CtrlSelfgen condition was replicated, and the latencies were significantly smaller in the CtrlSelfgen condition. The amplitudes did not differ significantly between the Act and Exp conditions, and were even slightly greater in the Exp condition. If attention were to play a critical role in the response to the sound, one would expect significantly higher amplitudes in the Act condition. In addition, the amplitudes in the Act and Exp conditions occupied an intermediate position in magnitude between the amplitudes in the control conditions. However, it should be noted here that comparing the ERP parameters in the conditions with a static wheelchair with those in the conditions where it is moving is not entirely appropriate. A significant difference between the Act and Exp conditions emerged in the latencies of the N1 component: the latencies were significantly smaller in the Act condition. This difference is similar to that observed for the control conditions. In sum, the results obtained for the latencies support the view that participants perceived the sound in the Act condition as self-generated, at least to some extent. This assertion would be more robust if a significant reduction in the N1 amplitude had been obtained in the Act condition.

Accordingly, we believe it is reasonable to conclude that the difference between the main conditions in Experiment 1 is best explained by the presence of the participant’s SoA in the Act condition and its absence in the Exp and Rem conditions. Even if the participants did not think their actions were causing the sound, they nevertheless believed they were in control of the wheelchair in the Act condition. The sense of control scores in the respective conditions confirm this thesis, and the EEG study corroborates it further. The effect associated with distance estimates that we identified can potentially be used to assess the involvement of the agent in controlling various means of transportation. In particular, this indicator may provide a way to compare different ways of piloting a vehicle in terms of perceived control. However, this study had several important features that set it apart from similar experiments. It is important that the effect be investigated further and shown to be reproducible under differing conditions. Currently, it is impossible to say exactly why the distance estimation effect was inverted in our design.

5. Conclusions

In this study, we examined the relationship between a participant’s sense of agency and spatial perception during movement in a robotic wheelchair. We discovered that participants tended to give greater estimates of distance when they were driving the wheelchair. The observed effect is a variety of intentional binding, an implicit indicator of the sense of agency. An auxiliary EEG study and direct ratings of the sense of agency imply that participants perceived events in the experiment as being under their control when they were instructed to operate the wheelchair. The increase in estimates in the active condition runs counter to earlier findings on the influence of the sense of agency on the perception of space and time. The exact mechanisms of the observed effect are unknown, and further research is needed to determine them. It would also be valuable to find more information about the exact conditions under which the effect occurs, e.g., whether it persists if the participant is asked to stop the wheelchair when it is moving on its own.

Regardless of the mechanisms of the effect, we proposed a model for studying SoA in a situation where the action is directed at the relocation of the agent. This model might be useful in studying SoA in an important area of human–machine interaction: the control of semi-autonomous vehicles. For example, we suggest that this model might help assess the quality of robotic wheelchair interfaces, above all the degree of control that a person experiences when operating the device. This is essential because having a sense of control (an aspect of SoA) is a necessary condition for the successful operation of a device. Like other effects related to intentional binding, our technique can be implemented by simple means, as it only involves distance estimation. Currently, the applicability of our methodology has a serious limitation: so far, we do not know whether it can distinguish between different gradations of the sense of agency. To clarify this, studies are needed in which full control of the wheelchair is compared with defective or partial control. After the technique is tested in these conditions, it will become suitable for practical use.

Author Contributions

Conceptualization, A.S.Y. and I.A.D.; methodology, A.S.Y.; software, A.D.M.; formal analysis, D.G.Z., D.S.Y. and I.A.N.; investigation, A.N.S. and A.S.Y.; resources, A.D.M.; writing—original draft preparation, A.S.Y. and D.G.Z.; writing—review and editing, S.L.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the NRC “Kurchatov Institute”, order No. 2754/28.10.2021.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Ethics Committee of the NRC “Kurchatov Institute” (13 February 2020).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to privacy restrictions.

Acknowledgments

The authors thank Anatoly N. Vasilyev for useful suggestions related to data analysis.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Gallagher, S. Philosophical Conceptions of the Self: Implications for Cognitive Science. Trends Cogn. Sci. 2000, 4, 14–21.

- Pacherie, E. The Sense of Control and the Sense of Agency. Psyche 2007, 13, 1–30.

- Seghezzi, S.; Convertino, L.; Zapparoli, L. Sense of Agency Disturbances in Movement Disorders: A Comprehensive Review. Conscious. Cogn. 2021, 96, 103228.

- Moore, J.W. What Is the Sense of Agency and Why Does It Matter? Front. Psychol. 2016, 7, 1272.

- Laffont, I.; Biard, N.; Chalubert, G.; Delahoche, L.; Marhic, B.; Boyer, F.C.; Leroux, C. Evaluation of a Graphic Interface to Control A Robotic Grasping Arm: A Multicenter Study. Arch. Phys. Med. Rehabil. 2009, 90, 1740–1748.

- Petrini, F.M.; Valle, G.; Bumbasirevic, M.; Barberi, F.; Bortolotti, D.; Cvancara, P.; Hiairrassary, A.; Mijovic, P.; Sverrisson, A.Ö.; Pedrocchi, A.; et al. Enhancing Functional Abilities and Cognitive Integration of the Lower Limb Prosthesis. Sci. Transl. Med. 2019, 11, eaav8939.

- Huang, Q.; Chen, Y.; Zhang, Z.; He, S.; Zhang, R.; Liu, J.; Zhang, Y.; Shao, M.; Li, Y. An EOG-Based Wheelchair Robotic Arm System for Assisting Patients with Severe Spinal Cord Injuries. J. Neural Eng. 2019, 16, 026021.

- Anjum, A.; Yazid, M.D.; Daud, M.F.; Idris, J.; Hwei Ng, A.M.; Naicker, A.S.; Rashidah Ismail, O.H.; Kumar, R.K.A.; Lokanathan, Y. Spinal Cord Injury: Pathophysiology, Multimolecular Interactions, and Underlying Recovery Mechanisms. Int. J. Mol. Sci. 2020, 21, 7533.

- Shneiderman, B. Designing the User Interface Strategies for Effective Human-Computer Interaction. ACM SIGBIO Newsl. 1987, 9, 6.

- Wen, W.; Yun, S.; Yamashita, A.; Northcutt, B.D.; Asama, H. Deceleration Assistance Mitigated the Trade-off Between Sense of Agency and Driving Performance. Front. Psychol. 2021, 12, 1231.

- Yun, S.; Wen, W.; An, Q.; Hamasaki, S.; Yamakawa, H.; Tamura, Y.; Yamashita, A.; Asama, H. Investigating the Relationship Between Assisted Driver’s SoA and EEG. In Proceedings of the International Conference on NeuroRehabilitation, Pisa, Italy, 16–20 October 2018; Biosystems and Biorobotics. Springer: Berlin/Heidelberg, Germany, 2019; Volume 21, pp. 1039–1043.

- Bayne, T.; Pacherie, E. Narrators and Comparators: The Architecture of Agentive Self-Awareness. Synthese 2007, 159, 475–491.

- Synofzik, M.; Vosgerau, G.; Newen, A. Beyond the Comparator Model: A Multifactorial Two-Step Account of Agency. Conscious. Cogn. 2008, 17, 219–239.

- Dewey, J.A.; Knoblich, G. Do Implicit and Explicit Measures of the Sense of Agency Measure the Same Thing? PLoS ONE 2014, 9, e110118.

- Braun, N.; Thorne, J.D.; Hildebrandt, H.; Debener, S. Interplay of Agency and Ownership: The Intentional Binding and Rubber Hand Illusion Paradigm Combined. PLoS ONE 2014, 9, e111967.

- Haggard, P.; Clark, S.; Kalogeras, J. Voluntary Action and Conscious Awareness. Nat. Neurosci. 2002, 5, 382–385.

- Libet, B.; Gleason, C.A.; Wright, E.W.; Pearl, D.K. Time of Conscious Intention to Act in Relation to Onset of Cerebral Activity (Readiness-Potential): The Unconscious Initiation of a Freely Voluntary Act. Brain 1983, 106, 623–642.

- Engbert, K.; Wohlschläger, A.; Haggard, P. Who Is Causing What? The Sense of Agency Is Relational and Efferent-Triggered. Cognition 2008, 107, 693–704.

- Coyle, D.; Moore, J.; Kristensson, P.O.; Fletcher, P.C.; Blackwell, A.F. I Did That! Measuring Users’ Experience of Agency in Their Own Actions. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Austin, TX, USA, 5–10 May 2012; pp. 2025–2034.

- Limerick, H.; Coyle, D.; Moore, J.W. The Experience of Agency in Human-Computer Interactions: A Review. Front. Hum. Neurosci. 2014, 8, 643.

- Berberian, B.; Sarrazin, J.C.; Le Blaye, P.; Haggard, P. Automation Technology and Sense of Control: A Window on Human Agency. PLoS ONE 2012, 7, e34075.

- Kirsch, W.; Kunde, W.; Herbort, O. Intentional Binding Is Unrelated to Action Intention. J. Exp. Psychol. Hum. Percept. Perform. 2019, 45, 378–385.

- Frith, C.D.; Blakemore, S.J.; Wolpert, D.M. Abnormalities in the Awareness and Control of Action. Philos. Trans. R. Soc. B Biol. Sci. 2000, 355, 1771–1788.

- Hughes, G.; Desantis, A.; Waszak, F. Mechanisms of Intentional Binding and Sensory Attenuation: The Role of Temporal Prediction, Temporal Control, Identity Prediction, and Motor Prediction. Psychol. Bull. 2013, 139, 133–151.

- Kirsch, W.; Pfister, R.; Kunde, W. Spatial Action-Effect Binding. Atten. Percept. Psychophys. 2016, 78, 133–142.

- Buehner, M.J.; Humphreys, G.R. Causal Contraction: Spatial Binding in the Perception of Collision Events. Psychol. Sci. 2010, 21, 44–48.

- Haggard, P. Sense of Agency in the Human Brain. Nat. Rev. Neurosci. 2017, 18, 196–207.

- Schafer, E.W.P.; Marcus, M.M. Self-Stimulation Alters Human Sensory Brain Responses. Science 1973, 181, 175–177.

- Bäß, P.; Jacobsen, T.; Schröger, E. Suppression of the Auditory N1 Event-Related Potential Component with Unpredictable Self-Initiated Tones: Evidence for Internal Forward Models with Dynamic Stimulation. Int. J. Psychophysiol. 2008, 70, 137–143.

- Lange, K. The Reduced N1 to Self-Generated Tones: An Effect of Temporal Predictability? Psychophysiology 2011, 48, 1088–1095.

- Karpov, V.E.; Malakhov, D.G.; Moscowsky, A.D.; Rovbo, M.A.; Sorokoumov, P.S.; Velichkovsky, B.M.; Ushakov, V.L. Architecture of a Wheelchair Control System for Disabled People: Towards Multifunctional Robotic Solution with Neurobiological Interfaces. Sovrem. Tehnol. Med. 2019, 11, 90–100.

- Sorokoumov, P.S.; Rovbo, M.A.; Moscowsky, A.D.; Malyshev, A.A. Robotic Wheelchair Control System for Multimodal Interfaces Based on a Symbolic Model of the World. In Smart Electromechanical Systems; Springer: Cham, Switzerland, 2021; Volume 352, pp. 163–183.

- Obhi, S.S.; Hall, P. Sense of Agency and Intentional Binding in Joint Action. Exp. Brain Res. 2011, 211, 655–662.

- Lewis, D. Causation. J. Philos. 1973, 70, 556–567.

- Tse, P.U.; Intriligator, J.; Rivest, J.; Cavanagh, P. Attention and the Subjective Expansion of Time. Percept. Psychophys. 2004, 66, 1171–1189.

- Ford, J.M.; Roth, W.T.; Kopell, B.S. Attention Effects on Auditory Evoked Potentials to Infrequent Events. Biol. Psychol. 1976, 4, 65–77.

- Thornton, A.R.D.; Harmer, M.; Lavoie, B.A. Selective Attention Increases the Temporal Precision of the Auditory N100 Event-Related Potential. Hear. Res. 2007, 230, 73–79.

- Delorme, A.; Makeig, S. EEGLAB: An Open Source Toolbox for Analysis of Single-Trial EEG Dynamics Including Independent Component Analysis. J. Neurosci. Methods 2004, 134, 9–21.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).