An Intelligent Penetration Test Simulation Environment Construction Method Incorporating Social Engineering Factors

Abstract

1. Introduction

- Challenge 1: Mistakes are inevitable in manual penetration tests. Social engineering is an effective way to exploit human weaknesses, yet existing simulation environments do not incorporate it.

- Challenge 2: The existing simulation environment is based on the traditional network graph model, which only includes the basic elements and connection relations of the network and does not incorporate the security attributes and elements related to penetration tests.

- To improve the existing network graph model by integrating the security-related attributes and elements of a penetration test, we propose an improved network graph model for penetration tests (NMPT), which better describes the penetration test process.

- To expand the penetration tester agent's actions and incorporate social engineering factors into the intelligent penetration test, we propose an intelligent penetration test simulation environment construction method based on social engineering factors (SE-AIPT). Integrating social engineering actions gives the penetration tester agent additional ways to discover attack paths during a penetration test. The resulting environment is an interactive penetration test simulation platform on which RL algorithms can train penetration tester agents.

2. Background

2.1. Manual Penetration Test and AI-Driven Penetration Test

2.2. Intelligent Penetration Test Simulation Environment

3. Methods

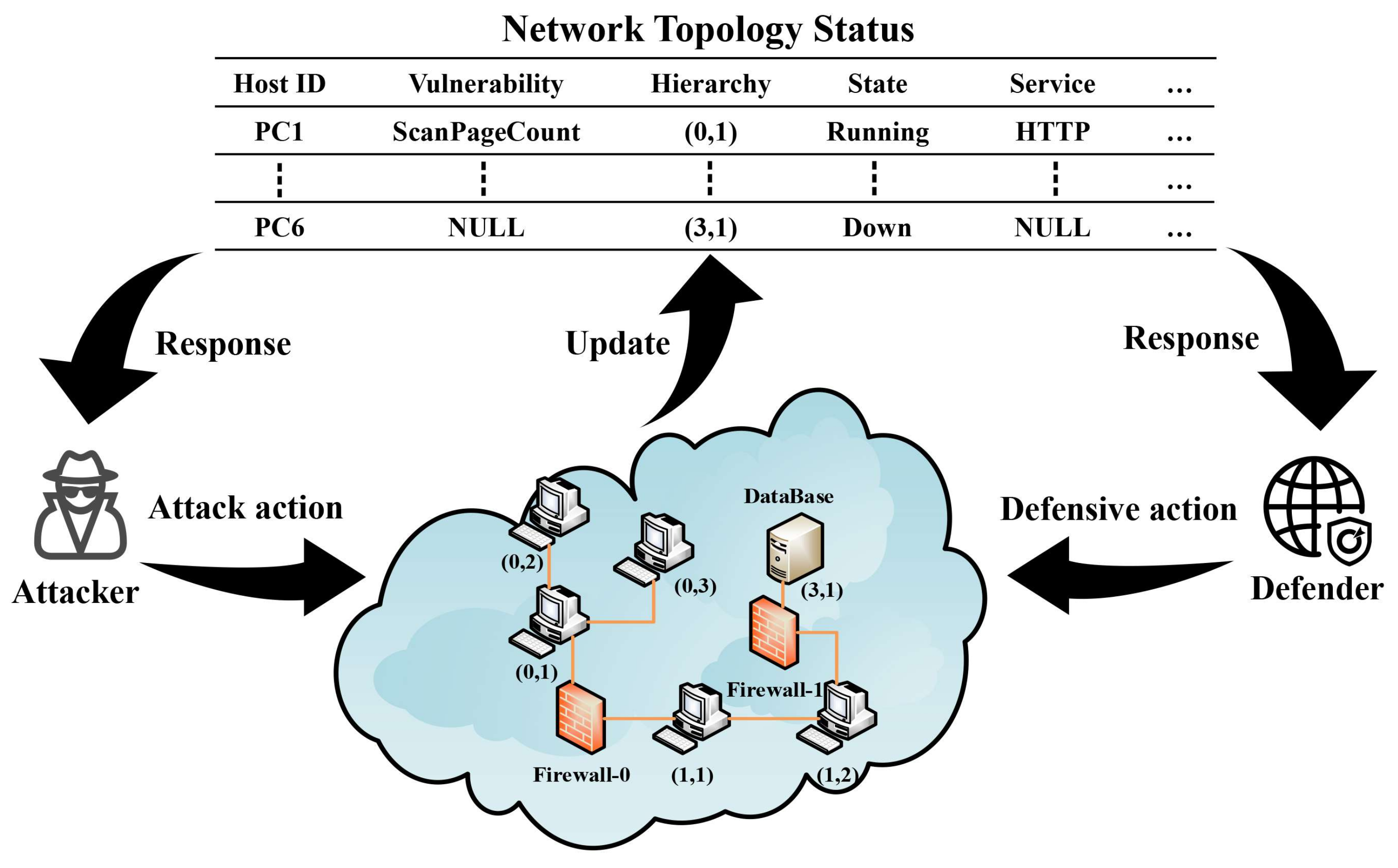

3.1. Network Graph Model for Penetration Test

3.1.1. Definition of the Model

- (1) Node identification

- (2) Node type

- (3) Node properties

- (1) Edge identification

- (2) Edge type
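The node and edge attributes listed above can be sketched as a small data structure. This is a minimal illustration only, not the authors' NMPT implementation: the class names, the `NodeType` and `EdgeType` values, and the example property keys are all assumptions introduced here.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Any, Dict, List


class NodeType(Enum):
    # Hypothetical node types; the paper's actual type set may differ.
    HOST = "host"
    PERSON = "person"


class EdgeType(Enum):
    # Hypothetical edge types; the paper's actual type set may differ.
    NETWORK = "network"
    SOCIAL = "social"


@dataclass
class Node:
    node_id: str                                              # node identification
    node_type: NodeType                                       # node type
    properties: Dict[str, Any] = field(default_factory=dict)  # node properties


@dataclass(frozen=True)
class Edge:
    edge_id: str         # edge identification
    edge_type: EdgeType  # edge type
    source: str          # node_id of one endpoint
    target: str          # node_id of the other endpoint


@dataclass
class NetworkGraph:
    nodes: Dict[str, Node] = field(default_factory=dict)
    edges: Dict[str, Edge] = field(default_factory=dict)

    def add_node(self, node: Node) -> None:
        self.nodes[node.node_id] = node

    def add_edge(self, edge: Edge) -> None:
        # Reject edges whose endpoints are not in the graph yet.
        if edge.source not in self.nodes or edge.target not in self.nodes:
            raise KeyError("both endpoints must be added before the edge")
        self.edges[edge.edge_id] = edge

    def neighbors(self, node_id: str, edge_type: EdgeType) -> List[str]:
        # Edges are treated as undirected for this lookup.
        result = []
        for e in self.edges.values():
            if e.edge_type is not edge_type:
                continue
            if e.source == node_id:
                result.append(e.target)
            elif e.target == node_id:
                result.append(e.source)
        return result


graph = NetworkGraph()
graph.add_node(Node("web01", NodeType.HOST, {"os": "Linux"}))
graph.add_node(Node("db01", NodeType.HOST, {"os": "Linux"}))
graph.add_node(Node("alice", NodeType.PERSON, {"role": "administrator"}))
graph.add_node(Node("bob", NodeType.PERSON, {"role": "developer"}))
graph.add_edge(Edge("e1", EdgeType.NETWORK, "web01", "db01"))
graph.add_edge(Edge("e2", EdgeType.SOCIAL, "alice", "bob"))
```

Keeping the security-related attributes in a free-form `properties` mapping is one possible design choice; a stricter schema per node type would work equally well.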

3.1.2. The Role of NMPT in a Penetration Test

3.2. Social Engineering Factor Extended Intelligent Penetration Test Model

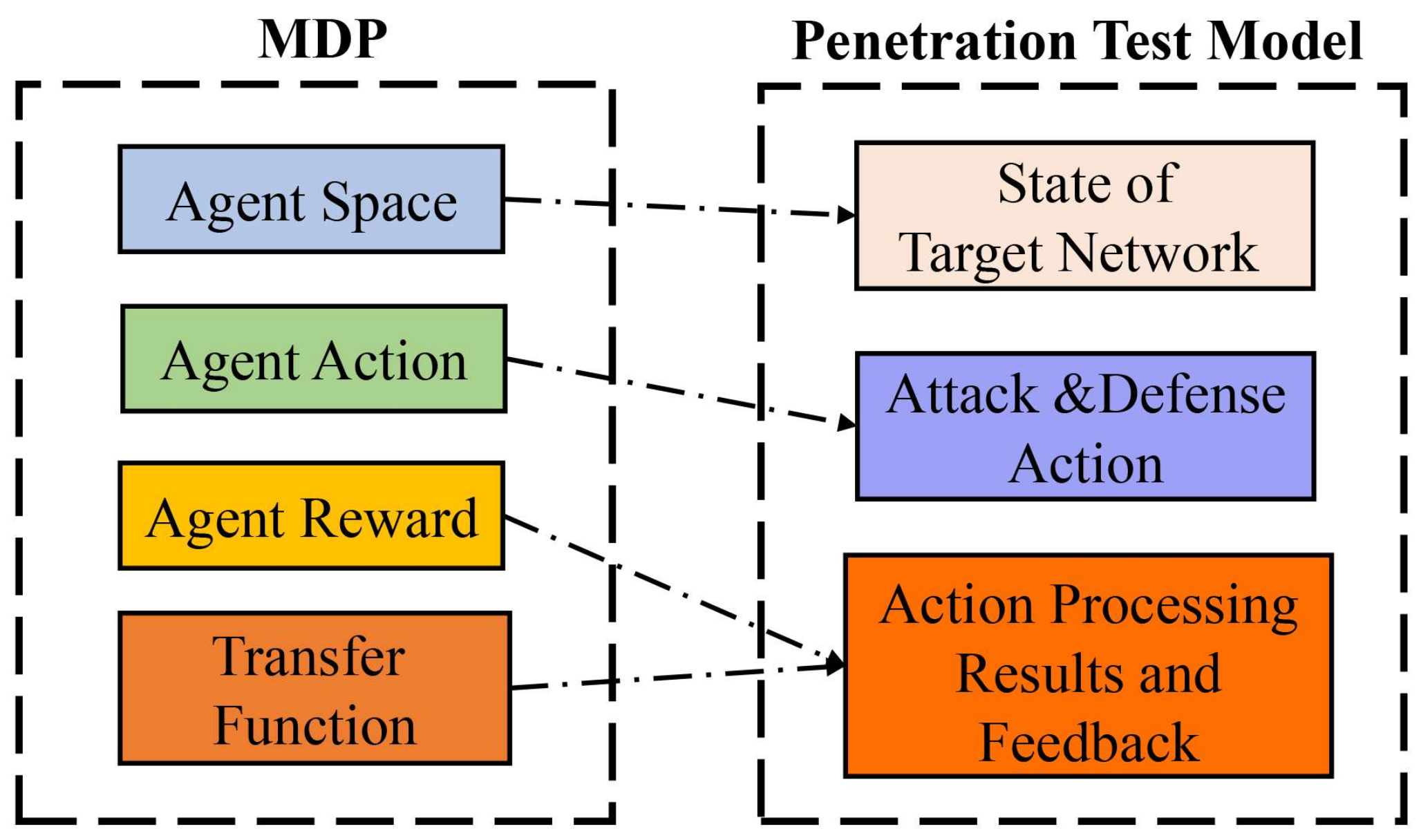

3.2.1. Penetration Test Model

- (1) Role in the penetration test model

- (2) Action in the penetration test model

- (3) Target in the penetration test model

- (4) Observation in the penetration test model

- Whether the current penetration tester action is successful.

- The network status changes caused by the success or failure of the action.

- How the current state change rewards or penalizes the penetration tester and the defender.

- Whether the penetration test target has been achieved or the penetration tester has abandoned the test on the target network.
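The four observation components listed above can be captured in a single record returned after each action. The sketch below is an assumption-laden illustration: the `StepObservation` class, the `observe` helper, the reward magnitudes, and the zero-sum treatment of the defender's reward are all hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class StepObservation:
    action_succeeded: bool     # whether the current action succeeded
    state_changes: List[str]   # network status changes caused by the action
    rewards: Dict[str, float]  # reward signal for tester and defender
    done: bool                 # target achieved or tester gave up


def observe(action_succeeded: bool, newly_owned: List[str], target: str,
            gave_up: bool = False) -> StepObservation:
    # Hypothetical reward values, chosen only for illustration.
    tester_reward = 10.0 * len(newly_owned) if action_succeeded else -1.0
    return StepObservation(
        action_succeeded=action_succeeded,
        state_changes=[f"owned:{node}" for node in newly_owned],
        # Assumption: the defender's reward mirrors the tester's (zero-sum).
        rewards={"tester": tester_reward, "defender": -tester_reward},
        done=gave_up or target in newly_owned,
    )


success = observe(True, ["db01"], target="db01")
failure = observe(False, [], target="db01")
```

The same four fields map naturally onto the observation, reward, and termination values that an RL environment returns from each step.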

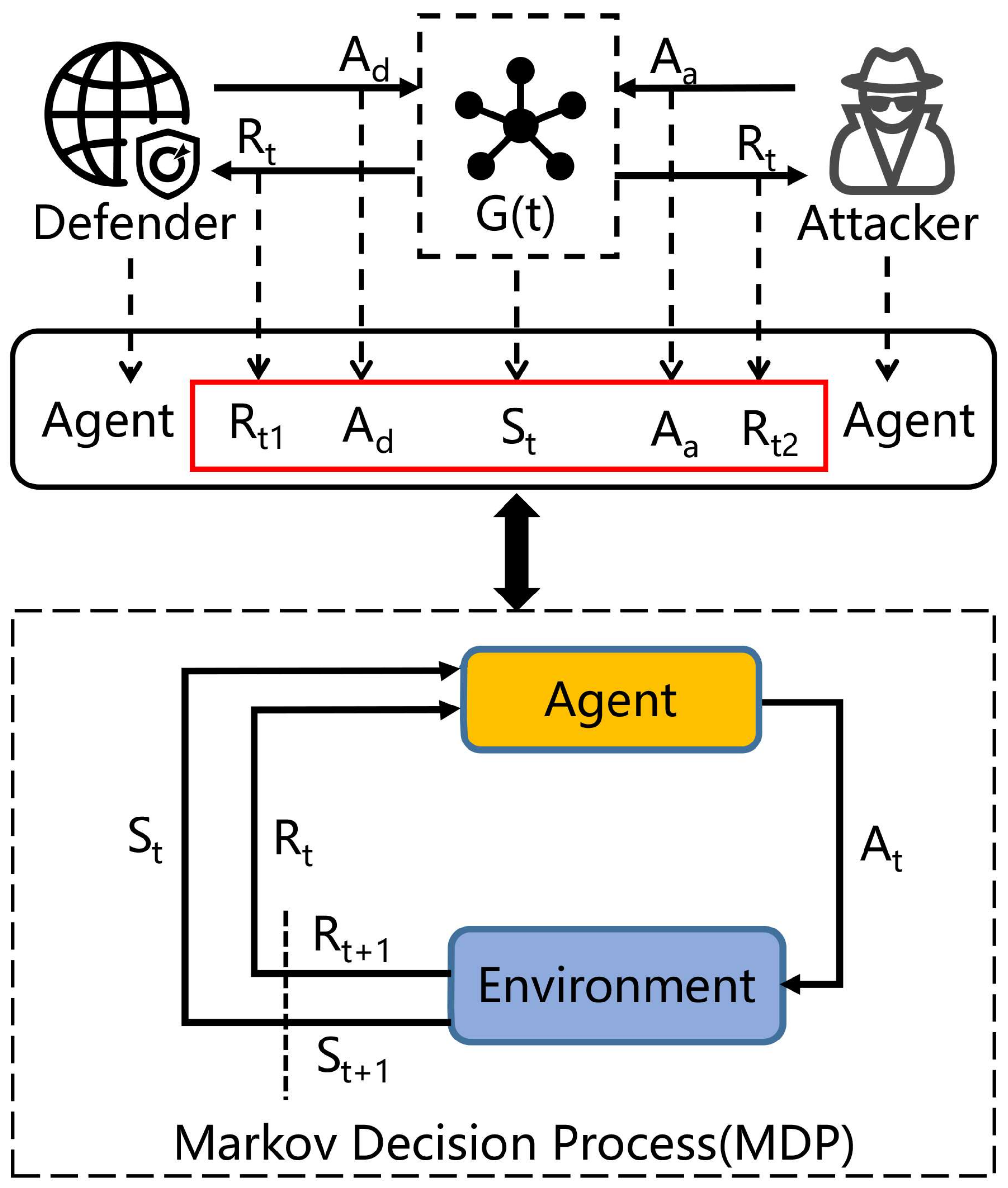

3.2.2. Process Description of the Penetration Test Model

3.2.3. Intellectualization of the Penetration Test Model

3.3. The Simulation Environment Construction of SE-AIPT

- a. Simulation model component

- b. Environment generation and registration

- c. Artificial intelligence algorithm

- Step 1: Add social engineering action types to the definition of the agent action model.

- Step 2: Define the exploitation results of social engineering actions and the corresponding reward and penalty values. The exploitation results of social engineering actions include connection credential leakage and host node leakage.

- Step 3: Add an independent undirected graph structure to represent the social network composed of person nodes, and each node in the undirected graph contains attributes and asset information of the person.

- Step 1: Define the global identifiers and the global maximum number of actions for social engineering action types.

- Step 2: Define the parameters of the social engineering action, the size of the action space, and the action bitmask that the environment applies when processing actions.

- Step 3: Define a bitmask validation method with which the environment processes social engineering actions, determining whether the social engineering action being performed lies within the action space and whether the current target node satisfies the social engineering conditions.

- Step 4: Generate the network scenario and register the environment.
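The steps above can be sketched in a few lines: an undirected graph of person nodes, a bitmask over social engineering actions, and a validation check. This is a minimal sketch under stated assumptions, not the SE-AIPT code. The names `SOCIAL_ENGINEERING_MEANS`, `MAX_PERSON_COUNT`, `Person`, `SocialGraph`, `action_mask`, and `is_valid` are hypothetical, and the validity condition used here (the target person has been discovered and is susceptible to the chosen means) is an assumed stand-in for the paper's social engineering conditions.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set

# Hypothetical global identifiers and limits for the social engineering action type.
SOCIAL_ENGINEERING_MEANS = ["phishing_email", "pretext_call"]
MAX_PERSON_COUNT = 4


@dataclass
class Person:
    name: str
    susceptible_to: Set[str] = field(default_factory=set)
    # Results of a successful action: leaked credentials or leaked host nodes.
    leaked_credentials: List[str] = field(default_factory=list)
    leaked_hosts: List[str] = field(default_factory=list)


class SocialGraph:
    """Undirected graph of person nodes, stored as an adjacency map."""

    def __init__(self) -> None:
        self.people: Dict[str, Person] = {}
        self.adjacent: Dict[str, Set[str]] = {}

    def add_person(self, person: Person) -> None:
        self.people[person.name] = person
        self.adjacent.setdefault(person.name, set())

    def connect(self, a: str, b: str) -> None:
        # Undirected: record the relation in both directions.
        self.adjacent[a].add(b)
        self.adjacent[b].add(a)


def action_mask(graph: SocialGraph, index: List[str],
                discovered: Set[str]) -> List[List[int]]:
    """Return mask[target][means], where 1 marks a valid action."""
    mask = [[0] * len(SOCIAL_ENGINEERING_MEANS) for _ in range(MAX_PERSON_COUNT)]
    for t, name in enumerate(index[:MAX_PERSON_COUNT]):
        if name not in discovered:
            continue  # undiscovered persons cannot be targeted
        for m, means in enumerate(SOCIAL_ENGINEERING_MEANS):
            if means in graph.people[name].susceptible_to:
                mask[t][m] = 1
    return mask


def is_valid(mask: List[List[int]], target: int, means: int) -> bool:
    # First check that the action lies inside the action space, then consult the mask.
    in_range = 0 <= target < len(mask) and 0 <= means < len(mask[0])
    return in_range and mask[target][means] == 1


social = SocialGraph()
social.add_person(Person("alice", {"phishing_email"}, leaked_credentials=["db01:ssh"]))
social.add_person(Person("bob", {"pretext_call"}, leaked_hosts=["web02"]))
social.connect("alice", "bob")
person_index = ["alice", "bob"]
mask = action_mask(social, person_index, discovered={"alice"})
```

Padding the mask to `MAX_PERSON_COUNT` keeps the action space at a fixed size, which is what most RL algorithms expect, while the mask itself rules out actions that are out of range or aimed at an ineligible target.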

4. Experiments

4.1. Experiment Environment and Process

4.2. Basic Settings for Using the RL Method

4.2.1. Agent State

4.2.2. Agent Action

4.2.3. Reward

4.3. Baseline

- Random: The selection of agent actions is not relevant to the agent state. An agent could randomly select actions to interact with the environment; the feedback and reward of the environment have no influence on the agent’s choice of the next action.

- Tabular Q-learning: Tabular Q-learning is a value-based RL algorithm. The main idea is to construct a table of states and actions that stores Q values and then select the action with the maximum Q value in the current state. However, for RL tasks with high-dimensional state and action spaces, the table cannot store all state-action pairs, which limits the performance of the algorithm.

- DQL: DQL combines deep learning and reinforcement learning to address the problem of storing a large state-action space. DQL uses a neural network to replace the Q-value table of Tabular Q-learning. Thus, the original convergence problem of the action value function is transformed into a function-fitting problem for a neural network. DQL is a representative work in the field of DRL.
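To make the Tabular Q-learning baseline concrete, the following sketch applies the standard update $Q(s,a) \leftarrow Q(s,a) + \alpha\,[r + \gamma \max_{a'} Q(s',a') - Q(s,a)]$ with epsilon-greedy action selection. The five-state chain environment, its rewards, and the hyperparameter values are assumptions chosen for illustration; they are not the paper's scenario or settings.

```python
import random
from collections import defaultdict

N_STATES = 5      # toy chain: states 0..4, reaching state 4 ends the episode
ACTIONS = [0, 1]  # 0 = stay, 1 = advance


def step(state: int, action: int):
    """Toy environment transition: returns (next_state, reward, done)."""
    next_state = min(state + action, N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 10.0 if done else -1.0
    return next_state, reward, done


def train(episodes: int = 500, alpha: float = 0.1, gamma: float = 0.9,
          epsilon: float = 0.1, seed: int = 0):
    rng = random.Random(seed)
    q = defaultdict(float)  # Q-table keyed by (state, action), default 0.0
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: explore with probability epsilon, else exploit.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])
            next_state, reward, done = step(state, action)
            # Terminal states contribute no future value.
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            # Q-learning update toward the bootstrapped target.
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q


q_table = train()
greedy_policy = [max(ACTIONS, key=lambda a: q_table[(s, a)]) for s in range(N_STATES - 1)]
```

DQL keeps the same update target but replaces the `q` table with a neural network that maps a state to the Q values of all actions, so the table no longer has to enumerate every state-action pair.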

4.4. Experimental Settings and Results

4.4.1. Experiment A

4.4.2. Experiment B

4.4.3. Experiment C

4.5. Result Analysis

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Action | SourceID | TargetID | Vulnerability or Means | Additional Parameters |
|---|---|---|---|---|
| Local | ✓ | ✗ | ✓ | ✗ |
| Remote | ✓ | ✓ | ✓ | ✗ |
| Connect | ✓ | ✓ | ✓ | ✓ |
| Social Engineering | ✗ | ✓ | ✓ | ✗ |

| Hyperparameters | DQL | Tabular Q-Learning | Random |
|---|---|---|---|
| Batch Size | 32 | * | * |
| Learning Rate | 0.01 | 0.01 | * |
| Epsilon | 0.9 | 0.9 | * |
| Discount Factor | 0.015 | 0.015 | * |
| Replay Memory Size | 10,000 | * | * |
| Target Network Update Frequency | 10 | * | * |

| Hyperparameters | DQL | Tabular Q-Learning | Random |
|---|---|---|---|
| Max Steps per Episode | 6000 | 6000 | 6000 |
| Episodes | 150 | 150 | 50 |

| Algorithm | Scenario | Goal | Number of Steps |
|---|---|---|---|
| DQL | CyberBattleChain with Social Engineering | Capture the Flag | 343 |
| Tabular Q-learning | CyberBattleChain with Social Engineering | Capture the Flag | 821 |
| Random | CyberBattleChain with Social Engineering | Capture the Flag | 1337 |
| DQL | CyberBattleChain | Capture the Flag | 1025 |
| Tabular Q-learning | CyberBattleChain | Capture the Flag | 1232 |
| Random | CyberBattleChain | Capture the Flag | 1145 |

| Algorithm | Scenario | Goal | Number of Steps |
|---|---|---|---|
| DQL | CyberBattleChain-10 with Social Engineering | Capture the Flag | 187 |
| DQL | CyberBattleChain-10 | Capture the Flag | 623 |
| DQL | CyberBattleChain-20 with Social Engineering | Capture the Flag | 778 |
| DQL | CyberBattleChain-20 | Capture the Flag | 1121 |

| Hyperparameters | DQL | Tabular Q-Learning | Random |
|---|---|---|---|
| Max Steps per Episode | 6000 | 6000 | 6000 |
| Episodes | 150 | 150 | 50 |

| Algorithm | Scenario | Goal | Number of Steps |
|---|---|---|---|
| DQL | CyberBattleChain-10 with Social Engineering | Fixed reward value | 210 |
| Tabular Q-learning | CyberBattleChain-10 with Social Engineering | Fixed reward value | 670 |
| Random | CyberBattleChain-10 with Social Engineering | Fixed reward value | 798 |

| Algorithm | Scenario | Goal | Number of Steps |
|---|---|---|---|
| DQL | CyberBattleChain-20 with Social Engineering | Fixed reward value | 1210 |
| Tabular Q-learning | CyberBattleChain-20 with Social Engineering | Fixed reward value | 3278 |
| Random | CyberBattleChain-20 with Social Engineering | Fixed reward value | 3488 |

| Hyperparameters | DQL | Tabular Q-Learning | Random |
|---|---|---|---|
| Max Steps per Episode | 7000 | 9000 | 13,000 |
| Episodes | 50 | 50 | 50 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, Y.; Wang, Y.; Xiong, X.; Zhang, J.; Yao, Q. An Intelligent Penetration Test Simulation Environment Construction Method Incorporating Social Engineering Factors. Appl. Sci. 2022, 12, 6186. https://doi.org/10.3390/app12126186
