Agreement of the Discrepancy Index Obtained Using Digital and Manual Techniques—A Comparative Study

Abstract

1. Introduction

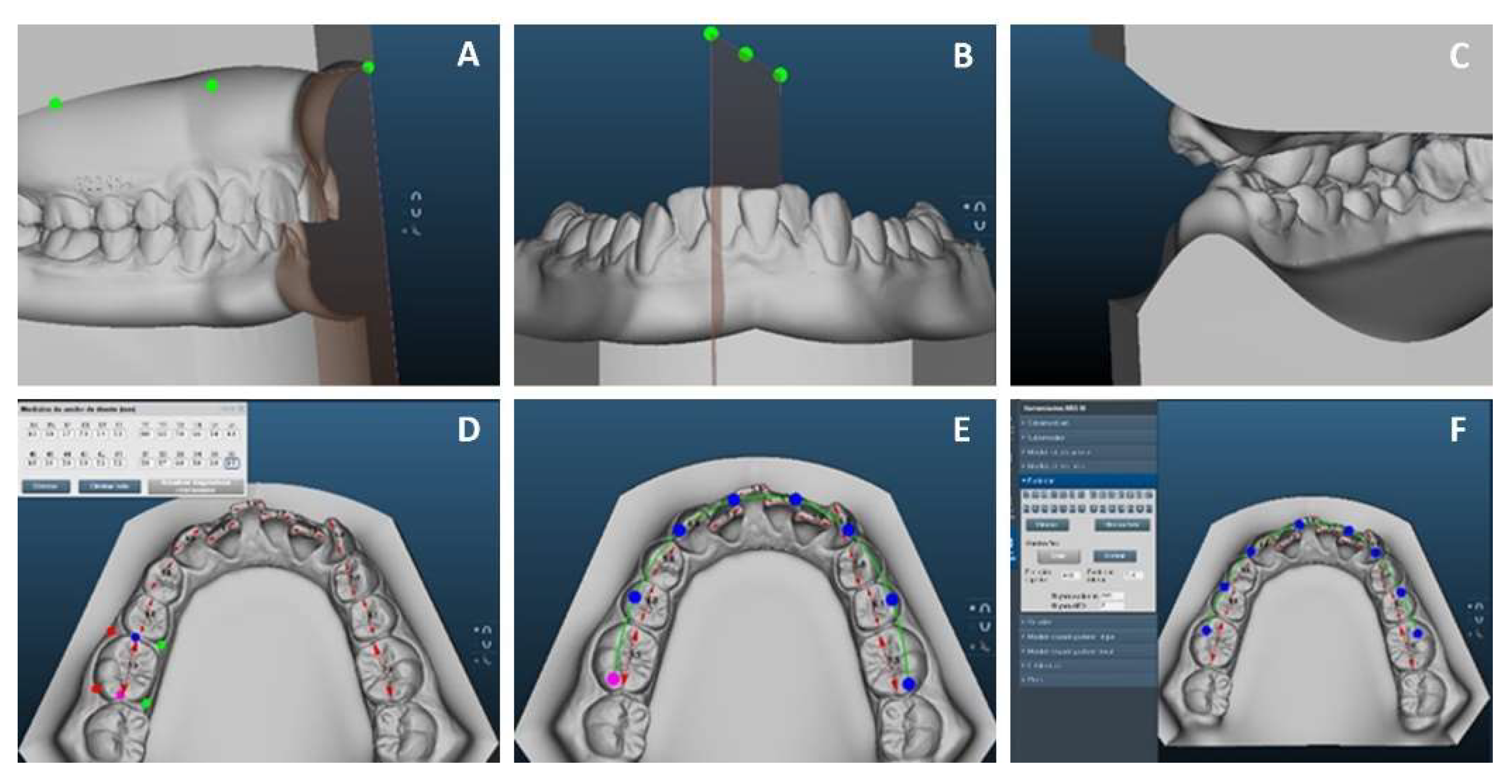

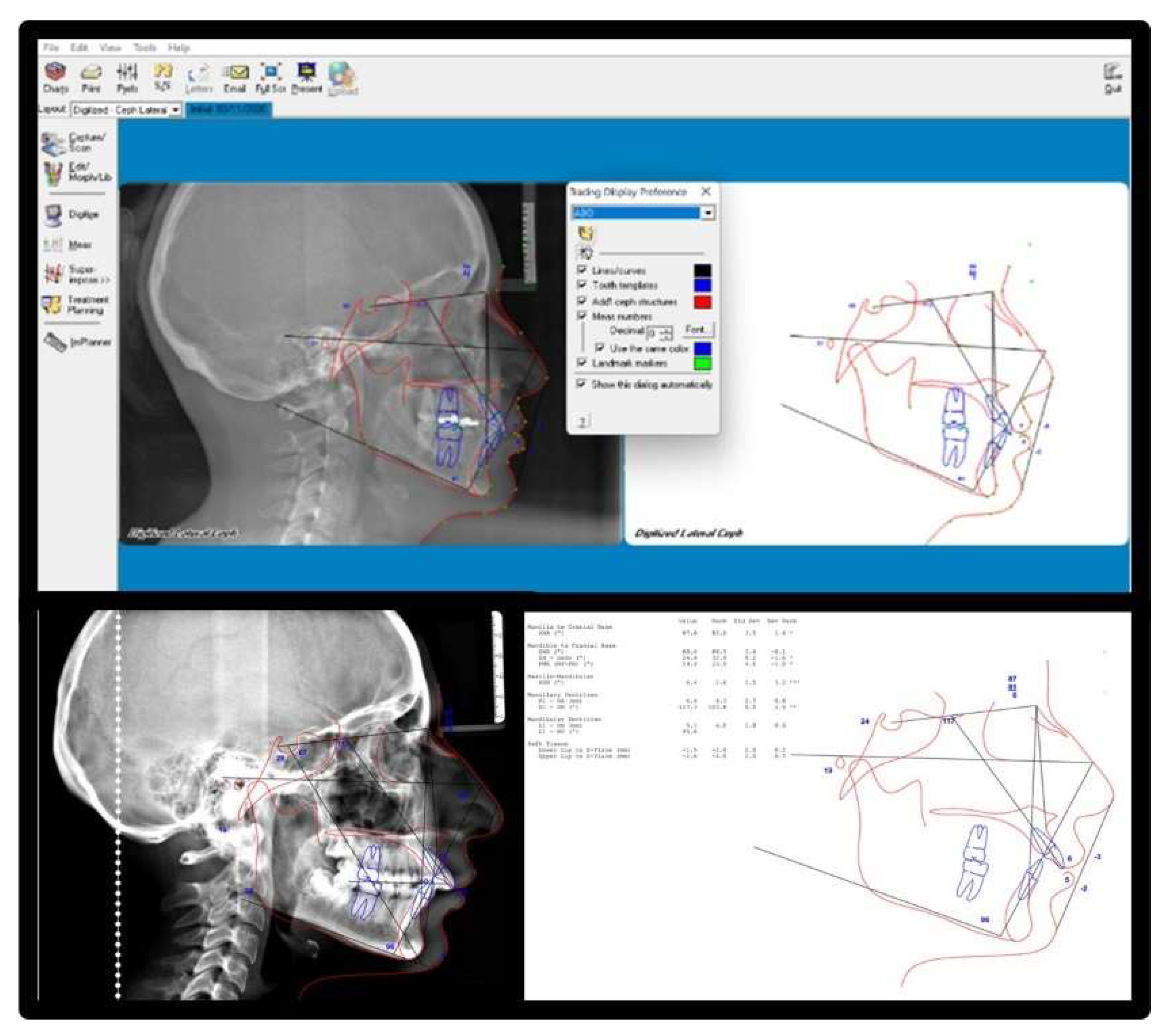

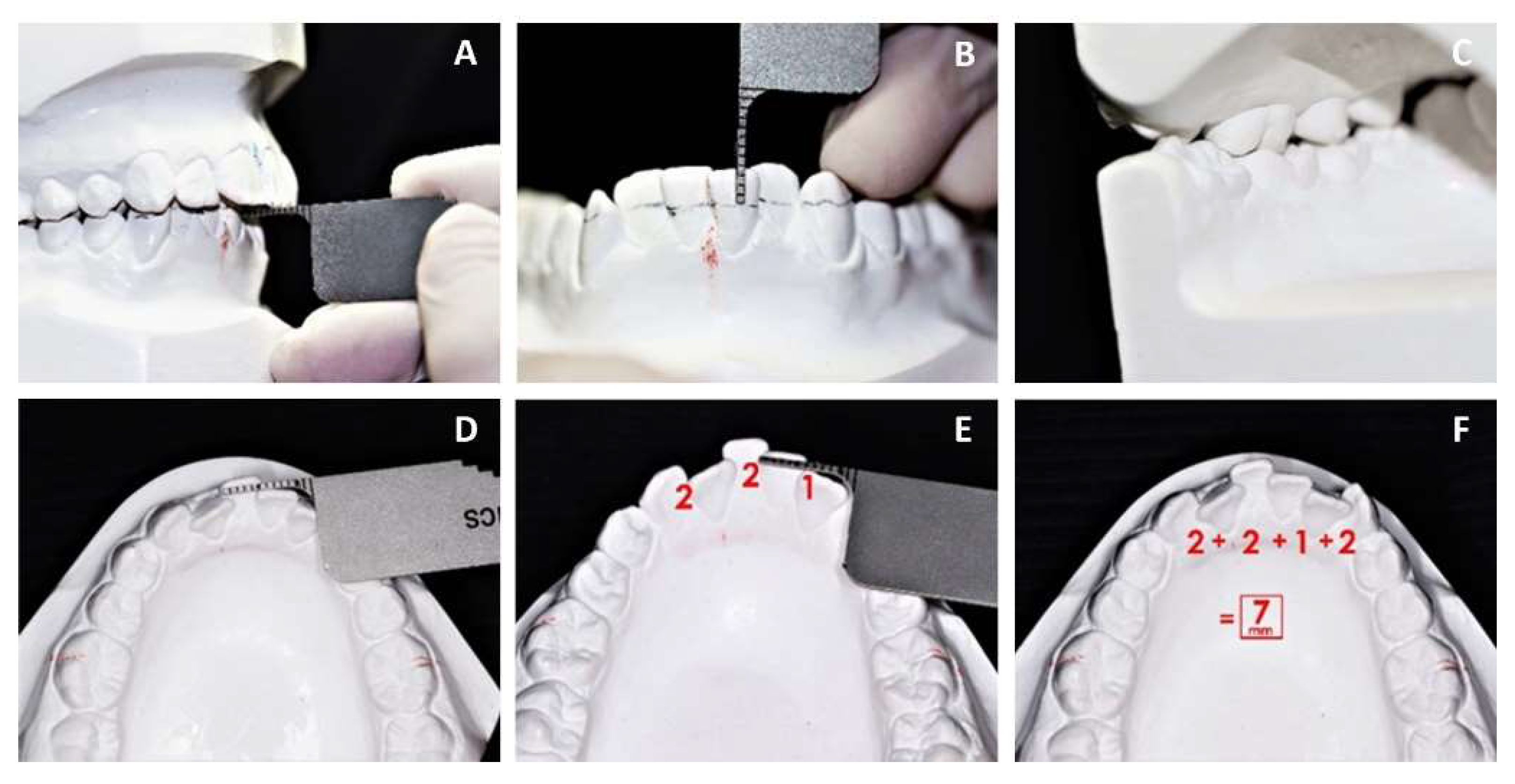

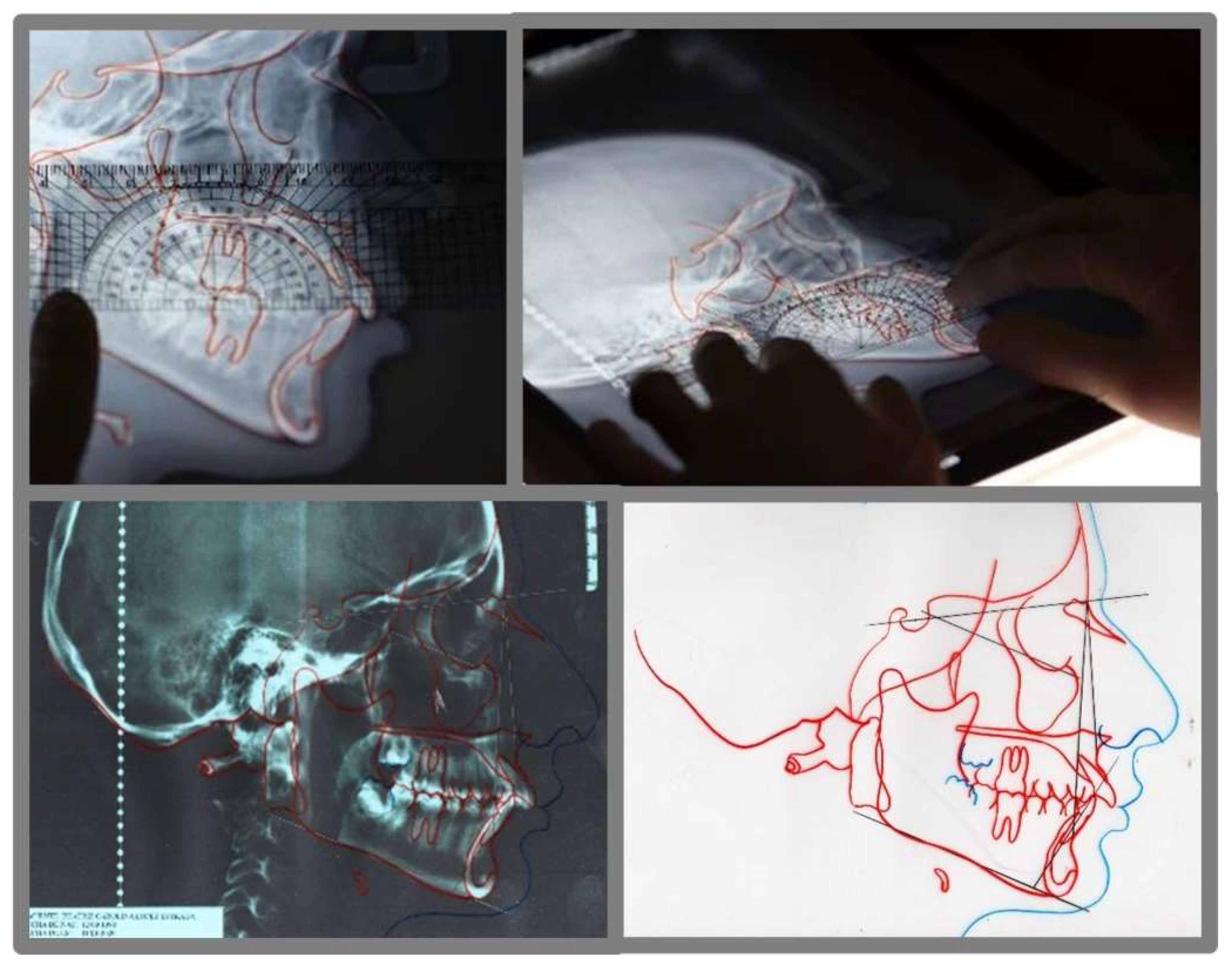

2. Materials and Methods

2.1. Interrater and Intrarater Reliability

2.2. Statistical Analysis

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Section | Diagnostic Elements | Measurement Technique |
|---|---|---|
| Final discrepancy index score | Sum of the different sections | The points obtained for each section of the discrepancy index were added. |
| Overjet | Digital/dental casts | Measured from the buccal surface of the most lingually positioned lower tooth to the middle of the incisal edge of the most labially positioned upper tooth (Figure 1A and Figure 3A). |
| Overbite | Digital/dental casts | Measured from the incisal edge of the upper tooth to the incisal edge of the lower central or lateral incisor (Figure 1B and Figure 3B). |
| Anterior open bite | Digital/dental casts | Measured from canine to canine, counting teeth with an open bite relationship of ≥0 mm. |
| Lateral open bite | Digital/dental casts | Each maxillary posterior tooth with an open bite relationship of ≥0.5 mm was measured. |
| Crowding | Most crowded arch | The most crowded arch was measured from the mesial contact point of the right first molar to the mesial contact point of the left first molar (Figure 1D–F and Figure 3D–F). |
| Occlusal relationship | Digital/dental casts | Angle's molar classification was used for each side of the model. |
| Lingual posterior crossbite | Digital/dental casts | Each maxillary posterior tooth was measured where the maxillary buccal cusp is >0 mm lingual to the buccal cusp tip of the opposing tooth. |
| Buccal posterior crossbite | Digital/dental casts | Each maxillary posterior tooth was measured where the maxillary palatal cusp is >0 mm buccal to the buccal cusp of the opposing tooth (Figure 1C and Figure 3C). |
| Cephalometrics | Digital and printed radiographs | ANB, SN-MP, and 1 to MP angles. |
| Other | Models, radiographs, photographs | Other conditions that increase treatment complexity. |
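
The first row of the table describes the final score as the sum of the points from every section. A minimal sketch of that addition is shown here; the section scores are hypothetical illustrative values, not study data, and the function name `total_discrepancy_index` is an assumption introduced for this example.

```python
# Hypothetical section scores for a single case (illustrative only, not study data).
section_scores = {
    "overjet": 2,
    "overbite": 1,
    "anterior_open_bite": 0,
    "lateral_open_bite": 0,
    "crowding": 4,
    "occlusal_relationship": 2,
    "lingual_posterior_crossbite": 1,
    "buccal_posterior_crossbite": 0,
    "cephalometrics": 6,
    "other": 2,
}


def total_discrepancy_index(scores):
    """Add the points of all sections to obtain the final index score."""
    return sum(scores.values())


total = total_discrepancy_index(section_scores)
print(total)
```

The same function can be applied to scores from either technique, since only the way each section is measured differs, not the way the sections are added.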

| Category | Mean (SD) PR1 | Mean (SD) PR2 | 95% CI Lower Limit | 95% CI Upper Limit | Cronbach's Alpha | ICC |
|---|---|---|---|---|---|---|
| Manual Technique | | | | | | |
| Overjet | 2.57 (1.39) | 2.71 (1.38) | 0.903 | 0.997 | 0.981 | 0.981 * |
| Overbite | 1.43 (1.39) | 1.29 (1.38) | 0.903 | 0.997 | 0.981 | 0.981 * |
| Anterior open bite | 1.57 (3.04) | 1.43 (2.99) | 0.980 | 0.999 | 0.996 | 0.996 * |
| Lateral open bite | 0.57 (1.51) | 0.43 (1.13) | 0.896 | 0.996 | 0.980 | 0.980 * |
| Crowding | 3.14 (2.03) | 3.00 (2.00) | 0.955 | 0.998 | 0.991 | 0.991 * |
| Occlusal relationship | 2.57 (1.90) | 2.43 (1.61) | 0.941 | 0.998 | 0.988 | 0.988 * |
| Lingual posterior crossbite | 1.29 (1.49) | 1.43 (1.61) | 0.924 | 0.997 | 0.985 | 0.985 * |
| Buccal posterior crossbite | 0.29 (0.75) | 0.43 (1.13) | 0.795 | 0.993 | 0.960 | 0.960 * |
| Cephalometrics | 11.57 (9.65) | 11.29 (9.23) | 0.995 | 1.000 | 0.999 | 0.999 * |
| Other | 4.29 (3.20) | 4.14 (3.07) | 0.981 | 0.999 | 0.996 | 0.996 * |
| Final discrepancy index score | 29.29 (14.45) | 28.57 (13.90) | 0.988 | 1.000 | 0.999 | 0.998 * |
| Digital Technique | | | | | | |
| Overjet | 3.14 (1.95) | 2.86 (1.67) | 0.951 | 0.876 | 0.996 | 0.978 * |
| Overbite | 1.00 (1.29) | 1.14 (1.46) | 0.902 | 0.997 | 0.981 | 0.981 * |
| Anterior open bite | 1.00 (1.91) | 0.86 (1.57) | 0.940 | 0.998 | 0.988 | 0.988 * |
| Lateral open bite | 0.57 (1.51) | 0.71 (1.89) | 0.937 | 0.998 | 0.988 | 0.988 * |
| Crowding | 3.14 (2.03) | 3.00 (2.00) | 0.955 | 0.998 | 0.991 | 0.991 * |
| Occlusal relationship | 2.29 (1.79) | 2.43 (1.81) | 0.943 | 0.998 | 0.989 | 0.989 * |
| Lingual posterior crossbite | 1.14 (1.21) | 1.29 (1.38) | 0.890 | 0.996 | 0.978 | 0.978 * |
| Buccal posterior crossbite | 0.14 (0.378) | 0.29 (0.756) | 0.430 | 0.980 | 0.889 | 0.889 |
| Cephalometrics | 10.43 (9.41) | 10.29 (9.26) | 0.998 | 1.000 | 1.000 | 1.000 * |
| Other | 3.43 (3.15) | 3.29 (3.09) | 0.981 | 0.999 | 0.996 | 0.996 * |
| Final discrepancy index score | 26.99 (16.22) | 26.14 (15.76) | 0.996 | 1.000 | 0.999 | 0.999 * |

| Category | Mean (SD) PR | Mean (SD) R2 | 95% CI Lower Limit | 95% CI Upper Limit | Cronbach's Alpha | ICC |
|---|---|---|---|---|---|---|
| Manual Technique | | | | | | |
| Overjet | 2.57 (1.39) | 2.29 (1.25) | 0.769 | 0.993 | 0.965 | 0.958 * |
| Overbite | 1.43 (1.39) | 1.57 (1.61) | 0.919 | 0.997 | 0.984 | 0.984 * |
| Anterior open bite | 1.57 (3.04) | 1.43 (2.69) | 0.978 | 0.999 | 0.996 | 0.996 * |
| Lateral open bite | 0.57 (1.51) | 0.43 (1.13) | 0.896 | 0.996 | 0.980 | 0.980 * |
| Crowding | 3.14 (2.03) | 3.00 (1.63) | 0.819 | 0.994 | 0.967 | 0.964 * |
| Occlusal relationship | 2.57 (1.90) | 2.57 (1.61) | 0.860 | 0.996 | 0.973 | 0.976 * |
| Lingual posterior crossbite | 1.29 (1.49) | 1.14 (1.21) | 0.900 | 0.997 | 0.980 | 0.980 * |
| Buccal posterior crossbite | 0.29 (0.75) | 0.43 (1.13) | 0.795 | 0.993 | 0.960 | 0.960 * |
| Cephalometrics | 11.57 (9.65) | 11.57 (9.05) | 0.986 | 1.000 | 0.997 | 0.998 * |
| Other | 4.29 (3.20) | 4.00 (3.16) | 0.959 | 0.999 | 0.994 | 0.993 * |
| Final discrepancy index score | 29.29 (14.45) | 28.14 (13.85) | 0.966 | 0.999 | 0.998 | 0.996 * |
| Digital Technique | | | | | | |
| Overjet | 3.14 (1.95) | 3.00 (1.91) | 0.951 | 0.998 | 0.990 | 0.990 * |
| Overbite | 1.00 (1.29) | 1.14 (1.57) | 0.910 | 0.997 | 0.982 | 0.982 * |
| Anterior open bite | 1.00 (1.91) | 1.14 (2.03) | 0.953 | 0.998 | 0.991 | 0.991 * |
| Lateral open bite | 0.57 (1.51) | 0.71 (1.89) | 0.937 | 0.998 | 0.988 | 0.988 * |
| Crowding | 3.14 (2.03) | 3.14 (1.67) | 0.875 | 0.996 | 0.975 | 0.979 * |
| Occlusal relationship | 2.29 (1.79) | 2.29 (1.49) | 0.839 | 0.995 | 0.969 | 0.973 * |
| Lingual posterior crossbite | 1.14 (1.21) | 1.14 (1.21) | 0.686 | 0.991 | 0.940 | 0.948 * |
| Buccal posterior crossbite | 0.14 (0.37) | 0.29 (0.75) | 0.430 | 0.980 | 0.889 | 0.889 |
| Cephalometrics | 10.43 (9.41) | 10.43 (9.64) | 0.995 | 1.000 | 0.999 | 0.999 * |
| Other | 3.43 (3.15) | 3.43 (3.20) | 0.958 | 0.999 | 0.992 | 0.993 * |
| Final discrepancy index score | 26.99 (16.22) | 26.71 (16.03) | 0.996 | 1.000 | 1.000 | 1.000 * |

| Statistic | Degrees of Freedom | p-Value |
|---|---|---|
| 0.162 | 28 | 0.098 |
| 0.094 | 28 | 0.084 |

| Category | Digital Measurement | Manual Measurement |
|---|---|---|
| Mild (S) | 5 (17.9%) | 5 (17.9%) |
| Moderate (S) | 7 (25.0%) | 7 (25.0%) |
| Complex (C) | 9 (32.1%) | 8 (28.6%) |
| Very complex (C) | 7 (25.0%) | 8 (28.6%) |
| Total | 12 (S) + 16 (C) = 28 (100%) | 12 (S) + 16 (C) = 28 (100%) |
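
The percentages in the table follow from dividing each category count by the 28 cases. The sketch below recomputes them from the counts reported in the table; the helper name `category_percentages` and the dictionary layout are assumptions made for illustration.

```python
# Category counts as reported in the table (28 cases per technique).
digital_counts = {"Mild": 5, "Moderate": 7, "Complex": 9, "Very complex": 7}
manual_counts = {"Mild": 5, "Moderate": 7, "Complex": 8, "Very complex": 8}


def category_percentages(counts):
    """Return each category's share of the total, rounded to one decimal place."""
    total = sum(counts.values())
    return {name: round(100 * n / total, 1) for name, n in counts.items()}


digital_pct = category_percentages(digital_counts)
manual_pct = category_percentages(manual_counts)

for name in digital_counts:
    print(f"{name}: digital {digital_pct[name]}%, manual {manual_pct[name]}%")
```

Both techniques place 12 cases in the two (S) categories and 16 in the two (C) categories; they differ only in how one case is split between "Complex" and "Very complex".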

| ABO Discrepancy Index | Mean (SD) Digital | Mean (SD) Manual | t | p-Value |
|---|---|---|---|---|
| Total discrepancy index score | 24.61 (13.34) | 24.86 (14.14) | 0.296 | 0.769 |
| Overjet | 2.21 (2.21) | 1.75 (1.91) | −1.72 | 0.097 |
| Overbite | 0.61 (1.44) | 0.79 (1.52) | 1.544 | 0.134 |
| Anterior open bite | 1.25 (1.50) | 1.82 (2.76) | −1.384 | 0.178 |
| Lateral open bite | 1.00 (2.27) | 0.93 (1.99) | 0.570 | 0.573 |
| Crowding | 3.00 (2.09) | 2.96 (2.21) | 0.107 | 0.916 |
| Occlusal relationship | 2.14 (1.79) | 2.14 (1.71) | 0.000 | 1.000 |
| Lingual posterior crossbite | 0.75 (1.11) | 0.89 (1.25) | −2.121 | 0.063 |
| Buccal posterior crossbite | 0.00 (0.00) | 0.07 (0.37) | −1.000 | 0.326 |
| Cephalometrics | 10.29 (8.77) | 9.79 (8.92) | 0.706 | 0.486 |
| Other | 2.86 (2.83) | 3.71 (3.47) | −2.213 | 0.066 |
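
As a rough check on the first row, a paired t statistic can be recomputed from summary values alone: the mean of the paired differences divided by its standard error. The sketch below assumes a paired t-test on n = 28 cases (the case total in the complexity table) and takes the mean difference (0.25) and standard deviation of the differences (4.46903) from the agreement summary table that follows; the function name `paired_t_statistic` is hypothetical.

```python
import math


def paired_t_statistic(mean_diff, sd_diff, n):
    """t = mean of paired differences / (SD of differences / sqrt(n))."""
    standard_error = sd_diff / math.sqrt(n)
    return mean_diff / standard_error


# Summary values for the total discrepancy index score (manual minus digital).
t_total = paired_t_statistic(mean_diff=0.25, sd_diff=4.46903, n=28)
print(round(t_total, 3))
```

Under these assumptions the result rounds to 0.296, the value reported for the total score. Obtaining the p-value would additionally require the t distribution with n − 1 = 27 degrees of freedom, which is outside the Python standard library and is therefore not computed here.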

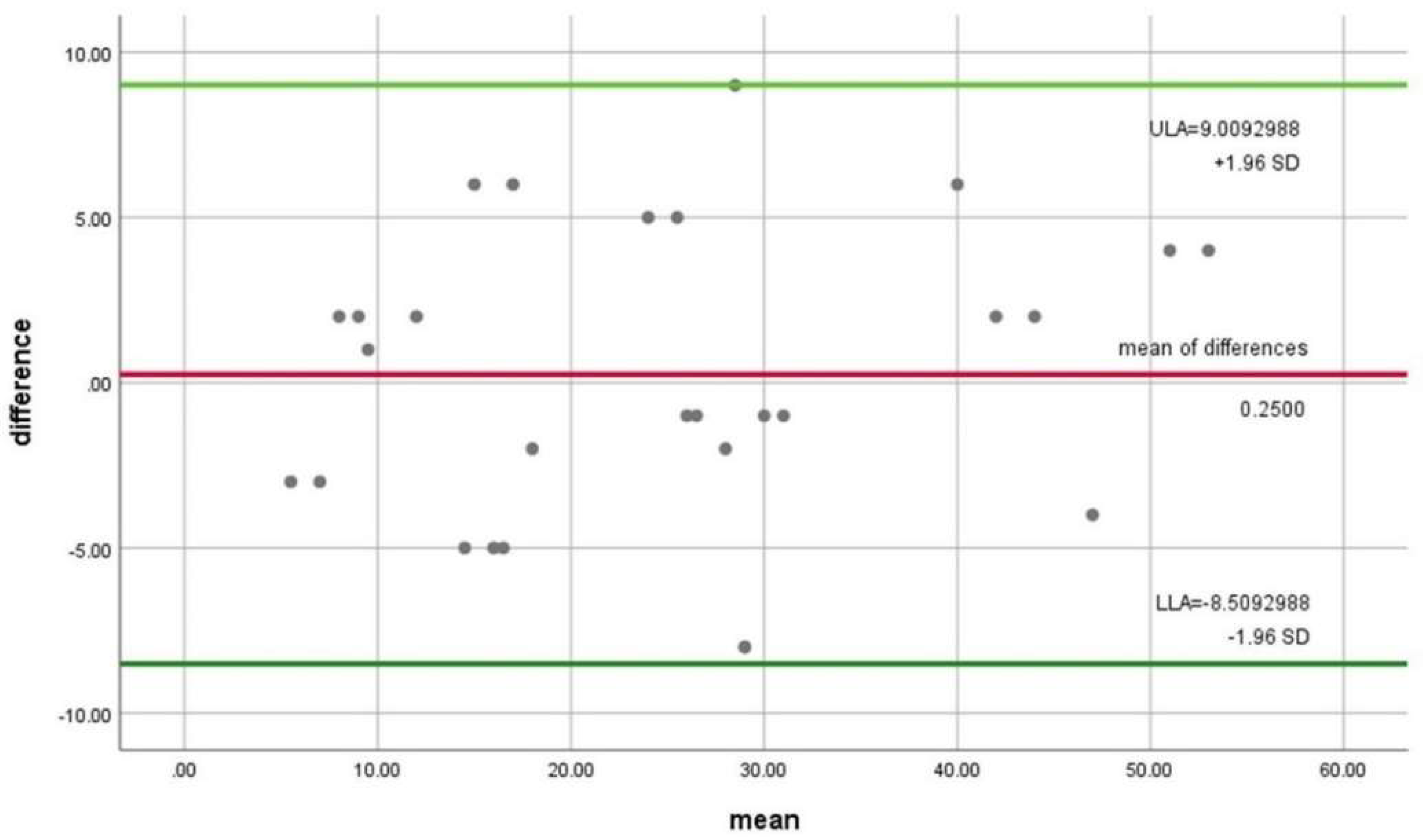

| Mean Digital Technique | Mean Manual Technique | Mean of Differences (Bias) | Standard Deviation | Upper 95% Limit of Agreement | Lower 95% Limit of Agreement | Regression Beta Value | p-Value |
|---|---|---|---|---|---|---|---|
| 24.61 | 24.86 | 0.2500 | 4.46903 | 9.0092988 | −8.5092988 | 0.60 | 0.355 |
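
The 95% limits of agreement in this table are consistent with the usual Bland-Altman construction, bias ± 1.96 × SD of the differences. The sketch below reproduces them from the reported bias and standard deviation; the multiplier 1.96 and the function name `limits_of_agreement` are assumptions, since the computation itself is not shown in the table.

```python
def limits_of_agreement(bias, sd_diff, z=1.96):
    """Return (lower, upper) Bland-Altman limits: bias -/+ z * SD of differences."""
    half_width = z * sd_diff
    return bias - half_width, bias + half_width


# Bias and SD of the differences as reported in the table.
lower, upper = limits_of_agreement(bias=0.25, sd_diff=4.46903)
print(f"lower = {lower:.7f}, upper = {upper:.7f}")
```

With these inputs the limits come out at approximately −8.509 and 9.009, matching the tabulated values, so individual digital and manual total scores can be expected to differ by up to about nine points in either direction even though the mean difference is only 0.25.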

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Burgos-Arcega, N.A.; Scougall-Vilchis, R.J.; Morales-Valenzuela, A.A.; Hegazy-Hassan, W.; Lara-Carrillo, E.; Toral-Rizo, V.H.; Velázquez-Enríquez, U.; Salmerón-Valdés, E.N. Agreement of the Discrepancy Index Obtained Using Digital and Manual Techniques—A Comparative Study. Appl. Sci. 2022, 12, 6105. https://doi.org/10.3390/app12126105
