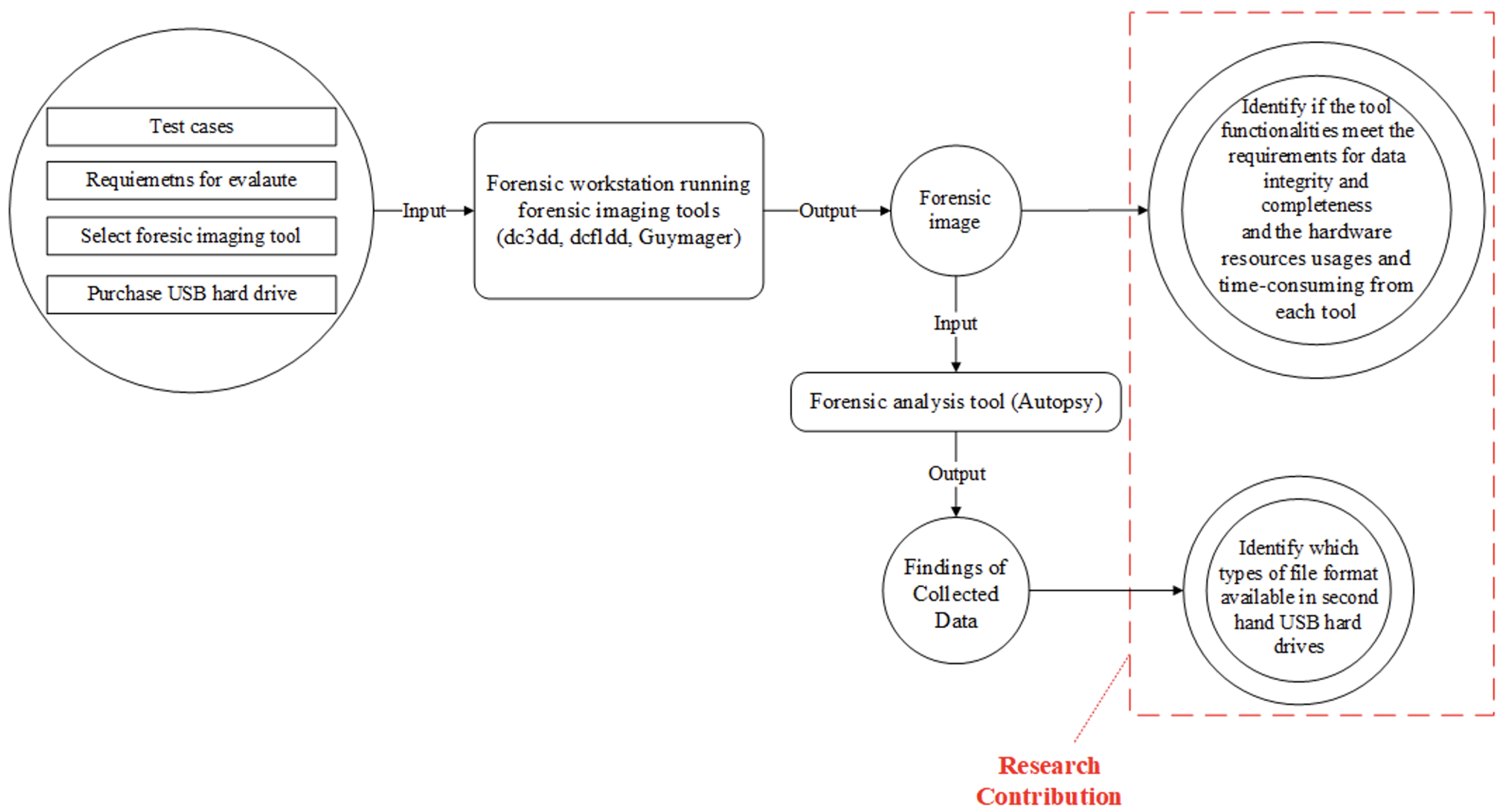

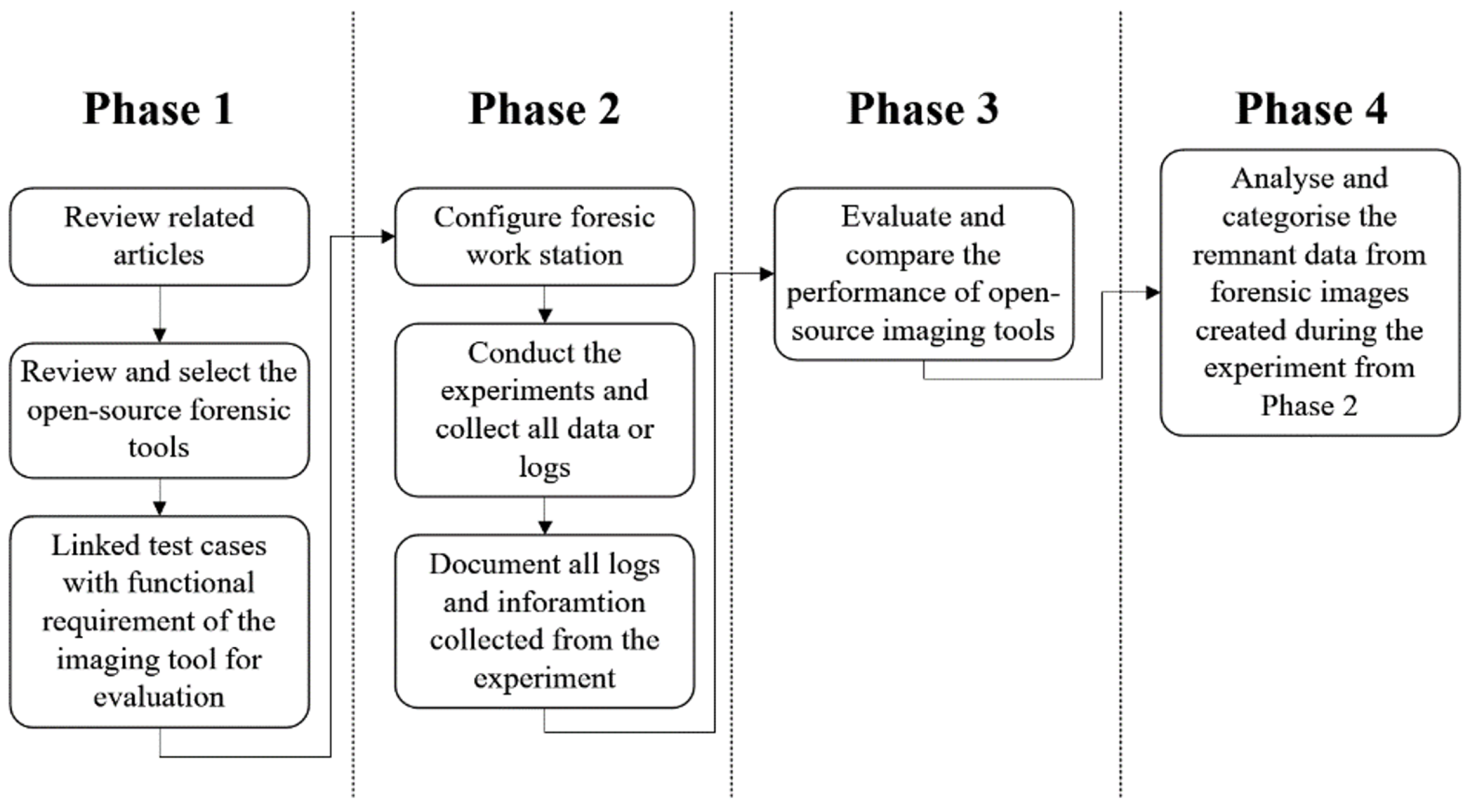

This section discusses the findings from the forensic disk imaging tool experiments and compares the selected tools’ performance (functionalities, hardware usage, and time consumption) in detail. We also discuss the analysis of collected remnant data and present several solutions/guidelines for completely erasing the data to prevent security or privacy risks.

5.1. Findings of Evaluated Forensic Imaging Tools

This section discusses and compares the performance data collected from the experiments of the three selected forensic tools that are best suited for investigating remnant data present in second-hand USB storage devices.

Test Case FIT-ID-01: For this test case, we note that the DC3DD and DCFLDD tools successfully passed all the functional requirements, as presented in Table 5. However, the DCFLDD tool could not generate a log file after creating a forensic image or calculate the hash value of the created image, even though these functions are mentioned in the tool manual. In addition, the log file generated by DCFLDD provided more information (e.g., total time to create and verify the image, source device information, and hash value comparison) than that of DC3DD (presented in Appendix D (https://github.com/imdadullahunsw/forensics, accessed on 7 June 2022)). The forensic images created by these tools had the same hash values as the source. In addition, the hash values in the generated log files matched those of the forensic images when rechecked. Based on the experimental data collected for CPU usage, we note that DCFLDD used more CPU resources (31%) than DC3DD (5%) or DCFLDD (4.8%). In addition, only with hard drive ‘USB-HD-05’ was the percentage of CPU resources used by DCFLDD during the experiment higher than that of DC3DD. Furthermore, DCFLDD used more CPU resources at peak time (77% CPU) than DC3DD (76%) or DCFLDD (60%). The percentage of CPU resources used by DCFLDD did not change across the different hard drives in the test. However, DCFLDD and DC3DD used more CPU resources at peak time (i.e., highest CPU usage) than at downtime (i.e., lowest CPU usage). On average, DCFLDD used more CPU resources (around 48%) than DC3DD (around 41%) or DCFLDD (around 43%). Furthermore, we note that the CPU usage of the selected tools did not change much across hard drives of different storage capacities.
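The CPU figures above are reported per run as a lowest ("downtime"), peak, and average value. The section does not describe how the samples were collected, so the following is only a minimal sketch, assuming each run is recorded as a list of CPU-percentage samples taken at a fixed interval. The function name `summarise_usage` and the sample values are hypothetical, not the measured data.

```python
from statistics import fmean
from typing import NamedTuple, Sequence


class UsageSummary(NamedTuple):
    lowest: float   # "downtime": minimum sampled value
    peak: float     # "peak time": maximum sampled value
    average: float  # arithmetic mean over all samples


def summarise_usage(samples: Sequence[float]) -> UsageSummary:
    """Reduce one run's resource samples (e.g. CPU %) to lowest/peak/average.

    Hypothetical helper: assumes samples were taken at a fixed interval,
    so an unweighted mean approximates the time-averaged usage.
    """
    if not samples:
        raise ValueError("at least one sample is required")
    return UsageSummary(min(samples), max(samples), fmean(samples))


# Hypothetical CPU-percentage samples for one imaging run (not measured data).
run = [31.0, 45.5, 77.0, 52.0, 38.5]
print(summarise_usage(run))
```

The same reduction applies unchanged to memory samples in MB, which is how the memory comparisons below are framed.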

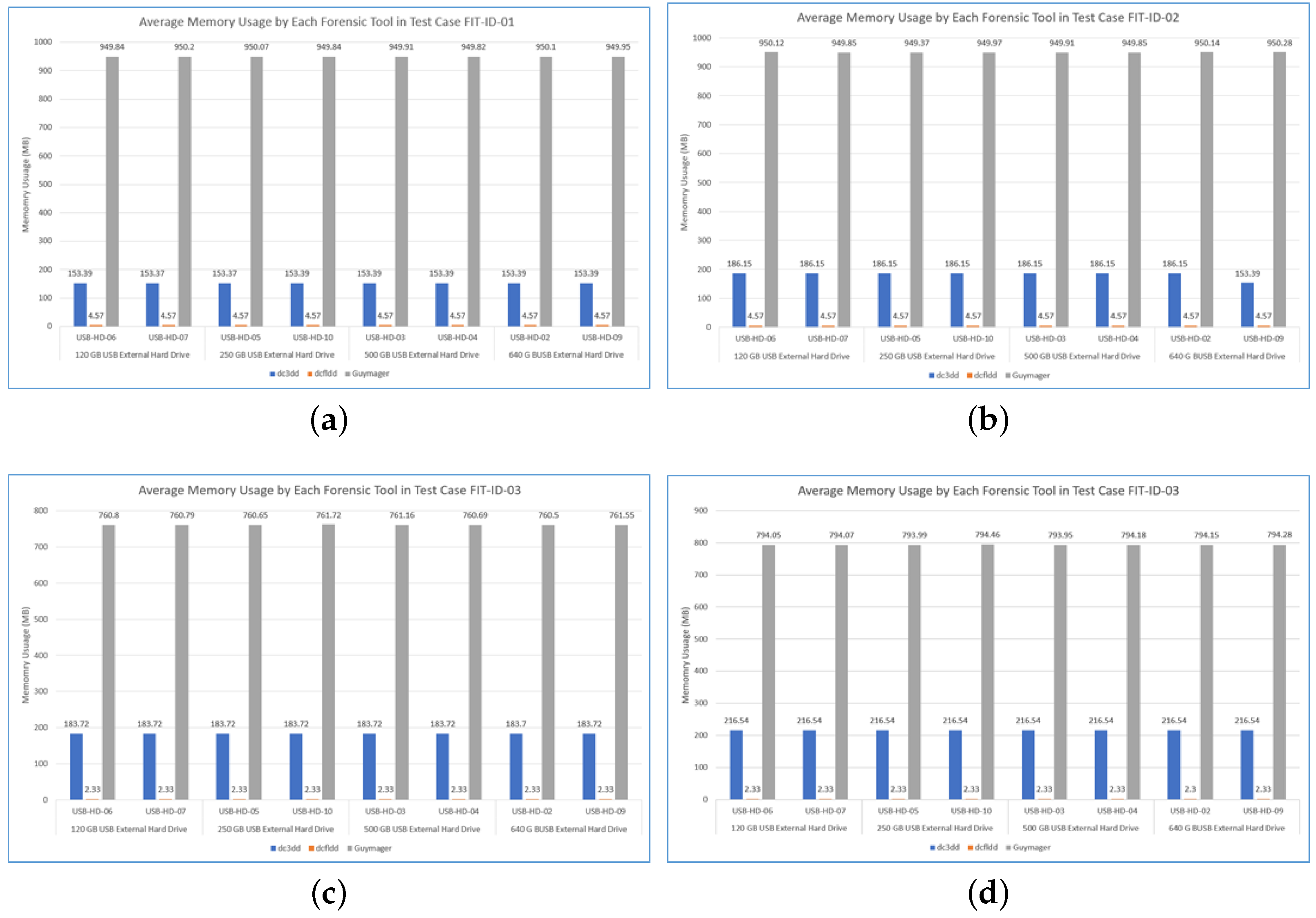

From the memory usage viewpoint, the

DCFLDD used much higher memory resources (949 MB) than

DC3DD (119 MB) or

DCFLDD (4.57 MB). In peak time (i.e., highest memory usage), the amount of memory resources used by

DCFLDD did not change too much (around 0.6 MB), and

DCFLDD did not even change the memory usage, while

DC3DD increased the amount of memory resources from 119 MB to 187.23 MB during peak time. On average, the

DCFLDD tool used most of the memory resources compared to

DC3DD and

DCFLDD. In addition, the amount of memory used by

DCFLDD was six times higher than

DC3DD and 41 times higher than

DCFLDD. Recall from

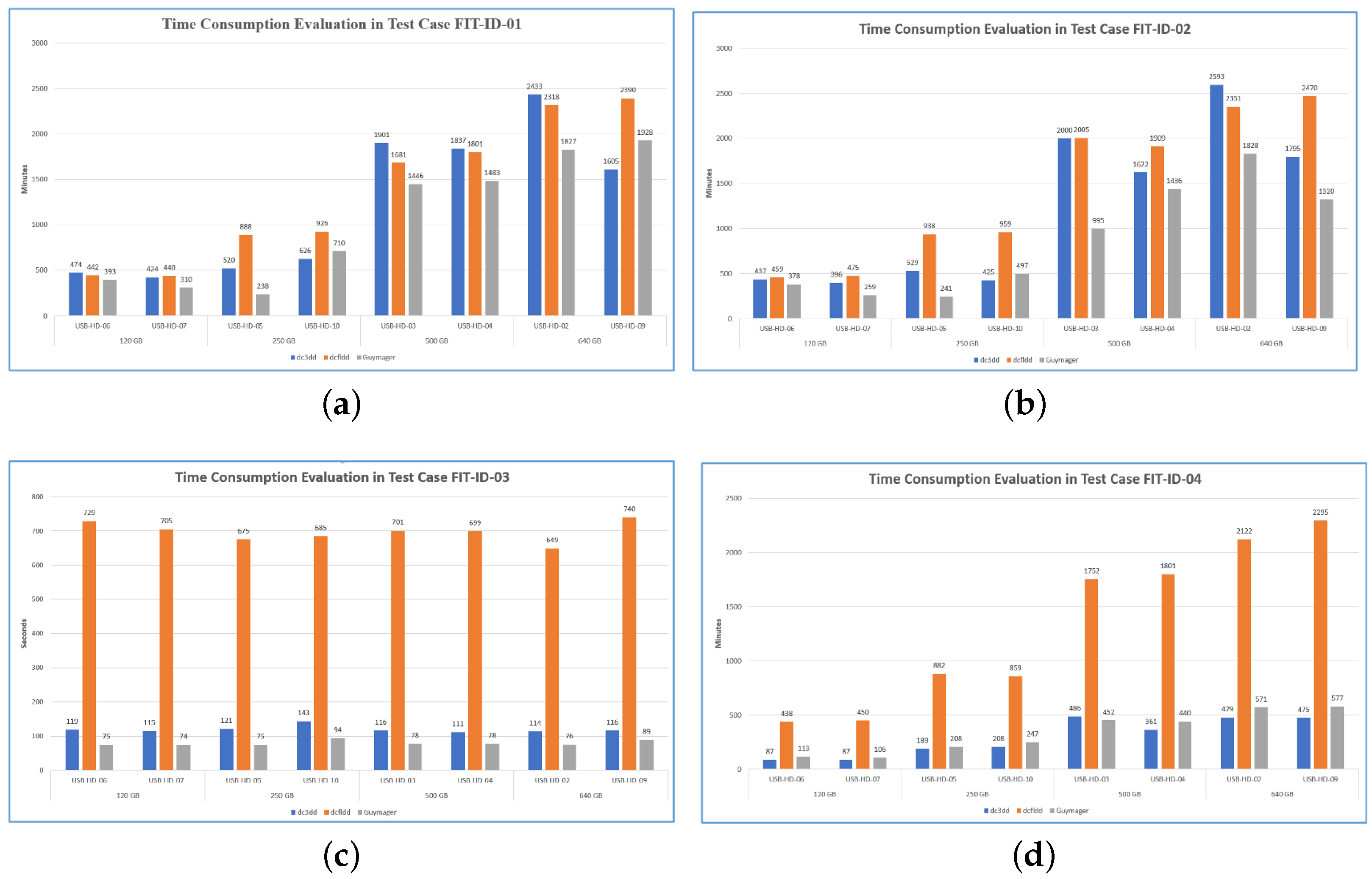

Figure 3a,

DCFLDD was the first tool to finish the process, the second tool was

DC3DD, and the last one was

DCFLDD. When overviewing the total amount of CPU usage, there is not a big difference in the use of CPU between

DC3DD and

DCFLDD. Even though sometimes the CPU resources used by

DC3DD were even higher than

DCFLDD,

DCFLDD was still faster than

DC3DD. However, the amount of memory resources usage affected the speed of the imaging process. The amount of memory usage used by

DCFLDD was significantly higher compared to

DC3DD or

DCFLDD, and

DC3DD also used more memory resources than

DCFLDD (i.e.,

Figure 5a). Although when running each tool in several hard drives that have the same storage capacity, the time it takes for the tool to create forensic images still varies. This may happen because of the differences of USB external hard drives and how the tools were developed to collect the data.
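Imaging time is compared across tools and drives above. Command-line imagers such as dc3dd and dcfldd read the source in fixed-size blocks and can hash the data while copying it. The sketch below illustrates that general pattern in Python and times the copy with the monotonic clock `time.perf_counter`. It is a hypothetical helper (`image_with_hash`) for illustration only: it is not how any of the evaluated tools is implemented, and it works on ordinary file paths rather than raw block devices.

```python
import hashlib
import time
from typing import NamedTuple


class ImagingResult(NamedTuple):
    bytes_copied: int
    sha256: str
    seconds: float


def image_with_hash(src_path: str, dst_path: str,
                    block_size: int = 1024 * 1024) -> ImagingResult:
    """Copy src to dst block by block, hashing the data as it is read.

    Hypothetical sketch: opens the source read-only ("rb"), writes a raw
    (dd-style) image, and times the whole copy with a monotonic clock.
    """
    digest = hashlib.sha256()
    copied = 0
    start = time.perf_counter()
    with open(src_path, "rb") as src, open(dst_path, "wb") as dst:
        while True:
            block = src.read(block_size)
            if not block:  # end of source reached
                break
            digest.update(block)
            dst.write(block)
            copied += len(block)
    elapsed = time.perf_counter() - start
    return ImagingResult(copied, digest.hexdigest(), elapsed)


# Usage (paths are placeholders):
# result = image_with_hash("/path/to/source.bin", "/path/to/image.dd")
# print(result.bytes_copied, result.sha256, f"{result.seconds:.1f} s")
```

Recomputing the SHA-256 of the written image and comparing it with `result.sha256` is the same integrity check that the tools record in their logs.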

In conclusion for this test, DCFLDD and DC3DD met the tool functional requirements. However, the information provided by DCFLDD was more detailed than that of DC3DD, and its friendly graphical user interface (GUI) was a significant advantage over the other tools. In terms of hardware resource usage, DCFLDD used the most hardware resources, though this helped DCFLDD finish the imaging process faster than DC3DD or DCFLDD. Overall, given these advantages, DCFLDD was the best tool in this test case.

Test Case FIT-ID-02: In this test case, the functionalities of all the selected forensic imaging tools met the tool functional requirements, as presented in Table 5. These tools successfully created multiple forensic images while still ensuring the integrity of the source device. DCFLDD generated the hash values and recorded them in the log file; however, it failed to provide other important information (e.g., reading speed from the hard drive, starting and ending times, and hard drive information). In addition, limiting each forensic image to only 2 GB may have helped the DCFLDD tool to save the log information and check the hash values for all created forensic images. On the other hand, DC3DD and DCFLDD generated more information in their log files than DCFLDD, and DCFLDD provided more information about the source device than DC3DD. However, DC3DD and DCFLDD created detailed log files that contained the SHA-256 hash value of each created forensic image. In contrast, DCFLDD did not log the hash values for each created forensic image; it only logged the overall SHA-256 value and compared it with that of the source device (Appendix D (https://github.com/imdadullahunsw/forensics, accessed on 7 June 2022)).
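In this test case, some tools logged a SHA-256 hash for each 2 GB segment, while another logged only one overall hash compared against the source. The two are related: the overall hash is the hash of the segments concatenated in order, so a single pass can produce both. A minimal sketch, assuming the segments are plain raw files supplied in order (the function name `hash_segments` is hypothetical):

```python
import hashlib
from typing import Iterable, List, Tuple


def hash_segments(segment_paths: Iterable[str],
                  chunk_size: int = 1024 * 1024) -> Tuple[List[str], str]:
    """Return (per-segment SHA-256 list, overall SHA-256 of all segments).

    Hypothetical helper: assumes raw (not E01/AFF) segments, given in order,
    whose concatenation reproduces the full image.
    """
    overall = hashlib.sha256()
    per_segment: List[str] = []
    for path in segment_paths:
        seg = hashlib.sha256()
        with open(path, "rb") as f:
            for chunk in iter(lambda: f.read(chunk_size), b""):
                seg.update(chunk)
                overall.update(chunk)  # the same bytes feed the whole-image hash
        per_segment.append(seg.hexdigest())
    return per_segment, overall.hexdigest()
```

If the overall digest matches the source device's hash, the split image is intact; when a tool also logs per-segment hashes, a mismatch can be localised to a specific segment.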

As depicted in Figure 4b, for the lowest CPU usage, DCFLDD used the most CPU resources (around 40%); however, the CPU usage of DC3DD and DCFLDD was less consistent than that of DCFLDD. At peak time (i.e., highest CPU usage), there is a significant difference in how DC3DD and DCFLDD used CPU resources. DC3DD used 100% of the CPU resources across the different hard drive storage capacities. DCFLDD used up to 95.53% of the CPU resources, whereas DCFLDD only used up to 62%. On average (Figure 4b), DC3DD used the most CPU resources (53% to 60%), whereas DCFLDD used the least (34% to 56%). Furthermore, DCFLDD used CPU resources more consistently than the other tools (around 51%) across the different USB hard drives. Similarly, as shown in Figure 5b, DCFLDD used more memory (949.72 MB) than DC3DD (119.55 MB) and DCFLDD (4.57 MB). At the highest memory consumption, DCFLDD used the majority of the memory resources compared to the other tools, while its usage increased only slightly between the lowest and highest measurements (only 1.25 MB extra at the highest). On the other hand, DC3DD used more memory during peak time than at its lowest, whereas DCFLDD did not change its memory usage between the highest and lowest measurements. On average (Figure 5b), DCFLDD used the most memory (950 MB), followed by DC3DD (186 MB) and DCFLDD (4.57 MB).
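Several statements in this test case rank tools by how consistent their CPU usage was across USB hard drives. One way to make such a claim quantitative, which the section does not itself use, is the population standard deviation and coefficient of variation of each tool's per-drive averages. The sketch assumes one average value per drive; the tool labels and numbers are hypothetical placeholders, not the measured results.

```python
from statistics import fmean, pstdev
from typing import Dict, List, Tuple


def consistency(per_drive_avg: List[float]) -> Tuple[float, float]:
    """Return (standard deviation, coefficient of variation) across drives.

    Lower values mean the tool's usage varied less from drive to drive.
    Assumes a non-zero mean so the coefficient of variation is defined.
    """
    mean = fmean(per_drive_avg)
    sd = pstdev(per_drive_avg)
    return sd, sd / mean


# Hypothetical per-drive average CPU % for three unnamed tools.
runs: Dict[str, List[float]] = {
    "tool_a": [51.0, 50.5, 51.5, 51.0],
    "tool_b": [53.0, 60.0, 55.0, 58.0],
    "tool_c": [34.0, 56.0, 40.0, 48.0],
}
for name in sorted(runs, key=lambda k: consistency(runs[k])[0]):
    sd, cv = consistency(runs[name])
    print(f"{name}: sd={sd:.2f} pp, cv={cv:.1%}")
```

The standard deviation is in percentage points; the coefficient of variation normalises it by the mean, which matters when comparing a tool averaging 35% with one averaging 60%.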

Based on the experimental results shown in Figure 3b, DCFLDD took more time to finish the imaging process than DCFLDD and DCFLDD. The time taken by DCFLDD to finish the process was the most consistent across the different hard drives. Overall, DCFLDD was the fastest tool, and the slowest was DCFLDD. As shown in Figure 4b, DC3DD used more CPU resources than DCFLDD or DCFLDD. However, the average memory used by DCFLDD (Figure 5b) was significantly higher than that of DC3DD or DCFLDD, which explains why DCFLDD was the fastest tool in this test. We note that in this test case, all of the selected forensic tools met the expected tool functionalities. However, the information logged by DC3DD and DCFLDD was less detailed than that logged by DCFLDD (e.g., hard drive information, time log, and hash log comparison). In addition, DC3DD used more CPU resources than the other tools on average. Even though the CPU resources used by DCFLDD and DC3DD were similar, the memory usage of DCFLDD was higher than that of DC3DD or DCFLDD, which affected the imaging speed of DCFLDD and DC3DD. Overall, in this test case, DCFLDD was the best of the selected tools.

Test Case FIT-ID-03: For the functionalities shown in Table 5, all of the selected tools successfully stopped the process when the output location reached the limit of its storage capacity. However, none of these tools succeeded in changing the output destination during the imaging process (Function ‘DI-RO-07’); they only notified the user that the output destination had reached its storage limit and stopped the imaging process. In addition, only DCFLDD displayed a pop-up warning, before the imaging process started, that the output destination was smaller than the forensic image (Appendix D (https://github.com/imdadullahunsw/forensics, accessed on 7 June 2022)). DC3DD and DCFLDD started the imaging process without any notification and only showed the error after the process stopped. All the selected tools logged the correct error for the limited output destination storage capacity, and DCFLDD’s log files contained more information about the source device than those of DC3DD and DCFLDD. Furthermore, DCFLDD did record in the log file that the output destination had reached its storage limit; however, no further information (e.g., reading speed, starting time, and ending time) was logged.
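Only one tool warned, before imaging began, that the destination was too small; the others failed partway through. A pre-flight check of this kind can be sketched with Python's standard `shutil.disk_usage`, which reports total, used, and free bytes for the file system containing a path. The helper name `has_room_for_image` and the optional safety margin are assumptions for illustration, and obtaining the source size (e.g. from the device's reported capacity) is left to the caller.

```python
import shutil


def has_room_for_image(dest_dir: str, image_size_bytes: int,
                       margin_bytes: int = 0) -> bool:
    """Return True if dest_dir's file system can hold the image plus a margin.

    Hypothetical pre-flight check: an uncompressed raw image is as large as
    the source device, so image_size_bytes is simply the source size.
    """
    if image_size_bytes < 0 or margin_bytes < 0:
        raise ValueError("sizes must be non-negative")
    free = shutil.disk_usage(dest_dir).free
    return free >= image_size_bytes + margin_bytes


# Usage (placeholder values): warn before starting, rather than failing midway.
# if not has_room_for_image("/mnt/evidence", 250 * 10**9):
#     print("Output destination is smaller than the forensic image")
```

For compressed or segmented output formats the final size is not known in advance, so this check is only exact for raw images.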

The lowest CPU usage of DCFLDD was inconsistent on the small-capacity USB hard drives (120 GB and 250 GB). The CPU resources used by DC3DD were also inconsistent, whereas the CPU usage of DCFLDD was consistent across all hard drive storage capacities. In the highest CPU usage measurements, DCFLDD used more CPU resources (62%) than the other tools most of the time, and DC3DD reached 62% only once. On average (Figure 4c), DCFLDD used the most CPU resources, whereas DCFLDD used the least. In addition, DC3DD used more CPU resources for hard drives with larger storage capacities (500 GB and 650 GB), while the CPU usage of DCFLDD and DCFLDD fluctuated across the different USB storage capacities. Regarding memory, we note that DCFLDD used only a small amount of memory (2.3 MB). In contrast, DCFLDD used up to 194 MB of memory, and DC3DD used 183 MB. In this test, each tool ran the imaging process for only a short time (Figure 3c), which explains why the memory used by these tools did not change much (Figure 5c), except for DC3DD, which increased its memory usage from 119 MB to 250 MB between downtime and peak time. On average, we note that DCFLDD used the most memory (950 MB), followed by DC3DD (186 MB) and DCFLDD (2.3 MB).

According to our evaluation of the time consumption of the various tools, as shown in Figure 3c, none of the selected tools stopped the process at a consistent time. The time before the task stopped was inconsistent, even for the same tool (e.g., DC3DD took 121 s on hard drive ‘USB-HD-05’ and 116 s on hard drive ‘USB-HD-09’). Overall, DCFLDD was the first tool to complete the imaging process (75 s) in the test with a limited output location, followed by DC3DD (119 s) and DCFLDD (700 s). As shown in Figure 4c, DCFLDD used the most CPU resources; however, as shown in Figure 5c, DCFLDD used the most memory. DCFLDD was faster than the other tools because its memory usage was higher than that of DCFLDD or DC3DD. Furthermore, in this test case, although none of the tools met all the expected results, DCFLDD at least notified the user about the error before the process started. In addition, the information logged by DCFLDD was more detailed than that of the other tools. This was a significant advantage for DCFLDD when comparing the functionalities of the selected tools in this test case. Overall, DCFLDD was the best tool in this test case because of its detailed log information, friendly GUI, and the time it needed to finish the task.

Test Case FIT-ID-04: In this test case, based on Table 5, DCFLDD did not meet the functional requirements. The tool evaluation results from this test are the same as in test case ‘FIT-ID-01’: DCFLDD was not able to check the created forensic image or log any information into the log file (i.e., tool functions ‘DI-RO-05’ and ‘DI-RO-17’). DCFLDD and DCFLDD generated the log and calculated the hash values after the imaging process as expected, unlike DC3DD, which did not log any information or verify the integrity of the source device. Additionally, the log generated by DCFLDD contained more information than that of DC3DD (e.g., source device information, average imaging speed, and total time spent).

For the lowest CPU usage, we note that DCFLDD (30%) and DCFLDD (31%) used more CPU resources than DCFLDD (15%). In addition, the CPU resources used by DCFLDD did not change across the different hard drive storage capacities. At peak time, DCFLDD used the most CPU resources (84%), compared to DC3DD (76%) and DCFLDD (61%). Even though DCFLDD used more CPU resources at peak time than DC3DD, on average (Figure 4d), DC3DD used more CPU resources (52%) than DCFLDD (49.83%), followed by DCFLDD (46%). For the lowest memory usage, DCFLDD used only a small amount of memory (2.33 MB) compared to DCFLDD (183.72 MB) and DCFLDD (727 MB). The memory used by DC3DD and DCFLDD did not change at peak time, whereas DCFLDD used more memory (from 727 MB to 793 MB). Likewise, DCFLDD, DCFLDD and DC3DD used the same amount of memory across the different hard drive storage capacities. On average (Figure 5d), DCFLDD used the most memory (760 MB), followed by DC3DD (183 MB) and DCFLDD (2.33 MB).

During our experiments, we note that DC3DD finished the tasks first, followed by DCFLDD and DC3DD (Figure 3d). In all three previous test cases, DCFLDD was always the fastest tool. However, the result in Figure 3d differs from the other test cases: here, DC3DD performed better than DCFLDD. The only difference between this test case and test case ‘FIT-ID-01’ is that the source devices were connected directly to the forensic station without a write blocker. DC3DD used more CPU resources than DCFLDD on average. In addition, DCFLDD used more memory than DC3DD. However, in test cases ‘FIT-ID-01’ and ‘FIT-ID-02’, DC3DD used more CPU and memory resources than in test case ‘FIT-ID-04’. Furthermore, with the write blocker (test cases ‘FIT-ID-01’ and ‘FIT-ID-02’), DCFLDD used less CPU and memory than in test case ‘FIT-ID-04’, which explains why DCFLDD finished the task more slowly than DC3DD in this test case. Likewise, in test case ‘FIT-ID-04’, DCFLDD did not meet the functional requirements, and the log file generated by DC3DD contained less information than the one generated by DCFLDD. However, DC3DD was the fastest tool in this test and used less memory than DCFLDD. Nevertheless, DC3DD used more CPU resources than any other tool in this test case. Overall, even though DC3DD was faster than DCFLDD by a few minutes, in terms of functionalities and user friendliness, DCFLDD was better than DC3DD.
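Test case FIT-ID-04 differs from FIT-ID-01 only in that the source devices were attached without a write blocker. On a Linux forensic station, one software-side check, sketched here as an assumption about the acquisition environment rather than part of the procedure described above, is to read the kernel's per-device read-only flag exposed at `/sys/block/<device>/ro` (`1` when the block device is read-only, `0` otherwise). The helper name and the `sysfs_root` parameter (added so the check can be exercised against a stand-in directory) are hypothetical. The flag reports only the kernel's view of the device, not whether a hardware write blocker is present.

```python
from pathlib import Path


def is_readonly_block_device(device: str, sysfs_root: str = "/sys/block") -> bool:
    """Return True if the kernel reports `device` (e.g. "sdb") as read-only.

    Hypothetical helper for Linux: reads the sysfs attribute
    <sysfs_root>/<device>/ro, which holds "1" for read-only and "0" otherwise.
    """
    flag_path = Path(sysfs_root) / device / "ro"
    value = flag_path.read_text().strip()
    if value not in {"0", "1"}:
        raise ValueError(f"unexpected value in {flag_path}: {value!r}")
    return value == "1"


# Usage on a live system (device name is a placeholder):
# if not is_readonly_block_device("sdb"):
#     print("Warning: source device is writable")
```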