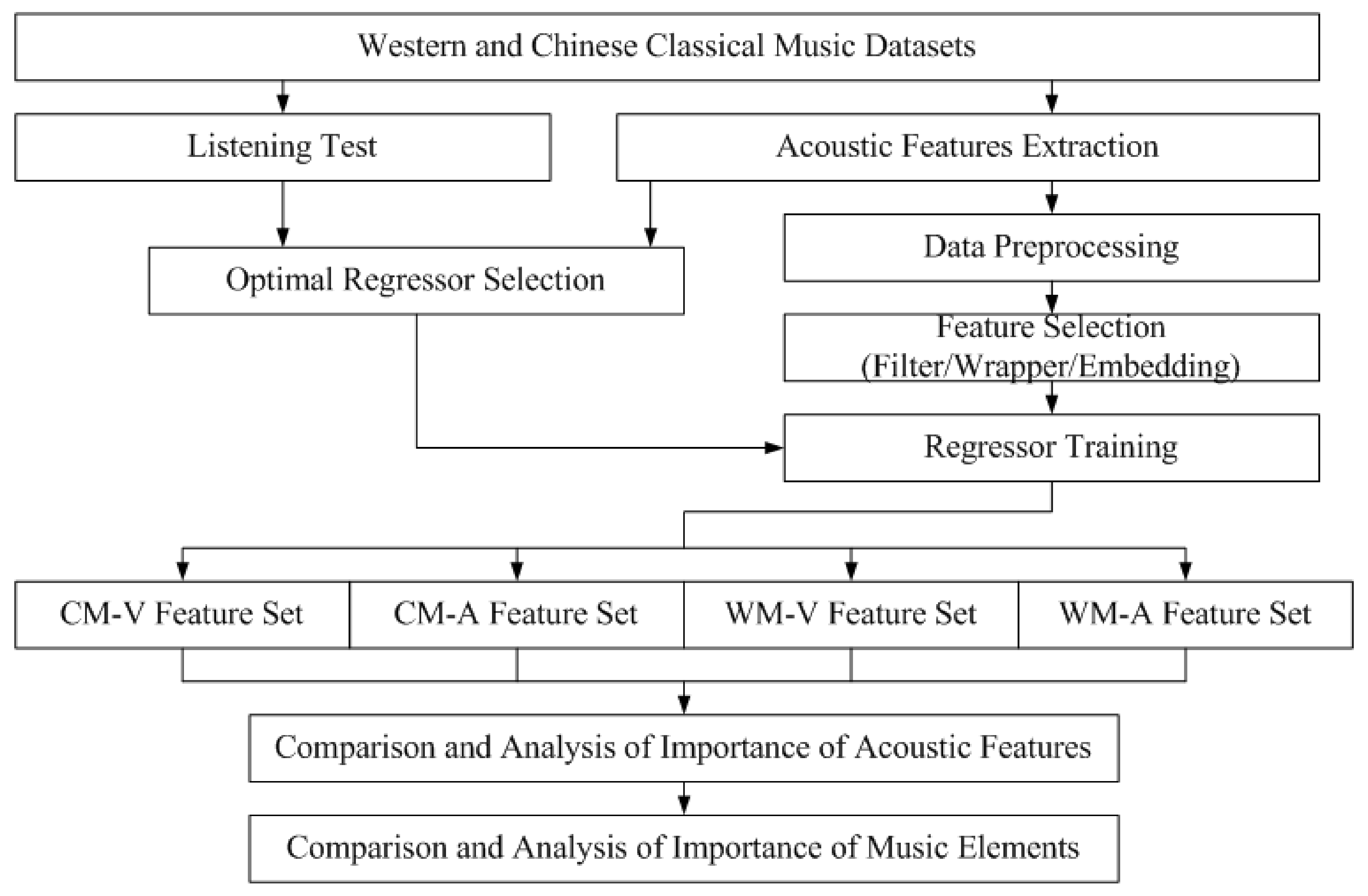

Comparison and Analysis of Acoustic Features of Western and Chinese Classical Music Emotion Recognition Based on V-A Model

Abstract

:1. Introduction

- For Western and Chinese classical MER datasets, what kind of combination algorithm of pre-processing and feature selection methods can achieve the optimal effect of emotion regression prediction?

- For Western and Chinese classical music, which acoustic features should be selected as being the most culturally representative and effective for participants’ MER, respectively?

- Based on extracted feature sets for different music datasets, what are the differences in the influences of different music elements on emotion regression prediction for different datasets?

2. The Datasets and Acoustic Features

2.1. The Datasets

- Western Classical Music Dataset

- Chinese Classical Music Dataset

2.2. Acoustic Features

- Timbre

- Pitch

- Loudness

- Rhythm

3. Music Emotion Regression Experiment

3.1. Optimal Regression Model Selection

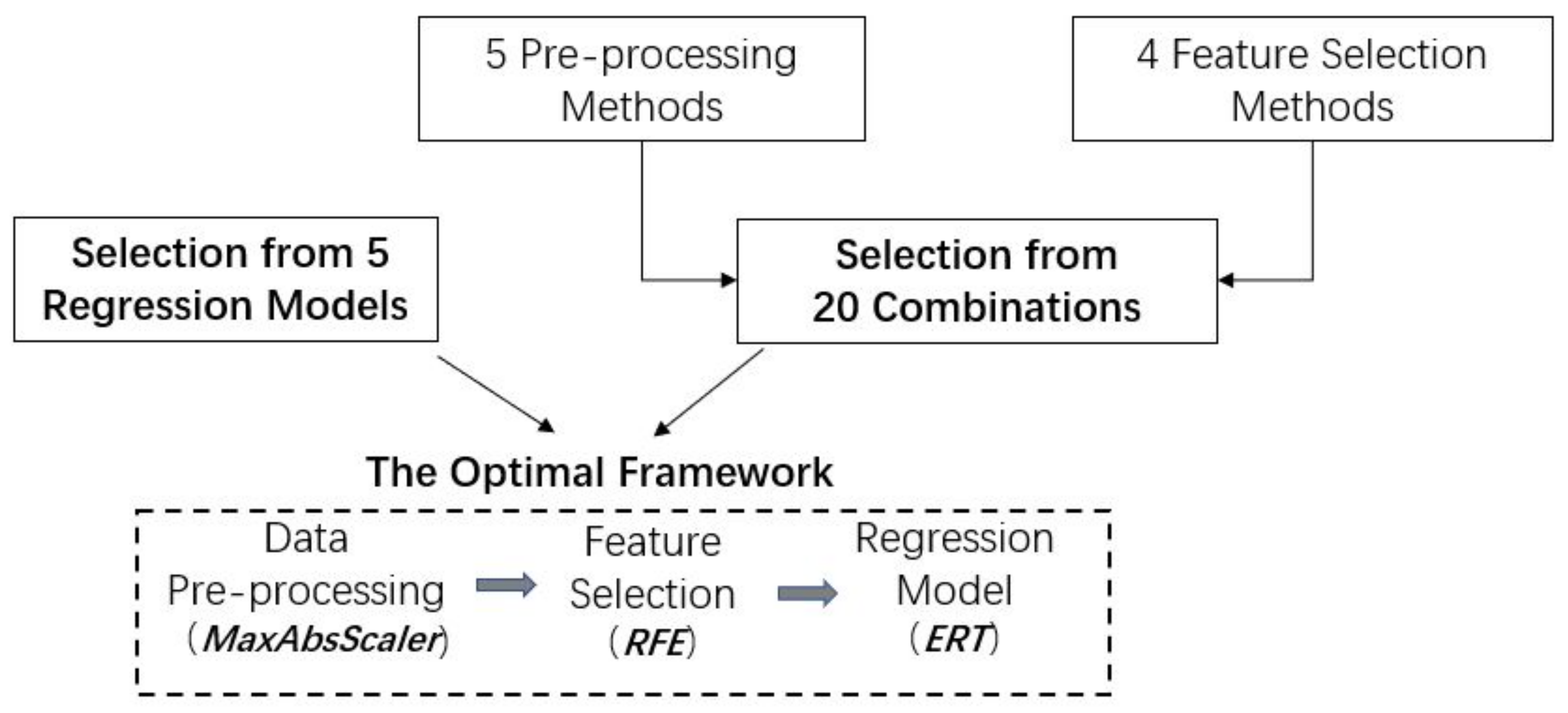

3.2. Optimal Combination Algorithm for Pre-Processing and Feature Selection

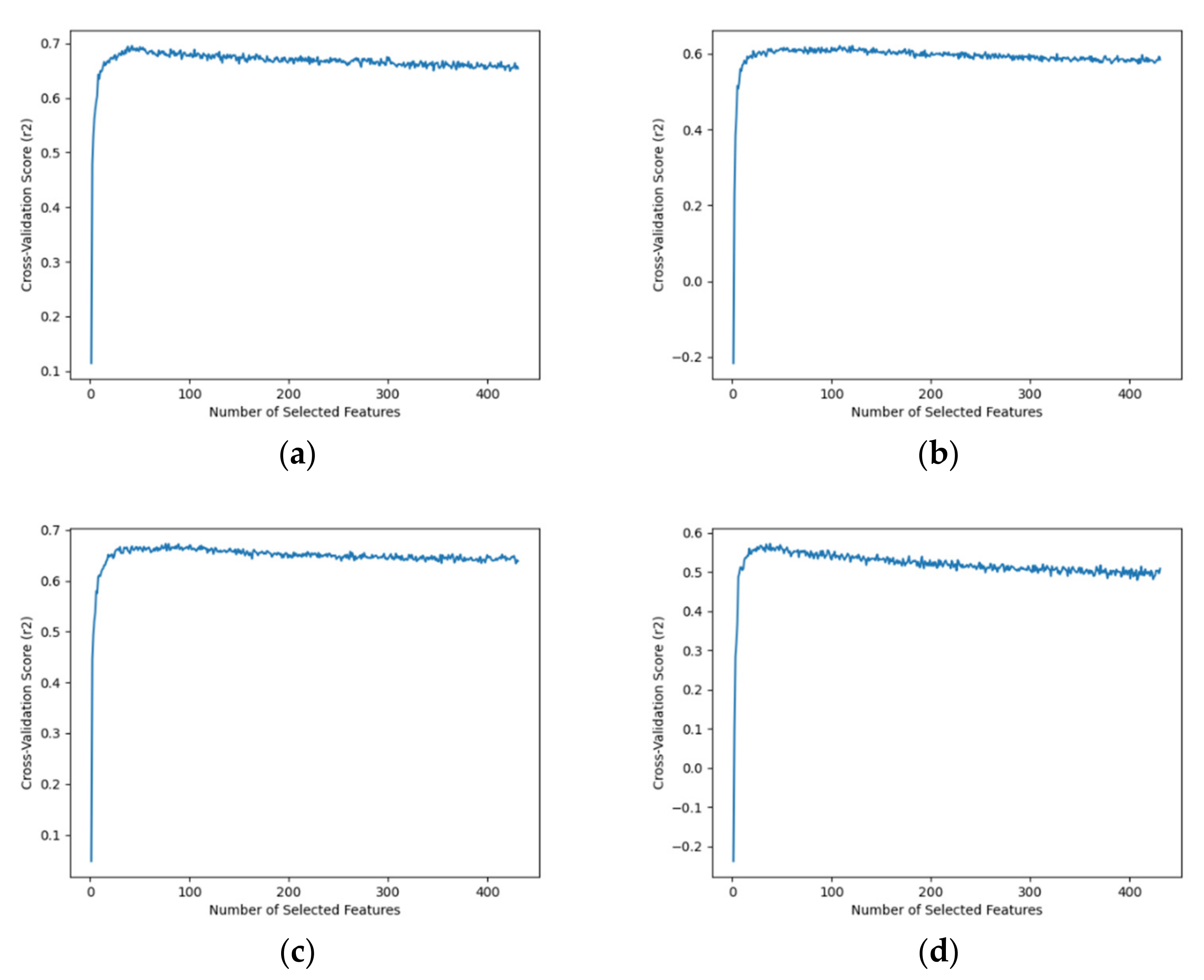

3.3. Determination of Music Emotion Feature Sets

4. Results and Discussion

4.1. Feature Sets of Western and Chinese Classical Music Emotion Regression

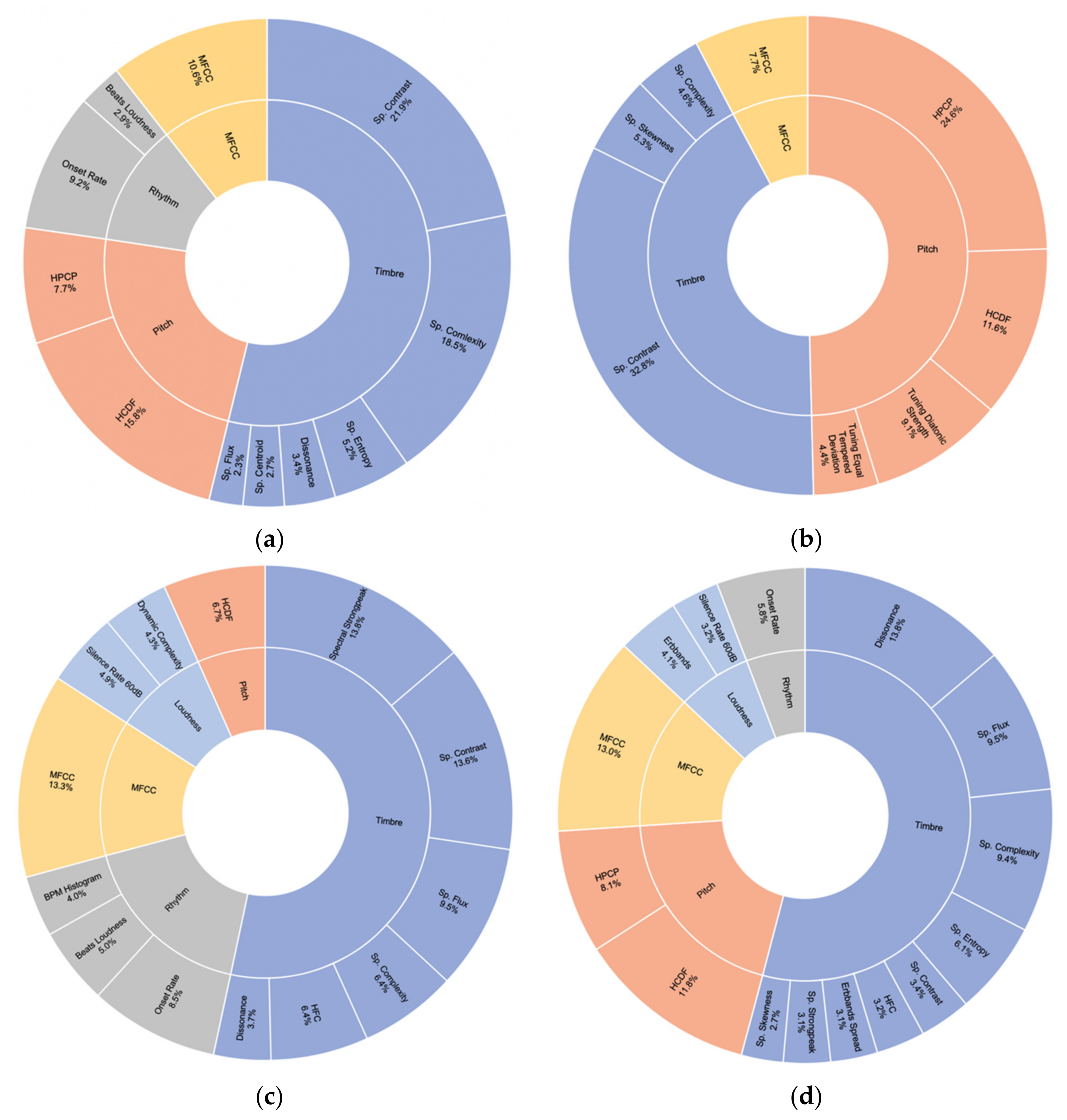

4.2. Importance of Acoustic Features for Different Feature Sets

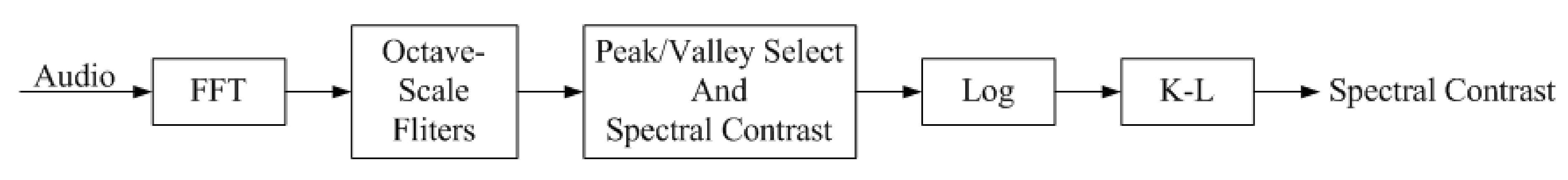

- Spectral Contrast

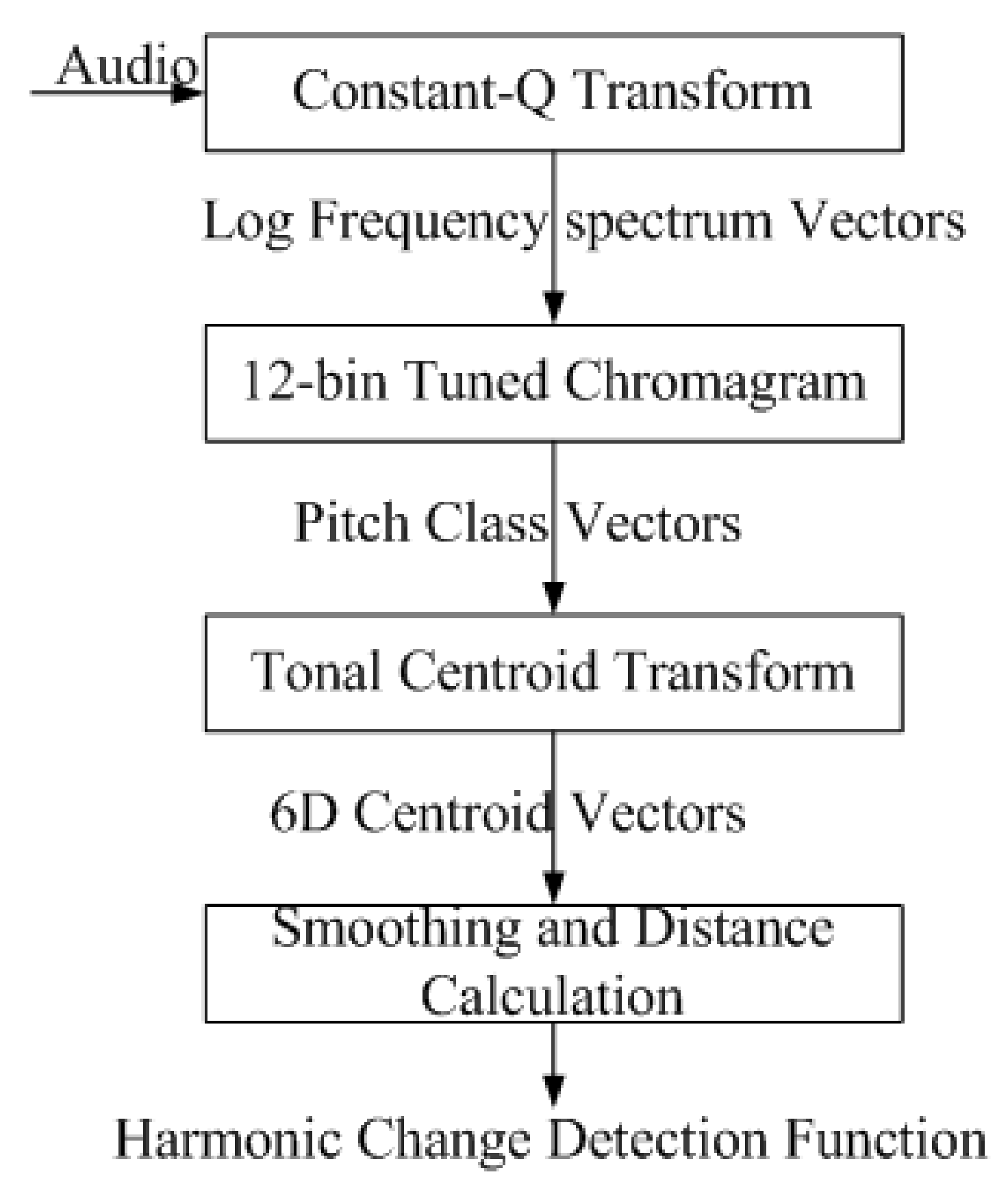

- HCDF

- Onset Rate

- Spectral Complexity

- Spectral Flux

- Dissonance

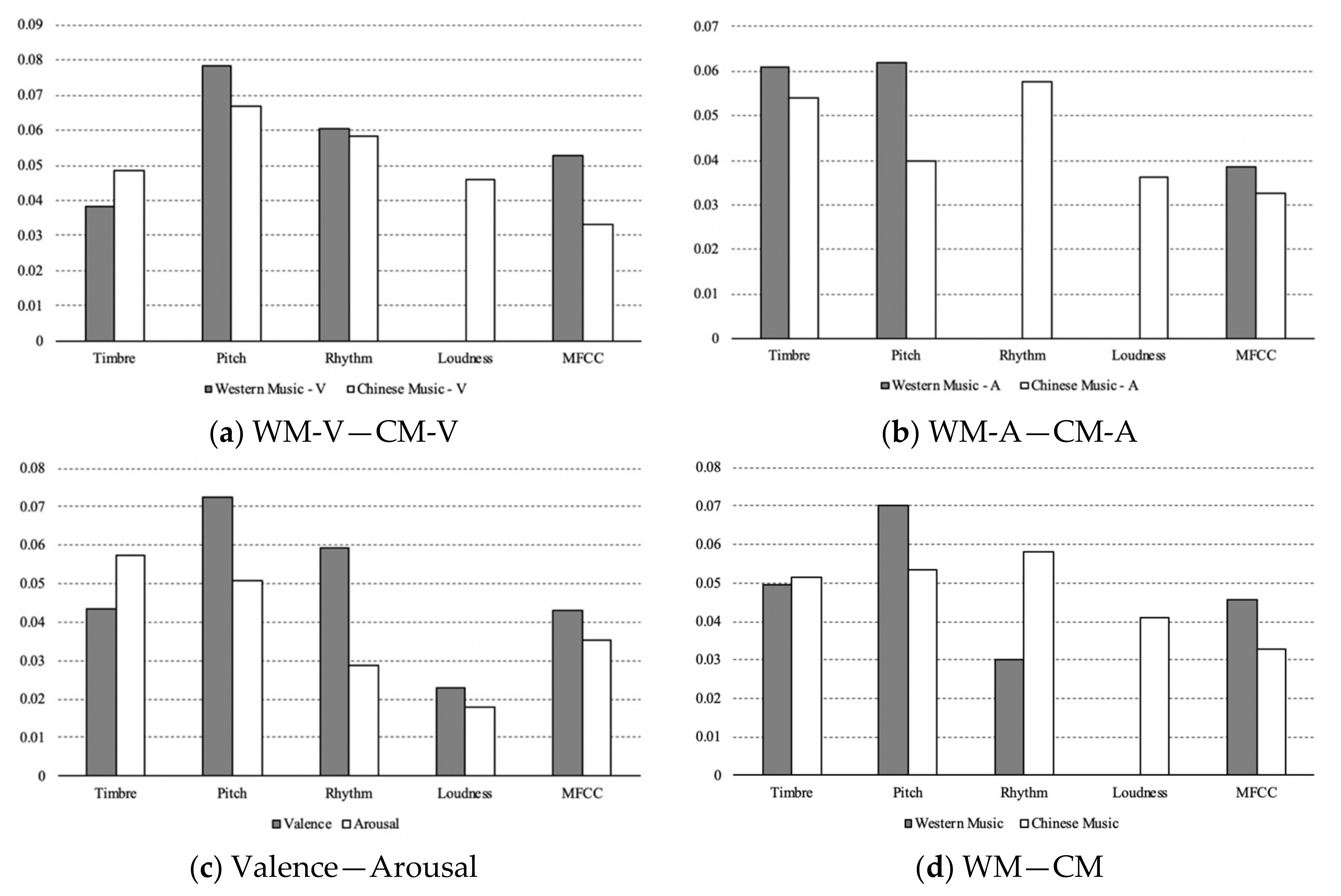

4.3. Importance of Musical Elements for Different Feature Sets

5. Conclusions

- Based on our cross-cultural dataset, the optimal combination algorithm of pre-processing and feature selection is MaxAbsScaler pre-processing and the wrapper method using RFE based on extremely randomized trees.

- The number of important acoustic features for Western classical music dataset is larger than that for Chinese classical music dataset. For the Western classical music dataset, the distribution of the importance of acoustic features is mainly concentrated on several features such as spectral contrast, whereas for the Chinese classical music dataset, the difference in the importance of each feature is relatively small.

- Spectral contrast is the most significant feature for both valence and arousal perception of the Western classical music dataset. HCDF is significant for both valence and arousal perception of the Western and Chinese classical music datasets, which indicates that HCDF is culturally universal. Regardless of whether it is a Western or Chinese classical music dataset, the onset rate’s influence on valence perception is stronger than its influence on arousal. Compared with Western classical music dataset, spectral flux is more important for valence and arousal perception of the Chinese classical music dataset. Dissonance is a culturally specific feature of the Chinese classical music dataset.

- For valence, although pitch and rhythm are very important for both cultures’ classical music dataset, loudness is specifically significant for Chinese classical music dataset. For arousal, pitch and timbre are the most important musical elements for the Western classical music dataset, whereas all musical elements have a significant impact on the Chinese classical music dataset, especially rhythm features.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

- Kim, Y.E.; Schmidt, E.M.; Migneco, R.; Morton, B.G.; Richardson, P.; Scott, J.; Speck, J.A.; Turnbull, D. State of the Art Report: Music Emotion Recognition: A State of the Art Review. In Proceedings of the 11th International Society for Music Information Retrieval Conference (ISMIR), Utrecht, The Netherlands, 9–13 August 2010; pp. 255–266. [Google Scholar]

- Yang, X.; Dong, Y.; Li, J. Review of Data Features-Based Music Emotion Recognition Methods. Multimed. Syst. 2018, 24, 365–389. [Google Scholar] [CrossRef]

- Yang, Y.H.; Chen, H.H. Music Emotion Recognition; CRC Press: Boca Raton, FL, USA, 2011. [Google Scholar]

- Mitrovic, D.; Zeppelzauer, M.; Breiteneder, C. Features for Content-Based Audio Retrieval. Adv. Comput. 2010, 78, 71–150. [Google Scholar]

- Juslin, P.N.; Sloboda, J.A. Handbook of Music and Emotion: Theory, Research, Applications; Oxford University Press: New York, NY, USA, 2010. [Google Scholar]

- Russell, J.A. A Circumplex Model of Affect. J. Pers. Soc. Psychol. 1980, 39, 1161–1178. [Google Scholar] [CrossRef]

- Posner, J.; Russell, J.A.; Peterson, B.S. The Circumplex Model of Affect: An Integrative Approach to Affective Neuroscience, Cognitive Development and Psychopathology. Dev. Psychopathol. 2005, 17, 715–734. [Google Scholar] [CrossRef] [PubMed]

- Eerola, T.; Vuoskoski, J.K. A Review of Music and Emotion Studies: Approaches, Emotion Models, and Stimuli. Music Percept. 2013, 30, 307–340. [Google Scholar] [CrossRef]

- Panda, R.; Rocha, B.; Paiva, R.P. Music Emotion Recognition with Standard and Melodic Audio Features. Appl. Artif. Intell. 2015, 30, 313–334. [Google Scholar] [CrossRef]

- Downie, J.S.; Ehmann, A.F.; Bay, M.; Jones, M.C. The Music Information Retrieval Evaluation Exchange: Some Observations and Insights. In Advances in Music Information Retrieval; Springer: Berlin/Heidelberg, Germany, 2010; pp. 93–115. [Google Scholar]

- Schmidt, E.M.; Turnbull, D.; Kim, Y.E. Feature Selection for Content-Based, Time-Varying Musical Emotion Regression. In Proceedings of the International Conference on Multimedia Information Retrieval, Philadelphia, PA, USA, 29–31 March 2010; Association for Computing Machinery: New York, NY, USA; pp. 267–274. [Google Scholar]

- Liu, Y.; Liu, Y.; Zhao, Y.; Hua, K.A. What Strikes the Strings of Your Heart?—Feature Mining for Music Emotion Analysis. IEEE Trans. Affect. Comput. 2015, 6, 247–260. [Google Scholar] [CrossRef]

- Zhang, J.L.; Huang, X.L.; Yang, L.F.; Xu, Y.; Sun, S.T. Feature Selection and Feature Learning in Arousal Dimension of Music Emotion by Using Shrinkage Methods. Multimed. Syst. 2017, 23, 251–264. [Google Scholar] [CrossRef]

- Grekow, J. Audio Features Dedicated to the Detection and Tracking of Arousal and Valence in Musical Compositions. J. Inf. Telecommun. 2018, 2, 322–333. [Google Scholar] [CrossRef] [Green Version]

- Rolls, E.T. Neurobiological Foundations of Aesthetics and Art. New Ideas Psychol. 2017, 47, 121–135. [Google Scholar] [CrossRef] [Green Version]

- Kılıç, B.; Aydın, S. Classification of Contrasting Discrete Emotional States Indicated by EEG Based Graph Theoretical Network Measures. Neuroinformatics 2022, 1–15. [Google Scholar] [CrossRef] [PubMed]

- Jeon, B.; Kim, C.; Kim, A.; Kim, D.; Park, J.; Ha, J.-W. Music Emotion Recognition via End-to-End Multimodal Neural Networks. In Proceedings of the RecSys’17 Posters Proceedings, Como, Italy, 27–31 July 2017. [Google Scholar]

- Hizlisoy, S.; Yildirim, S.; Tufekci, Z. Music emotion recognition using convolutional long short term memory deep neural networks. Eng. Sci. Technol. Int. J. 2020, 24, 760–767. [Google Scholar] [CrossRef]

- Orjesek, R.; Jarina, R.; Chmulik, M. End-to-End Music Emotion Variation Detection using Iteratively Reconstructed Deep Features. Multimed. Tools Appl. 2022, 81, 5017–5031. [Google Scholar] [CrossRef]

- Lee, J.H.; Hill, T.; Work, L. What Does Music Mood Mean for Real Users? In Proceedings of the iConference, Toronto, ON, Canada, 7–10 February 2012; pp. 112–119. [Google Scholar]

- Lee, J.H.; Hu, X. Cross-Cultural Similarities and Differences in Music Mood Perception. In Proceedings of the iConference, Berlin, Germany, 4–7 March 2014; pp. 259–269. [Google Scholar]

- Wu, W.; Xie, L. Discriminating Mood Taxonomy of Chinese Traditional Music and Western Classical Music with Content Feature Sets. In Proceedings of the IEEE Congress on Image and Signal Processing, Sanya, China, 27–30 May 2008; pp. 148–152. [Google Scholar]

- Hu, X.; Lee, J.H.; Choi, K.; Downie, J.S. A Cross-Cultural Study on the Mood of K-POP Songs. In Proceedings of the 15th International Society for Music Information Retrieval Conference (ISMIR), Taipei, Taiwan, 27–31 October 2014; pp. 385–390. [Google Scholar]

- Yang, Y.H.; Hu, X. Cross-Cultural Music Mood Classification: A Comparison on English and Chinese Songs. In Proceedings of the 13th International Society for Music Information Retrieval Conference (ISMIR), Porto, Portugal, 8–12 October 2012; pp. 19–24. [Google Scholar]

- Yang, Y.H.; Lin, Y.C.; Su, Y.F.; Chen, H.H. A regression approach to music emotion recognition. IEEE Trans. Audio Speech Lang. Process. 2008, 16, 448–457. [Google Scholar] [CrossRef]

- Hu, X.; Yang, Y.H. Cross-Dataset and Cross-Cultural Music Mood Prediction: A Case on Western and Chinese Pop Songs. IEEE Trans. Affect. Comput. 2017, 8, 228–240. [Google Scholar]

- Soleymani, M.; Caro, M.N.; Schmidt, E.M.; Sha, C.Y.; Yang, Y.H. 1000 Songs for Emotional Analysis of Music. In Proceedings of the ACM International Workshop on Crowdsourcing for Multimedia, Barcelona, Spain, 21–25 October 2013; pp. 1–6. [Google Scholar]

- Eerola, T.; Vuoskoski, J.K. A Comparison of the Discrete and Dimensional Models of Emotion in Music. Psychol. Music 2010, 39, 18–49. [Google Scholar]

- Schimmack, U.; Grob, A. Dimensional Models of Core Affect: A Quantitative Comparison by Means of Structural Equation Modeling. Eur. J. Pers. 2000, 14, 325–345. [Google Scholar]

- Lerch, A. An Introduction to Audio Content Analysis: Applications in Signal Processing and Music Informatics; Wiley-IEEE Press: Piscataway, NJ, USA, 2012. [Google Scholar]

- Vijayavani, E.; Suganya, P.; Lavanya, S.; Elakiya, E. Emotion Recognition Based on MFCC Features using SVM. Int. J. Adv. Res. Comput. Sci. Manag. Stud. 2014, 2, 31–36. [Google Scholar]

- Panda, R.; Malheiro, R.; Paiva, R. Novel Audio Features for Music Emotion Recognition. IEEE Trans. Affect. Comput. 2018, 11, 614–626. [Google Scholar] [CrossRef]

- Homepage—Essentia 2.1-Beta6-Dev Documentation. Available online: https://essentia.upf.edu (accessed on 5 April 2020).

- Lartillot, O.; Toiviainen, P. A MATLAB Toolbox for Musical Feature Extraction from Audio. In Proceedings of the 10th International Conference on Digital Audio Effects (DAFx), Bordeaux, France, 10–15 September 2007; pp. 237–244. [Google Scholar]

- Zwicker, E.; Feldtkeller, R. Das Ohr als Nachrichtenempfänger, 2nd ed.; S. Hirzel Verlag: Stuttgart, Germany, 1967. [Google Scholar]

- Moore, B. An Introduction to the Psychology of Hearing, 4th ed.; Academic Press: London, UK, 1997. [Google Scholar]

- Eyben, F. Real-Time Speech and Music Classification by Large Audio Feature Space Extraction; Springer: Cham, Switzerland, 2016. [Google Scholar]

- Streich, S.; Herrera, P. Detrended Fluctuation Analysis of Music Signals: Danceability Estimation and further Semantic Characterization. In Proceedings of the AES 118th Convention, Barcelona, Spain, 28–31 May; 2005; pp. 765–773. [Google Scholar]

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-Learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830. [Google Scholar]

- Jiang, D.; Lu, L.; Zhang, H.; Tao, J.; Cai, L. Music Type Classification by Spectral Contrast Feature. In Proceedings of the IEEE International Conference on Multimedia and Expo (ICME’02), Lausanne, Switzerland, 26–29 August 2002; pp. 113–116. [Google Scholar]

- Harte, C.; Sandler, M.; Gasser, M. Detecting Harmonic Change in Musical Audio. In Proceedings of the ACM International Multimedia Conference and Exhibition, Santa Barbara, CA, USA, 23–27 October 2006; pp. 21–26. [Google Scholar]

- Brossier, P.M.; Bello, J.P.; Plumbley, M.D. Fast Labelling of Notes in Music Signals. In Proceedings of the 5th International Conference on Music Information Retrieval (ISMIR), Barcelona, Spain, 10–14 October 2004; pp. 331–336. [Google Scholar]

- Laurier, C.; Meyers, O.; Serrà, J.; Blech, M.; Herrera, P.; Serra, X. Indexing Music by Mood: Design and Integration of an Automatic Content-Based Annotator. Multimed. Tools Appl. 2009, 48, 161–184. [Google Scholar] [CrossRef] [Green Version]

- Sethares, W.A. Tuning, Timber, Spectrum, Scale; Springer: Berlin/Heidelberg, Germany, 2004. [Google Scholar]

- Zhou, W. A study on change of the aesthetics of timbre of Chinese pop music. In Proceedings of the 1st Asia International Symposium on Arts, Literature, Language and Culture, Fuzhou, China, 25–26 May 2019; pp. 105–110. [Google Scholar]

- Xin, W.; Zihou, M. The consonance evaluation method of Chinese plucking instruments. Acta Acust. 2013, 38, 486–492. [Google Scholar]

| Category | Feature | Dimensionality |

|---|---|---|

| Timbre | Spectral characteristics—Centroid, Complexity, Decrease, Entropy, Skewness, Kurtosis, RMS, Rolloff, Strongpeak, Spread, Contrast Coeffs (1–6), Irregularity, Spectral Flux, High Frequency Content (HFC) | 78 |

| Spectral characteristics of ERB Bands—Crest, Flatness, Kurtosis, Skewness, Spread | 20 | |

| Temporal characteristics—Lowenergy, ZCR | 5 | |

| Mel-frequency cepstral coefficients (MFCC) | 52 | |

| Perception characteristic—Dissonance | 4 | |

| Pitch | Harmonic Pitch Class Profile (HPCP), HPCP Entropy | 148 |

| Harmonic Change Detection Function (HCDF) | 6 | |

| Tuning-related characteristics—Tuning Diatonic Strength, Tuning Equal Tempered Deviation, Tuning Frequency, Tuning Nontempered Energy Ratio | 4 | |

| Loudness | Dynamic Complexity | 1 |

| Silence Rate (30 dB/60 dB) | 8 | |

| ERB Bands Energy | 160 | |

| Spectral Bands Energy—High, Middle High, Middle Low, Low | 16 | |

| Rhythm | Onset Rate, BPM, Tempo | 6 |

| BPM Histogram—First Peak BPM, First Peak Spread, First Weight, Second Peak BPM, Second Peak Spread, Second Weight | 24 | |

| Beats Loudness Band | 24 | |

| Danceability | 1 |

| Dataset/Classifier | LR | SVR | XGBoost | ERT | KNN |

|---|---|---|---|---|---|

| Valence | −1.37 | −0.01 | 0.62 | 0.66 | −0.15 |

| Arousal | −1.37 | −0.01 | 0.39 | 0.52 | −0.11 |

| Dataset | Method | StandardScaler | MinMaxScaler | MaxAbsScaler | QTU | QTG |

|---|---|---|---|---|---|---|

| Western Music Valence | Filter-C | 0.5743 | 0.5623 | 0.6289 | 0.6492 | 0.6725 |

| Filter-I | 0.6520 | 0.6401 | 0.6698 | 0.6244 | 0.6693 | |

| Wrapper | 0.6442 | 0.6697 | 0.6804 | 0.6614 | 0.6232 | |

| Embedded | 0.6718 | 0.6798 | 0.6772 | 0.6497 | 0.6520 | |

| Western Music Arousal | Filter-C | 0.6164 | 0.5268 | 0.5277 | 0.5178 | 0.5917 |

| Filter-I | 0.6140 | 0.6037 | 0.5326 | 0.5861 | 0.5822 | |

| Wrapper | 0.5377 | 0.6193 | 0.5967 | 0.6036 | 0.5797 | |

| Embedded | 0.5917 | 0.5659 | 0.5927 | 0.5931 | 0.5821 | |

| Chinese Music Valence | Filter-C | 0.5851 | 0.6055 | 0.6248 | 0.5964 | 0.5658 |

| Filter-I | 0.5561 | 0.5895 | 0.6084 | 0.5161 | 0.6247 | |

| Wrapper | 0.6101 | 0.6424 | 0.6707 | 0.6747 | 0.6189 | |

| Embedded | 0.6628 | 0.5429 | 0.6306 | 0.6909 | 0.6737 | |

| Chinese Music Arousal | Filter-C | 0.6661 | 0.6280 | 0.6712 | 0.6155 | 0.5823 |

| Filter-I | 0.5746 | 0.4715 | 0.6027 | 0.6325 | 0.5890 | |

| Wrapper | 0.6786 | 0.6654 | 0.6821 | 0.6589 | 0.6344 | |

| Embedded | 0.6113 | 0.6222 | 0.6146 | 0.6787 | 0.6069 |

| Data Subset | Global Optimal Score (R2) | Global Optimal Feature Number | Local Optimal Score (R2) | Local Optimal Feature Number |

|---|---|---|---|---|

| Western Classical Music—V | 0.695 | 42 | 0.674 | 21 |

| Western Classical Music—A | 0.572 | 38 | 0.560 | 17 |

| Chinese Classical Music—V | 0.621 | 121 | 0.607 | 21 |

| Chinese Classical Music—A | 0.673 | 76 | 0.652 | 22 |

| Data Subset | Feature Sets |

|---|---|

| Western Classical Music—Valence | HCDF_mean, HPCP_dmean_28, Onset_Rate, MFCC_dmean_1, MFCC_dmean_2, Spectral_complexity_dmean, Spectral_flux _mean Spectral_complexity_dvar, Spectral_complexity_mean, Spectral_complexity_var, Spectral_entropy_dmean, Spectral_entropy_mean, Spectral_contrast_coeffs_dmean_1, Spectral_contrast_coeffs_dmean_2, Spectral_contrast_coeffs_dmean_6, Spectral_contrast_coeffs_dvar_1, Spectral_contrast_coeffs_dvar_6, Spectral_contrast_coeffs_mean_6, Dissonance_mean, Beats_loudness_band_ratio_mean_5, Spectral_centroid_dmean |

| Western Classical Music—Arousal | Tuning_diatonic_strength, Tuning_equal_tempered_deviation, Spectral_contrast_coeffs_mean_2, Spectral_contrast_coeffs_mean_3, Spectral_contrast_coeffs_dmean_2, Spectral_contrast_coeffs_dmean_6, Spectral_contrast_coeffs_dvar_6, Spectral_skewness_dvar, Spectral_complexity_var, HPCP_entropy_dmean, HPCP_dmean_12, HPCP_dvar_12, HPCP_entropy_mean, HCDF_Mean, HCDF_PeriodAmp, MFCC_var_5, MFCC_var_6 |

| Chinese Classical Music—Valence | Onset_rate, HCDF_mean, Spectral_strongpeak_dmean, Spectral_complexity_dmean, HFC_dmean, Spectral_flux_mean, Spectral_flux_dmean, Spectral_contrast_coeffs_mean_2, Spectral_contrast_coeffs_dmean_4, Spectral_strongpeak_dvar, Spectral_strongpeak_var, Beats_loudness_band_ratio_mean_3, BPM_histogram_first_peak_weight_mean, Silence_rate_60 dB_var, Dynamic_complexity, MFCC_dmean_2, MFCC_mean_6, MFCC_dmean_9, MFCC_dvar_13, Dissonance_mean |

| Chinese Classical Music—Arousal | Spectral_flux_mean, Spectral_complexity_dmean, Dissonance _mean, Dissonance_var, HCDF_PeriodAmp, Spectral_entropy_mean, AHFC_dmean, Spectral_skewness_mean, Spectral_strongpeak_dmean, Spectral_contrast_coeffs_mean_2, Onset_rate, HCDF_mean, ERBbands_mean_30, ERBbands_spread_dmean, Silence_rate_60 dB_var, HPCP_entropy_mean, HPCP_dvar_1, HPCP_dmean_1, MFCC_dmean_2, MFCC_mean_1, MFCC_dmean_7, MFCC_dmean_8 |

| 1 | 2 | 3 | 4 | 5 | |

|---|---|---|---|---|---|

| W-V | Spectral contrast | Spectral complexity | HCDF | MFCC | Onset rate |

| 21.9% | 18.5% | 15.8% | 10.6% | 9.2% | |

| W-A | Spectral contrast | HPCP | HCDF | Tuning diatonic strength | MFCC |

| 32.8% | 24.6% | 11.6% | 9.1% | 7.7% | |

| C-V | Spectral strongpeak | Spectral contrast | MFCC | Spectral flux | Onset rate |

| 13.8% | 13.6% | 13.3% | 9.5% | 8.5% | |

| C-A | Dissonance | MFCC | HCDF | Spectral flux | Spectral complexity |

| 13.6% | 13.0% | 11.8% | 9.5% | 9.4% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, X.; Wang, L.; Xie, L. Comparison and Analysis of Acoustic Features of Western and Chinese Classical Music Emotion Recognition Based on V-A Model. Appl. Sci. 2022, 12, 5787. https://doi.org/10.3390/app12125787

Wang X, Wang L, Xie L. Comparison and Analysis of Acoustic Features of Western and Chinese Classical Music Emotion Recognition Based on V-A Model. Applied Sciences. 2022; 12(12):5787. https://doi.org/10.3390/app12125787

Chicago/Turabian StyleWang, Xin, Li Wang, and Lingyun Xie. 2022. "Comparison and Analysis of Acoustic Features of Western and Chinese Classical Music Emotion Recognition Based on V-A Model" Applied Sciences 12, no. 12: 5787. https://doi.org/10.3390/app12125787

APA StyleWang, X., Wang, L., & Xie, L. (2022). Comparison and Analysis of Acoustic Features of Western and Chinese Classical Music Emotion Recognition Based on V-A Model. Applied Sciences, 12(12), 5787. https://doi.org/10.3390/app12125787