Abstract

Artificial intelligence is applied to many fields and contributes to many important applications and research areas, such as intelligent data processing, natural language processing, autonomous vehicles, and robots. The adoption of artificial intelligence in several fields has been the subject of many research papers. Recently, the space sector has also become a field where artificial intelligence is receiving significant attention. This paper surveys the most relevant problems in space applications that have been addressed with artificial intelligence techniques. We focus on applications related to mission design, space exploration, and Earth observation, and we provide a taxonomy of the current challenges. Moreover, we present and discuss the solutions proposed for each challenge so that researchers can identify and compare the state of the art in this context.

1. Introduction

Over the past 60 years, artificial intelligence (AI) has been used in several applications, such as automated reasoning, intelligent systems, knowledge representation, and game theory, to cite some famous examples. However, recent advances in computational power, the amount of available data, and new algorithms have highlighted that AI can play a crucial role in the digital transformation of society and must be a priority for every country. For this reason, the research community has devoted much effort to designing and developing new AI techniques in many strategic fields, such as cybersecurity, e-Health, military applications, and smart cities.

The importance of AI is witnessed by the numerous survey papers describing recent advances in AI in almost all fields. However, we have found that in recent years, AI has been strongly exploited in an important field that is not covered by any specific survey: space applications. This field includes technologies for space vehicles (spacecraft, satellites, etc.) and their communications and services for terrestrial use (weather forecasting, remote sensing, etc.).

In all these cases, AI-based solutions have already provided, and can continue to provide, many advantages.

To the best of our knowledge, no survey published in the last ten years has focused on this topic. Indeed, Reference [1] focuses only on a specific branch of AI (i.e., robotics) and provides a survey on robotics and autonomous systems for space exploration. Reference [2] is limited to an interesting but very specific field of space applications, namely satellite communication. The only survey remotely related to this topic is Reference [3], which focuses on the following specific topics: autonomous planning and scheduling of operations, self-awareness, anomaly detection, on-board data analysis, and on-board operations and processing of Earth-observation data. However, these topics represent a specific and limited aspect of space applications (this paper had received 12 citations more than two years after its publication).

In this paper, we provide a survey of the current challenges in space applications and discuss the most relevant state-of-the-art proposals. To present a self-contained discussion on the topic, we start by briefly introducing the major branches of AI exploited in this field. Then, we identify thirteen challenges related to space applications, grouped into three main categories: mission design and planning, space exploration, and Earth observation. Each challenge is defined and discussed, and the most effective AI-based solutions proposed in the literature are presented. To collect all relevant papers on this topic, we used the Google Scholar archive and searched for the terms “space applications” and “artificial intelligence”. We extended this search by also replacing the term artificial intelligence with its main branches (such as machine learning and neural networks). These searches returned more than 200 papers, which were filtered by excluding unpublished works (e.g., theses) and papers published by low-relevance publishers. Then, after a manual check, we excluded papers considered out of scope for this survey. This resulted in more than one hundred papers published in the last five years (2017–2022), which are presented and discussed as solutions to space application challenges. The organization of this paper allows the reader both to find the most recent solutions proposed for a given problem and to identify the problems in which a given AI-based technique has been exploited.

The structure of the paper is as follows. In Section 2, we briefly provide the background on AI needed to classify the different techniques. Then, in Section 3, we present the current challenges in space applications that AI is helping to address. In Section 4, we discuss the most recent solutions proposed for addressing each challenge. In Section 5, we provide a high-level discussion about the problems and the solutions from recent years in the field of space applications. Finally, in Section 6, we draw our conclusions.

2. Background on AI

This section briefly describes the major branches of AI exploited in the literature to solve problems in space applications. These techniques are divided into macro-categories.

The first category is one of the most common forms of machine learning: supervised learning. This type of algorithm is trained on labeled datasets to predict or classify new input data [4]. A typical application of supervised learning is image recognition, in which the model receives a set of labeled images and learns to distinguish their common attributes. An example of a supervised learning technique is the support vector machine [5], a model used for classification and regression problems. In its basic, linear form, this algorithm finds the line (a hyperplane, in higher dimensions) that separates the data into classes with the largest possible margin. Another technique is random forest, which builds decision trees on different random samples of the data (which may also include continuous variables) and takes their majority vote for classification and their average for regression [6,7].
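To make the idea of a separating line concrete, the following is a minimal sketch of a soft-margin linear SVM in plain Python, trained by stochastic subgradient descent on the regularized hinge loss. This is only one of several ways to fit an SVM (libraries typically use dedicated quadratic-programming or coordinate-descent solvers), and the function names, hyperparameters (`lam`, `lr`, `epochs`), and toy data are illustrative assumptions.

```python
import random


def train_linear_svm(X, y, lam=0.01, lr=0.1, epochs=200, seed=0):
    """Soft-margin linear SVM via stochastic subgradient descent.

    Minimizes lam/2 * ||w||^2 + mean(max(0, 1 - y_i * (w . x_i + b)))
    with labels y_i in {-1, +1}.
    """
    rng = random.Random(seed)
    w = [0.0] * len(X[0])
    b = 0.0
    order = list(range(len(X)))
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            margin = y[i] * (sum(wj * xj for wj, xj in zip(w, X[i])) + b)
            grad_w = [lam * wj for wj in w]  # gradient of the regularizer
            grad_b = 0.0
            if margin < 1:  # inside the margin or misclassified: hinge term is active
                grad_w = [gw - y[i] * xj for gw, xj in zip(grad_w, X[i])]
                grad_b = -y[i]
            w = [wj - lr * gw for wj, gw in zip(w, grad_w)]
            b -= lr * grad_b
    return w, b


def predict(w, b, x):
    """Classify x by the side of the hyperplane w . x + b = 0 it falls on."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b >= 0 else -1


# Toy, linearly separable data (hypothetical)
X = [(2, 2), (3, 3), (2, 3), (-2, -2), (-3, -3), (-2, -3)]
y = [1, 1, 1, -1, -1, -1]
w, b = train_linear_svm(X, y)
print([predict(w, b, x) for x in X])
```

The regularization weight `lam` trades margin width against training errors; kernel SVMs replace the dot product to obtain non-linear boundaries.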

A second category is unsupervised learning, in which algorithms learn from unlabeled data and can discover hidden patterns without any human intervention in data cataloging [8]. Clustering is an unsupervised learning technique that aims to group data points into clusters based on their similarities or differences. There are several clustering algorithms: for example, K-means clustering [9] is used mainly for image segmentation and compression, market segmentation, or document clustering. This algorithm assigns each data point to one of K clusters, where K is the number of groups chosen in advance, based on the point’s distance from each cluster’s centroid. Another interesting unsupervised learning technique is dimensionality reduction, whose aim is to reduce the complexity of a problem. It reduces the number of random variables under consideration by transforming data from a high-dimensional space into a low-dimensional space while preserving the meaningful properties of the original data.
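A minimal sketch of K-means (Lloyd’s algorithm) in plain Python follows. It alternates between assigning each point to its nearest centroid and moving each centroid to the mean of its assigned points. The random initialization, iteration cap, and toy points are assumptions made for illustration.

```python
import math
import random


def _dist(p, q):
    """Euclidean distance between two points."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


def kmeans(points, k, iters=100, seed=0):
    """Lloyd's algorithm: returns (centroids, label of each point)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # initialize with k distinct data points
    for _ in range(iters):
        # Assignment step: each point joins the cluster of its nearest centroid
        clusters = [[] for _ in range(k)]
        for p in points:
            j = min(range(k), key=lambda c: _dist(p, centroids[c]))
            clusters[j].append(p)
        # Update step: move each centroid to the mean of its members
        new_centroids = []
        for j, members in enumerate(clusters):
            if members:
                new_centroids.append(tuple(sum(c) / len(members) for c in zip(*members)))
            else:
                new_centroids.append(centroids[j])  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break  # converged: assignments no longer change the centroids
        centroids = new_centroids
    labels = [min(range(k), key=lambda c: _dist(p, centroids[c])) for p in points]
    return centroids, labels


points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
centroids, labels = kmeans(points, k=2)
print(centroids, labels)
```

The result depends on the initialization; in practice the algorithm is usually restarted several times and the solution with the lowest within-cluster distance is kept.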

Semi-supervised learning stands between supervised and unsupervised learning and combines them [10]. These algorithms use a small amount of labeled data and a large amount of unlabeled data: they learn from the labeled data and extract additional knowledge from the unlabeled data. An example is a document classifier for which large numbers of labeled text documents are hard to obtain. A small labeled training set is used to train the model, which is then applied to an unlabeled dataset to predict its outputs.
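Self-training with pseudo-labels is one simple semi-supervised scheme that matches this description. The sketch below is an illustrative assumption, not a method taken from the cited works. It fits a nearest-centroid classifier on the few labeled points and adds the unlabeled points it classifies confidently. Here "confident" means that the distance to the nearest centroid is at most half the distance to the second nearest, an arbitrary threshold. The classifier is then refitted.

```python
import math


def _dist(p, q):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


def _centroids(points, labels):
    """Mean point of each class."""
    groups = {}
    for p, lab in zip(points, labels):
        groups.setdefault(lab, []).append(p)
    return {lab: [sum(c) / len(ps) for c in zip(*ps)] for lab, ps in groups.items()}


def self_train(labeled, labels, unlabeled, rounds=3, max_ratio=0.5):
    """Nearest-centroid self-training; returns (class centroids, number of pseudo-labels)."""
    X, y = list(labeled), list(labels)
    pool = list(unlabeled)
    n_pseudo = 0
    for _ in range(rounds):
        cents = _centroids(X, y)
        keep = []
        for p in pool:
            ranked = sorted((_dist(p, c), lab) for lab, c in cents.items())
            (d1, lab1), (d2, _) = ranked[0], ranked[1]
            if d2 > 0 and d1 / d2 <= max_ratio:  # confident prediction
                X.append(p)
                y.append(lab1)  # pseudo-label joins the training set
                n_pseudo += 1
            else:
                keep.append(p)
        if len(keep) == len(pool):
            break  # nothing confident left to add
        pool = keep
    return _centroids(X, y), n_pseudo


def predict(centroids, p):
    return min(centroids, key=lambda lab: _dist(p, centroids[lab]))


cents, n_pseudo = self_train(
    labeled=[(0, 0), (10, 10)], labels=["a", "b"],
    unlabeled=[(1, 1), (2, 0), (9, 9), (11, 10), (5, 5)],
)
print(n_pseudo, predict(cents, (1, 0)), predict(cents, (10, 9)))
```

The ambiguous point (5, 5) is never pseudo-labeled, which limits the risk of the model reinforcing its own mistakes.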

Reinforcement learning is quite different from the previously described paradigms because it requires neither a labeled dataset nor a supervisor. Instead, an agent observes and acts in a complex environment and, through these interactions, learns the behavior that maximizes its cumulative reward. Therefore, any problem in which an agent must interact with an uncertain environment to meet a specific goal is a potential application of reinforcement learning [11]. For this reason, this technique is widely used in robotics and autonomous driving.
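As an illustration of the agent-environment loop, the sketch below runs tabular Q-learning, a classic reinforcement learning algorithm, on a hypothetical five-cell corridor where the agent is rewarded only for reaching the rightmost cell. The environment, reward, and hyperparameters (`alpha`, `gamma`, `epsilon`) are assumptions chosen to keep the example small.

```python
import random


def q_learning(n_states=5, episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning on a 1D corridor; the episode ends at the rightmost cell."""
    rng = random.Random(seed)
    moves = (-1, +1)  # action 0 = move left, action 1 = move right
    Q = [[0.0, 0.0] for _ in range(n_states)]
    goal = n_states - 1
    for _ in range(episodes):
        s = 0
        while s != goal:
            # Epsilon-greedy action selection (ties are broken at random)
            if rng.random() < epsilon or Q[s][0] == Q[s][1]:
                a = rng.randrange(2)
            else:
                a = 0 if Q[s][0] > Q[s][1] else 1
            s_next = min(max(s + moves[a], 0), goal)  # walls at both ends
            r = 1.0 if s_next == goal else 0.0
            # Temporal-difference target: reward plus discounted best future value
            target = r if s_next == goal else r + gamma * max(Q[s_next])
            Q[s][a] += alpha * (target - Q[s][a])
            s = s_next
    return Q


Q = q_learning()
policy = ["right" if q[1] > q[0] else "left" for q in Q[:-1]]
print(policy)
```

The agent never sees a labeled "correct move": the preference for moving right emerges only from the delayed reward propagating backward through the Q-table.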

Neural networks are a subset of machine learning inspired by the way biological neurons communicate with each other. They learn from large amounts of data, discovering intricate structure while attempting to simulate the behavior of the human brain. The backpropagation algorithm is the basis of how the model adjusts the internal parameters used to compute the representation in each layer from the representation in the previous layer [12].
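The sketch below shows backpropagation in a network with one hidden layer of sigmoid units. It is trained with full-batch gradient descent on the mean squared error for the XOR function. Layer sizes, learning rate, and the task are illustrative assumptions; practical systems rely on automatic differentiation frameworks rather than hand-written gradients.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_mlp(data, hidden=3, lr=0.5, epochs=2000, seed=0):
    """One-hidden-layer network trained with backpropagation (MSE loss).

    Returns (initial loss, final loss, forward function).
    """
    rng = random.Random(seed)
    n_in = len(data[0][0])
    W1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    W2 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b2 = 0.0

    def forward(x):
        h = [sigmoid(sum(w * xi for w, xi in zip(W1[j], x)) + b1[j]) for j in range(hidden)]
        return h, sigmoid(sum(w * hj for w, hj in zip(W2, h)) + b2)

    def loss():
        return sum((forward(x)[1] - t) ** 2 for x, t in data) / len(data)

    initial = loss()
    n = len(data)
    for _ in range(epochs):
        gW1 = [[0.0] * n_in for _ in range(hidden)]
        gb1 = [0.0] * hidden
        gW2 = [0.0] * hidden
        gb2 = 0.0
        for x, t in data:
            h, out = forward(x)
            d_out = 2 * (out - t) * out * (1 - out)  # dLoss/d(pre-activation of output)
            gb2 += d_out
            for j in range(hidden):
                gW2[j] += d_out * h[j]
                # Propagate the error back through W2 and the hidden sigmoid
                d_h = d_out * W2[j] * h[j] * (1 - h[j])
                gb1[j] += d_h
                for i in range(n_in):
                    gW1[j][i] += d_h * x[i]
        # Gradient-descent update with the batch-averaged gradients
        for j in range(hidden):
            W2[j] -= lr * gW2[j] / n
            b1[j] -= lr * gb1[j] / n
            for i in range(n_in):
                W1[j][i] -= lr * gW1[j][i] / n
        b2 -= lr * gb2 / n
    return initial, loss(), forward


xor = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
initial, final, forward = train_mlp(xor)
print(f"loss {initial:.3f} -> {final:.3f}")
print([round(forward(x)[1], 2) for x, _ in xor])
```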

Convolutional neural networks (CNNs) are mainly used to analyze visual images. CNNs are structured as a series of stages: the first few stages are composed of two types of layers, namely convolutional layers and pooling layers. These layers act as a feature extraction tool that derives the base-level features from the images fed into the model. Then, the final stages, i.e., the fully connected layer and the output layer, use the output of the feature extraction layers to predict a class for the image based on the extracted features. More generally, these networks are designed to process data that come in the form of multiple arrays, including language, images or audio spectrograms, video, or volumetric images.
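The two feature-extraction operations can be sketched in a few lines of plain Python. As in most deep learning libraries, the "convolution" below is technically a cross-correlation, here with no padding and stride 1. It is followed by a ReLU activation and non-overlapping 2×2 max pooling. The kernel and toy image are assumptions chosen so that the filter responds to a vertical edge; in a real CNN, kernel weights are learned.

```python
def conv2d(image, kernel):
    """Valid (no padding, stride 1) 2D cross-correlation, as in CNN convolutional layers."""
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + u][j + v] * kernel[u][v] for u in range(kh) for v in range(kw))
             for j in range(ow)]
            for i in range(oh)]


def relu(fmap):
    """Element-wise ReLU non-linearity."""
    return [[max(0.0, x) for x in row] for row in fmap]


def max_pool(fmap, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    return [[max(fmap[i + u][j + v] for u in range(size) for v in range(size))
             for j in range(0, len(fmap[0]) - size + 1, size)]
            for i in range(0, len(fmap) - size + 1, size)]


image = [[0, 0, 1, 1, 1] for _ in range(5)]  # dark left half, bright right half
kernel = [[-1, 1],
          [-1, 1]]                            # responds to dark-to-bright vertical edges
features = max_pool(relu(conv2d(image, kernel)))
print(features)
```

The pooled map keeps a strong response in the left column, where the edge is, and discards its exact position within each 2×2 window, which is the source of the small translation invariance attributed to pooling layers.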

Recurrent neural networks (RNNs) are used to interpret sequential and temporal information. These networks can analyze time series, audio, text, and speech because they capture the dependencies among the elements of a sequence. RNNs process an input sequence one element at a time, maintaining a hidden state that carries information about the history of all the past elements of the sequence [13]. Furthermore, recurrent networks can compress the whole history into a low-dimensional space and form a short-term memory [14].
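A minimal sketch of an Elman-style RNN forward pass illustrates this. It assumes a tanh activation and hand-picked weights; in practice the weights are learned with backpropagation through time. The example shows how the hidden state carries information from earlier elements: two sequences that end with the same input produce different final states when their histories differ.

```python
import math


def rnn_forward(sequence, W_x, W_h, b):
    """Elman RNN: h_t = tanh(W_x x_t + W_h h_{t-1} + b), one element at a time."""
    n = len(b)
    h = [0.0] * n  # initial hidden state
    states = []
    for x in sequence:
        # The comprehension reads the previous h before it is rebound
        h = [math.tanh(sum(W_x[i][k] * x[k] for k in range(len(x)))
                       + sum(W_h[i][j] * h[j] for j in range(n))
                       + b[i])
             for i in range(n)]
        states.append(h)
    return states


# Hypothetical weights: 1 input feature, 2 hidden units
W_x = [[1.0], [0.5]]
W_h = [[0.5, 0.0], [0.0, 0.5]]
b = [0.0, 0.0]

with_history = rnn_forward([[1.0], [0.0]], W_x, W_h, b)
without_history = rnn_forward([[0.0], [0.0]], W_x, W_h, b)
print(with_history[-1], without_history[-1])
```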

Deep learning is a branch of machine learning implementing computational models composed of multiple processing layers able to learn representations of data with multiple levels of abstraction [13]. Generally, neural networks and deep learning are improving the state of the art in image recognition, speech recognition, and natural language processing.

Natural language processing (NLP) [15] focuses on the analysis of language structure in order to develop systems for speech synthesis and recognition. These systems are used in several applications in which a machine must understand basic human interactions, such as translation engines, robot assistants, and sentiment analysis.

Furthermore, fuzzy logic reflects human reasoning in decision-making and includes all the intermediate possibilities between the binary values yes and no [16]. This technique represents uncertain information by assigning each hypothesis a degree of truth between 0 and 1. Key application areas of fuzzy logic are automotive systems and electronic and environmental devices.
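To illustrate degrees of truth, the following sketch assumes a hypothetical fan controller. It uses triangular membership functions for "cold", "warm", and "hot" temperatures and three rules, combined with zero-order Sugeno (weighted-average) inference. The set boundaries and output levels are arbitrary assumptions.

```python
def triangular(x, a, b, c):
    """Membership degree in [0, 1]: 0 outside (a, c), rising to 1 at the peak b."""
    if x <= a or x >= c:
        return 0.0
    return (x - a) / (b - a) if x <= b else (c - x) / (c - b)


# Hypothetical fuzzy sets for temperature (deg C) and the fan speed (%) each rule outputs
RULES = [
    ((-20, 0, 20), 0.0),    # IF temperature is cold THEN fan speed is 0 %
    ((10, 25, 40), 50.0),   # IF temperature is warm THEN fan speed is 50 %
    ((30, 50, 70), 100.0),  # IF temperature is hot  THEN fan speed is 100 %
]


def fan_speed(temp):
    """Zero-order Sugeno inference: average of rule outputs weighted by firing strength."""
    strengths = [triangular(temp, *fuzzy_set) for fuzzy_set, _ in RULES]
    total = sum(strengths)
    if total == 0:
        raise ValueError("no rule fires for this input")
    return sum(s * out for s, (_, out) in zip(strengths, RULES)) / total


print(fan_speed(15))  # 15 deg C is partly "cold" (0.25) and partly "warm" (1/3)
print(fan_speed(25))  # fully "warm"
```

Because 15 °C belongs partly to two sets, the output blends both rules instead of switching abruptly between 0 % and 50 %.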

We conclude this background on AI branches by introducing expert systems (ESs). Originally defined as computer programs able to solve complex problems, ESs can replace human experts in decision-making. These systems draw on the knowledge stored in their knowledge base and are called experts because they encode the expert knowledge of a specific domain and can solve complex problems within it. Their performance, as well as their accuracy and efficiency, improves as the amount of available knowledge increases [17]. An ES responds to complex queries quickly and in a human-understandable way. Indeed, it takes inputs in human language and provides outputs in the same form.
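A rule-based expert system can be sketched as a forward-chaining inference engine. Rules whose premises are all known facts fire and add their conclusions, and the process repeats until nothing new can be derived. The satellite-diagnosis facts and rules below are hypothetical. The recorded trace of fired rules illustrates how an ES can explain its answer.

```python
def forward_chain(facts, rules):
    """Fire rules whose premises are all known until no new fact can be derived.

    rules: list of (premises, conclusion) pairs. Returns (known facts, fired-rule trace).
    """
    known = set(facts)
    fired = []
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and all(p in known for p in premises):
                known.add(conclusion)
                fired.append((premises, conclusion))  # trace used to explain the result
                changed = True
    return known, fired


# Hypothetical knowledge base for a satellite power subsystem
RULES = [
    (("battery_voltage_low", "in_eclipse"), "expected_discharge"),
    (("battery_voltage_low", "in_sunlight"), "possible_solar_array_fault"),
    (("possible_solar_array_fault", "array_current_zero"), "solar_array_fault"),
]

known, trace = forward_chain({"battery_voltage_low", "in_sunlight", "array_current_zero"}, RULES)
for premises, conclusion in trace:
    print(f"{' AND '.join(premises)} => {conclusion}")
```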

The techniques presented above will be referred to in the following sections as they are used to solve space application problems.

3. Challenges in Space Applications

In this section, we discuss and analyze the current challenges in space applications that AI is helping to address.

Over the years, humans have tried to address several challenges in space and for space. In this process, the collaboration between humans and machines, along with enabling and innovative technologies, has been crucial and has made discoveries and innovation possible. On the one hand, there is a need to expand the presence of humans in space; on the other hand, humans must manage the constraints of in-space resources.

Therefore, all efforts lead to two common goals: (i) to push toward space exploration and scientific discoveries; (ii) to improve life on Earth. Human beings play an essential role in all this: as specialists who work on the objectives of space missions, as astronauts who experience challenging conditions in space, and as ordinary citizens involved in achieving these goals, particularly that of improving life on Earth.

In this survey, we have investigated the above challenges, starting from the mission execution level. We live in the age of the Internet of Everything, where connections between objects enable services and improve processes. Indeed, in designing and planning a space mission, several connected entities collaborate to carry out the mission, including robots in space, smart architecture and construction in space, wearable devices, and apps [18].

Such systems work through data and, at the same time, produce data in large quantities. For example, some of these data concern satellites’ critical and vital conditions, so it is necessary to develop systems capable of generating reliable responses to these parameters. Furthermore, tools are needed to improve access to information in the mission-design and planning phases, so that the information required for the mission’s success can be managed. These tools are called design engineering assistants and can support decision-making for complex engineering problems, for example by estimating initial inputs, assisting experts by answering queries related to previous design decisions, and offering new design options.

For the success of a mission, the psycho-physical conditions of the astronauts are crucial. Some challenges regard the mitigation of adverse effects of space environments on human physical and behavioral health with the help of intelligent assistants able to optimize human performance in space or provide helpful information on the health of astronauts. On the mission object level, we come to the challenges linked to reducing the threat to spacecraft from natural and human-made space debris and developing capabilities to detect and mitigate the risk of these objects as possible catastrophic threats to Earth. For this reason, it is necessary to seek solutions that are able to improve the accuracy and reliability of space situational awareness (SSA) and space surveillance and tracking (SST) systems.

Concerning space exploration, the community requires more reliable and accurate techniques to learn about the universe and collect, analyze, and distribute information. For example, robot exploration needs to be enhanced by tools to produce better maps of planets’ surfaces. These tools can also enhance the accuracy of technologies used to observe planetary bodies.

Space applications can also help improve living conditions on Earth and shape its future. The main challenge is developing systems and technologies that, using data or images coming from satellites, can detect, analyze, and support decision-making in high-stress situations on Earth, such as emergencies and disasters. The applications are broad and include the Sustainable Development Goals [19] to be achieved under the 2030 Agenda: improving life on land, estimating and reducing the effects of climate change, protecting biodiversity on Earth, and ensuring clean water and air.

We discuss such challenges in terms of three main categories, which are presented in the following sections.

3.1. Mission Design and Planning

Designing and planning a mission require extensive knowledge and expertise from all the human and computer assets involved, and AI can help manage information, knowledge, procedures, and operations during a space mission.

The first use of AI in mission design and planning regards the knowledge gathered by previous missions, which, in most cases, is not fully accessible. Therefore, researchers are working on designing an engineering assistant to gather and analyze relevant data and produce reliable answers to save many human work hours. Daphne is an example of an intelligent assistant that is used for designing Earth observation satellite systems [20,21], which can assess the strengths and weaknesses of architectures proposed by engineers and answer specific design problems using natural language processing. This system relies on a historical database of all Earth-observing satellite missions, domain-specific expert knowledge associated with this field, and information extracted from data mining in the current design space.

Another challenge regards the monitoring of satellite health, which on-board AI functionalities can address. Indeed, the performance of equipment and sensors is continuously checked by a monitoring system able to provide helpful information such as alerts. Scientists use AI systems that can notify ground control about problems and solve them independently. An example is taken from SpaceX satellites [22], which are equipped with sensors that track each satellite’s position and adjust it to avoid collisions with other objects in space. Furthermore, the Italian start-up company AIKO developed an AI for mission autonomy with its MiRAGE library [23]. AIKO technology can detect events and allow satellites to perform autonomous replanning and react accordingly, avoiding the delays introduced by decision-making loops on the ground. AI also supports the Perseverance Mars rover in landing [24] and navigating on the planet, along with many other scientific tasks. The rover also uses the Planetary Instrument for X-ray Lithochemistry (PIXL), an AI-powered device, to search for traces of microscopic materials [25].

Moreover, AI algorithms can improve the manufacturing process of satellites and spaceships. Indeed, these operations involve many repetitive procedures requiring high precision and accuracy and must be performed in clean rooms to avoid biological contamination. In addition, collaborative robots (cobots) can make the manufacturing steps that are prone to human error more reliable. Consequently, exploiting AI can significantly speed up production processes and minimize contact with humans, which is as relevant as increasing production. Furthermore, a personal assistant in space can provide immediate help and support in astronauts’ everyday activities or in case of on-board emergencies, eliminating the communication delays with ground control. For example, IBM, in partnership with the German space agency DLR and Airbus, created CIMON, the Crew Interactive Mobile Companion [26]. CIMON is an AI robot that can converse with astronauts on the International Space Station and answer their questions.

3.2. Space Exploration

AI has a significant impact on defining and improving the future of space exploration. Many AI applications exist, from planetary navigation to robot exploration and deep space exploration of astronomical bodies. For example, the first image of a black hole was obtained with a Bayesian algorithm used to perform deconvolution on images created in radio astronomy [27]. Researchers are now working on more complex AI algorithms to obtain more accurate images of black holes. These algorithms will probably help scientists identify and classify different deep-space objects [28]. An example is the discovery of two Kepler exoplanets, namely Kepler 80g and Kepler 90i, orbiting the Kepler 80 and Kepler 90 star systems, respectively. Characterizing the population of exoplanets is a challenge for astronomers because it requires an automatic and unbiased way to identify the exoplanets in these regions [29].

The management of space debris, such as abandoned launch vehicle stages and fragmentation debris, is a demanding problem [30,31] within the field of space situational awareness (SSA) [32]. Indeed, researchers stress the importance of maintaining a resident space object database, because SSA will be necessary for the operation of a future Space Traffic Management system [33,34]. However, the real concern with space debris is the possibility of collisions with satellites or spacecraft, which cause undesirable space accidents. Deep learning techniques can enhance the accuracy of the laser-ranging technology traditionally used to address this problem. Another challenge regards the production of better maps of planetary surfaces. One example is a project by NASA and Intel on an AI system that can help astronauts find their way on other planets [35]. The program was applied to the Moon’s surface and then to the Mars exploration program. Humans and swarm robots can collaborate to meet the challenges of space exploration. Indeed, multi-robot systems can be trained by humans using AI techniques [36]. Other efforts have addressed autonomous and intelligent space guidance problems with the support of deep and reinforcement learning [37,38,39,40].

At the same time, during the space exploration missions to Mars, monitoring and assessing the health of astronauts is crucial [41]. The applications of AI in healthcare focus on deep learning algorithms for scientific discovery or for advanced pattern recognition software for diagnosis, giving new perspectives on the future of astronauts’ medical care.

Furthermore, detecting astronauts’ speech and language disorder signs to interpret their psychological behavior and to support human–robot cooperation has been explored using natural language processing [42,43].

3.3. Earth Observation

Satellites generate thousands of images every minute of the day, collecting terabytes of data. Earth observation (EO) has several applications, such as agriculture, forestry, water and ocean resources, urban planning and rural development, mineral prospecting, and environment and disaster management [44]. Before data analysis tools were available, humans had to interpret these data and gather the information relevant to each application, which required a long time to select, filter, refine, and analyze a vast number of images. With the advancement of AI, there has been significant progress in reviewing and analyzing the millions of images produced by satellites. Furthermore, these algorithms can detect issues with the images, thereby speeding up the image-gathering process.

Satellites are often used to respond to natural disasters from space. For example, satellite images can help ground operators track the course of a disaster or identify victims and damage to buildings and the environment [45]. Furthermore, EO satellites rapidly provide first responders with geospatial information on the area affected by the event. Indeed, satellite data can support disaster risk assessment and the planning of the post-disaster response [46]. Studies combining the population’s exposure to air pollution with demographic data have been carried out to highlight the highest exposure risks [47]. Therefore, EO can analyze several of Earth’s physical, chemical, and biological parameters to better understand the Earth’s conditions and propose action plans to improve life on the planet.

4. State of the Art

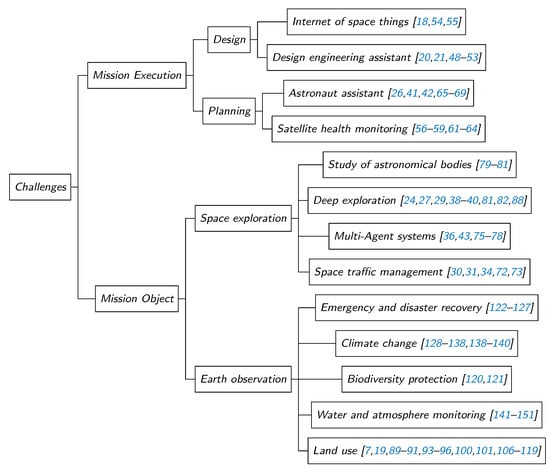

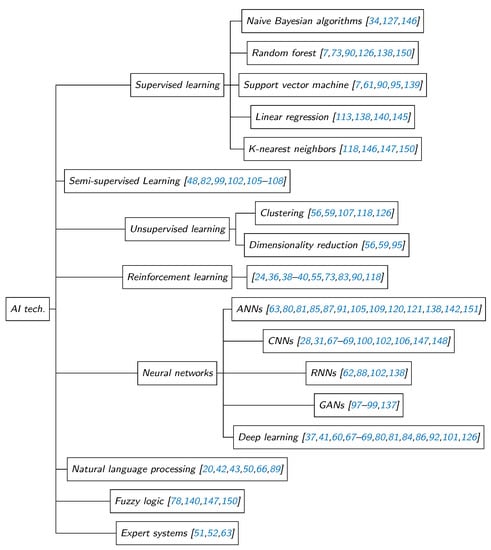

Now that we have defined the challenges in space applications and presented the basic approaches, in this section, we discuss the most recent solutions proposed for addressing each challenge. Moreover, in Figure 1, we provide a mind map that associates the solutions proposed in the literature with each challenge. In addition, in Figure 2, we group these solutions based on the AI technique exploited.

4.1. Works on Mission Design and Planning

In the early stages of a space mission, the use of AI presents several advantages. To enhance information retrieval and knowledge reuse in the field of space missions, the study presented in [48] proposes a semi-supervised Latent Dirichlet Allocation (LDA) model for space mission design. First, a general model is trained and then used to generate specific LDA models focused on different spacecraft subsystems or topics. The authors of [49] analyze how knowledge management, human–machine interaction, machine learning, and natural language processing methods can improve the responsible use of information during a concurrent engineering study, and they design an AI assistant, called the design engineering assistant, to support knowledge management strategies. A natural language processing system called AstroNLP is presented in [50]. The solution can automatically extract useful information from mission documentation and visualize it as a directed graph, making the inspection of a mission’s documentation more efficient. The study in [51] stresses the importance of developing an expert system used as a design engineering assistant in the field of space mission design. The detailed software architecture of both the front and back ends and the tool requirements are presented. Given the diversity and amount of available data (e.g., implicit and explicit knowledge), the highlighted problem consists of querying and finding the needed information in a reasonable time.
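To clarify what an LDA model learns, the sketch below fits a standard, unsupervised LDA with collapsed Gibbs sampling; it is not the semi-supervised variant of [48]. The toy corpus, number of topics, and priors `alpha` and `beta` are illustrative assumptions. Each document ends up with a topic distribution (`theta`), and each topic ends up with word counts (`nkw`) from which its most representative words can be read.

```python
import random


def lda_gibbs(docs, n_topics, alpha=0.1, beta=0.01, iters=200, seed=0):
    """Standard LDA fitted by collapsed Gibbs sampling. docs: list of token lists."""
    rng = random.Random(seed)
    vocab = sorted({w for d in docs for w in d})
    wid = {w: i for i, w in enumerate(vocab)}
    V = len(vocab)
    ndk = [[0] * n_topics for _ in docs]      # topic counts per document
    nkw = [[0] * V for _ in range(n_topics)]  # word counts per topic
    nk = [0] * n_topics                       # total tokens per topic
    z = []                                    # topic assignment of every token
    for d, doc in enumerate(docs):
        zd = []
        for w in doc:
            k = rng.randrange(n_topics)
            zd.append(k)
            ndk[d][k] += 1
            nkw[k][wid[w]] += 1
            nk[k] += 1
        z.append(zd)
    for _ in range(iters):
        for d, doc in enumerate(docs):
            for n, w in enumerate(doc):
                k, v = z[d][n], wid[w]
                # Remove the token's current assignment from the counts
                ndk[d][k] -= 1
                nkw[k][v] -= 1
                nk[k] -= 1
                # Full conditional p(z = t | all other assignments), up to a constant
                weights = [(ndk[d][t] + alpha) * (nkw[t][v] + beta) / (nk[t] + V * beta)
                           for t in range(n_topics)]
                k = rng.choices(range(n_topics), weights=weights)[0]
                z[d][n] = k
                ndk[d][k] += 1
                nkw[k][v] += 1
                nk[k] += 1
    theta = [[(ndk[d][t] + alpha) / (len(doc) + n_topics * alpha) for t in range(n_topics)]
             for d, doc in enumerate(docs)]
    return theta, vocab, nkw


# Hypothetical snippets from mission documentation
docs = [
    ["battery", "voltage", "solar", "power", "battery"],
    ["solar", "array", "power", "voltage"],
    ["orbit", "thrust", "maneuver", "orbit"],
    ["thrust", "propellant", "maneuver", "orbit"],
]
theta, vocab, nkw = lda_gibbs(docs, n_topics=2)
for k, counts in enumerate(nkw):
    top = sorted(range(len(vocab)), key=lambda v: -counts[v])[:3]
    print(k, [vocab[v] for v in top])
```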

Considering the perspective of the ground segment, the increase in the volume and types of data, processes, and procedures requires innovative solutions and methodologies. Therefore, in [52], an expert system is proposed to tackle the challenges of systems engineering and the optimization of the development, management, and implementation of the ground system. In [53], an AI-driven design tool for the transfer of orbital payloads via a space tether is proposed. The authors investigate recent proposals on space tethers, used in applications such as the orbiting or deorbiting of space vehicles, payload transfer, inter-planetary propulsion, plasma physics, and electrical science in the Earth’s upper atmosphere. In addition, AI tools support recent space elevator projects. The proposed solution relies on a computational-intelligence-assisted design framework that exploits the Artificial Wolf Pack algorithm with logsig randomness.

The optimization of resource-constrained Internet-of-Space-Things [54] during next-generation space missions requires a flexible and autonomous framework that overcomes the limitations of existing space architecture. In this regard, the authors of [55] propose an AI-based cognitive cross-layer decision engine to bolster next-generation space missions. The system exploits deep reinforcement learning and cross-layer optimization. The papers [56,57] review existing satellite health monitoring solutions and present an analysis of remote data mining and AI techniques used to address the needs in this field. Usually, satellite telemetry data and integrated information are used to produce an advanced health monitoring system and prevent unexpected failures. The authors of [58] present a guideline for intelligent health monitoring solutions exploiting data mining techniques. Furthermore, the study contains operations and applications of such systems related to the ground control station.

Multimodality and high dimensionality are two critical characteristics of satellite data. In [56,59], probabilistic dimensionality reduction and clustering are applied to telemetry data of the Japan Aerospace Exploration Agency (JAXA)’s Small Demonstration Satellite-4. These methods analyze anomalies and help understand the health status of the system. The study in [60] investigates the importance of integrating a fault-diagnosis system into a space vehicle to detect, isolate, and classify faults. In [61], a support vector machine for regression is applied to data from the Egyptsat-1 satellite, launched in April 2007, to analyze the satellite’s performance and build a fault diagnosis system that determines the origin of the satellite’s failure.
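The idea of scoring anomalies through dimensionality reduction can be sketched with plain principal component analysis (PCA) rather than the probabilistic methods of [56,59]. The first principal component is found by power iteration on the covariance matrix, and each sample’s reconstruction error from that component serves as an anomaly score. The two-channel telemetry below is synthetic: a linear relation between two hypothetical quantities, plus one injected outlier.

```python
import math


def first_principal_component(X, iters=100):
    """Mean and leading eigenvector of the sample covariance (power iteration)."""
    n, d = len(X), len(X[0])
    mean = [sum(row[j] for row in X) / n for j in range(d)]
    C = [[row[j] - mean[j] for j in range(d)] for row in X]  # centered data
    cov = [[sum(r[a] * r[b] for r in C) / (n - 1) for b in range(d)] for a in range(d)]
    v = [1.0] * d
    for _ in range(iters):
        w = [sum(cov[a][b] * v[b] for b in range(d)) for a in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return mean, v


def reconstruction_errors(X, mean, v):
    """Distance of each sample from its projection onto the principal axis."""
    errs = []
    for row in X:
        c = [x - m for x, m in zip(row, mean)]
        score = sum(a * b for a, b in zip(c, v))  # coordinate along the component
        recon = [score * b for b in v]
        errs.append(math.sqrt(sum((a - r) ** 2 for a, r in zip(c, recon))))
    return errs


# Synthetic telemetry: channel 2 is twice channel 1, except for one faulty sample
X = [(t, 2.0 * t) for t in range(10)] + [(5.0, 0.0)]
mean, v = first_principal_component(X)
errs = reconstruction_errors(X, mean, v)
print("most anomalous sample:", errs.index(max(errs)))
```

Samples that respect the dominant correlation between channels are reconstructed well from one dimension, while the faulty sample is not.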

The authors of [62] envision a new deep learning approach for telemetry analysis of space operations. The proposed architecture consists of three modules developed for automatic feature extraction, anomaly detection, and telemetry prediction. First, an autoencoder neural network is created for feature vector extraction and anomaly detection. Then, a multi-layer long short-term memory network is evaluated for telemetry forecasting and anomaly prediction. The study in [63] provides an analysis aimed at improving the autonomy level of space missions, and in particular of small satellites. The author presents several case studies, such as event and failure detection and tradespace exploration, where neural networks, expert systems, and genetic algorithms are used. AI-Express is a space system providing services to demonstrate AI algorithms, qualify technologies, and validate mission concepts, in-orbit and as-a-service [64]. The system is built to meet the requirements of small satellite missions and explore innovative approaches in communications, planning, tasks, and data processing.
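Prediction-based anomaly detection, as in the telemetry-forecasting module of [62], flags samples that deviate strongly from what the model expected. In the sketch below, a rolling-median forecaster stands in for the multi-layer LSTM. Residuals are compared against a robust threshold based on the median absolute deviation. The window size, threshold `k`, and synthetic signal are assumptions.

```python
import statistics


def residual_anomalies(series, window=5, k=4.0):
    """Indices whose one-step forecast residual is far from the typical residual.

    The forecaster (median of the previous `window` samples) is a simple stand-in
    for a learned model such as an LSTM.
    """
    times = range(window, len(series))
    residuals = [series[t] - statistics.median(series[t - window:t]) for t in times]
    center = statistics.median(residuals)
    mad = statistics.median(abs(r - center) for r in residuals) or 1e-9
    sigma = 1.4826 * mad  # scales the MAD to a standard deviation for Gaussian noise
    return [t for t, r in zip(times, residuals) if abs(r - center) > k * sigma]


# Synthetic periodic telemetry with one injected spike at index 25
series = [20.0 + 0.1 * (i % 3) for i in range(40)]
series[25] = 30.0
print(residual_anomalies(series))
```

Using medians for both the forecast and the threshold keeps the spike from inflating the noise estimate or contaminating the forecasts that follow it.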

The authors of [65] envision the need to integrate machine learning with medical care during a space mission. Current human missions and future deep space missions raise several challenges, so the medical care of the crew needs to become increasingly independent and autonomous. In line with this need, improving astronauts’ work efficiency and mental health becomes crucial for space missions. Indeed, monitoring crew members’ stress and reliability in isolation is essential for the success of a human mission. In [66], natural language processing is exploited to monitor the crew’s psychological health in stressful conditions during long missions. In [67,68,69], in-cabin astronaut assistance and person-following nanosatellites are studied and developed for the China Space Station. The satellite flies autonomously, following the assisted astronaut, and offers immediate assistance. To accomplish these tasks, a deep convolutional neural network (DCNN)-based detection module and a probabilistic-model-based tracking module are trained.

In conclusion, we observed that gathering and retrieving information for a space mission is widely achieved with expert systems coupled with natural language processing techniques. These solutions aim to provide access to useful information in a reasonable time. In addition, natural language processing techniques and convolutional neural network approaches are exploited to support astronauts by offering immediate assistance when needed. Concerning satellite health monitoring, several proposals show how dimensionality reduction, clustering, and deep learning can be applied to the telemetry analysis of space operations to improve the usability and exploitation of these data.

4.2. Works on Space Exploration

Artificial intelligence will enhance space exploration in the near future [70]. Indeed, intelligent tools that analyze data and predict events will be used in every aspect of space settlement. Automation and robotics have always played a significant role in space exploration [71], opening new frontiers and improvements since the beginning. The exploitation of AI contributes to creating independent and intelligent robots able to learn while in orbit. In addition, the management of space traffic and the assistance of ground operators can be supported by AI techniques. In [72], the authors trained predictive models through Multiple Regression Analysis. Their study aimed to forecast collision avoidance maneuvers and better manage space traffic.
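Multiple regression analysis fits a linear model with several predictors by ordinary least squares. The sketch below solves the normal equations with Gauss-Jordan elimination in plain Python. The synthetic data are generated from a known linear relation so that the recovered coefficients can be checked. No specific conjunction features from [72] are assumed.

```python
def fit_multiple_regression(X, y):
    """Ordinary least squares with an intercept, via the normal equations (A^T A) beta = A^T y."""
    A = [[1.0] + list(row) for row in X]  # design matrix with an intercept column
    p = len(A[0])
    AtA = [[sum(r[i] * r[j] for r in A) for j in range(p)] for i in range(p)]
    Aty = [sum(r[i] * t for r, t in zip(A, y)) for i in range(p)]
    # Gauss-Jordan elimination with partial pivoting on the augmented matrix
    M = [AtA[i] + [Aty[i]] for i in range(p)]
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(p):
            if r != col:
                f = M[r][col] / M[col][col]
                M[r] = [a - f * b for a, b in zip(M[r], M[col])]
    return [M[i][p] / M[i][i] for i in range(p)]  # [intercept, coef_1, coef_2, ...]


# Synthetic data generated from y = 1 + 2*x1 - 3*x2
X = [(0, 0), (1, 0), (0, 1), (1, 1), (2, 1), (1, 3)]
y = [1 + 2 * x1 - 3 * x2 for x1, x2 in X]
coef = fit_multiple_regression(X, y)
print([round(c, 6) for c in coef])
```

For larger or ill-conditioned problems, a QR or SVD-based least-squares solver is numerically preferable to forming the normal equations explicitly.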

Improving the autonomous functions of space robots, spacecraft, and equipment is a need that AI systems can address. The authors of [73] survey the impact of AI on spacecraft guidance, dynamics, and control. They focus on evolutionary optimization, tree searches, and machine learning using deep learning and reinforcement learning, analyzing use cases where these algorithms improved guidance and control performance on well-known problems [74]. The authors of [75] consider the advantages of a multi-agent system in terms of the robustness and reliability of space operations. Furthermore, the intelligent use of multiple robots integrated with a human-in-the-loop approach reduces mission costs and human effort. The study in [76] provides a methodology for designing and controlling elastic computing self-organization for AI space exploration. The use of cooperative rover swarms is based on a set of shared information supporting a mission planned to reach a common target [77]. In [75], a cooperative coevolutionary algorithm able to train a multi-robot system is presented.

Space exploration scenarios present several challenges that humans and swarm robots can meet jointly. The authors of [36] explore a Mars-based scenario in which human scientists train robots and multi-robot systems to perform primary tasks using a multi-agent deep deterministic policy gradient algorithm. In [78], a decentralized operation technique for multi-robot systems is presented and evaluated on a collaborative object transportation mission in an artificially generated rough terrain environment. The technique is based on a fuzzy genetic system, in which a genetic algorithm optimizes a fuzzy inference system.
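In a fuzzy genetic system such as the one in [78], a genetic algorithm searches for the parameters of a fuzzy inference system. The sketch below is a minimal, hypothetical illustration rather than the controller of [78]: a single input (normalized terrain roughness), three triangular membership functions, zero-order Takagi-Sugeno rules, and a genetic algorithm that tunes only the three rule consequents to match an assumed target speed profile.

```python
import numpy as np

CENTERS = np.array([0.0, 0.5, 1.0])  # rule antecedents: "low", "medium", "high" roughness
HALF_WIDTH = 0.5

def memberships(x):
    # Triangular membership degrees, shape (len(x), 3); they sum to 1 on [0, 1].
    x = np.atleast_1d(x)[:, None]
    return np.clip(1.0 - np.abs(x - CENTERS) / HALF_WIDTH, 0.0, 1.0)

def fis_output(x, consequents):
    # Zero-order Takagi-Sugeno inference: membership-weighted average of rule outputs.
    mu = memberships(x)
    return (mu @ consequents) / mu.sum(axis=1)

def mse(consequents, xs, target):
    return np.mean((fis_output(xs, consequents) - target) ** 2)

def genetic_tuning(generations=150, pop_size=30, seed=1):
    rng = np.random.default_rng(seed)
    xs = np.linspace(0.0, 1.0, 41)
    target = 1.0 - 0.8 * xs  # hypothetical desired speed for each roughness level
    pop = rng.uniform(0.0, 1.0, (pop_size, len(CENTERS)))  # candidate rule consequents
    n_elite = pop_size // 2
    for _ in range(generations):
        errors = np.array([mse(ind, xs, target) for ind in pop])
        elite = pop[np.argsort(errors)[:n_elite]]  # lowest error first
        # Uniform crossover between random elite parents, then Gaussian mutation.
        pa = elite[rng.integers(n_elite, size=pop_size - n_elite)]
        pb = elite[rng.integers(n_elite, size=pop_size - n_elite)]
        mask = rng.random(pa.shape) < 0.5
        children = np.where(mask, pa, pb) + rng.normal(0.0, 0.05, pa.shape)
        pop = np.vstack([elite, children])
    errors = np.array([mse(ind, xs, target) for ind in pop])
    return pop[np.argmin(errors)]

best_consequents = genetic_tuning()
```

Here the genetic algorithm only adjusts rule outputs; a fuller fuzzy genetic system could also encode membership-function centers and widths, or the rule base itself, in each chromosome.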

AI techniques play an important role in astrophysics and astronomy. AI supports searches in planetary studies, real-time monitoring of instruments, and investigations of stellar clusters and non-stellar components of the Milky Way (for example, see [79]). Notably, as suggested in [80], deep learning and neural networks can automate the detection of astronomical bodies and support future exploration missions. The authors of [81] introduce simulator-based methods that use a Grow When Required Network, an unsupervised artificial intelligence technique, to obtain images during deep-space journeys in the NASA Starlight and Breakthrough Starshot programs. In addition, to better understand Mars, a large number of Mars images have been collected and need to be analyzed. In [82], semi-supervised contrastive learning is exploited to classify Mars rover images.
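The exact semi-supervised contrastive formulation of [82] is not detailed here; as a generic illustration, the following sketch implements the widely used NT-Xent (normalized temperature-scaled cross-entropy) contrastive loss, which pulls together the embeddings of two augmented views of the same image and pushes apart those of other images in the batch. The embedding size, batch size, and temperature are assumptions, and the loss is not claimed to be the one used in [82].

```python
import numpy as np

def nt_xent_loss(z1, z2, temperature=0.5):
    """NT-Xent loss; z1[i] and z2[i] embed two augmented views of the same image."""
    z = np.concatenate([z1, z2], axis=0)
    z = z / np.linalg.norm(z, axis=1, keepdims=True)  # unit norm -> cosine similarity
    sim = z @ z.T / temperature
    n = len(z1)
    np.fill_diagonal(sim, -np.inf)                    # a sample is never its own negative
    # The positive for row i is the other view of the same image: i + n or i - n.
    pos_idx = np.concatenate([np.arange(n, 2 * n), np.arange(0, n)])
    # Numerically stable row-wise log-softmax.
    row_max = sim.max(axis=1, keepdims=True)
    log_prob = sim - row_max - np.log(np.exp(sim - row_max).sum(axis=1, keepdims=True))
    return -log_prob[np.arange(2 * n), pos_idx].mean()

rng = np.random.default_rng(0)
z1 = rng.normal(size=(8, 16))                                      # hypothetical view-1 embeddings
aligned = nt_xent_loss(z1, z1 + 0.01 * rng.normal(size=(8, 16)))  # the two views agree
unrelated = nt_xent_loss(z1, rng.normal(size=(8, 16)))             # the two views are unrelated
```

In a semi-supervised pipeline, an encoder would typically be trained with such a loss on unlabeled images and then fine-tuned, or combined with a supervised term, on the labeled subset.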

Regarding the Jovian system, the authors of [83] proposed a reinforcement-learning-based approach to solve the J2-perturbed Lambert problem in space trajectory design [84] and to overcome errors due to incomplete information in pursuit-evasion missions. Furthermore, ANNs have been exploited to build accurate and efficient maps of Jovian-moon gravity-assisted transfers [85,86,87].

Convolutional neural networks coupled with a recurrent neural network are exploited to create a vision-based navigation system for pinpoint landing on the Moon [88]. A CNN extracts and processes image features, while a long short-term memory (LSTM) recurrent neural network performs the regression task. The CNN is trained with a dataset of synthetic images of the landing area at different relative poses and illumination conditions. This design builds on non-space applications, where the combination of CNN and LSTM has already been shown to perform well in image processing and model prediction.
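To make the CNN-LSTM division of labor concrete, the following sketch implements one LSTM cell step and a linear regression head in NumPy. It assumes that a CNN has already turned each camera frame into a feature vector (random placeholders stand in for them), uses untrained random weights, and treats the six outputs as a hypothetical pose (position and attitude); the architecture of [88] is not reproduced.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_step(x, h, c, W, U, b):
    """One LSTM step. W: (4H, D), U: (4H, H), b: (4H,); gate order i, f, g, o."""
    H = h.shape[0]
    gates = W @ x + U @ h + b
    i = sigmoid(gates[0:H])            # input gate
    f = sigmoid(gates[H:2 * H])        # forget gate
    g = np.tanh(gates[2 * H:3 * H])    # candidate cell update
    o = sigmoid(gates[3 * H:4 * H])    # output gate
    c = f * c + i * g                  # new cell state
    h = o * np.tanh(c)                 # new hidden state
    return h, c

def cnn_lstm_regress(feature_seq, params):
    """Feed per-frame feature vectors through an LSTM, then regress from the last state."""
    W, U, b, W_out, b_out = params
    H = U.shape[1]
    h, c = np.zeros(H), np.zeros(H)
    for x in feature_seq:              # one (assumed) CNN feature vector per frame
        h, c = lstm_step(x, h, c, W, U, b)
    return W_out @ h + b_out           # linear regression head

# Untrained random weights; D, H and the 6-D output are hypothetical sizes.
rng = np.random.default_rng(0)
D, H, OUT = 32, 16, 6
params = (rng.normal(0.0, 0.1, (4 * H, D)), rng.normal(0.0, 0.1, (4 * H, H)),
          np.zeros(4 * H), rng.normal(0.0, 0.1, (OUT, H)), np.zeros(OUT))
frames = rng.normal(size=(10, D))     # placeholders for CNN features of 10 frames
pose = cnn_lstm_regress(frames, params)
```

The recurrent state lets the regressor accumulate evidence over the descent sequence instead of estimating the pose from a single frame.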

To summarize our analysis, AI techniques such as deep and reinforcement learning are widely used in spacecraft guidance, dynamics, and control to improve the autonomous functionalities of space equipment, spacecraft, and space robots. Such systems also aim to enable constructive cooperation between multi-robot systems and humans. As far as deep space exploration is concerned, approaches based on neural networks make it possible to automate the detection of astronomical bodies and to obtain better maps of planetary surfaces.

4.3. Works on Earth Observation

The survey [89] reviews machine learning models used to predict and understand components of the Earth observation system and divides the addressed problems into four categories: (1) classification of land cover types, (2) modeling of land–atmosphere and ocean–atmosphere interactions, (3) detection of anomalies and extreme events, and (4) causal discovery. AI and Earth observation (EO) can contribute specifically to meeting the sustainable development goals (SDGs). Indeed, in [19], the authors demonstrate how a convolutional neural network, a U-Net with squeeze-and-excitation (SE) blocks, can efficiently segment satellite imagery for crop detection. The study [90] reviews machine learning techniques applied to monitor the SDGs and proposes further exploring random forests, support vector machines, and neural networks to analyze EO data. Finally, in [91,92], deep neural networks are exploited on board spacecraft. The main motivations for this approach are the full use of real-time sensing data from multiple satellites in a smart constellation and, therefore, edge processing of the data, with the resulting benefits in terms of imaging product value.
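The squeeze-and-excitation (SE) blocks mentioned for [19] reweight the channels of a convolutional feature map using global context. The following NumPy sketch applies one SE block to a single (C, H, W) feature map; the reduction ratio, the omission of bias terms, and the random weights are simplifying assumptions, not the configuration used in [19].

```python
import numpy as np

def se_block(feature_map, w1, w2):
    """Squeeze-and-Excitation on a (C, H, W) feature map (bias terms omitted).

    w1: (C // r, C) and w2: (C, C // r) are the two fully connected layers,
    where r is the channel-reduction ratio.
    """
    squeeze = feature_map.mean(axis=(1, 2))       # global average pooling -> (C,)
    hidden = np.maximum(0.0, w1 @ squeeze)        # FC + ReLU
    scale = 1.0 / (1.0 + np.exp(-(w2 @ hidden)))  # FC + sigmoid -> per-channel weights in (0, 1)
    return feature_map * scale[:, None, None]     # channel-wise reweighting

rng = np.random.default_rng(0)
C, r = 16, 4                                      # channels and reduction ratio (assumed)
fmap = rng.normal(size=(C, 32, 32))               # e.g., an encoder feature map of an image tile
out = se_block(fmap, rng.normal(size=(C // r, C)), rng.normal(size=(C, C // r)))
```

Because the block only rescales channels, it preserves the feature-map shape and can be inserted after any convolutional stage of a U-Net encoder or decoder.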

AI algorithms can be used to process data at the source rather than on the ground, lightening the downlink load and enabling the development of value-added applications in space. The studies in [19,93] present the Copernicus Access Platform Intermediate Layers Small Scale Demonstrator, where AI methods are used to analyze and interpret Earth observation satellite images. As a first step, the authors of [19] instantiate a deep neural network able to extract meaningful information from archived images and create a semantic scheme across several EO sensors (e.g., TerraSAR-X, WorldView, Sentinel-1, and Sentinel-2). The authors of [94] review the efficiency of Gaussian processes (GPs) in solving EO problems. GPs can accurately estimate parameters from acquired images at local and global scales. Furthermore, they can quickly adapt to multimodal data from different sensors and to multitemporal acquisitions. In [95], Google Earth Engine and Google Cloud Platform are used to generate a feature set on which dimensionality-reduction methods are tested for object-based land cover classification with SVM, using Sentinel-1 and Sentinel-2 data. The authors of [96] apply dimensionality reduction to classify hyperspectral images and generate low-dimensional embedding features of these images. Generative adversarial networks (GANs) and CNNs are coupled in [97] for hyperspectral image classification. The study [97] also presents several opportunities for applying GANs in remote sensing [98]. In addition, anomalies in hyperspectral images increase the likelihood of errors and decrease estimation accuracy. In [99], a framework based on semi-supervised spectral learning and GANs is proposed for hyperspectral image anomaly detection.
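As a concrete illustration of the Gaussian process regression reviewed in [94], the sketch below computes the posterior mean and variance of a zero-mean GP with a squared-exponential kernel via a Cholesky factorization. The kernel, hyperparameters, and one-dimensional synthetic data are assumptions; in an EO retrieval setting, the inputs would typically be spectral features and the output a biophysical parameter.

```python
import numpy as np

def rbf_kernel(a, b, length_scale=1.0, variance=1.0):
    # Squared-exponential covariance between 1-D input arrays a and b.
    d2 = (a[:, None] - b[None, :]) ** 2
    return variance * np.exp(-0.5 * d2 / length_scale ** 2)

def gp_predict(x_train, y_train, x_test, noise=1e-2, **kernel_args):
    """Posterior mean and variance of a zero-mean GP with Gaussian observation noise."""
    K = rbf_kernel(x_train, x_train, **kernel_args) + noise * np.eye(len(x_train))
    K_s = rbf_kernel(x_train, x_test, **kernel_args)
    K_ss = rbf_kernel(x_test, x_test, **kernel_args)
    L = np.linalg.cholesky(K)                                # K = L @ L.T
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train))
    mean = K_s.T @ alpha
    v = np.linalg.solve(L, K_s)
    var = np.diag(K_ss) - np.sum(v ** 2, axis=0)
    return mean, var

x_train = np.linspace(0.0, 1.0, 20)
y_train = np.sin(2 * np.pi * x_train)                        # synthetic target, not EO data
mean, var = gp_predict(x_train, y_train, np.array([0.5, 3.0]),
                       noise=1e-4, length_scale=0.2)
```

The predictive variance is small near the training inputs and returns to the prior variance far from them; this per-prediction uncertainty is one reason GPs are attractive for EO parameter estimation.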

Convolutional neural networks are exploited in [100] to classify and detect land use and land cover from Sentinel-2 satellite images. The EuroSAT dataset, made publicly available, contains 27,000 labeled and geo-referenced images. Furthermore, the study in [101] exploits deep neural networks for remote sensing to improve the production of land cover and land use maps. In particular, the authors of [101] consider the southern agricultural region of Manitoba, Canada.

In recent years, deep networks such as convolutional and recurrent neural networks have been broadly used to classify hyperspectral images. In [102], the authors build a network that analyzes the whole image with the help of a non-local graph, using convolutional layers to extract features. A semi-supervised learning algorithm enables high-quality classification maps. Meanwhile, the authors of [103,104] analyze the negative effect of microscopic material mixtures on the processing of hyperspectral images. Another relevant property of these data, non-local smoothness, is analyzed in [104], while in [103], an innovative spectral mixture model is proposed. The authors demonstrate that their approaches obtain more accurate abundance estimation and better reconstruction of abundance maps.
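The abundance estimation discussed in [103,104] starts from a spectral mixture model. As a baseline, the following sketch implements the classical linear mixing model, in which a pixel spectrum y is approximated as E a, with endmember matrix E and abundances a constrained to sum to one, solved in closed form by sum-to-one constrained least squares. Microscopic (intimate) mixtures are precisely where this linear assumption breaks down; the random endmembers are hypothetical, and non-negativity is not enforced in this sketch.

```python
import numpy as np

def scls_unmix(E, y):
    """Sum-to-one constrained least-squares abundances for one pixel.

    E: (bands, endmembers) endmember signatures; y: (bands,) pixel spectrum.
    Non-negativity is NOT enforced here.
    """
    G_inv = np.linalg.inv(E.T @ E)
    a_ls = G_inv @ E.T @ y                        # unconstrained least-squares solution
    ones = np.ones(E.shape[1])
    # Lagrange-multiplier correction that projects a_ls onto sum(a) = 1.
    correction = G_inv @ ones * (ones @ a_ls - 1.0) / (ones @ G_inv @ ones)
    return a_ls - correction

rng = np.random.default_rng(0)
E = rng.uniform(0.0, 1.0, (50, 3))                # 50 bands, 3 hypothetical endmember spectra
a_true = np.array([0.2, 0.5, 0.3])
y = E @ a_true + rng.normal(0.0, 1e-3, 50)        # noisy, linearly mixed pixel
a_hat = scls_unmix(E, y)
```

Applying this estimator pixel by pixel yields abundance maps; nonlinear or spatially regularized models, such as those discussed above, aim to improve on it when the linear, independent-pixel assumptions do not hold.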

The authors of [105] develop a neural network able to synthesize EO images. Notably, an energy-based model is applied, and datasets are adapted to semi-supervised settings. Furthermore, in [106,107,108], semi-supervised learning is evaluated because of the lack of labels and the presence of speckle in raw EO images. Indeed, the authors of [107] propose the MiniFrance suite, a large-scale dataset for semi-supervised and unsupervised semantic segmentation in EO. Finally, in [106], DCNNs are coupled with semi-supervised learning to build a framework called the Boundary-Aware Semi-Supervised Semantic Segmentation Network, which obtains more accurate segmentation results. In [109], drone-borne thermal imaging is combined with neural networks to locate ground nests of birds on agricultural land with high performance. Several studies [110,111,112] review the application of AI to different agriculture-related problems [113] and to improving decision support at the farm level [110,114].
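The label scarcity addressed in [106,107,108] can be illustrated with self-training (pseudo-labeling), one common semi-supervised strategy, though not necessarily the one used in those works. In the following sketch, a nearest-centroid classifier trained on a handful of labeled samples labels the unlabeled samples it is confident about, adds them to the training set, and is refit. The classifier, the confidence margin, and the two-class synthetic data are assumptions chosen for brevity.

```python
import numpy as np

def nearest_centroid_fit(X, y, n_classes):
    return np.stack([X[y == k].mean(axis=0) for k in range(n_classes)])

def nearest_centroid_predict(centroids, X):
    d = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
    return d.argmin(axis=1), d

def self_training(X_lab, y_lab, X_unlab, n_classes, rounds=5, margin=1.0):
    """Pseudo-labeling: repeatedly add confidently classified unlabeled samples."""
    X_train, y_train = X_lab.copy(), y_lab.copy()
    remaining = X_unlab.copy()
    for _ in range(rounds):
        centroids = nearest_centroid_fit(X_train, y_train, n_classes)
        if len(remaining) == 0:
            break
        pred, d = nearest_centroid_predict(centroids, remaining)
        d_sorted = np.sort(d, axis=1)
        confident = (d_sorted[:, 1] - d_sorted[:, 0]) > margin  # clear nearest class
        if not confident.any():
            break
        X_train = np.vstack([X_train, remaining[confident]])
        y_train = np.concatenate([y_train, pred[confident]])
        remaining = remaining[~confident]
    return nearest_centroid_fit(X_train, y_train, n_classes)

rng = np.random.default_rng(0)
means = np.array([[0.0, 0.0], [5.0, 5.0]])                       # two hypothetical classes
X_lab = np.vstack([rng.normal(m, 1.0, (2, 2)) for m in means])   # only 2 labels per class
y_lab = np.array([0, 0, 1, 1])
X_unlab = np.vstack([rng.normal(m, 1.0, (100, 2)) for m in means])
centroids = self_training(X_lab, y_lab, X_unlab, n_classes=2)
```

The margin controls the trade-off between adding more pseudo-labels and admitting wrong ones; with real, noisy EO imagery, incorrect pseudo-labels can reinforce themselves, so the threshold usually needs careful tuning.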

One of the most urgent needs is to respond to adversarial and life-critical situations such as pandemics, climate change, and resource scarcity [115,116] with prompt and sustainable agricultural and food production and improved food supply systems. As suggested in [117,118], automating agriculture and farming is a solution to the increasing demand for food and nutrition, which is further aggravated by problems such as pollution, climate change, resource depletion, population growth, and the need to control irrigation and harmful pesticides. Indeed, AI can contribute to developing sustainable food systems [119] able to revolutionize current agricultural and supply chain systems.

Biodiversity assessments can help protect our environment and, in turn, human society as a whole. In [120], the development of innovative, high-quality models that also monitor the impact of human activities on ecosystems is explored with the support of neural network algorithms. Indeed, AI is expected to improve the conservation of ecosystems and species. The authors of [121] propose CAPTAIN (Conservation Area Prioritization Through Artificial Intelligence Networks), a reinforcement-learning-based methodology that explores and analyzes multiple biodiversity metrics to protect species from extinction.

Another application field of AI that has gained close attention is disaster management. The studies [122,123,124] collect different AI-based solutions that address the challenges of the disaster management phases, i.e., mitigation, preparedness, response, and recovery, and discuss and categorize the methods according to these phases.

Furthermore, public engagement with AI-driven applications for disaster management is analyzed in [125] using data provided by Australian residents. The results confirm the benefits of using AI to mitigate and respond to disasters, and they encourage the public and private sectors to adopt AI in disaster management applications. The authors of [126] analyzed reactions on social media during the 2017 Atlantic hurricane season to develop a system able to improve situational awareness and help organizations handle crises. The retrieved information consists of the textual and image content of tweets posted during the disaster. Tweets were classified with a random forest learning scheme, and the K-means algorithm was used to find high-level categories of similar messages. Finally, deep-learning-based techniques were advocated to extract useful information from social media images. In [127], a machine-learning-based disaster prediction model was proposed to identify disaster situations by analyzing information from social messaging platforms such as Twitter. Multinomial Naive Bayes and XGB classifiers are used to distinguish flood-relevant tweets from irrelevant ones, with an accuracy above 90%.
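To illustrate the Multinomial Naive Bayes classifier used in [127], the following pure-Python sketch trains on word counts with Laplace smoothing and labels a tweet as flood-relevant or not. The six training tweets and the whitespace tokenizer are invented for illustration; a real system would rely on a large annotated corpus and proper text preprocessing.

```python
import math
from collections import Counter

def tokenize(text):
    # Naive whitespace tokenizer (an assumption; real pipelines clean URLs, hashtags, etc.).
    return text.lower().split()

def train_mnb(docs, labels, alpha=1.0):
    """Multinomial Naive Bayes with additive (Laplace) smoothing."""
    vocab = {w for d in docs for w in tokenize(d)}
    priors, log_likelihoods = {}, {}
    for c in sorted(set(labels)):
        class_docs = [d for d, lab in zip(docs, labels) if lab == c]
        priors[c] = math.log(len(class_docs) / len(docs))
        counts = Counter(w for d in class_docs for w in tokenize(d))
        total = sum(counts.values()) + alpha * len(vocab)
        log_likelihoods[c] = {w: math.log((counts[w] + alpha) / total) for w in vocab}
    return priors, log_likelihoods

def predict_mnb(model, doc):
    priors, log_likelihoods = model
    # Sum log prior and per-word log likelihoods; words never seen in training are ignored.
    scores = {
        c: priors[c] + sum(log_likelihoods[c][w] for w in tokenize(doc) if w in log_likelihoods[c])
        for c in priors
    }
    return max(scores, key=scores.get)

# Invented toy tweets, for illustration only.
docs = [
    "water rising flood street", "flood rescue boats needed", "river flood warning evacuate",
    "great coffee this morning", "watching the game tonight", "new phone arrived today",
]
labels = ["flood", "flood", "flood", "other", "other", "other"]
model = train_mnb(docs, labels)
label = predict_mnb(model, "flood warning for our street")
```

Working in log space avoids numerical underflow when many small word probabilities are multiplied.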

Climate change is having a considerable impact on the future of life on Earth by modifying crucial climatic parameters such as temperature, precipitation, and wind [128,129]. The future implications of greenhouse gas emissions for these parameters are discussed in [130,131]. AI offers the means to reduce uncontrollable risks and predict extreme weather events and climate conditions [132] and, at the same time, to assess human responsibilities and duties [133,134,135]. As suggested in [136], AI techniques offer opportunities to combat the climate crisis effectively by providing procedures to monitor, analyze, and predict climate change indicators. One application is proposed in [137], where generative adversarial networks are used to develop an interactive climate impact visualization tool able to predict and visualize the future of a specific location based on the effects of current climate indicators, such as floods, storms, and wildfires. The study [138] goes in the same direction, predicting the daily energy consumption of a building with a data-driven explainable AI model; its authors apply several AI models to this problem: extreme gradient boosting, linear regression, random forest, support vector machine, deep neural networks, and long short-term memory. In addition, the authors of [139] show the effectiveness of support vector machine models in predicting solar and wind energy resources.

The impact of climate change on precipitation and temperature at the Famagusta, Nicosia, and Kyrenia stations is analyzed in [140]. With the support of a neural network, an adaptive neuro-fuzzy inference system, and multiple linear regression models, these parameters are analyzed and forecast for 2018–2040. Climate change is intensifying challenges in water resource management [141]. Indeed, understanding our oceans and monitoring surface water quality are current priorities that AI can address, as suggested in [142,143]. Notably, [142] presents a systematic literature analysis of the application of AI models, focusing on the physical location of experiments, input parameters, and output metrics. In [144], the application of responsible AI in the water domain is investigated. Although recent contributions show that the use of AI in this domain is still limited, insights on its optimization are reported.

Water monitoring is challenging and crucial for every organism on Earth, and it includes precipitation measurements. For example, the authors of [145] adopt artificial neural networks and multiple linear regression to downscale satellite precipitation estimates. In [146], non-linear autoregressive neural networks and long short-term memory deep learning algorithms were developed to model and predict water quality; then, support vector machines, K-nearest neighbors, and Naive Bayes were used to classify water quality. The authors of [147] exploit an adaptive neuro-fuzzy inference system to predict the water quality index, and a feed-forward neural network and a K-nearest neighbors algorithm to classify water quality. The focus in [147] is on drinking water. In [148], the presence of bacteria in water is monitored by automatically identifying colors and their intensity in sensor images using a deep convolutional neural network algorithm.
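The K-nearest neighbors classification of water quality mentioned for [146,147] can be sketched as follows. The three features (pH, dissolved oxygen in mg/L, and turbidity in NTU), the six training samples, and their "good"/"poor" labels are invented for illustration and do not come from those studies; features are standardized so that no single unit dominates the Euclidean distance.

```python
import numpy as np
from collections import Counter

def knn_predict(X_train, y_train, X_query, k=3):
    """Classify each query by majority vote among its k nearest training samples."""
    # Standardize with training statistics so that no feature's unit dominates the distance.
    mu, sd = X_train.mean(axis=0), X_train.std(axis=0)
    A, Q = (X_train - mu) / sd, (X_query - mu) / sd
    preds = []
    for q in Q:
        nearest = np.argsort(np.linalg.norm(A - q, axis=1))[:k]
        preds.append(Counter(y_train[nearest]).most_common(1)[0][0])
    return np.array(preds)

# Hypothetical samples: pH, dissolved oxygen (mg/L), turbidity (NTU).
X_train = np.array([[7.2, 8.5, 1.0], [7.0, 8.0, 2.0], [7.4, 9.0, 1.5],
                    [6.0, 3.0, 40.0], [5.8, 2.5, 55.0], [6.2, 3.5, 35.0]])
y_train = np.array(["good", "good", "good", "poor", "poor", "poor"])
X_query = np.array([[7.1, 8.2, 1.8], [5.9, 3.0, 50.0]])
preds = knn_predict(X_train, y_train, X_query, k=3)
```

An odd k avoids ties in two-class votes; in practice, k would be chosen by cross-validation on the available monitoring data.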

Air pollution requires attention, as it endangers public health if not adequately monitored [149]. The authors of [150] analyze particulate matter, one of the most critical air pollutants in the atmosphere. Several AI techniques are exploited to study and predict its components measured in a selected industrial zone, namely the neuro-fuzzy inference system, support vector regression, classification and regression trees, random forest, K-nearest neighbor, and extreme learning machine methods. Ozone concentration is another crucial measure related to global warming, air pollution management, and many other environmental issues. In [151], a wavelet transform approach combined with an artificial neural network predicts one-hour-ahead ozone concentration.
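In wavelet-ANN hybrids such as [151], the wavelet transform typically splits a pollutant time series into smooth and fluctuating components that serve as network inputs. The sketch below shows one level of the orthonormal Haar discrete wavelet transform and its inverse; the Haar wavelet, the single decomposition level, and the synthetic hourly ozone series are assumptions, since the wavelet family and network configuration of [151] are not specified here.

```python
import numpy as np

def haar_dwt(x):
    """One level of the orthonormal Haar discrete wavelet transform (even-length input)."""
    x = np.asarray(x, dtype=float)
    approx = (x[0::2] + x[1::2]) / np.sqrt(2.0)   # low-frequency trend
    detail = (x[0::2] - x[1::2]) / np.sqrt(2.0)   # high-frequency fluctuations
    return approx, detail

def haar_idwt(approx, detail):
    """Inverse of haar_dwt: perfectly reconstructs the original samples."""
    x = np.empty(2 * len(approx))
    x[0::2] = (approx + detail) / np.sqrt(2.0)
    x[1::2] = (approx - detail) / np.sqrt(2.0)
    return x

t = np.arange(48)                                  # two days of hourly samples
ozone = 40.0 + 10.0 * np.sin(2 * np.pi * t / 24)   # synthetic daily cycle (ppb), not real data
approx, detail = haar_dwt(ozone)
```

When building a forecaster, the decomposition should only use samples available at prediction time; transforming the full series at once would leak future values into the network inputs.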

From our analysis, we note that, among these diversified contributions, the need to classify and analyze hyperspectral images emerges and converges towards several scopes, such as land use, agriculture, food supply systems, and water and air quality. Moreover, most of the solutions consider the benefit of AI-based systems in terms of imaging product value. A significant number of proposals couple semi-supervised learning with deep learning. Furthermore, convolutional and generative neural networks are employed to increase the accuracy of hyperspectral image classification.

5. Discussion

The challenges in the space sector affect our lives and our perception of the future more than we might imagine. At the same time, AI is enabling progress and innovation in several sectors. In the space sector in particular, AI is helping scientists and industries provide robust solutions to the most relevant problems and, above all, is enabling discoveries. The problems that AI addresses are categorized into three main areas: (i) mission design and planning, (ii) space exploration, and (iii) Earth observation, as shown in Figure 1 and Figure 2. We did not treat these categories as watertight compartments, because several studies promote the exploitation of AI in new, overlapping, and disruptive areas [70,73,89,90,115,117,122,123,124,136]. Indeed, many of the solutions that innovate in one area also impact the results and possibilities of other areas. For example, improving satellite health monitoring systems helps both space exploration and Earth observation applications [57]. Similarly, knowledge management strategies can facilitate the planning of a space mission while also providing input for the data management challenges of space situational awareness [32].

Moreover, new AI-based techniques used to map and investigate the lunar and Martian surfaces [24,82,88] can improve our knowledge of the health status of the Earth [21]. Therefore, the challenges and discoveries reported here are all connected, as Figure 2 shows. For instance, reinforcement learning has applications in new frontiers of space exploration [38,39,40], where multi-robot systems on Mars [36,152] or the Internet of Space Things [55] can be trained to perform primary tasks by adapting to complex environments, as the technique requires. At the same time, this approach is also exploited to automate processes in agriculture and farming [90,118].

Deep learning has recently been widely used by researchers to respond to the space sector's countless challenges. For example, neural networks are used to improve land use for agriculture [101,109], mitigate the consequences of climate change [138,140], and monitor water quality [147]. Another example is the use of CNNs [67,68] in intelligent assistants that support astronauts' work and lives during a mission. Furthermore, RNNs can improve the telemetry analysis of space operations [62] by predicting on-board anomalies over time. GANs are also being applied to improve the classification of hyperspectral images and to detect anomalies in them [97,98,99].

For the same purposes, unsupervised learning has already been applied to satellite telemetry data to produce advanced health monitoring systems and prevent unexpected failures [56,59]. Another challenge pertains to information retrieval and knowledge reuse in space missions, where a design engineering assistant is needed to support space mission design. Several approaches address this problem with expert systems [52,63] or with a semi-supervised technique [48].

In addition, semi-supervised learning has been exploited in remote sensing, particularly for high-resolution EO images [106,107,108], but also for the classification of Mars imagery [82]. Supervised learning covers the most disparate problems, from supporting the different disaster management phases [126,127], to satellite fault diagnosis [61], to the automation of agriculture [118] and environmental issues such as water quality prediction [146,147] and mitigation of the effects of climate change [138,140,150]. Furthermore, fuzzy logic addresses similar issues related to climate change [140] and to water [147] and air [150] quality monitoring, as well as space exploration with multi-robot systems [78].

Natural language processing is widely exploited to monitor the physiological health of astronauts on a mission and improve their work efficiency [42,43], or to help astronauts through an intelligent assistant [20,21].

Figure 1. Challenges in Space Applications.

Figure 2. Artificial Intelligence techniques for space challenges.

6. Conclusions

AI is exploited in many application contexts to perform tasks in a human-like way, and it holds an important position in the digital transformation of society. The research community plays an important role in developing new AI-based solutions for the most arduous engineering challenges, and survey papers discussing such solutions have been published in almost all fields except space applications.

In this paper, we filled this gap by presenting a framework that surveys all the recent AI-based solutions used in space applications and classifies them based on (1) the specific problem to which they are applied and (2) the typology of the AI approach used to solve the problem, thus developing a taxonomy of all recently proposed solutions. In particular, we organized and classified over a hundred papers based on two main challenges (mission execution and mission object) and thirteen sub-challenges.

The presented classification allows the reader to identify the most recent solutions applied to solve specific challenges and the most important challenges of space applications that AI-based approaches can help face.

Our analysis has highlighted some future directions. A first direction concerns applying artificial intelligence techniques to satellite communication to make communication systems more efficient and secure. Another interesting direction regards the next frontiers of artificial intelligence for navigation based on global navigation satellite systems.

Author Contributions

Conceptualization, A.R. and G.L.; methodology, A.R.; validation, A.R. and G.L.; investigation, A.R.; resources, A.R.; data curation, A.R.; writing—original draft preparation, A.R.; writing—review and editing, G.L.; visualization, A.R.; supervision, G.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| AI | Artificial Intelligence |
| ES | Expert System |
| SVM | Support Vector Machine |
| ANN | Artificial Neural Network |
| CNN | Convolutional Neural Network |
| RNN | Recurrent Neural Network |
| DCNN | Deep Convolutional Neural Network |
| GAN | Generative Adversarial Network |
| NLP | Natural Language Processing |
| LSTM | Long Short-Term Memory |
| EO | Earth Observation |
| SSA | Space Situational Awareness |
| SST | Space Surveillance and Tracking |

References

- Gao, Y.; Chien, S. Review on space robotics: Toward top-level science through space exploration. Sci. Robot. 2017, 2, eaan5074.

- Fourati, F.; Alouini, M.S. Artificial intelligence for satellite communication: A review. Intell. Converg. Netw. 2021, 2, 213–243.

- Meß, J.G.; Dannemann, F.; Greif, F. Techniques of artificial intelligence for space applications—A survey. In European Workshop on On-Board Data Processing (OBDP2019); European Space Agency: Paris, France, 2019.

- Saravanan, R.; Sujatha, P. A state of art techniques on machine learning algorithms: A perspective of supervised learning approaches in data classification. In Proceedings of the 2018 Second International Conference on Intelligent Computing and Control Systems (ICICCS), Madurai, India, 14–15 June 2018; pp. 945–949.

- Cervantes, J.; Garcia-Lamont, F.; Rodríguez-Mazahua, L.; Lopez, A. A comprehensive survey on support vector machine classification: Applications, challenges and trends. Neurocomputing 2020, 408, 189–215.

- Speiser, J.L.; Miller, M.E.; Tooze, J.; Ip, E. A comparison of random forest variable selection methods for classification prediction modeling. Expert Syst. Appl. 2019, 134, 93–101.

- Sheykhmousa, M.; Mahdianpari, M.; Ghanbari, H.; Mohammadimanesh, F.; Ghamisi, P.; Homayouni, S. Support vector machine versus random forest for remote sensing image classification: A meta-analysis and systematic review. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 6308–6325.

- Berry, M.W.; Mohamed, A.; Yap, B.W. Supervised and Unsupervised Learning for Data Science; Springer: Cham, Switzerland, 2019.

- Sinaga, K.P.; Yang, M.S. Unsupervised K-means clustering algorithm. IEEE Access 2020, 8, 80716–80727.

- Van Engelen, J.E.; Hoos, H.H. A survey on semi-supervised learning. Mach. Learn. 2020, 109, 373–440.

- Botvinick, M.; Ritter, S.; Wang, J.X.; Kurth-Nelson, Z.; Blundell, C.; Hassabis, D. Reinforcement learning, fast and slow. Trends Cogn. Sci. 2019, 23, 408–422.

- Kelleher, J.D. Deep Learning; MIT Press: Cambridge, MA, USA, 2019.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Mikolov, T.; Karafiát, M.; Burget, L.; Černocký, J.; Khudanpur, S. Recurrent neural network based language model. In Proceedings of the Interspeech, Makuhari, Japan, 26–30 September 2010; Volume 2, pp. 1045–1048.

- Chowdhary, K. Natural language processing. In Fundamentals of Artificial Intelligence; Springer: New Delhi, India, 2020; pp. 603–649.

- Zadeh, L.A.; Klir, G.J.; Yuan, B. Fuzzy Sets, Fuzzy Logic, and Fuzzy Systems: Selected Papers; World Scientific: Singapore, 1996; Volume 6.

- Liebowitz, J. The Handbook of Applied Expert Systems; CRC Press: Boca Raton, FL, USA, 2019.

- Kua, J.; Loke, S.W.; Arora, C.; Fernando, N.; Ranaweera, C. Internet of Things in Space: A Review of Opportunities and Challenges from Satellite-Aided Computing to Digitally-Enhanced Space Living. Sensors 2021, 21, 8117.

- Dumitru, C.O.; Schwarz, G.; Castel, F.; Lorenzo, J.; Datcu, M. Artificial intelligence data science methodology for Earth Observation. In Advanced Analytics and Artificial Intelligence Applications; InTech Publishing: London, UK, 2019; pp. 1–20.

- Bang, H.; Virós Martin, A.; Prat, A.; Selva, D. Daphne: An intelligent assistant for architecting earth observing satellite systems. In Proceedings of the 2018 AIAA Information Systems-AIAA Infotech@ Aerospace, Kissimmee, FL, USA, 8–12 January 2018; p. 1366.

- Viros Martin, A.; Selva, D. Explanation Approaches for the Daphne Virtual Assistant. In Proceedings of the AIAA Scitech 2020 Forum, Orlando, FL, USA, 6–10 January 2020; p. 2254.

- Roulette, J. OneWeb, SpaceX Satellites Dodged a Potential Collision in Orbit. 2021. Available online: https://www.theverge.com/2021/4/9/22374262/oneweb-spacex-satellites-dodged-potential-collision-orbit-space-force (accessed on 15 September 2021).

- Weiss, T.R. AIKO: Autonomous Satellite Operations Thanks to Artificial Intelligence. 2019. Available online: https://www.esa.int/Applications/Telecommunications_Integrated_Applications/Technology_Transfer/AIKO_Autonomous_satellite_operations_thanks_to_Artificial_Intelligence (accessed on 15 September 2021).

- Gaudet, B.; Linares, R.; Furfaro, R. Deep reinforcement learning for six degree-of-freedom planetary landing. Adv. Space Res. 2020, 65, 1723–1741.

- Weiss, T.R. The AI Inside NASA’s Latest Mars Rover, Perseverance. 2021. Available online: https://www.datanami.com/2021/02/18/the-ai-inside-nasas-latest-mars-rover-perseverance (accessed on 15 September 2021).

- Airbus. “Hello, I am CIMON*!”. 2018. Available online: https://www.airbus.com/newsroom/press-releases/en/2018/02/hello--i-am-cimon-.html (accessed on 15 September 2021).

- Hardesty, L. A Method to Image Black Holes. 2016. Available online: https://news.mit.edu/2016/method-image-black-holes-0606 (accessed on 15 September 2021).

- Linares, R.; Furfaro, R.; Reddy, V. Space objects classification via light-curve measurements using deep convolutional neural networks. J. Astronaut. Sci. 2020, 67, 1063–1091.

- Dattilo, A.; Vanderburg, A.; Shallue, C.J.; Mayo, A.W.; Berlind, P.; Bieryla, A.; Calkins, M.L.; Esquerdo, G.A.; Everett, M.E.; Howell, S.B.; et al. Identifying exoplanets with deep learning. ii. two new super-earths uncovered by a neural network in k2 data. Astron. J. 2019, 157, 169.

- Li, B.; Huang, J.; Feng, Y.; Wang, F.; Sang, J. A machine learning-based approach for improved orbit predictions of LEO space debris with sparse tracking data from a single station. IEEE Trans. Aerosp. Electron. Syst. 2020, 56, 4253–4268.

- Linares, R.; Furfaro, R. Space object classification using deep convolutional neural networks. In Proceedings of the 2016 19th International Conference on Information Fusion (FUSION), Heidelberg, Germany, 5–8 July 2016; pp. 1140–1146.

- Oltrogge, D.L.; Alfano, S. The technical challenges of better space situational awareness and space traffic management. J. Space Saf. Eng. 2019, 6, 72–79.

- Hilton, S.; Cairola, F.; Gardi, A.; Sabatini, R.; Pongsakornsathien, N.; Ezer, N. Uncertainty Quantification for Space Situational Awareness and Traffic Management. Sensors 2019, 19, 4361.

- Furfaro, R.; Linares, R.; Gaylor, D.; Jah, M.; Walls, R. Resident space object characterization and behavior understanding via machine learning and ontology-based Bayesian networks. In Proceedings of the Advanced Maui Optical and Space Surveillance Technologies Conference (AMOS), Maui, HI, USA, 20–23 September 2016.

- Shekhtman, L. NASA Takes a Cue From Silicon Valley to Hatch Artificial Intelligence Technologies. 2019. Available online: https://www.nasa.gov/feature/goddard/2019/nasa-takes-a-cue-from-silicon-valley-to-hatch-artificial-intelligence-technologies (accessed on 15 September 2021).

- Huang, Y.; Wu, S.; Mu, Z.; Long, X.; Chu, S.; Zhao, G. A Multi-agent Reinforcement Learning Method for Swarm Robots in Space Collaborative Exploration. In Proceedings of the 2020 6th International Conference on Control, Automation and Robotics (ICCAR), Singapore, 20–23 April 2020; pp. 139–144.

- Furfaro, R.; Bloise, I.; Orlandelli, M.; Di Lizia, P.; Topputo, F.; Linares, R. Deep learning for autonomous lunar landing. In Proceedings of the 2018 AAS/AIAA Astrodynamics Specialist Conference, Snowbird, UT, USA, 19–23 August 2018; Volume 167, pp. 3285–3306.

- Gaudet, B.; Furfaro, R.; Linares, R. Reinforcement learning for angle-only intercept guidance of maneuvering targets. Aerosp. Sci. Technol. 2020, 99, 105746.

- Furfaro, R.; Scorsoglio, A.; Linares, R.; Massari, M. Adaptive generalized ZEM-ZEV feedback guidance for planetary landing via a deep reinforcement learning approach. Acta Astronaut. 2020, 171, 156–171.

- Scorsoglio, A.; D’Ambrosio, A.; Ghilardi, L.; Gaudet, B.; Curti, F.; Furfaro, R. Image-Based Deep Reinforcement Meta-Learning for Autonomous Lunar Landing. J. Spacecr. Rocket. 2022, 59, 153–165.

- Cinelli, I. The Role of Artificial Intelligence (AI) in Space Healthcare. Aerosp. Med. Hum. Perform. 2020, 91, 537–539.

- Trofin, R.S.; Chiru, C.; Vizitiu, C.; Dinculescu, A.; Vizitiu, R.; Nistorescu, A. Detection of Astronauts’ Speech and Language Disorder Signs during Space Missions using Natural Language Processing Techniques. In Proceedings of the 2019 E-Health and Bioengineering Conference (EHB), Iasi, Romania, 21–23 November 2019; pp. 1–4.

- Yan, F.; Shiqi, L.; Kan, Q.; Xue, L.; Li, C.; Jie, T. Language-facilitated human–robot cooperation within a human cognitive modeling infrastructure: A case in space exploration task. In Proceedings of the 2020 IEEE International Conference on Human-Machine Systems (ICHMS), Rome, Italy, 7–9 September 2020; pp. 1–3.

- Durbha, S.S.; King, R.L. Semantics-enabled framework for knowledge discovery from Earth observation data archives. IEEE Trans. Geosci. Remote Sens. 2005, 43, 2563–2572.

- Denis, G.; de Boissezon, H.; Hosford, S.; Pasco, X.; Montfort, B.; Ranera, F. The evolution of Earth Observation satellites in Europe and its impact on the performance of emergency response services. Acta Astronaut. 2016, 127, 619–633.

- The European Commission’s Science and Knowledge Service. Earth Observation. 2021. Available online: https://ec.europa.eu/jrc/en/research-topic/earth-observation (accessed on 15 September 2021).

- OECD. Earth Observation for Decision-Making. 2017. Available online: https://www.oecd.org/env/indicators-modelling-outlooks/Earth_Observation_for_Decision_Making.pdf (accessed on 15 September 2021).

- Berquand, A.; McDonald, I.; Riccardi, A.; Moshfeghi, Y. The automatic categorisation of space mission requirements for the Design Engineering Assistant. In Proceedings of the 70th International Astronautical Congress, Washington, DC, USA, 21–25 October 2019.

- Murdaca, F.; Berquand, A.; Riccardi, A.; Soares, T.; Gerené, S.; Brauer, N.; Kumar, K. Artificial intelligence for early design of space missions in support of concurrent engineering sessions. In Proceedings of the 8th International Systems & Concurrent Engineering for Space Applications Conference, Glasgow, UK, 26–28 September 2018.

- Simpson, B.C.; Selva, D.; Richardson, D. Extracting Science Traceability Graphs from Mission Concept Documentation using Natural Language Processing. In Proceedings of the AIAA SCITECH 2022 Forum, San Diego, CA, USA, 3–7 January 2022; p. 1182.

- Berquand, A.; Murdaca, F.; Riccardi, A.; Soares, T.; Gerené, S.; Brauer, N.; Kumar, K. Artificial intelligence for the early design phases of space missions. In Proceedings of the 2019 IEEE Aerospace Conference, Big Sky, MT, USA, 2–9 March 2019; pp. 1–20.

- Ferreira, P.M.G.V.; Ambrosio, P.A.M. A proposal an innovative Framework for the Conception of the Ground Segment of Space Systems. In Proceedings of the 71st International Astronautical Congress (IAC)—The CyberSpace Edition, IAC 2020, Online, 12–14 October 2020.

- Ren, X.; Chen, Y. How Can Artificial Intelligence Help With Space Missions—A Case Study: Computational Intelligence-Assisted Design of Space Tether for Payload Orbital Transfer Under Uncertainties. IEEE Access 2019, 7, 161449–161458.

- Akyildiz, I.F.; Kak, A. The internet of space things/cubesats. IEEE Netw. 2019, 33, 212–218.

- Jagannath, A.; Jagannath, J.; Drozd, A. Artificial intelligence-based cognitive cross-layer decision engine for next-generation space mission. In Proceedings of the 2019 IEEE Cognitive Communications for Aerospace Applications Workshop (CCAAW), Cleveland, OH, USA, 25–26 June 2019; pp. 1–6.

- Yairi, T.; Fukushima, Y.; Liew, C.F.; Sakai, Y.; Yamaguchi, Y. A Data-Driven Approach to Anomaly Detection and Health Monitoring for Artificial Satellites. In Advances in Condition Monitoring and Structural Health Monitoring; Springer: Singapore, 2021; pp. 129–141.

- Hassanien, A.E.; Darwish, A.; Abdelghafar, S. Machine learning in telemetry data mining of space mission: Basics, challenging and future directions. Artif. Intell. Rev. 2020, 53, 3201–3230.

- Abdelghafar, S.; Darwish, A.; Hassanien, A.E. Intelligent health monitoring systems for space missions based on data mining techniques. In Machine Learning and Data Mining in Aerospace Technology; Springer: Cham, Switzerland, 2020; pp. 65–78.

- Yairi, T.; Takeishi, N.; Oda, T.; Nakajima, Y.; Nishimura, N.; Takata, N. A data-driven health monitoring method for satellite housekeeping data based on probabilistic clustering and dimensionality reduction. IEEE Trans. Aerosp. Electron. Syst. 2017, 53, 1384–1401.

- Geng, F.; Li, S.; Huang, X.; Yang, B.; Chang, J.; Lin, B. Fault diagnosis and fault tolerant control of spacecraft attitude control system via deep neural network. Chin. Space Sci. Technol. 2020, 40, 1.

- Ibrahim, S.K.; Ahmed, A.; Zeidan, M.A.E.; Ziedan, I.E. Machine learning techniques for satellite fault diagnosis. Ain Shams Eng. J. 2020, 11, 45–56.

- O’Meara, C.; Schlag, L.; Wickler, M. Applications of deep learning neural networks to satellite telemetry monitoring. In Proceedings of the 2018 SpaceOps Conference, Marseille, France, 28 May–1 June 2018; p. 2558.

- Feruglio, L. Artificial Intelligence for Small Satellites Mission Autonomy; Politecnico di Torino: Torino, Italy, 2017; p. 165.

- Amoruso, L.; Abbattista, C.; Antonetti, S.; Drimaco, D.; Feruglio, L.; Fortunato, V.; Iacobellis, M. AI-express In-orbit Smart Services for Small Satellites. In Proceedings of the 2020 International Astronautical Congress (IAC), Online, 12–14 October 2020.

- Asrar, M.F.; Saint-Jacques, D.; Williams, D.; Clark, J. Assessing current medical care in space, and updating medical training & machine based learning to adapt to the needs of Deep Space Human Missions. In Proceedings of the 2020 International Astronautical Congress (IAC), Online, 12–14 October 2020.

- Alcibiade, A.; Schlacht, I.L.; Finazzi, F.; Di Capua, M.; Ferrario, G.; Musso, G.; Foing, B. Reliability in extreme isolation: A natural language processing tool for stress self-assessment. In International Conference on Applied Human Factors and Ergonomics; Springer: Cham, Switzerland, 2020; pp. 350–357.

- Zhang, R.; Wang, Z.; Zhang, Y. Astronaut visual tracking of flying assistant robot in space station based on deep learning and probabilistic model. Int. J. Aerosp. Eng. 2018, 2018, 6357185.

- Zhang, R.; Wang, Z.; Zhang, Y. A person-following nanosatellite for in-cabin astronaut assistance: System design and deep-learning-based astronaut visual tracking implementation. Acta Astronaut. 2019, 162, 121–134.

- Zhang, R.; Zhang, Y.; Zhang, X. Tracking In-Cabin Astronauts Using Deep Learning and Head Motion Clues. IEEE Access 2020, 9, 2680–2693.

- Kumar, S.; Tomar, R. The role of artificial intelligence in space exploration. In Proceedings of the 2018 International Conference on Communication, Computing and Internet of Things (IC3IoT), Chennai, India, 15–17 February 2018; pp. 499–503.

- Acquatella, P. Development of automation & robotics in space exploration. In Proceedings of the AIAA SPACE 2009 Conference & Exposition, Pasadena, CA, USA, 14–17 September 2009; pp. 1–7.

- Vasile, M.; Rodríguez-Fernández, V.; Serra, R.; Camacho, D.; Riccardi, A. Artificial intelligence in support to space traffic management. In Proceedings of the 68th International Astronautical Congress: Unlocking Imagination, Fostering Innovation and Strengthening Security, IAC 2017, Adelaide, Australia, 25–29 September 2017; pp. 3822–3831.

- Izzo, D.; Märtens, M.; Pan, B. A survey on artificial intelligence trends in spacecraft guidance dynamics and control. Astrodynamics 2019, 3, 287–299.

- Huang, X.; Li, S.; Yang, B.; Sun, P.; Liu, X.; Liu, X. Spacecraft guidance and control based on artificial intelligence: Review. Acta Aeronaut. Astronaut. Sin. 2021, 42, 524201.

- Colby, M.; Yliniemi, L.; Tumer, K. Autonomous multiagent space exploration with high-level human feedback. J. Aerosp. Inf. Syst. 2016, 13, 301–315.

- Semenov, A. Elastic computing self-organizing for artificial intelligence space exploration. J. Phys. Conf. Ser. 2021, 1925, 012071.

- Carpentiero, M.; Sabatini, M.; Palmerini, G.B. Swarm of autonomous rovers for cooperative planetary exploration. In Proceedings of the 2017 International Astronautical Congress (IAC), Adelaide, Australia, 25–29 September 2017.

- Choi, D.; Kim, D. Intelligent Multi-Robot System for Collaborative Object Transportation Tasks in Rough Terrains. Electronics 2021, 10, 1499.

- Fluke, C.J.; Jacobs, C. Surveying the reach and maturity of machine learning and artificial intelligence in astronomy. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2020, 10, e1349.

- Bird, J.; Colburn, K.; Petzold, L.; Lubin, P. Model Optimization for Deep Space Exploration via Simulators and Deep Learning. arXiv 2020, arXiv:2012.14092.

- Bird, J.; Petzold, L.; Lubin, P.; Deacon, J. Advances in deep space exploration via simulators & deep learning. New Astron. 2021, 84, 101517.

- Wang, W.; Lin, L.; Fan, Z.; Liu, J. Semi-Supervised Learning for Mars Imagery Classification. In Proceedings of the 2021 IEEE International Conference on Image Processing (ICIP), Anchorage, AK, USA, 19–22 September 2021; pp. 499–503.

- Yang, B.; Liu, P.; Feng, J.; Li, S. Two-stage pursuit strategy for incomplete-information impulsive space pursuit-evasion mission using reinforcement learning. Aerospace 2021, 8, 299.

- Yang, B.; Li, S.; Feng, J.; Vasile, M. Fast solver for J2-perturbed Lambert problem using deep neural network. J. Guid. Control Dyn. 2021, 45, 1–10.

- Yang, H.; Yan, J.; Li, S. Fast computation of the Jovian-moon three-body flyby map based on artificial neural networks. Acta Astronaut. 2022, 193, 710–720.

- Yang, B.; Feng, J.; Huang, X.; Li, S. Hybrid method for accurate multi-gravity-assist trajectory design using pseudostate theory and deep neural networks. Sci. China Technol. Sci. 2022, 65, 595–610.

- Yan, J.; Yang, H.; Li, S. ANN-based method for fast optimization of Jovian-moon gravity-assisted trajectories in CR3BP. Adv. Space Res. 2022, 69, 2865–2882.

- Silvestrini, S.; Lunghi, P.; Piccinin, M.; Zanotti, G.; Lavagna, M. Artificial Intelligence Techniques in Autonomous Vision-Based Navigation System for Lunar Landing. In Proceedings of the 71st International Astronautical Congress (IAC 2020), Online, 12–14 October 2020; pp. 1–11.

- Salcedo-Sanz, S.; Ghamisi, P.; Piles, M.; Werner, M.; Cuadra, L.; Moreno-Martínez, A.; Izquierdo-Verdiguier, E.; Muñoz-Marí, J.; Mosavi, A.; Camps-Valls, G. Machine learning information fusion in Earth observation: A comprehensive review of methods, applications and data sources. Inf. Fusion 2020, 63, 256–272.

- Ferreira, B.; Iten, M.; Silva, R.G. Monitoring sustainable development by means of earth observation data and machine learning: A review. Environ. Sci. Eur. 2020, 32, 1–17.

- Furano, G.; Meoni, G.; Dunne, A.; Moloney, D.; Ferlet-Cavrois, V.; Tavoularis, A.; Byrne, J.; Buckley, L.; Psarakis, M.; Voss, K.O.; et al. Towards the use of artificial intelligence on the edge in space systems: Challenges and opportunities. IEEE Aerosp. Electron. Syst. Mag. 2020, 35, 44–56.

- Meng, Q.; Huang, M.; Xu, Y.; Liu, N.; Xiang, X. Decentralized Distributed Deep Learning with Low-Bandwidth Consumption for Smart Constellations. Space Sci. Technol. 2021, 2021, 9879246.

- Pastena, M.C.; Mathieu, B.; Regan, P.; Esposito, A.; Conticello, M.; Van Dijk, S.; Vercruyssen, C.; Foglia, N.; Koelemann, P.; Hefele, R.J. ESA Earth Observation Directorate NewSpace initiatives. In Proceedings of the USU Conference on Small Satellites, Logan, UT, USA, 3–8 August 2019.

- Camps-Valls, G.; Sejdinovic, D.; Runge, J.; Reichstein, M. A perspective on Gaussian processes for Earth observation. Natl. Sci. Rev. 2019, 6, 616–618.

- Stromann, O.; Nascetti, A.; Yousif, O.; Ban, Y. Dimensionality reduction and feature selection for object-based land cover classification based on Sentinel-1 and Sentinel-2 time series using Google Earth Engine. Remote Sens. 2019, 12, 76.