A Survey of Target Detection and Recognition Methods in Underwater Turbid Areas

Abstract

1. Introduction

2. Research Field Analysis

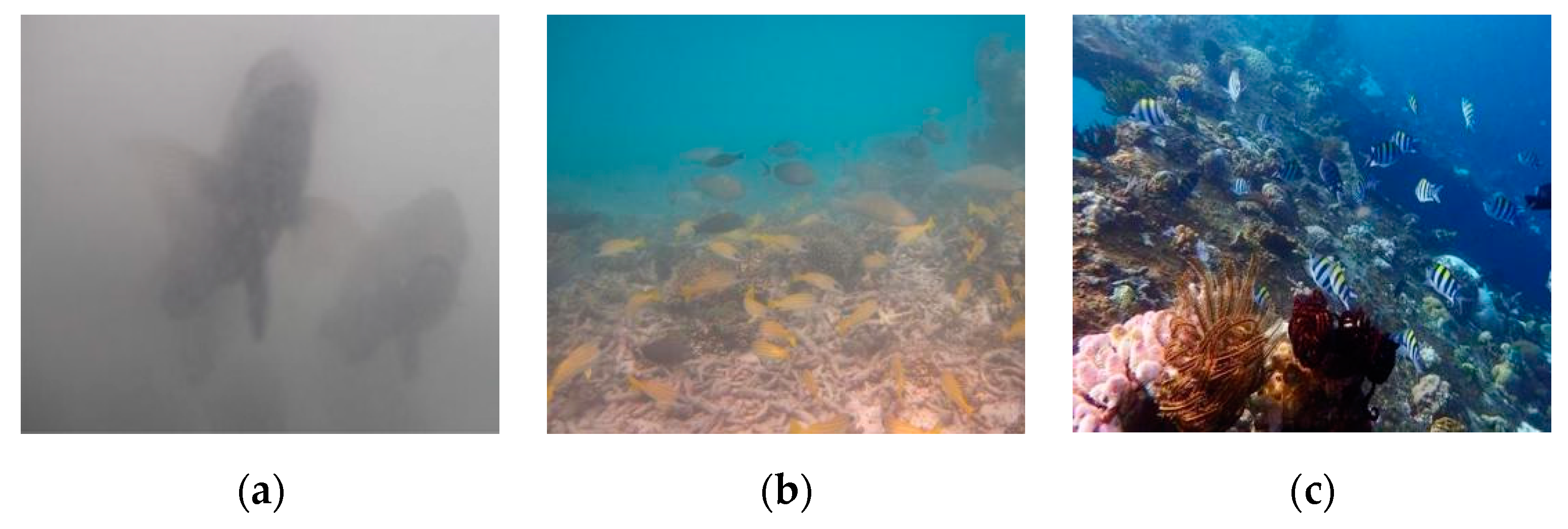

3. Problems in Turbid Areas

4. Research on Target Detection and Recognition in Turbid Waters

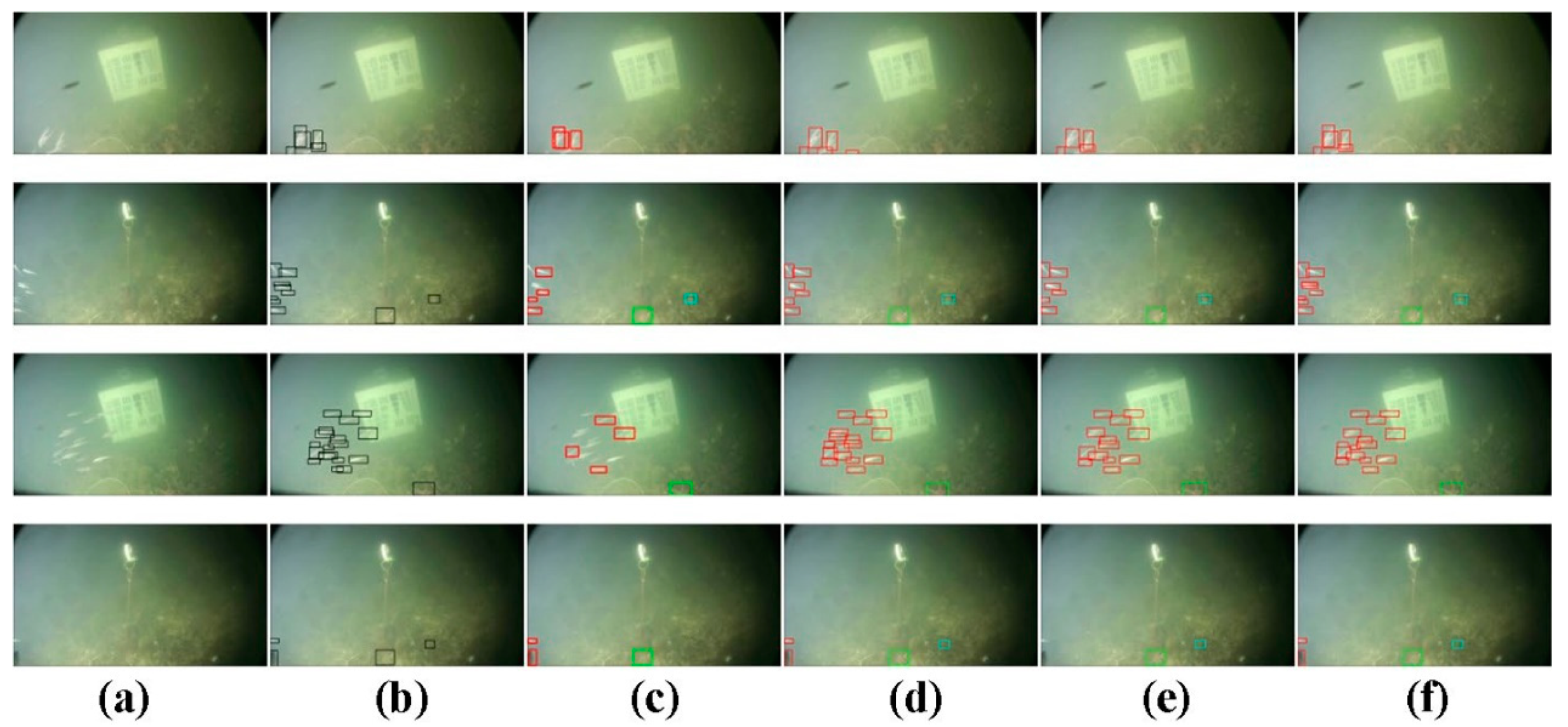

4.1. Target Detection Based on Deep Learning Methods

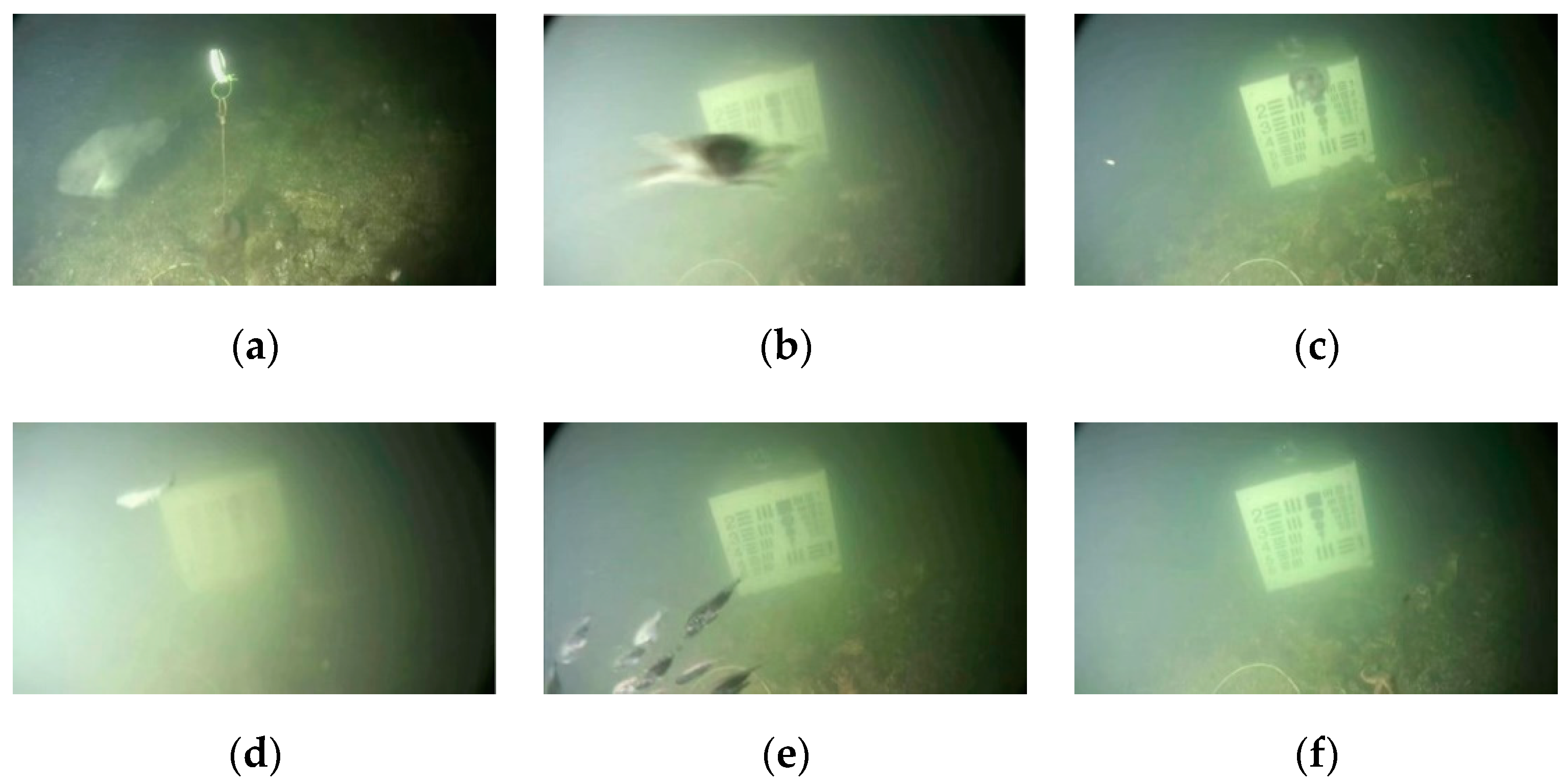

4.2. Underwater Image Restoration and Enhancement Methods

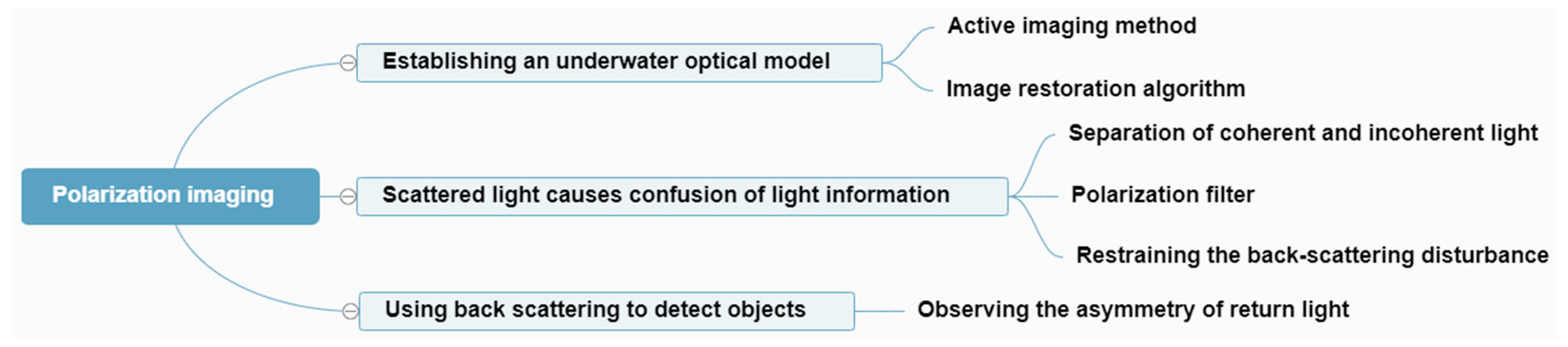

4.3. Underwater Image Processing Based on Polarization Imaging and Scattering

4.4. Other Methods

4.5. Engineering Technology Summary

4.6. Datasets for Target Detection and Recognition in Turbid Water

5. Applications of Underwater Target Detection and Recognition Technology

5.1. Target Detection and Recognition of Underwater Organisms

5.2. Target Detection and Recognition in Underwater Environments

5.3. Target Detection and Recognition of Underwater Equipment

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AUV | Autonomous Underwater Vehicle |
| CNN | Convolutional Neural Network |
| DCP | Dark Channel Prior |
| DOP | Degree of Polarization |
| ENL | Equivalent Number of Looks |
| GA | Genetic Algorithm |
| HICRD | Heron Island Coral Reef Dataset |
| IE | Information Entropy |
| LSTM | Long Short-Term Memory |
| MLP | Multilayer Perceptron |
| MSE | Mean Square Error |
| MUED | Marine Underwater Environment Database |
| OAM | Orbital Angular Momentum |
| PSNR | Peak Signal-to-Noise Ratio |
| RCNN | Region-based Convolutional Neural Network |
| RNN | Recurrent Neural Network |
| ROV | Remotely Operated Vehicle |
| SAM | Spectral Angle Mapper |
| SFPS | Simulated Feature Point Selection |
| SNR | Signal-to-Noise Ratio |
| SQUID | Stereo Quantitative Underwater Image Dataset |
| SSD | Single Shot MultiBox Detector |
| SSIM | Structural Similarity Index Measure |
| SVM | Support Vector Machine |
| UDCP | Underwater Dark Channel Prior |
| UD-ETR | Underwater Dark Channel Prior based Energy Transmission Restoration |
| UHI | Underwater Hyperspectral Imager |
| UIEB | Underwater Image Enhancement Benchmark |
| YOLO | You Only Look Once |

References

- Ren, S.Q.; He, K.M.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- George, M.; Lakshmi, C. Object Detection using the Canny Edge Detector. Int. J. Sci. Res. 2013, 2, 213.

- Cao, N.W. White Light Polarization Imaging for Underwater Objects. Chin. J. Quantum Electron. 1999, 16, 6.

- Chen, Y.T.; Wang, W.; Li, L.; Kelly, R.; Xie, G. Obstacle effects on electrocommunication with applications to object detection of underwater robots. Bioinspiration Biomim. 2019, 14, 056011.

- Web of Science. Available online: https://www.webofscience.com/wos/alldb/basic-search (accessed on 3 January 2022).

- Yang, X.; Yin, C.; Zhang, Z.; Li, Y.; Liang, W.; Wang, D.; Tang, Y.; Fan, H. Robust Chromatic Adaptation Based Color Correction Technology for Underwater Images. Appl. Sci. 2020, 10, 6392.

- He, D.M.; Seet, G.G.L. Underwater vision enhancement in turbid water by range-gated imaging system. In Proceedings of the Technical Digest. Summaries of Papers Presented at the Conference on Lasers and Electro-Optics Postconference Technical Digest (IEEE Cat. No.01CH37170), Baltimore, MD, USA, 11 May 2001; p. 378.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014.

- Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Computer Vision—ECCV 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer: Cham, Switzerland, 2016; Volume 9905, pp. 21–37.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525.

- Lakshmi, M.D.; Santhanam, S.M. Underwater Image Recognition Detector using Deep ConvNet. In Proceedings of the 2020 National Conference on Communications (NCC), Kharagpur, India, 21–23 February 2020.

- Lau, P.Y.; Lai, S.C. Localizing fish in highly turbid underwater images. In Proceedings of the International Workshop on Advanced Image Technology, Online, 8–13 March 2021.

- Koonce, B. MobileNet v2. In Convolutional Neural Networks with Swift for Tensorflow; Apress: Berkeley, CA, USA, 2021; pp. 99–107.

- Wu, Z.; Shen, C.; Hengel, A. Wider or Deeper: Revisiting the ResNet Model for Visual Recognition. Pattern Recognit. 2016, 90, 119–133.

- Wen, F. MOBILENET. U.S. Patent US20120309352A1, 30 April 2019.

- Iandola, F.; Moskewicz, M.; Karayev, S.; Girshick, R.; Darrell, T.; Keutzer, K. DenseNet: Implementing Efficient ConvNet Descriptor Pyramids. arXiv 2014, arXiv:1404.1869.

- Wei, X.; Yu, L.; Tian, S.; Feng, P.; Ning, X. Underwater target detection with an attention mechanism and improved scale. Multimed. Tools Appl. 2021, 80, 33747–33761.

- Liu, Y.; Meng, W.; Zong, H. Jellyfish Recognition and Density Calculation Based on Image Processing and Deep Learning. In Proceedings of the 2020 Chinese Control and Decision Conference (CCDC), Hefei, China, 22–24 August 2020.

- Ahmed, S.; Khan, M.; Labib, M.; Chowdhury, A.Z.M.E. An Observation of Vision Based Underwater Object Detection and Tracking. In Proceedings of the 2020 3rd International Conference on Emerging Technologies in Computer Engineering: Machine Learning and Internet of Things (ICETCE), Jaipur, India, 7–8 February 2020.

- Zheng, Y.; Sun, X.H. The Improvement of Laplace Operator in Image Edge Detection. J. Shenyang Archit. Civ. Eng. Inst. 2005, 21, 268–271.

- Saini, A.; Biswas, M. Object Detection in Underwater Image by Detecting Edges using Adaptive Thresholding. In Proceedings of the 2019 3rd International Conference on Trends in Electronics and Informatics (ICOEI), Tirunelveli, India, 23–25 April 2019.

- Yi, Z.; Han, X.; Han, Z.; Zhao, L. Edge detection algorithm of image fusion based on improved Sobel operator. In Proceedings of the 2017 IEEE 3rd Information Technology and Mechatronics Engineering Conference (ITOEC), Chongqing, China, 3–5 October 2017.

- Palconit, M.G.B.; Almero, V.J.D.; Rosales, M.A.; Sybingco, E.; Bandala, A.A.; Vicerra, R.R.P.; Dadios, E.P. Towards Tracking: Investigation of Genetic Algorithm and LSTM as Fish Trajectory Predictors in Turbid Water. In Proceedings of the 2020 IEEE Region 10 Conference (TENCON), Osaka, Japan, 16–19 November 2020.

- Li, H.; Ji, S.; Tan, X.; Li, Z.; Xiang, Y.; Lv, P.; Duan, H. Effect of Reynolds number on drag reduction in turbulent boundary layer flow over liquid–gas interface. Phys. Fluids 2020, 32, 122111.

- Thomas, R.; Thampi, L.; Kamal, S.; Balakrishnan, A.A.; Mithun Haridas, T.P.; Supriya, M.H. Dehazing Underwater Images Using Encoder Decoder Based Generic Model-Agnostic Convolutional Neural Network. In Proceedings of the 2021 International Symposium on Ocean Technology (SYMPOL), Kochi, India, 9–11 December 2021; pp. 1–4.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error measurement to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Dudhane, A.; Patil, P.W.; Murala, S. An End-to-End Network for Image De-Hazing and Beyond. IEEE Trans. Emerg. Top. Comput. Intell. 2020, 6, 159–170.

- Yin, X.; Ma, J. General Model-Agnostic Transfer Learning for Natural Degradation Image Enhancement. In Proceedings of the 2021 International Symposium on Computer Technology and Information Science (ISCTIS), Guilin, China, 4–6 June 2021; pp. 250–257.

- Li, C.; Anwar, S.; Porikli, F. Underwater scene prior inspired deep underwater image and video enhancement. Pattern Recognit. 2020, 98, 107038.

- Islam, M.J.; Xia, Y.; Sattar, J. Fast Underwater Image Enhancement for Improved Visual Perception. IEEE Robot. Autom. Lett. 2020, 5, 3227–3234.

- Martin, M.; Sharma, S.; Mishra, N.; Pandey, G. UD-ETR Based Restoration & CNN Approach for Underwater Object Detection from Multimedia Data. In Proceedings of the 2nd International Conference on Data, Engineering and Applications (IDEA), Bhopal, India, 28–29 February 2020.

- Yang, M.; Sowmya, A.; Wei, Z.Q.; Zheng, B. Offshore Underwater Image Restoration Using Reflection-Decomposition-Based Transmission Map Estimation. IEEE J. Ocean. Eng. 2019, 45, 521–533.

- Cecilia, S.M.; Murugan, S.S. Edge Aware Turbidity Restoration of Single Shallow Coastal Water Image. J. Phys. Conf. Ser. 2021, 1911, 27–29.

- Zhou, J.C.; Yang, T.Y.; Ren, W.Q.; Zhang, D.; Zhang, W.S. Underwater image restoration via depth map and illumination estimation based on single image. Opt. Express 2021, 29, 29864–29886.

- Li, Y.; Lu, H.; Li, J.; Li, X.; Li, Y.; Serikawa, S. Underwater image de-scattering and classification by deep neural network. Comput. Electr. Eng. 2016, 54, 68–77.

- Yang, L.M. Research on the Target Enhancement Technology Based on Polarization Imaging. Master’s Thesis, Xi’an University of Technology, Xi’an, China, 28 May 2018.

- Drews, P., Jr.; Nascimento, E.R.; Campos, M.; Elfes, A. Automatic restoration of underwater monocular sequences of images. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots & Systems, Hamburg, Germany, 28 September–3 October 2015.

- Fischler, M.A.; Bolles, R.C. Random Sample Consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

- Cheng, H.; Wan, Z.; Zhang, R.; Chu, J. Visibility improvement in turbid water by the fusion technology of Mueller matrix images. In Proceedings of the 2021 IEEE International Conference on Artificial Intelligence and Computer Applications (ICAICA), Dalian, China, 28–30 June 2021; pp. 838–842.

- Zhou, J.C.; Wang, Y.Y.; Zhang, W.S.; Li, C.Y. Underwater image restoration via feature priors to estimate background light and optimized transmission map. Opt. Express 2021, 29, 28228–28245.

- Bailey, B.C.; Blatt, J.H.; Caimi, F.M. Radiative Transfer Modeling and Analysis of Spatially Variant and Coherent Illumination for Undersea Object Detection. IEEE J. Ocean. Eng. 2003, 28, 570–582.

- Han, P.L. Research on Polarization Imaging Exploration Technology of Underwater Target. Ph.D. Thesis, Xi’an University of Electronic Science and Technology, Xi’an, China, June 2018.

- Huang, B.J. Optimization Technology of Polarization Imaging Contrast in Complex Environment. Master’s Thesis, Tianjin University, Tianjin, China, December 2016.

- Cochenour, B.; Rodgers, L.; Laux, A.; Mullen, L.; Morgan, K.; Miller, J.K.; Johnson, E.G. The detection of objects in a turbid underwater medium using orbital angular momentum (OAM). In Proceedings of the SPIE Defense + Security 2017, Anaheim, CA, USA, 9–13 April 2017. Society of Photo-Optical Instrumentation Engineers (SPIE) Conference Series.

- Amer, K.O.; Elbouz, M.; Alfalou, A.; Brosseau, C.; Hajjami, J. Enhancing underwater optical imaging by using a low-pass polarization filter. Opt. Express 2019, 27, 621.

- Hui, W.; Zhao, L.; Huang, B.; Li, X.; Wang, H.; Liu, T. Enhancing Visibility of Polarimetric Underwater Image by Transmittance Correction. IEEE Photonics J. 2017, 9, 6802310.

- Zhao, R.X. Research on the Target Detection Method in Turbid Media Based on Polarization Differential Imaging. Master’s Thesis, Nanjing University of Technology, Nanjing, China, 2020.

- Hunt, C.F.; Young, J.T.; Brothers, J.A.; Hutchins, J.O.; Rumbaugh, L.K.; Illig, D.W. Target Detection in Underwater Lidar using Machine Learning to Classify Peak Signals. In Proceedings of the Global Oceans 2020, Singapore—U.S. Gulf Coast, Biloxi, MS, USA, 5–30 October 2020; pp. 1–6.

- Hu, H.; Zhao, L.; Li, X.; Wang, H.; Liu, T. Underwater image recovery under the non-uniform optical field based on polarimetric imaging. IEEE Photonics J. 2018, 10, 6900309.

- Wu, C.; Lee, R.; Davis, C. Object detection and geometric profiling through dirty water media using asymmetry properties of backscattered signals. In Proceedings of the Ocean Sensing and Monitoring X, Orlando, FL, USA, 15–18 April 2018; Volume 10631.

- Liu, L.X. Research on Target Detection and Tracking Technology of Imaging Sonar. Ph.D. Thesis, Harbin Engineering University, Harbin, China, 20 November 2015.

- Github. Available online: https://github.com/openimages/dataset (accessed on 30 January 2022).

- Jian, M.; Qiang, Q.; Yu, H.; Dong, J.; Cui, C.; Nie, X.; Zhang, H.; Yin, Y.; Lam, K.-M. The extended marine underwater environment database and baseline evaluations. Appl. Soft Comput. 2019, 80, 425–437.

- Pedersen, M.; Haurum, J.B.; Gade, R.; Moeslund, T.B. Detection of Marine Animals in a New Underwater Dataset with Varying Visibility. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Long Beach, CA, USA, 15–20 June 2019.

- Li, C.; Guo, C.; Ren, W.; Cong, R.; Hou, J.; Kwong, S.; Tao, D. An Underwater Image Enhancement Benchmark Dataset and Beyond. IEEE Trans. Image Process. 2019, 29, 4376–4389.

- Cecilia, S.M.; Murugan, S.; Padmapriya, N. Analysis of Various Dehazing Algorithms for Underwater Images. In Proceedings of the 2019 International Symposium on Ocean Technology (SYMPOL), SSN College of Engineering, Chennai, India, 11–13 December 2019.

- Han, J.; Shoeiby, M.; Malthus, T.; Botha, E.; Anstee, J.; Anwar, S.; Wei, R.; Armin, M.A.; Li, H.; Petersson, L. Underwater Image Restoration via Contrastive Learning and a Real-world Dataset. arXiv 2021, arXiv:2106.10718.

- Galdran, A.; Pardo, D.; Picón, A.; Alvarez-Gila, A. Automatic Red-Channel underwater image restoration. J. Vis. Commun. Image Represent. 2015, 26, 132–145.

- Kandimalla, V.; Richard, M.; Smith, F.; Quirion, J.; Torgo, L.; Whidden, C. Automated detection, classification and counting of fish in fish passages with deep learning. Front. Mar. Sci. 2022, 8, 2049.

- Yu, X.; Wang, Y.; An, D.; Wei, Y. Identification methodology of special behaviors for fish school based on spatial behavior characteristics. Comput. Electron. Agric. 2021, 185, 106169.

- Li, Z.; Li, G.; Niu, B.; Peng, F. Sea cucumber image dehazing method by fusion of retinex and dark channel. IFAC-Pap. 2018, 51, 796–801.

- Zhang, H.; Yu, F.; Sun, J.; Shen, X.; Li, K. Deep learning for sea cucumber detection using stochastic gradient descent algorithm. Eur. J. Remote Sens. 2020, 53, 53–62.

- Li, J.; Xu, C.; Jiang, L.; Xiao, Y.; Deng, L.; Han, Z. Detection and Analysis of Behavior Trajectory for Sea Cucumbers Based on Deep Learning. IEEE Access 2020, 8, 18832–18840.

- Cao, S.; Zhao, D.; Liu, X.; Sun, Y. Real-time robust detector for underwater live crabs based on deep learning. Comput. Electron. Agric. 2020, 172, 105339.

- Lu, H.; Uemura, T.; Wang, D.; Zhu, J.; Huang, Z.; Kim, H. Deep-Sea Organisms Tracking Using Dehazing and Deep Learning. Mob. Netw. Appl. 2020, 25, 1008–1015.

- Rasmussen, C.; Zhao, J.; Ferraro, D.; Trembanis, A. Deep Census: AUV-Based Scallop Population Monitoring. In Proceedings of the 2017 IEEE International Conference on Computer Vision Workshops (ICCVW), Venice, Italy, 22–29 October 2017; pp. 2865–2873.

- Gonzalez-Rivero, M.; Beijbom, O.; Rodriguez-Ramirez, A.; Bryant, D.E.; Ganase, A.; Gonzalez-Marrero, Y.; Herrera-Reveles, A.; Kennedy, E.V.; Kim, C.J.; Lopez-Marcano, S.; et al. Monitoring of coral reefs using artificial intelligence: A feasible and cost-effective approach. Remote Sens. 2020, 12, 489.

- Oladi, M.; Ghazilou, A.; Rouzbehani, S.; Polgardani, N.Z.; Kor, K.; Ershadifar, H. Photographic application of the Coral Health Chart in turbid environments: The efficiency of image enhancement and restoration methods. J. Exp. Mar. Biol. Ecol. 2022, 547, 151676.

- Sture, O.; Ludvigsen, M.; Aas, L.M.S. Autonomous underwater vehicles as a platform for underwater hyperspectral imaging. In Proceedings of the Oceans 2017, Aberdeen, UK, 19–22 June 2017; pp. 1–8.

- Dumke, I.; Nornes, S.M.; Purser, A.; Marcon, Y.; Ludvigsen, M.; Ellefmo, S.L.; Johnsen, G.; Søreide, F. First hyperspectral imaging survey of the deep seafloor: High-resolution mapping of manganese nodules. Remote Sens. Environ. 2018, 209, 19–30.

- Diegues, A.; Pinto, J.; Ribeiro, P.; Frias, R.; Alegre, D.C. Automatic habitat mapping using convolutional neural networks. In Proceedings of the 2018 IEEE/OES Autonomous Underwater Vehicle Workshop (AUV), Porto, Portugal, 6–9 November 2018; pp. 1–6.

- Wasserman, J.; Claassens, L.; Adams, J.B. Mapping subtidal estuarine habitats with a remotely operated underwater vehicle (ROV). Afr. J. Mar. Sci. 2020, 42, 123–128.

- Fatan, M.; Daliri, M.R.; Shahri, A.M. Underwater cable detection in the images using edge classification based on texture information. Measurement 2016, 91, 309–317.

- Thum, G.W.; Tang, S.H.; Ahmad, S.A.; Alrifaey, M. Toward a Highly Accurate Classification of Underwater Cable Images via Deep Convolutional Neural Network. J. Mar. Sci. Eng. 2020, 8, 924.

- Khan, A.; Ali, S.S.A.; Anwer, A.; Adil, S.H.; Mériaudeau, F. Subsea Pipeline Corrosion Estimation by Restoring and Enhancing Degraded Underwater Images. IEEE Access 2018, 6, 40585–40601.

- Soares, L.; Botelho, S.; Nagel, R.; Drews, P.L. A Visual Inspection Proposal to Identify Corrosion Levels in Marine Vessels Using a Deep Neural Network. In Proceedings of the 2021 Latin American Robotics Symposium (LARS), 2021 Brazilian Symposium on Robotics (SBR), and 2021 Workshop on Robotics in Education (WRE), Online, 11–15 October 2021; pp. 222–227.

- Shi, P.; Fan, X.; Ni, J.; Wang, G. A detection and classification approach for underwater dam cracks. Struct. Health Monit. 2016, 15, 541–554.

- Liu, S.; Zhang, H.; Jia, J.; Li, B. Feature recognition for underwater weld images. In Proceedings of the 29th Chinese Control Conference, Beijing, China, 29–31 July 2010; pp. 2729–2734.

- Duan, Y. Welding Seam Recognition Robots Based on Edge Computing. In Proceedings of the Welding Seam Recognition Robots Based on Edge Computing Conference, Stanford, CA, USA, 1–2 August 2020; pp. 27–30.

| Model | Number of Parameters | Number of Trainable Parameters | Trained Model Size (MB) | Accuracy (%) |
|---|---|---|---|---|
| ResNet50 [17] | 34,386,842 | 34,356,164 | 137.8 | 78.28 |
| MobileNet [18] | 8,280,256 | 8,255,236 | 33.3 | 82.19 |
| MobileNetV2 [16] | 6,743,096 | 6,701,188 | 27.2 | 84.57 |
| DenseNet [19] | 42,726,388 | 42,672,772 | 171.4 | 83.98 |
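The storage figures in the table above track the parameter counts closely. As a rough arithmetic check, the sketch below assumes each parameter is stored as a 32-bit float (4 bytes) and that 1 MB means 10^6 bytes. Neither assumption is stated in the source, and the function names are illustrative only.

```python
# Parameter counts and reported storage sizes copied from the table above.
MODELS = {
    "ResNet50": (34_386_842, 137.8),
    "MobileNet": (8_280_256, 33.3),
    "MobileNetV2": (6_743_096, 27.2),
    "DenseNet": (42_726_388, 171.4),
}

BYTES_PER_PARAM = 4  # assumption: weights are stored as 32-bit floats


def estimated_size_mb(num_params, bytes_per_param=BYTES_PER_PARAM):
    """Estimate raw weight storage in megabytes (assuming 1 MB = 10**6 bytes)."""
    return num_params * bytes_per_param / 1e6


def size_report(models=MODELS):
    """Return (name, estimated MB, reported MB, relative gap) for each model."""
    rows = []
    for name, (params, reported_mb) in models.items():
        est = estimated_size_mb(params)
        rows.append((name, est, reported_mb, abs(est - reported_mb) / reported_mb))
    return rows


if __name__ == "__main__":
    for name, est, reported, gap in size_report():
        print(f"{name:12s} estimated {est:7.1f} MB  reported {reported:7.1f} MB  gap {gap:.1%}")
```

Under these assumptions every estimate lands within about 1% of the reported size, which suggests the small remainder is file-format overhead rather than a different numeric precision.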

| Edge Detector | Accuracy (%) | Accuracy Evaluation |
|---|---|---|
| Laplacian [23] | 68.9 | Normal |
| Sobel X [24] | 79.26 | High |
| Sobel Y [24] | 79 | High |
| Combined Sobel [25] | 88.9 | Very high |
| Canny [3] | 89.13 | Very high |
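To make the distinction between the Sobel X, Sobel Y, and combined Sobel rows concrete, here is a minimal pure-Python sketch of the textbook operator: two 3x3 kernels give the horizontal and vertical gradients, and the combined response is their magnitude. It is not the implementation used in the cited works, which may differ in kernels, normalization, and thresholding. The helper names are illustrative.

```python
import math

# Standard 3x3 Sobel kernels for horizontal (x) and vertical (y) gradients.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def correlate3x3(image, kernel):
    """Apply a 3x3 kernel to the interior pixels of a 2-D list (no padding)."""
    h, w = len(image), len(image[0])
    out = [[0.0] * (w - 2) for _ in range(h - 2)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            out[r - 1][c - 1] = sum(
                kernel[i][j] * image[r - 1 + i][c - 1 + j]
                for i in range(3)
                for j in range(3)
            )
    return out


def combined_sobel(image):
    """Gradient magnitude sqrt(gx**2 + gy**2) at each interior pixel."""
    gx = correlate3x3(image, SOBEL_X)
    gy = correlate3x3(image, SOBEL_Y)
    return [
        [math.hypot(a, b) for a, b in zip(row_x, row_y)]
        for row_x, row_y in zip(gx, gy)
    ]


if __name__ == "__main__":
    # A 5x5 image with a vertical step edge between columns 1 and 2.
    step = [[0, 0, 10, 10, 10] for _ in range(5)]
    for row in combined_sobel(step):
        print(row)
```

A vertical step edge excites only the x kernel and a horizontal one only the y kernel, so the combined magnitude responds to edges of either orientation.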

| Metric | Existing Approach (Transmission Map Estimation) [35] | Proposed Approach (UD-ETR-Based Restoration) |
|---|---|---|
| Contrast luminance | 39 | 89 |
| UCIQE | 12 | 26 |
| Saturation | 0.1 | 0.5 |
| Chroma | 2.5 | 5.5 |
| PSNR | 5 | 14 |
| RMSE | 140 | 50 |
| MSE | 1.7 | 0.3 |
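For readers unfamiliar with the error metrics in the table above, the sketch below gives the standard definitions of MSE, RMSE, and PSNR for a pair of equally sized grayscale images. It assumes an 8-bit peak value of 255. The table does not say how its values were scaled, so this is not meant to reproduce those numbers, and the function names are illustrative.

```python
import math


def mse(reference, restored):
    """Mean squared error between two equally sized 2-D lists of pixel values."""
    total = 0.0
    count = 0
    for ref_row, res_row in zip(reference, restored):
        for a, b in zip(ref_row, res_row):
            total += (a - b) ** 2
            count += 1
    return total / count


def rmse(reference, restored):
    """Root mean squared error: the square root of the MSE."""
    return math.sqrt(mse(reference, restored))


def psnr(reference, restored, max_value=255.0):
    """Peak signal-to-noise ratio in dB; infinite when the images are identical."""
    error = mse(reference, restored)
    if error == 0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / error)


if __name__ == "__main__":
    ref = [[0, 0], [0, 0]]
    out = [[0, 0], [0, 10]]
    print(mse(ref, out), rmse(ref, out), psnr(ref, out))
```

Lower MSE and RMSE and higher PSNR all indicate a restored image closer to the reference, which matches the direction of improvement shown for the proposed approach.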

| Dataset | Main Content | Analysis |
|---|---|---|
| Open Images Dataset [55] https://github.com/openimages/dataset (accessed on 30 January 2022) | Comprehensive dataset | Open source, diverse, and broad in coverage; suitable for multi-class classifiers. |
| MUED Dataset [56] https://zenodo.org/record/2542305#.Ynd05YxBxEZ (accessed on 30 January 2022) | Aquatic organism image set | Complex backgrounds with a large number of targets; suitable for advanced training and verification of deep learning networks. |
| Galdran A et al. [61] https://github.com/agaldran/UnderWater (accessed on 3 February 2022) | Underwater biological image set | Includes some species of underwater organisms. |
| SQUID Dataset [59] https://paperswithcode.com/dataset/squid (accessed on 3 February 2022) | Underwater stereo quantitative image dataset | Covers different water properties; suitable for image enhancement and restoration. |
| Brackish dataset [57] https://www.kaggle.com/aalborguniversity/brackish-dataset (accessed on 2 February 2022) | Underwater organism video (including many small aquatic creatures) | Includes small aquatic organisms; suitable for verifying high-precision recognition. |
| HICRD Dataset [60] https://paperswithcode.com/dataset/hicrd (accessed on 3 February 2022) | Underwater image dataset | Large dataset for underwater image restoration. |
| UIEB Dataset [58] https://li-chongyi.github.io/proj_benchmark.html (accessed on 2 February 2022) | Underwater enhanced image dataset | Contains both reference and non-reference images, which helps in verifying results. |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yuan, X.; Guo, L.; Luo, C.; Zhou, X.; Yu, C. A Survey of Target Detection and Recognition Methods in Underwater Turbid Areas. Appl. Sci. 2022, 12, 4898. https://doi.org/10.3390/app12104898
