1. Introduction

Human Motion Capture (HMC) is a highly researched field that covers the detection of all kinds of human motion, including movements of the whole body or of smaller parts such as the face or hands [1]. In their publications from 2001 and 2006, Moeslund et al. found more than 450 publications on vision-based HMC and analysis [1,2], not counting HMC based on other technologies such as inertial or magnetic sensors.

Traditional HMC systems are bound to an off-field setting [3,4] and are expensive to install and operate [5,6], limiting their application to professional use cases. In their 2018 review of motion capture systems, van der Kruk and Reijne identified five types of motion capture systems: Optoelectronic Measurement Systems (OMS), Inertial Sensor Measurement Systems, Electromagnetic Measurement Systems (EMS), Ultrasonic Localization Systems (ULS), and Image Processing Systems (IPS) [7]. They introduce OMS as the gold standard for motion capture [7]. Indeed, many studies [8,9,10,11,12,13] used OMS such as the Vicon motion capture system (Vicon, Oxford, UK) [14] or the Qualisys motion capture system (Qualisys AB, Göteborg, Sweden) [15] as the reference measurement system. OMS require multiple cameras or sensors around a subject and reflective markers on the subject’s anatomical landmarks, which are then captured by the cameras or sensors. Inertial Sensor Measurement Systems rely on Inertial Measurement Units (IMUs), which are placed on the subject’s body to capture motion; the captured data are mapped onto a rigid-body model. Examples of IMU-based systems are the Xsens systems (Xsens Technologies B.V., Enschede, The Netherlands) [16] and Perception Neuron (Noitom Ltd., Miami, FL, USA) [17]. EMS and ULS track the position of the subject through the travel time of electromagnetic or ultrasonic waves between a tagged person and a base station [7,18]. In contrast to the other systems, they allow tracking the positions of one or more subjects but do not capture joint kinematics [7]. While the described systems are well validated for HMC, their complex setup and costs prevent their use in mHealth applications.

With advances in technology and machine learning, IPS have become more relevant for human motion capture. IPS rely on video input and different machine learning approaches to detect specific body landmarks and capture human motion. Among the most researched systems is the Kinect (Microsoft Corp., Redmond, WA, USA), which uses a combination of an RGB camera and infrared sensors and can capture motion in three-dimensional space [10,11]. However, the Kinect still requires a specialized setup for motion capture. The range of IPS has been extended by recent technological advances such as enhanced sensors and processing units, which enable computer-vision-based motion capture on smartphones and tablets. These IPS offer new possibilities for HMC in mobile scenarios such as mHealth applications. Examples of IPS software that can run on mobile devices are OpenPose (CMU, Pittsburgh, PA, USA) [19], ARKit (Apple Inc., Cupertino, CA, USA) [20], Vision (Apple Inc., Cupertino, CA, USA) [21], and TensorFlow Pose Estimate (Google, Mountain View, CA, USA) [22]. All of these IPS can be integrated into custom applications by developers. The human body and its position are detected by computer-vision algorithms, which can use Convolutional Neural Networks (CNNs) or Part Affinity Fields (PAFs) [23]. In most systems, a predefined humanoid model is then applied to estimate the shape and kinematic structure of the tracked person [2]. The algorithms deliver the joint coordinates in two or three dimensions for every video frame.

Moeslund et al. identified three main use cases for HMC: (1) surveillance of crowds and their behavior, (2) controlling software through specific movements or gestures, or controlling virtual characters in the entertainment industry such as in movies, and (3) analysis of motion for diagnostics, for example in orthopedic patients, or for performance improvement in athletes [2]. While use case (1) focuses on tracking multiple subjects, (2) and (3) focus on capturing the body motion of a single subject and thus require tracking several parts of the human body. Use case (3) in particular offers many applications of HMC, which are often limited to professional settings such as gait analysis [24] or sports applications [7] due to the lack of a reliable, accessible, and low-priced solution for on-field settings.

In the sports and health sector, the usage of mobile applications has increased significantly in recent years [25,26]. Research has shown that such apps can positively impact their users’ health and lifestyle [27]. However, most fitness and health apps allow only limited tracking and analysis of motion [28]. While smartphone-based motion capture promises lightweight and consumer-friendly motion capture and analysis, the software systems have only been evaluated to a limited extent. Moreover, research has focused on 2D systems. Several studies have shown that in 2D motion analysis, the reliability and validity of kinematic measurements depend on the performed task, the type of reliability measured, the video quality, and the position of the recording device [8,13,29,30]. The camera position in particular influences the accuracy of tracked joint angles: even a slightly different viewing angle distorts the measured joint angle, which is why triangulation with multiple devices is often used to overcome the limitations of monocular camera setups [31]. Among mobile 2D motion capture systems, the OpenPose software is widely used and has been evaluated in several studies [19,23,30,32,33,34,35,36,37]. The results show that OpenPose delivers accurate biomechanical measurements, especially when tracking joint trajectories. However, the compared joint angles differed significantly from those of the gold standard systems. D’Antonio et al. measured differences of up to 9.9 degrees in the minima and maxima of the tracked joint angles during gait analysis [35], and Nakano et al. observed deviations of more than 40 mm [37]. The measurements can be improved by using multiple devices to calculate the body position in 3D, as in the study by Zago et al. [30].

Mobile 2D motion capture systems have recently been complemented by 3D motion capture algorithms, which estimate 3D joint positions from 2D monocular video data [20,38,39,40,41,42]. They detect and calculate the body’s joint coordinates in all three movement planes, making motion capture more robust to the camera’s viewing angle. Mobile 3D motion capture frameworks could therefore overcome the limitations of 2D motion capture systems. Some of these frameworks use additional smartphone sensors, such as integrated accelerometers to determine the smartphone’s position, or depth sensors such as the integrated Light Detection and Ranging (LiDAR) sensor, to further enhance the detection of the human body’s position [20,38,39]. The LiDAR data can be used to create a dense depth map from an RGB image through depth completion [43]. Among the best-known mobile 3D motion capture systems is Apple ARKit; Apple released a body-tracking feature as part of its Software Development Kit (SDK) for developers in 2019 [20]. In contrast to other 3D motion capture frameworks, ARKit is free, easy to use, and widely accessible. On the latest devices, it uses the device’s IMUs and integrated LiDAR sensor to improve the measurements, promising enhanced mobile motion capture. However, only a few scientific studies have evaluated the accuracy of mobile 3D motion capture frameworks, and of ARKit in particular. These studies mostly focused on evaluating ARKit’s tracking of the lower extremities [44,45].
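The statement that a slightly different viewing angle distorts a 2D joint angle can be made concrete with a small worked example. The sketch below is illustrative only: it assumes an idealized orthographic camera that rotates about the vertical axis, and the hip, knee, and ankle coordinates are hypothetical values in an arbitrary unit, not data from the cited studies. The helper names `angle_3d` and `project_2d` are our own.

```python
import math

def angle_3d(a, b, c):
    """Angle in degrees at vertex b between the segments b->a and b->c."""
    u = [a[i] - b[i] for i in range(3)]
    v = [c[i] - b[i] for i in range(3)]
    dot = sum(x * y for x, y in zip(u, v))
    cos = dot / (math.hypot(*u) * math.hypot(*v))
    return math.degrees(math.acos(max(-1.0, min(1.0, cos))))

def project_2d(p, yaw_deg):
    """Idealized orthographic camera (assumption): rotate p about the vertical
    y-axis by yaw_deg, then discard the depth coordinate."""
    t = math.radians(yaw_deg)
    x, y, z = p
    return (x * math.cos(t) + z * math.sin(t), y, 0.0)

# Hypothetical flexed knee in the sagittal (y-z) plane: x lateral, y up, z forward.
hip, knee, ankle = (0.0, 1.0, 0.0), (0.0, 0.5, 0.3), (0.0, 0.0, 0.0)

true_angle = angle_3d(hip, knee, ankle)
for yaw in (90, 75, 60):  # 90 = pure side view of the sagittal plane
    seen = angle_3d(*(project_2d(p, yaw) for p in (hip, knee, ankle)))
    print(f"yaw {yaw:>2} deg: 2D angle {seen:6.1f} deg (3D angle {true_angle:.1f} deg)")
```

In this toy setup, the projected angle matches the 3D angle (about 118°) only for the pure side view and grows to about 125° when the camera is rotated 30° away, even though the joint configuration is unchanged.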

Because it computes joint positions in 3D, ARKit is a promising IPS software with the potential to enable new use cases for mobile HMC that were previously limited to traditional HMC systems. This research evaluates ARKit’s performance against the Vicon system in a laboratory experiment with eight exercises targeting the whole body. We investigate the following two research questions:

2. Materials and Methods

2.1. Study Overview

To evaluate Apple ARKit’s body tracking accuracy, we performed a laboratory experiment in which we compared the joint angles detected by ARKit with the joint angles detected by the Vicon system for marker-based optical motion tracking. In the experiment, ten subjects were instructed to perform eight different body-weight exercises with ten repetitions each, resulting in 80 recorded exercises.

During the exercises, the complete body of each subject was recorded simultaneously by the Vicon system and by two iPads running ARKit from two different perspectives. The study focused on comparing the motion capture data of each iPad against the Vicon data to answer the research questions. In our data analysis, we calculated the weighted Mean Absolute Error (wMAE) and the Spearman Rank Correlation Coefficient (SRCC) between the two systems. In addition, we performed a factor analysis using ANOVA, t-tests, and logistic regression to quantify the impact of specific factors on ARKit’s accuracy.

2.2. Participants

We included ten subjects (n = 10) in the study, six males and four females. Their ages ranged from 22 to 31 years, with an average of 25.7 years. Their height ranged from 156 cm to 198 cm (average: 176 cm), and their weight from 53 kg to 90 kg (average: 69.5 kg). All subjects had a normal body mass index between 20.4 and 25.5 (average: 22.7) and light skin color. All subjects were in good physical condition and had no orthopedic or neurological impairments.

2.3. Ethical Approval and Consent to Participate

The study was conducted according to the guidelines of the Declaration of Helsinki. The ethics proposal was submitted to and approved by the Ethics Committee of the Technical University of Munich on 19 August 2021 (Proposal 515/21 S). All participants were informed about the study procedure in advance, and informed written consent was obtained from all subjects involved in the study. Due to the non-interventional character of this study, the risks for the study participants were low. We further minimized the risk by having a sports scientist supervise the physiologically correct execution of all exercises, preventing the participants from performing potentially harmful movements.

2.4. Exercise Selection

Eight exercises were selected: Squat, Front Lunge, Side Squat, Single Leg Deadlift, Lateral Arm Raise, Reverse Fly, Jumping Jacks, and Leg Extension Crunch. The main objective of the exercise selection was to create a full-body workout to track all selected joints from different angles.

All exercises were tested for their suitability for tracking in both systems to ensure stable tracking of the angles. Both ARKit and the Vicon system had problems correctly detecting exercises in which larger parts of the body were hidden from the cameras, for example, push-ups; such exercises were therefore excluded. The testing was done in two steps: (1) We manually inspected the screen recording to see whether the ARKit app’s model recognized the subject. (2) We checked the screen recording to see whether the ARKit model overlaid the subject’s body parts during all phases of the exercise, and whether the Vicon system could track all markers in the majority of recorded frames so that the full joint trajectory could be calculated.

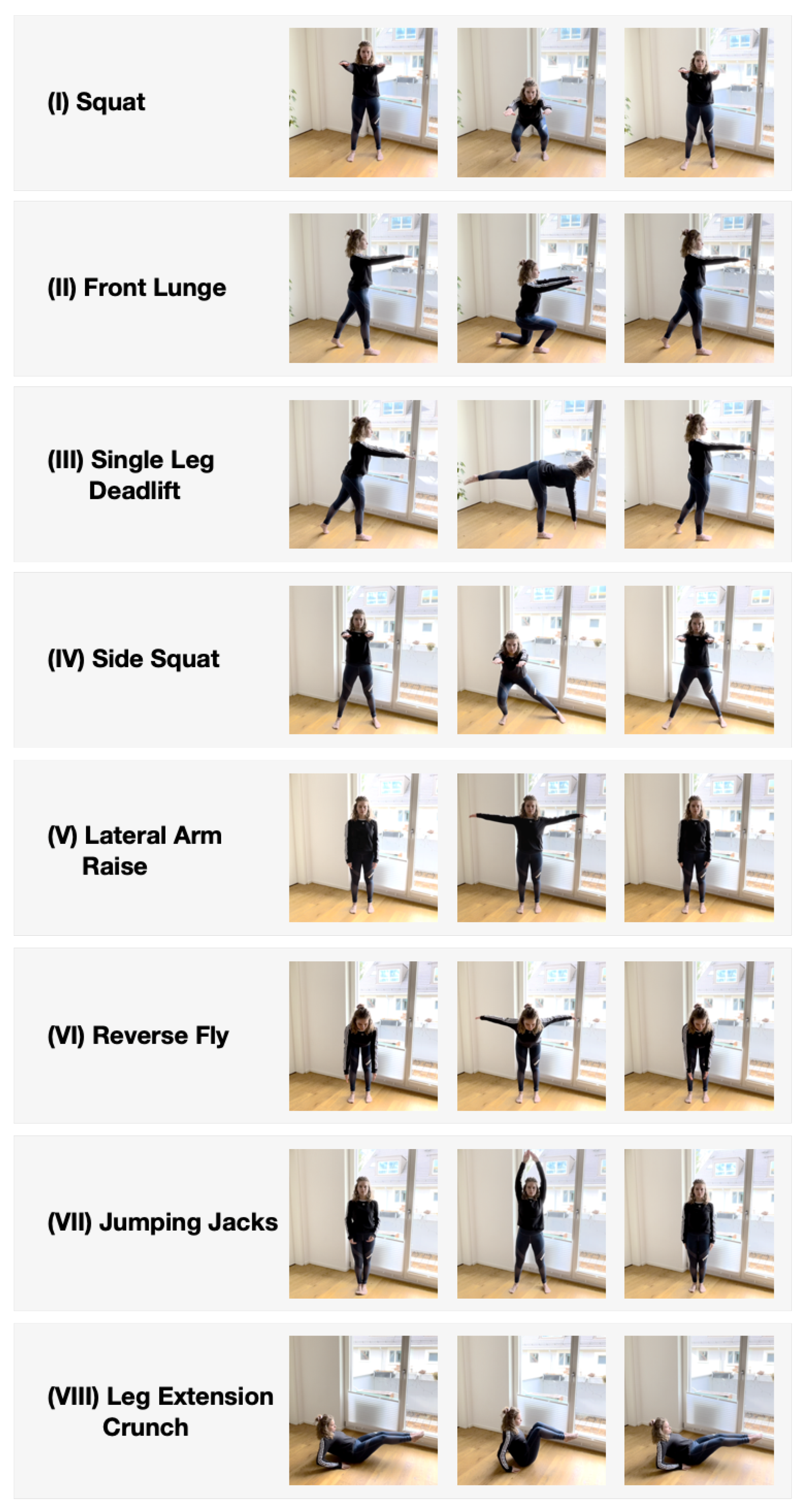

We selected an exercise for the study only if both requirements were fulfilled. The final selection included eight exercises. Their execution (E, see Figure 1), targeted muscle groups (TMG), and tracked joint angles (TJA) are explained in the following.

(I) Squat: (E:) The subject starts this exercise in an upright standing position. The subject squats down from the starting position by flexing the ankle, knee, and hip without movement compensations such as flexing the trunk and raising the heel. Each subject was asked to hold their arms stretched in front of the body. (TMG:) This exercise targets the lower body, especially the gluteus, quadriceps, hamstrings, and calves. (TJA:) The tracked joint angles include the left and right hip, and left and right knee.

(II) Front Lunge: (E:) The starting position of the exercise is an upright stance with the legs spread front and back. The arms are positioned as in the Squat. From the starting position, the subject goes down by flexing the ankle, knee, and hip of the front leg, and by flexing the knee and hip and raising the heel of the back leg. (TMG:) This exercise targets lower body muscles, especially the gluteus, quadriceps, hamstrings, and calves. (TJA:) The tracked joint angles include the left and right hip, and left and right knee.

(III) Side Squat: (E:) The starting position of the exercise is an upright stance with the legs spread laterally. The arms are positioned as in the Squat. From the starting position, the subject squats down to either side by bending the leg on that side while keeping the other leg straight. (TMG:) This exercise targets muscle groups similar to those of the Squat, with a focus on the adductor muscles. (TJA:) The tracked joint angles include the left and right hip, and left and right knee.

(IV) Single Leg Deadlift: (E:) The starting position of the exercise is standing upright on one leg. The arms’ initial position is the same as in the Squat. From the starting position, the subject leans forward by flexing the hip with minimal knee flexion. As the subject leans forward, the arms hang down freely, and the raised leg is extended backward to maintain balance. (TMG:) The exercise targets lower body muscles, especially the hamstring and gluteal muscles. (TJA:) The tracked joint angles include the left and right hip, and left and right knee.

(V) Lateral Arm Raise: (E:) The subject starts the exercise in an upright standing position. Then, the subject laterally abducts the arms. (TMG:) The exercise targets upper body muscles, especially the deltoid muscles. (TJA:) The tracked joint angles include the left and right shoulder, and left and right elbow.

(VI) Reverse Fly: (E:) In the starting position, the subject leans forward with slight knee flexion and lets the arms hang down. From this position, the subject horizontally abducts the arms without raising the upper body. (TMG:) The exercise targets upper body muscles such as the rhomboid, posterior deltoid, posterior rotator cuff, and trapezius muscles. (TJA:) The tracked joint angles include the left and right shoulder, and left and right elbow.

(VII) Jumping Jack: (E:) This exercise starts from an upright standing position. Then, the subject abducts both legs and both arms simultaneously with a hop. (TMG:) This exercise targets lower body and upper body muscles, especially the gluteal and deltoid muscles. (TJA:) The tracked joint angles include the left and right shoulder, left and right elbow, left and right hip, and left and right knee.

(VIII) Leg Extension Crunch: (E:) The subject starts this exercise sitting on the ground with the upper body leaning backward and the hands placed on the ground to support it. Then, the subject lifts the legs into the air with the knees and hips flexed. From this position, the subject extends both knees and hips simultaneously, moving the legs horizontally. (TMG:) This exercise targets core muscles, especially the abdominal muscles. (TJA:) The tracked joint angles include the left and right hip, and left and right knee.

2.5. Data Collection

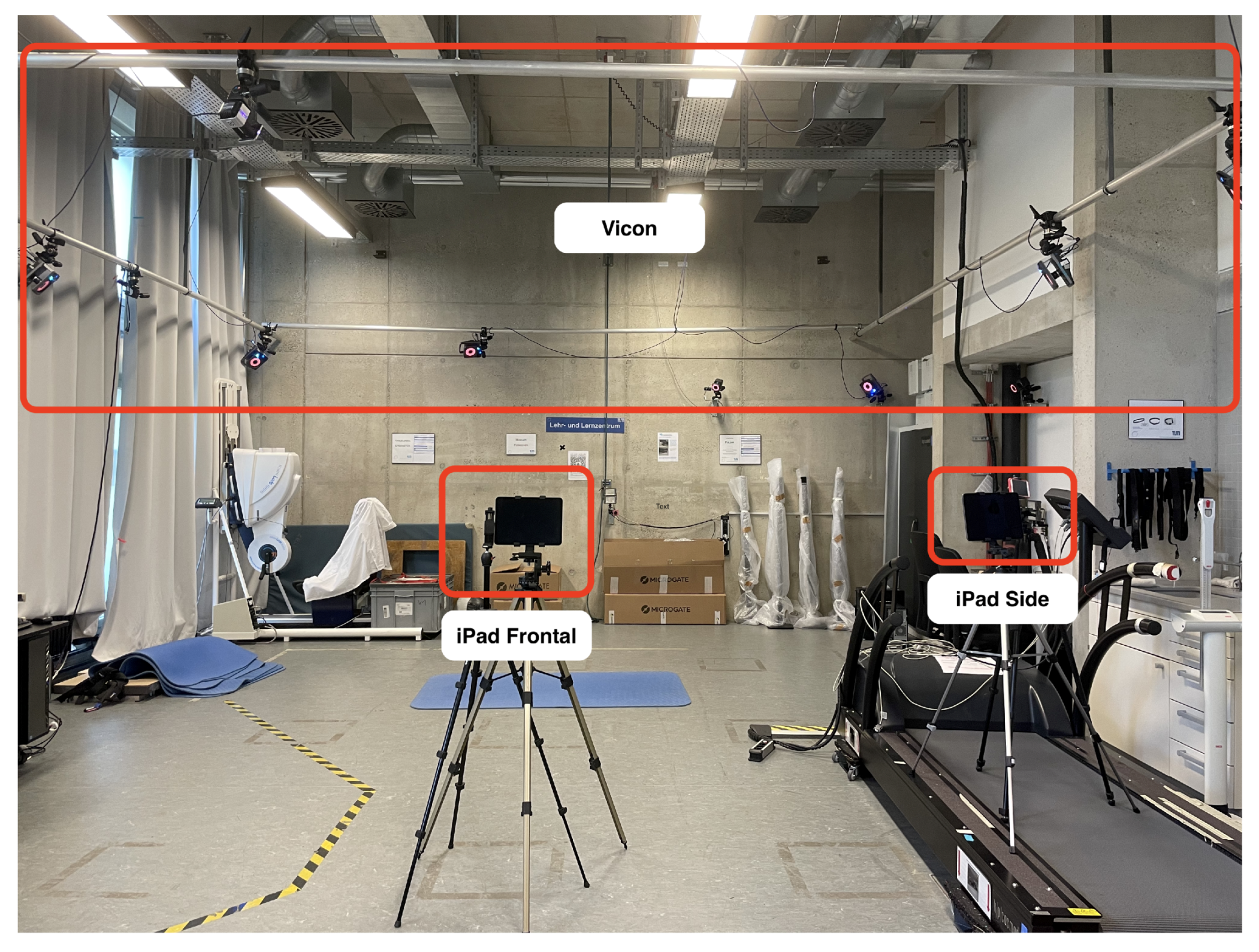

We prepared the laboratory before the subjects arrived to ensure similar conditions for all recordings. Four tripods were positioned, each approximately three meters from the subjects’ position, to enable tracking of the entire body. Two tripods held an iPad Pro 11″ (2021 model; Apple Inc., Cupertino, CA, USA), which ran the ARKit motion capture. The two other tripods held regular cameras to record videos of the experiment. One iPad and one camera faced the subject’s position frontally; the other iPad and camera were placed at an angle of approximately 30° to the subject, as shown in Figure 2. The Vicon system (Nexus 2.8.2, Version 2.0; Vicon Motion Systems Ltd., Oxford, UK) was installed on the lab ceiling and configured to track the subjects’ whole body.

We developed a protocol to guarantee a similar experiment execution for all participants. The experiment consisted of three phases: (1) the onboarding, (2) the explanation of the exercises, and (3) performing the exercises. During phase (1), the participants entered the lab. We explained the setup, and the participants signed the consent forms. In phase (2), a sports scientist explained each of the eight exercises and showed the participants how they are performed. The participants were asked to perform the exercises once under the supervision of the sports scientist to guarantee correct execution. The actual experiment was performed in phase (3). The participants performed ten repetitions of each exercise.

2.5.1. Vicon Setup

The Vicon setup consisted of 14 infrared cameras: eight MX-T10-S cameras, four Vero v2.2 cameras, and two Bonita 10 cameras. All cameras were set to a sampling frequency of 250 Hz. We used the Nexus software (version 2.8.2) with the Full-Body Plug-in Gait marker placement model provided by Vicon Motion Systems, Ltd. [46] to capture the motion. A Vicon calibration wand was used to calibrate all Vicon cameras and to determine the coordinate system. Static calibration was done by capturing a subject performing a T-pose.

2.5.2. ARKit Setup

The ARKit setup included two iPad Pro 11″ (2021) devices with an M1 processor and an additional LiDAR sensor for depth information. Both iPads ran custom-developed software based on the ARKit 5 framework provided by Apple Inc., which was used to extract the motion capture information from the iPads’ sensors. Both iPads recorded the motion capture data independently and were not synchronized. The motion capture data included the timestamp of the detection, the performed exercise, and the three-dimensional positional information of 14 body joints, which were later used to calculate the joint angles. All joint coordinates are given relative to the pelvis center, which serves as the origin of ARKit’s coordinate system. ARKit differentiates between larger joints, which are actively tracked, and calculated joints, which are smaller joints such as the toes and fingers. Because these smaller joints are calculated rather than actively recognized, and previous tests showed that their calculated positions and related angles rarely change, we limited the comparison to the actively tracked joints: shoulders, elbows, hips, and knees. The ARKit data were recorded with a default sampling frequency of 60 Hz. However, the sampling frequency of ARKit is variable, as ARKit internally updates the joint positions only when a change is detected. This means that fewer data points are received from ARKit when a subject is standing still and more when the subject is moving fast.

2.5.3. Data Export

After each subject’s recording, the collected motion data were exported from the three systems: the frontally positioned iPad (iPad Frontal), the iPad set at a 30° side angle (iPad Side) (Figure 2), and the Vicon system. The motion data were stored in CSV files and included the joint center coordinates for each detected frame for the three systems separately. The ARKit data were exported in one file per iPad, resulting in two CSV files per subject. For the Vicon system, each exercise was stored in a separate CSV file. In addition, an XCP file was exported from the Vicon system, which contained meta-information about the cameras, including the start and end timestamps of each recording.

Due to export problems, the upper body joint coordinates of the iPad Side were included for only three of the ten subjects. The Vicon system could not track every joint coordinate throughout the whole exercise because of hidden markers, leading to gaps in the exported data. Smaller gaps were compensated during the data analysis, whereas larger gaps led to the exclusion of the respective angle.
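The source does not specify how the smaller gaps were compensated. One plausible approach, sketched below purely as an assumption, is linear interpolation of short runs of missing samples, where any run longer than a threshold (the hypothetical parameter `max_gap`) causes the angle to be excluded. Gaps at the very start or end of a recording cannot be interpolated and are treated as too long here, which is a further assumption.

```python
import math

def _is_missing(v):
    """A sample counts as missing if it is None or NaN."""
    return v is None or (isinstance(v, float) and math.isnan(v))

def fill_small_gaps(values, max_gap):
    """Linearly interpolate internal runs of missing samples of length <= max_gap.

    Returns the filled list, or None if any run is longer than max_gap or touches
    the start/end of the series (signalling that the angle should be excluded).
    """
    out = list(values)
    n, i = len(out), 0
    while i < n:
        if not _is_missing(out[i]):
            i += 1
            continue
        start = i
        while i < n and _is_missing(out[i]):
            i += 1
        end = i  # index of the first valid sample after the run (or n)
        run = end - start
        if start == 0 or end == n or run > max_gap:
            return None
        left, right = out[start - 1], out[end]
        for k in range(start, end):
            frac = (k - start + 1) / (run + 1)
            out[k] = left + frac * (right - left)
    return out
```

With Vicon’s 250 Hz sampling, for example, `max_gap=25` would bridge gaps of up to 100 ms; this threshold is illustrative and not taken from the study.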

2.6. Preprocessing & Data Analysis

The basis for the data analysis is 220 files, 22 per subject. For each subject, these comprise two comma-separated value (CSV) files from the two ARKit systems (frontal and side view) and ten CSV files from the Vicon system, which stores each exercise in a separate file. The remaining ten files are in the XCP format and contain the relevant metadata of the Vicon system, such as the camera positions and the start and end times of the data acquisition. The following preprocessing steps are performed for each subject to merge all files into a single data frame for further analysis.

The Vicon and ARKit data are restructured into a matrix-like form in which the rows represent time and the columns represent joints. The data are then augmented with information such as the timestamp, subject, exercise, and, in the case of ARKit, whether the values were recorded from the frontal or the side view.

Sections 2.5.1 and 2.5.2 describe the different sampling rates of the two systems and the non-equidistant sampling of ARKit (57 Hz on average). This motivates merging the systems’ data based on their timestamps. Vicon samples the data at 250 Hz, that is, every 4 ms, so any given ARKit timestamp lies at most 2 ms from the nearest Vicon sample. Given this small maximum deviation, the nearest timestamp is used as the criterion for merging the Vicon data onto the ARKit data.
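The nearest-timestamp merge described above can be sketched with the standard library’s `bisect` module. The function name and the plain-list data layout are assumptions for illustration; with pandas, `pandas.merge_asof(..., direction="nearest")` offers comparable behavior on sorted keys.

```python
import bisect

def merge_nearest(arkit_times, vicon_times, vicon_values):
    """Match each ARKit timestamp to the Vicon sample with the nearest timestamp.

    vicon_times must be sorted in ascending order (seconds). Returns the matched
    Vicon values and the largest absolute time difference encountered.
    """
    matched, max_dev = [], 0.0
    for t in arkit_times:
        i = bisect.bisect_left(vicon_times, t)
        # The nearest sample is either the last one before t or the first one at/after t.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(vicon_times)]
        j = min(candidates, key=lambda k: abs(vicon_times[k] - t))
        matched.append(vicon_values[j])
        max_dev = max(max_dev, abs(vicon_times[j] - t))
    return matched, max_dev

# Illustrative data: Vicon at 250 Hz (4 ms period) and irregular ARKit timestamps.
vicon_times = [k * 0.004 for k in range(1000)]
arkit_times = [0.0, 0.0171, 0.035, 0.0519]
values, deviation = merge_nearest(arkit_times, vicon_times, vicon_times)
```

As long as the ARKit timestamps fall within the Vicon recording, the returned maximum deviation stays within half a Vicon period, i.e., 2 ms.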

The Vicon system records absolute coordinates, while the ARKit system provides normalized coordinates relative to the center of the hip. It still allows for comparing angles since they are invariant under scaling, rotating, translating, and reflecting the coordinate system. Accordingly, the adjacent three-dimensional joint coordinates extraction calculates the angles of interest (AOI). An angle

is determined by three joints

or associated vectors

and

given the formula

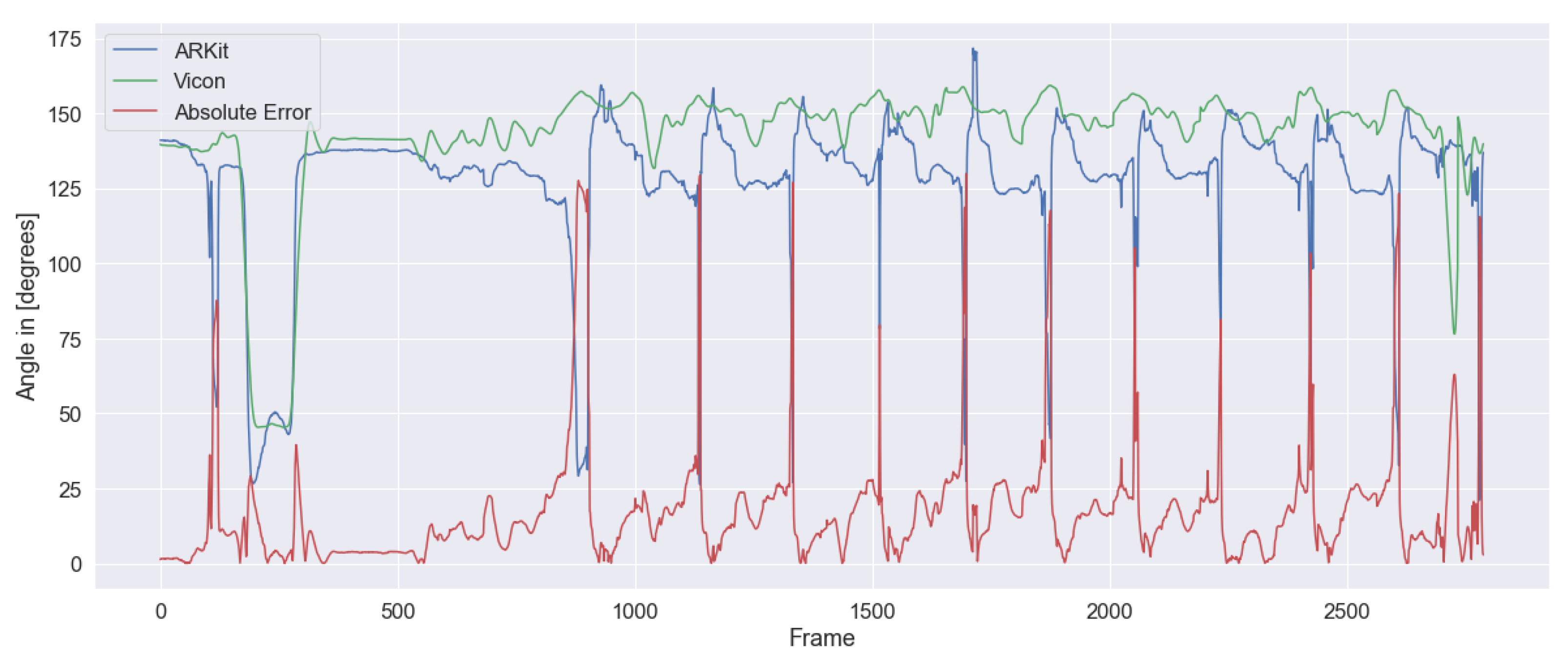
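The angle formula translates directly into code. The sketch below is a minimal, hypothetical helper (`joint_angle` is our name, not from the study’s software); which three joints define each AOI, for example hip, knee, and ankle for the knee angle, is an assumption for illustration. The clamp guards against floating-point values marginally outside the domain of `acos`.

```python
import math

def joint_angle(j1, j2, j3):
    """Angle in degrees at joint j2, using u = j1 - j2, v = j3 - j2 and
    alpha = arccos(u . v / (|u| |v|))."""
    u = [a - b for a, b in zip(j1, j2)]
    v = [c - b for c, b in zip(j3, j2)]
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.hypot(*u) * math.hypot(*v)
    # Clamp to [-1, 1] so rounding errors cannot push acos outside its domain.
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norm))))
```

Because the angle depends only on difference vectors and their norms, translating, rotating, or uniformly scaling all joint coordinates leaves it unchanged, which is what allows Vicon’s absolute and ARKit’s pelvis-relative coordinates to be compared.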

The data reveal a time lag, which leads to a misalignment between the Vicon and ARKit angles along the time axis. Accordingly, the related time series are shifted against each other to maximize their Pearson correlation coefficient. The shift is limited to a maximum of 60 frames in each direction. This assumes that the time series of the two systems are best aligned when their linear relationship is strongest.

Figure 3 shows two examples of misaligned time series on the left and the result of the shift on the right. The time series alignment is performed by brute force and individually for each combination of view, subject, exercise, and AOI. The procedure yields 1048 ARKit-Vicon time series pairs: 634 for the comparison of Vicon and iPad Frontal, and 414 for the comparison of Vicon and iPad Side. This number is lower than the 2 × 10 × 8 × 8 = 1280 possible pairs (two views, ten subjects, eight exercises, eight angles) because of the missing ARKit recordings of the upper body joints in the side view.
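The brute-force alignment can be sketched as follows. This is a minimal illustration under stated assumptions: both angle series are plain lists on the same merged time base, a positive shift `s` pairs ARKit frame `i` with Vicon frame `i + s`, and at least three overlapping frames are required for a shift to be considered. The names `pearson` and `best_shift` are hypothetical.

```python
import math

def pearson(x, y):
    """Pearson's r; NaN if either series is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return float("nan")
    return sxy / math.sqrt(sxx * syy)

def best_shift(arkit, vicon, max_shift=60, min_overlap=3):
    """Try every integer shift s in [-max_shift, max_shift], pairing arkit[i] with
    vicon[i + s] over the overlapping frames, and keep the s with the highest r."""
    best_s, best_r = 0, -math.inf
    for s in range(-max_shift, max_shift + 1):
        lo = max(0, -s)                       # first valid ARKit index
        hi = min(len(arkit), len(vicon) - s)  # one past the last valid ARKit index
        if hi - lo < min_overlap:
            continue
        r = pearson(arkit[lo:hi], vicon[lo + s:hi + s])
        if r > best_r:  # comparisons with NaN are False, so constant windows are skipped
            best_s, best_r = s, r
    return best_s, best_r
```

Because every shift is evaluated independently, the search costs on the order of `2 * max_shift + 1` correlation computations per time series pair, which is negligible at these series lengths.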

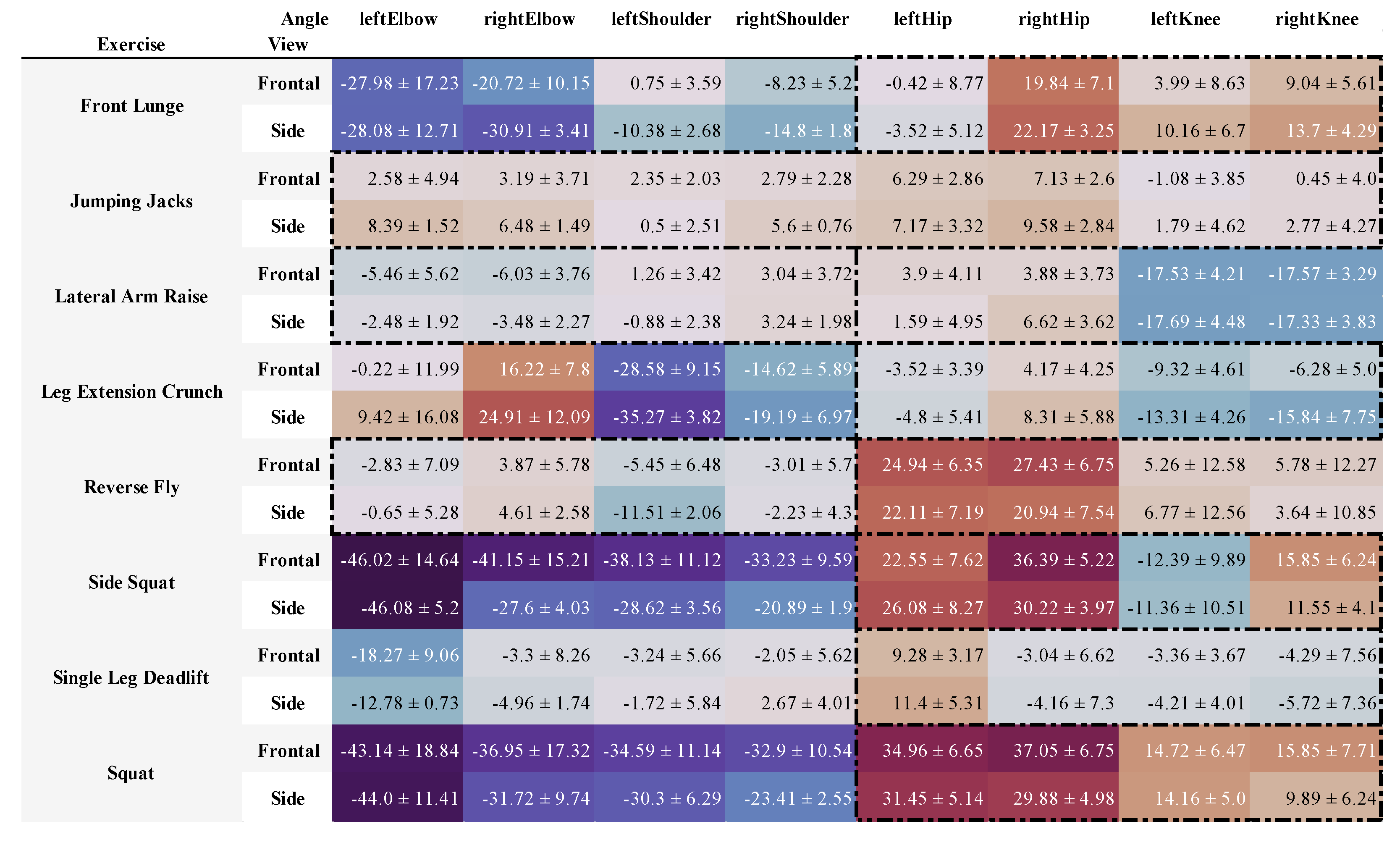

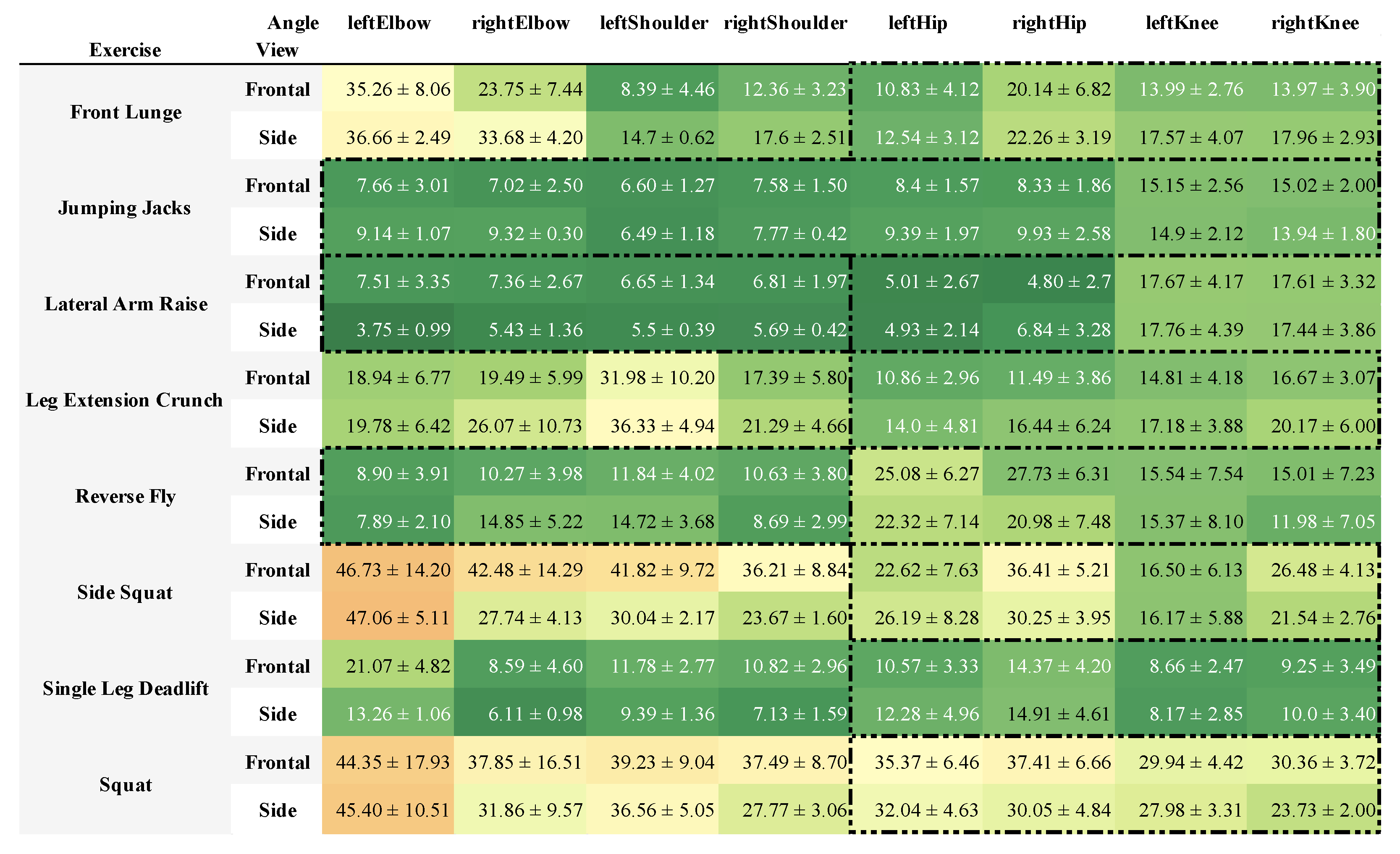

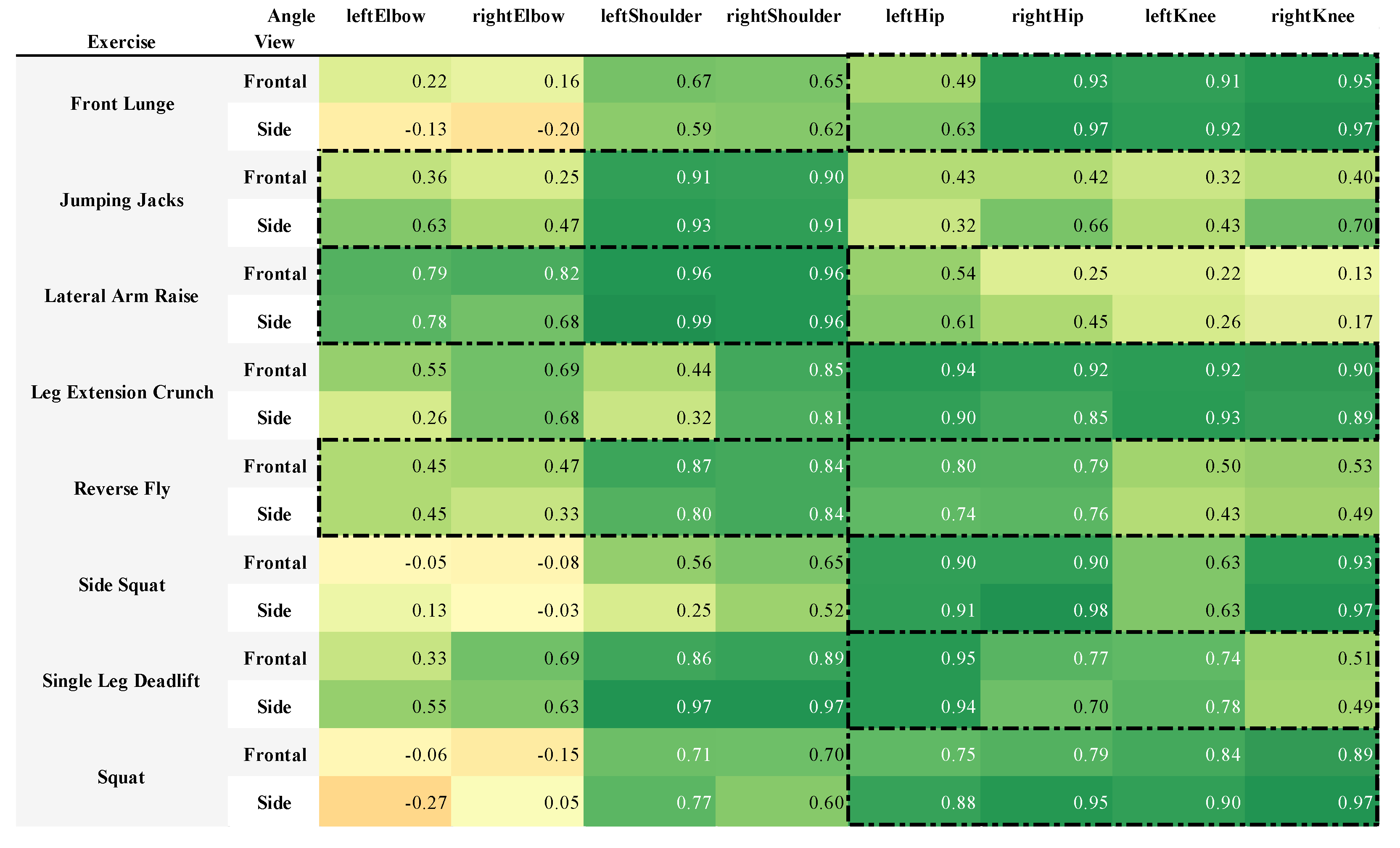

For each pair of time series, the angle similarity of the two systems is assessed with two metrics: the mean absolute error (MAE) and the non-parametric Spearman’s rank correlation coefficient (SRCC). The MAE and SRCC values of the 1048 time series pairs are then aggregated according to predefined grouping criteria, such as exercise, angle, or view. For the MAE, the grouping operation is defined as the mean and standard deviation (std), weighted by sample size (Table 1). SRCC values first require a transformation to an approximately normally distributed random variable using the Fisher z-transformation

$$z = \frac{1}{2}\ln\left(\frac{1 + r}{1 - r}\right) = \operatorname{artanh}(r), \tag{1}$$

where $r$ is the SRCC. This transformation is the prerequisite for averaging the values. The resulting average is again an approximately normally distributed variable, which is transformed back into the correlation space using the inverse of (1), $r = \tanh(z)$.

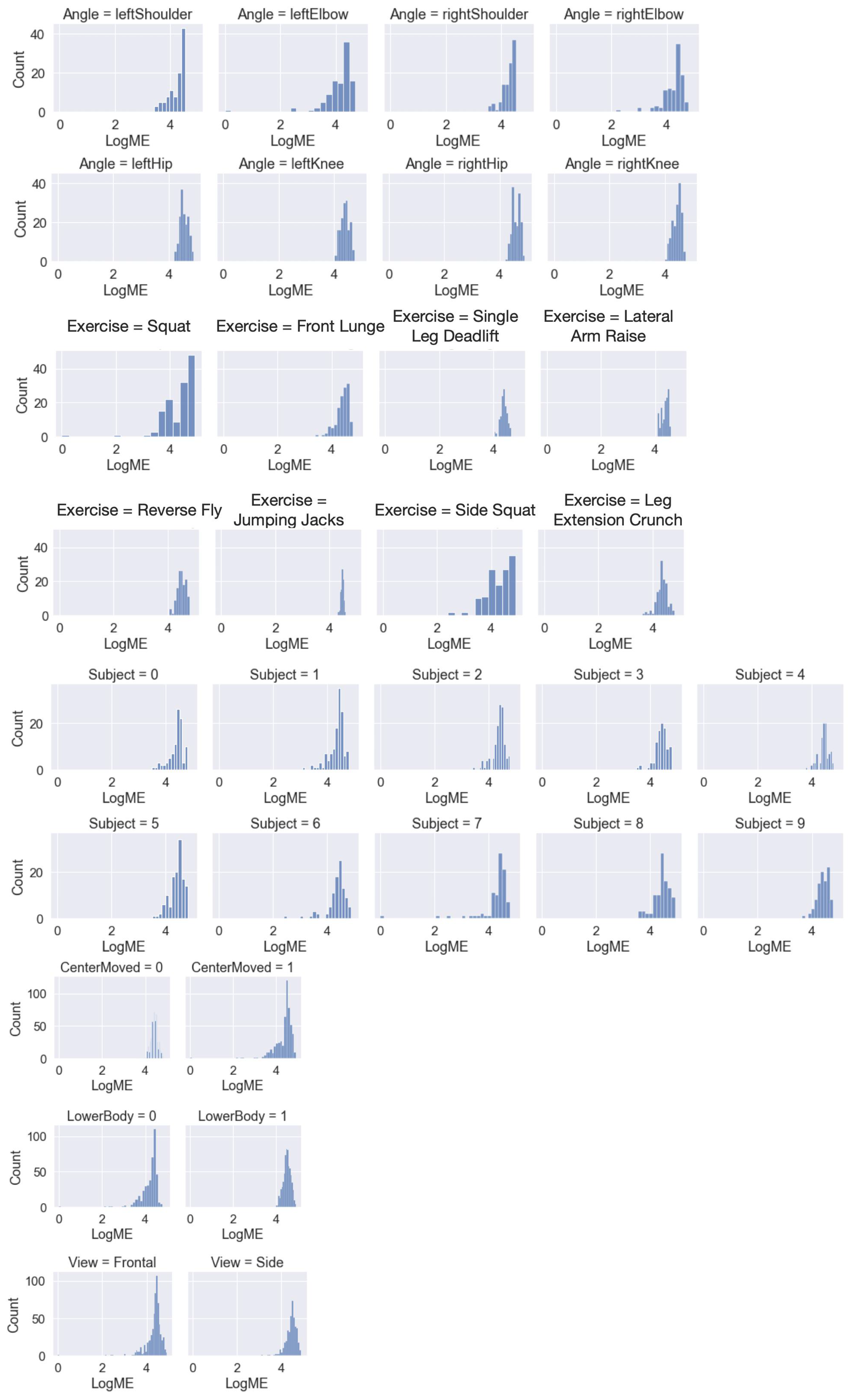

A drawback of the MAE is that it cannot reveal systematic over- or underestimation of the angles. The mean error (ME), i.e., the average difference within a time series pair, can reveal bias, but only at a granular level, for example, for segments of an exercise; aggregating the ME is prone to effects such as error cancellation. The ratio of ME and MAE, $\mathrm{ME}/\mathrm{MAE} \in [-1, 1]$, provides insight into the occurrence of systematic bias (Figure A4). A value close to $\pm 1$ implies that ARKit tends less to fluctuate around Vicon’s angle estimate, i.e., it consistently either under- or overestimates the angle. Values near zero indicate that over- and underestimation cancel each other out in the ME, but further analysis, such as of the difference between MAE and ME, is required to draw conclusions.
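A minimal sketch of the ME/MAE ratio for one time series pair follows. The sign convention (error = ARKit minus Vicon, so negative values indicate underestimation) is an assumption, as is returning NaN when both series are identical; the function name is hypothetical.

```python
def me_mae_ratio(arkit, vicon):
    """ME/MAE for one aligned pair, with errors defined as ARKit minus Vicon (assumption).

    Since |ME| <= MAE, the ratio lies in [-1, 1]: +1 means ARKit overestimates in every
    frame, -1 means it underestimates in every frame, and values near 0 mean positive
    and negative errors largely cancel in the ME.
    """
    errors = [a - v for a, v in zip(arkit, vicon)]
    me = sum(errors) / len(errors)
    mae = sum(abs(e) for e in errors) / len(errors)
    if mae == 0:
        return float("nan")  # identical series: the ratio is undefined
    return me / mae
```

A ratio of, say, -0.6 indicates that underestimation dominates but some frames are overestimated, so the ME alone understates the typical error magnitude.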

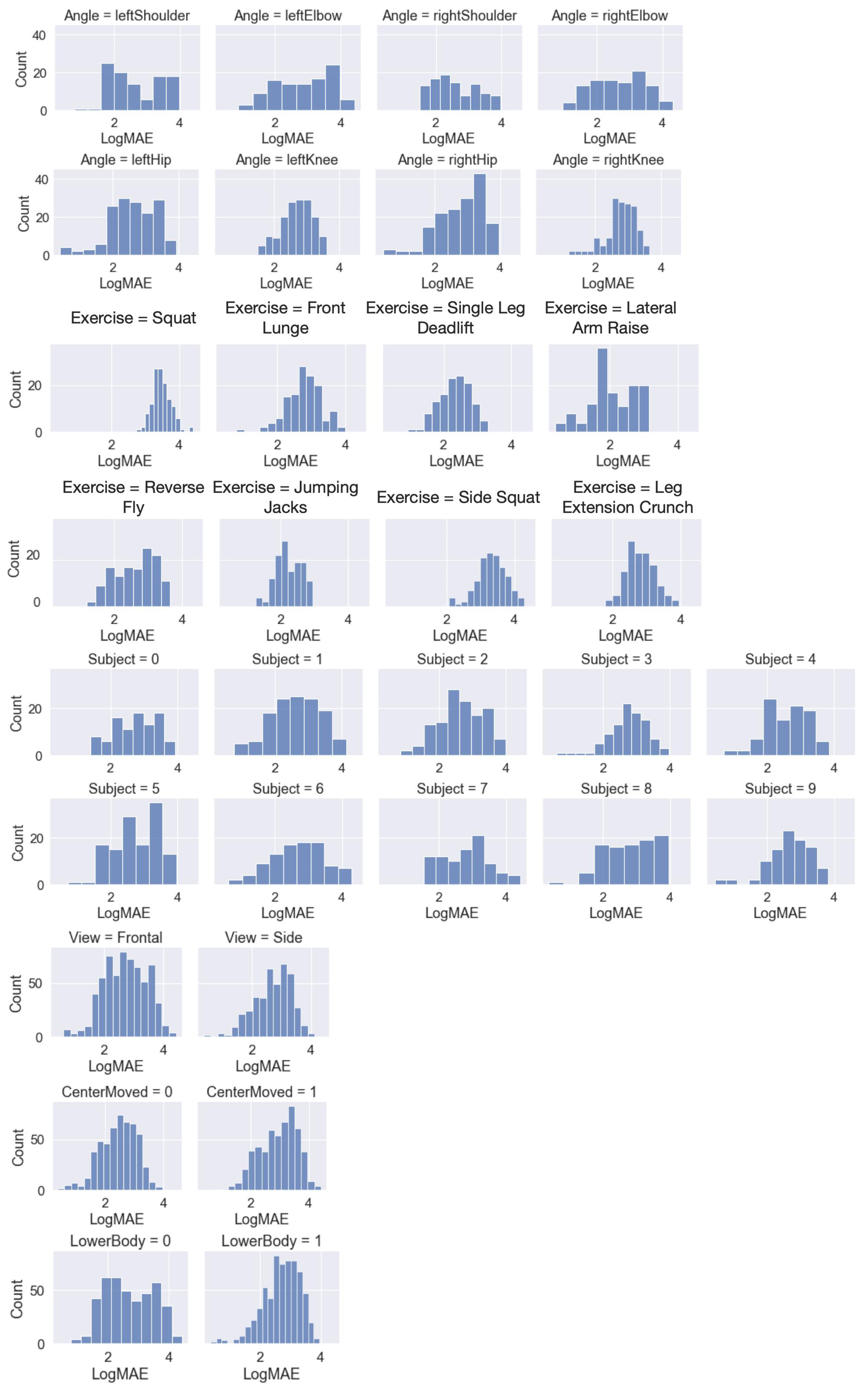

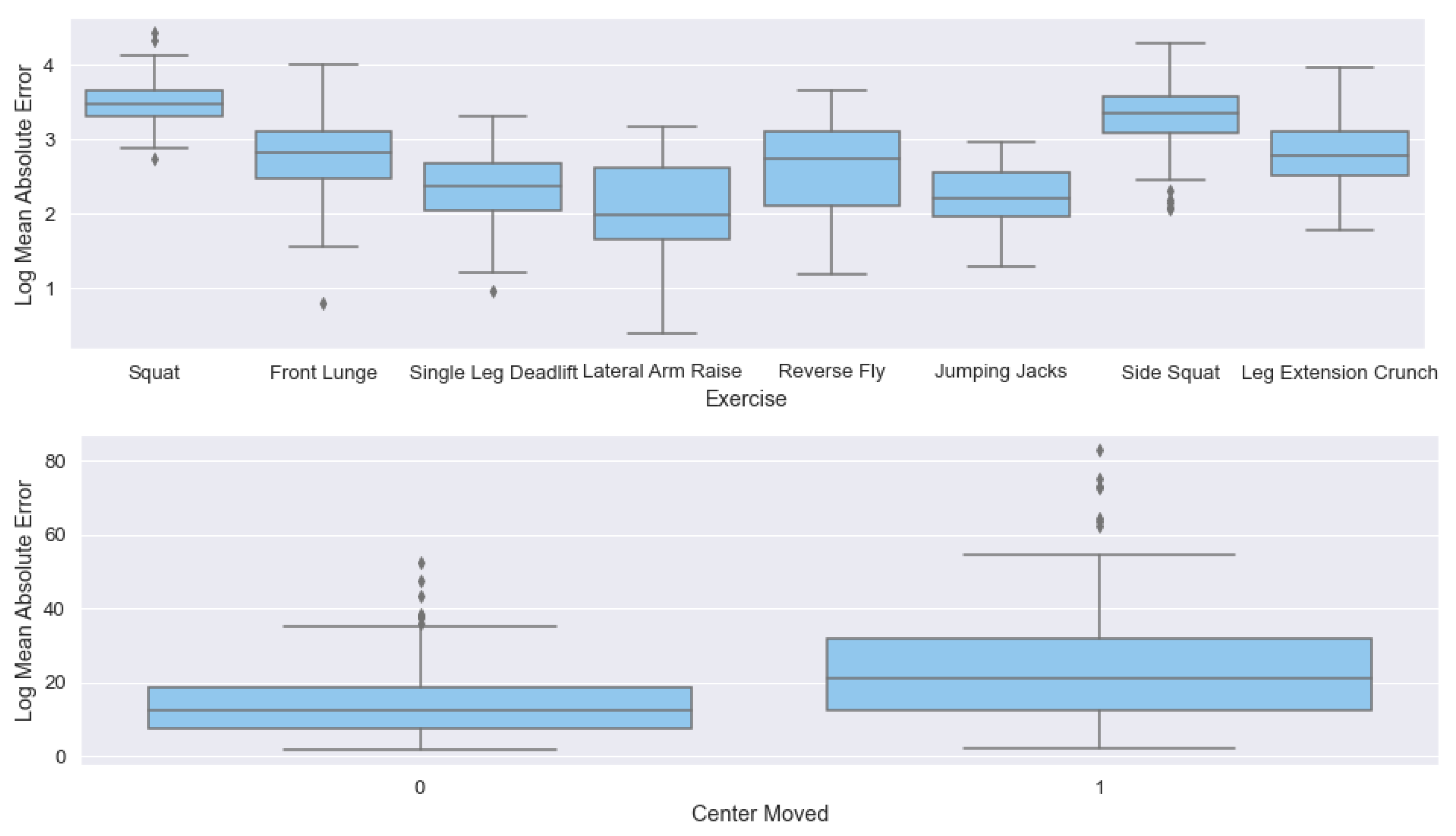

One-way analysis of variance (ANOVA) is performed to quantify the effects of categorical metadata, such as angle (fixed effect), exercise (fixed effect), and subject (random effect), on the continuous variable MAE. The random effect was taken into account by performing the one-way ANOVA with a random effects model. The distribution of the MAE deviates from the normal distribution, which ANOVA assumes. However, ANOVA has been shown to be robust to violations of this assumption within certain bounds [47]. A base-10 logarithmic transformation of the MAE brings its distribution closer to normal (Appendix A, Figure A1). In particular, it makes the model multiplicative and more robust to dispersion. Visual inspection of the histograms reveals heterogeneous variances between groups, which motivates the use of Welch’s ANOVA. Finally, the Games-Howell post-hoc test [48] compares the levels of the individual categorical factors for significant results (here defined as an effect size larger than 0.1).
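Welch’s ANOVA replaces the pooled variance of the classical F-test with group-specific weights n_i / s_i². The sketch below computes the test statistic and its degrees of freedom from the standard formulas on log10-transformed MAE values; it is an illustration, not the study’s analysis code, and the MAE values in the usage lines are hypothetical. A p-value would then follow from the F distribution, for example via `scipy.stats.f.sf(F, df1, df2)` if SciPy is available.

```python
import math

def welch_anova(groups):
    """Welch's one-way ANOVA for groups with unequal variances.

    Returns (F, df1, df2). Each group needs at least two values and nonzero variance.
    """
    k = len(groups)
    n = [len(g) for g in groups]
    means = [sum(g) / len(g) for g in groups]
    variances = [sum((x - m) ** 2 for x in g) / (len(g) - 1) for g, m in zip(groups, means)]
    w = [ni / vi for ni, vi in zip(n, variances)]  # precision weights n_i / s_i^2
    w_sum = sum(w)
    grand = sum(wi * mi for wi, mi in zip(w, means)) / w_sum  # weighted grand mean
    a = sum(wi * (mi - grand) ** 2 for wi, mi in zip(w, means)) / (k - 1)
    tmp = sum((1 - wi / w_sum) ** 2 / (ni - 1) for wi, ni in zip(w, n))
    b = 1 + 2 * (k - 2) / (k ** 2 - 1) * tmp
    return a / b, k - 1, (k ** 2 - 1) / (3 * tmp)

# Hypothetical usage: MAE values (degrees) grouped by exercise, log10-transformed first.
maes_by_exercise = {"squat": [8.1, 9.4, 7.2], "lunge": [11.0, 12.5, 10.1, 13.2]}
groups = [[math.log10(m) for m in maes] for maes in maes_by_exercise.values()]
F, df1, df2 = welch_anova(groups)
```

For two groups, this statistic reduces to the square of Welch’s t statistic, and df2 equals the Welch-Satterthwaite degrees of freedom.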

Besides view (frontal or side), the binary independent variables are the body segment of the angle (lower or upper) and information on the movement of the pelvis. The latter, declared as the variable center moved, indicates whether the proper execution of the exercise involves movement of the pelvis center, which is the origin of the ARKit coordinate system. To quantify the effect of the binary variables, we fitted a logistic regression model based on the MAE and applied Welch’s t-test. The results, including coefficients, p-values, and confidence intervals, are compiled in a table.
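Welch’s t-test compares two groups without assuming equal variances, which suits binary variables such as view or center moved. A minimal sketch of the statistic and the Welch-Satterthwaite degrees of freedom follows; with SciPy, `scipy.stats.ttest_ind(x, y, equal_var=False)` computes the same statistic together with a p-value. The function name and the sample values in the usage lines are hypothetical.

```python
import math

def welch_t(x, y):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom for two samples."""
    nx, ny = len(x), len(y)
    mx, my = sum(x) / nx, sum(y) / ny
    vx = sum((a - mx) ** 2 for a in x) / (nx - 1)  # sample variances (ddof = 1)
    vy = sum((b - my) ** 2 for b in y) / (ny - 1)
    sx, sy = vx / nx, vy / ny  # squared standard errors of the means
    t = (mx - my) / math.sqrt(sx + sy)
    df = (sx + sy) ** 2 / (sx ** 2 / (nx - 1) + sy ** 2 / (ny - 1))
    return t, df

# Hypothetical usage: log10(MAE) values split by the binary variable "view".
frontal = [0.85, 0.92, 1.01, 0.88]
side = [0.97, 1.10, 1.05, 1.21, 0.99]
t, df = welch_t(frontal, side)
```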

Our analysis makes assumptions about the data that can restrict the interpretation of the results. These are outlined in more detail in the limitations section (Section 5.8).

5. Discussion

5.1. Factors Influencing ARKit’s Performance

Based on the findings presented in Section 4, we identified several factors that influence the accuracy of ARKit’s motion capture. The main requirement for good tracking is that the joints of interest are clearly visible to the camera and not hidden by other parts of the body during the movement. The exercise or motion itself also plays a role. The results of the t-test suggested that the stability of the coordinate system during the exercise is relevant. However, this was not supported by the results of the logistic regression, so the interpretation remains unclear and requires further investigation.

The results of capturing human motion using ARKit could be influenced by several other factors, which were not further investigated within this research. This includes technical factors such as the device’s processing power and additional sensors to improve the motion capture, the tracking environment such as lighting conditions or the background, or factors regarding the captured person, such as their clothing, body mass index, or skin color.

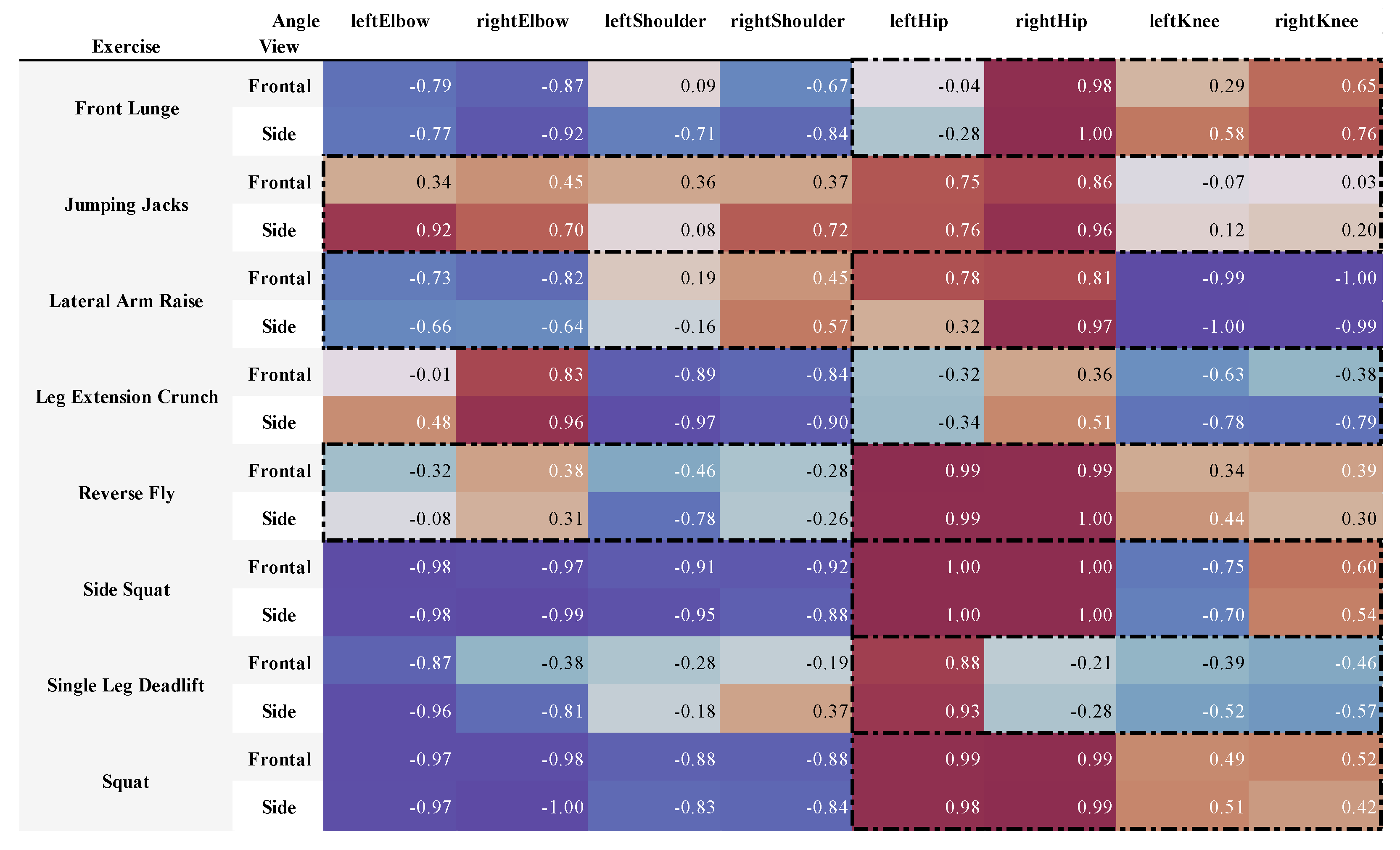

5.2. Bias of the Motion Capture Results

The upper body angles showed a tendency toward underestimation, and the results for the hips suggested systematic overestimation, as described in Section 3.1.2. Several values were close to −1 or 1, which suggests systematic rather than cyclically occurring over- or underestimation. When the values are aggregated for the different joints (Table 8), the results suggest that the upper body angles are underestimated, while the hip angles are overestimated. The knee angles remain inconclusive, with values relatively close to zero. This could indicate the cyclically occurring over- and underestimation mentioned above, or over- and underestimation that depends on the executed movement.

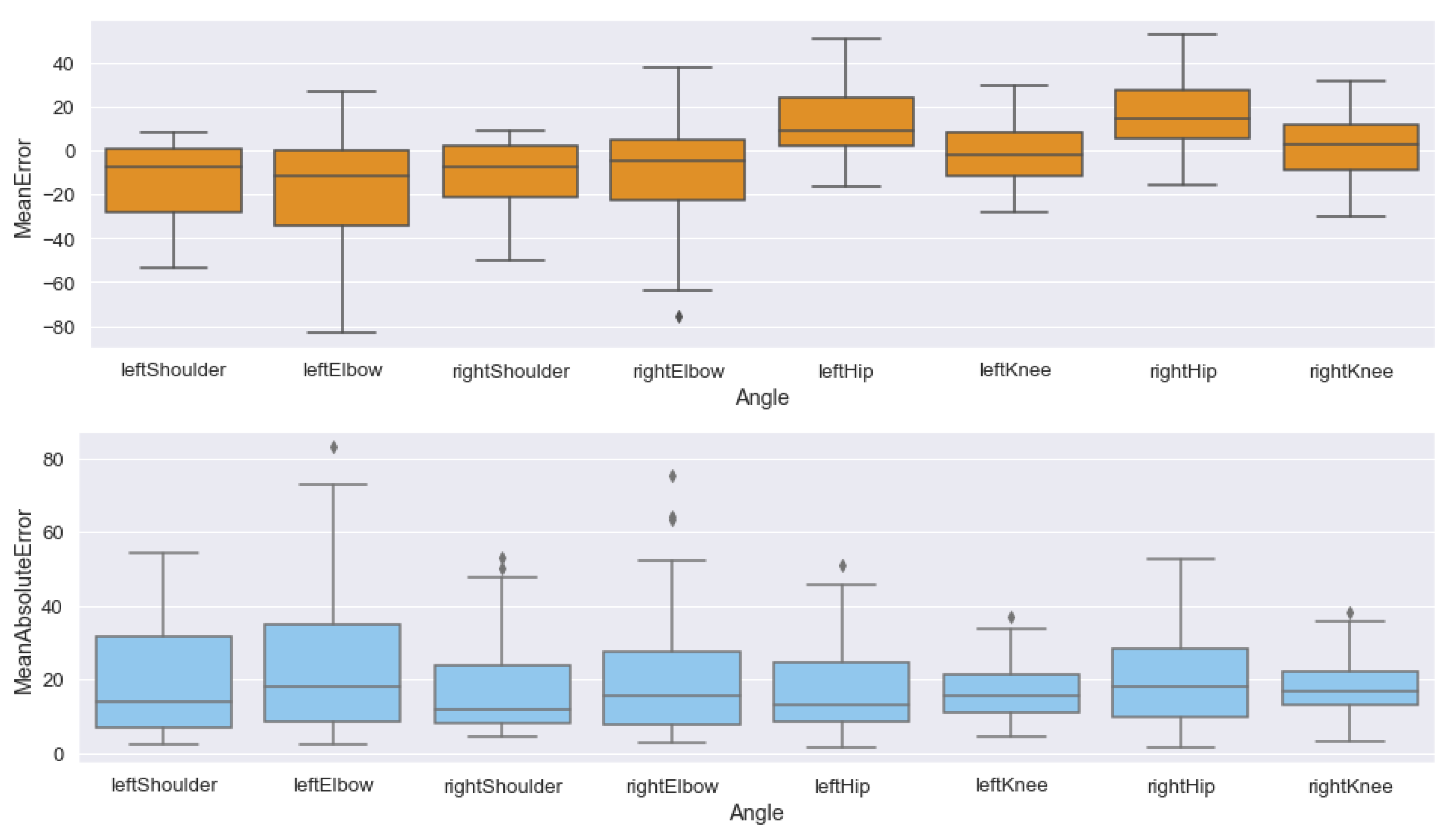

5.3. Influence of the Tracked Joint Angle

The logistic regression results indicated a small but significant effect of the lower body variable. This impression is supported by the boxplot of the ME per angle (Figure 12), which shows a tendency to underestimate the upper body angles and overestimate the hip angles, as well as a difference in the mean between the knee and hip angles. To investigate this effect, we performed the ANOVA on the ME. We shifted the ME so that it only includes positive values and applied the logarithmic transformation analogously to our procedure for the MAE, as described in Section 2.6. The observed angle has an influence on the result. Post-hoc analysis using Games-Howell supports the suggestion that the differences lie between the upper body and lower body angles and between the hip and knee angles (Appendix C, Table A2).
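The shift-then-log step for the ME can be sketched as below. The study does not report the offset it used, so the default offset (the magnitude of the minimum plus one) is a hypothetical choice; any offset that makes all values strictly positive permits the log10 transformation, although the choice affects the shape of the transformed distribution. The function name and ME values are illustrative.

```python
import math

def shifted_log10(values, offset=None):
    """Shift values to be strictly positive, then apply log10.

    The default offset |min(values)| + 1 is a hypothetical choice; the study does not
    report the constant it used.
    """
    if offset is None:
        offset = abs(min(values)) + 1.0
    shifted = [v + offset for v in values]
    if min(shifted) <= 0:
        raise ValueError("offset too small: log10 requires strictly positive inputs")
    return [math.log10(s) for s in shifted]

# Hypothetical per-pair ME values in degrees (negative = underestimation).
me_values = [-9.0, 0.0, 90.0]
transformed = shifted_log10(me_values)
```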

Interestingly, in contrast to the ME, the MAE was influenced by the exercise and by the movement of the hip center, whereas the difference between the angles is no longer observable in the MAE. By considering only the absolute error, the upper body error is mapped to an MAE similar to that of the lower body joints (Figure 12). The ME for the whole dataset is −0.83°, which suggests that, when the entire body is considered, the overestimation of the lower body joints and the underestimation of the upper body joints could be subject to error cancellation. This effect could explain why the MAE depends on the selected exercise while no dependency on the angle was observed.

The ANOVA results show an effect for the upper body variable and support the respective tendency toward over- and underestimation. However, as explained in Section 2.6, the ME is prone to error cancellation effects. Because this unclear influence limits the explanatory power, we did not include these observations in the results and findings.

5.4. Impact of Incorrect Hip Detection

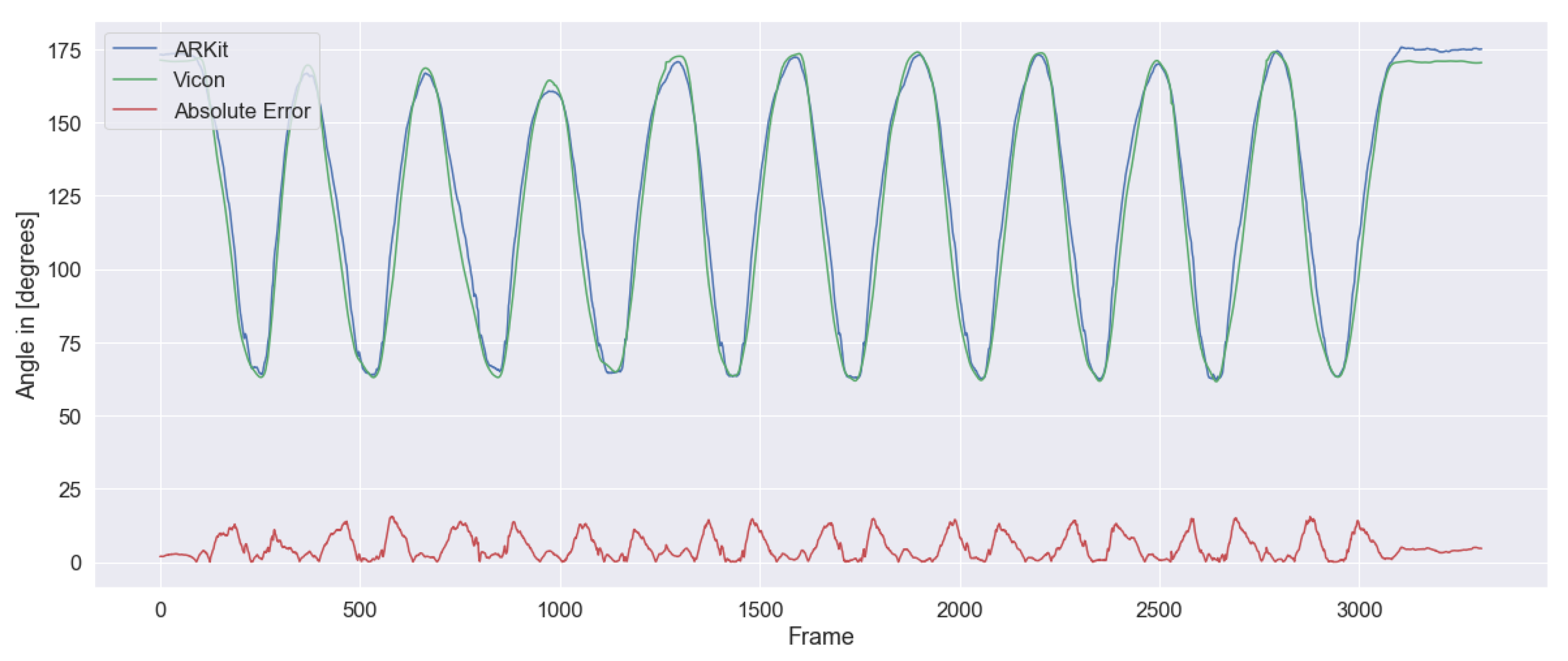

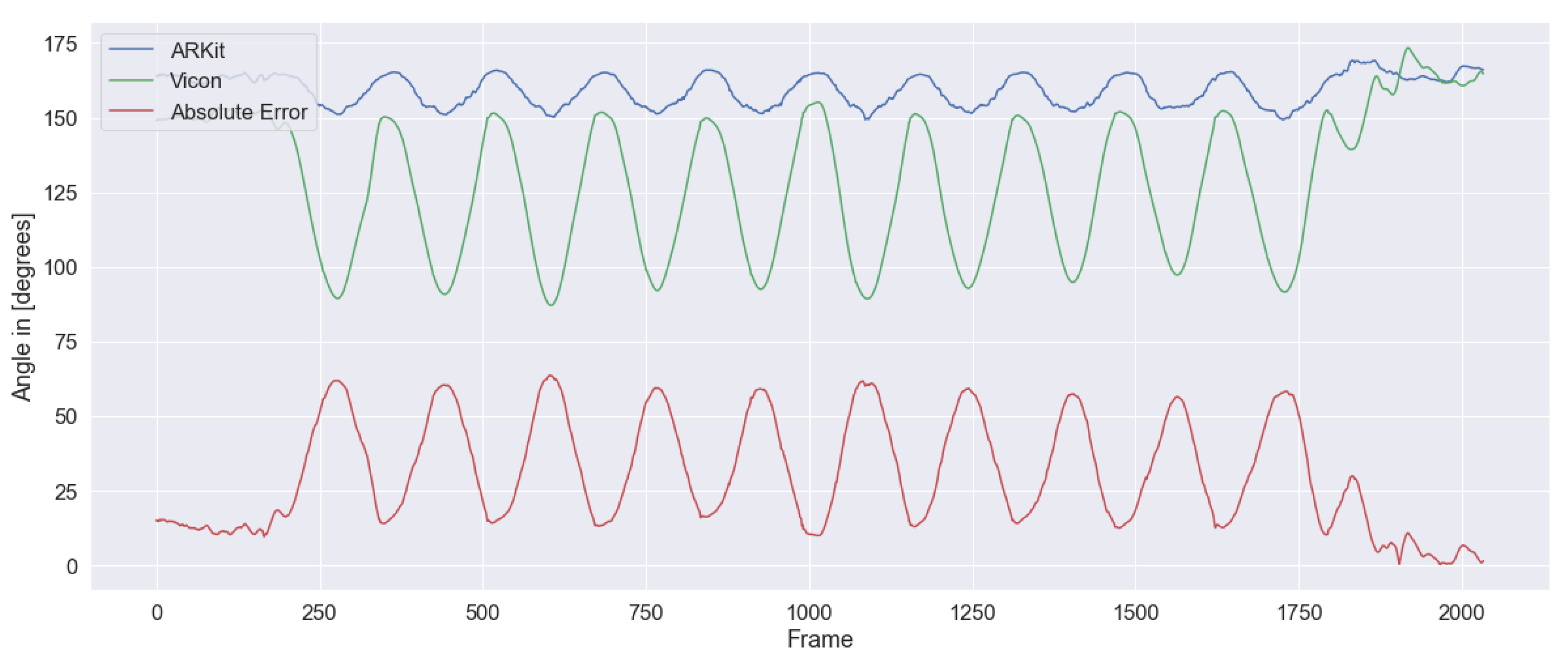

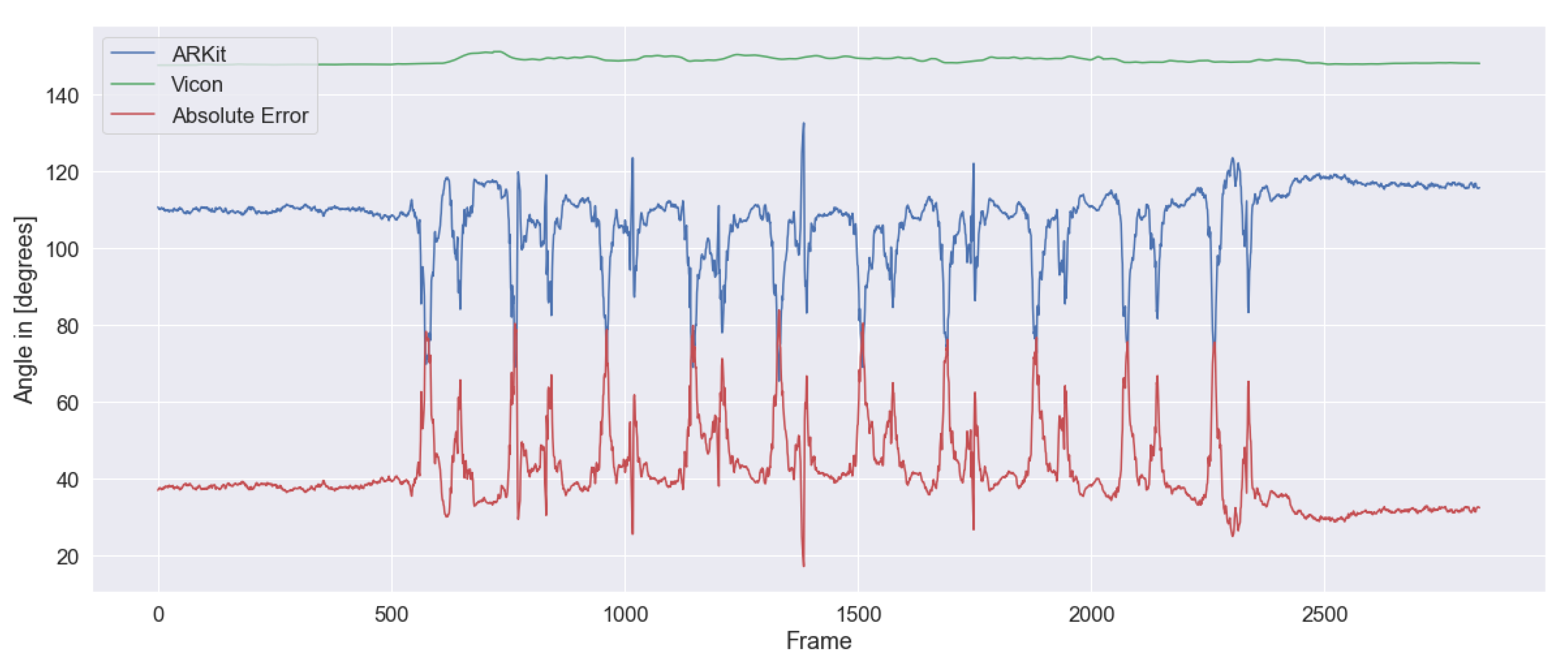

A commonly observed issue with the ARKit data were a reduced amplitude, and a baseline drift along the

y-axis (see

Figure 7), though the motion was tracked quite reliable. This issue was particularly the case for the lower body joints and led to a higher wMAE in those joints, but was also observed in other joints. In the screencasts of the recording, we often noticed that the detection of the hip joints was incorrect (

Figure 13) and even varied during the execution of the exercise. Such shifts on the sagittal plane explain both the baseline drift and the amplitude reduction in the hip, knee, and shoulder angles, as all of them rely on the hip joints for their calculation. Especially from a side perspective, the hip joints allow for the most considerable deviations along the sagittal plane due to the amount or muscle and fat tissue around the pelvis. In the example of

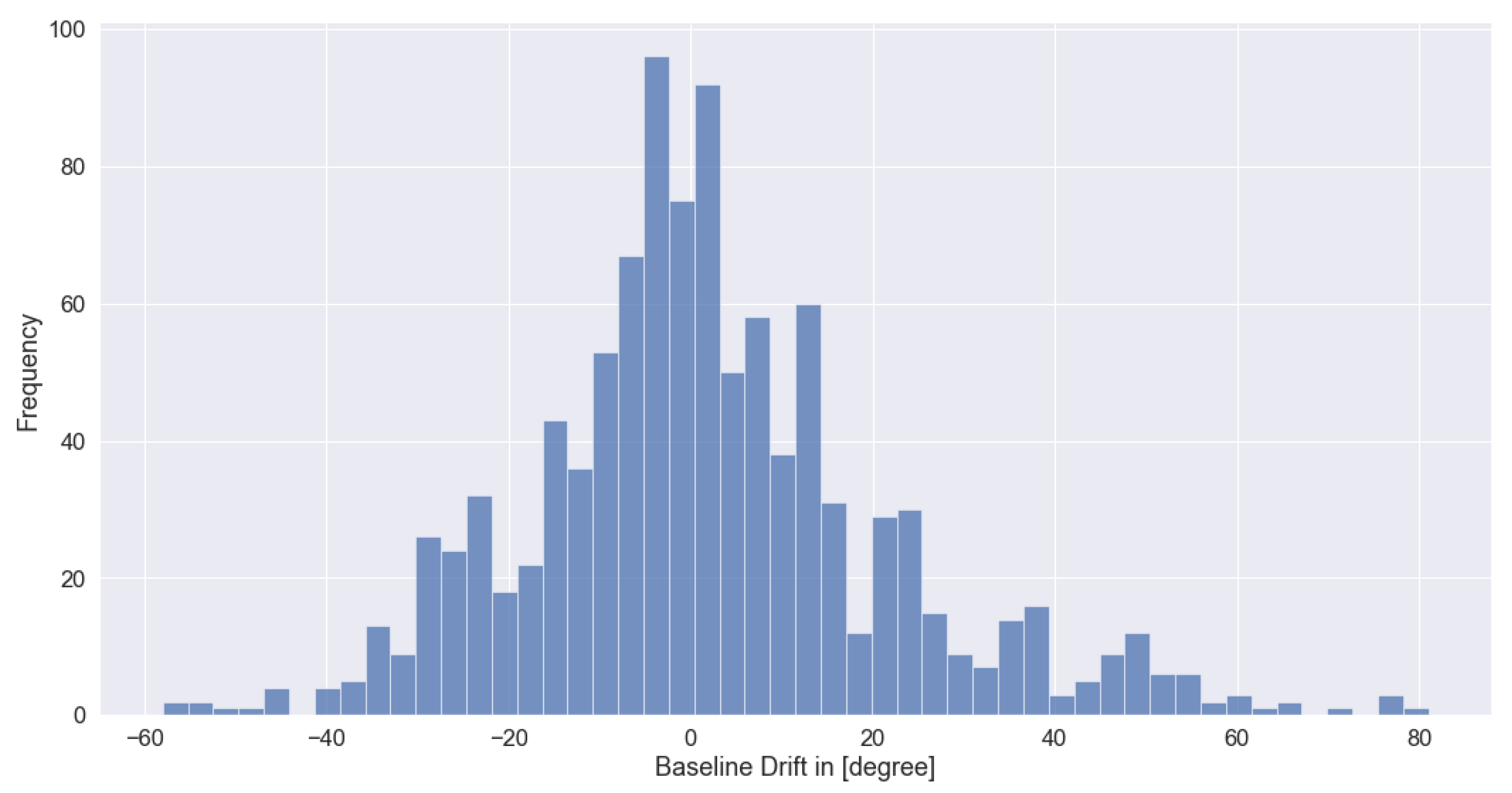

Figure 13, another issue aggravates the correct detection of the hip joints: the camera perspective was optimized for tracking the legs’ position, which in this case means that the right joint hides the left hip joint. This positioning implies that ARKit needs to rely on other body landmarks to estimate its position. Finding an optimal camera position in which all joints are completely visible might not be possible for all movements.
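The dependence of several angles on the detected hip position can be made concrete with a three-point joint angle. The sketch below is an illustrative assumption, not ARKit’s or this study’s angle definition: it computes the included angle at the knee from hypothetical hip, knee, and ankle coordinates (x anterior, y vertical, in metres) and shows how a hip detected 10 cm too far anterior changes the knee angle although the leg itself has not moved.

```python
import math


def joint_angle(a, b, c):
    """Included angle in degrees at joint b, formed by the segments b->a and b->c."""
    u = [ai - bi for ai, bi in zip(a, b)]
    v = [ci - bi for ci, bi in zip(c, b)]
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v))
    # Clamp to [-1, 1] to guard against floating-point rounding before acos.
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_theta))


# Hypothetical side-view coordinates of a fully extended leg.
hip = (0.0, 1.0, 0.0)
knee = (0.0, 0.5, 0.0)
ankle = (0.0, 0.0, 0.0)

straight = joint_angle(hip, knee, ankle)             # approx. 180 deg
shifted_hip = (0.1, 1.0, 0.0)                        # hip detected 10 cm too far anterior
shifted = joint_angle(shifted_hip, knee, ankle)      # approx. 168.7 deg
```

Because the hip angle is computed from the same hip point, a misplaced or drifting hip landmark propagates into every angle that uses it, which is consistent with the baseline drift and amplitude reduction described above.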

5.5. Improving the ARKit Data during Post-Processing

The good correlation results raised the question of whether it is possible to improve the ARKit motion capture data through post-processing to approximate the Vicon data. A systematic error in which the hip joints are detected too far anterior is a possible explanation and is subject to further investigation. If this is the case, both the baseline shift and the amplitude reduction could be corrected by applying a scale factor and shifting the data along the y-axis. Compensating the baseline shift would reduce the wMAE results by … and lead to more reliable and accurate results. However, vertically shifting the ARKit data along the y-axis did not reveal a systematic error (Figure 9). The observed shift instead seems to be caused by other factors, such as the incorrect detection of joints.
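The scale-and-shift correction considered above can be sketched as an ordinary least-squares fit of Vicon ≈ scale · ARKit + offset. This is a hypothetical post-processing step on made-up data, not a method applied in this study. Fitting it requires reference data, and since no systematic shift was found here, parameters fitted on one recording would not necessarily transfer to another.

```python
def fit_scale_offset(arkit, vicon):
    """Least-squares fit of vicon ~ scale * arkit + offset (simple linear regression)."""
    n = len(arkit)
    mean_x = sum(arkit) / n
    mean_y = sum(vicon) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(arkit, vicon))
    sxx = sum((x - mean_x) ** 2 for x in arkit)
    scale = sxy / sxx  # > 1 stretches a reduced ARKit amplitude
    offset = mean_y - scale * mean_x  # compensates the baseline shift
    return scale, offset


def apply_correction(arkit, scale, offset):
    """Apply the fitted linear correction to a sequence of ARKit angles."""
    return [scale * x + offset for x in arkit]


# Hypothetical knee angles in degrees: ARKit shows half the Vicon amplitude
# and a shifted baseline (arkit = 0.5 * vicon + 55).
vicon = [90.0, 110.0, 130.0, 110.0, 90.0]
arkit = [100.0, 110.0, 120.0, 110.0, 100.0]

scale, offset = fit_scale_offset(arkit, vicon)  # scale 2.0, offset -110.0
corrected = apply_correction(arkit, scale, offset)
```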

During the data analysis, we used a sliding window approach that maximizes the cross-correlation between the ARKit and Vicon data to compensate for possible time lags, as the iPads and the Vicon system could not be synchronized during the experiment. Possible reasons for lags are the different hardware clocks and the delay of the body detection algorithm of the ARKit framework. The sliding window was limited to a maximum of 120 frames, which equals approximately 2 s, to allow only reasonable shifts within the exercises while compensating for the lag caused by technical limitations. The approach was chosen to maximize the comparability between the results of the two systems. However, as the sliding window approach was applied individually to each angle, exercise, subject, and view, each configuration was shifted to its optimal result within the given time window. This approach does not consider potential lags within ARKit’s motion capture itself, for example, a slower recognition of changes in some parts of the detected body.
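A minimal sketch of such a lag search is shown below. The exact correlation measure used in the study is not stated in this section, so this version assumes the Pearson correlation over the overlapping frames and searches lags in both directions up to 120 frames (about 2 s, which implies a frame rate of roughly 60 Hz). The function names and the synthetic signal are hypothetical.

```python
import math


def pearson(x, y):
    """Pearson correlation of two equally long sequences (0.0 if one is constant)."""
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)


def best_lag(reference, other, max_lag=120):
    """Find the lag in [-max_lag, max_lag] frames that maximizes the correlation.

    A positive lag means `other` trails `reference`, i.e. other[t + lag] is
    compared with reference[t]. Returns (lag, correlation).
    """
    n_max = min(len(reference), len(other))
    best = (0, -math.inf)
    for lag in range(-max_lag, max_lag + 1):
        n = n_max - abs(lag)  # number of overlapping frames at this lag
        if n < 2:
            continue
        if lag >= 0:
            ref_seg, oth_seg = reference[:n], other[lag:lag + n]
        else:
            ref_seg, oth_seg = reference[-lag:-lag + n], other[:n]
        r = pearson(ref_seg, oth_seg)
        if r > best[1]:
            best = (lag, r)
    return best


def signal(t):
    """Synthetic, non-periodic joint angle in degrees with two bumps."""
    return 90 + 30 * math.exp(-((t - 200) / 20) ** 2) + 15 * math.exp(-((t - 350) / 40) ** 2)


vicon = [signal(t) for t in range(600)]
arkit = [signal(t - 15) for t in range(600)]  # same motion, delayed by 15 frames
lag, r = best_lag(vicon, arkit)               # lag == 15
```

A non-periodic test signal is used deliberately: for a purely periodic motion, lags that differ by one period would correlate almost equally well, which is one reason to cap the window at a plausible technical delay.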

5.6. Comparing the Results of 2D and 3D Motion Capture Systems

As stated in the analysis of Sarafianos et al. [31], monocular video-based motion capture systems exhibit several limitations that reduce their applicability to real-world scenarios. Among the most significant limitations are the ambiguities of the detected poses due to occlusion and the distortion of the camera image caused by the camera’s viewing angle and position [31], which is a relevant limitation for both 2D and 3D motion capture systems. In this research, we were able to show that ARKit, as an example of a 3D motion capture system supported by different smartphone sensors, is robust to a 30° variation in the positioning of the device. The factor analysis did not reveal an influence of the device position. However, poor visibility of joints still led to significant decreases in the accuracy of the measured angles. Mobile 3D motion capture frameworks based on monocular video data, such as ARKit, mitigate some of the limitations of 2D motion capture systems but cannot overcome them completely.

5.7. Potential Use Cases for Mobile 3D Motion Capture-Based Applications

The findings of this research raise the question of possible application areas for human motion capture using mobile 3D motion capture frameworks such as Apple ARKit. Referring to the three categories defined by Moeslund et al. [2], such frameworks could be applied to use cases in categories (2), interacting with software, and (3), motion analysis for medical examinations or performance analysis, as they focus on tracking single bodies rather than observing crowds. The results suggest that ARKit can track the progression of a motion reliably but with relatively high error rates, depending on the joint of interest. Human motion capture using ARKit is further limited to a relatively small set of trackable joints. For example, the hand and toe joints are not actively tracked but are calculated based on the wrist and ankle joints, limiting the trackable joint angles to the shoulder, elbow, hip, and knee. However, mobile 3D motion capture frameworks are a promising technology for use cases that focus on tracking a specific motion of body parts rather than the exact joint position. Such use cases can be found in category (2), such as interacting with software through gestures or other movements. Potential use cases in category (3) include sports applications for amateurs or physiotherapy applications, which could focus on counting the repetitions of a specific exercise. Depending on the motion and joint of interest, specific use cases that rely on exact joint position and angle data might be possible if the two main requirements for good tracking presented at the beginning of this section can be met. For example, such use cases could include measuring the range of motion of a joint before and after a particular intervention and monitoring progress in the medical field, or correcting the execution of a specific exercise in sports and physiotherapy applications. Using mobile 3D motion capture frameworks in these use cases would extend the usage of human motion capture technologies beyond professional settings and allow consumers to use them day to day at home. ARKit and other mobile IPS enable new use cases, especially in mHealth, which were not possible with previous HMC systems. Our findings show how mobile 3D motion capture frameworks can be applied and how mHealth applications could leverage the software in the future. However, the limitations of 3D motion capture frameworks in general, and the boundaries of ARKit in particular, need to be considered and should be evaluated before applying the technology to specific use cases.

5.8. Limitations

The design of this research includes several limitations. While the lab experiment produced a data set of over 1000 exercise executions, the data were collected from only ten study participants due to the restrictions caused by the ongoing COVID-19 pandemic. The limited number of participants might limit the external validity of this research. The participants’ traits further limit the external validity: while their heights ranged from 156 cm to 198 cm, their body mass index was in the normal range. In addition, all participants had a lighter skin tone. The experiment was conducted in a laboratory with controlled background and lighting conditions.

Even though the study setup aimed to reduce possible influences on the study’s internal validity that were not part of the observation, the impact of additional factors cannot be eliminated. Possible factors include the specific way the subjects performed the exercises and the clothing they wore. Furthermore, the subjects were recruited from the social surroundings of the researchers and might not be representative of the whole population. The internal validity is further affected by the sliding window approach used to compensate for the time lag due to missing clock synchronization and processing time. While the approach is limited to a maximum window of approximately 2 s, this shift could still have improved the results beyond what would otherwise be observed. Additionally, the data set contained less exercise data for the upper body joints due to export problems with the iPad in the side position. We applied the Welch ANOVA instead of the standard ANOVA to identify dependencies of the MAE, as the variances of the individual factors were not equal. However, another prerequisite for the (Welch) ANOVA and the Welch t-test, normally distributed data, was only partially met for the MAE, even though the ANOVA is considered quite robust against violations of this assumption. To mitigate this limitation, we applied a logarithmic transformation to the data before performing the ANOVA and t-tests. Moreover, the observations used in a (Welch) ANOVA should be independent of each other. In our experimental setup, all joint angles were recorded simultaneously for each subject and exercise. The observed angle deviations of the systems are expected to be independent. However, in a real-world scenario, a poorly tracked angle might increase the risk of inaccuracies in other angles. Thus, the assumption of independent observations is hard to verify. Finally, ARKit is only one example of a mobile 3D motion capture framework. Other frameworks rely on different technologies and algorithms and could exhibit different results and limitations.
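For readers who want to reproduce this kind of analysis, the sketch below computes Welch’s one-way ANOVA (F statistic and degrees of freedom) on log-transformed MAE values using only the Python standard library. The grouping by exercise and all values are hypothetical, and this is not the study’s analysis code. A p-value can then be obtained from the F distribution, for example with `scipy.stats.f.sf(f_stat, df1, df2)` if SciPy is available.

```python
import math
from statistics import mean, variance


def welch_anova(groups):
    """Welch's one-way ANOVA for groups with unequal variances. Returns (F, df1, df2).

    Each group needs at least two values and a non-zero sample variance.
    """
    k = len(groups)
    n = [len(g) for g in groups]
    m = [mean(g) for g in groups]
    w = [ni / variance(g) for ni, g in zip(n, groups)]  # weights n_i / s_i^2
    w_sum = sum(w)
    grand = sum(wi * mi for wi, mi in zip(w, m)) / w_sum  # variance-weighted mean
    a = sum(wi * (mi - grand) ** 2 for wi, mi in zip(w, m)) / (k - 1)
    tmp = sum((1 - wi / w_sum) ** 2 / (ni - 1) for wi, ni in zip(w, n))
    b = 1 + 2 * (k - 2) / (k ** 2 - 1) * tmp
    return a / b, k - 1, (k ** 2 - 1) / (3 * tmp)


def log_transform(values):
    """Natural logarithm of strictly positive values such as MAEs."""
    return [math.log(v) for v in values]


# Hypothetical MAE values in degrees, grouped by exercise.
mae_by_exercise = {
    "squat": [8.1, 9.4, 12.0, 7.6, 10.3],
    "lunge": [14.2, 18.9, 11.5, 22.4, 16.0],
    "arm_raise": [5.2, 6.8, 5.9, 7.1, 6.3],
}
groups = [log_transform(v) for v in mae_by_exercise.values()]
f_stat, df1, df2 = welch_anova(groups)
```

With two groups, this statistic reduces to the square of the Welch t statistic, which offers a simple sanity check for an implementation.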