Moving Vehicle Tracking with a Moving Drone Based on Track Association

Abstract

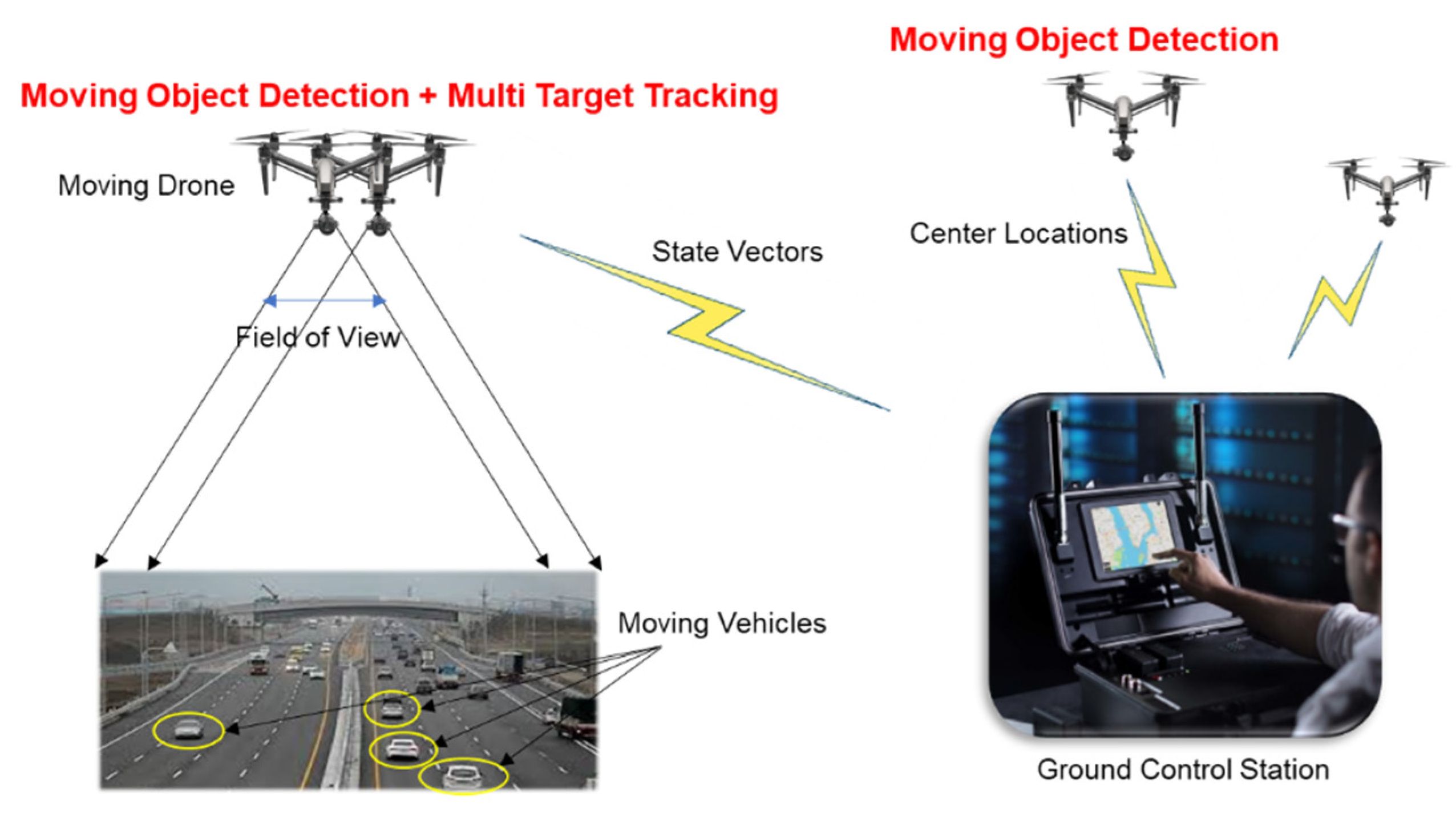

1. Introduction

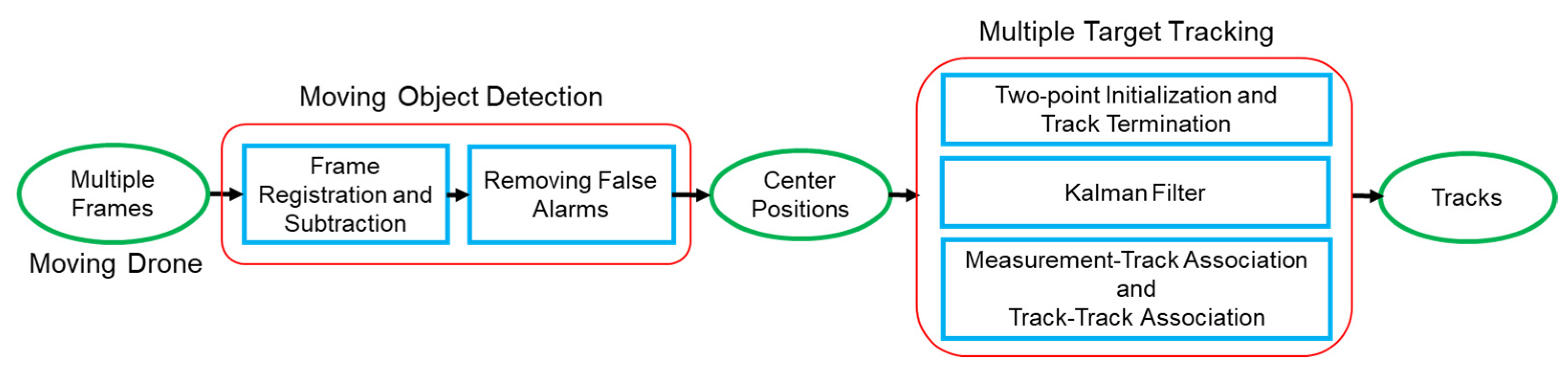

2. Moving Object Detection

3. Multiple Target Tracking

3.1. System Modeling

3.2. Two-Point Initialization

3.3. Prediction and Filter Gain

3.4. Measurement–Track Association

3.5. State Estimate and Covariance Update

3.6. Track–Track Association

4. Results

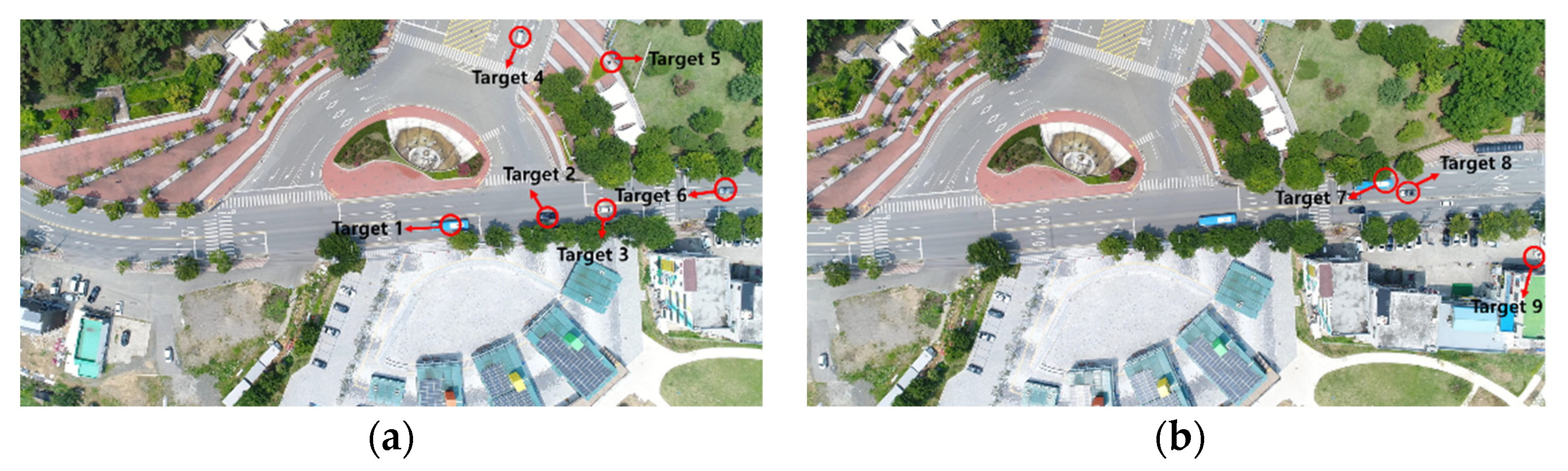

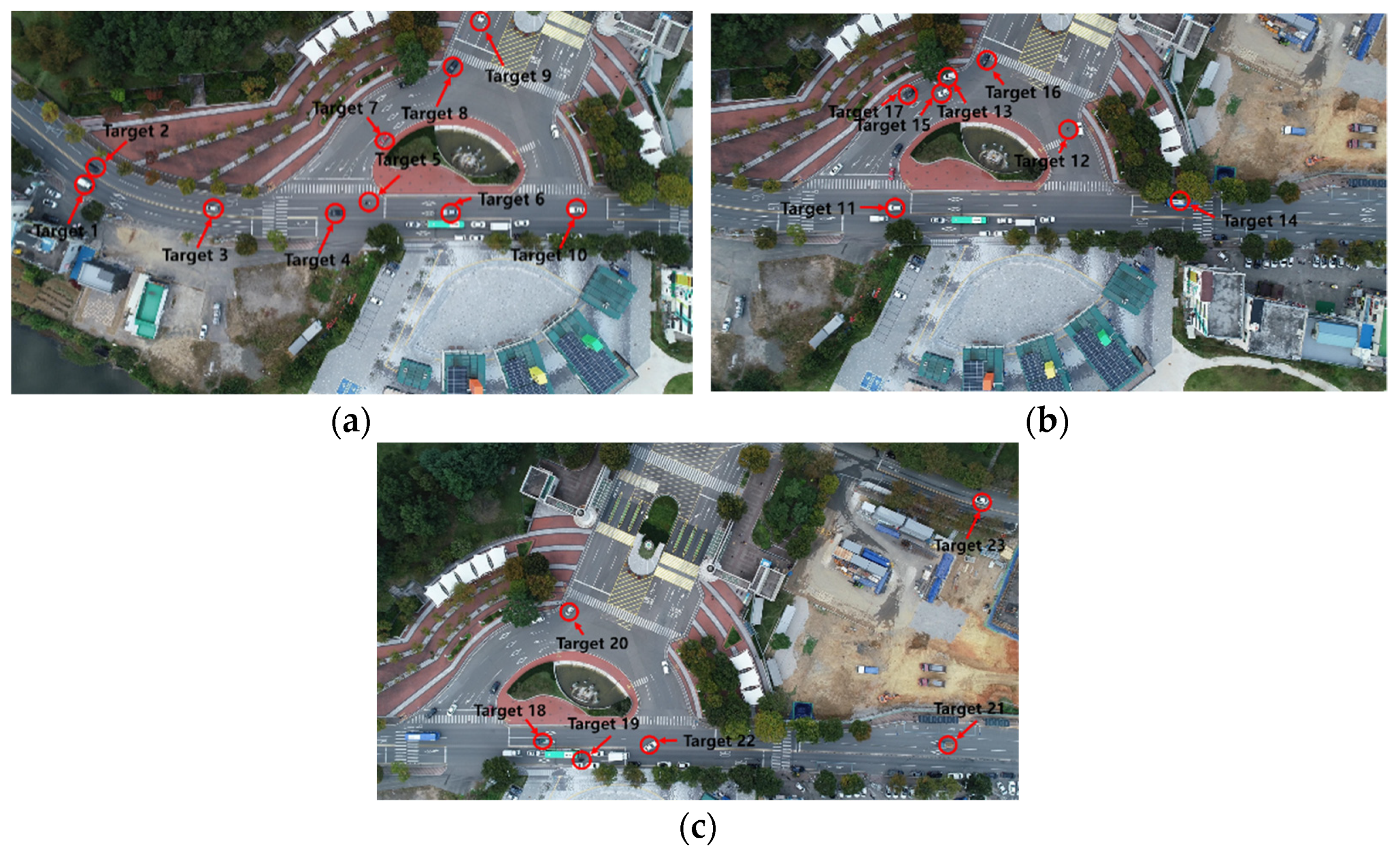

4.1. Video Description

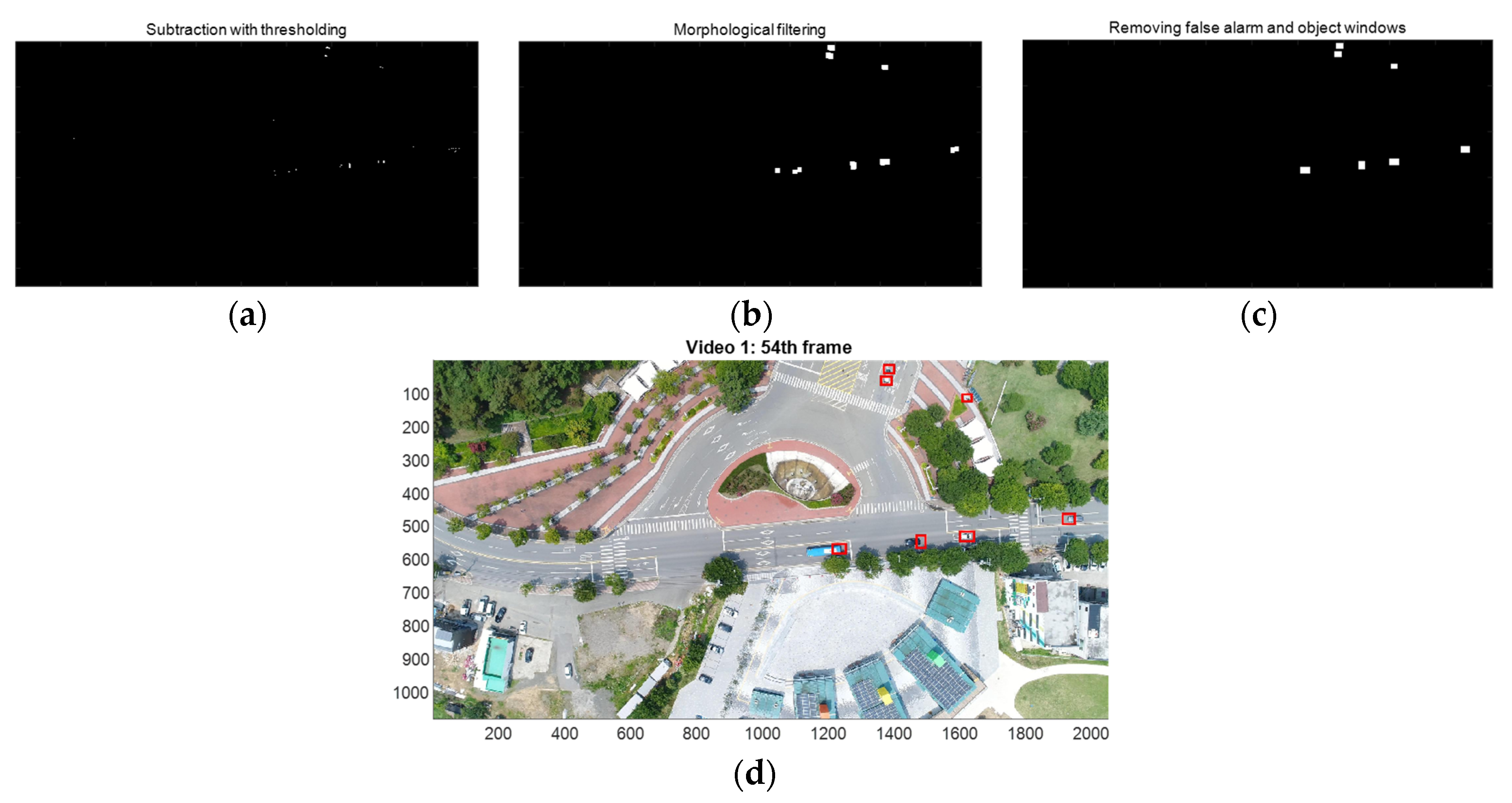

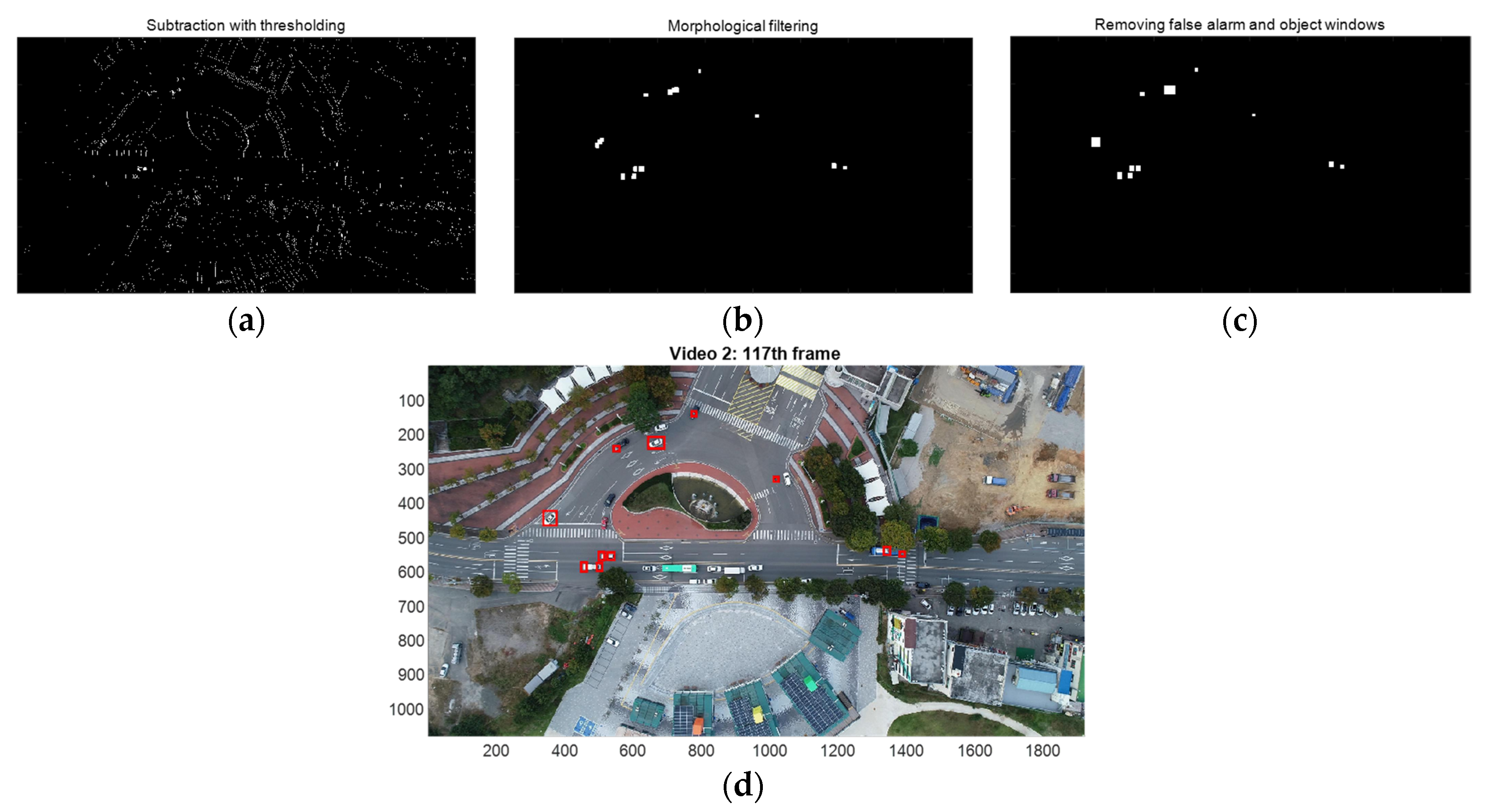

4.2. Moving Object Detection

4.3. Multiple Target Tracking

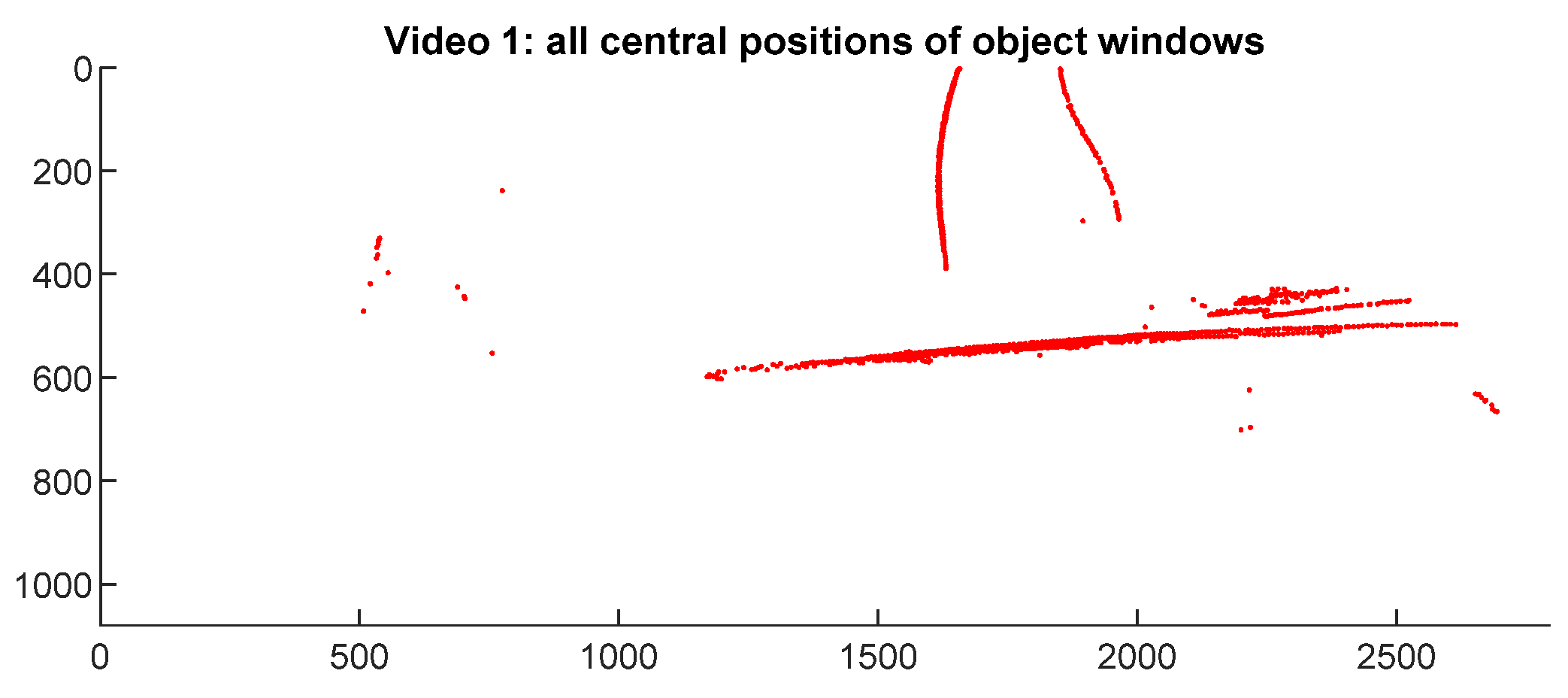

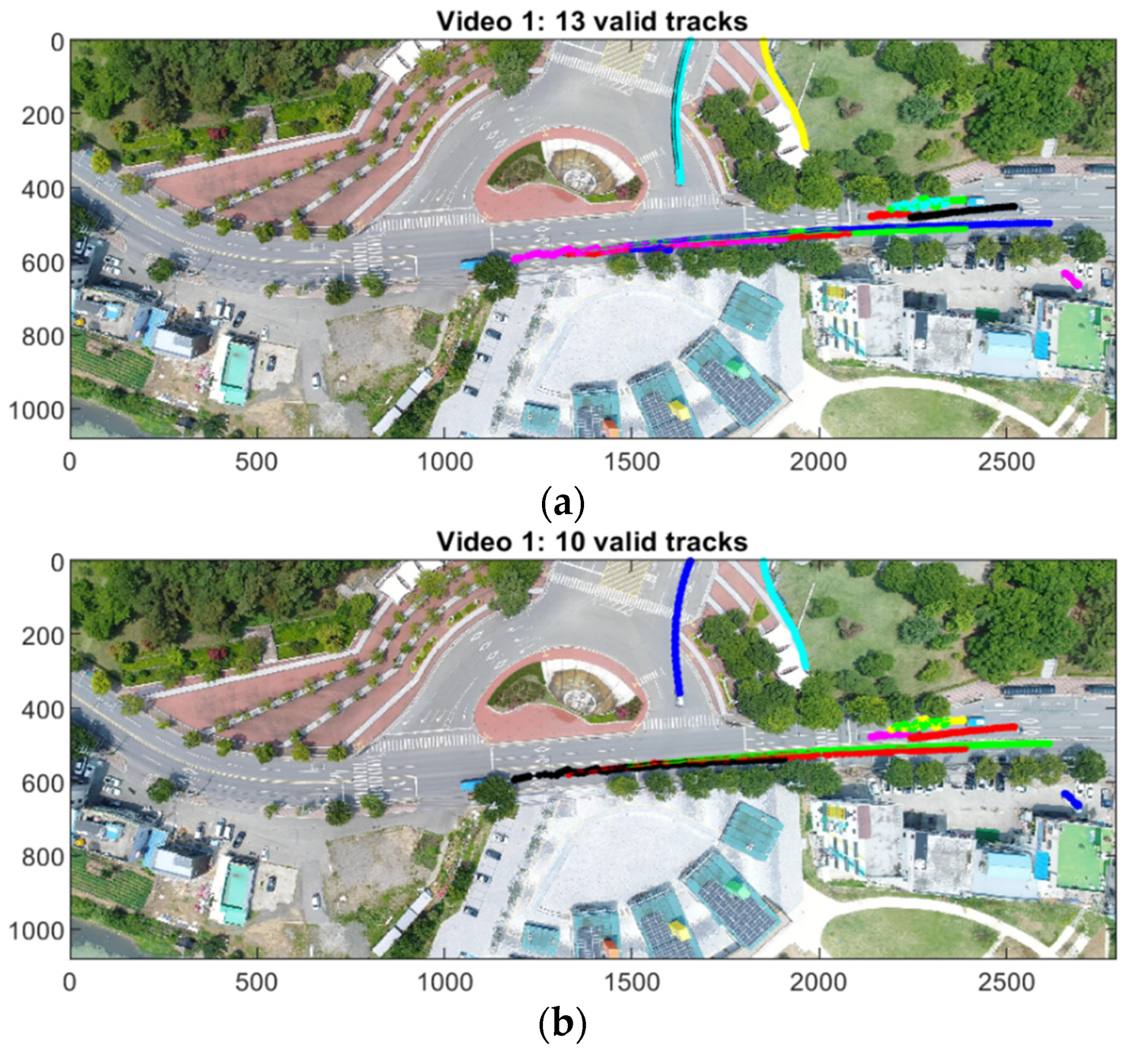

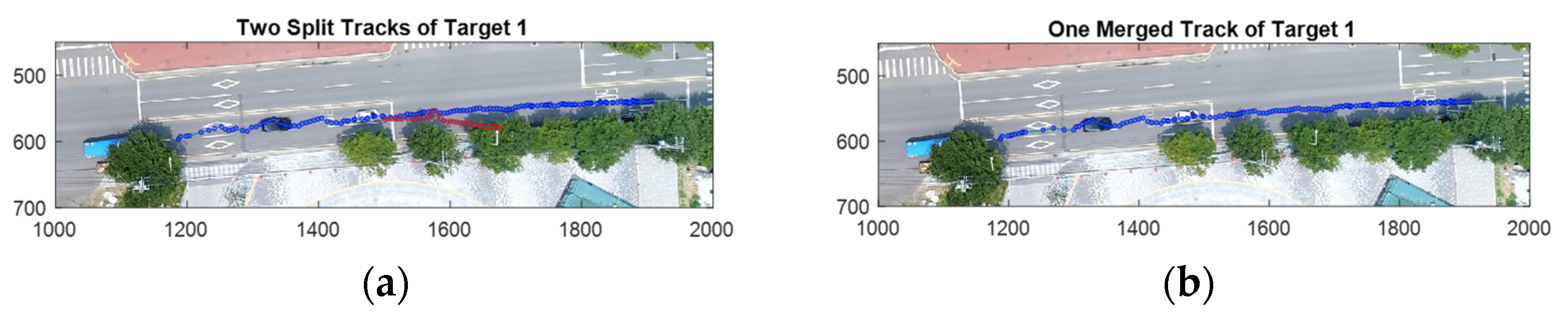

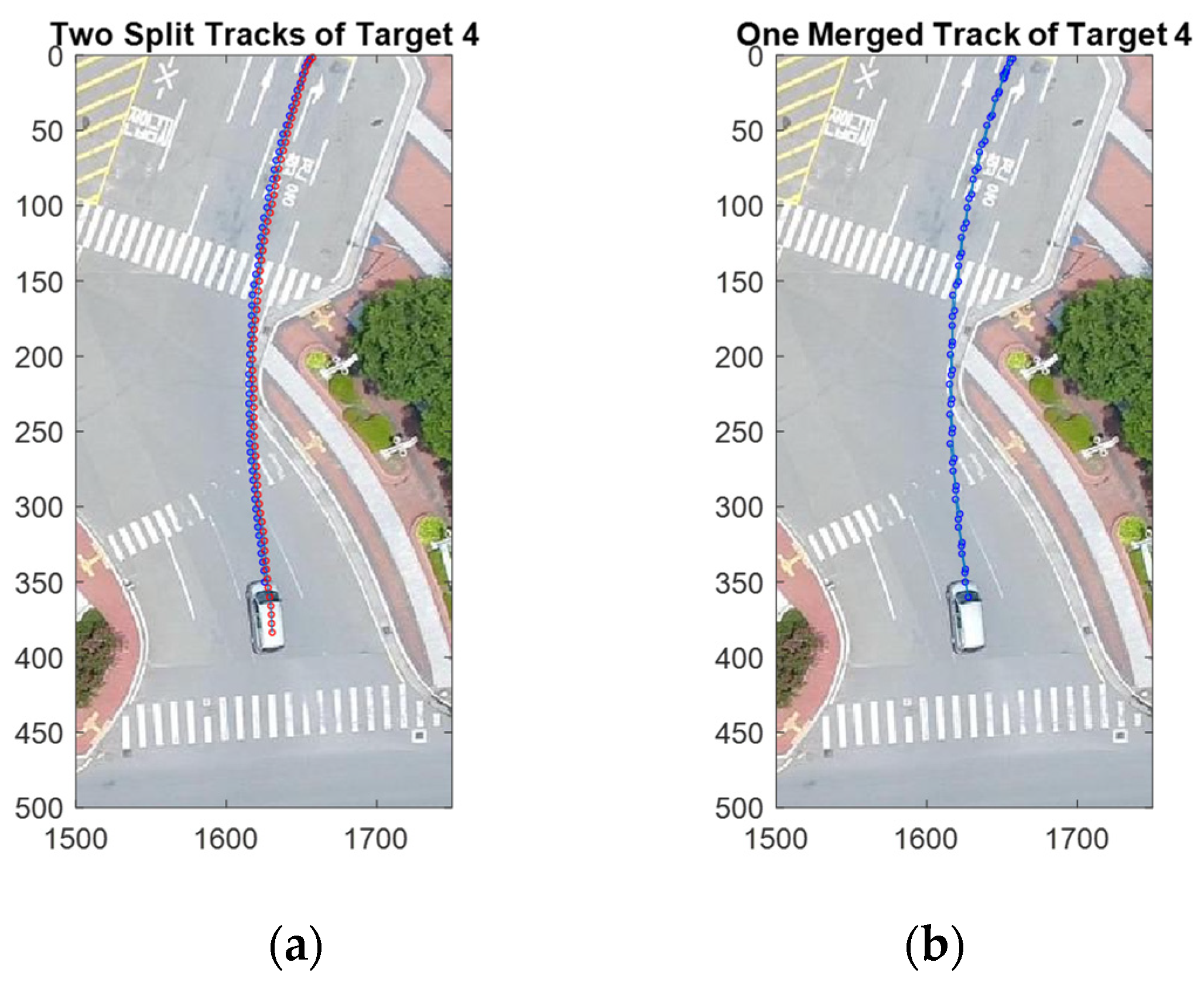

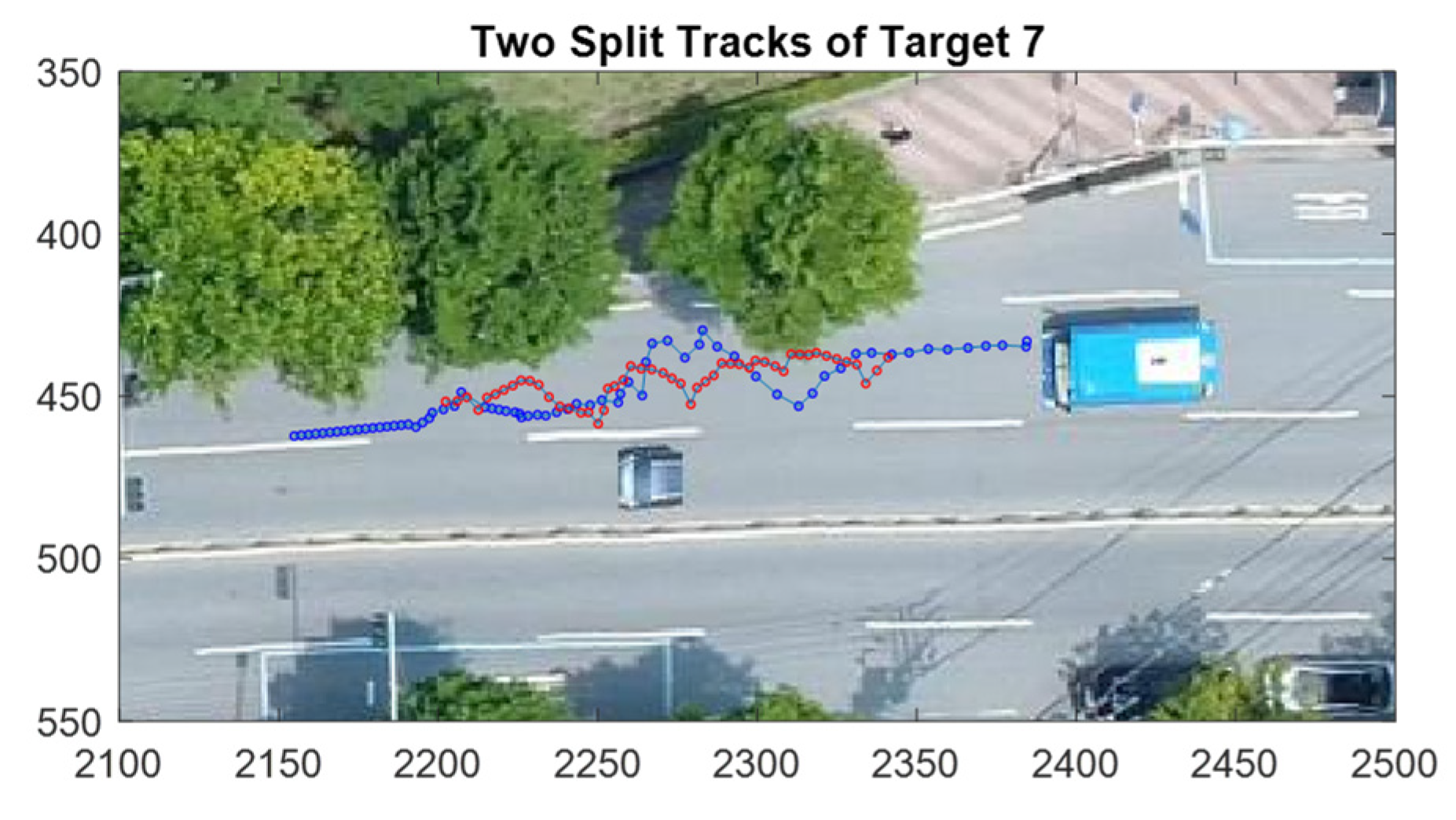

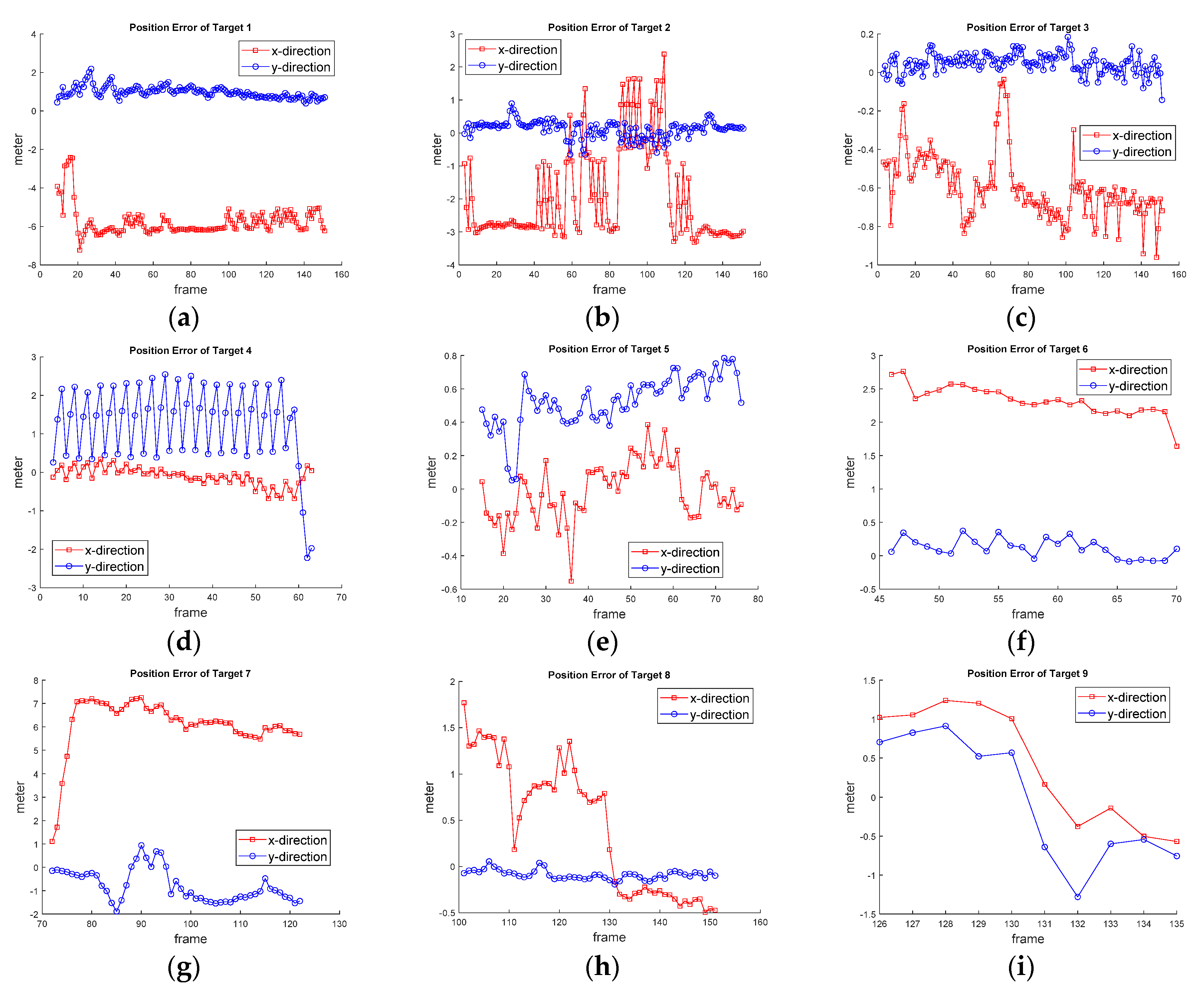

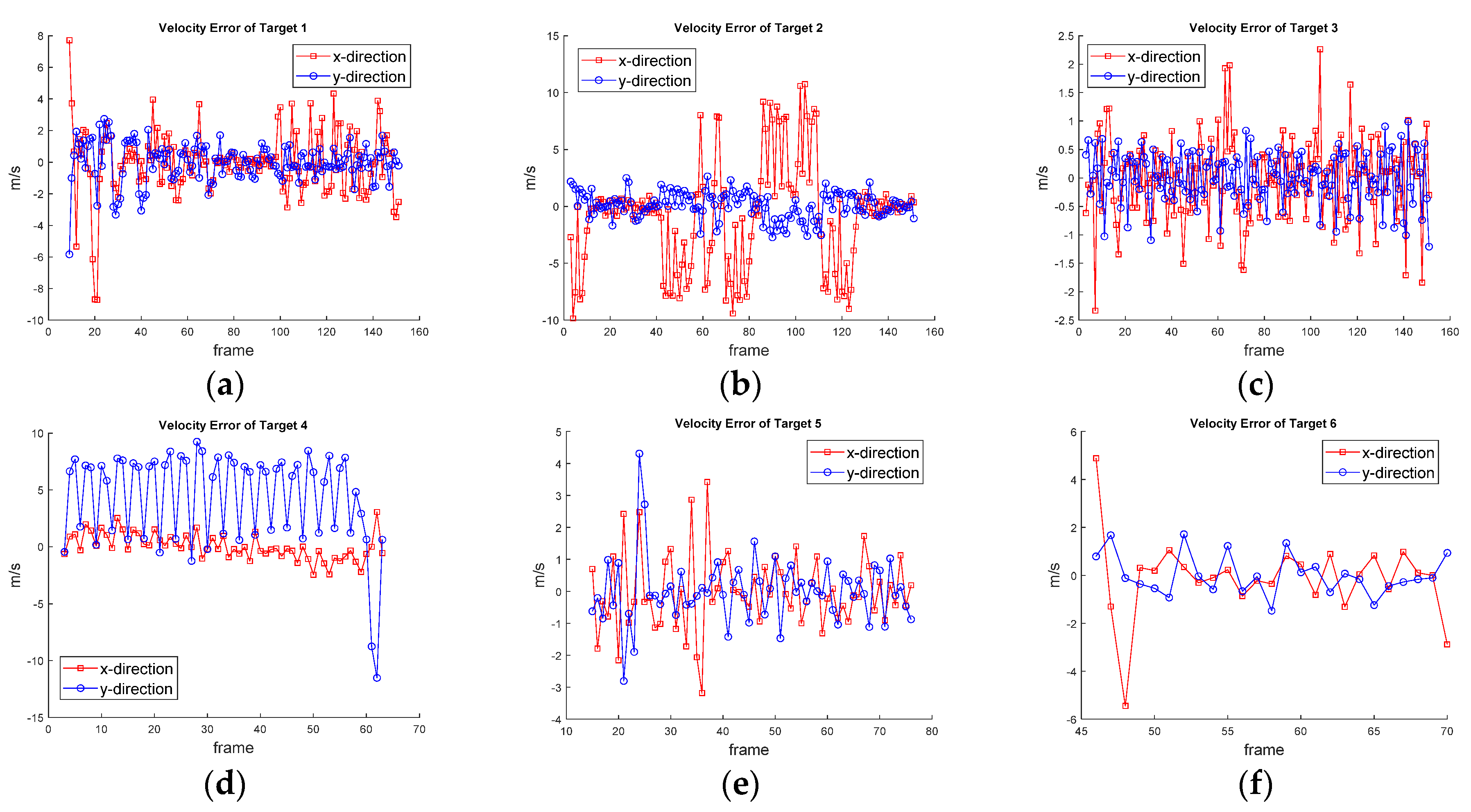

4.3.1. Tracking Results of Video 1

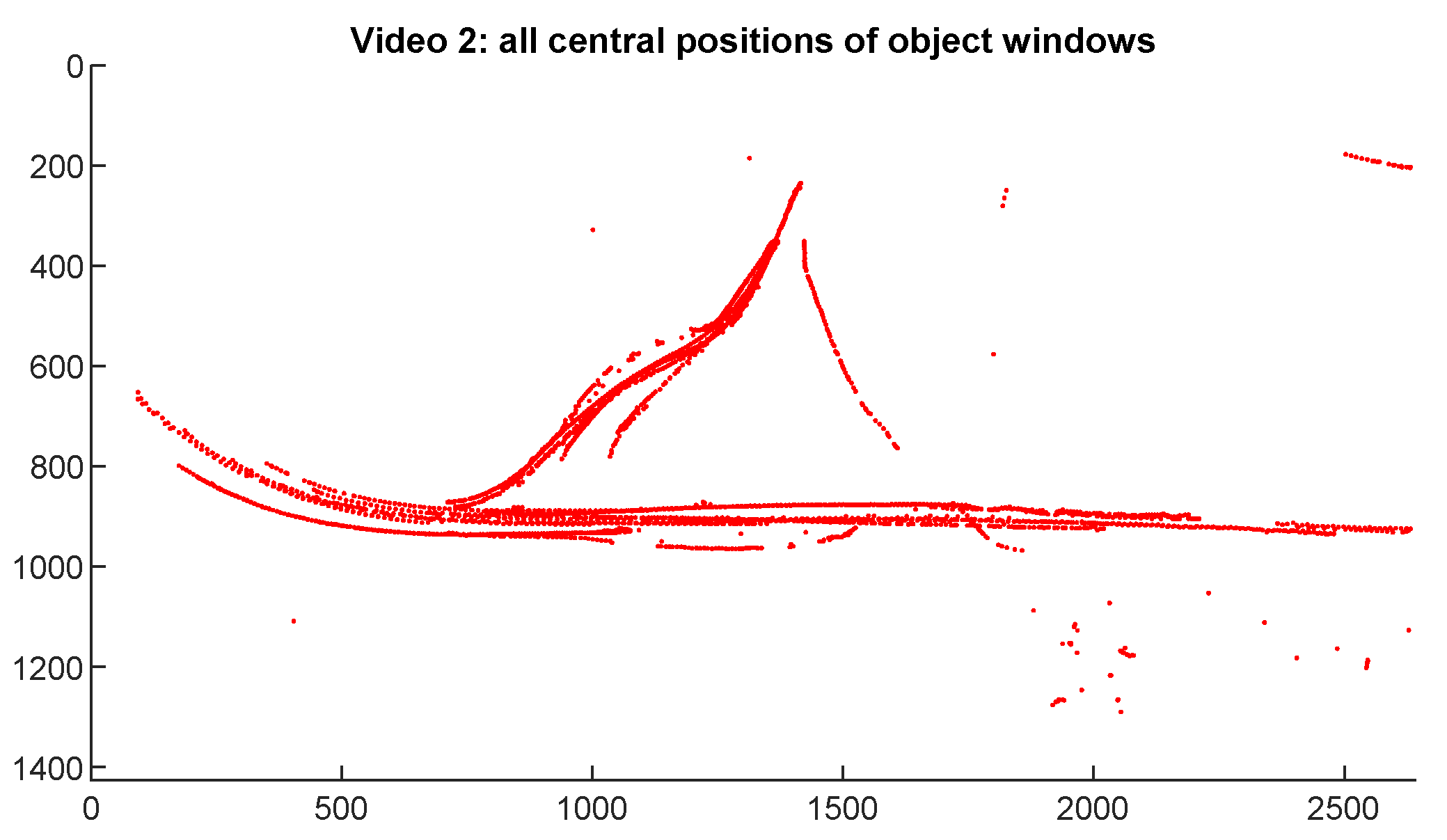

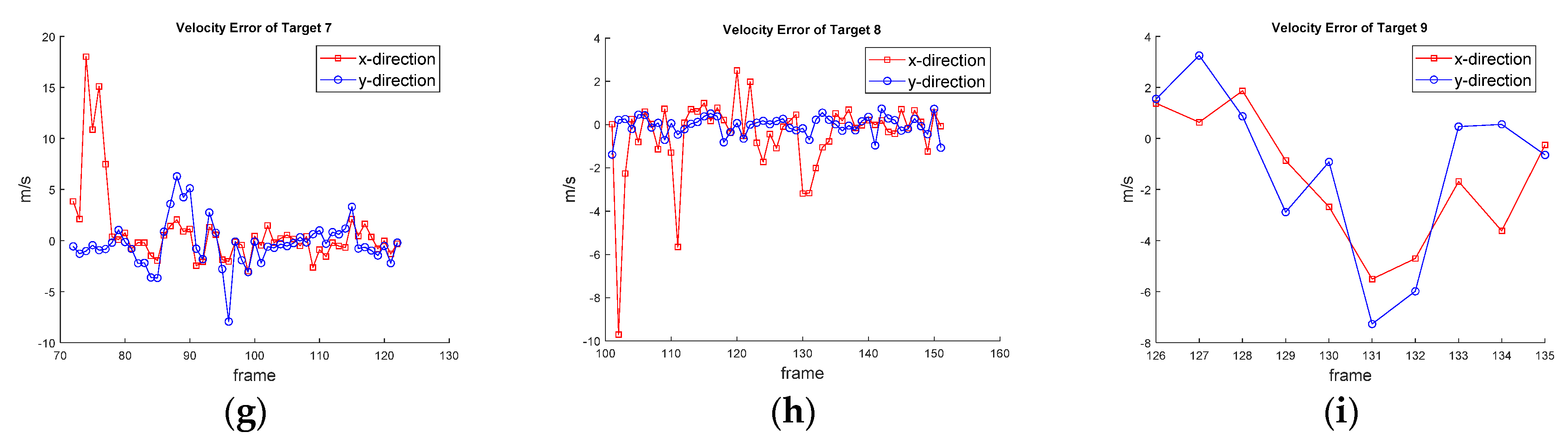

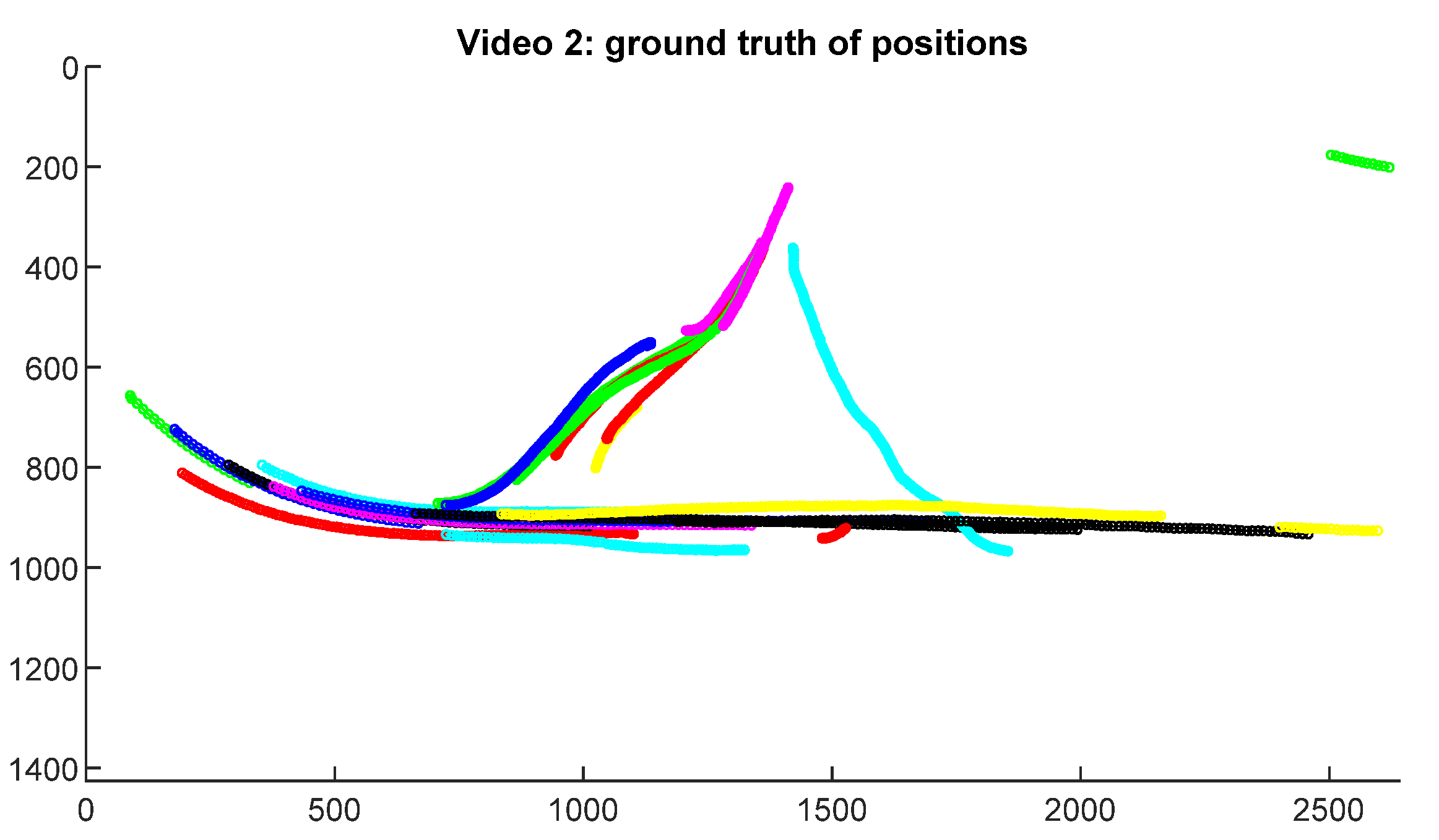

4.3.2. Tracking Results of Video 2

5. Discussion

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| | Video 1 | Video 2 |
|---|---|---|
| Multicopter/Camera | Phantom 4/Bundle | Inspire 2/Zenmuse X5 |
| Flying speed (m/s) | 5.1 | 5 |
| Flying time (s) | 15 | 24 |
| Flying direction | West→East | West→East→North |
| Actual frame number | 151 | 241 |
| Actual frame size (pixels) | 2048 × 1080 | 1920 × 1080 |
| Actual frame rate (fps) | 10 | 10 |
| Number of moving vehicles | 9 | 23 |

| | Video 1 | Video 2 |
|---|---|---|
| Vmax (m/s) for speed gating | 30 | 30 |
| (m/s²) | 30 | 10 |
| (m/s) | 0.5 | 1.5 |
| for measurement association | 8 | 10 |
| for track association | 170 | 70 |
| Valid track criteria (Minimum track life) | 9 | 9 |
| Track termination criteria (Maximum searching number) | 15 | 15 |

| | | Target 1 | Target 2 | Target 3 | Target 4 | Target 5 | Target 6 | Target 7 | Target 8 | Target 9 | Avg. |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Position (m) | Previous | 6.01 | 2.88 | 0.62 | 2.34 | 0.58 | 2.34 | 6.29 | 0.84 | 1.13 | 2.56 |
| | Proposed | 5.89 | 2.41 | | 1.64 | | | | | | 2.42 |
| Velocity (m/s) | Previous | 2.32 | 1.06 | 0.86 | 1.15 | 1.56 | 1.89 | 4.61 | 1.95 | 4.41 | 2.20 |
| | Proposed | 2.45 | 4.82 | | 6.12 | | | | | | 3.19 |

| | | Target 2 | Target 4 | Avg. |
|---|---|---|---|---|
| Position (m) | Previous | 2.88 | 2.34 | 2.61 |
| | Fused State only | 0.85 | 0.49 | 0.67 |
| Velocity (m/s) | Previous | 1.06 | 1.15 | 1.11 |
| | Fused State only | 2.33 | 1.21 | 1.77 |

| | Target 1 | Target 2 | Target 3 | Target 4 | Target 5 | Target 6 | Target 7 | Target 8 | Target 9 | Target 10 | Target 11 | Target 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Previous | 2.40 | 2.61 | 1.94 | 2.31 | 0.60 | 1.53 | 1.65 | 1.08 | 0.27 | 0.34 | 1.42 | 0.26 |
| Proposed | 1.41 | 2.55 | 0.84 | 0.47 | 0.66 | 0.31 | 0.66 | | | | | |

| | Target 13 | Target 14 | Target 15 | Target 16 | Target 17 | Target 18 | Target 19 | Target 20 | Target 21 | Target 22 | Target 23 | Avg. |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Previous | 1.55 | 2.73 | 0.21 | 1.92 | 3.04 | 2.94 | 1.61 | 0.96 | 4.01 | 1.29 | 0.99 | 1.64 |
| Proposed | 0.73 | 2.8 | 0.39 | 1.83 | 1.04 | 0.48 | 1.82 | 0.87 | 0.76 | 1.21 | | |

| | Target 1 | Target 2 | Target 3 | Target 4 | Target 5 | Target 6 | Target 7 | Target 8 | Target 9 | Target 10 | Target 11 | Target 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Previous | 0.95 | 1.27 | 1.36 | 0.89 | 1.85 | 2.39 | 5.97 | 1.05 | 1.12 | 0.95 | 1.89 | 0.81 |
| Proposed | 1.95 | 1.32 | 1.65 | 1.68 | 2.31 | 0.91 | 1.76 | | | | | |

| | Target 13 | Target 14 | Target 15 | Target 16 | Target 17 | Target 18 | Target 19 | Target 20 | Target 21 | Target 22 | Target 23 | Avg. |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Previous | 1.18 | 2.66 | 1.10 | 1.15 | 1.49 | 1.14 | 0.99 | 0.89 | 1.12 | 1.54 | 4.28 | 1.65 |
| Proposed | 2.17 | 4.03 | 1.30 | 1.31 | 2.29 | 1.43 | 3.10 | 1.74 | 4.15 | 1.97 | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yeom, S.; Nam, D.-H. Moving Vehicle Tracking with a Moving Drone Based on Track Association. Appl. Sci. 2021, 11, 4046. https://doi.org/10.3390/app11094046
