A Two-Phase Fashion Apparel Detection Method Based on YOLOv4

Abstract

1. Introduction

2. Related Work

2.1. Fashion Apparel Detection

2.2. YOLO

2.3. Transfer Learning

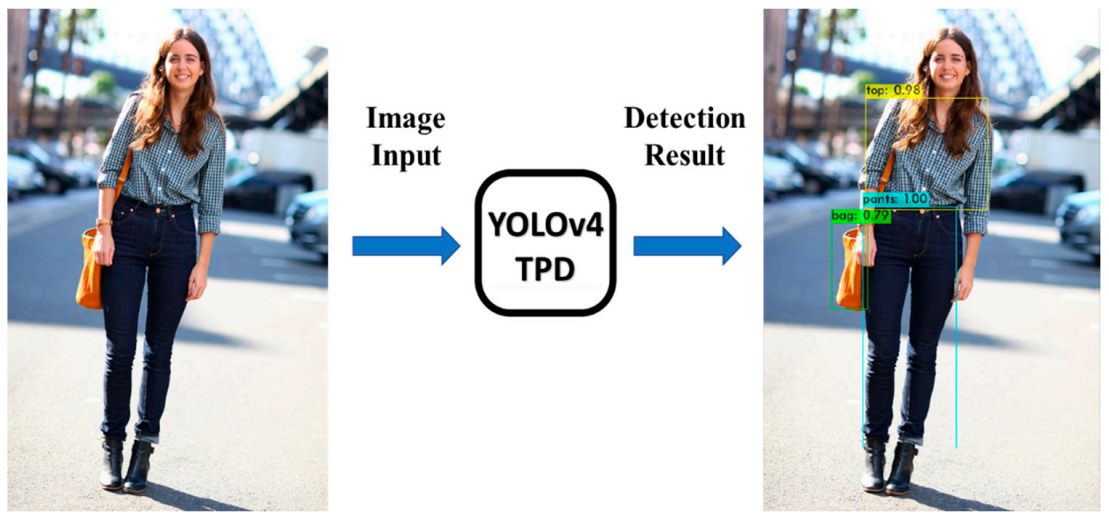

3. Proposed Method

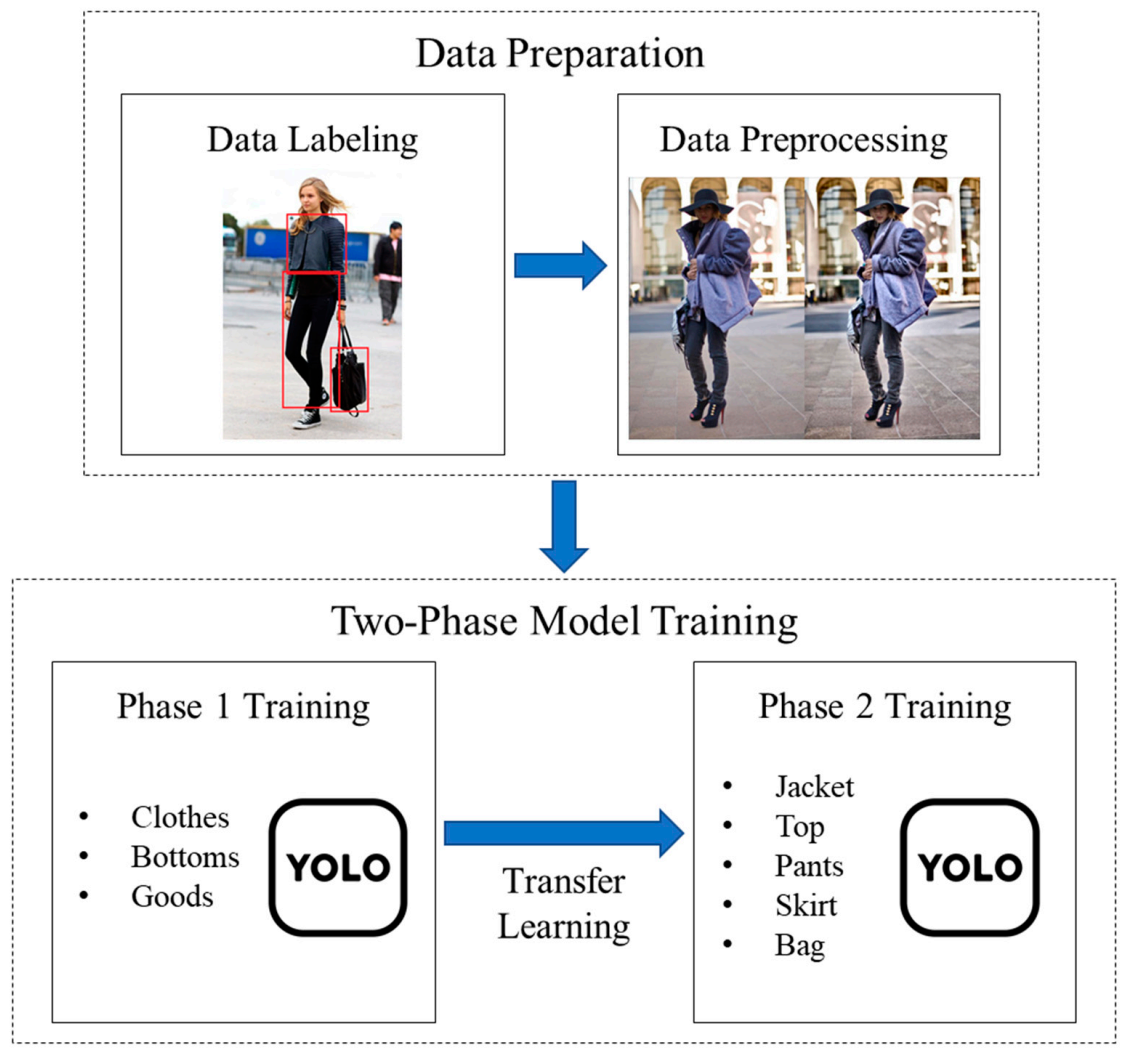

3.1. Data Preparation

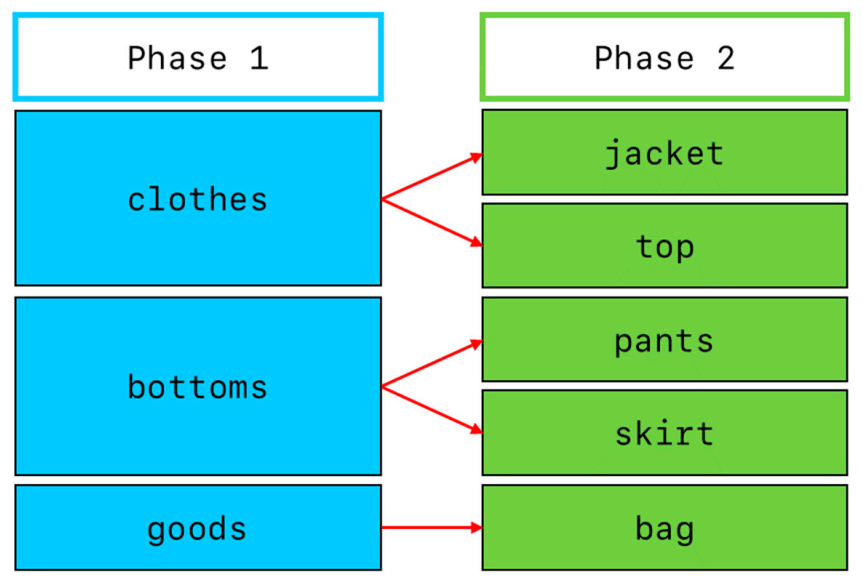

3.2. Two-Phase Model Training

4. Experimental Results

4.1. Experimental Environment

4.2. Dataset

4.3. Hyperparameter Setting

4.4. Evaluation Criterion

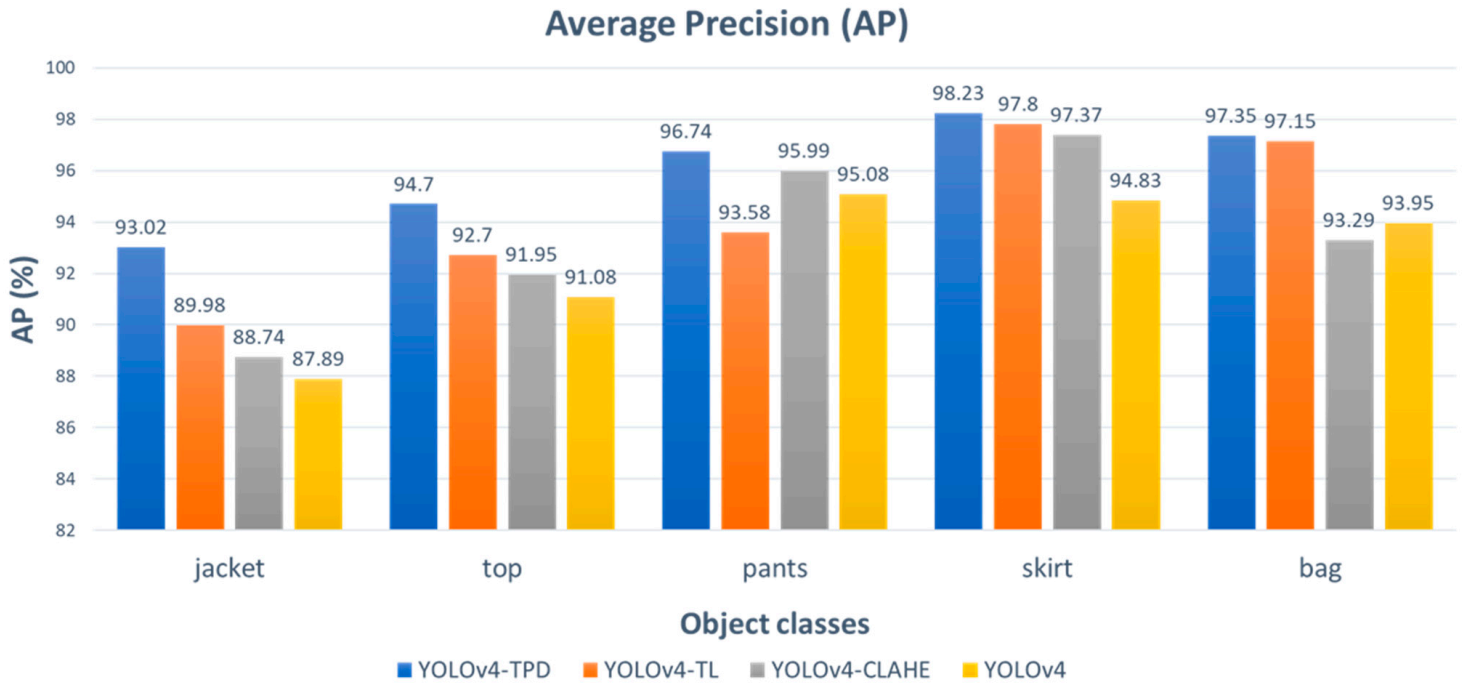

4.5. Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Transfer Learning | Source and Target Domains | Source and Target Tasks |
|---|---|---|
| Inductive Transfer Learning | the same | different but related |
| Transductive Transfer Learning | different but related | the same |
| Unsupervised Transfer Learning | different but related | different but related |

| Parameters | Phase 1 Model | Phase 2 Model |
|---|---|---|
| classes | 3 | 5 |
| iterations | 6000 | 10,000 |
| steps | 4800, 5400 | 1000, 8000, 9000 |
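The relationship between the parameters in the table above can be illustrated with a minimal sketch. It assumes the widely used Darknet conventions for YOLOv4, in which each detection head has (classes + 5) × 3 output filters and the learning-rate steps are placed at 80% and 90% of the total iterations. The class `PhaseConfig` and its method names are hypothetical and are not taken from the original training setup. Under these assumptions the sketch reproduces the Phase 1 steps (4800, 5400) and the last two Phase 2 steps (8000, 9000); it does not derive the additional step at 1000 listed for Phase 2.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class PhaseConfig:
    """Hypothetical container for the per-phase settings in the table."""
    name: str
    classes: int
    iterations: int  # assumed to correspond to Darknet's max_batches

    def head_filters(self, anchors_per_scale: int = 3) -> int:
        # Assumed Darknet convention: each anchor predicts 4 box
        # coordinates, 1 objectness score, and one score per class.
        return (self.classes + 5) * anchors_per_scale

    def default_steps(self) -> Tuple[int, int]:
        # Assumed convention: decay the learning rate at 80% and 90%
        # of the total number of iterations.
        return (self.iterations * 8 // 10, self.iterations * 9 // 10)


phase1 = PhaseConfig("phase 1", classes=3, iterations=6000)
phase2 = PhaseConfig("phase 2", classes=5, iterations=10000)

for cfg in (phase1, phase2):
    print(cfg.name, cfg.head_filters(), cfg.default_steps())
```

The only structural difference between the two phases in this sketch is the number of classes, which changes the width of each detection head.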

| | YOLOv4-TPD | YOLOv4-TL | YOLOv4-CLAHE | YOLOv4 |
|---|---|---|---|---|
| Two-phase | o | o | x | x |
| CLAHE | o | x | o | x |
| mAP | 96.01% | 94.24% | 93.47% | 92.57% |
| Recall | 0.94 | 0.90 | 0.93 | 0.90 |
| Precision | 0.85 | 0.81 | 0.78 | 0.81 |
| IoU | 72.36% | 66.91% | 67.50% | 68.07% |
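The metrics reported in the table above follow standard definitions, which can be sketched as follows. This is an illustrative implementation and not the evaluation code used in the experiments. The function names, the corner-format boxes `(x1, y1, x2, y2)`, and the example counts are assumptions made for this sketch.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap region, clamped at zero
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(tp, fp, fn):
    """Precision and recall from true-positive, false-positive,
    and false-negative counts."""
    precision = tp / (tp + fp) if (tp + fp) > 0 else 0.0
    recall = tp / (tp + fn) if (tp + fn) > 0 else 0.0
    return precision, recall


def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    total = precision + recall
    return 2 * precision * recall / total if total > 0 else 0.0


# Two 2x2 boxes that overlap in a 1x1 square (hypothetical coordinates)
print(iou((0, 0, 2, 2), (1, 1, 3, 3)))
# Hypothetical detection counts
print(precision_recall(tp=8, fp=2, fn=2))
# F1 derived from the precision and recall listed for YOLOv4-TPD
print(f1_score(0.85, 0.94))
```

A detection is usually counted as a true positive when its IoU with a ground-truth box exceeds a chosen threshold, so the precision and recall values depend on that threshold.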

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lee, C.-H.; Lin, C.-W. A Two-Phase Fashion Apparel Detection Method Based on YOLOv4. Appl. Sci. 2021, 11, 3782. https://doi.org/10.3390/app11093782
