Multi-Scale Safety Helmet Detection Based on SAS-YOLOv3-Tiny

Abstract

1. Introduction

2. The Principles of YOLOv3-Tiny

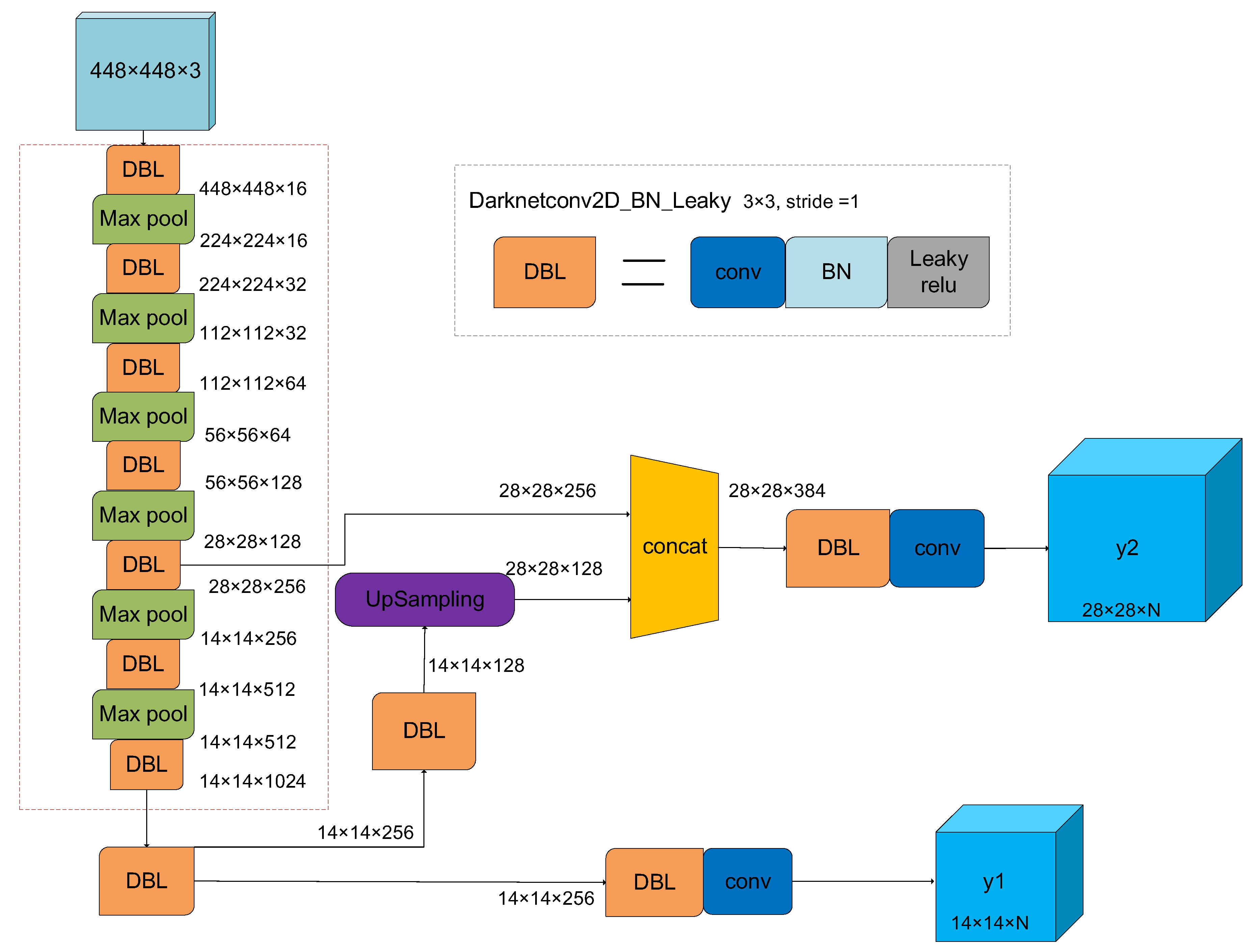

2.1. Network Architecture of YOLOv3-Tiny

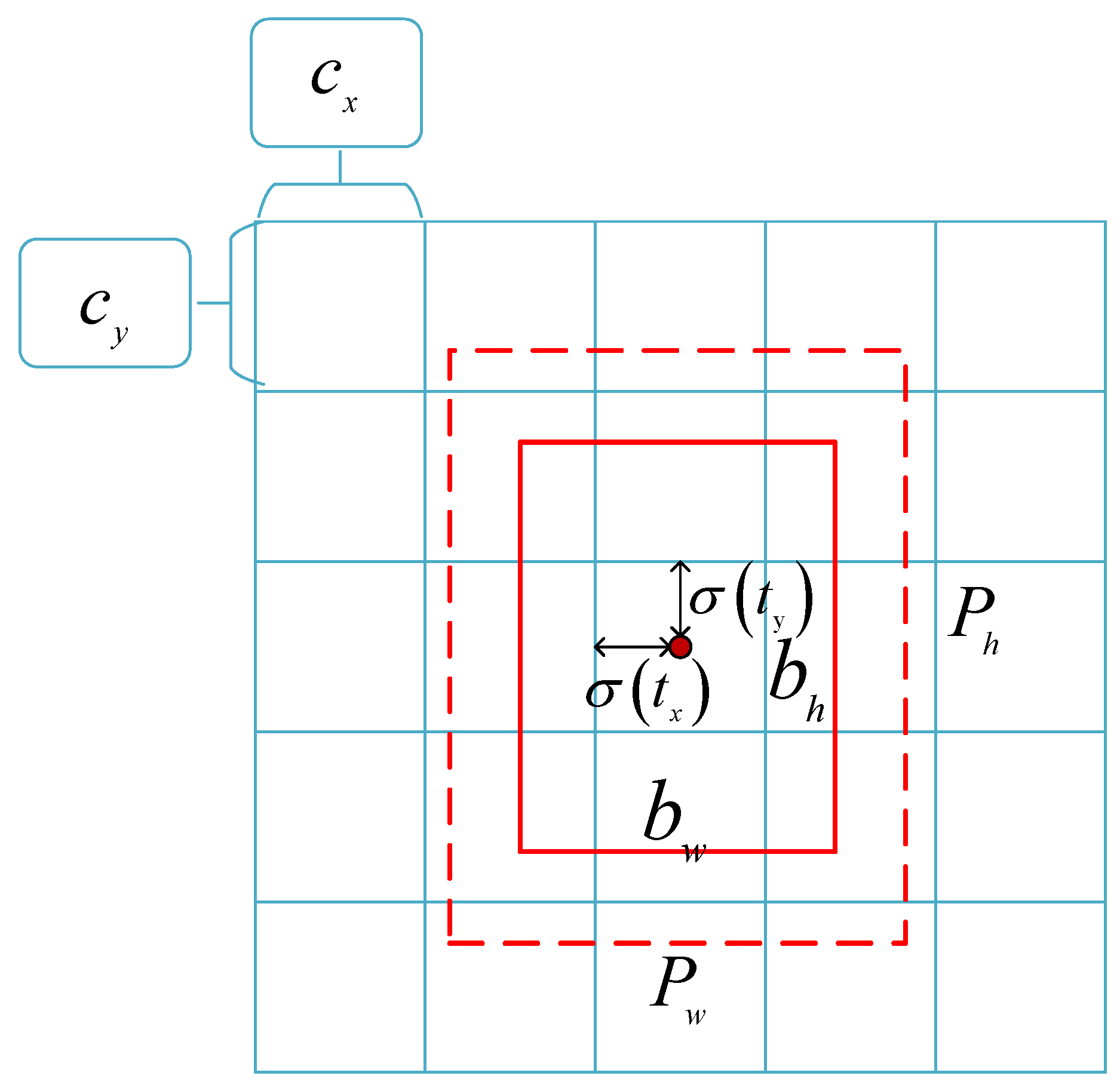

2.2. Bounding Box Prediction
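YOLOv3-tiny inherits the bounding-box parameterization of YOLOv3 (Redmon and Farhadi). For a grid cell with top-left index (c_x, c_y) and an anchor prior of size (p_w, p_h), the network predicts t_x, t_y, t_w, t_h, and the box is decoded as b_x = σ(t_x) + c_x, b_y = σ(t_y) + c_y, b_w = p_w·e^(t_w), b_h = p_h·e^(t_h). The minimal sketch below applies this decoding to a single prediction. The function name `decode_box`, the example anchor and the conversion of cell units to pixels via the stride are illustrative assumptions, not code from this paper.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def decode_box(t, cell, anchor, stride):
    """Decode raw YOLOv3-style offsets into a pixel-space box (cx, cy, w, h).

    t      : raw network outputs (tx, ty, tw, th)
    cell   : integer grid-cell indices (cx, cy)
    anchor : anchor prior (pw, ph), in pixels
    stride : input pixels per grid cell (e.g. 32 for a 13x13 map of a 416 input)
    """
    tx, ty, tw, th = t
    gx, gy = cell
    pw, ph = anchor
    # Centre: the sigmoid keeps the offset inside the responsible cell
    bx = (sigmoid(tx) + gx) * stride
    by = (sigmoid(ty) + gy) * stride
    # Size: log-space scaling of the anchor prior
    bw = pw * math.exp(tw)
    bh = ph * math.exp(th)
    return bx, by, bw, bh


# Zero offsets place the box at the centre of cell (6, 6) with the anchor's size
print(decode_box((0.0, 0.0, 0.0, 0.0), cell=(6, 6), anchor=(81, 82), stride=32))
# (208.0, 208.0, 81.0, 82.0)
```

The exponential on t_w and t_h keeps predicted widths and heights positive, and the sigmoid on t_x and t_y prevents a cell from predicting a centre that lies outside itself.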

3. SAS-YOLOv3-Tiny Algorithm

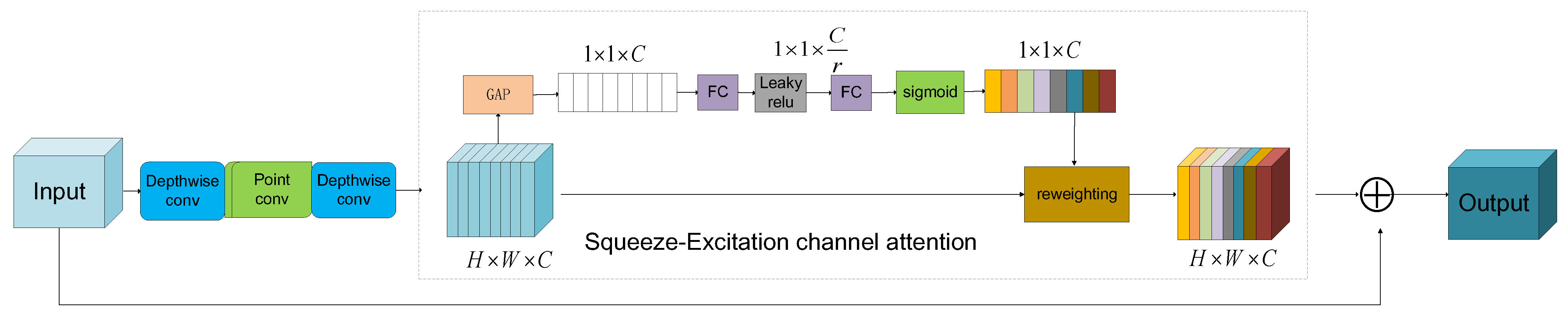

3.1. Sandglass-Residual Module Based on Channel Attention Mechanism
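The channel attention referred to in this heading appears in the ablation table as the SE stage, i.e. a squeeze-and-excitation block in the sense of Hu et al.: global average pooling squeezes each channel to one number, a two-layer bottleneck MLP with reduction ratio r produces per-channel weights in (0, 1), and the input channels are rescaled by those weights. The NumPy sketch below shows that computation on a single (C, H, W) feature map. The reduction ratio used here (r = 4), the omission of batch dimensions, and the function name `se_block` are assumptions. Where exactly the block sits inside the sandglass-residual module is also not specified here.

```python
import numpy as np


def se_block(x, w1, b1, w2, b2):
    """Squeeze-and-Excitation channel attention on a (C, H, W) feature map.

    w1: (C // r, C), b1: (C // r,)  squeeze (reduction) FC layer
    w2: (C, C // r), b2: (C,)       excitation (expansion) FC layer
    Returns the reweighted map and the per-channel weights.
    """
    # Squeeze: global average pooling gives one descriptor per channel
    z = x.mean(axis=(1, 2))                     # (C,)
    # Excitation: bottleneck MLP, ReLU then sigmoid gating
    s = np.maximum(w1 @ z + b1, 0.0)            # (C // r,)
    a = 1.0 / (1.0 + np.exp(-(w2 @ s + b2)))    # (C,)
    # Scale: multiply every channel of the input by its weight
    return x * a[:, None, None], a


rng = np.random.default_rng(0)
C, r = 16, 4
x = rng.standard_normal((C, 8, 8))
w1, b1 = rng.standard_normal((C // r, C)), np.zeros(C // r)
w2, b2 = rng.standard_normal((C, C // r)), np.zeros(C)
y, a = se_block(x, w1, b1, w2, b2)
print(y.shape, a.min() > 0, a.max() < 1)
```

Because the block only adds two small fully connected layers per stage, it costs few parameters. This is consistent with the small parameter increase between the SR-3s-SPP and SR-3s-SPP-SE rows of the ablation results.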

3.2. Improved Spatial Pyramid Pooling Module

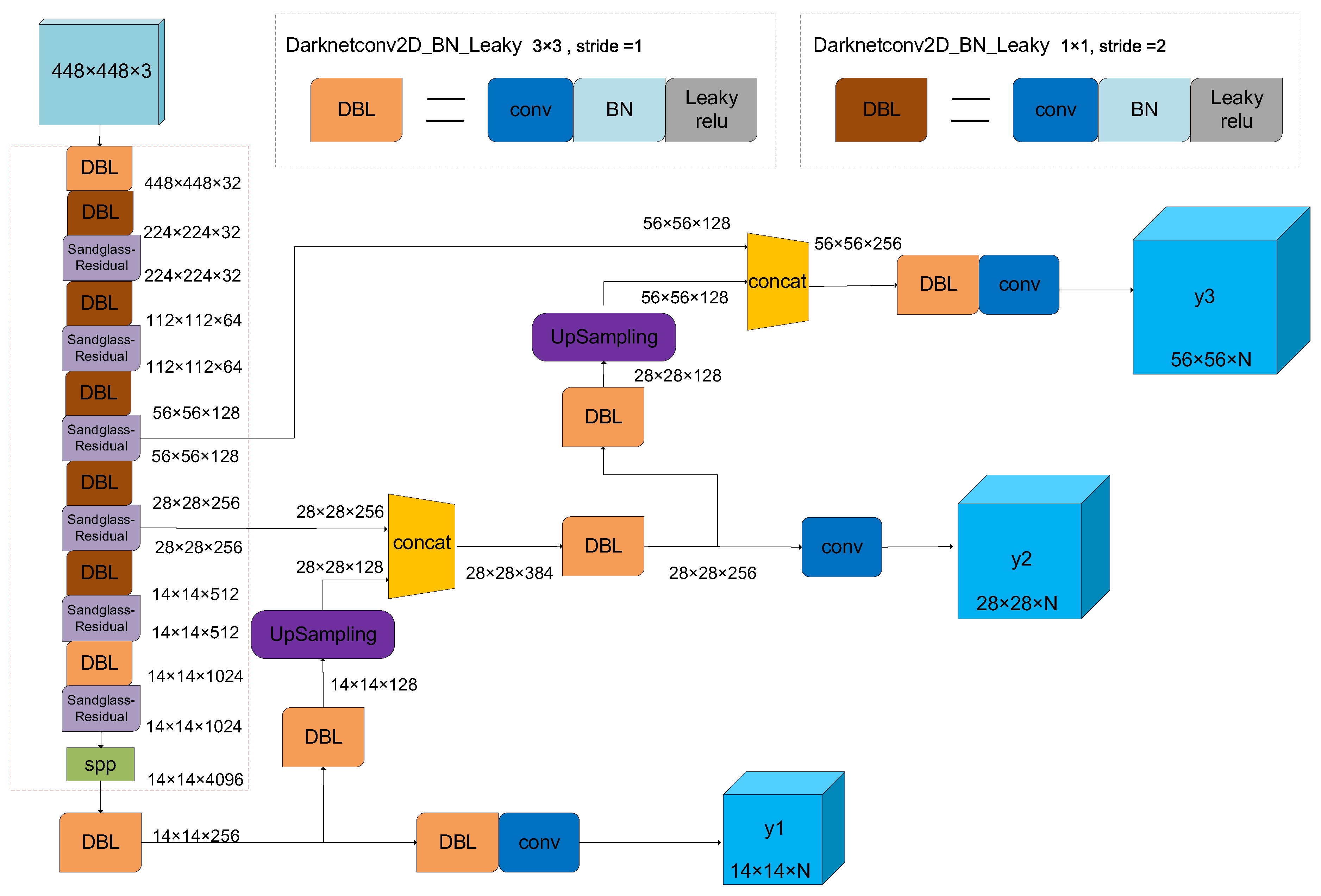
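For reference, the baseline that an improved SPP module builds on is the YOLO-style adaptation of spatial pyramid pooling (He et al.). In this adaptation, the feature map is max-pooled with several kernel sizes at stride 1 with "same" padding, and the pooled maps are concatenated with the input along the channel axis. This fuses receptive fields of different sizes without changing the spatial resolution. The NumPy sketch below assumes the kernel sizes 5, 9 and 13 of the common YOLOv3-SPP configuration. The specific improvements made in SAS-YOLOv3-tiny are not reproduced here, and the function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def maxpool_same(x, k):
    """Stride-1 max pooling with 'same' padding on a float (C, H, W) array."""
    p = k // 2
    # Pad with -inf so padded cells never win the max
    xp = np.pad(x, ((0, 0), (p, p), (p, p)), constant_values=-np.inf)
    windows = sliding_window_view(xp, (k, k), axis=(1, 2))  # (C, H, W, k, k)
    return windows.max(axis=(-2, -1))


def spp(x, kernels=(5, 9, 13)):
    """YOLO-style SPP: concatenate the input with several pooled copies."""
    x = x.astype(float)
    return np.concatenate([x] + [maxpool_same(x, k) for k in kernels], axis=0)


x = np.random.default_rng(0).standard_normal((32, 13, 13))
print(spp(x).shape)  # (128, 13, 13)
```

With three kernels, the channel count grows fourfold. A 1×1 convolution usually follows to bring it back down.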

3.3. Network Architecture of SAS-YOLOv3-Tiny
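The ablation table lists a "3-Scale" variant alongside the two-scale YOLOv3-tiny baseline. The arithmetic below rests on several assumptions: the default 416 × 416 input, output strides of 32 and 16 for the baseline plus a third head at stride 8, three anchors per scale, and the four classes in the comparison table (Helmet, Cap, Nowear, Safety-Cap). Under these assumptions, it gives the size of each detection head and the number of candidate boxes. The stride of the added head and the function names are illustrative.

```python
def head_shapes(input_size=416, strides=(32, 16), anchors_per_scale=3, num_classes=4):
    """Grid size and output channels of each YOLO detection head."""
    # Per anchor: 4 box offsets + 1 objectness score + class scores
    channels = anchors_per_scale * (5 + num_classes)
    return [(input_size // s, input_size // s, channels) for s in strides]


def num_candidate_boxes(shapes, anchors_per_scale=3):
    """Total number of boxes predicted over all heads."""
    return sum(h * w * anchors_per_scale for h, w, _ in shapes)


two_scale = head_shapes(strides=(32, 16))
three_scale = head_shapes(strides=(32, 16, 8))
print(two_scale, num_candidate_boxes(two_scale))      # [(13, 13, 27), (26, 26, 27)] 2535
print(three_scale, num_candidate_boxes(three_scale))  # [(13, 13, 27), (26, 26, 27), (52, 52, 27)] 10647
```

Under these assumptions, the finer 52 × 52 head contributes most of the candidate boxes. This is where small, distant helmets would be detected.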

3.4. Improved Loss Function
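The last ablation scheme adds CIoU, so the improved regression loss is presumably the Complete-IoU loss of Zheng et al. For a predicted box and a ground-truth box, CIoU adds a normalised centre-distance penalty and an aspect-ratio consistency term to 1 - IoU:

L_CIoU = 1 - IoU + ρ²(b, b^gt) / c² + α·v,

Here ρ is the distance between the box centres and c is the diagonal of the smallest enclosing box. The aspect-ratio term is v = (4/π²)(arctan(w^gt/h^gt) - arctan(w/h))², and its weight is α = v / ((1 - IoU) + v). The pure-Python sketch below evaluates this for one pair of boxes. The corner format (x1, y1, x2, y2), the `eps` guard against division by zero, and the function name are assumptions.

```python
import math


def ciou_loss(pred, target, eps=1e-9):
    """CIoU loss for two boxes given as (x1, y1, x2, y2)."""
    px1, py1, px2, py2 = pred
    tx1, ty1, tx2, ty2 = target
    pw, ph = px2 - px1, py2 - py1
    tw, th = tx2 - tx1, ty2 - ty1

    # Intersection over union
    iw = max(0.0, min(px2, tx2) - max(px1, tx1))
    ih = max(0.0, min(py2, ty2) - max(py1, ty1))
    inter = iw * ih
    union = pw * ph + tw * th - inter
    iou = inter / (union + eps)

    # Squared distance between the two box centres (rho^2)
    rho2 = ((px1 + px2) / 2 - (tx1 + tx2) / 2) ** 2 + ((py1 + py2) / 2 - (ty1 + ty2) / 2) ** 2
    # Squared diagonal of the smallest enclosing box (c^2)
    cw = max(px2, tx2) - min(px1, tx1)
    ch = max(py2, ty2) - min(py1, ty1)
    c2 = cw ** 2 + ch ** 2 + eps

    # Aspect-ratio consistency term v and its trade-off weight alpha
    v = (4 / math.pi ** 2) * (math.atan(tw / (th + eps)) - math.atan(pw / (ph + eps))) ** 2
    alpha = v / ((1 - iou) + v + eps)

    return 1 - iou + rho2 / c2 + alpha * v


print(round(ciou_loss((0, 0, 2, 2), (1, 1, 3, 3)), 4))  # 0.9683
```

Unlike plain IoU loss, the centre-distance term still yields a signal when boxes do not overlap. For example, two disjoint unit squares give a loss above 1 rather than a flat 1.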

4. Experiments and Results Analysis

4.1. Dataset and Evaluation Criteria

4.1.1. Dataset

4.1.2. Evaluation Criteria
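The result tables report precision (P), recall (R), F1 and mean average precision (mAP). Their standard definitions are given below. The reported values are consistent with these definitions: each mAP in the comparison table equals the mean of the four per-class AP columns to within rounding. TP, FP and FN count detections matched or unmatched at an IoU threshold that is not stated here (0.5 is only the common choice), and N = 4 is the number of classes.

```latex
P = \frac{TP}{TP + FP}, \qquad
R = \frac{TP}{TP + FN}, \qquad
F_1 = \frac{2PR}{P + R}

AP_i = \int_0^1 P_i(R)\, \mathrm{d}R, \qquad
mAP = \frac{1}{N} \sum_{i=1}^{N} AP_i
```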

4.2. Experimental Progress and Result Analysis

4.2.1. Training Setting

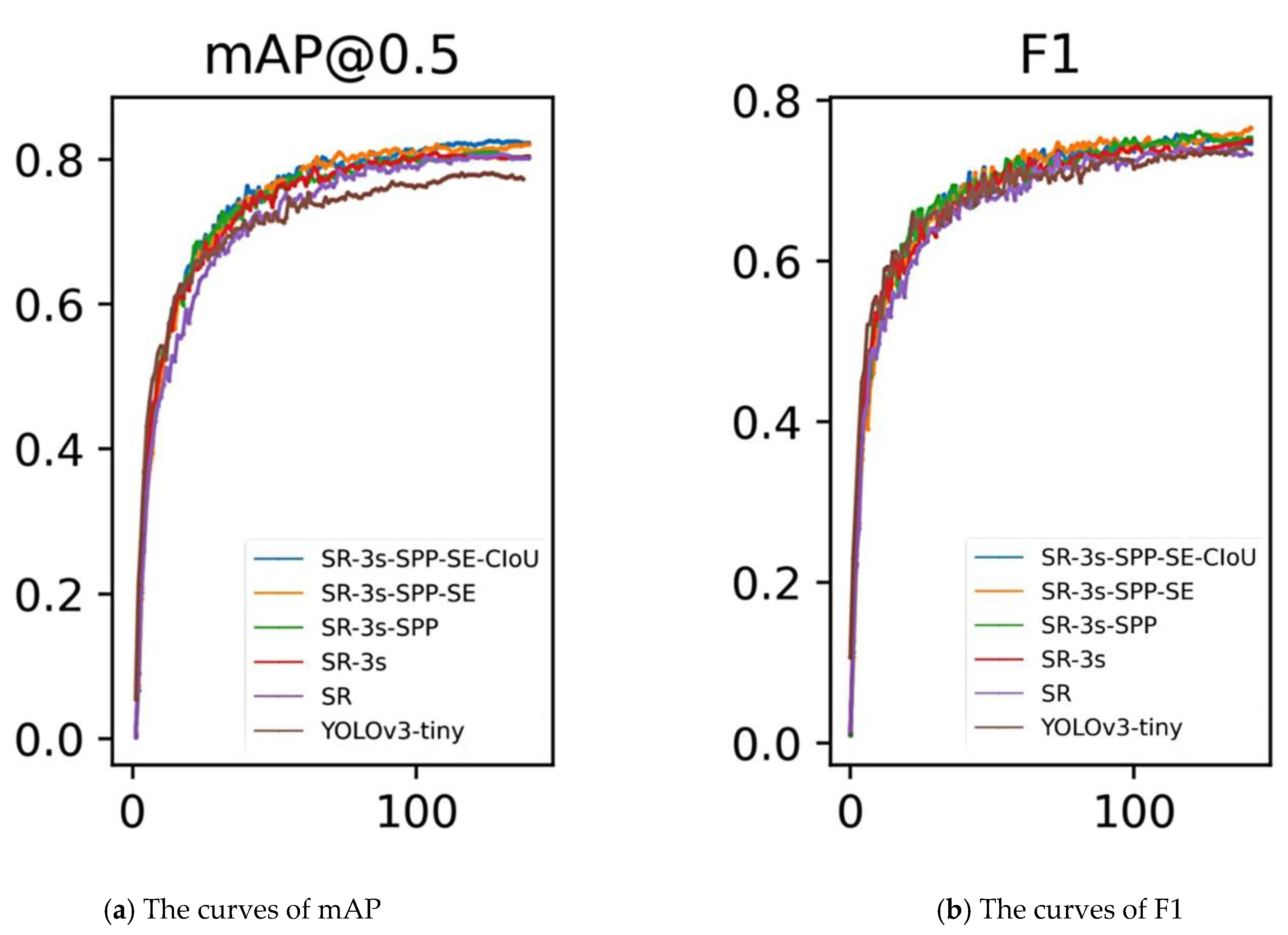

4.2.2. Ablation Experiments

4.2.3. Result Comparison with Other Detection Models

4.2.4. Detection Results under Application Scenarios

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Input | Operation | Output |
|---|---|---|
| | Dwise conv, leaky | |
| | conv, linear | |
| | conv, leaky | |
| | Dwise conv, linear | |
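The table lists the layer order of the sandglass-residual module: depthwise conv with leaky activation, linear 1×1 conv, 1×1 conv with leaky activation, and linear depthwise conv. This matches the sandglass block of MobileNeXt (Daquan et al.) with leaky activations in place of ReLU6. In that design, the shortcut joins the wide, high-dimensional ends rather than the narrow bottleneck. The NumPy sketch below follows the table's order. It assumes 3×3 depthwise kernels, a channel reduction factor t between the two 1×1 convolutions, a leaky slope of 0.1, an identity shortcut, and no batch normalization. All function names are hypothetical.

```python
import numpy as np


def leaky_relu(x, slope=0.1):
    return np.where(x > 0, x, slope * x)


def depthwise_conv3x3(x, w):
    """3x3 depthwise convolution, stride 1, zero padding 1.
    x: (C, H, W) feature map; w: (C, 3, 3), one filter per channel."""
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros(x.shape, dtype=float)
    for i in range(3):
        for j in range(3):
            out += w[:, i, j, None, None] * xp[:, i:i + h, j:j + wd]
    return out


def pointwise_conv(x, w):
    """1x1 convolution: w has shape (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", w, x)


def sandglass_residual(x, dw1, pw_reduce, pw_expand, dw2):
    y = leaky_relu(depthwise_conv3x3(x, dw1))      # Dwise conv, leaky
    y = pointwise_conv(y, pw_reduce)               # conv, linear (C -> C/t)
    y = leaky_relu(pointwise_conv(y, pw_expand))   # conv, leaky  (C/t -> C)
    y = depthwise_conv3x3(y, dw2)                  # Dwise conv, linear
    return x + y                                   # identity shortcut


def sandglass_params(c, t):
    """Weights in the block (no biases/BN): two 3x3 depthwise + two 1x1 convs."""
    return 2 * 9 * c + 2 * c * (c // t)


rng = np.random.default_rng(0)
C, t = 32, 4
x = rng.standard_normal((C, 13, 13))
out = sandglass_residual(
    x,
    dw1=rng.standard_normal((C, 3, 3)),
    pw_reduce=rng.standard_normal((C // t, C)),
    pw_expand=rng.standard_normal((C, C // t)),
    dw2=rng.standard_normal((C, 3, 3)),
)
print(out.shape)  # (32, 13, 13)
```

For C = 32 and t = 4, `sandglass_params` gives 1088 weights, against 9 × 32 × 32 = 9216 for one standard 3×3 convolution. Savings of this kind are in line with the drop in total parameters from YOLOv3-tiny to the SR scheme in the ablation results.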

| Scheme | SR | 3-Scale | SPP | SE | CIoU |
|---|---|---|---|---|---|
| SR | √ | | | | |
| SR-3s | √ | √ | | | |
| SR-3s-SPP | √ | √ | √ | | |
| SR-3s-SPP-SE | √ | √ | √ | √ | |
| SR-3s-SPP-SE-CIoU (Ours) | √ | √ | √ | √ | √ |

| Model | P/% | R/% | mAP/% | F1/% | Weight/MB | Total Parameters/10⁶ | Detection Time/ms |
|---|---|---|---|---|---|---|---|
| YOLOv3-tiny | 70.7 | 73.3 | 73.7 | 71.9 | 69.5 | 8.67681 | 2.5 |
| SR | 69.3 | 77.9 | 78.2 | 73.3 | 36.5 | 4.53490 | 2.8 |
| SR-3s | 69.6 | 79.6 | 80.2 | 74.2 | 39.2 | 4.86658 | 3.0 |
| SR-3s-SPP | 70.3 | 80.4 | 80.1 | 75.0 | 45.4 | 5.65301 | 3.1 |
| SR-3s-SPP-SE | 72.3 | 80.2 | 81.2 | 76.0 | 46.9 | 5.82773 | 3.2 |
| SR-3s-SPP-SE-CIoU (Ours) | 73.2 | 80.2 | 81.6 | 76.4 | 46.9 | 5.82773 | 3.2 |
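As a quick consistency check on the ablation results, F1 can be recomputed as the harmonic mean of the reported P and R. For every scheme, the recomputed value lies within 0.15 points of the reported F1. The largest gap is for the final model (76.54 vs. 76.4). The residual may come from rounding or from F1 being averaged differently, for example per class, which the table does not specify. The dictionary below simply copies the table's values.

```python
# (P, R, reported F1) per scheme, copied from the ablation results table (all in %)
ABLATION = {
    "YOLOv3-tiny": (70.7, 73.3, 71.9),
    "SR": (69.3, 77.9, 73.3),
    "SR-3s": (69.6, 79.6, 74.2),
    "SR-3s-SPP": (70.3, 80.4, 75.0),
    "SR-3s-SPP-SE": (72.3, 80.2, 76.0),
    "SR-3s-SPP-SE-CIoU(Ours)": (73.2, 80.2, 76.4),
}


def f1(p, r):
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r)


for name, (p, r, reported) in ABLATION.items():
    print(f"{name:<26} recomputed F1 = {f1(p, r):5.2f}  reported = {reported}")
```

The same table puts the final model at 5.83 × 10⁶ parameters against 8.68 × 10⁶ for YOLOv3-tiny, about 33% fewer, while mAP rises from 73.7% to 81.6%.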

| Algorithm | AP/% Helmet | AP/% Cap | AP/% Nowear | AP/% Safety-Cap | P/% | R/% | mAP/% | F1/% | Weight/MB | Total Parameters/10⁷ | Detection Time/ms |
|---|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv3-tiny | 70.9 | 74.0 | 76.2 | 77.5 | 71.0 | 75.4 | 74.6 | 72.9 | 69.5 | 0.86768 | 2.5 |
| YOLOv4-tiny | 80.4 | 74.7 | 80.8 | 79.8 | 72.6 | 80.0 | 78.9 | 76.0 | 47.2 | 0.58779 | 1.8 |
| YOLOv3 | 84.2 | 81.2 | 92.5 | 86.6 | 75.8 | 85.6 | 86.1 | 80.3 | 492.8 | 6.15399 | 8.6 |
| YOLOv4 | 86.7 | 80.9 | 92.7 | 89.3 | 79.4 | 88.6 | 87.4 | 83.7 | 420.7 | 5.24798 | 7.4 |
| SAS-YOLOv3-tiny | 78.2 | 73.3 | 87.8 | 81.9 | 71.6 | 80.9 | 80.3 | 75.2 | 46.9 | 0.58277 | 3.2 |
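Reading the first four numeric columns of the comparison table as per-class AP for Helmet, Cap, Nowear and Safety-Cap, each reported mAP equals the unweighted mean of those four values to within 0.05 points, i.e. to within rounding. The short check below reproduces that. The dictionary copies the table's values, and `mean_ap` is a hypothetical helper.

```python
# Per-class AP (%) for Helmet, Cap, Nowear, Safety-Cap, and the reported mAP (%)
COMPARISON = {
    "YOLOv3-tiny": ((70.9, 74.0, 76.2, 77.5), 74.6),
    "YOLOv4-tiny": ((80.4, 74.7, 80.8, 79.8), 78.9),
    "YOLOv3": ((84.2, 81.2, 92.5, 86.6), 86.1),
    "YOLOv4": ((86.7, 80.9, 92.7, 89.3), 87.4),
    "SAS-YOLOv3-tiny": ((78.2, 73.3, 87.8, 81.9), 80.3),
}


def mean_ap(per_class_ap):
    """mAP as the unweighted mean of the per-class average precisions."""
    return sum(per_class_ap) / len(per_class_ap)


for name, (aps, reported) in COMPARISON.items():
    print(f"{name:<16} mean of class APs = {mean_ap(aps):6.3f}  reported mAP = {reported}")
```

The per-class view also shows where SAS-YOLOv3-tiny gains most over YOLOv3-tiny: Helmet (+7.3) and Nowear (+11.6), while Cap AP is slightly lower (-0.7).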

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cheng, R.; He, X.; Zheng, Z.; Wang, Z. Multi-Scale Safety Helmet Detection Based on SAS-YOLOv3-Tiny. Appl. Sci. 2021, 11, 3652. https://doi.org/10.3390/app11083652