Abstract

In this research, we introduce a classification procedure based on rule induction and fuzzy reasoning. The classifier generalizes attribute information to handle uncertainty, which often occurs in real data. To induce fuzzy rules, we define the corresponding fuzzy information system. A transformation of the derived rules into interval type-2 fuzzy rules is provided as well. The fuzzification applied is optimized with respect to the footprint of uncertainty of the corresponding type-2 fuzzy sets. The classification process is related to a Mamdani type fuzzy inference. The method proposed was evaluated by the F-score measure on benchmark data.

1. Introduction

Machine learning and its applications are growing rapidly, and classification is one of the major machine learning problems. As data come in many kinds, robust techniques have become essential, especially in real-world applications [1,2]. Moreover, data are often not well defined, vague, or imbalanced, which is an additional obstacle. Fuzzy techniques are a natural way to handle such data. We may divide these techniques into two primary groups: those related to the classical type-1 fuzzy sets introduced by Zadeh [3], and those related to the newer concept of type-2 fuzzy sets [4,5]. In practice, interval type-2 fuzzy sets [6], a particular case of type-2 fuzzy sets, are commonly used for their reduced computational cost and easy implementation. Moreover, researchers have reported that interval type-2 fuzzy concepts handle uncertainties better than type-1 fuzzy approaches [6,7,8,9,10,11]. Interval type-2 fuzzy sets are effective because they generalise better when it is difficult to determine the exact membership functions of the fuzzy sets applied.

On the other hand, data discovery techniques, understood as the computational process of discovering patterns in data, are widely used to induce knowledge. Information systems and rough sets, introduced by Pawlak [12,13,14], are applied to represent knowledge. The combination of fuzzy techniques and data discovery therefore seems well suited to the analysis of complex data such as medical data [15,16].

This is how the concept of fuzzy information systems emerged. If each attribute of an information system is related to a fuzzy set, a fuzzy information system is defined [17]. Fuzzy information systems find applications in the field of decision-making [18,19]. In recent research, a novel multi-criteria approach with application in investments was proposed [20]. Many other applications can be found in rule extraction [19,21], feature selection, and classification [22,23,24,25]. The mathematical properties of fuzzy information systems are under consideration as well [17,26]. The concept of rough sets was extended by analyzing the properties of lower and upper approximations of fuzzy sets [27,28,29,30,31], with corresponding practical applications, for example in machine learning [32] and in medical image analysis as an image segmentation approach [33].

In our research, we introduce a fuzzy information system which induces type-1 fuzzy rules and transforms them into interval type-2 fuzzy rules. We assume the information function values to be fuzzy sets, while still applying the original definitions of the indiscernibility relation and rough sets given by Pawlak. Our experiments investigate different fuzzification procedures in order to determine the optimal fuzzy classifier.

2. Materials and Methods

2.1. Materials

In our research, we applied the proposed classification method to well-known benchmark data [34] for binary classification. The benchmark details are given in Table 1.

Table 1.

The data sets used.

2.2. Methods

This section explains the preliminaries of the type-2 Mamdani fuzzy model [4,40] and the rule induction procedure for information systems [41,42]. Next, we combine information systems with type-2 fuzzy inference to introduce a classification procedure.

2.2.1. Fuzzy Sets and Interval Type-2 Fuzzy Sets

A fuzzy set, or type-1 fuzzy set, F consists of a domain X of real numbers together with a function μ_F: X → [0, 1] [3], i.e.:

$$F = \int_{X} \mu_F(x) / x$$

Here, the integral denotes the collection of all points x ∈ X with associated membership grade μ_F(x) ∈ [0, 1]. The function μ_F is also known as the membership function of the fuzzy set F, as its value represents the grade of membership of the elements of X to the fuzzy set F. The idea is to use membership functions to describe imprecise or vague information.

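By expanding this concept with the assumption that membership function values can be fuzzified themselves, the idea of type-2 fuzzy sets was introduced. A type-2 fuzzy set, denoted as Ã, is defined as follows [4]:

$$\tilde{A} = \int_{x \in X} \int_{u \in J_x} \mu_{\tilde{A}}(x, u) / (x, u), \quad J_x \subseteq [0, 1]$$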

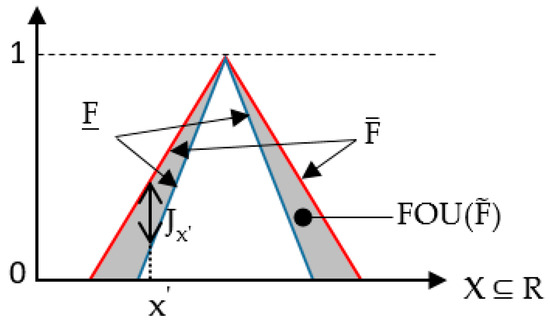

where ∬ denotes union over all admissible x and u. Interval type-2 (IT2) fuzzy sets [4], a special case of type-2 fuzzy sets, are the most widely used because of their acceptable computational complexity and easy interpretation. Uncertainty about Ã is conveyed by the so-called footprint of uncertainty (FOU) of Ã:

$$\mathrm{FOU}(\tilde{A}) = \bigcup_{x \in X} J_x$$

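The size of an FOU (the corresponding surface) is directly related to the uncertainty that is conveyed by an interval type-2 fuzzy set: an FOU with more area is more uncertain than one with less area. The upper membership function and lower membership function of Ã are two type-1 membership functions, $\overline{\mu}_{\tilde{A}}$ and $\underline{\mu}_{\tilde{A}}$, that bound the FOU and can be used to describe J_x, i.e.:

$$J_x = \left[\underline{\mu}_{\tilde{A}}(x), \overline{\mu}_{\tilde{A}}(x)\right], \quad \forall x \in X$$

which leads to the following:

$$\mathrm{FOU}(\tilde{A}) = \bigcup_{x \in X} \left[\underline{\mu}_{\tilde{A}}(x), \overline{\mu}_{\tilde{A}}(x)\right]$$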

Figure 1 clearly illustrates the above definitions.

Figure 1.

An interval type-2 fuzzy set Ã.
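The relation between the bounding membership functions and the size of the FOU can be illustrated with a short sketch. It assumes a Gaussian IT2 set whose lower and upper membership functions share one mean and differ only in standard deviation; the names `it2_gaussian`, `fou_area`, and `sigma_offset` as a symmetric shift of the standard deviation are illustrative assumptions, not the authors' implementation.

```python
import math

def gaussian(x, mean, sigma):
    """Type-1 Gaussian membership grade of x."""
    return math.exp(-0.5 * ((x - mean) / sigma) ** 2)

def it2_gaussian(x, mean, sigma, sigma_offset):
    """Return (lower, upper) membership grades of x.

    Assumption: the FOU is bounded by two Gaussians with the same mean and
    standard deviations sigma - sigma_offset and sigma + sigma_offset.
    """
    lower = gaussian(x, mean, sigma - sigma_offset)
    upper = gaussian(x, mean, sigma + sigma_offset)
    return lower, upper

def fou_area(mean, sigma, sigma_offset, lo=0.0, hi=1.0, steps=1000):
    """Approximate the FOU area on [lo, hi] with the midpoint rule."""
    width = (hi - lo) / steps
    area = 0.0
    for k in range(steps):
        x = lo + (k + 0.5) * width
        lower, upper = it2_gaussian(x, mean, sigma, sigma_offset)
        area += (upper - lower) * width
    return area

if __name__ == "__main__":
    print(it2_gaussian(0.3, mean=0.5, sigma=0.1, sigma_offset=0.02))
    print(fou_area(0.5, 0.1, 0.01), fou_area(0.5, 0.1, 0.03))
```

Under these assumptions a larger offset widens the gap between the two bounds everywhere except at the mean, so the FOU area, and with it the conveyed uncertainty, grows.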

2.2.2. Mamdani Type-2 Fuzzy System

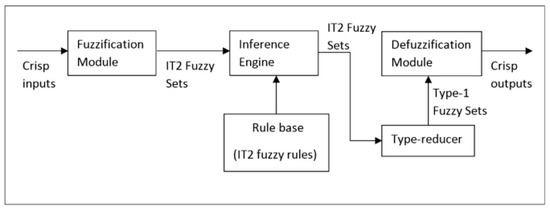

In Figure 2, we show the information flow within an IT2 fuzzy system. It is very similar to its type-1 analogue. The major difference is that because of the IT2 fuzzy sets used in the rulebase, the outputs of the inference engine are IT2 fuzzy sets too. Therefore, a type reducer [6,43] must be applied to convert them into type-1 fuzzy sets to enable the defuzzification procedure.

Figure 2.

Information flow in a type-2 fuzzy system.

Below, we give a brief description of the basic steps of the computations in an IT2 fuzzy system [40]. Let us consider the rule base of an IT2 fuzzy system consisting of N rules of the following form:

$$R^n: \text{IF } x_1 \text{ is } \tilde{X}_1^n \circ \dots \circ x_I \text{ is } \tilde{X}_I^n \text{ THEN } y \text{ is } \tilde{Y}_j$$

where $\tilde{X}_i^n$ (i = 1, …, I; n = 1, …, N) are IT2 fuzzy sets defined over corresponding domains, and $\tilde{Y}_j$ (j = 1, …, M) is an IT2 fuzzy set, assuming a Mamdani type-2 system, which represents the corresponding rule conclusion. The logical operator ‘∘’ is defined as a fuzzy conjunction or disjunction, i.e., ∘ ∈ {⊗, ⊕}, where ⊗ and ⊕ are binary operators (t-norm and s-norm, respectively) defined over [0, 1] (⊗, ⊕: [0, 1]^2 → [0, 1]). The t-norm characterizes the fuzzy AND operator, while the s-norm characterizes the fuzzy OR operator [44]. In our research, we applied Zadeh’s t- and s-norms, which correspond to the min and max operators, respectively.

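Assuming an input vector $\mathbf{x}' = (x'_1, \dots, x'_I)$, typical computations of an IT2 fuzzy system consist of the following steps:

- 1) Compute the membership interval of $x'_i$ for each antecedent IT2 fuzzy set $\tilde{X}_i^n$ of the rule base: $\left[\underline{\mu}_{\tilde{X}_i^n}(x'_i), \overline{\mu}_{\tilde{X}_i^n}(x'_i)\right]$, i = 1, …, I; n = 1, …, N.

- 2) Compute the firing interval of the n-th rule: $F^n(\mathbf{x}') = \left[\underline{f}^n, \overline{f}^n\right]$, where $\underline{f}^n = \underline{\mu}_{\tilde{X}_1^n}(x'_1) \circ \dots \circ \underline{\mu}_{\tilde{X}_I^n}(x'_I)$ and $\overline{f}^n = \overline{\mu}_{\tilde{X}_1^n}(x'_1) \circ \dots \circ \overline{\mu}_{\tilde{X}_I^n}(x'_I)$.

- 3) Apply type reduction to combine $F^n(\mathbf{x}')$ with the corresponding rule consequents.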
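Steps 1 and 2 above can be sketched as follows, assuming Gaussian IT2 antecedents and the min and max operators named in the text. The parameter values and the helper names `membership_interval` and `firing_interval` are hypothetical and chosen only for illustration.

```python
import math

def gaussian(x, mean, sigma):
    return math.exp(-0.5 * ((x - mean) / sigma) ** 2)

def membership_interval(x, mean, sigma, sigma_offset):
    """Step 1: (lower, upper) membership of input x in one IT2 Gaussian set.

    Assumption: the bounds differ only in standard deviation.
    """
    return (gaussian(x, mean, sigma - sigma_offset),
            gaussian(x, mean, sigma + sigma_offset))

def firing_interval(intervals, op="and"):
    """Step 2: combine membership intervals with min (AND) or max (OR)."""
    combine = min if op == "and" else max
    lower = combine(lo for lo, _ in intervals)
    upper = combine(up for _, up in intervals)
    return lower, upper

if __name__ == "__main__":
    # Hypothetical rule: IF x1 is "low" AND x2 is "medium" THEN ...
    x = (0.2, 0.45)
    antecedents = [(0.0, 0.2, 0.02), (0.5, 0.2, 0.02)]  # (mean, sigma, offset)
    intervals = [membership_interval(xi, *p) for xi, p in zip(x, antecedents)]
    print(firing_interval(intervals, op="and"))
```

Because min and max are monotone, applying them separately to the lower and to the upper grades keeps the lower end of the firing interval below the upper end.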

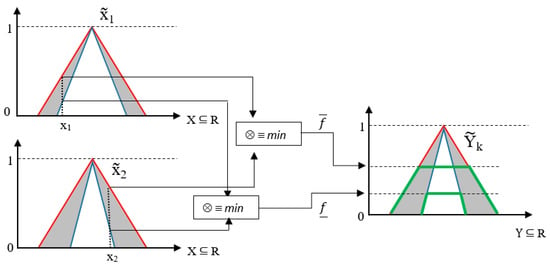

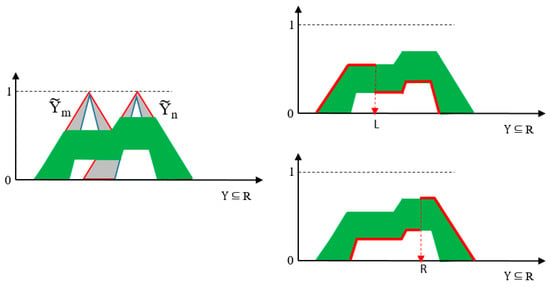

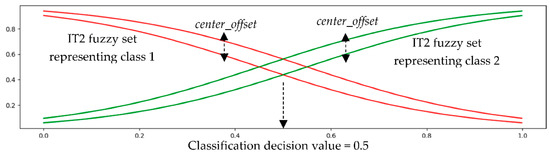

Several type-reduction methods exist [6,45,46], but the most commonly used one is the center-of-sets (COS) type reducer [6], computed with the Karnik–Mendel algorithms [6,43] or their variants [47,48]. As the whole procedure for a Mamdani type-2 fuzzy system is well described in the literature, we omit further details. Figure 3 and Figure 4 illustrate the calculations introduced above.

Figure 3.

Firing interval calculation along with footprint of uncertainty (FOU) rule output calculation for a sample rule: .

Figure 4.

Combined FOU for two sample rules with two interval type-2 (IT2) sets in the conclusions. The final system output is defined as (yl + yr)/2, where yl and yr are calculated with respect to L and R values, which can be determined using the Karnik–Mendel algorithm.
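The endpoints yl and yr mentioned in the caption above can be illustrated with a small sketch. Instead of the iterative Karnik–Mendel procedure, it enumerates every possible switch point, which yields the same endpoints for a handful of rules. It also assumes, as a simplification, that each rule consequent is summarized by a single crisp centroid; the function name `cos_type_reduce` is hypothetical.

```python
def cos_type_reduce(centroids, firing):
    """Center-of-sets type reduction by exhaustive switch-point search.

    centroids: one crisp consequent centroid per rule (simplifying assumption).
    firing:    one (f_lower, f_upper) firing interval per rule.
    Assumes at least one rule has a positive lower firing grade.
    Returns (y_l, y_r, (y_l + y_r) / 2).
    """
    order = sorted(range(len(centroids)), key=lambda n: centroids[n])
    y = [centroids[n] for n in order]
    f = [firing[n] for n in order]
    n_rules = len(y)

    def weighted_mean(weights):
        return sum(w * v for w, v in zip(weights, y)) / sum(weights)

    left, right = [], []
    for k in range(n_rules + 1):
        # y_l: upper firing grades for the k smallest centroids, lower for the rest.
        w_left = [f[i][1] if i < k else f[i][0] for i in range(n_rules)]
        # y_r: lower firing grades for the k smallest centroids, upper for the rest.
        w_right = [f[i][0] if i < k else f[i][1] for i in range(n_rules)]
        if sum(w_left) > 0:
            left.append(weighted_mean(w_left))
        if sum(w_right) > 0:
            right.append(weighted_mean(w_right))
    y_l, y_r = min(left), max(right)
    return y_l, y_r, (y_l + y_r) / 2

if __name__ == "__main__":
    print(cos_type_reduce([0.0, 1.0], [(0.2, 0.6), (0.3, 0.9)]))
```

The exhaustive search costs O(N²) in the number of rules, which is acceptable for the two-rule binary classifiers considered here; the Karnik–Mendel iteration finds the same switch points faster for large rule bases.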

2.2.3. Rule Induction with Information Systems

An information system [12] is defined as a tuple (U, A, V, f), where U is a universe, A is a set of attributes, V represents the attribute domains, $V = \bigcup_{a \in A} V_a$, with a nonempty domain V_a for each attribute a ∈ A, and f is the so-called information function f: U × A → V, with f(x, a) ∈ V_a. An important role in information systems is played by the indiscernibility relation, an equivalence relation defined over U: IND(B) = df {(x, y) ∈ U × U: ∀a ∈ B, f(x, a) = f(y, a)}, under which the lower and upper approximations of any subset of U can be defined, respectively:

B↓X = df {x ∈ U: [x]IND(B) ⊆ X},

B↑X = df {x ∈ U: [x]IND(B) ∩ X ≠ ∅}, B ⊆ A and X ⊆ U.

The above approximations define a rough set [13,14]. We can consider an information system as a decision table if a decision attribute is introduced. With this assumption, a decision making approach was introduced by A. Skowron and Z. Suraj [41,42], presenting a rule induction process with respect to all considered decisions. The procedure consists of the following steps:

- Introduce an information system with decision attribute,

- Eliminate information system inconsistency, i.e., objects with the same information function values, but different decision values, applying lower or upper approximation precision analysis,

- Provide attribute reduct,

- Apply rule induction and define rules for all considered decisions, which cover the decision problem.

Below, we explain the main algorithms applied in the procedure above with examples. The reduct generation is omitted, as any other attribute selection method can be applied.

Inconsistency Elimination

Let us consider the following information system with decision attribute: (U, A ∪ {a*}, V ∪ Va*, f). If inconsistency is found, then Algorithm 1, which uses the lower approximation precision as a decisive factor, should be applied. The lower approximation precision is defined as follows:

$$\gamma_B(X) = \frac{|B{\downarrow}X|}{|U|}$$

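| Algorithm 1 (inconsistency elimination algorithm): |

| Input: Inconsistent information system. Output: Consistent information system. Let xi and xj be objects causing inconsistency (xi, xj ∈ U), and let Xi and Xj be the sets of objects sharing the decision values of xi and xj, respectively; then the object whose set has the smaller lower approximation precision is removed from U (see Example 1). |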

Example 1.

Let U = {x1, x2, x3, x4, x5, x6}, A = {a1, a2, a3}, B = {a1, a3} ⊆ A, = {0, 1, 2, 3, 4} with information function values represented in the matrix below.

In the above matrix, we can discover inconsistency as objects x2 and x4 have the same information function values with respect to B, but different decision values. Therefore, we can apply Algorithm 1:

- 1) X1 = {x1, x3, x4, x6}; X2 = {x2, x5},

- 2) B↓X1 = {x1, x3, x6}, with precision 3/6 = 0.5; B↓X2 = {x5}, with precision 1/6 ≈ 0.17, which implies U = U\{x2}.

Of course, it is only our assumption that different decision values for the same attribute values cause inconsistency. In the decision making process introduced, such decision values could be considered as a set of acceptable values. If that is the case, then the sets defined below would not be singletons.
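The approximations and the elimination step illustrated in Example 1 can be sketched in code. The attribute values below are hypothetical, chosen only so that they reproduce the sets X1, X2 and the lower approximations stated in the example; the tie-breaking rule (remove the first object in sorted order) is likewise an assumption, since the text does not specify one.

```python
from collections import defaultdict

def ind_classes(table, attrs):
    """Equivalence classes of IND(attrs): objects with equal attribute values."""
    groups = defaultdict(set)
    for obj, row in table.items():
        groups[tuple(row[a] for a in attrs)].add(obj)
    return list(groups.values())

def lower_approx(classes, target):
    return {x for c in classes if c <= target for x in c}

def upper_approx(classes, target):
    return {x for c in classes if c & target for x in c}

def eliminate_inconsistency(table, attrs, decision):
    """Remove objects until no IND class mixes different decision values."""
    table = dict(table)
    while True:
        classes = ind_classes(table, attrs)
        conflict = next((c for c in classes
                         if len({table[x][decision] for x in c}) > 1), None)
        if conflict is None:
            return table

        def precision(obj):
            # Lower approximation precision of the decision class of obj.
            same = {x for x in table
                    if table[x][decision] == table[obj][decision]}
            return len(lower_approx(classes, same)) / len(table)

        # Drop the conflicting object whose decision class is least precise.
        del table[min(sorted(conflict), key=precision)]

# Hypothetical values over B = {a1, a3}; "d" stands for the decision attribute.
TABLE = {
    "x1": {"a1": 0, "a3": 1, "d": 0}, "x2": {"a1": 1, "a3": 2, "d": 1},
    "x3": {"a1": 2, "a3": 0, "d": 0}, "x4": {"a1": 1, "a3": 2, "d": 0},
    "x5": {"a1": 3, "a3": 4, "d": 1}, "x6": {"a1": 0, "a3": 1, "d": 0},
}

if __name__ == "__main__":
    print(sorted(eliminate_inconsistency(TABLE, ["a1", "a3"], "d")))
```

With these values the decision class of x4 has precision 3/6 and that of x2 has precision 1/6, so x2 is removed, in line with the example.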

Rule Induction

The aim of this step is to induce rules for each decision according to Algorithm 2 below.

| Algorithm 2 (rule induction algorithm): |

| Input: Information system with decision attribute. Output: Corresponding decision rules. Step 1: Generate the matrices Mk, k = 1, 2, …, |U|, using the so-called discernibility matrix of the information system, denoted below as M(IS) and defined as: M(IS) = [cij], cij = {a ∈ A: f(xi, a) ≠ f(xj, a)}. Step 2: Derive the object implicants from the matrices Mk. Step 3: Define the set of decision rules. |

Step 1:

Let cij are the elements of M(IS), are the elements of Mk (with respect to k, k = 1, ..., n; n = |U|) and a* is the decision attribute, then:

For each k = 1, ..., n:

- 1)

- If i ≠ k then

- 2)

- Ifandthen

where B ⊆ A and

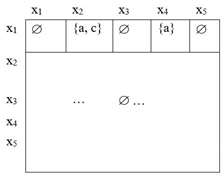

For simplicity, let us consider the example below, which illustrates the generation of matrix M1.

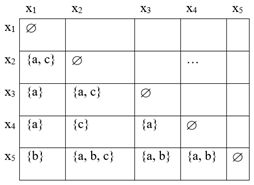

Example 2.

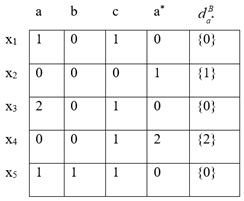

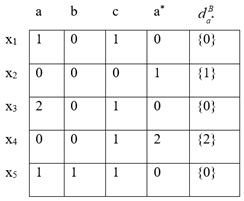

Let us assume an information system with decision attribute extended by the corresponding values (U = {x1, x2, x3, x4, x5}, A = B = {a, b, c}, Va = Vb = Vc = Va* = {0, 1, 2}):

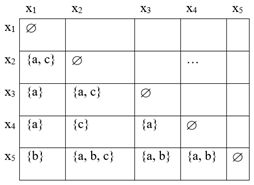

The corresponding discernibility matrix takes the form:

The matrix M(IS) is a symmetric matrix. Next, we can apply Algorithm 2—step 1 and therefore, define the matrix M1 (k = 1):

because:, therefore, and so:,.
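As an illustration of the discernibility matrix used in Step 1, the sketch below computes, for every pair of objects, the set of attributes on which they differ. This is the standard rough-set definition and is assumed here; the three-object table is hypothetical and is not the table of Example 2.

```python
def discernibility_matrix(table, attrs):
    """Map each object pair (xi, xj) to the attributes that tell them apart."""
    objects = sorted(table)
    return {
        (xi, xj): {a for a in attrs if table[xi][a] != table[xj][a]}
        for xi in objects
        for xj in objects
    }

# Hypothetical information system over attributes a, b, c.
TABLE = {
    "x1": {"a": 0, "b": 1, "c": 2},
    "x2": {"a": 1, "b": 1, "c": 0},
    "x3": {"a": 0, "b": 2, "c": 2},
}

if __name__ == "__main__":
    matrix = discernibility_matrix(TABLE, ["a", "b", "c"])
    for pair in sorted(matrix):
        print(pair, sorted(matrix[pair]))
```

The diagonal entries are empty and the entry for (xi, xj) equals the entry for (xj, xi), which is why the matrix is symmetric.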

Step 2:

The object implicants indicate which attributes and objects are strongly related. The first implicant is obtained from M1 applying the corresponding Boolean algebra reduction rules: Implicant1 (M1): x1 ⇒ a. The rest of the implicants are presented below:

- Implicant2 (M2): x2 ⇒ c,

- Implicant3 (M3): x3 ⇒ a,

- Implicant4 (M4): x4 ⇒ a ∧ c,

- Implicant5 (M5): x5 ⇒ a ∨ b.

Step 3:

Finally, using the above implicants, we can generate the target set of decision rules regarding the decision attribute values. Each rule represents one decision and it is obtained as a sum of the object implicants related to that decision, i.e., concerning decision attribute value ‘0’, we have: f(x1,a*) = f(x3,a*) = f(x5,a*) = 0, and therefore: Rule1 = df. f(x1,a) ∨ f(x3,a) ∨ (f(x5,a) ∨ f(x5,b)) ⇒ (decision: 0)

by analogy:

- Rule2 = df. f(x2,c) ⇒ (decision: 1),

- Rule3 = df. f(x4,a) ∧ f(x4,c) ⇒ (decision: 2).

The main disadvantage of the above decision making approach, for vague or imprecise data, is that the induced rules are crisp. For example, Rule2 has the following interpretation: if an object of x2 type has an information function value for the attribute ‘c’ exactly equal to ‘0’, then make the decision ‘1’. We address this disadvantage by extending information systems to a type-1 fuzzy information system.

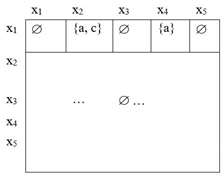

2.2.4. Type-1 Fuzzy Information System

The major assumption of our research is the interpretation of a fuzzy information system. First, we define the values of the information function as type-1 fuzzy sets. This assumption solves the problem of crisp values by providing a generalisation. Therefore, we propose better data granulation and thus more robustness to vague or imprecise data. Next, by assuming Gaussian distribution for each attribute, we are able to define any information function value as one of the very basic fuzzy sets: low, medium, and high. Therefore, the value of any pair object-attribute is generalised by a fuzzy set. Below in Table 2, we give an illustration of a type-1 fuzzy information system with a decision attribute, according to our assumptions.

Table 2.

A type-1 fuzzy information system.

Where:

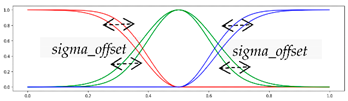

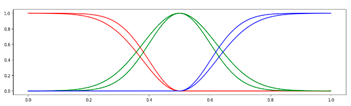

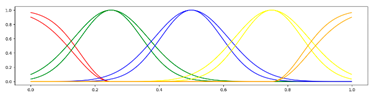

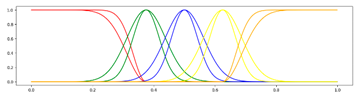

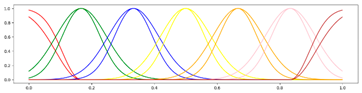

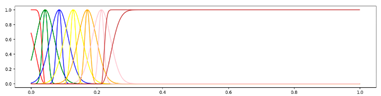

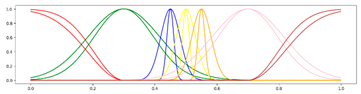

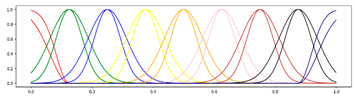

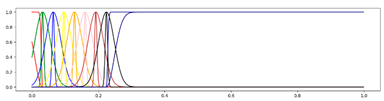

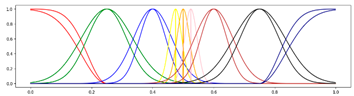

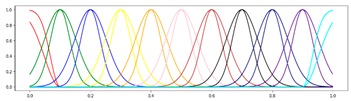

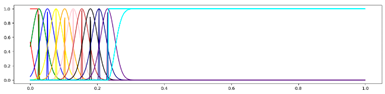

Figure 5.

Type-1 and type-2 fuzzification proposal.

If a pair objecti-attributej has a value defined as low, for example, it means that:

It is important to note that we also assumed the direct application of the original Skowron and Suraj algorithm [41,42] for rule induction. As a result, we did not have to modify the indiscernibility relation (IND) to define fuzzy rough sets, or modify the quality of the approximation introduced by Equation (8). We directly used the fuzzified values of the information function as if they were crisp values. This means that, in our model, objecti is related to objectj with respect to IND if the values of the corresponding attributes define the same fuzzy sets. For example, below we show the partition (P) of the set of objects {Object1, …, Object5} implied by IND for the sample information system given in Table 3.

Table 3.

Sample information system with five objects and two attributes.

The corresponding partition with respect to IND has three equivalence classes:

P/IND({Attribute1, Attribute2}) = df. {{Object1, Object5}, {Object2, Object3}, {Object4}}.
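A short sketch of how such a partition arises when fuzzy labels are treated as crisp values. The labels assigned below are hypothetical, chosen only so that the grouping reproduces the three equivalence classes stated above; they are not taken from Table 3.

```python
from collections import defaultdict

def partition_by_labels(table, attrs):
    """Group objects whose fuzzy labels coincide on every attribute in attrs."""
    groups = defaultdict(set)
    for obj, row in table.items():
        groups[tuple(row[a] for a in attrs)].add(obj)
    return {frozenset(g) for g in groups.values()}

# Hypothetical fuzzified information function values.
LABELS = {
    "Object1": {"Attribute1": "low", "Attribute2": "high"},
    "Object2": {"Attribute1": "medium", "Attribute2": "low"},
    "Object3": {"Attribute1": "medium", "Attribute2": "low"},
    "Object4": {"Attribute1": "high", "Attribute2": "medium"},
    "Object5": {"Attribute1": "low", "Attribute2": "high"},
}

if __name__ == "__main__":
    for block in partition_by_labels(LABELS, ["Attribute1", "Attribute2"]):
        print(sorted(block))
```

Membership degrees play no role at this stage: two objects are indiscernible as soon as their labels match, regardless of how strongly each object belongs to the labelled set.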

The above assumption made it possible to use the classical algorithms of information systems theory while generalizing the information given by the attributes. The membership degrees inferred from the corresponding membership functions are used only after the induction of rules and their transformation into fuzzy rules, in accordance with the assumptions of the fuzzy model used. Of course, such an assumption may be too strong a generalization. We address this problem by introducing various fuzzification options: defining additional fuzzy sets and transforming them into interval type-2 fuzzy sets with the corresponding optimization, depending on the examined data set (see Table 4 below).

Table 4.

The IT2 fuzzy sets used for a sample attribute of the blood transfusion data set. For all attributes, we normalized the corresponding domain values.

Next, we define the decision attribute values as fuzzy sets, which affect the degree of decision. Any induced rules from such an information system can be easily transformed into fuzzy rules for a type-1 Mamdani fuzzy system. For example, if we have pairs: (object1, attribute1): low, (object2, attribute1): high, (object3, attribute2): medium and a rule which defines decision D as:

- (f(object1, attribute1) ∧ f(object2, attribute1)) ∨ f(object3, attribute2),

then we can transform it into the fuzzy rule:

- If ((f(object1, attribute1) is low) ⊗ (f(object2, attribute1) is high)) ⊕ (f(object3, attribute2) is medium) Then D.
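The premise of the fuzzy rule above can be evaluated for a new sample as sketched below, using min for ⊗ and max for ⊕ as stated earlier. The Gaussian parameters of low, medium, and high are hypothetical, and the sample is assumed to be normalized to [0, 1].

```python
import math

# Hypothetical type-1 Gaussian fuzzy sets on a normalized domain: (mean, sigma).
FUZZY_SETS = {"low": (0.0, 0.2), "medium": (0.5, 0.2), "high": (1.0, 0.2)}

def grade(label, x):
    """Membership grade of x in the fuzzy set named label."""
    mean, sigma = FUZZY_SETS[label]
    return math.exp(-0.5 * ((x - mean) / sigma) ** 2)

def rule_activation(attribute1, attribute2):
    """((attribute1 is low) AND (attribute1 is high)) OR (attribute2 is medium)."""
    conjunction = min(grade("low", attribute1), grade("high", attribute1))
    return max(conjunction, grade("medium", attribute2))

if __name__ == "__main__":
    print(rule_activation(0.5, 0.5))
    print(rule_activation(0.0, 0.0))
```

In this sketch the conjunction of low and high on the same attribute stays small for any input, so the activation is driven mainly by the medium term; in an induced rule base such terms come from different objects sharing a decision.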

2.2.5. Transformation into Type-2 Fuzzy Sets

To apply type-2 fuzzy sets in the fuzzy rule induction procedure described, we propose a simple extension. After the induction of type-1 fuzzy rules, we modify the membership functions applied by changing the standard deviation of the ‘medium’ fuzzy set. By doing so, we define the bounds of the FOU of the corresponding ‘medium’ type-2 fuzzy set. This also makes it possible to define the ‘low’ and ‘high’ type-2 fuzzy sets. The idea is shown in Figure 5.

The above extension gives us the possibility to apply the type-2 Mamdani fuzzy inference procedure. In our research, we have applied the procedure proposed to solve classification problems.

2.2.6. Fuzzy Rules Optimization

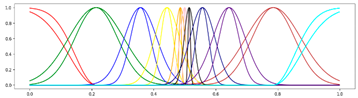

In our experiments, the basic assumption was to analyse the influence of the shape and the FOU of the type-2 fuzzy sets used in the rule premises, derived from the corresponding fuzzy information system, on the classification accuracy. For this purpose, we have used the type-2 fuzzy sets shown in Table 4. Each of these type-2 membership functions was derived for every attribute of all benchmark data analyzed. Below, we explain the descriptions used in our research:

- The number of Gaussian functions for fuzzification: {3, 5, 7, 9, 11}. The fuzzification procedure is as follows: generate the medium membership function and, next, the rest of the membership functions based on it. All Gaussians are transformed into type-2 fuzzy sets by changing the standard deviation value (called sigma_offset parameter). The sigma_offset defines the FOU of the corresponding IT2 fuzzy sets. The same value was applied for each Gaussian function.

- Whether the standard deviations applied to the Gaussians are the same or not: {equal, progressive}. Progressive assumes the concentration of membership functions around the mean or central values. For 3 Gaussians, equal and progressive give the same fuzzification result.

- If the mean of the medium basic function is derived directly from the corresponding data set or if a fixed value is used: {mean, center}—center assumes Gaussian distribution with fixed mean 0.5.

For example, <3 Gausses, Equal, Mean> is considered as 3 Gaussian membership functions with equal standard deviation values used to define 3 type-2 fuzzy sets, and the medium function is defined by its corresponding mean value derived from the considered data. The <5 Gausses, Equal, Center> is considered as 5 Gaussian membership functions and the medium function is defined by a fixed mean value 0.5. The <7 Gausses, Progressive, Mean> is considered as 7 Gaussian membership functions with different standard deviation values, assuming concentration of membership functions around the mean value of the medium function.

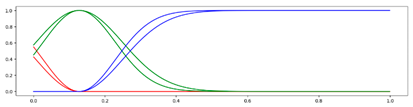

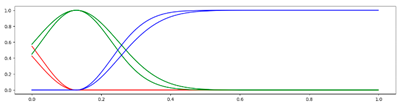

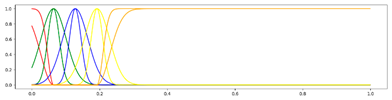

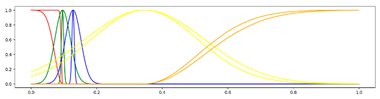

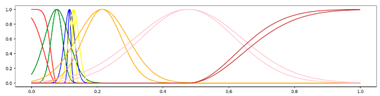

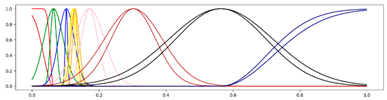

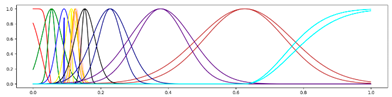

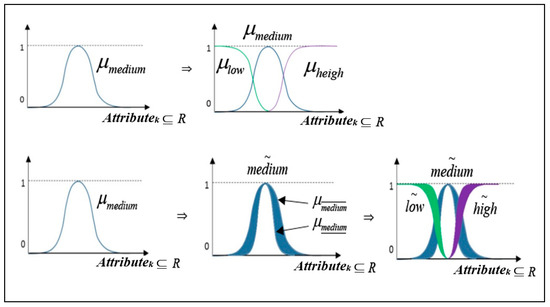

For the type-2 sets used in the conclusions, we define s-shaped functions representing each class in the classification problem considered. We optimize the FOU of each function by changing the cross-points. We call the corresponding optimized parameter center_offset (see Figure 6). The final classification decision threshold was fixed at 0.5, assuming the normalized domain [0, 1]. Changing the classification threshold did not improve the results.

Figure 6.

IT2 fuzzy sets used in the rule conclusions.

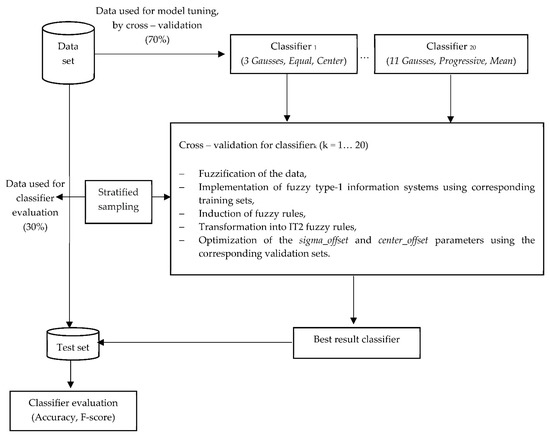

For each benchmark data set, we optimized the sigma_offset and center_offset parameters of the type-2 fuzzy sets considered, using grid search within a k-fold cross-validation process with evaluation on a held-out validation set. The sigma_offset takes values in the range [0.01, 0.03] with step 0.005 and the center_offset takes values in the range [0.01, 0.21] with step 0.02. The k value was chosen for each data set so as to ensure a fair number of samples in the corresponding validation sets, as presented in Table 5. Additionally, for every data partition, we used stratified sampling to ensure balanced validation and test sets. The experiment information flow is shown in Figure 7.
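The parameter grid and the stratified folds described above can be sketched as follows. The grid values follow the stated ranges and steps; `stratified_folds`, `grid_search`, and the callback `score_fn` (which would train the classifier and return, for example, an F-score) are hypothetical names, and the round-robin fold assignment is an assumption rather than the authors' procedure.

```python
import itertools
import random
from collections import defaultdict

# Grid values built from the stated ranges and steps.
SIGMA_OFFSETS = [round(0.01 + 0.005 * i, 3) for i in range(5)]    # 0.01 .. 0.03
CENTER_OFFSETS = [round(0.01 + 0.02 * i, 2) for i in range(11)]   # 0.01 .. 0.21

def stratified_folds(labels, k, seed=0):
    """Split sample indices into k folds with similar class proportions."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        for pos, idx in enumerate(indices):
            folds[pos % k].append(idx)
    return folds

def grid_search(labels, k, score_fn):
    """Return (mean score, sigma_offset, center_offset) of the best grid point.

    score_fn(train_idx, valid_idx, sigma_offset, center_offset) -> float is a
    user-supplied, hypothetical callback.
    """
    folds = stratified_folds(labels, k)
    best = None
    for sigma, center in itertools.product(SIGMA_OFFSETS, CENTER_OFFSETS):
        scores = []
        for held_out in folds:
            train = [i for fold in folds if fold is not held_out for i in fold]
            scores.append(score_fn(train, held_out, sigma, center))
        mean_score = sum(scores) / len(scores)
        if best is None or mean_score > best[0]:
            best = (mean_score, sigma, center)
    return best

if __name__ == "__main__":
    toy_labels = [0] * 6 + [1] * 4
    dummy = lambda tr, va, s, c: -abs(s - 0.02) - abs(c - 0.11)
    print(grid_search(toy_labels, k=2, score_fn=dummy))
```

The grid has 5 × 11 = 55 parameter pairs, each evaluated on every fold, so the total number of classifier evaluations per data set is 55 times k.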

Table 5.

Number of folds applied in the cross-validation process.

Figure 7.

Experiment information flow.

3. Results

In Table 6 we present the results achieved in our experiments. The number of rules generated for each data set is constant and equal to the cardinality of the decision attribute. In the case of binary classification, two fuzzy rules will be generated, but with very extensive and complex rule premises.

Table 6.

Evaluation of the method proposed.

We achieved quite high classification results for seven data sets, which supports the usefulness of the methodology used.

Below in Table 7, we show a comparison regarding high performing classifiers for some of the data sets with respect to the F-score measure.

Table 7.

Comparison regarding other classifiers with respect to the F-score measure.

As shown above, we achieved quite good results for the banknote authentication and HTRU 2 data sets, despite the very general fuzzy approach proposed.

A comparison of classification accuracy with fuzzy classification techniques for chosen data sets is shown in Table 8.

Table 8.

Classification accuracy comparison with fuzzy classifiers.

4. Discussion

We distinguish several important conclusions and advantages of our research. First of all, it is possible to aggregate information by interpreting attributes describing objects as fuzzy sets, using the advantages of fuzzy logic for flexible modelling of real data. This enables a large generalization of information, which is beneficial for unbalanced or inaccurately defined data. Additionally, the introduced interpretation of fuzzy information systems allows direct work on fuzzy sets and the induction of rules that can be easily changed into type-2 fuzzy rules. By appropriate fuzzification, the values of the information function can be derived directly from the data. Inconsistencies, which may appear because of the information generalization applied, are removed by appropriate use of rough sets, i.e., by analyzing the quality of the corresponding approximations. The fuzzification applied is very general. It allows further adjustment to special cases by optimization of the fuzzy sets in the premises and the conclusions of the rules applied. In this research, we have used only one of the many possible fuzzifications of the data presented. By applying a fuzzification adapted to a specific problem, after an in-depth analysis, we should be able to adjust the fuzzy sets in the premises and in the conclusions of the rules to achieve the best possible result. The methodology we introduced is a general approach, aiming to show the advantage of fuzzy inference using a simple and homogeneous model. Although our algorithm was not strictly adjusted to any data set, we obtained good results for some of them. This shows practical potential and creates a basis for generating robust classifiers.

5. Conclusions

In this research, we proposed a new fuzzy classification method. Our concept assumes the induction of fuzzy rules from the corresponding fuzzy information system. The fuzzification of data is done in a very simple manner with Gaussian membership functions. Moreover, we are able to extend the induced rules into interval type-2 fuzzy rules. The experiments on benchmark data indicated the usefulness of the method. Additionally, by further optimization of the interval type-2 fuzzy sets applied, we were able to improve our classification results. More suitable fuzzification, adjusted to a specific data set, is possible as well. Our concept should be considered as a general approach for unbalanced or inaccurately defined data.

Author Contributions

M.T. conceived of the presented idea, developed the theory and supervised the project. A.C. (Adrian Chlopowiec), A.C. (Adam Chlopowiec) and A.D. performed the computations and the method evaluation. All authors discussed the results and contributed to the final manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the statutory funds of the Department of Computational Intelligence, Faculty of Computer Science and Management, Wroclaw University of Science and Technology.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Bertsimas, D.; Dunn, J.; Pawlowski, C.; Zhuo, Y.D. Robust Classification. Inf. J. Optim. 2019, 1, 2–34. [Google Scholar] [CrossRef]

- Sarkar, S.K.; Oshiba, K.; Giebisch, D.; Singer, Y. Robust Classification of Financial Risk, NIPS 2018 Workshop on Challenges and Opportunities for AI in Financial Services: The Impact of Fairness, Explainability, Accuracy, and Privacy. arXiv 2018, arXiv:1811.11079. [Google Scholar]

- Zadeh, L. Fuzzy sets. Inf. Control 1965, 8, 338–353. [Google Scholar] [CrossRef]

- Mendel, J.M.; John, R.I. Type-2 fuzzy sets made simple. IEEE Trans. Fuzzy Syst. 2002, 10, 117–127. [Google Scholar] [CrossRef]

- Mendel, J.M. Type-2 Fuzzy Sets and Systems: How to Learn About Them. IEEE Smc Enewsletter 2009, 27, 1–7. [Google Scholar]

- Mendel, J.M. Uncertain Rule-Based Fuzzy Logic Systems: Introduction and New Directions; Prentice-Hall: Upper Saddle River, NJ, USA, 2001. [Google Scholar]

- Castillo, O.; Melin, P. Type-2 Fuzzy Logic Theory and Applications; Springer: Berlin, Germany, 2008. [Google Scholar]

- Hagras, H. A hierarchical type-2 fuzzy logic control architecture for autonomous mobile robots. Ieee Trans. Fuzzy Syst. 2004, 12, 524–539. [Google Scholar] [CrossRef]

- Hagras, H. Type-2 FLCs: A new generation of fuzzy controllers. Ieee Comput. Intell. Mag. 2007, 2, 30–43. [Google Scholar] [CrossRef]

- Wu, D.; Tan, W.W. Genetic learning and performance evaluation of type-2 fuzzy logic controllers. Eng. Appl. Artif. Intell. 2006, 19, 829–841. [Google Scholar] [CrossRef]

- Wu, D.; Tan, W.W. A simplified type-2 fuzzy controller for real-time control. ISA Trans. 2006, 15, 503–516. [Google Scholar]

- Pawlak, Z. Information systems—Theoretical foundations. Inf. Syst. 1981, 6, 205–218. [Google Scholar] [CrossRef]

- Pawlak, Z. Rough Sets: Theoretical Aspects of Reasoning about Data, System Theory, Knowledge Engineering and Problem Solving; Kluwer Academic Publishers: Dordrecht, The Netherlands, 1991; Volume 9. [Google Scholar]

- Pawlak, Z. Rough Sets; Basic notions, Report no 431; Institute of Computer Science, Polish Academy of Sciences: Warsaw, Poland, 1981. [Google Scholar]

- Halder, A.; Kumar, A. Active learning using rough fuzzy classifier for cancer prediction from microarray gene expression data. J. Biomed. Inform. 2019, 92, 1–13. [Google Scholar] [CrossRef] [PubMed]

- Onan, A. A fuzzy-rough nearest neighbor classifi-er combined with consistency-based subset evaluation and instance selection for automated diagnosis of breast cancer. Expert Syst. Appl. 2015, 42, 6844–6852. [Google Scholar] [CrossRef]

- Wang, C.; Chen, D.; Hu, Q. Fuzzy information systems and their homomorphisms. Fuzzy Sets Syst. 2014, 249, 128–138. [Google Scholar] [CrossRef]

- Dubois, D. The role of fuzzy sets in decision sciences: Old techniques and new directions. Fuzzy Sets Syst. 2011, 184, 3–28. [Google Scholar] [CrossRef]

- Lee, M.-C.; Chang, T. Rule Extraction Based on Rough Fuzzy Sets in Fuzzy Information Systems. Trans. Cci Iii Lncs 2011, 6560, 115–127. [Google Scholar]

- Ye, J.; Zhan, J.; Xu, Z. A novel decision-making approach based on three-way decisions in fuzzy information systems. Inf. Sci. 2020, 541, 362–390. [Google Scholar] [CrossRef]

- Cheruku, R.; Edla, D.R.; Kuppili, V.; Dharavath, R. RST-Bat-Miner: A fuzzy rule miner integrating rough set feature selection and bat optimization for detection of diabetes disease. Appl. Soft. Comput. 2018, 67, 764–780. [Google Scholar] [CrossRef]

- Dai, J.H.; Chen, J.L. Feature selection via normative fuzzy information weight with application into tumor classification. Appl. Soft Comput. 2020, 92, 106299. [Google Scholar] [CrossRef]

- Jensen, R.; Shen, Q. Semantics-preserving dimensionality reduction: Rough and fuzzy-rough-based approaches. Ieee Trans. Knowl. Data Eng. 2004, 16, 1457–1471. [Google Scholar] [CrossRef]

- Lin, Y.; Li, Y.; Wang, C.; Chen, J. Attribute reduction for multi-label learning with fuzzy rough set. Knowl. Based Syst. 2018, 152, 51–61. [Google Scholar] [CrossRef]

- Maji, P.; Garai, P. Fuzzy–Rough Simultaneous Attribute Selection and Feature Extraction Algorithm. Ieee Trans. Cybern. 2013, 43, 1166–1177. [Google Scholar] [CrossRef]

- Yu, G. Characterizations and uncertainty measurement of a fuzzy information system and related results. Soft Comput. 2020, 24, 12753–12771. [Google Scholar] [CrossRef]

- de Cock, M.; Cornelis, C.; Kerre, E.E. Fuzzy rough sets: The forgotten step. Ieee Trans. Fuzzy Syst. 2007, 15, 121–130. [Google Scholar] [CrossRef]

- Dubois, D.; Prade, H. Rough fuzzy sets and fuzzy rough sets. Int. J. Gen. Syst. 1990, 17, 191–209. [Google Scholar] [CrossRef]

- Mieszkowicz-Rolka, A.; Rolka, L. Fuzziness in information systems. In Electronic Notes in Theoretical Computer Science; Elsevier: Amsterdam, The Netherlands, 2003; Volume 82. [Google Scholar]

- Wu, W.; Mi, J.; Zhang, W. Generalized fuzzy rough sets. Inf. Sci. 2003, 151, 263–282. [Google Scholar] [CrossRef]

- Yeung, D.S.; Chen, D.G.; Tsang, E.; Lee, J.; Wang, X.Z. On the generalization of fuzzy rough sets. Ieee Trans. Fuzzy Syst. 2005, 13, 343–361. [Google Scholar] [CrossRef]

- Vluymans, S.; D’eer, L.; Saeys, Y.; Cornelis, C. Applications of Fuzzy Rough Set Theory in Machine Learning: A Survey. Fundam. Inform. 2015, 142, 53–86. [Google Scholar] [CrossRef]

- Tabakov, M.; Kwasnicka, H.; Kozak, P.; Dziegiel, P.; Pula, B. Recognition of HER-2/neu breast cancer cell membranes with fuzzy rough sets. Recent researches in medicine, biology and bioscience. In Proceedings of the 4th International Conference on Bioscience and Bioinformatics (ICBB ’13), Chania, Crete Island, Greece, 27–29 August 2013; WSEAS Press; pp. 169–176. [Google Scholar]

- Dua, D.; Graff, C. UCI Machine Learning Repository; University of California, School of Information and Computer Science: Irvine, CA, USA, 2019; Available online: http://archive.ics.uci.edu/ml (accessed on 31 December 2020).

- Yeh, I.C.; Yang, K.J.; Ting, T.M. Knowledge discovery on RFM model using Bernoulli sequence. Expert Syst. Appl. 2009, 36, 5866–5871.

- Mangasarian, O.L.; Wolberg, W.H. Cancer diagnosis via linear programming. SIAM News 1990, 23, 1–18.

- Lyon, R.J.; Stappers, B.W.; Cooper, S.; Brooke, J.M.; Knowles, J.D. Fifty Years of Pulsar Candidate Selection: From simple filters to a new principled real-time classification approach. Mon. Not. R. Astron. Soc. 2016, 459, 1104–1123.

- Khozeimeh, F.; Alizadehsani, R.; Roshanzamir, M.; Khosravi, A.; Layegh, P.; Nahavandi, S. An expert system for selecting wart treatment method. Comput. Biol. Med. 2017, 81, 167–175.

- Little, M.A.; McSharry, P.E.; Roberts, S.J.; Costello, D.A.E.; Moroz, I.M. Exploiting Nonlinear Recurrence and Fractal Scaling Properties for Voice Disorder Detection. Biomed. Eng. Online 2007, 6.

- Wu, D. On the Fundamental Differences Between Interval Type-2 and Type-1 Fuzzy Logic Controllers. IEEE Trans. Fuzzy Syst. 2012, 20, 832–848.

- Skowron, A.; Suraj, Z. A Rough Set Approach to Real-Time State Identification for Decision Making; Institute of Computer Science Report 18/93; Warsaw University of Technology: Warsaw, Poland, 1993; p. 27.

- Skowron, A.; Suraj, Z. A Rough Set Approach to Real-Time State Identification. Bull. Eur. Assoc. Comput. Sci. 1993, 50, 264.

- Karnik, N.N.; Mendel, J.M.; Liang, Q. Type-2 fuzzy logic systems. IEEE Trans. Fuzzy Syst. 1999, 7, 643–658.

- Bronstein, I.N.; Semendjajew, K.A.; Musiol, G.; Mühlig, H. Taschenbuch der Mathematik; Verlag Harri Deutsch: Frankfurt, Germany; Zurich, Switzerland, 2001; p. 1258.

- Wu, D. An overview of alternative type-reduction approaches for reducing the computational cost of interval type-2 fuzzy logic controllers. In Proceedings of the 2012 IEEE International Conference on Fuzzy Systems, Brisbane, Australia, 10–15 June 2012.

- Wu, D. Approaches for reducing the computational cost of interval type-2 fuzzy logic systems: Overview and comparisons. IEEE Trans. Fuzzy Syst. 2013, 21, 80–99.

- Wu, D.; Mendel, J.M. Enhanced Karnik–Mendel algorithms. IEEE Trans. Fuzzy Syst. 2009, 17, 923–934.

- Wu, D.; Nie, M. Comparison and practical implementation of type reduction algorithms for type-2 fuzzy sets and systems. In Proceedings of the 2011 IEEE International Conference on Fuzzy Systems, Taipei, Taiwan, 27–30 June 2011; pp. 2131–2138.

- Peng, L.; Chen, W.; Zhou, W.; Li, F.; Yang, J.; Zhang, J. An immune-inspired semi-supervised algorithm for breast cancer diagnosis. Comput. Methods Programs Biomed. 2016, 134, 259–265.

- Utomo, C.P.; Kardiana, A.; Yuliwulandari, R. Breast Cancer Diagnosis using Artificial Neural Networks with Extreme Learning Techniques. Int. J. Adv. Res. Artif. Intell. IJARAI 2014, 3, 10–14.

- Akay, A.F. Support vector machines combined with feature selection for breast cancer diagnosis. Expert Syst. Appl. 2009, 36, 3240–3247.

- Kumar, G.R.; Nagamani, K. Banknote authentication system utilizing deep neural network with PCA and LDA machine learning techniques. Int. J. Recent Sci. Res. 2018, 9, 30036–30038.

- Kumar, C.; Dudyala, A.K. Bank note authentication using decision tree rules and machine learning techniques. In Proceedings of the 2015 International Conference on Advances in Computer Engineering and Applications, Ghaziabad, India, 19–20 March 2015; pp. 310–314.

- Jaiswal, R.; Jaiswal, S. Banknote Authentication using Random Forest Classifier. Int. J. Digit. Appl. Contemp. Res. 2019, 7, 1–4.

- Sarma, S.S. Bank Note Authentication: A Genetic Algorithm Supported Neural based Approach. Int. J. Adv. Res. Comput. Sci. 2016, 7, 97–102.

- Wang, Y.; Pan, Z.; Zheng, J.; Qian, L.; Li, M. A hybrid ensemble method for pulsar candidate classification. Astrophys. Space Sci. 2019, 8, 1–13.

- Basarslan, M.S.; Kayaalp, F. A hybrid classification example in the diagnosis of skin disease with cryotherapy and immunotherapy treatment. In Proceedings of the 2018 2nd International Symposium on Multidisciplinary Studies and Innovative Technologies (ISMSIT), Ankara, Turkey, 19–21 October 2018.

- Cüvitoglu, A.; Isik, Z. Evaluation machine learning approaches for classification of cryotherapy and immunotherapy datasets. Int. J. Mach. Learn. Comput. 2018, 4, 331–335.

- Nilashi, M.; Ibrahim, O.; Ahmadi, H.; Shahmoradi, L. A knowledge-based system for breast cancer classification using fuzzy logic method. Telemat. Inform. 2017, 34, 133–144.

- Souza, P.V.d.; Torres, L.C.B.; Guimarães, A.J.; Araujo, V.S. Pulsar detection for wavelets SODA and regularized fuzzy neural networks based on andneuron and robust activation function. Int. J. Artif. Intell. Tools 2019, 28, 1950003.

- Souza, P.V.d.; Silva, G.R.L.; Torres, L.C.B. Uninorm based regularized fuzzy neural networks. In Proceedings of the 2018 IEEE Conference on Evolving and Adaptive Intelligent Systems (EAIS), Rhodes, Greece, 25–27 May 2018; pp. 1–8.

- De Campos Souza, P.V. Pruning fuzzy neural networks based on unineuron for problems of classification of patterns. J. Intell. Fuzzy Syst. 2018, 35, 1–9.

- Guimarães, A.J.; Souza, P.V.d.; Araújo, V.J.S.; Rezende, T.S.; Araújo, V.S. Pruning Fuzzy Neural Network Applied to the Construction of Expert Systems to Aid in the Diagnosis of the Treatment of Cryotherapy and Immunotherapy. Big Data Cogn. Comput. 2019, 3, 22.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).