Bio-Inspired Modality Fusion for Active Speaker Detection

Abstract

1. Introduction

2. Related Work

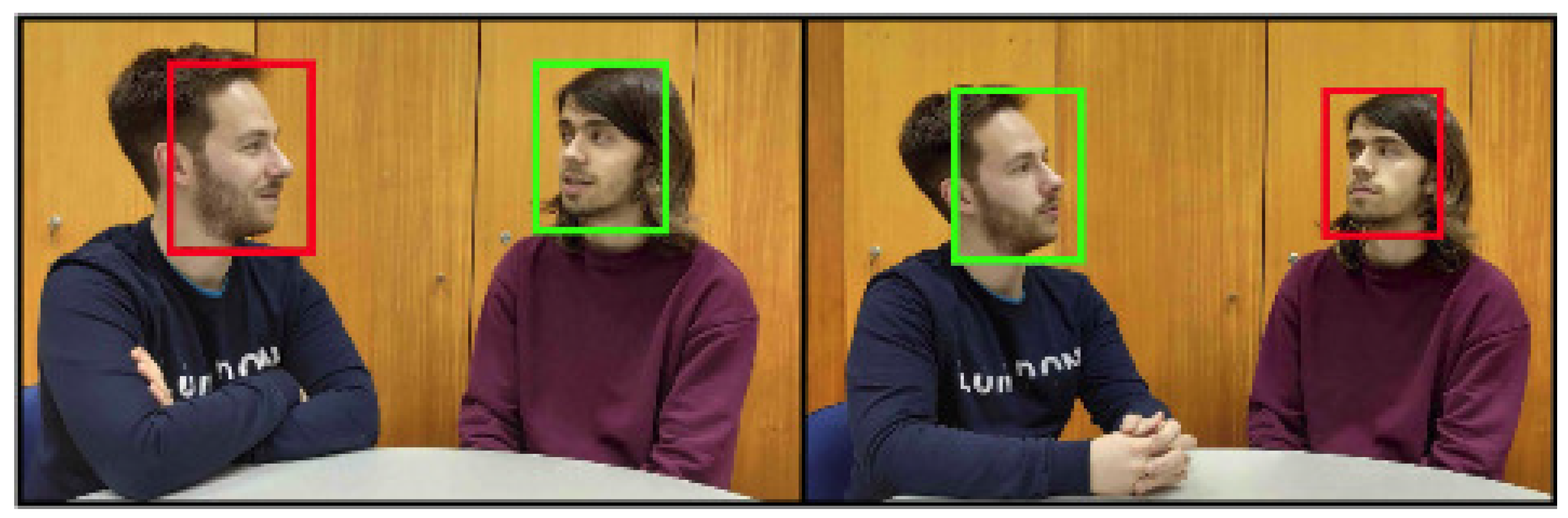

2.1. ASD Recent Research

2.2. Datasets

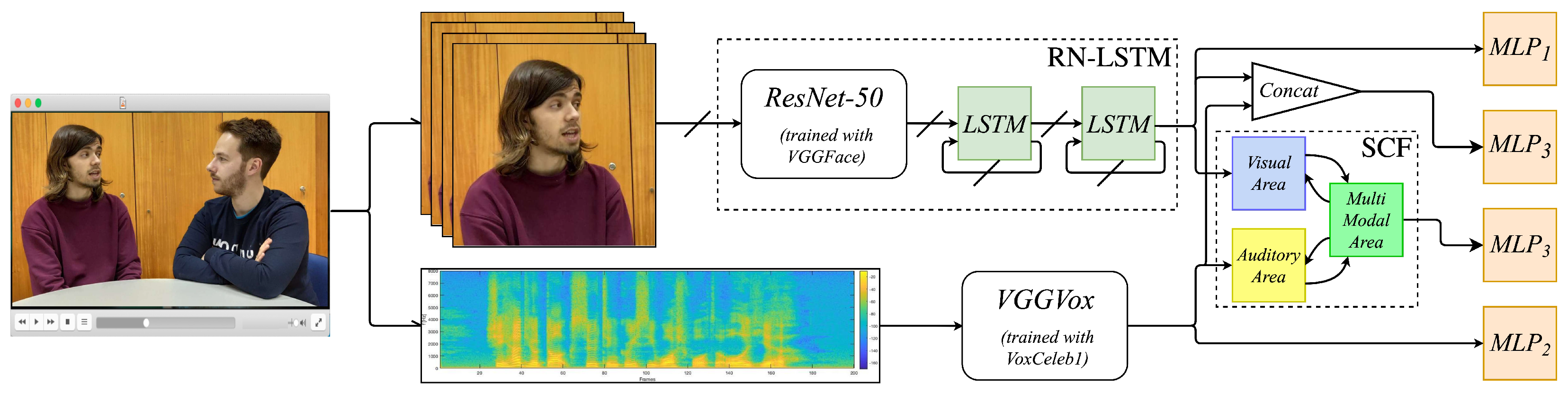

3. Methodology

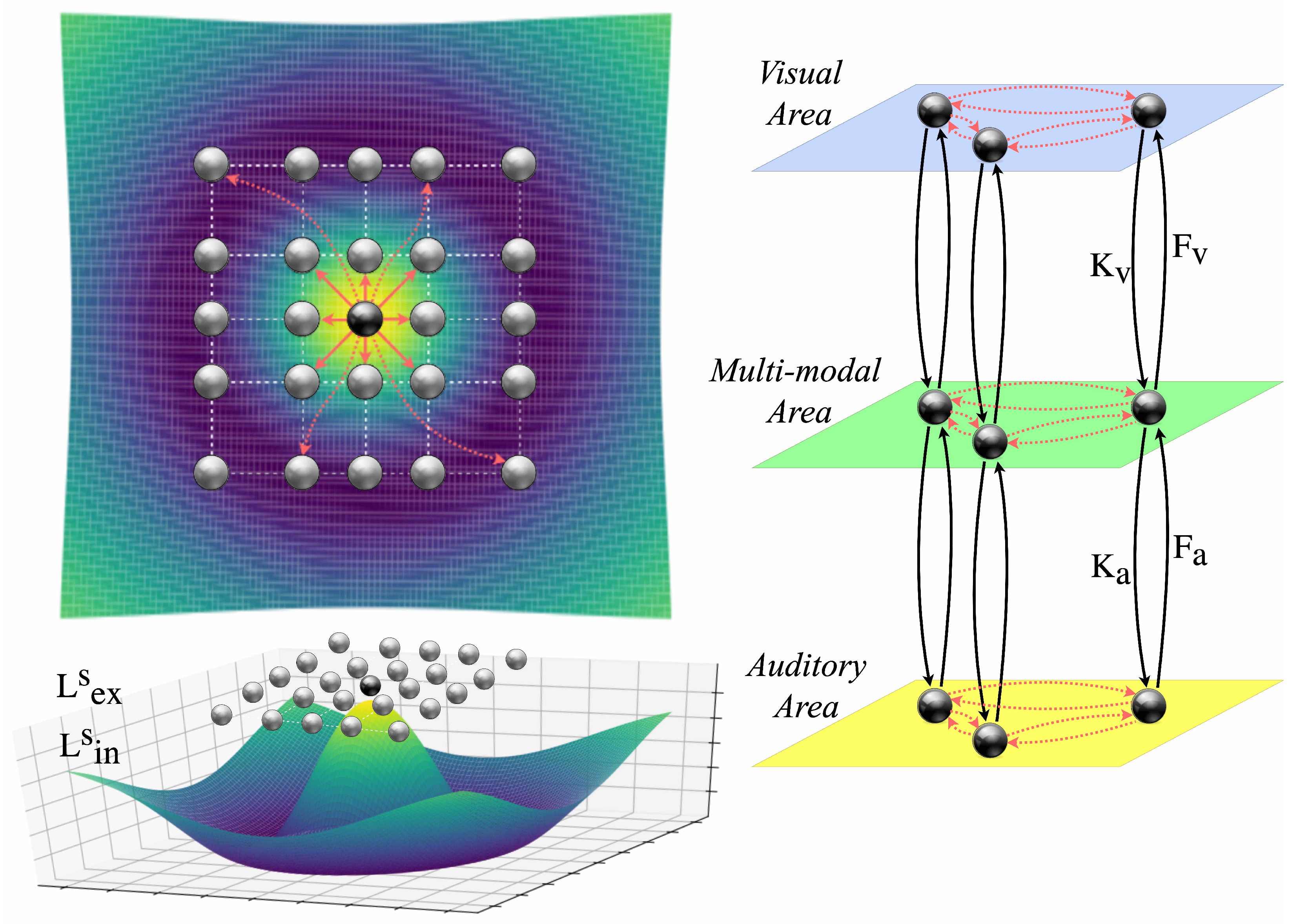

3.1. Bio-Inspired Embedding Fusion

3.2. Audio Clips and VGGVox

3.3. Image Sequences and RN-LSTM

4. Experiments

4.1. Data Preparation

4.2. Modality Experimentation

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| ASD | Active speaker detection |
| VAD | Voice activity detection |
| TDOA | Time difference of arrival |
| CNN | Convolutional neural network |
| LSTM | Long short-term memory |
| RN-LSTM | ResNet-LSTM |
| SC | Superior colliculus |
| SCF | Superior colliculus fusion |

| Dataset | Sequences | Frames | Unique Facial Trackings | Image Conditions |
|---|---|---|---|---|
| Columbia | 4914 | 30 | 34 (6 speakers) | Identical |
| AVA-ActiveSpeaker | 5510 (3 labels); 5470 (2 labels) | [30, 300] | 4321 (20 videos) | Varied |

| Modality | Speaker Dependent | Speaker Independent |
|---|---|---|
| Audio only | 47.37 (0.52) [%] | 17.34 (9.14) [%] |
| Video only | 97.68 (0.39) [%] | 64.89 (14.44) [%] |

| Modality | Video Dependent | Video Independent |
|---|---|---|
| Audio only | 87.73 (0.87) [%] | 84.96 (1.78) [%] |
| Video only | 78.68 (1.79) [%] | 72.48 (2.17) [%] |

| Approach | Speaker Dependent | Speaker Independent |
|---|---|---|
| Baseline | 97.60 (0.49) [%] | 41.78 (15.46) [%] |
| New approach | 98.53 (0.21) [%] | 58.50 (7.71) [%] |

| Approach | Video Dependent | Video Independent |
|---|---|---|
| Baseline | 89.10 (0.41) [%] | 87.52 (2.49) [%] |
| New approach | 91.68 (0.51) [%] | 88.46 (2.06) [%] |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Assunção, G.; Gonçalves, N.; Menezes, P. Bio-Inspired Modality Fusion for Active Speaker Detection. Appl. Sci. 2021, 11, 3397. https://doi.org/10.3390/app11083397
