1. Introduction

Scheduling problems in domains such as distribution, transportation, management, construction, engineering, and manufacturing are omnipresent in real-world applications [1]. These problems are complex and very difficult to solve using conventional techniques, mainly because of the large number of constraints involved. Consequently, a considerable body of research over the past few decades has focused on developing techniques for optimizing them. The permutation flow-shop scheduling problem (PFSSP), a variant of the classical flow-shop scheduling problem (FSSP), is an operations research problem in which n jobs, J1, J2, …, Jn, with varying processing times are assigned to m resources (usually machines). The objective is to allocate the available resources to the jobs so as to optimize (maximize or minimize) performance indicators such as makespan or tardiness. In the classical FSSP with n jobs and m machines, there are (n!)^m possible ways of sequencing the jobs on the machines, whereas in the permutation variant, in which every machine processes the jobs in the same order, the search space is reduced to n!. Like any scheduling problem, PFSSP comes with constraints and assumptions. In this paper, they are as follows.

- (1) On the shop floor, all machines are independent of each other.
- (2) All job operations have deterministic processing times.
- (3) Pre-emption of job operations is not permitted.
- (4) Set-up times are not considered.
- (5) Machine downtime and maintenance time are not considered.
- (6) The machine used for each operation of a job is known and fixed.
- (7) A job cannot revisit a machine group for more than one operation.
- (8) Operation constraints must always be satisfied.
- (9) All jobs must enter the machines in the same order.
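Under assumptions (1) to (9), the makespan of a job permutation follows the usual flow-shop completion-time recurrence C(j, k) = max(C(j-1, k), C(j, k-1)) + p(j, k), where j indexes positions in the sequence and k indexes machines. The Python sketch below is not taken from the paper; it computes this makespan and enumerates all n! permutations of a small, hypothetical 3-job, 2-machine instance. Even this toy instance has two distinct optimal schedules, which is exactly the situation that multimodal optimization targets.

```python
from itertools import permutations
from typing import List, Sequence


def makespan(perm: Sequence[int], p: List[List[int]]) -> int:
    """Makespan of a permutation flow-shop schedule.

    perm    -- job order, used on every machine (assumption (9))
    p[j][k] -- deterministic processing time of job j on machine k
    """
    m = len(p[0])
    # completion[k]: completion time of the last job scheduled so far on machine k
    completion = [0] * m
    for j in perm:
        for k in range(m):
            # Job j can start on machine k only when machine k is free and
            # job j has left machine k-1 (no pre-emption, no set-up times).
            ready = completion[k - 1] if k > 0 else 0
            completion[k] = max(completion[k], ready) + p[j][k]
    return completion[-1]


# Toy instance (hypothetical): 3 jobs x 2 machines.
p = [[3, 2], [1, 4], [2, 1]]
all_orders = list(permutations(range(3)))  # n! = 6 candidate schedules
best = min(makespan(s, p) for s in all_orders)
optima = [s for s in all_orders if makespan(s, p) == best]
print(best, optima)  # 8 [(1, 0, 2), (1, 2, 0)]
```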

Since PFSSPs are commonly encountered in various industries, researchers have developed many methods to optimize their key performance indicators (KPIs). Liu and Liu [2] used a hybrid discrete artificial bee colony algorithm to minimize the makespan in PFSSP. They used the Greedy Randomized Adaptive Search Procedure (GRASP) to create the initial population and operators such as insert, swap, and path relinking to generate new solutions. The authors also improved the local search by further optimizing the best solution. When applied to PFSSP, their algorithm outperformed other algorithms such as the particle swarm optimization (PSO) algorithm and a hybrid genetic algorithm (HGA). Govindan et al. [3] combined decision tree (DT) and scatter search (SS) algorithms to solve PFSSP. The DT was first used to convert the problem into a tree structure using an entropy function, after which SS was used to search the solution space extensively. Simulation results and statistical tests showed the advantage of the proposed algorithm. Ancău [4] proposed two algorithms for solving PFSSP: (1) a constructive greedy heuristic (CG) and (2) a stochastic greedy heuristic (SG). CG was based on greedy selection, while SG was a modified version of CG with an iterative stochastic start. Both algorithms were validated on benchmark problems, and the results came within 6% of the best-known solutions. Zobolas et al. [5] created a hybrid algorithm by combining a greedy heuristic, a genetic algorithm (GA), and a variable neighborhood search (VNS) algorithm. The hybrid algorithm exploited the strengths of both GA and VNS and obtained the best-known makespan for the benchmark problems in a short computational time.
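Insert and swap, mentioned above for Liu and Liu [2], are the standard neighborhood moves on job permutations. The following Python sketch shows generic versions of both; it is not the authors' implementation, the function names are hypothetical, and path relinking is omitted.

```python
import random
from typing import List


def swap_move(perm: List[int], i: int, j: int) -> List[int]:
    """Copy of perm with the jobs at positions i and j exchanged."""
    new = list(perm)
    new[i], new[j] = new[j], new[i]
    return new


def insert_move(perm: List[int], i: int, j: int) -> List[int]:
    """Copy of perm with the job at position i removed and re-inserted at position j."""
    new = list(perm)
    job = new.pop(i)
    new.insert(j, job)
    return new


def random_neighbor(perm: List[int], rng: random.Random) -> List[int]:
    """Apply a randomly chosen swap or insert move at two distinct random positions."""
    i, j = rng.sample(range(len(perm)), 2)
    move = rng.choice([swap_move, insert_move])
    return move(perm, i, j)


print(swap_move([0, 1, 2, 3, 4], 1, 3))    # [0, 3, 2, 1, 4]
print(insert_move([0, 1, 2, 3, 4], 1, 3))  # [0, 2, 3, 1, 4]
```

Both moves return a new list and always yield a valid permutation, so they can be used directly as mutation operators or inside a local search.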

Zhang et al. [6] proposed an enhanced GA to solve the distributed assembly permutation flow-shop scheduling problem (DAPFSP). The authors used a greedy mating pool to select better candidates, modified the crossover strategy, and incorporated local search strategies to improve local convergence. Andrade et al. [7] minimized the total flowtime of PFSSP using a Biased Random-Key Genetic Algorithm (BRKGA). Their technique used a shaking strategy to perturb individuals from the elite population while resetting the remainder of the population. The authors validated their approach on 120 benchmark problems and compared the results with those obtained by Iterated Greedy Search and Iterated Local Search. The results showed that the proposed approach was more efficient and identified new bounds as well as optimal solutions for the problems. Ruiz et al. [8] used the iterated greedy (IG) algorithm to solve the distributed permutation flow-shop scheduling problem. The authors improved the initialization, destruction, and construction procedures, as well as the local search, to obtain better results. A major advantage of their technique was that it required very little problem-specific knowledge to implement. Pan et al. [9] proposed three constructive heuristics, namely DLR (inspired by the LR heuristic), DNEH (inspired by the NEH heuristic), and a hybrid DLR-DNEH heuristic, together with four metaheuristics, namely Discrete Artificial Bee Colony (DABC), Scatter Search (SS), Iterated Local Search (ILS), and IG, for minimizing the total flowtime of the distributed permutation flow-shop scheduling problem (DPFSP). Both the heuristics and the metaheuristics significantly improved upon the existing results in the literature. Their results also showed that the trajectory-based metaheuristics were considerably better than the population-based ones.
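DNEH builds on the classical NEH constructive heuristic, which sorts jobs by decreasing total processing time and inserts each one at the position that minimizes the partial makespan. The Python sketch below shows only that classical single-shop makespan version; it is not Pan et al.'s distributed DNEH, it omits Taillard's acceleration, and breaking ties by the first best position is an assumption.

```python
from typing import List, Sequence


def makespan(perm: Sequence[int], p: List[List[int]]) -> int:
    """Permutation flow-shop makespan; p[j][k] is the time of job j on machine k."""
    completion = [0] * len(p[0])
    for j in perm:
        for k in range(len(completion)):
            ready = completion[k - 1] if k > 0 else 0
            completion[k] = max(completion[k], ready) + p[j][k]
    return completion[-1]


def neh(p: List[List[int]]) -> List[int]:
    """Classical NEH constructive heuristic for makespan minimization."""
    # Step 1: order jobs by non-increasing total processing time (stable on ties).
    order = sorted(range(len(p)), key=lambda j: sum(p[j]), reverse=True)
    seq: List[int] = []
    for job in order:
        # Step 2: try the job in every position of the partial sequence and keep
        # the position with the smallest partial makespan (first one on ties).
        candidates = [seq[:pos] + [job] + seq[pos:] for pos in range(len(seq) + 1)]
        seq = min(candidates, key=lambda s: makespan(s, p))
    return seq


p = [[3, 2], [1, 4], [2, 1]]  # hypothetical 3-job, 2-machine instance
schedule = neh(p)
print(schedule, makespan(schedule, p))  # [1, 2, 0] 8
```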

Although much work has been done on solving PFSSP, current techniques provide only a single optimal schedule per execution. Furthermore, real-life operators and schedulers have preferences that cannot be modeled and added to the fitness function of an optimization algorithm. The single optimal schedule found by these techniques might satisfy the objective function in theory, but it might not accommodate these practical complexities. Although multimodal optimization provides multiple similar solutions to the same problem, it cannot handle complex situations such as machine breakdowns or supply-chain delays without changing the fitness function and constraints. However, having multiple similar solutions to the same problem gives operators the flexibility to handle emergencies using their experience while the underlying problem is being solved. Therefore, it is important to obtain multiple optimal schedules that satisfy the objective function, i.e., to perform multimodal optimization (MMO). Over the years, many techniques have been developed for MMO, such as niching techniques [10,11,12,13,14,15,16], differential evolution strategies [17,18,19,20,21,22,23,24], and GA-based approaches [25,26,27,28]. However, these techniques were only tested on benchmark mathematical problems. Furthermore, they aim to find both global and local optima, which increases their computational complexity. Additionally, local optima can have a much worse objective value than global optima, which is undesirable in scheduling problems.
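Clearing (Pétrowski's clearing procedure) is a classical niching technique of the kind cited above: within each niche, only the best individuals keep their fitness and the rest are penalized, so the population cannot collapse onto a single optimum. The Python sketch below is a generic version adapted to makespan minimization; it is not the paper's CM, which is described in Section 3, and the positional Hamming distance, niche radius, and capacity (winners kept per niche) are illustrative assumptions.

```python
import math
from typing import List, Sequence


def hamming(a: Sequence[int], b: Sequence[int]) -> int:
    """Number of positions at which two job sequences differ (assumed distance)."""
    return sum(x != y for x, y in zip(a, b))


def clearing(pop: List[List[int]], cost: List[float], radius: float,
             capacity: int = 1) -> List[float]:
    """Clearing for minimization: within each niche, only the `capacity` best
    individuals keep their cost; the others are cleared to +inf."""
    cleared = list(cost)
    order = sorted(range(len(pop)), key=lambda i: cost[i])  # best (lowest) first
    for rank, i in enumerate(order):
        if math.isinf(cleared[i]):
            continue  # already cleared by a better niche winner
        winners = 1  # i is the best remaining individual of its niche
        for j in order[rank + 1:]:
            if not math.isinf(cleared[j]) and hamming(pop[i], pop[j]) < radius:
                if winners < capacity:
                    winners += 1
                else:
                    cleared[j] = math.inf
    return cleared


pop = [[0, 1, 2, 3], [1, 0, 2, 3], [3, 2, 1, 0], [0, 1, 3, 2]]
print(clearing(pop, [10, 10, 12, 11], radius=3))  # [10, inf, 12, inf]
```

Note that the second individual is cleared even though its cost equals the best one, because it lies in the same niche; this is how clearing trades exploitation for diversity.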

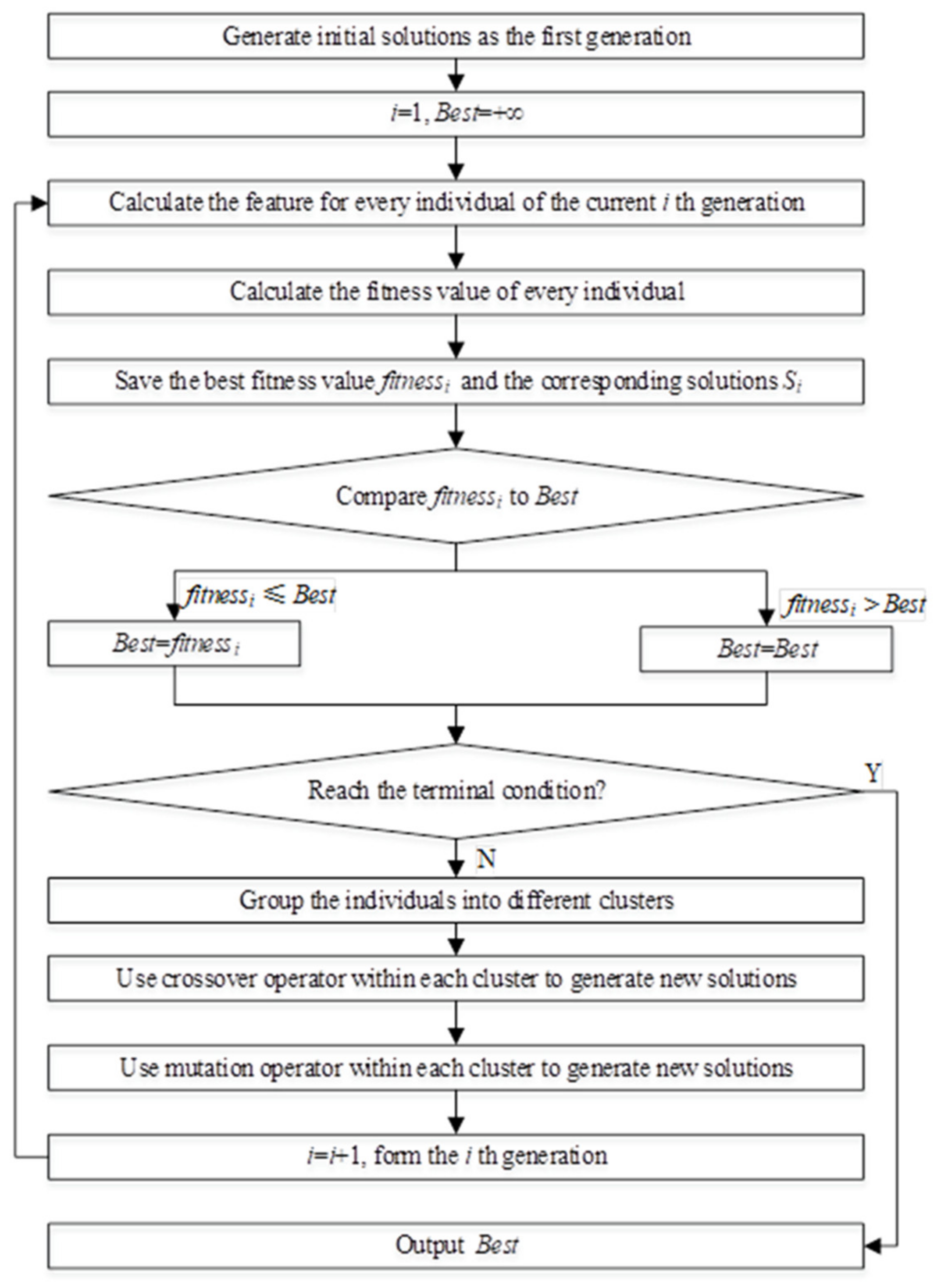

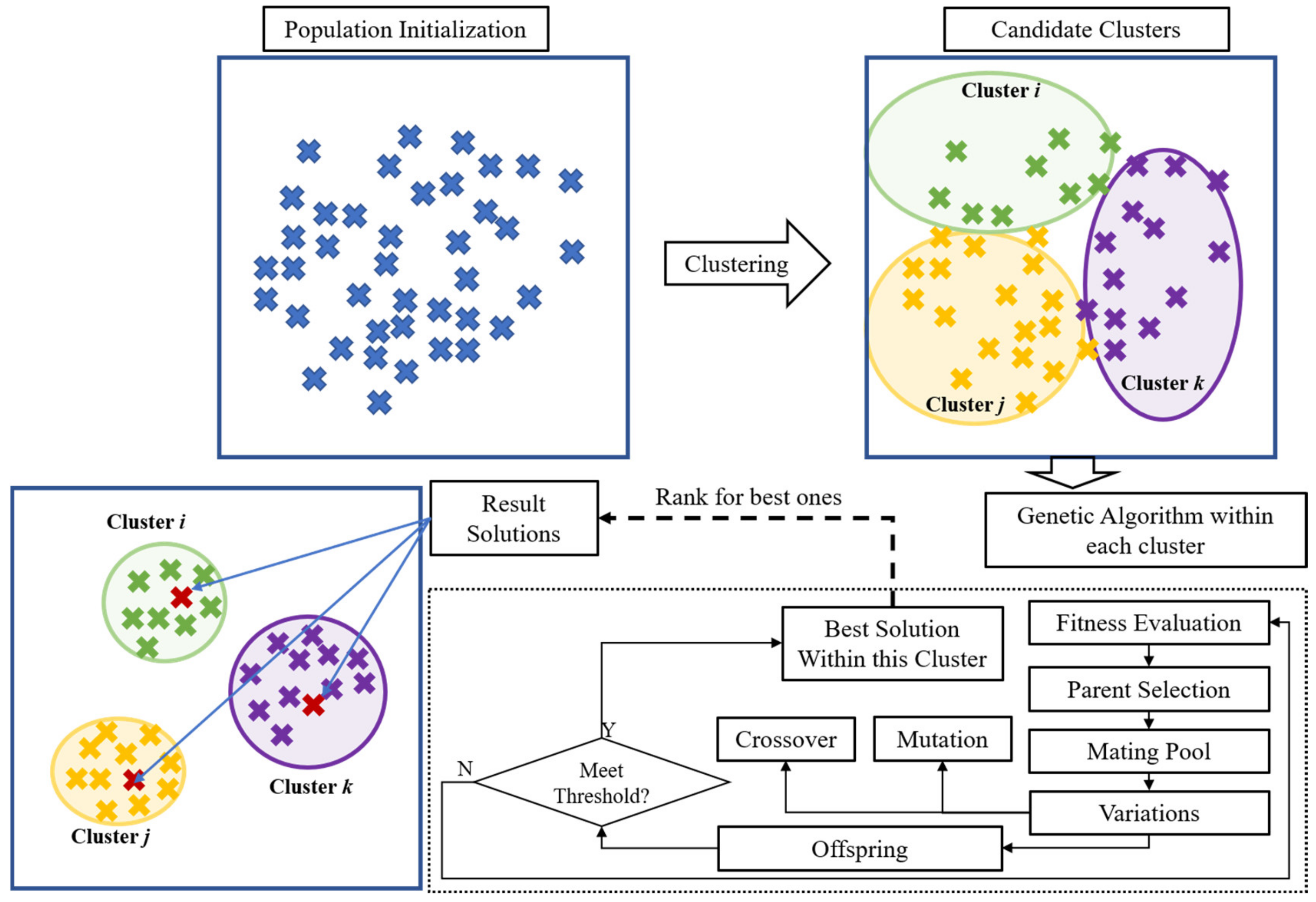

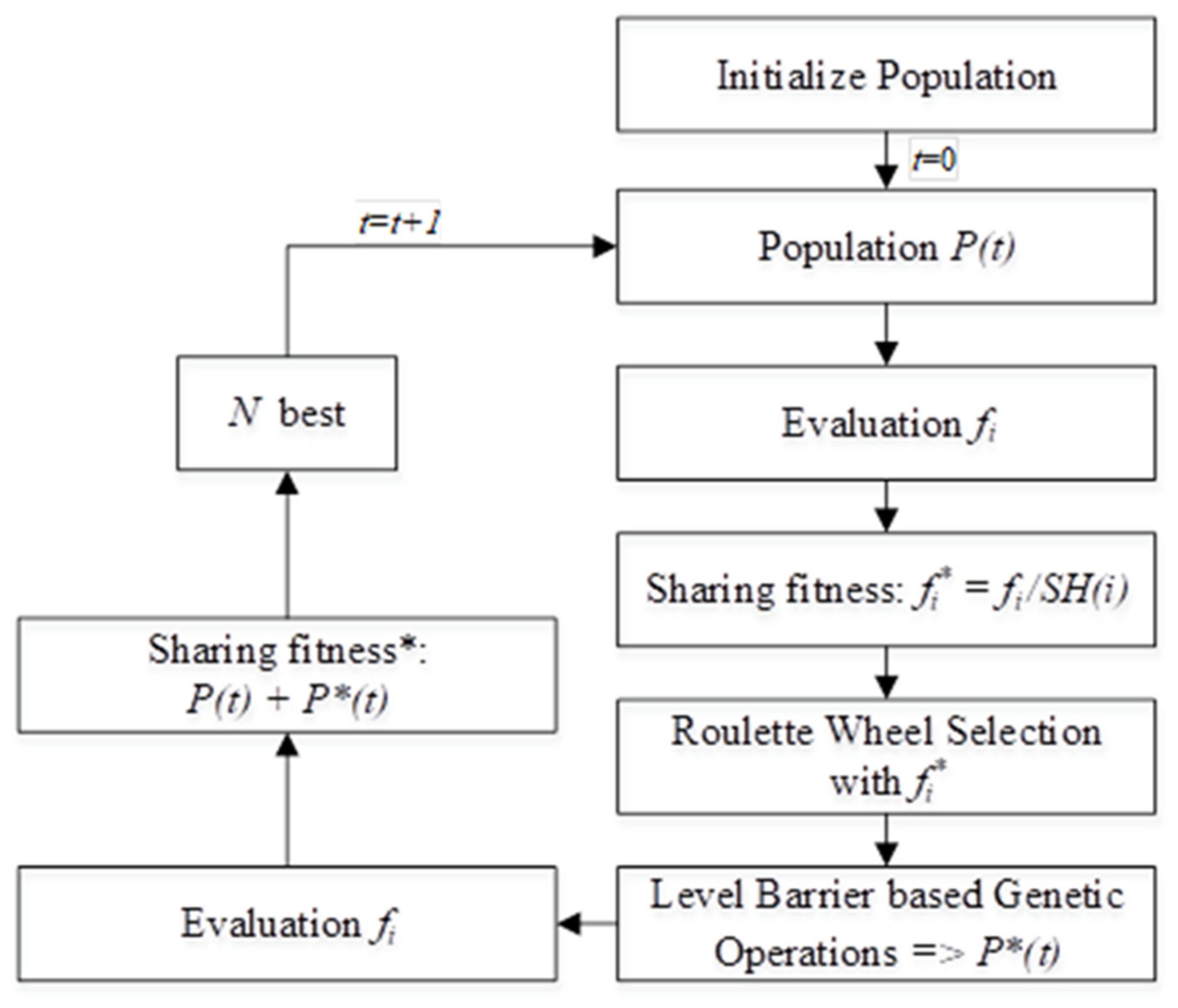

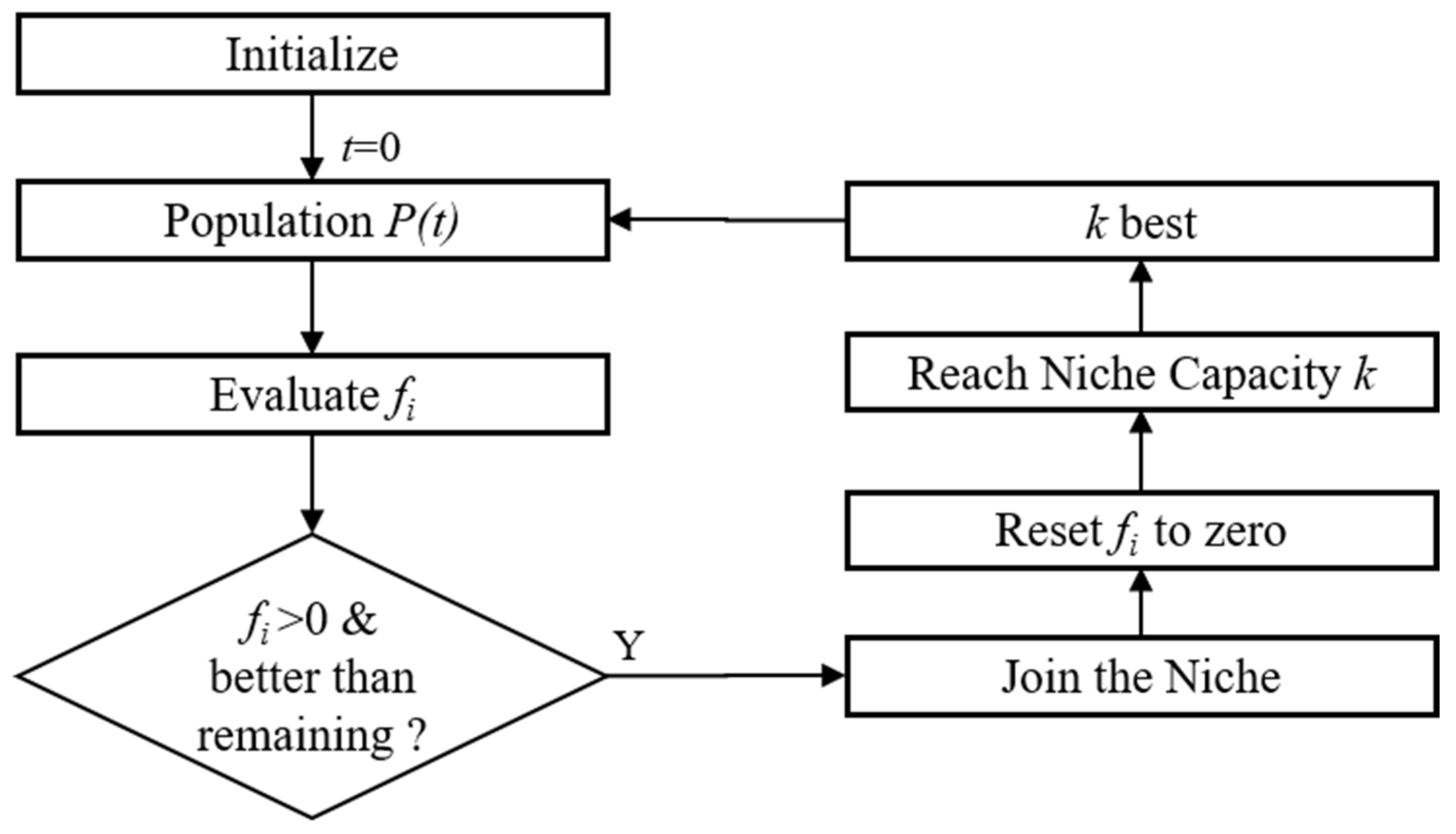

Therefore, in this study, a new algorithm is proposed for the MMO of PFSSP, which combines the k-means clustering algorithm with a GA. In each iteration of the proposed algorithm, the solutions are first grouped into clusters using k-means clustering. The GA operators are then applied within each cluster to generate the solutions of the next generation. Various techniques used by Perez et al. were also modified for the MMO of PFSSP, and the performance of all algorithms was tested on benchmark PFSSPs. The rest of the paper is organized as follows.
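One iteration of the cluster-then-mate idea described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the clustering features actually used are defined in Section 3, whereas here each schedule is represented by a hypothetical job-position vector; the crossover is a simplified order crossover chosen for illustration; and selection, mutation, and elitism are omitted.

```python
import random
from typing import List, Sequence

Perm = List[int]


def position_features(perm: Sequence[int]) -> List[float]:
    """Hypothetical feature vector: the position of each job in the sequence."""
    feat = [0.0] * len(perm)
    for pos, job in enumerate(perm):
        feat[job] = float(pos)
    return feat


def kmeans(points: List[List[float]], k: int, rng: random.Random, iters: int = 20) -> List[int]:
    """Plain Lloyd's k-means; returns a cluster label for each point."""
    centers = [list(c) for c in rng.sample(points, k)]
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: nearest center by squared Euclidean distance.
        labels = [
            min(range(k), key=lambda c: sum((a - b) ** 2 for a, b in zip(pt, centers[c])))
            for pt in points
        ]
        # Update step: move each non-empty cluster's center to the mean of its members.
        for c in range(k):
            members = [pt for pt, lab in zip(points, labels) if lab == c]
            if members:
                centers[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels


def order_crossover(p1: Perm, p2: Perm, rng: random.Random) -> Perm:
    """Simplified order crossover: copy a slice of p1, fill the rest in p2's order."""
    n = len(p1)
    i, j = sorted(rng.sample(range(n), 2))
    child: List[int] = [-1] * n  # -1 marks an unfilled position
    child[i:j + 1] = p1[i:j + 1]
    kept = set(p1[i:j + 1])
    fill = iter(g for g in p2 if g not in kept)
    return [g if g != -1 else next(fill) for g in child]


def next_generation(pop: List[Perm], k: int, rng: random.Random) -> List[Perm]:
    """Cluster the population, then mate parents only within the same cluster."""
    labels = kmeans([position_features(s) for s in pop], k, rng)
    offspring: List[Perm] = []
    for c in range(k):
        members = [s for s, lab in zip(pop, labels) if lab == c]
        for _ in range(len(members)):
            if len(members) >= 2:
                a, b = rng.sample(members, 2)
                offspring.append(order_crossover(a, b, rng))
            else:
                offspring.append(list(members[0]))  # singleton cluster: copy the parent
    return offspring


rng = random.Random(0)
population = [rng.sample(range(6), 6) for _ in range(10)]
offspring = next_generation(population, k=3, rng=rng)
```

Restricting crossover to cluster members keeps offspring close to their parents' region of the search space, which is what allows different clusters to converge to different optima.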

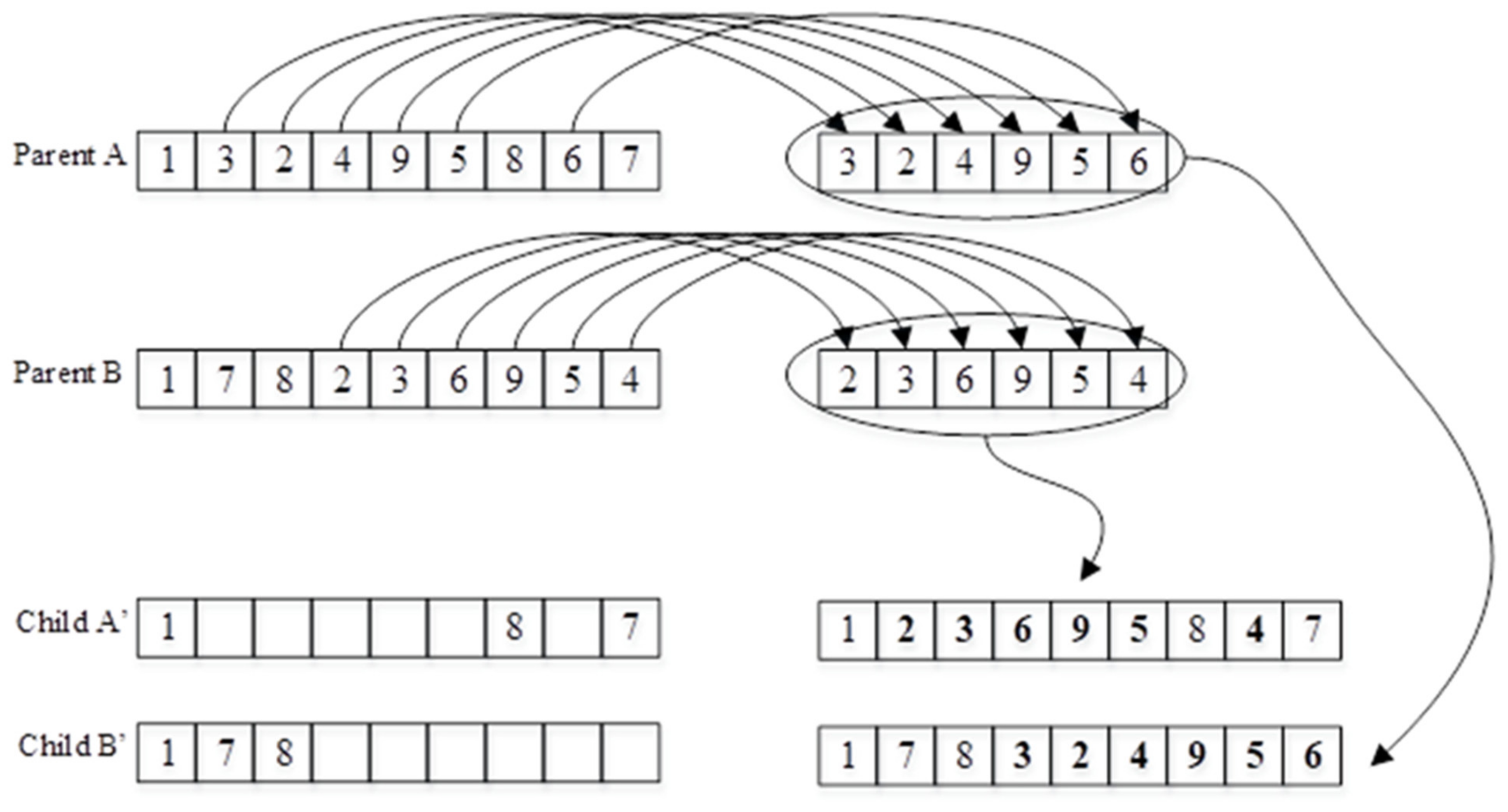

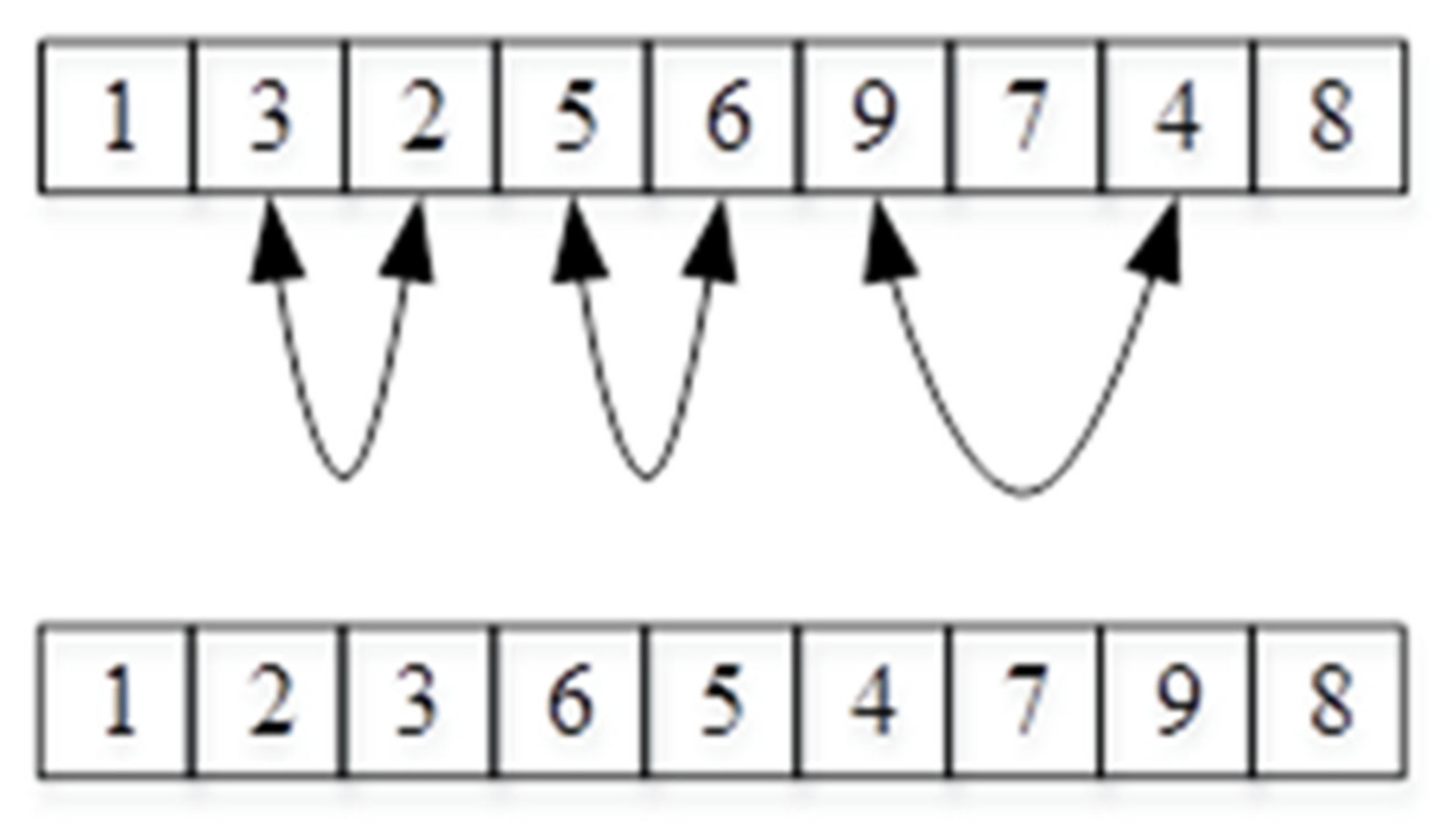

Section 2 presents information about the modified GA operators used in the proposed algorithm to represent a solution and create new solutions during the iterations of the algorithm.

Section 3 provides detailed information about the proposed algorithm, the clearing method (CM), and the sharing fitness methods used for the MMO of PFSSP, as well as the features used to cluster the solutions.

Section 4 presents the benchmark problems used to test the proposed algorithm and the results obtained with the various algorithms.

Section 5 draws conclusions based on the results obtained from the case studies and outlines future directions for the proposed technique.

4. Experimental Study and Performance Benchmarking

To measure the performance of the algorithms outlined above, they were applied to the MMO of multiple PFSSPs. Eighteen benchmark problems from various datasets were used in the experiments. The first eight problems were proposed by Carlier [35], the next nine by Reeves [36], and the last one by Heller [37]. To better assess the algorithms, 10 independent simulations were performed for each test problem. The performance of the algorithms was then measured using four indicators: (1) the best solution found in the 10 simulations; (2) the mean value and standard deviation of the optimal solution found over the 10 simulations; (3) the number of times the algorithm converged to the best optimal solution; and (4) the average number of best optimal solutions found over the 10 simulations. For indicator 4, only simulations in which the algorithm converged to the best solution from indicator 1 were taken into consideration, i.e., if the algorithm converged to the best solution in only 4 of the 10 simulations, the average number of optimal solutions found was calculated from those 4 simulations. The parameters used for the three algorithms were determined through numerous preliminary simulations and are given in Table 1. Additionally, the performance of the three algorithms was compared, where applicable, with that of the Improved Efficient Genetic Algorithm (IEGA) and the Hybrid IEGA (HIEGA) proposed by Basset et al. [38]. These algorithms were selected because they showed promising results on benchmark PFSSPs.
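The four indicators can be computed from per-run results as in the Python sketch below. It assumes that each run reports its best makespan together with the number of distinct schedules that achieve it; the sample standard deviation is an assumption (the text does not say whether the sample or population form was used); and the ten runs are hypothetical, not results from the paper. As in the text, indicator 4 averages only over the runs that reached the reference makespan.

```python
import statistics
from typing import Dict, List, Optional, Tuple


def indicators(runs: List[Tuple[int, int]], reference: Optional[int] = None) -> Dict[str, float]:
    """Four indicators from independent runs.

    runs      -- one (best makespan, number of distinct schedules with that makespan) per run
    reference -- makespan counted as "converged"; defaults to the best value over all runs
    """
    makespans = [m for m, _ in runs]
    best = min(makespans)
    ref = best if reference is None else reference
    # Distinct-optimum counts of the runs that reached the reference makespan.
    hits = [count for m, count in runs if m == ref]
    return {
        "best": best,                                  # indicator (1)
        "mean": statistics.mean(makespans),            # indicator (2)
        "std": statistics.stdev(makespans),            # indicator (2), sample std (assumption)
        "NC": len(hits),                               # indicator (3)
        "NO": statistics.mean(hits) if hits else 0.0,  # indicator (4)
    }


# Ten hypothetical runs (not results from the paper).
runs = [(100, 3), (100, 1), (102, 2), (105, 1), (102, 1),
        (101, 4), (105, 2), (102, 1), (101, 1), (103, 2)]
print(indicators(runs))
```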

4.1. The Best Solution Found

As mentioned above, 10 independent simulations were run for each test problem using the three algorithms. The first comparison considered the best solution obtained during the 10 simulations. The results were also compared with the best-known solution and with the best solutions obtained by other algorithms in the literature. These results are shown in Table 2.

According to Table 2, the proposed algorithm, sharing fitness, and HIEGA found the best-known solutions for all Carlier benchmark problems. IEGA converged to the best-known solution for all Carlier problems except Car-03, while CM found the best-known solution for Car-01, 05, 06, 07, and 08. For the Reeves problems, HIEGA performed best, converging to the best-known solution for 6 of the 9 problems. The proposed algorithm obtained the best-known solution for Rec-07, Rec-09, and Rec-11, found a better solution than HIEGA for Rec-17, and converged to within 1% of the best-known solution for the remaining problems. Sharing fitness, IEGA, and CM did not converge to any of the best-known solutions for the Reeves problems, deviating from them by between 4% and 15%. Similarly, only HIEGA converged to the best-known solution of the Heller problem. However, the proposed algorithm came within 0.7% of the best-known solution, whereas sharing fitness, IEGA, and CM did not.
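Gaps such as "within 1%" or "within 0.7%" of the best-known solution are naturally read as a relative percentage deviation, RPD = 100 (C - C*) / C*, where C is the makespan found and C* is the best-known makespan. The text does not state this formula explicitly, so treat it as an assumption. The Python example uses the Rec-01 values reported in Section 4.3 (best-known 1247, best found 1249).

```python
def rpd(found: float, best_known: float) -> float:
    """Relative percentage deviation of a makespan from the best-known makespan."""
    return 100.0 * (found - best_known) / best_known


gap = rpd(1249, 1247)
print(f"{gap:.2f}%")  # 0.16%
print(gap <= 1.0)     # True: within 1% of the best-known solution
```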

4.2. Mean Value and Standard Deviation of the Optimal Solution

Next, to evaluate the stability of the algorithms, the mean value and standard deviation of the optimal solution found in the 10 simulations were calculated. Since the simulations were independent, the starting points were randomized in each one. These results are shown in Table 3.

Even though HIEGA reached the best-known solution more often than the proposed algorithm, the mean values and standard deviations show that the proposed algorithm converged more consistently to better solutions than HIEGA. For the Reeves problems, the proposed algorithm performed better than HIEGA: for 7 of the 9 problems, its average optimal value was much closer to the best-known solution than those of HIEGA, IEGA, sharing fitness, and CM. The standard deviation ranged from 0.00 to 16.54 for the proposed algorithm, compared with 4.64 to 35.10 for HIEGA, 11.74 to 29.53 for IEGA, 15.61 to 39.63 for sharing fitness, and 20.34 to 30.15 for CM. The proposed algorithm had the best results for all Carlier problems, as it always converged to the best-known solution. HIEGA had a 100% convergence rate for 4 of the 8 problems, sharing fitness had a 100% convergence rate to the best-known solutions of problems 1, 2, and 8, and CM and IEGA each had a 100% convergence rate for 2 of the 8 problems. Lastly, for the Heller problem, the proposed algorithm consistently converged to within 0.7% of the best-known solution, although HIEGA achieved a better average. These results show the robustness of the proposed algorithm, i.e., its ability to consistently converge to a better optimal solution.

4.3. Number of Times the Algorithm Converged to the Optimal Solution

To check whether the algorithms could find the best solution consistently, the number of simulations in which each algorithm converged to the best-known solution (NC) was also recorded. Since none of the algorithms converged to most of the best-known solutions for the Reeves problems, the best optimal solution found by the algorithms was used instead. For example, the best-known solution for Rec-01 is 1247, while the best optimal solution found by the algorithms was 1249; therefore, 1249 was used to calculate NC. The NC results are given in Table 4. A complete analysis of HIEGA and IEGA was not possible for this metric, as the authors did not report these results (indicated by 'NA' in Table 4). However, some inferences can be drawn from the reported results. For example, it can be assumed that both HIEGA and IEGA always converged to the best-known solution for Car-01 and Car-02, as their mean values equaled the best-known solution and their standard deviations were zero.

Since CM never reached the best optimal solution for any of the Reeves problems (its optimal solutions were the worst of the three algorithms), its NC values were 0. Similarly, sharing fitness did not find the best optimal solution for any problem except problem 1, so its NC for the other problems was 0. The proposed algorithm performed best of all the algorithms for which results were available. It had an NC of 10 for problems 7 and 9, 8 for problem 3, 4 for problem 11, 2 for problems 1 and 15, and 1 for problems 5, 13, and 17.

The proposed algorithm had an NC of 10 for all Carlier problems, i.e., a 100% convergence rate to the best-known solution. Sharing fitness also had an NC of 10 for problems 1, 2, and 8, and NC values of 4, 9, 3, 3, and 8 for the remaining problems. CM performed worst, as it did not converge to the best-known solution for problems 2, 3, and 4; it had an NC of 2, 6, and 9 for problems 1, 5, and 6, respectively, and an NC of 10 for problems 7 and 8. Lastly, for the Heller problem, the proposed algorithm had an NC of 10, while the other two algorithms had an NC of 0. These results further support the conclusion that the proposed algorithm performed best of the three algorithms, as it consistently converged to the best optimal solution.

4.4. Average Number of Best Optimal Solutions Found

Lastly, the average number of best optimal solutions found (NO) for each test problem was compared across the algorithms. For this indicator, only simulations that converged to the best optimal solution were used. For example, since the proposed algorithm had an NC of two for Rec-01, its NO was calculated from the results of those two simulations. The NO values for the test problems are given in Table 5. Since HIEGA and IEGA aim to find only a single optimal solution, they were excluded from this metric.

As with the previous three indicators, the proposed algorithm performed considerably better than sharing fitness and CM. Although sharing fitness found 18 solutions for Rec-01, it did not find multiple best optimal solutions for the rest of the Reeves problems. The proposed algorithm found between 2 and 6.8 best optimal solutions on average for all Reeves problems. CM did not find multiple best optimal solutions for any of the Reeves problems, as it never converged to the best optimal solution.

Although both CM and sharing fitness found more optimal solutions than the proposed algorithm for Car-01, they performed worse for problems 2, 3, 4, and 5. The proposed algorithm found 30.40, 6.20, 13.50, and 1.90 different best-known solutions on average for these problems, while sharing fitness obtained only 9.89, 1.25, 8.67, and 1.00, and CM obtained 0.00 for all four. All algorithms found only one distinct optimal solution (1.00 on average) for problems 6–8, suggesting that the global optimum of these problems is unique. Lastly, for the Heller problem, the proposed algorithm again performed best, obtaining 6.30 different optimal solutions on average, while sharing fitness and CM both obtained 0.00. These results indicate that the proposed algorithm was able to consistently converge to the best optimal solution for all test problems while finding multiple best optimal solutions.

An attempt was also made to combine the proposed algorithm with sharing fitness to take advantage of both, i.e., the ability of the proposed algorithm to consistently converge to the best optimal solution and the ability of sharing fitness to find multiple best optimal solutions. This was done by calculating the distance used in the sharing fitness method from the feature matrix of the proposed method. This distance can be calculated using Equation (5).

where Fi is the feature matrix of individual i and Fj is the feature matrix of individual j. For the hybrid algorithm, the value of

was changed to 10. The performance of the hybrid algorithm was then compared with those of sharing fitness and the proposed algorithm, and the results for the four indicators are given in Table 6, Table 7, Table 8, and Table 9.
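The hybrid's idea of feeding a feature-matrix distance into fitness sharing can be sketched in Python as below. Equation (5) is not reproduced in this text, so the Frobenius-norm distance ||Fi - Fj|| used here is an assumption, as are the job-by-position feature matrix, the raw fitness 1/makespan, and the triangular sharing function sh(d) = 1 - (d/sigma)^alpha for d < sigma (0 otherwise). The parameter values in the example are hypothetical.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def permutation_matrix(perm: Sequence[int]) -> Matrix:
    """Hypothetical feature matrix: F[job][pos] = 1 if the job is at that position."""
    n = len(perm)
    F = [[0.0] * n for _ in range(n)]
    for pos, job in enumerate(perm):
        F[job][pos] = 1.0
    return F


def frobenius_distance(Fi: Matrix, Fj: Matrix) -> float:
    """Assumed form of the feature-matrix distance: ||Fi - Fj|| (Frobenius norm)."""
    return math.sqrt(sum((a - b) ** 2 for ri, rj in zip(Fi, Fj) for a, b in zip(ri, rj)))


def shared_fitness(pop: List[List[int]], makespans: List[float],
                   sigma: float, alpha: float = 1.0) -> List[float]:
    """Classical fitness sharing with raw fitness 1/makespan (to be maximized)."""
    feats = [permutation_matrix(s) for s in pop]
    shared = []
    for i, Fi in enumerate(feats):
        # Niche count: sum of sh(d) over the population; includes i itself (d = 0).
        niche = 0.0
        for Fj in feats:
            d = frobenius_distance(Fi, Fj)
            if d < sigma:
                niche += 1.0 - (d / sigma) ** alpha
        shared.append((1.0 / makespans[i]) / niche)
    return shared


pop = [[0, 1, 2], [0, 1, 2], [2, 1, 0]]
print(shared_fitness(pop, [10, 10, 10], sigma=1.0))  # [0.05, 0.05, 0.1]
```

Duplicated schedules split their fitness, so a crowded niche is penalized relative to an isolated schedule with the same makespan.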

As the results show, the new hybrid algorithm achieved the same best optimal makespan for the Carlier problems but not for the Reeves and Heller problems. In terms of the mean value and standard deviation of the optimal solution found, the hybrid algorithm performed similarly to the proposed algorithm for Carlier problems 1, 4, and 8, but had a higher mean value and standard deviation for the rest of the benchmark problems. In terms of NC, the hybrid algorithm converged to the best optimal solution in all 10 simulations for Carlier problems 1, 4, and 8, but not for the remaining problems. In terms of NO, the hybrid algorithm did not obtain more optimal solutions for any benchmark problem. These results indicate that the hybrid algorithm did not outperform sharing fitness or the proposed algorithm. A likely reason why the proposed algorithm outperformed sharing fitness, CM, and the hybrid algorithm is that it uses a feature vector to separate the individuals into clusters, rather than relying on a single distance value.

In this section, the performance of the three algorithms was measured on 18 benchmark PFSSPs using four indicators: (1) the best optimal solution found, (2) the mean value and standard deviation of the optimal solution found in 10 simulations, (3) the number of simulations in which the algorithm converged to the best optimal solution, and (4) the average number of best optimal solutions found. The results indicated that the proposed algorithm performed better than sharing fitness and CM, and also better than a hybrid algorithm created by combining the proposed algorithm with sharing fitness.

5. Conclusions

In this study, a new algorithm was proposed for the MMO of PFSSP, i.e., for providing multiple solutions with the same objective value. The proposed algorithm uses a GA for the optimization procedure, with the k-means clustering algorithm used to cluster the individuals of each generation. Unlike a standard GA, in which all individuals belong to the same cluster, the proposed approach splits the individuals into multiple clusters and restricts the crossover operator to individuals belonging to the same cluster. This enables the proposed algorithm to search for global optima within each cluster and hence to find multiple global optima. The sharing fitness method and CM used by Perez et al. for the MMO of the job-shop scheduling problem (JSSP) were also modified and applied to the MMO of PFSSP. The performance of the three algorithms was tested on various benchmark PFSSPs, and the results indicated that the proposed algorithm performed better than CM and sharing fitness in terms of its ability to find the best-known optimal solution, to consistently provide a better optimal solution, and to converge to multiple optimal solutions.

As the sharing fitness method performed better on a few problems in terms of the number of optimal solutions found, an attempt was made to combine it with the proposed algorithm by using the feature matrix of the proposed algorithm in the sharing fitness method. When applied to the MMO of benchmark PFSSPs, the new hybrid algorithm neither outperformed nor matched the proposed algorithm.

Though the proposed algorithm had the best performance of the algorithms tested, further research needs to be performed in the following areas:

Introduction of more rigorous similarity metrics for more efficient clustering.

Comparison of the performance of the proposed approach with algorithms specifically developed for the optimization of PFSSP.

Embedding the proposed approach in algorithms specifically developed for the optimization of PFSSP and using it to achieve the MMO of PFSSP.

Applying the proposed approach to a real-life operational environment, testing the robustness of the proposed algorithm and its parameters, and measuring its performance under different criteria.