VAGADRONE: Intelligent and Fully Automatic Drone Based on Raspberry Pi and Android

Abstract

1. Introduction

- Their reduced size and maneuverability allow them to fly in closed or open environments and close to obstacles, unlike conventional helicopters.

- They can perform vertical take-off and landing.

- This configuration is controlled solely by varying the speeds of the four motors.
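As a rough illustration of how varying only the four motor speeds can produce attitude control, the sketch below mixes a collective throttle with roll, pitch, and yaw demands for an X-configuration quadrotor. The motor ordering, the sign conventions, and the `mix` function itself are assumptions made for illustration; they are not taken from the VAGADRONE firmware.

```python
def clamp(value, low=0.0, high=1.0):
    """Limit a motor command to the normalized range [low, high]."""
    return max(low, min(high, value))


def mix(throttle, roll, pitch, yaw):
    """Return normalized speeds for (front_left, front_right, rear_left, rear_right).

    Assumed conventions (hypothetical):
      - positive roll speeds up the left motors and slows the right ones,
      - positive pitch speeds up the front motors and slows the rear ones,
      - diagonal motor pairs spin in the same direction, so yaw is produced
        by speeding up one diagonal pair and slowing the other.
    """
    front_left = throttle + roll + pitch - yaw
    front_right = throttle - roll + pitch + yaw
    rear_left = throttle + roll - pitch + yaw
    rear_right = throttle - roll - pitch - yaw
    return tuple(clamp(m) for m in (front_left, front_right, rear_left, rear_right))


if __name__ == "__main__":
    print(mix(0.5, 0.0, 0.0, 0.0))  # hover: all four motors equal
    print(mix(0.5, 0.1, 0.0, 0.0))  # roll demand: left pair faster than right pair
```

As long as no motor saturates, the four outputs still sum to four times the throttle, so an attitude correction does not change the total commanded speed.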

2. Related Works

3. VAGADRONE

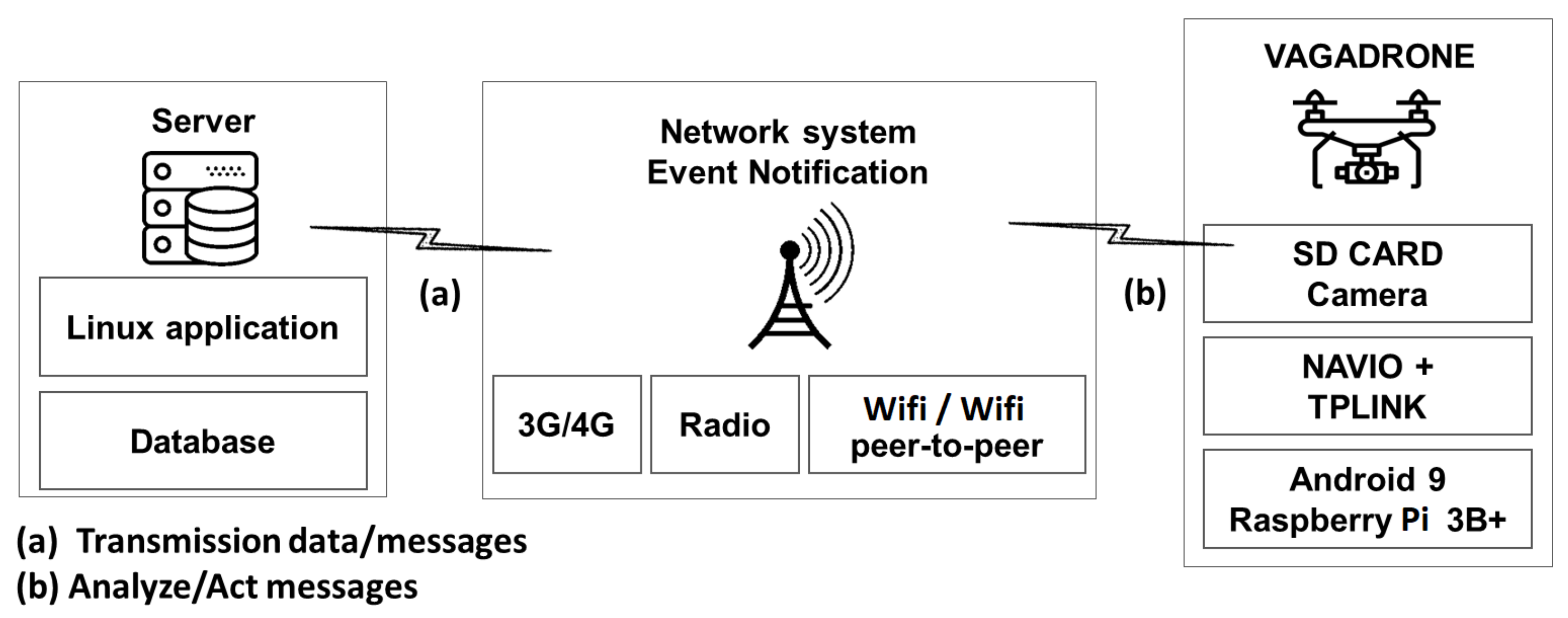

3.1. VAGADRONE: Architecture

3.1.1. Global Architecture

- VAGADRONE: This is the main component of the solution. It is an intelligent, autonomous UAV built on a single Raspberry Pi 3B board running Android 9, which provides autonomous navigation, live streaming, and related functions. This component processes the messages sent by the server, which carry several types of data (image, command, and text).

- Network system/event notification: This component ensures secure communication between the UAV and the server over three standards (radio, WiFi, 3G/4G). It also standardizes the communication between the Android UAV and the Linux server. The standardization is based on a state machine that synchronizes the requests sent by the server with the notifications sent back by the UAV.

- Server: It monitors and controls the UAV. It allows images to be sent to the UAV in order to launch a search session for individuals and objects and records the UAV’s navigation history.
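The state machine mentioned in the list above is not specified in this section. As a minimal sketch of the general idea, the code below pairs each server request with a UAV notification through a transition table. The state names, event names, and transitions are all hypothetical and chosen only for illustration.

```python
from enum import Enum, auto


class State(Enum):
    # Hypothetical states; the real protocol states are not given here.
    IDLE = auto()
    WAITING_ACK = auto()
    EXECUTING = auto()


# Hypothetical transition table: (current state, event) -> next state.
TRANSITIONS = {
    (State.IDLE, "request_sent"): State.WAITING_ACK,
    (State.WAITING_ACK, "ack_received"): State.EXECUTING,
    (State.WAITING_ACK, "timeout"): State.IDLE,
    (State.EXECUTING, "done_notified"): State.IDLE,
}


def step(state, event):
    """Return the next state, rejecting events that are invalid in this state."""
    try:
        return TRANSITIONS[(state, event)]
    except KeyError:
        raise ValueError(f"event {event!r} not allowed in state {state.name}") from None


if __name__ == "__main__":
    state = State.IDLE
    for event in ("request_sent", "ack_received", "done_notified"):
        state = step(state, event)
        print(event, "->", state.name)
```

Rejecting out-of-order events is what keeps the two sides synchronized: a new request cannot be issued until the previous one has been acknowledged and completed, or has timed out.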

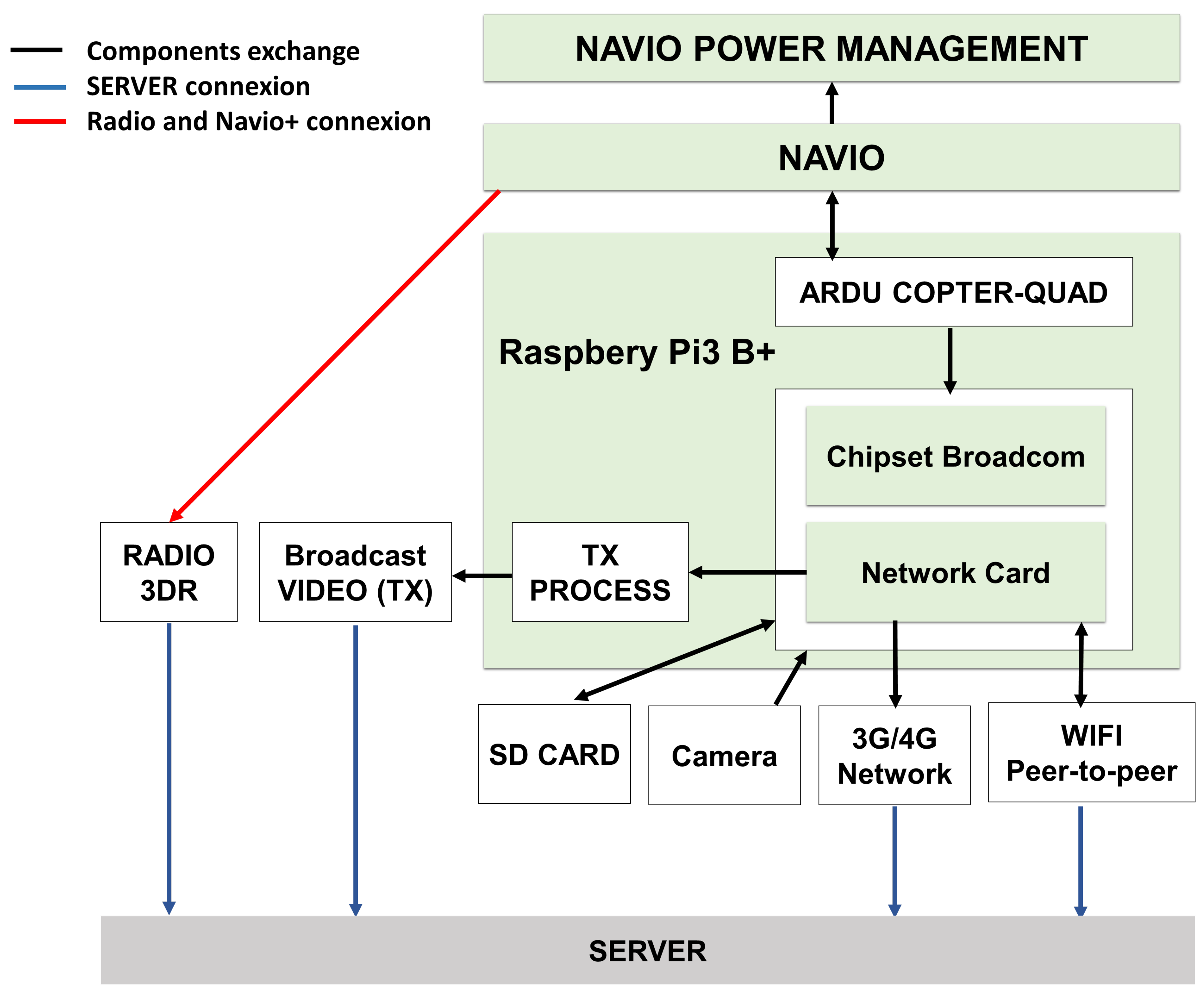

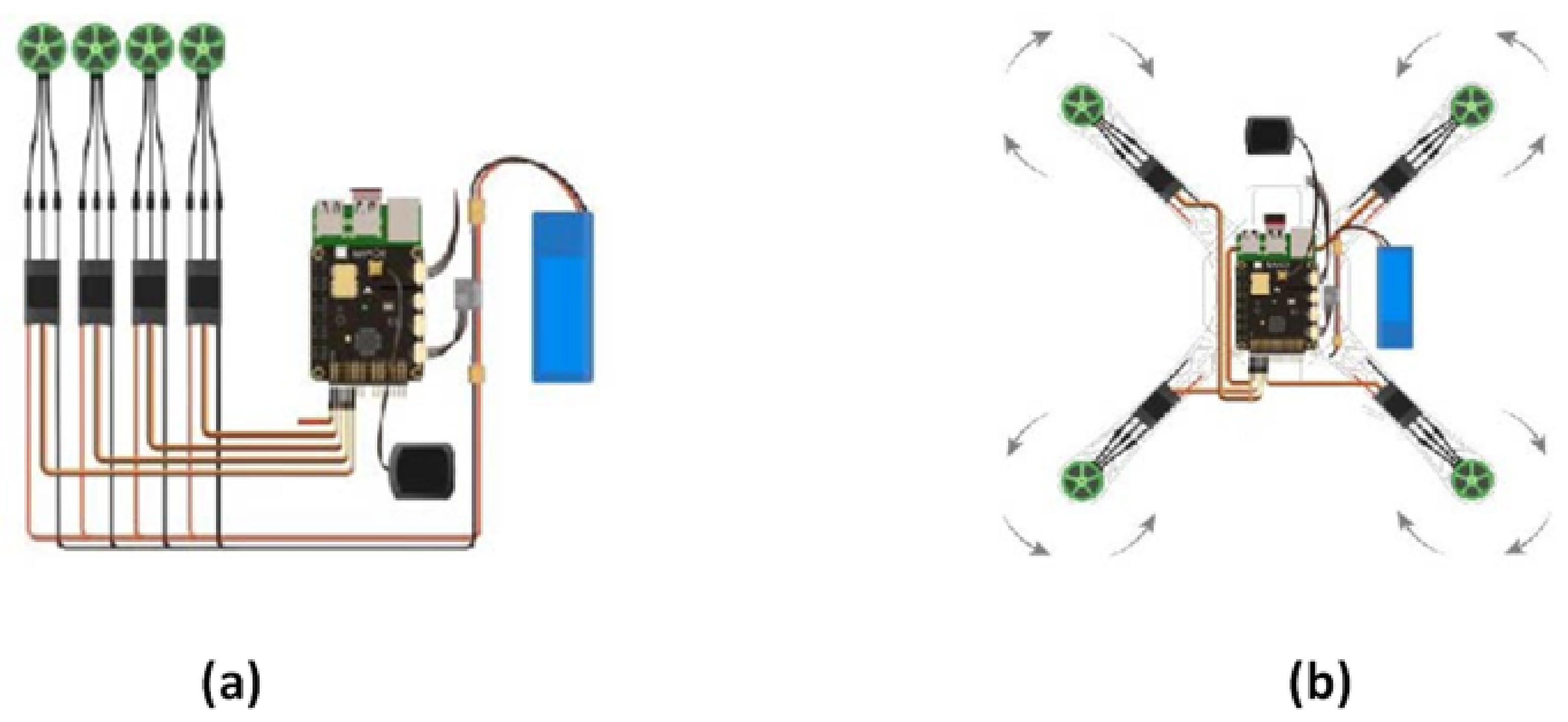

3.1.2. Hardware Architecture

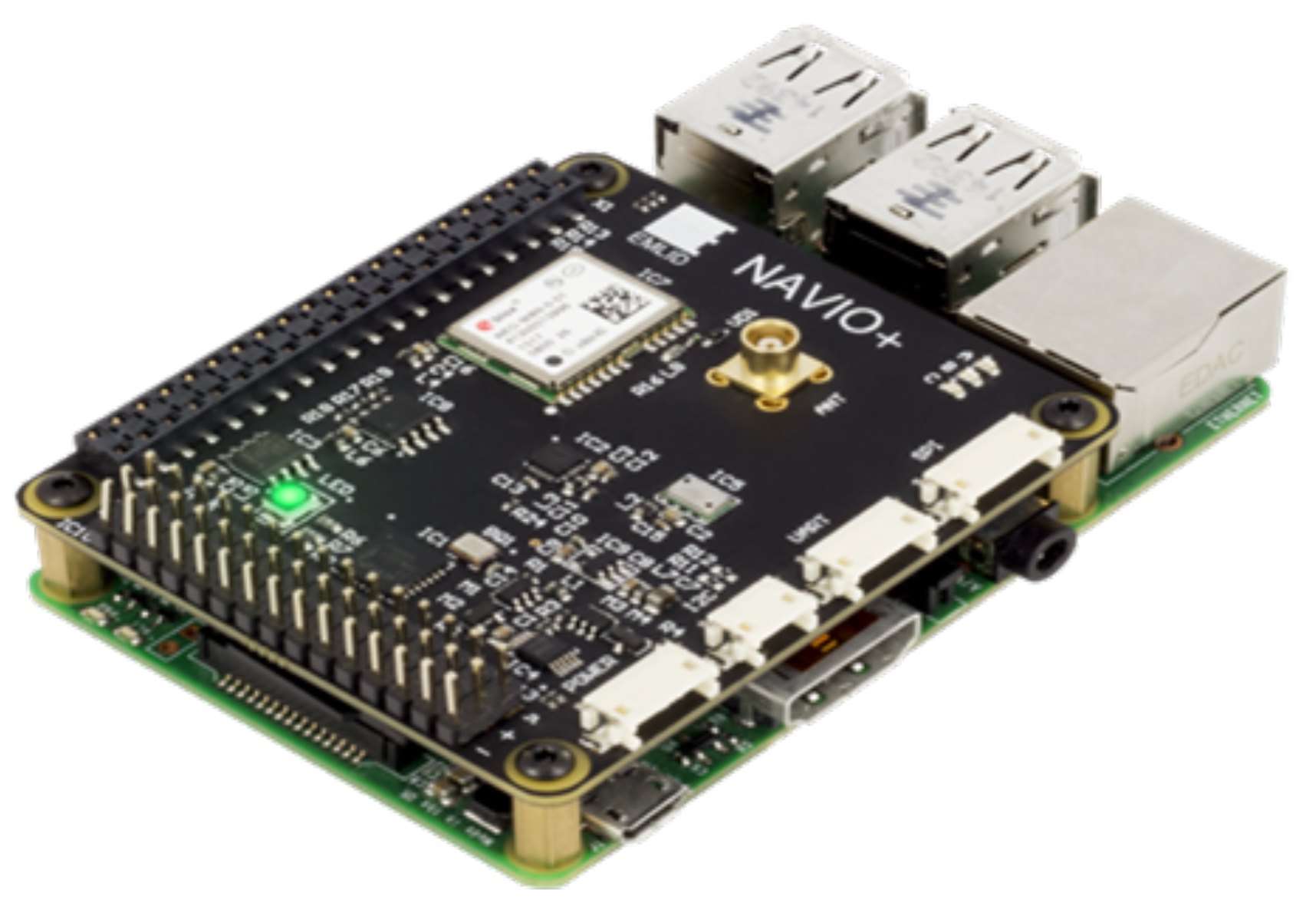

- NAVIO+: Linux autopilot for the Raspberry Pi 3B+. It provides the autonomous navigation of the UAV via radio communication technology.

- ArduCopter-quad: This is the flight controller software that operates multirotor unmanned aircraft and traditional radio-controlled helicopters.

- Smart card reader (4G Shield): This is the module that provides a 4G connection and offers ultra-fast Internet connectivity for live streaming.

- Camera: This is the CSI interface camera that allows video streams to be recorded on the SD card built into the Raspberry Pi 3B+.

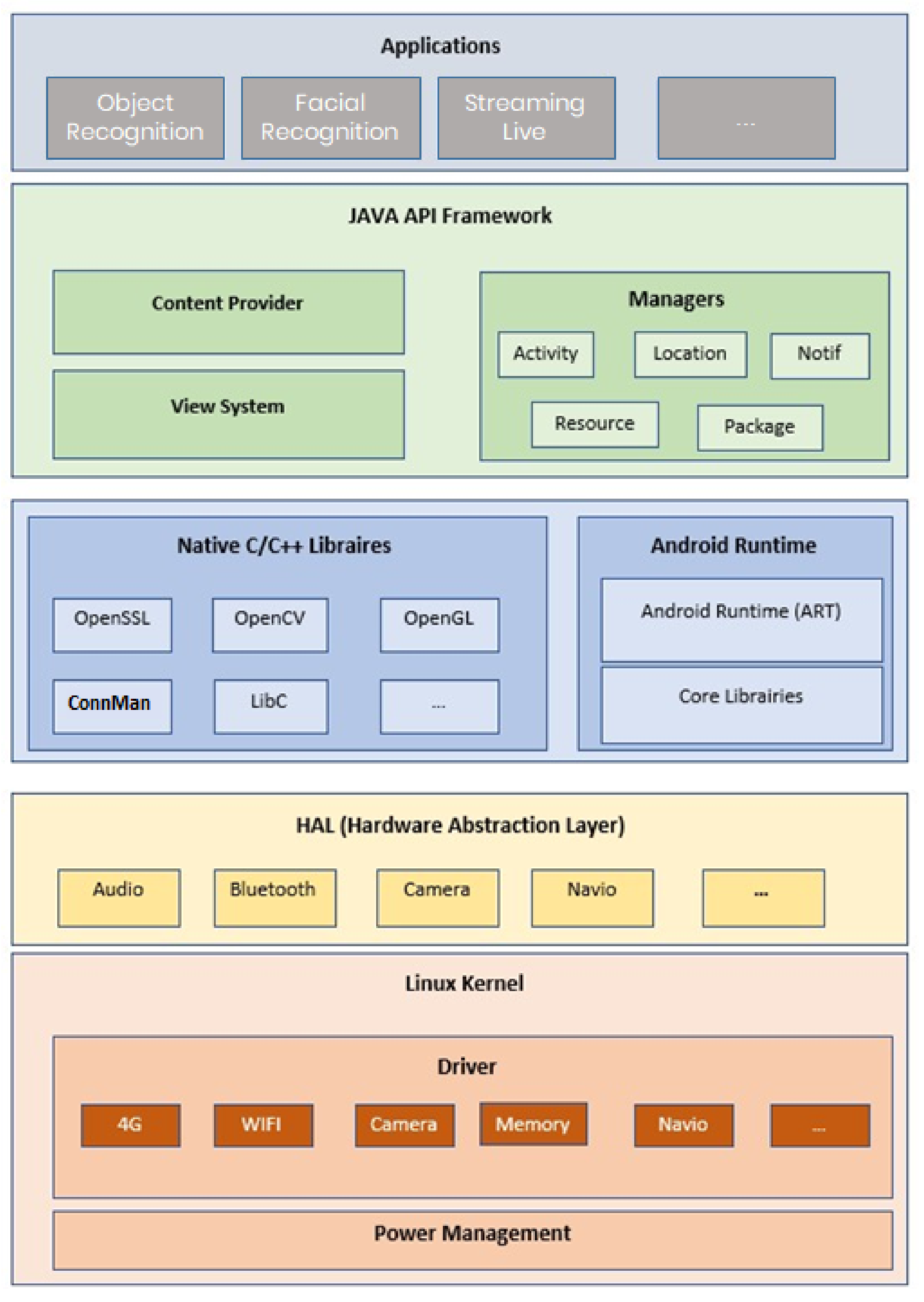

3.1.3. Software Architecture

- The application layer acts as an interface between the UAV and the various functionalities required by the server.

- The second layer, the Java API Framework, enables the acquisition and processing of the functionalities and access to programs. It is the application execution layer.

- The third layer, Android Runtime, represents the OS execution environment.

- The fourth layer is the abstraction layer between the kernel environment and the application environment.

- Finally, the fifth layer, the driver layer, contains the drivers and the Linux kernel.

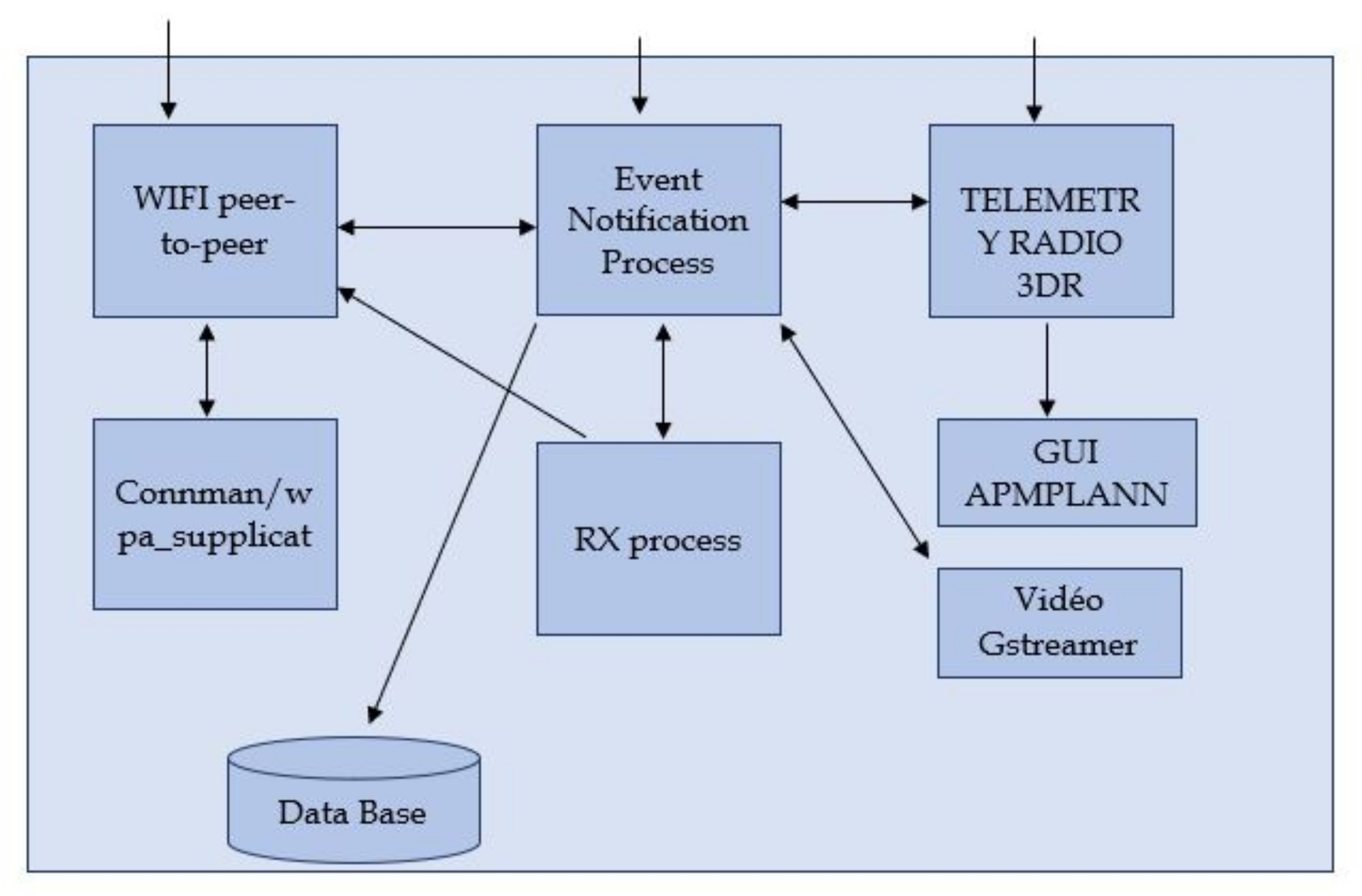

3.1.4. Server

- WiFi peer-to-peer: This is the network card installed in the Ubuntu Linux server. It is certified for 802.11 a/g/n and supports high-speed communication between the server and the Raspberry Pi 3B+ board.

- ConnMan/WPA supplicant: This is the network manager and the application to open a peer-to-peer connection between two devices.

- Event notification process: This is a state machine allowing the sending and receiving of notifications between the UAV and the server.

- Database: This is used to record the flight histories.

- Video GStreamer: This is the module that decodes the video streams sent by the UAV.

3.2. VAGADRONE: Features

- The calculation of the optimal UAV navigation trajectory to cover a search area.

- Automatic piloting of the UAV (Raspberry Pi 3B+ with embedded Android) from the Ubuntu server.

- Detection of obstacles during the navigation of the UAV.

- Live streaming of the mission

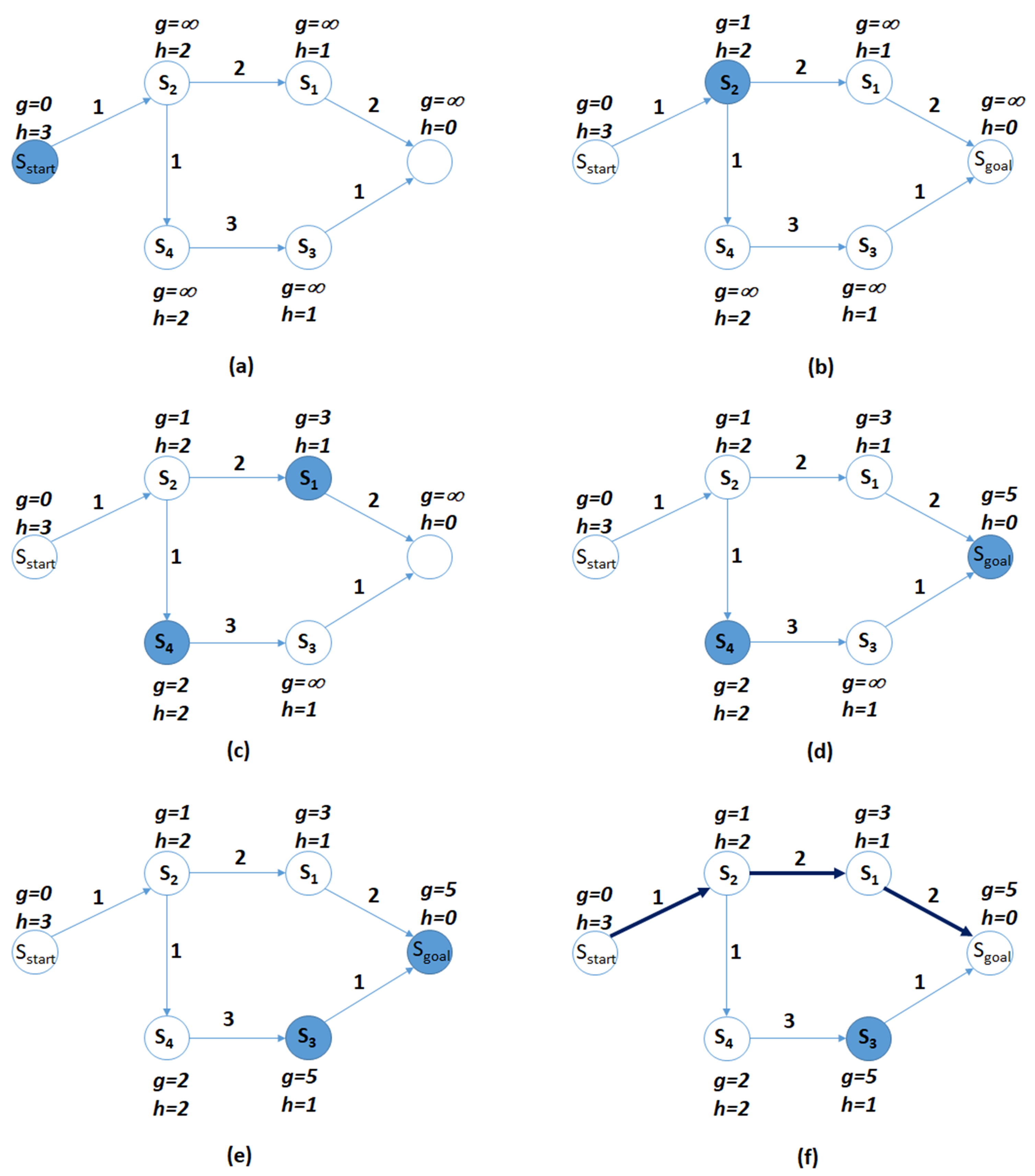

3.2.1. Optimal Trajectory Computation

3.2.2. Automatic Control of the UAV

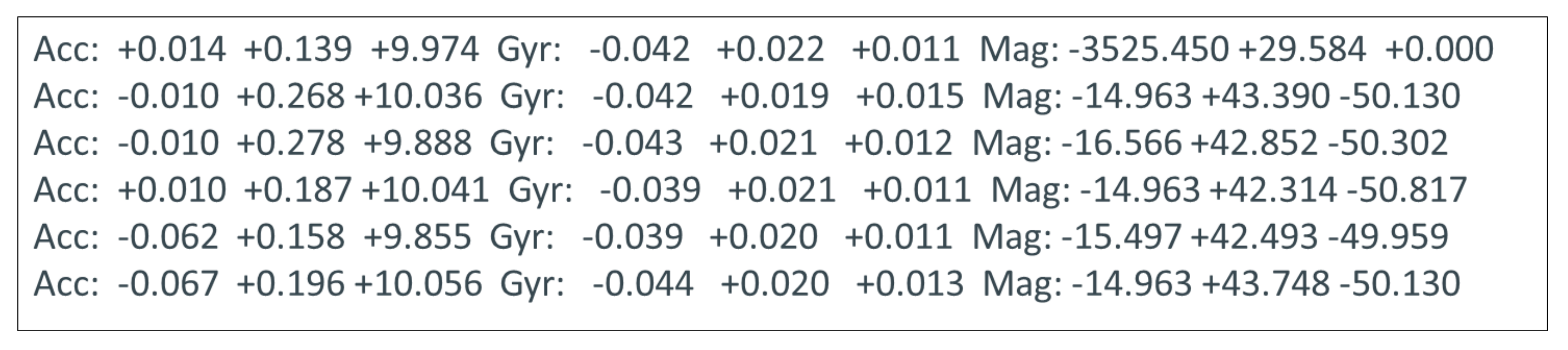

- Dual IMU: MPU-9250: This provides all the sensors needed to determine the angular position and linear acceleration of the system. It integrates a highly sensitive accelerometer, gyroscope, and magnetometer, which helped us greatly in automating the UAV.

- GNSS receiver: NEO-M8M: This is the GPS module integrated in the NAVIO+ card. This module communicates with the card via an SPI link, sends messages containing location information, and receives configuration data.

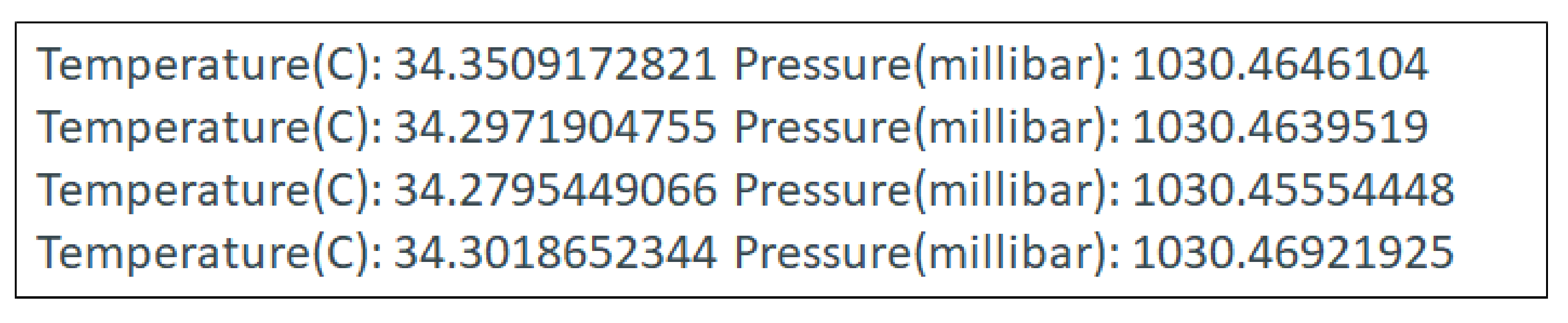

- High-resolution barometer: MS5611: This barometer resolves altitude changes of about 10 cm, giving accurate UAV altitude values.

- Extension ports: The NAVIO+ card has a variety of interfaces available for possible extensions, namely ADC, I2C, and UART interfaces.
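To illustrate how a barometer reading becomes an altitude value, the sketch below applies the standard international barometric formula. This is a generic approximation and not necessarily the computation performed by the autopilot. The function names and the sea-level reference pressure of 1013.25 hPa are assumptions.

```python
SEA_LEVEL_HPA = 1013.25  # assumed reference pressure (standard atmosphere)


def pressure_to_altitude_m(pressure_hpa, reference_hpa=SEA_LEVEL_HPA):
    """Approximate altitude in meters above the reference pressure level.

    Uses the international barometric formula, which is valid in the
    lower atmosphere where a UAV of this kind operates.
    """
    return 44330.0 * (1.0 - (pressure_hpa / reference_hpa) ** (1.0 / 5.255))


def relative_altitude_m(pressure_hpa, takeoff_pressure_hpa):
    """Altitude gained since take-off, using the take-off reading as reference."""
    return pressure_to_altitude_m(pressure_hpa, takeoff_pressure_hpa)


if __name__ == "__main__":
    takeoff = 1010.0  # hypothetical reading on the ground, in hPa
    print(round(relative_altitude_m(1008.8, takeoff), 1), "m above take-off point")
```

Using the take-off pressure as the reference removes most of the dependence on the day's weather, which is why relative altitude is usually preferred for short flights.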

3.2.3. Obstacle Detection while Navigating

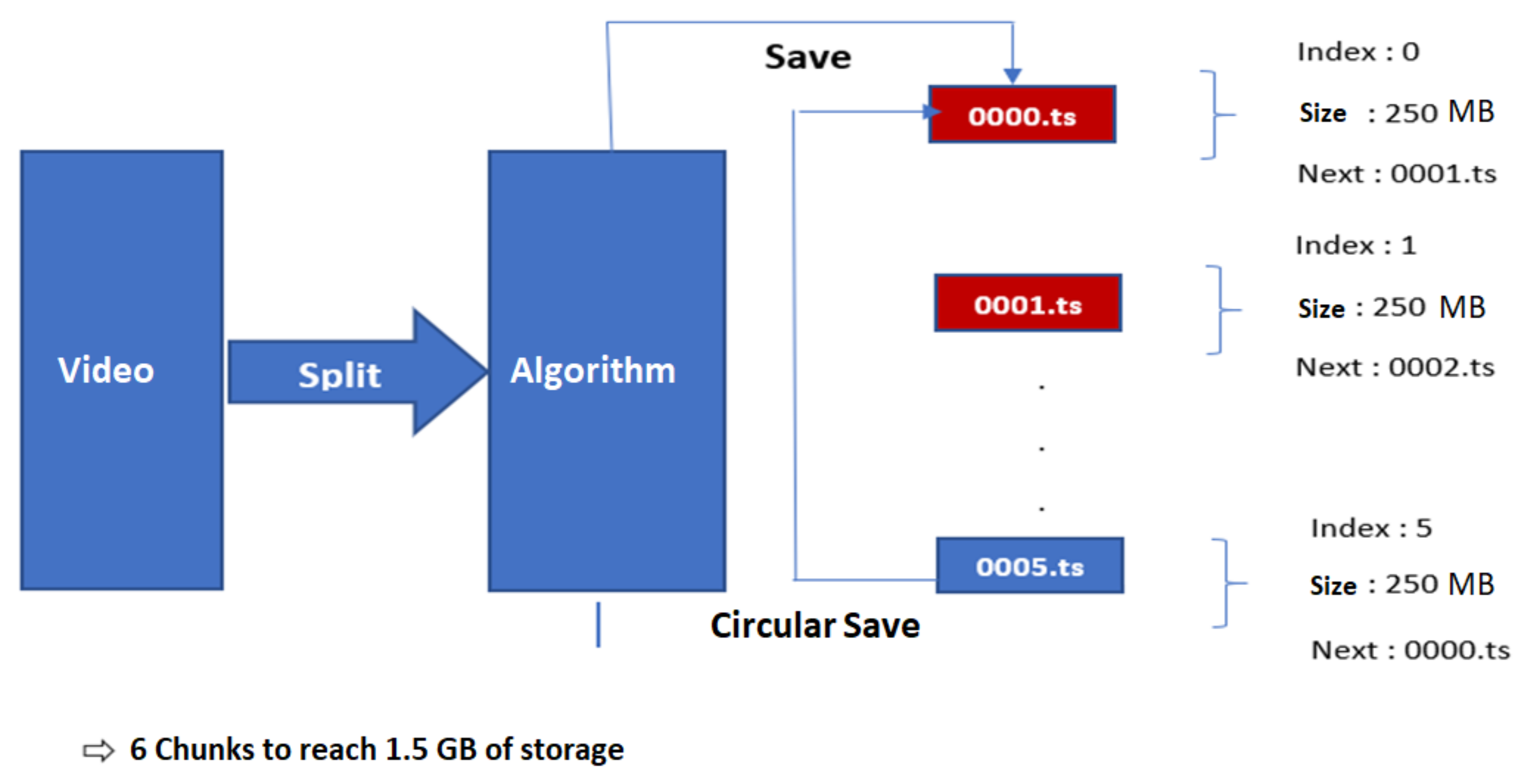

3.2.4. Live Streaming

- Latency in the operation of the UAV’s navigation module (navigation, obstacle detection, etc.).

- The problem of real-time image processing by the facial recognition algorithm.

- Macroblocks in captured videos.

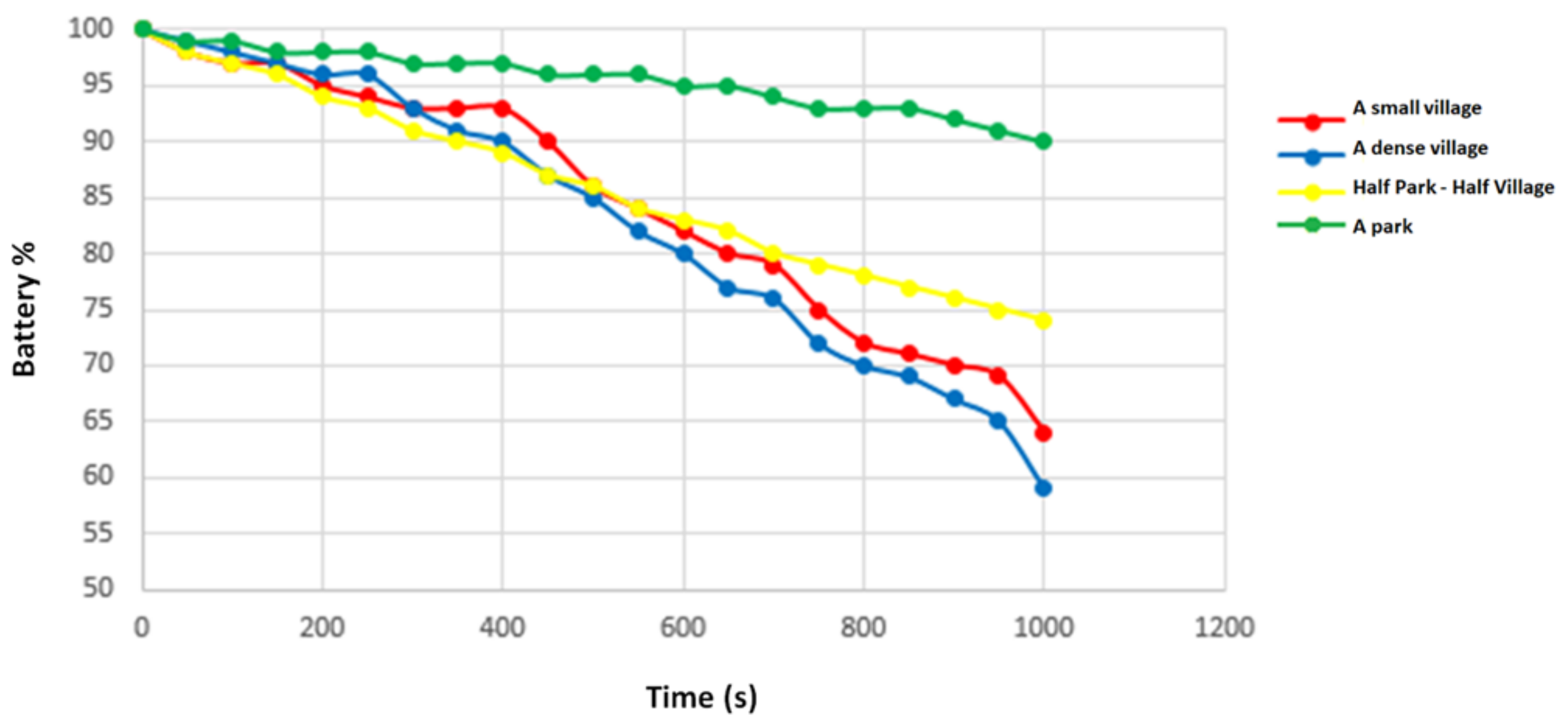

4. Experiments and Results

- A Raspberry Pi 3B+ board based on a 64-bit, quad-core ARM Cortex-A53 processor running at 1.2 GHz. We chose this board for several reasons:

- The Raspberry Pi 3B+ is small, so the board is well suited for use in a drone.

- The Raspberry Pi 3B+ is very powerful. It includes a quad-core processor and 1 GB of RAM, so one can install a full operating system such as Android and run the Android applications needed for the project.

- The NAVIO2 flight controller described in the previous section.

- A camera attached to the Raspberry Pi 3 Model B by a 15-pin ribbon cable, via the dedicated 15-pin Camera Serial Interface (CSI). It has a 5 MP sensor and supports 1080p and 720p video at up to 60 frames per second. This module is intended for live broadcasting: it should allow the UAV to monitor its environment in real time and save a history of images if necessary.

- Proximity sensors (E18-D80NK infrared sensor).

- Readytosky 920KV brushless motors, defined by several characteristics:

- KV is the number of revolutions per minute per volt. A motor with a very high KV is a high-speed, low-torque motor, while a motor with a low KV is the opposite. The chosen motor is a Readytosky motor with a KV of 920; powered by a battery that provides about 11.1 V, it would run at 11.1 × 920 = 10,212 rpm (the theoretical no-load speed).

- The voltage that the motor can withstand is generally given as a range expressed as a cell count S, with 1S equivalent to 3.7 V. The motor we chose must be operated with 3S batteries.

- Another element to take into account is the current, in amperes, that the motor draws at full load, because this value is used to choose the electronic speed controller (ESC).

- The ESC. This controller manages the motor rotation speed.

- A LiPo battery.
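The motor arithmetic in the list above (cell count to pack voltage, then KV to rotation speed) can be checked with a few lines of code. This is only a sketch: the function names are hypothetical, and the result is the theoretical no-load speed, so the speed under propeller load will be lower.

```python
NOMINAL_CELL_VOLTAGE = 3.7  # volts per LiPo cell (1S), as stated in the list above


def pack_voltage(cells):
    """Nominal voltage of a LiPo pack with the given number of cells in series."""
    return cells * NOMINAL_CELL_VOLTAGE


def no_load_rpm(kv, voltage):
    """Theoretical no-load speed: KV (rpm per volt) times the supply voltage."""
    return kv * voltage


if __name__ == "__main__":
    voltage = pack_voltage(3)          # 3S battery
    rpm = no_load_rpm(920, voltage)    # 920KV motor
    print(f"{voltage:.1f} V -> {rpm:.0f} rpm")
```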

- We launched a recording of a video with the CSI interface camera under the Raspberry Pi 3B+.

- Under the terminal of the Raspberry Pi 3B+, we ran the command below to launch the program to generate the circular buffer.

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Value | State |
|---|---|
| −1 | Obstacle |
| 0 | Start |
| 1 | Goal |
| 2 | Crossable cell |

| Epsilon | 0 | 1 | 1.5 | 5 |
|---|---|---|---|---|
| Number of iterations | 145 | 72 | 36 | 28 |
| Length of the path | 23.8995 | 23.8995 | 25.3137 | 29.7990 |
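The trade-off in the table above (fewer iterations but a longer path as epsilon grows) is the typical behavior of a heuristic grid search whose heuristic is inflated by a factor epsilon. The search algorithm is not named in this section, so the sketch below assumes a weighted A* on an 8-connected grid, using the cell encoding from the first table (−1 obstacle, 0 start, 1 goal, 2 crossable cell). The function names, the Euclidean heuristic, and the example grid are illustrative assumptions, and the code is not expected to reproduce the figures in the table.

```python
import heapq
import math

OBSTACLE, START, GOAL, FREE = -1, 0, 1, 2  # cell encoding from the table above


def find(grid, value):
    """Return the (row, col) of the first cell holding the given value."""
    for r, row in enumerate(grid):
        for c, cell in enumerate(row):
            if cell == value:
                return (r, c)
    raise ValueError(f"no cell with value {value}")


def weighted_a_star(grid, epsilon=1.0):
    """Return (path_length, expansions), or None if the goal is unreachable.

    Nodes are ordered by f = g + epsilon * h, with h the Euclidean distance
    to the goal. epsilon = 0 behaves like Dijkstra's algorithm and
    epsilon = 1 is plain A*.
    """
    start, goal = find(grid, START), find(grid, GOAL)
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        return math.hypot(cell[0] - goal[0], cell[1] - goal[1])

    best = {start: 0.0}
    frontier = [(epsilon * h(start), 0.0, start)]
    closed = set()
    expansions = 0
    while frontier:
        _, g, cell = heapq.heappop(frontier)
        if cell in closed:
            continue  # stale queue entry for an already expanded cell
        closed.add(cell)
        expansions += 1
        if cell == goal:
            return g, expansions
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nr, nc = cell[0] + dr, cell[1] + dc
                if (dr == 0 and dc == 0) or not (0 <= nr < rows and 0 <= nc < cols):
                    continue
                if grid[nr][nc] == OBSTACLE:
                    continue
                new_g = g + math.hypot(dr, dc)  # 1 for straight, sqrt(2) for diagonal
                if new_g < best.get((nr, nc), math.inf):
                    best[(nr, nc)] = new_g
                    heapq.heappush(frontier, (new_g + epsilon * h((nr, nc)), new_g, (nr, nc)))
    return None


if __name__ == "__main__":
    grid = [
        [0, 2, 2, 2],
        [2, -1, -1, 2],
        [2, 2, -1, 2],
        [2, 2, 2, 1],
    ]
    for eps in (0, 1, 1.5, 5):
        print(eps, weighted_a_star(grid, eps))
```

With epsilon at or below 1 the returned path is optimal on this kind of grid; larger values make the search greedier, which tends to reduce the number of expanded cells at the cost of a possibly longer path.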

| Chunk (.ts) | Duration (s) | Size (MB) | Comment |
|---|---|---|---|
| First cycle (recorded content of 1500 MB) | | | |
| 0000.ts | 25 | 250 | |
| 0001.ts | 25 | 250 | |
| 0002.ts | 25 | 250 | |
| 0003.ts | 25 | 250 | |
| 0004.ts | 25 | 250 | |
| 0005.ts | 25 | 250 | |
| Second cycle (recorded content of 1500 MB) | | | |
| 0000.ts | 25 | 250 | Removed old content |
| 0001.ts | 25 | 250 | Removed old content |
| 0002.ts | 25 | 250 | Removed old content |
| 0003.ts | 25 | 250 | Removed old content |
| 0004.ts | 25 | 250 | Removed old content |
| 0005.ts | 25 | 250 | Removed old content |
| Third cycle (recorded content of 1500 MB) | | | |
| 0000.ts | 25 | 250 | Removed old content |
| 0001.ts | 25 | 250 | Removed old content |
| 0002.ts | 25 | 250 | Removed old content |
| 0003.ts | 25 | 250 | Removed old content |
| 0004.ts | 25 | 250 | Removed old content |
| 0005.ts | 25 | 250 | Removed old content |
| Fourth cycle (recorded content of 500 MB) | | | |
| 0000.ts | 25 | 250 | Removed old content |
| 0001.ts | 25 | 250 | Removed old content |
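The table above describes a circular buffer of six 250 MB chunk files that are overwritten in order once the buffer is full. The sketch below simulates only that bookkeeping, under the assumption that the slot index is the running chunk count modulo six. The `record` function and its parameters are hypothetical, and no video is actually written.

```python
SLOTS = 6        # chunk files 0000.ts to 0005.ts, as in the table above
CHUNK_MB = 250   # size of each chunk in the table above


def record(total_mb, slots=SLOTS, chunk_mb=CHUNK_MB):
    """Simulate recording total_mb of video into a ring of chunk files.

    Returns a list of (cycle, filename, overwrote_old_content) tuples in
    write order. Any partial trailing chunk is ignored for simplicity.
    """
    events = []
    for index in range(total_mb // chunk_mb):
        cycle, slot = divmod(index, slots)
        events.append((cycle + 1, f"{slot:04d}.ts", cycle > 0))
    return events


if __name__ == "__main__":
    # Three full cycles of 1500 MB plus 500 MB, matching the cycle headers.
    for cycle, name, overwrote in record(3 * 1500 + 500):
        note = "Removed old content" if overwrote else ""
        print(cycle, name, note)
```

Whatever the total recording length, the space used on the SD card never exceeds `SLOTS * CHUNK_MB`, which is 1500 MB here.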

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Benhadhria, S.; Mansouri, M.; Benkhlifa, A.; Gharbi, I.; Jlili, N. VAGADRONE: Intelligent and Fully Automatic Drone Based on Raspberry Pi and Android. Appl. Sci. 2021, 11, 3153. https://doi.org/10.3390/app11073153
