Psychological Stress Detection According to ECG Using a Deep Learning Model with Attention Mechanism

Abstract

1. Introduction

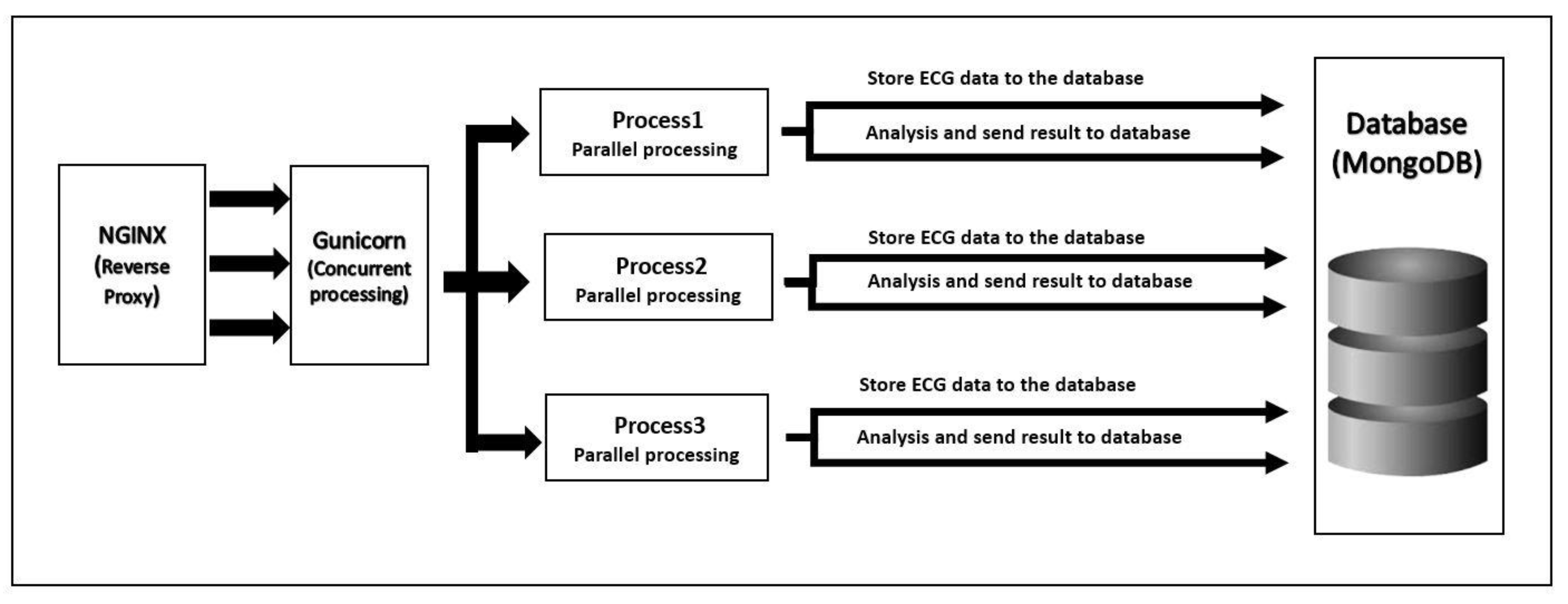

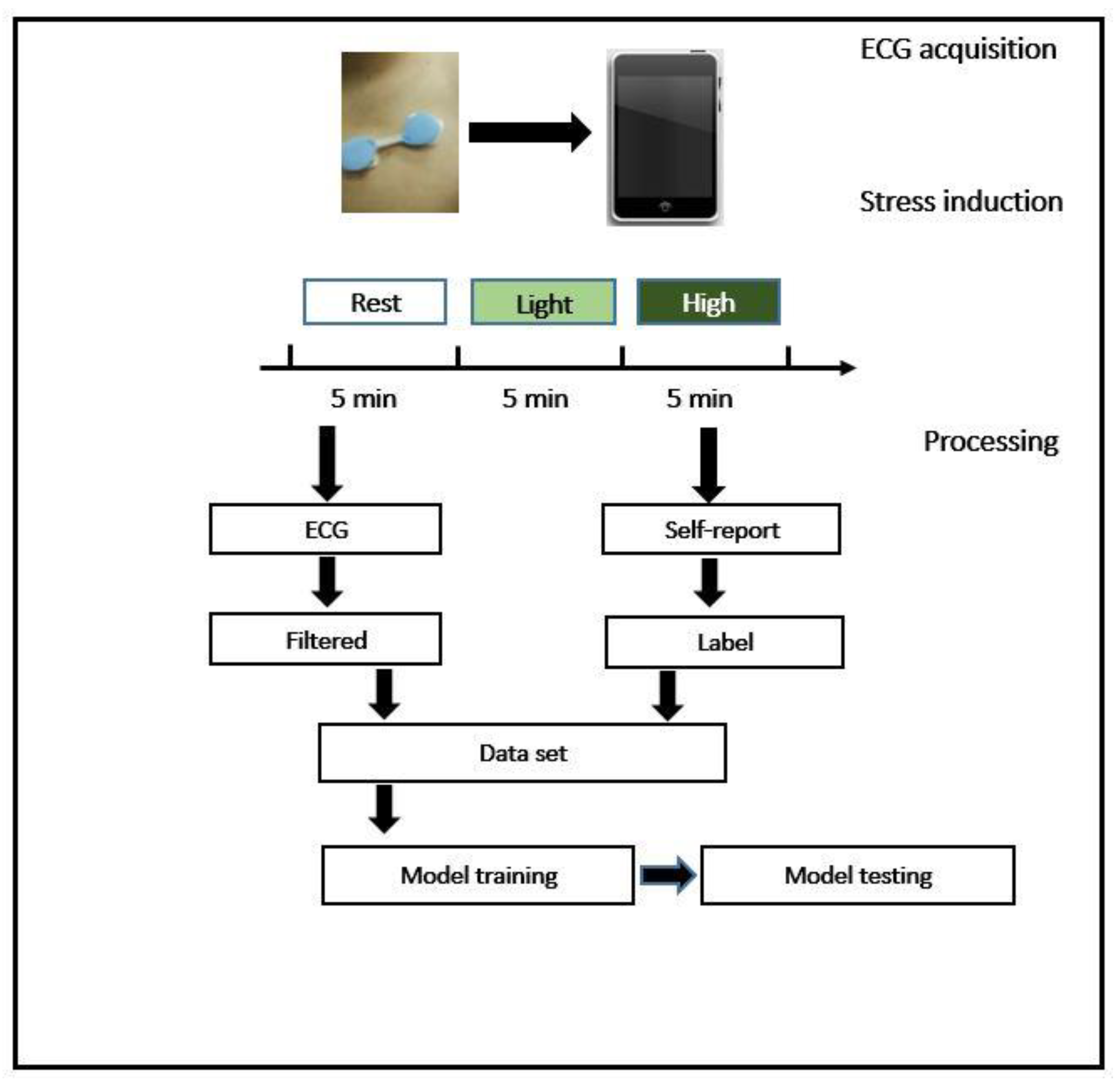

2. Materials and Methods

2.1. Experiments and Data Acquisition

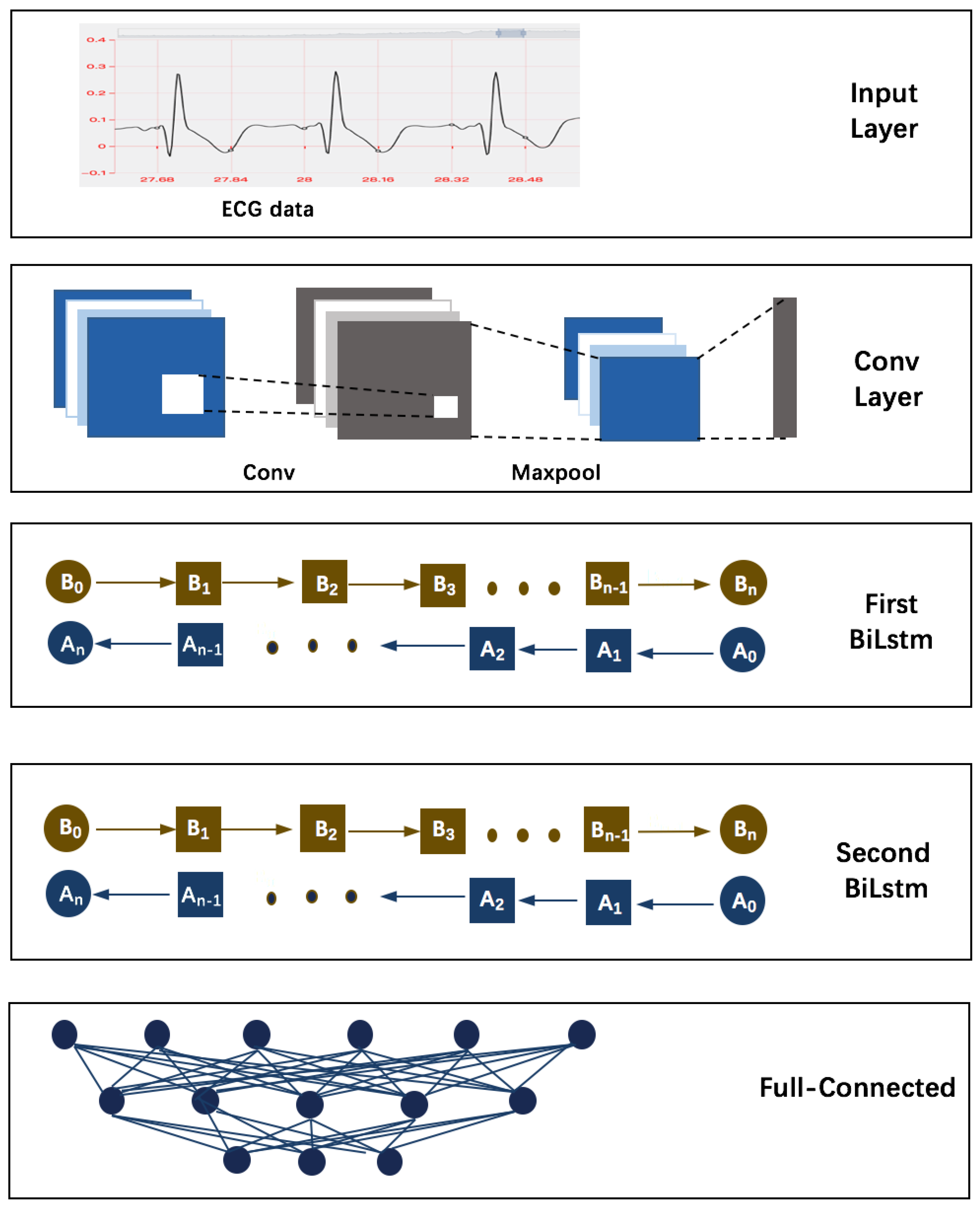

2.2. CNN-BiLSTM

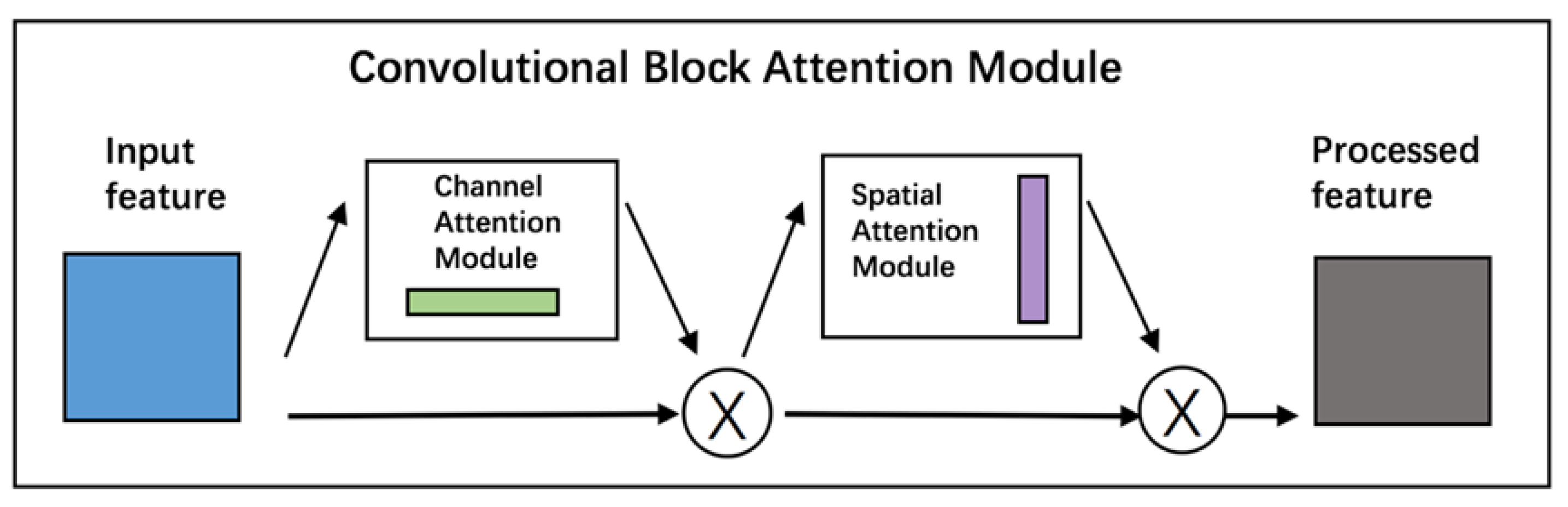

2.3. The Attention Mechanism with CNN-BiLSTM

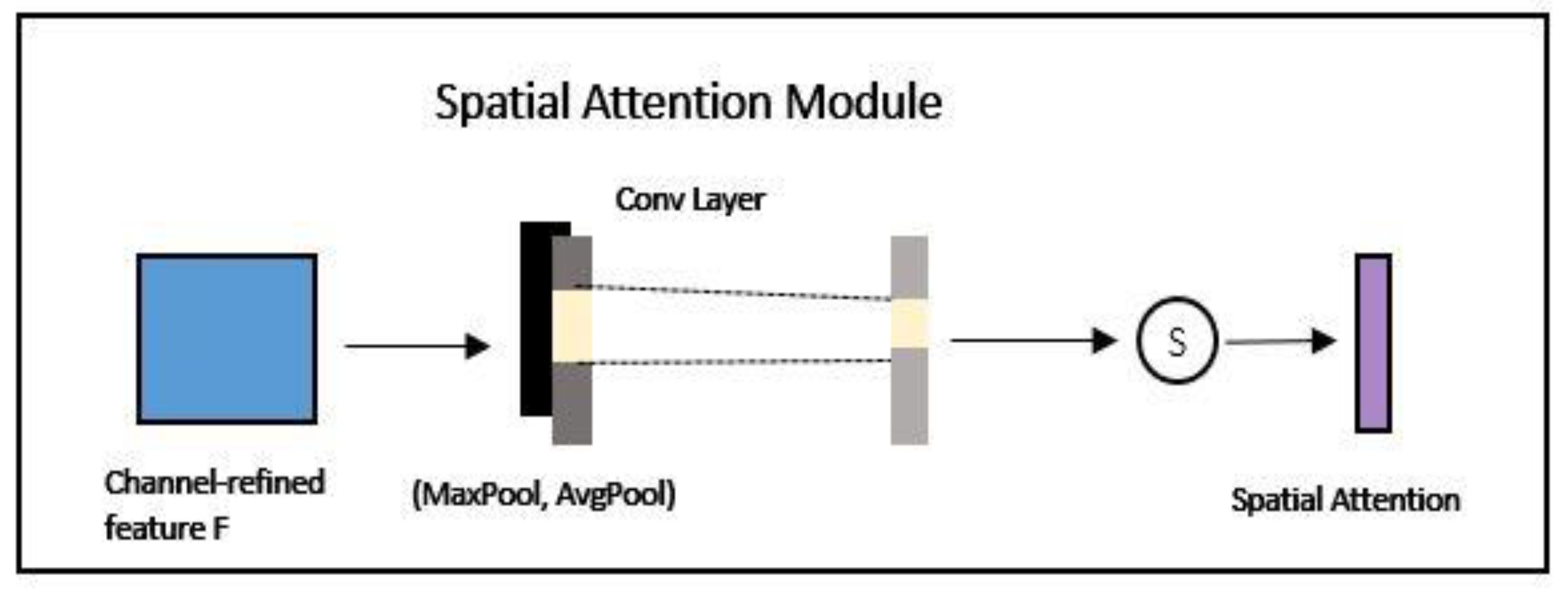

2.3.1. The Convolutional Block Attention Module (CBAM)

2.3.2. Non-Local Neural Networks

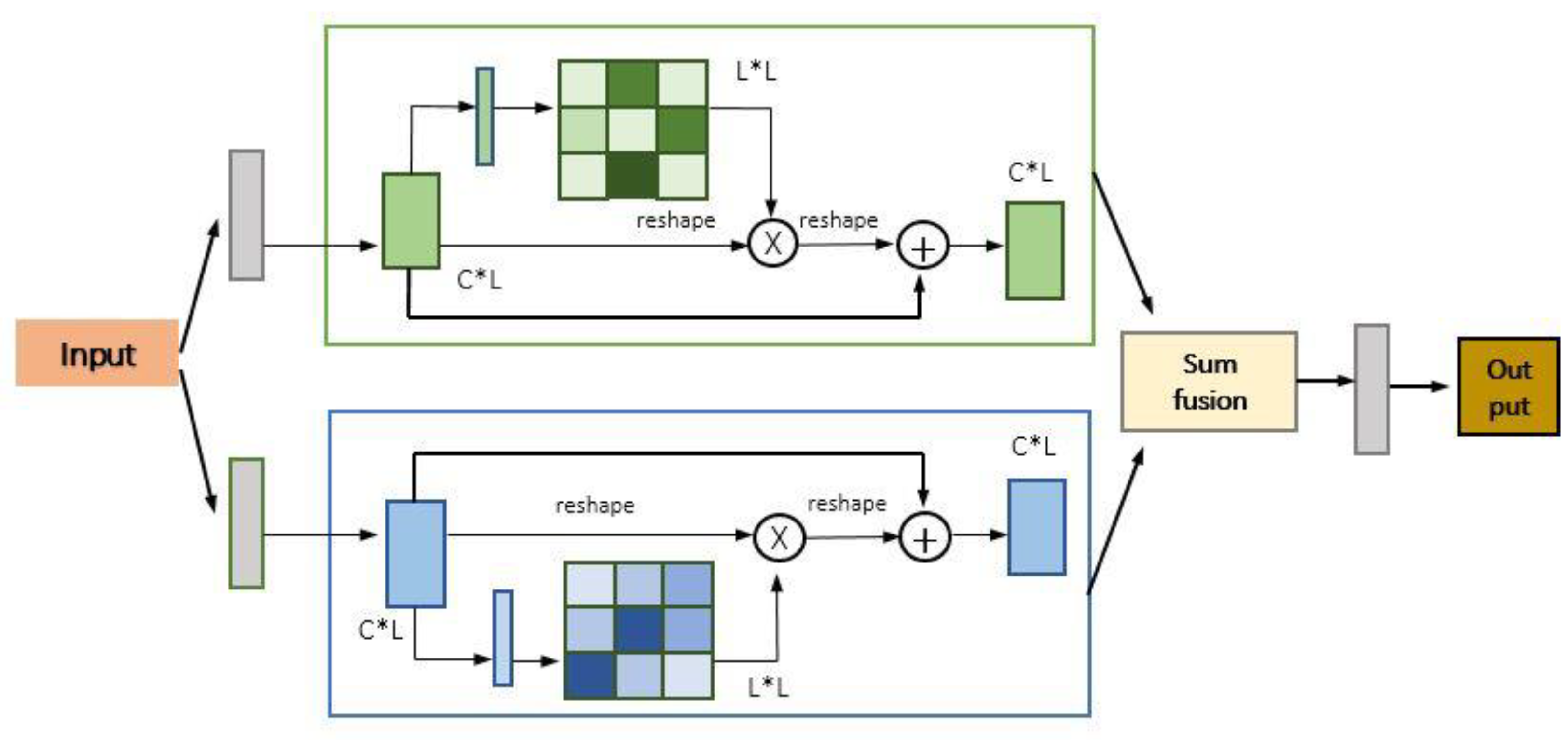

2.3.3. The Dual Attention Network (DA-NET)

2.3.4. Attention-Based Bidirectional Long Short-Term Memory Networks

2.4. The Structure and Parameters of the Models with Attention Mechanism

3. Results

4. Conclusions and Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Layer | Parameters |
|---|---|
| CNN | |
| Convolutional layer | Filters = 32, kernel size = 200, stride = 8 |
| Rectified Linear Unit | |
| Batch normalization + dropout (0.70) | |
| Max pooling | Pool size = 8, stride = 8 |
| First BiLSTM | |
| LSTM layer + dropout (0.70) | Step size = 25, LSTM size = 40 |
| Method of combining the two LSTM directions | Addition |
| Second BiLSTM | |
| LSTM layer + dropout (0.70) | Step size = 25, LSTM size = 40 |
| Method of combining the two LSTM directions | Addition |
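
The kernel, stride, and pool sizes listed above determine how many time steps reach the first BiLSTM. The sketch below works through that arithmetic under two assumptions that are not stated in this section: the convolution and pooling use no padding, and the input segment is 1800 samples long. `N_SAMPLES` is a hypothetical value, picked only because it yields 25 steps, which matches the step size listed for the LSTM layers.

```python
def output_length(n_in, window, stride, padding=0):
    """Output length of a 1-D convolution or pooling layer (no dilation)."""
    if n_in + 2 * padding < window:
        raise ValueError("input is shorter than the window")
    return (n_in + 2 * padding - window) // stride + 1


# Values taken from the table.
KERNEL_SIZE, CONV_STRIDE = 200, 8
POOL_SIZE, POOL_STRIDE = 8, 8

# Hypothetical input length (assumption, not from the source).
N_SAMPLES = 1800

after_conv = output_length(N_SAMPLES, KERNEL_SIZE, CONV_STRIDE)
after_pool = output_length(after_conv, POOL_SIZE, POOL_STRIDE)

print(f"after convolution: {after_conv} steps")
print(f"after max pooling: {after_pool} steps")
```

With a different padding mode or segment length the step count changes, so the numbers here are a consistency check rather than a statement of the actual input size.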

| Layer | Parameters |
|---|---|
| CNN | |
| Convolutional layer | Filters = 32, kernel size = 200, stride = 8 |
| Rectified Linear Unit | |
| Batch normalization + dropout (0.70) | |
| Max pooling | Pool size = 8, stride = 8 |
| CBAM | K = 0.3, kernel size = 200 |
| First BiLSTM | |
| Second BiLSTM | |

| Layer | Parameters |
|---|---|
| CNN | |
| Convolutional layer | Filters = 32, kernel size = 200, stride = 8 |
| Rectified Linear Unit | |
| Batch normalization + dropout (0.70) | |
| Max pooling | Pool size = 8, stride = 8 |
| Non-local neural network | F = 16 (1/2 × number of filters) |
| First BiLSTM | |
| Second BiLSTM | |

| Layer | Parameters |
|---|---|
| CNN | |
| Convolutional layer | Filters = 32, kernel size = 200, stride = 8 |
| Rectified Linear Unit | |
| Batch normalization + dropout (0.70) | |
| Max pooling | Pool size = 8, stride = 8 |
| Dual Attention Network | Number of filters in the conv layers that generate B and C in the position module (F) = 8 |
| First BiLSTM | |
| Second BiLSTM | |

| Layer | Parameters |
|---|---|
| CNN | |
| Convolutional layer | Filters = 32, kernel size = 200, stride = 8 |
| Rectified Linear Unit | |
| Batch normalization + dropout (0.70) | |
| Max pooling | Pool size = 8, stride = 8 |
| First Attention-Based BiLSTM | |
| Second Attention-Based BiLSTM | |
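
For orientation, the sketch below shows one widely used form of attention over BiLSTM outputs, the formulation introduced for relation classification under the same name as Section 2.3.4: the hidden states are scored with a weight vector, the scores are normalized with a softmax, and the states are averaged with those weights. Whether the models here apply attention in exactly this way is not stated in this section, so treat it as an illustration only. The weight vector `w` and all numeric values are hypothetical; in a trained model `w` would be learned. The two directions are combined by addition, as in the first parameter table.

```python
import math


def add_directions(forward, backward):
    """Element-wise sum of forward and backward hidden states per time step."""
    return [[f + b for f, b in zip(fs, bs)] for fs, bs in zip(forward, backward)]


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(hidden, w):
    """Weight T hidden states (each of size d) and pool them into one vector.

    score_t = w . tanh(h_t); alpha = softmax(score); r = sum_t alpha_t * h_t;
    the pooled output is tanh(r).
    """
    scores = [sum(wi * math.tanh(hi) for wi, hi in zip(w, h)) for h in hidden]
    alpha = softmax(scores)
    size = len(hidden[0])
    r = [sum(a * h[j] for a, h in zip(alpha, hidden)) for j in range(size)]
    return alpha, [math.tanh(x) for x in r]


# Toy example: 3 time steps, hidden size 2 (all values are made up).
forward = [[0.1, 0.2], [0.3, -0.1], [0.0, 0.5]]
backward = [[0.2, 0.0], [-0.1, 0.4], [0.3, 0.1]]
w = [0.5, -0.25]  # hypothetical attention weights

hidden = add_directions(forward, backward)
alpha, pooled = attention_pool(hidden, w)
print("attention weights:", alpha)
print("pooled vector:", pooled)
```

The attention weights sum to one, so the pooled vector is a weighted average of the time steps passed through `tanh`.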

| Layer | Parameters |
|---|---|
| CNN | |
| Convolutional layer | Filters = 32, kernel size = 200, stride = 8 |
| Rectified Linear Unit | |
| Batch normalization + dropout (0.70) | |
| Max pooling | Pool size = 8, stride = 8 |
| Non-local neural network | F = 100 (1/2 × kernel size) |
| First Attention-Based BiLSTM | |
| Second Attention-Based BiLSTM | |

| Layer | Parameters |
|---|---|
| CNN | |
| Convolutional layer | Filters = 32, kernel size = 200, stride = 8 |
| Rectified Linear Unit | |
| Batch normalization + dropout (0.70) | |
| Max pooling | Pool size = 8, stride = 8 |
| Non-local neural network | F = 100 (1/2 × kernel size) |
| First Attention-Based BiLSTM | |
| Second Attention-Based BiLSTM | |

| Model | Accuracy | Specificity | Accuracy Compared with CNN-BiLSTM |
|---|---|---|---|
| CNN-BiLSTM | 0.865 | 0.928 | |
| CNN-BiLSTM with CBAM | 0.862 | 0.926 | |
| CNN-BiLSTM with DA-Net | 0.861 | 0.926 | ↓ |
| CNN-BiLSTM with non-local neural network | 0.857 | 0.923 | ↓ |
| CNN-attention-based BiLSTM | 0.868 | 0.930 | ↑ |
| CNN-attention-based BiLSTM with CBAM | 0.862 | 0.926 | ↓ |
| CNN-attention-based BiLSTM with non-local neural network | 0.860 | 0.924 | ↓ |
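
As a reading aid for the table above, the sketch below gives the standard binary definitions of accuracy and specificity and recomputes the comparison column from the reported accuracies. The accuracy values are copied from the table. The function names and the confusion-matrix counts in the usage lines are illustrative assumptions, and how the paper aggregates these metrics across classes is not stated in this section.

```python
def accuracy(tp, tn, fp, fn):
    """Fraction of all samples that were classified correctly."""
    return (tp + tn) / (tp + tn + fp + fn)


def specificity(tn, fp):
    """Fraction of true negatives among all actual negatives."""
    return tn / (tn + fp)


# Accuracies as reported in the results table.
REPORTED_ACCURACY = {
    "CNN-BiLSTM": 0.865,
    "CNN-BiLSTM with CBAM": 0.862,
    "CNN-BiLSTM with DA-Net": 0.861,
    "CNN-BiLSTM with non-local neural network": 0.857,
    "CNN-attention-based BiLSTM": 0.868,
    "CNN-attention-based BiLSTM with CBAM": 0.862,
    "CNN-attention-based BiLSTM with non-local neural network": 0.860,
}
BASELINE = "CNN-BiLSTM"


def trend(value, reference):
    """Arrow showing whether value is above or below the reference."""
    if value > reference:
        return "↑"
    if value < reference:
        return "↓"
    return "="


base = REPORTED_ACCURACY[BASELINE]
trends = {
    name: trend(acc, base)
    for name, acc in REPORTED_ACCURACY.items()
    if name != BASELINE
}

for name, arrow in trends.items():
    diff = round(REPORTED_ACCURACY[name] - base, 3)
    print(f"{name}: {arrow} ({diff:+.3f})")

# Hypothetical confusion-matrix counts, for illustration only.
print(accuracy(tp=40, tn=45, fp=5, fn=10), specificity(tn=45, fp=5))
```

By these numbers, only the CNN-attention-based BiLSTM exceeds the CNN-BiLSTM baseline. The CNN-BiLSTM with CBAM row (0.862) also falls below the baseline, although its comparison cell is empty in the table.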


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhang, P.; Li, F.; Du, L.; Zhao, R.; Chen, X.; Yang, T.; Fang, Z. Psychological Stress Detection According to ECG Using a Deep Learning Model with Attention Mechanism. Appl. Sci. 2021, 11, 2848. https://doi.org/10.3390/app11062848
