1. Introduction

Nowadays, many people regularly perform gym workouts to prevent enervation and invigorate the activities of daily living. Gym-workout protocols have been encouraged both for promoting individual fitness and for expediting a patient’s rehabilitation process [1,2,3]. A prerequisite for maximizing the efficacy of exercise and preventing unexpected injury is that a person must work out with a correct posture and with stimulation of the target muscle while performing exercises such as the arm curl, deadlift, kettlebell squat, and so on [1,4]. Habitually failing to fulfill either prerequisite may cause serious injuries to the muscle-tendon or musculoskeletal system [5,6]. Accordingly, interest in exercise monitoring systems has grown as the number of both gym-goers and home trainees increases.

The computer vision-based approach is one main pillar for building exercise monitoring systems. In this approach, the exercise monitoring system is typically equipped with RGB-d (or RGB) cameras such as the Microsoft Kinect, Intel RealSense, etc. [7,8]. For each captured video frame or image, people’s joint positions are detected and estimated with the support of deep learning-based algorithms, and their posture is then displayed as a skeleton [9,10]. Torres et al. proposed an upper limb strength training system in which a user’s posture was detected by Kinect v2 [11]. Nagarkoti et al. presented a mobile phone video recording-based approach to real-time indoor workout analysis [12], and a similar approach was reported by Liu and Chu [13]. The aforementioned studies in [1,2,3] also take computer vision-based approaches; specifically, they address exercise posture correction for home trainees [1], posture modeling [2], and posture correction during rehabilitation training [3].

The surface electromyography (sEMG)-based approach is the other main pillar, which enables monitoring of body posture and movements. Due to the inter-subject variability of the signal, the sEMG-based approach is usually supported by advanced neural networks; in particular, since sEMG is multichannel time series data, the recurrent neural network (RNN), long short-term memory (LSTM), and deep belief network (DBN) are most often used for classification [14,15,16]. Quivira et al. proposed an approach to classify dynamic hand motions by translating sEMG signals via an RNN [17]. Orjuela-Cañón et al. employed a deep neural network (DNN) architecture to solve the classification problem of wrist position based on sEMG data [18]. The more dexterous the motion is, the more useful the information sEMG carries; accordingly, similar approaches for the classification of finger, hand, and arm movements were introduced in [19,20,21]. In addition, the monitored muscle activation as well as the generated force can directly serve as an indicator of whether the target muscle is stimulated during workouts. The sEMG-based force monitoring approaches can be categorized as follows: neural network (NN)-based approaches [22,23,24,25,26], dynamic-model and software-based approaches [27,28,29], and optimization-based approaches [30,31].

The approaches introduced above have solely used either computer vision or sEMG. In contrast, it would be beneficial to endow exercise monitoring systems with multimodality in the sense of information gathering. Kim et al. proposed a posture monitoring system in which both sEMG and an inertial measurement unit (IMU) were employed to estimate human motion [32], and Xu et al. introduced a similar approach [33]. Wang used an IMU together with an Apple Watch to reconstruct arm posture for upper-body exercises [34]. Several studies utilized both an sEMG sensor and computer vision; for instance, an equinus foot treatment system by Araújo et al. [35], a prosthesis control interface by Blana et al. [36], and rehabilitation video games by Rincon et al. [37] and Esfahlani et al. [38]. As mentioned above, “an individual is performing a proper and exact workout” means that his or her joints are following a correct posture and the target muscles are being primarily stimulated. Even though such studies exist, an exercise monitoring approach from the perspectives of both muscle physiology and joint kinematics has not yet been fully considered.

Studies have striven to demystify the relationship between sEMG activation and joint kinematics. Michieletto et al. and Triwiyanto et al. each proposed Gaussian–Markov process-based elbow joint angle estimation techniques [39,40]. Starting from Hill’s muscle model, Han et al. introduced a state space model for the elbow joint and Zeng et al. presented their work for the knee joint [41,42]. Pradhan et al. used VICON-based 3D motion capture and sEMG data and reported that the relationship between the two was still fuzzy [43]. As seen in the case of sEMG-based force monitoring above, an NN-based approach might be a reasonable solution to estimate elbow joint kinematics from sEMG signals [44,45,46,47]. In short, due to the characteristics of the sEMG signal (including individual differences), the relationship between sEMG activation and joint kinematics is not straightforward, and existing findings are still debatable [48,49].

To address the issues above, we propose a systemic approach to upper arm gym-workout classification via a convolutional neural network (CNN). The proposed approach can be regarded as a subsolution to the larger class of problems in the design of exercise monitoring systems, with upper arm gym-workouts as a specific example. The main idea of our approach is to merge joint kinematic data in-between sEMG channels and visualize the result as one image, which serves as an input to the CNN. The following research questions represent the motivation for this study:

“If muscle physiologic data is visualized together with kinematic data as a spatio-temporal image, can a relationship between these two data be imputed by the CNN since it consists of an automatic feature extractor followed by a classifier?”

“If the input image is manipulated to show more distinctive spatio-temporal features from the point of view of human eyes, then how does this manipulation affect CNN performance in terms of training loss and test accuracy?”

The above motivation leads us to the following validation procedure. First, the measured sEMG and joint angle data set is augmented using the amplitude adjusted Fourier transform to generate surrogate data while consistency is sustained. Next, we visualize the measured sEMG and joint angle data as one heatmap by setting the horizontal and vertical axes to be the time and the channels of the measured data, respectively. Then, we utilize the heatmap as input data to the CNN and investigate whether the classification accuracy varies according to the merging location of the joint angle, e.g., in-between sEMG channels (as a separate bar) or at the bottom of all sEMG channels. Furthermore, the measured sEMG and joint angle are also visualized with the patches of a Hadamard product matrix made of the joint angle and each sEMG channel, in which, at first glance, spatio-temporal features are believed to be challenging to recognize. Finally, we perform statistical analysis to substantiate the main effects of the human-eye friendly image manipulation and the level of data augmentation as well as the interaction effect between the two.

To the best of our knowledge, the idea of merging muscle physiology and joint kinematics as well as the human-eye friendly image manipulation has not yet been fully studied in the field of CNN-based classification. Therefore, the contributions of this study can be summarized as follows:

We propose a novel approach for upper arm gym-workout classification by imputing a relationship between muscle physiology and joint kinematics via CNN feature extraction.

We introduce a data augmentation technique for time series, present various visualization methods according to human-eye friendly image manipulation, and statistically analyze the CNN classification performance based on experimental evaluations.

The outcomes from this study can be utilized to advance the state of the art in the problem of developing exercise monitoring systems by providing the level of data augmentation and the visualization method which guarantees the best CNN classification performance.

The rest of the paper is organized as follows. The proposed approach for gym-workout classification is introduced in Section 2; specifically, our approach is explained through the following subsections: system architecture, experimental setup and procedure, data augmentation technique, and post data-processing, culminating in the main idea of imputing a biopotential-kinematic relationship and performing classification via CNN. In Section 3, the classification result is reported in terms of training loss and test accuracy, followed by statistical analysis. In Section 4, significant outcomes and findings are discussed. Lastly, Section 5 concludes the paper.

2. Methods

2.1. System Architecture, Experimental Setup and Protocol

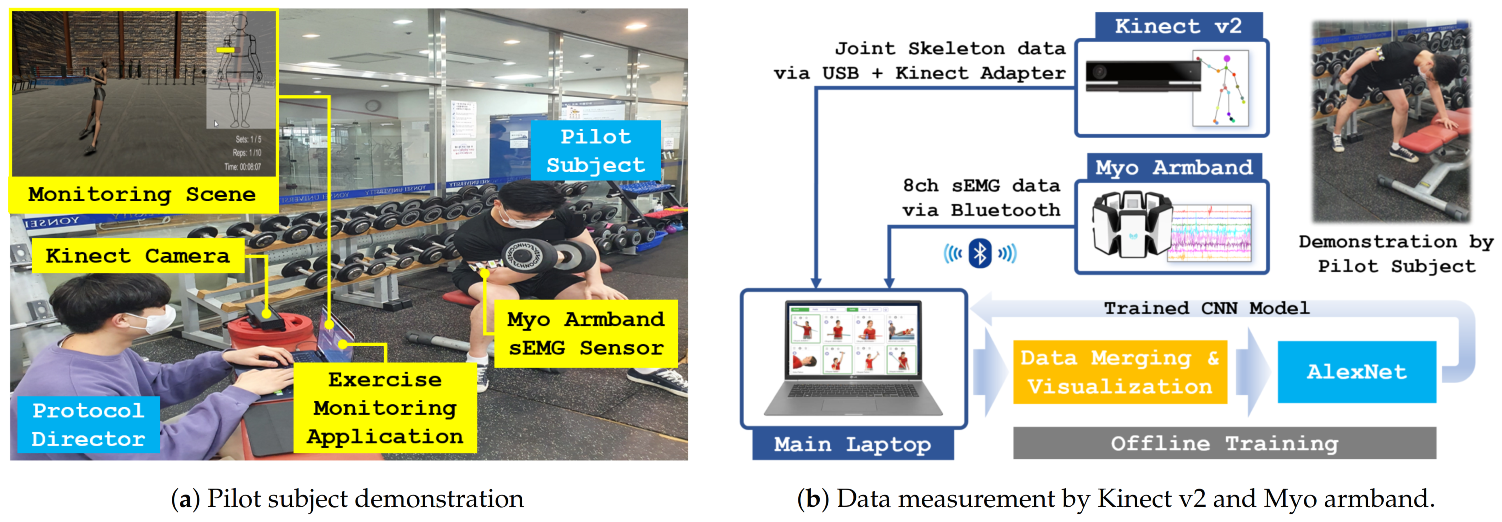

Figure 1 depicts the overall system architecture, data measurement and flows, data processing, and CNN training for the proposed upper arm gym-workout classification.

Our exercise monitoring system consisted of a laptop with a custom-developed Unity3D-based software application, an armband-type 8-channel sEMG sensor (Myo armband, Thalmic Labs, Brooklyn, NY, USA), and an RGB-d camera (Kinect v2, Microsoft, Redmond, WA, USA). Throughout the paper, the sEMG sensor and the RGB-d camera will be referred to by their more common names: Myo armband and Kinect v2.

A professional trainer was recruited as a pilot subject (PS). The Myo armband was mounted on the PS’s right upper arm under the supervision of a protocol director (PD). Figure 2 shows the two exercises demonstrated by the PS and the 8-channel deployment on his right upper arm. After being equipped with the Myo armband, the PS was guided by the PD to a position in front of the Kinect v2 and given instructions about the experimental procedure and safety. The PS was instructed to perform a dumbbell curl (target muscle: biceps brachii) at the first visit and a dumbbell kickback (target muscle: triceps brachii) at the second visit. The experimental protocol was as follows:

Following the PD’s hand signal, given every 1.6 s, the PS performed a dumbbell curl one time (one trial).

After performing 10 trials, the PS took a 15 min break (the end of one session) to minimize the effect of muscle fatigue.

This was repeated for six sessions.

While the PS was performing the exercise, sEMG data and joint skeleton data were sent via Bluetooth and USB+Kinect adapter connections, respectively. The sampling period of the Myo armband was set to 20 ms; hence, for each channel, 80 time series data samples were recorded for one trial (corresponding to 1.6 s). An elbow joint angle was calculated by VITRUVIUS using skeleton data from Kinect v2 [10]. The same sampling period was applied to Kinect v2; therefore, 80 data samples were stored for the elbow joint angle. Two days after the first visit, the dumbbell kickback was measured under the same experimental protocol.
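As an illustration of how an elbow joint angle can be derived from skeleton data, the following minimal sketch computes the angle between the upper arm and forearm vectors from three 3D joint positions. It is not the VITRUVIUS implementation; the function name `elbow_angle_deg` and the example coordinates are hypothetical.

```python
import numpy as np

def elbow_angle_deg(shoulder, elbow, wrist):
    """Angle (degrees) at the elbow between the upper arm and the forearm."""
    upper_arm = np.asarray(shoulder, dtype=float) - np.asarray(elbow, dtype=float)
    forearm = np.asarray(wrist, dtype=float) - np.asarray(elbow, dtype=float)
    cos_angle = np.dot(upper_arm, forearm) / (
        np.linalg.norm(upper_arm) * np.linalg.norm(forearm)
    )
    # Clip to guard against rounding slightly outside [-1, 1].
    return float(np.degrees(np.arccos(np.clip(cos_angle, -1.0, 1.0))))

# Hypothetical joint positions (metres): forearm perpendicular to upper arm.
right_angle = elbow_angle_deg([0, 1, 0], [0, 0, 0], [1, 0, 0])
# Hypothetical fully extended arm.
straight = elbow_angle_deg([0, 1, 0], [0, 0, 0], [0, -1, 0])
```

Applied to each of the 80 skeleton frames of a trial, such a function would give one joint-angle time series per trial.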

2.2. Data Augmentation Using AAFT and Signal Processing

In contrast to mechanical or electrical signal measurement, it is difficult to measure a large number of consistent sEMG signals for a repetitive task due to muscle fatigue. Therefore, to prevent under- or over-fitting and increase the generalization ability of a neural network, a proper data augmentation technique must be applied. Both the measured sEMG and joint angle data are augmented by adopting the amplitude adjusted Fourier transform (AAFT) (Python code for AAFT can be found at https://github.com/manu-mannattil/nolitsa, accessed on 2 February 2021 [50]). We here recapitulate AAFT as follows [51]:

Generate a Gaussian sequence using a pseudo-random generator;

Reorder the Gaussian sequence according to the rank of the measured original data;

Perform a Fourier transform on the reordered sequence;

Randomize the phases of the transformed sequence, where the random phases are assigned separately for the cases of an even and an odd number of data samples;

Perform an inverse Fourier transform on the phase-randomized sequence;

According to the rank of the inverse-transformed sequence, reorder the measured original data; this yields the surrogate data.
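The steps above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the nolitsa code referenced above: the function name `aaft_surrogate` and the test signal are hypothetical, and the real-input FFT (`rfft`/`irfft`) is used so that the even and odd cases differ only in whether a Nyquist component exists and must stay real.

```python
import numpy as np

def aaft_surrogate(x, rng=None):
    """Return one AAFT surrogate of the 1-D series x (illustrative sketch)."""
    rng = np.random.default_rng() if rng is None else rng
    x = np.asarray(x, dtype=float)
    n = x.size
    # Steps 1-2: Gaussian sequence reordered to follow the rank order of x.
    ranks_x = np.argsort(np.argsort(x))
    reordered = np.sort(rng.standard_normal(n))[ranks_x]
    # Step 3: Fourier transform (half-spectrum of a real sequence).
    spectrum = np.fft.rfft(reordered)
    # Step 4: random phases; keep the mean (and, for even n, the Nyquist
    # component) unrotated so the inverse transform is a valid real series.
    phases = rng.uniform(0.0, 2.0 * np.pi, spectrum.size)
    phases[0] = 0.0
    if n % 2 == 0:
        phases[-1] = 0.0
    # Step 5: inverse Fourier transform.
    randomized = np.fft.irfft(spectrum * np.exp(1j * phases), n=n)
    # Step 6: reorder the original data according to the rank of the result.
    return np.sort(x)[np.argsort(np.argsort(randomized))]

# Hypothetical 80-sample signal, matching one trial length.
signal = np.sin(np.linspace(0.0, 6.0, 80)) + 0.01 * np.arange(80)
surrogate = aaft_surrogate(signal, np.random.default_rng(0))
```

Because the last step only permutes the original samples, the surrogate has exactly the same amplitude distribution as the measured data.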

The AAFT generates surrogates that resemble the original data in terms of temporal correlation, amplitude distribution, and power spectral density [51,52]. Since it is rather difficult to obtain a large amount of consistent sEMG data due to muscle fatigue, the AAFT is frequently used for data augmentation. In this study, the AAFT was employed for the same purpose; a similar application can be found in [53]. The ratio between the number of data after augmentation and that of the original data will be referred to as the level of data augmentation. For instance, if the total number of data was tripled, then the level of data augmentation was 3, denoted by AAFT(3).

After data augmentation by AAFT, moving root mean square (RMS) filtering was applied to both the sEMG and joint angle data (original and surrogates) with a sliding window length of 10. Recall that each sEMG channel as well as the elbow joint angle data contained 80 time series data samples. These data were integrated over every five samples, which yielded 8 × 16 for the 8-channel sEMG data and 1 × 16 for the elbow joint angle data, respectively. The sEMG data were intrachannel normalized and the elbow joint angle data were interchannel normalized.
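The processing chain described above can be sketched as follows. This is a minimal illustration with several assumptions that the text does not specify: the moving RMS keeps the signal length by zero-padding at the edges, integration is taken as a plain sum over non-overlapping blocks of five samples, and intrachannel normalization is taken to be per-channel min-max scaling. All function names and the random placeholder data are hypothetical.

```python
import numpy as np

def moving_rms(x, window=10):
    """Moving RMS along the last axis (assumed: same length, zero-padded edges)."""
    kernel = np.ones(window) / window
    mean_square = np.apply_along_axis(
        lambda row: np.convolve(row ** 2, kernel, mode="same"), -1, x
    )
    return np.sqrt(mean_square)

def integrate_blocks(x, block=5):
    """Sum over non-overlapping blocks of `block` samples (80 -> 16)."""
    n_blocks = x.shape[-1] // block
    trimmed = x[..., : n_blocks * block]
    return trimmed.reshape(*x.shape[:-1], n_blocks, block).sum(axis=-1)

def normalize_per_channel(x):
    """Assumed intrachannel normalization: min-max scaling of each row."""
    low = x.min(axis=-1, keepdims=True)
    high = x.max(axis=-1, keepdims=True)
    return (x - low) / (high - low)

rng = np.random.default_rng(0)
raw_emg = rng.standard_normal((8, 80))   # placeholder for one 8-channel trial
raw_angle = rng.random(80)               # placeholder for one joint-angle trial

emg_16 = normalize_per_channel(integrate_blocks(moving_rms(raw_emg)))
angle_16 = integrate_blocks(moving_rms(raw_angle))
```

With 80 samples per trial and blocks of five, each channel is reduced to 16 values, which is the per-channel length used when the images are assembled.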

2.3. Imputing a Muscle Activation to Joint Kinematics Relationship and Classification via CNN

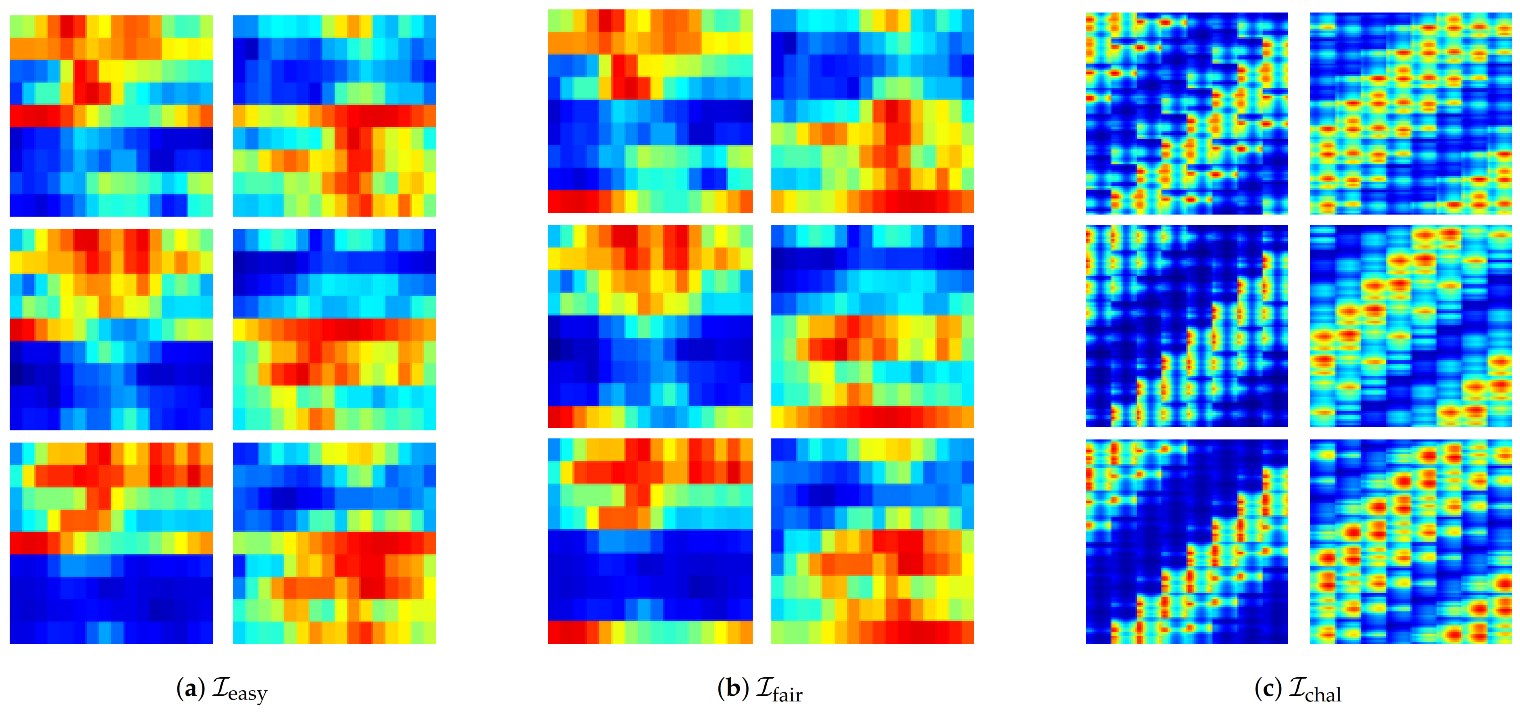

Figure 3 illustrates examples of the three input image types, denoted by the subscripts easy, fair, and chal (= challenging), which were produced by our proposed approach introduced below. The subscript represents the level of manipulation for the recognizability of input image features from human eyes’ point of view. For example, from Figure 3a, we could easily distinguish dumbbell curl and dumbbell kickback by the spectral color of the upper or lower stripes. In Figure 3b, in contrast, the bottom stripe seemed to be related to the upper stripes but separated, especially in the case of dumbbell curl. Indeed, Figure 3a was produced by interleaving the interchannel normalized elbow joint angle data in-between the biceps and triceps channels, whereas Figure 3b was produced by simply adding the elbow joint angle data at the bottom of the 8-channel sEMG data. From Figure 3c, we can see diagonal patterns, but the patterns themselves are rather challenging to describe.

The underlying rationale of the above manipulation was that we wanted to know whether producing an input image data set in consideration of human eyes’ point of view had an effect on classification via CNN. In particular, we wanted to investigate how it affected feature extraction for imputing a muscle activation to joint kinematics relationship, which could be inferred from the training loss and the test accuracy. In this sense, we summarized the feature characteristics of the three image types as follows:

Easy: the image contained features that were easy for human eyes to recognize, e.g., geometry, color, etc.

Fair: the image contained features that were fair for human eyes to recognize, e.g., simple rules, simple patterns, local differences, etc.

Chal: the image contained features that were challenging for human eyes to recognize, e.g., mathematically defined patterns such as correlation, attraction, bifurcation, fractal, etc.

The easy, fair, and chal images can be produced according to the manipulations introduced below. Consider the mth-channel sEMG data and the elbow joint angle data after signal processing; recall that both are of size 1 × 16. First, the easy image was defined by interleaving the elbow joint angle data in-between the biceps and triceps channels of the sEMG. Second, the fair image was defined by merging the elbow joint angle data at the bottom of the sEMG channels. Third, to define the chal image, we first defined two matrices, generated by replicating the sEMG data and the elbow joint angle data row- and column-wise, respectively. Both matrices are of the same size. The chal image was then defined as the Hadamard product (denoted by ⊙) of the two matrices; namely, the chal image consists of patches of the Hadamard product of the elbow joint angle data and each sEMG channel. Finally, all three images were visualized as heatmaps (width×height×RGB-channels) by upscaling from their original sizes. For this upscaling, bilinear interpolation was used. Selected examples of the three image types are presented in Figure 4.
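The three constructions can be sketched with NumPy as below. This is an illustration under assumptions, not the exact definitions: the index `SPLIT` separating biceps-side from triceps-side channels is hypothetical, and the chal layout shown is only one arrangement consistent with the description, chosen because it yields 128 × 128 = 16,384 values, the input size reported for this image type in Section 3.5. Random arrays stand in for the processed data.

```python
import numpy as np

rng = np.random.default_rng(0)
emg = rng.random((8, 16))    # placeholder: processed 8-channel sEMG (8 x 16)
angle = rng.random(16)       # placeholder: processed elbow joint angle (1 x 16)

SPLIT = 4  # hypothetical: rows 0-3 biceps-side, rows 4-7 triceps-side channels

# Easy: joint angle row interleaved between the two channel groups (9 x 16).
img_easy = np.vstack([emg[:SPLIT], angle[None, :], emg[SPLIT:]])

# Fair: joint angle row appended below all sEMG channels (9 x 16).
img_fair = np.vstack([emg, angle[None, :]])

# Chal (assumed layout): sEMG channels concatenated and replicated row-wise,
# joint angle tiled and replicated column-wise, then multiplied elementwise.
emg_rows = np.tile(emg.reshape(1, -1), (128, 1))              # 128 x 128
angle_cols = np.tile(np.tile(angle, 8)[:, None], (1, 128))    # 128 x 128
img_chal = emg_rows * angle_cols                              # Hadamard product
```

In this layout every 16 × 16 patch of `img_chal` is the product of the joint angle (along rows) with one sEMG channel (along columns), so each patch mixes kinematic and muscle information at all pairs of time indices.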

To train a model and classify the visualized dumbbell curl and dumbbell kickback, AlexNet was employed [54]. Figure 5 shows gym-workout classification via AlexNet with an example input image. Combining the five levels of data augmentation by AAFT with the three levels of manipulation for the recognizability of input image features gave 15 conditions. Under each condition, training and classification via CNN (AlexNet) were repeated 10 times, with the sequence of input images shuffled for each repetition. The performance of the CNN was recorded in terms of the training loss and the test accuracy at the end of each repetition.

2.4. Statistical Analysis and Research Questions

For statistical analysis, the level of data augmentation by AAFT was set as one factor and represented by AAFT(1), AAFT(2), AAFT(3), AAFT(4), and AAFT(5). For example, AAFT(4) denotes that the original data were augmented fourfold. The other factor was the level of manipulation for the recognizability of input image features, with the three levels easy, fair, and chal. Statistical analysis was performed to explain the effects of the two factors (either positive or negative) on CNN (AlexNet) performance in terms of the training loss and the test accuracy.

A repeated measures analysis of variance (rANOVA) was performed to identify the effects of the two factors with the significance level of (IBM SPSS Statistics, v25, Chicago, IL, USA). If the assumption of sphericity was violated for the main effects, the degrees of freedom were corrected using Greenhouse-Geisser estimates of sphericity. Bonferroni-adjusted pairwise comparison was used, and the result was reported in the form of “mean difference (standard error).” In statistically analyzing the CNN performance, the following research questions were addressed:

- (Q1)

“How did the level of data augmentation by AAFT affect CNN performance?”

- (Q2)

“How did the level of manipulation for the recognizability of input image features affect CNN performance?”

- (Q3)

“What was the optimal combination of the two factors for the best CNN performance?”

(Q1) and (Q2) could be answered by investigating the main effect of the level of data augmentation by AAFT and the level of manipulation for the recognizability of input image features, respectively. The answer for (Q3) could be found by observing the interaction effect between the two factors as well as pairwise comparison result with corresponding mean and standard deviation values.

3. Results

3.1. Overall CNN Performance

To evaluate CNN performance, the training:validation:test sets were organized in an 8:1:1 ratio. For instance, under AAFT(1), which corresponded to no data augmentation, the easy, fair, and chal image sets included 60 images each; accordingly, the numbers of data in the training:validation:test sets were 48:6:6. For each combination of AAFT level and image type, the evaluation was repeated 10 times. The data set was shuffled for every repetition.
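The shuffled 8:1:1 split can be sketched with the standard library as follows. The function name `split_8_1_1` and the use of integer placeholders for images are hypothetical; the sketch only illustrates how 60 images divide into 48:6:6 and how a new shuffle is drawn for each repetition.

```python
import random

def split_8_1_1(items, seed=None):
    """Shuffle items and split them into training, validation, and test sets."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_val = n_test = len(shuffled) // 10
    n_train = len(shuffled) - n_val - n_test
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test

# 60 placeholder image indices, as under AAFT(1); one split per repetition.
splits = [split_8_1_1(range(60), seed=rep) for rep in range(10)]
train, val, test = splits[0]
```

Under AAFT(k) the same function would apply to 60 × k images, keeping the ratio fixed while the absolute set sizes grow.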

Table 1 and Table 2 show the mean and standard deviation of the training loss and the test accuracy, respectively, for each combination of the two factors. Mean values presented in bold face represent the best performance (the lowest value for training loss and the highest value for test accuracy).

For better readability, we summarize the estimated mean values and pairwise comparison results of the two CNN performance metrics across the five levels of data augmentation by AAFT and the three levels of manipulation for the recognizability of input image features in Table 3. The statistical analysis results in Table 3 are explained in Section 3.2, Section 3.3 and Section 3.4.

3.2. Effects of the Level of Data Augmentation by AAFT

Effect on training loss: the rANOVA result indicated that there was a significant main effect of the level of data augmentation by AAFT on training loss, , . The pairwise comparison revealed that AAFT(1) vs. AAFT(2) yields mean(standard error) = , AAFT(1) vs. AAFT(3), , AAFT(1) vs. AAFT(4), , and AAFT(1) vs. AAFT(5), , indicating a significant difference between AAFT(1) and the other levels of data augmentation except AAFT(2). This means that the data augmentation had an effect on the training loss when it was greater than or equal to triple. In addition, the comparisons of AAFT(2) vs. the greater levels showed significant differences as AAFT(2) vs. AAFT(3), 0.60(0.13), , AAFT(2) vs. AAFT(4), 1.04(1.22), , and AAFT(2) vs. AAFT(5), 1.79(1.30), , implying that the training loss significantly decreased if the level of data augmentation was greater than or equal to triple. Between AAFT(3) and AAFT(4), no significant differences were found. AAFT(5) showed a significant difference against all the other levels.

Effect on test accuracy: Please note that test accuracy is presented as a percentage in Table 2; therefore, 100% corresponds to 1.00 from now on. The analysis yielded a significant main effect of the level of data augmentation by AAFT on test accuracy as well, . Pairwise comparisons revealed that AAFT(1) vs. AAFT(2), , , AAFT(1) vs. AAFT(3), , AAFT(1) vs. AAFT(4), , and AAFT(1) vs. AAFT(5), , suggesting that the test accuracy increased significantly compared to AAFT(1) when the level of data augmentation was greater than or equal to triple. There were no significant differences among the test accuracies under AAFT(2), AAFT(3), and AAFT(4). In contrast, AAFT(5) showed a significant difference from AAFT(1), , AAFT(2), , and AAFT(3), , but not from AAFT(4). This implies that the test accuracy improved significantly as the data were augmented by AAFT.

3.3. Effects of the Level of Manipulation for the Recognizability of Input Image Features

Effect on training loss: The level of manipulation for the recognizability of input image features showed the significant main effect on training loss, . However, pairwise comparison revealed no significant difference among , , and . The comparison of vs. only showed marginal tendency, .

Effect on test accuracy: The significant main effect of the level of manipulation for the recognizability of input image features on test accuracy was also found, , . Pairwise comparison yielded a significant difference for both vs. , , and vs. , , which indicated that both and yielded better test accuracy over . No significant difference, however, was found between and .

3.4. Interaction Effects of the Level of Data Augmentation by AAFT × the Level of Manipulation for the Recognizability of Input Image Features

Interaction effect on training loss: The analysis revealed that there was a significant interaction effect between the level of data augmentation by AAFT and the level of manipulation for the recognizability of input image features in training loss,

, as shown in the rightmost column in

Table 3. From

Figure 6a, we can see that the training losses of both

and

were greater than that of

under AAFT(1). They tended to decrease quickly and became less than that of

as the level of data augmentation increased. This indicates that the level of manipulation for the recognizability of input image features might have had a different effect on training loss depending on

,

, and

. Pairwise comparison revealed a marginal tendency for

vs.

under AAFT(5),

, but no significant difference was found.

Interaction effect on test accuracy: A significant interaction effect between the level of data augmentation by AAFT and the level of manipulation for the recognizability of input image features was also found in test accuracy,

, as reported in the rightmost column in

Table 3.

Figure 6b depicts that the test accuracy mostly tended to be better as AAFT level increased. In addition, the test accuracy of both

and

tended to be higher than that of

across all AAFT levels. Pairwise comparison indeed revealed that the test accuracy of both

and

was significantly higher than that of

up to AAFT(4). No significant difference was found between

and

across all AAFT levels.

3.5. Comparison Result for Various Neural Network Models

The comparison results for AlexNet and three-layer (input-hidden-output) neural networks (NNs) are presented in Table 4 and Table 5, where h1 through h4 represent the number of hidden nodes in the hidden layer. To evaluate the NNs, the easy and fair images (which consist of 9 × 16 real values) were flattened to 144 × 1 vectors and then applied to the NN as an input. Similarly, the chal image was converted into a 16,384 × 1 vector. Accordingly, the number of input nodes was set to 144, 144, and 16,384 for the easy, fair, and chal images, respectively.

Since the purpose of this comparison was to find classical NN architectures whose performance was equivalent to that of AlexNet, we increased the number of hidden nodes gradually from 1 to 4. A softmax layer with two nodes was adopted as the output layer, as the number of upper arm gym-workouts to be classified was two. The rest of the evaluation protocol was the same as introduced in Section 3.1. From Table 4 and Table 5, we can see that the training loss of the NNs is smaller than that of AlexNet. The mean performance of the NN becomes almost equivalent to or surpasses that of AlexNet when the number of hidden nodes is set to 4.
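To make the structure of such a three-layer network concrete, the following minimal sketch shows a forward pass with 144 input nodes, a small hidden layer, and a two-node softmax output. It is an illustration only: the hidden activation (tanh), the weight initialization, and the function names are assumptions, and no training is performed.

```python
import numpy as np

def init_params(n_in, n_hidden, n_out=2, rng=None):
    """Randomly initialised weights for an input-hidden-output network."""
    rng = np.random.default_rng() if rng is None else rng
    return {
        "W1": rng.normal(0.0, 0.1, (n_in, n_hidden)),
        "b1": np.zeros(n_hidden),
        "W2": rng.normal(0.0, 0.1, (n_hidden, n_out)),
        "b2": np.zeros(n_out),
    }

def forward(x, params):
    """Class probabilities for a batch of flattened images (rows of x)."""
    hidden = np.tanh(x @ params["W1"] + params["b1"])   # assumed activation
    logits = hidden @ params["W2"] + params["b2"]
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    exp = np.exp(logits)
    return exp / exp.sum(axis=1, keepdims=True)          # softmax over 2 classes

# Five placeholder 9 x 16 images, flattened to 144 inputs; 4 hidden nodes.
images = np.random.default_rng(0).random((5, 9, 16))
params = init_params(144, 4, rng=np.random.default_rng(1))
probs = forward(images.reshape(len(images), -1), params)
```

With four hidden nodes this network has only 144 × 4 + 4 + 4 × 2 + 2 = 590 parameters, which gives a sense of how small these baselines are relative to a deep CNN.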

Table 6 and Table 7 show the comparison results for AlexNet versus other deep neural network architectures. Interestingly, VGG-19 showed the best performance in terms of both training loss and test accuracy. For our upper arm gym-workout classification, the performances of ResNet-50 and Inception-v4 were lower than those of the other two architectures. All the comparison results are discussed further in the next section.

4. Discussion

Recall that the main idea of our approach is to merge joint kinematic data into sEMG data and visualize the result as a heatmap. We wanted the employed CNN (AlexNet) to impute a relationship between muscle activation and joint movement and solve an upper arm gym-workout classification problem. The novelty of the proposed approach is to control the level of manipulation for the recognizability of input image features as well as the level of data augmentation by AAFT. We wanted to reveal the effect of these two control factors on CNN training loss and test accuracy by statistical analysis. Furthermore, finding the optimal combination of the two factors for the best CNN performance was a part of our research questions.

Our idea of visualizing muscle activation together with joint movement as a heatmap was indeed inspired by approaches to demystify the functional connectivity of a brain across different regions [55,56]. However, existing research has reported that it is not straightforward to define a biopotential-kinematic relationship explicitly [57,58,59]; hence, we count on the CNN to impute this relationship implicitly. According to the statistical analysis, the two control factors had significant main effects on both training loss and test accuracy. This finding could therefore answer our first and second research questions.

Table 1 shows that

has a tendency of having a less value (which means better in terms of training loss) than

in overall. However,

has the least standard deviation than

except AAFT(1). This implies that

might contribute to the consistency of CNN training. From

Table 2, furthermore,

shows the best test accuracy compared to the others under AAFT(1) and AAFT(2). This can be interpreted as

is the most suitable choice when data augmentation is not considered. The effect of data augmentation becomes dominant as the level of AAFT increases; accordingly, the test accuracy for

and

reached approximately 99% after AAFT(3).

shows the monotonically increasing test accuracy as the level of AAFT increases. Especially, the test accuracy of

increases drastically from 76.6 under AAFT(4) to 95.8 under AAFT(5). This monotonically increasing characteristic can be an advantage over the others for determining design parameters when we consider more complicated motions with sufficiently large data samples.

The best test accuracy shown by

for AAFT(1) and AAFT(2) might be interpreted as if the relationship between muscle activation and joint movement was somehow successfully imputed via CNN. Additionally, the statistical analysis indicated that the test accuracy of both

and

is significantly higher than that of

up to AAFT(4). Nevertheless, pairwise comparison could not find any significant difference between

and

in terms of the performance metrics of CNN. This might be caused by either the small number of gym-workouts to be classified or the far more dexterous and powerful automatic feature extraction of the CNN. These issues can be addressed by increasing the number of gym-workouts to be classified and investigating the sparsity and entropy of

and

using methods presented in [60]. In addition, the interaction effect of the two control factors indicates that the optimal combination of a representation method for biopotential-kinematic data together with the level of data augmentation must be involved as a design parameter for an exercise monitoring system.

Regarding the comparison results, in Table 4, the NNs present a smaller training loss than AlexNet due to their simpler architecture. From Table 5, we can see that the NNs outperform AlexNet if the number of hidden nodes is greater than 2; however, further evaluation and investigation are needed for a larger class of upper arm gym-workouts. In the comparison among deep neural networks presented in Table 6 and Table 7, VGG-19 shows the best performance. This result could be expected since VGG-19 consists of enhanced convolution and pooling layers compared to AlexNet. For both AlexNet and VGG-19,

yields the best performance under AAFT(1). The training of Inception-v4 improves drastically as the level of AAFT increases, and its test accuracy reaches approximately 90% under AAFT(5). This indicates that Inception-v4 requires more data than AlexNet and VGG-19. According to Table 7, the training of ResNet-50 might be premature due to a lack of data samples.

5. Conclusions

In this paper, we proposed an approach to the upper arm gym-workout classification problem based on the spatio-temporal features of sEMG and joint kinematic data. First, in our approach, two upper arm gym-workouts, dumbbell curl (target muscle: biceps brachii) and dumbbell kickback (target muscle: triceps brachii), were demonstrated by a professional trainer. During the demonstration, sEMG data samples and elbow joint angle data samples were measured and stored by the Myo armband and Kinect v2, respectively, every 20 ms. Next, after RMS filtering and integration, both processed data were merged into and visualized as one image in consideration of the level of manipulation for the recognizability of input image features from human eyes’ point of view. Finally, a CNN (AlexNet) was trained with the visualized image data set to impute a relationship between muscle activation and joint kinematics as well as to solve the gym-workout classification problem.

The statistical result on CNN performance metrics showed that our approach, controlling the level of manipulation for the recognizability, had a significant main effect on CNN performance; so did the level of data augmentation. We also found interaction effects between the two control factors, which should be considered to find the optimal combination of the level of data augmentation and the level of manipulation for the recognizability of input image features as a design parameter. Pairwise comparison did not reveal any significant difference when the input image data were visualized as or . However, both visualization approaches outperformed .

The contributions and innovations of our findings can be summarized as follows. First, this study proposes a novel approach to approximate a relationship between muscle physiology and joint kinematics via CNN feature extraction. Second, by providing a systemic procedure as well as a quantitative analysis, this study advances the state of the art in the problem of developing exercise monitoring systems. Finally, the outcomes related to the level of AAFT and the visualization technique can be utilized to determine design parameters when developing a compact and affordable exercise monitoring system.

Future studies should increase the number of gym-workouts to be classified and investigate the sparsity and the entropy of the input image data according to the level of manipulation for the recognizability. Based on the findings in this study, the proposed approach will serve as a core for the development of an exercise monitoring system by which a trainee’s muscular physiology as well as joint kinematics are properly monitored.