1. Introduction

Bridges are prone to deterioration caused by external loads, such as traffic and environmental loading, or by natural disasters; therefore, structural health monitoring (SHM) is critical to ensure the safe and reliable operation of bridges during their service life. Numerous vibration-based damage detection techniques have been studied for structural health monitoring [1,2,3,4,5,6,7,8,9]. They can be classified into two groups: (1) parametric model-based methods, which use a finite-element (FE) model of a structure and update its parameters using acquired sensor data, and (2) data-driven vibration-based damage identification techniques, which use a database of measurements to fit a statistical model by extracting features (e.g., natural frequencies, mode shapes, modal flexibility, and curvature) and then assess the condition of the structure. Parametric model-based methods can estimate structural properties, such as the modulus of elasticity and the moment of inertia, through optimization, but the precision of these estimates depends heavily on the accuracy of the initial FE model and on the optimization method. Data-driven methods have received greater attention because they are simple to implement. However, features such as mode shapes and natural frequencies may not be extracted properly from measurements because of measurement noise and poor excitation of the structure, resulting in inaccurate damage detection.

Recently, deep-learning-based damage detection (DLDD) has emerged as an alternative [10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26] to conventional data-driven approaches. DLDD automatically learns complex features other than dynamic characteristics from raw measurements and performs nonparametric nonlinear regression for damage detection. Lin et al. [10] proposed a time-series one-dimensional convolutional neural network (1-D CNN) that extracts features directly from only 20 s of raw acceleration without any hand-crafted feature extraction. The training datasets were simulated with an FE beam model subjected to random excitation, and two different loss functions were used to locate the damage and to estimate its extent. Zhang et al. [11] used a 1-D CNN architecture similar to that of Lin et al. [10] to classify the states of a bridge. They used 0.6-s segments of time-series measurements as input and validated the performance of the trained network experimentally. Lee et al. [12] proposed an autoencoder-based deep neural network for detecting tendon damage in a prestressed girder and validated it numerically using 20 s of raw measurements. Using 2-D convolution, Khodabandehlou et al. [13] converted time-series measurements into images and used a 2-D CNN for damage state identification. Although these studies have shown that CNNs can be used for automated damage detection, ambient vibration testing requires a measurement time long enough for the major vibration modes to be clearly identified. Therefore, increasing the input length of the deep-learning network is critical for accurate structural damage detection.

To address this issue, Guo et al. [14] and Pathirage et al. [15] used mode shapes and natural frequencies as inputs. Because the dimensions of the modal parameters are fixed by the number of sensors, these parameters can be extracted from ambient vibration tests of any duration and used without adjusting the input size. However, because mode shapes are spatially sparse, the accuracy of these methods largely depends on the number of sensors installed on the structure.

Table 1 summarizes the type of input and deep-learning model used for each method related to automated damage detection.

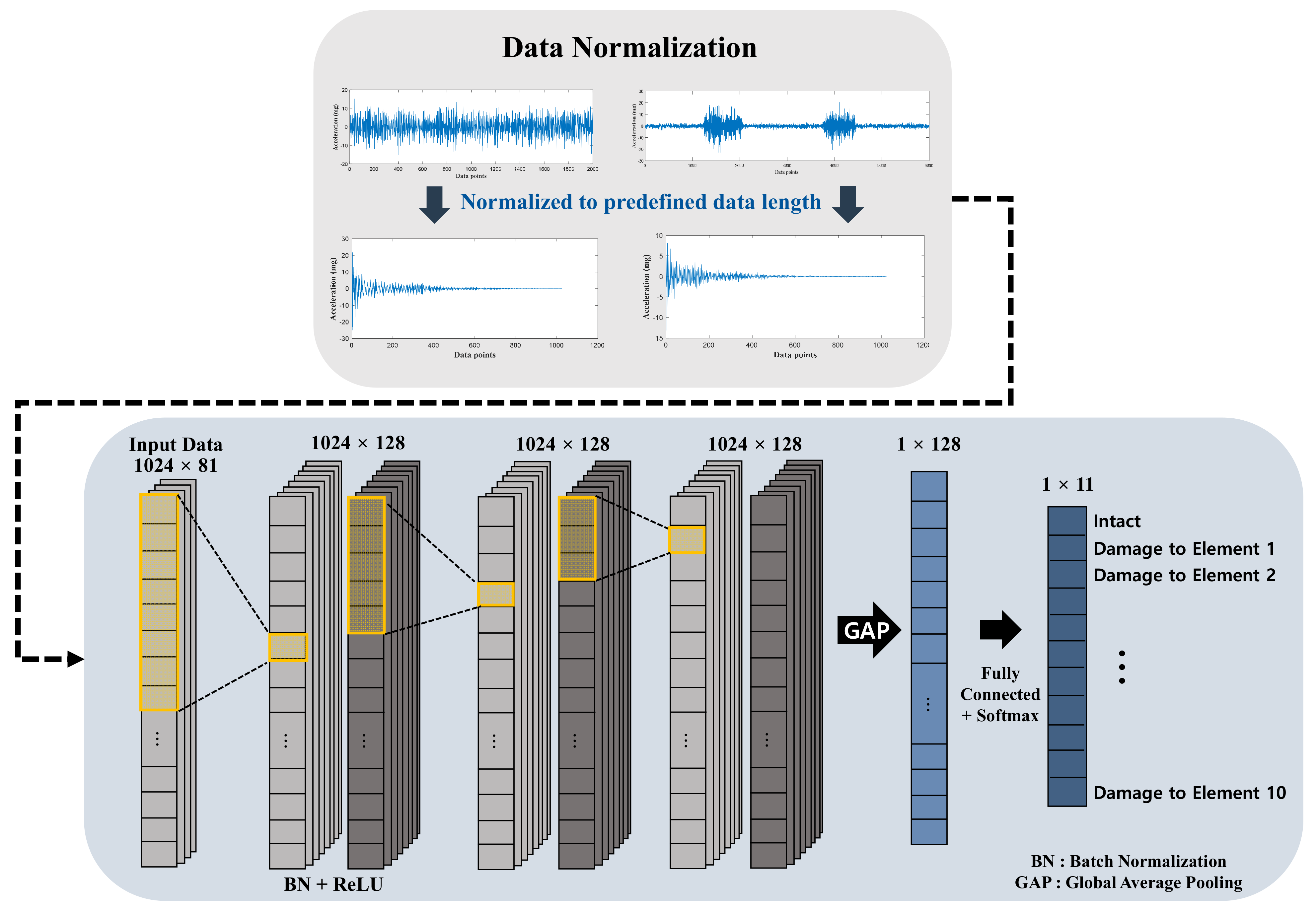

In this report, a time-series deep neural network for automated structural damage detection is presented that combines a data normalization technique with a 1-D CNN. The data normalization technique converts raw measurements of arbitrary length into free-vibration responses of a specified size while preserving the excitation quality of the measurements, and the 1-D CNN detects and localizes damage on the structure.

For training and validation, the beam was excited with random and traffic loadings for 20 s, and damage was simulated as a 20%, 30%, or 50% stiffness loss in a single element. After training, the network was tested on inputs of untrained lengths and on randomly selected damage severities between 20% and 50%.

The remainder of this report is organized as follows. Section 2 explains the proposed data normalization technique and the CNN structure. In Section 3, datasets for training and validation are generated through numerical simulations of a 10-element beam model with 20%, 30%, or 50% damage in a single randomly selected element. Both random and traffic loading excitations are used so that the performance of the proposed network can also be validated under nonstationary traffic loading, which simulates the conditions of ambient field vibration testing. Section 4 describes the application of the trained model to new datasets with different measurement times and damage severities and discusses the accuracy of the proposed method. Finally, Section 5 summarizes the study and presents its conclusions.

3. Numerical Validation

3.1. Simulation Model

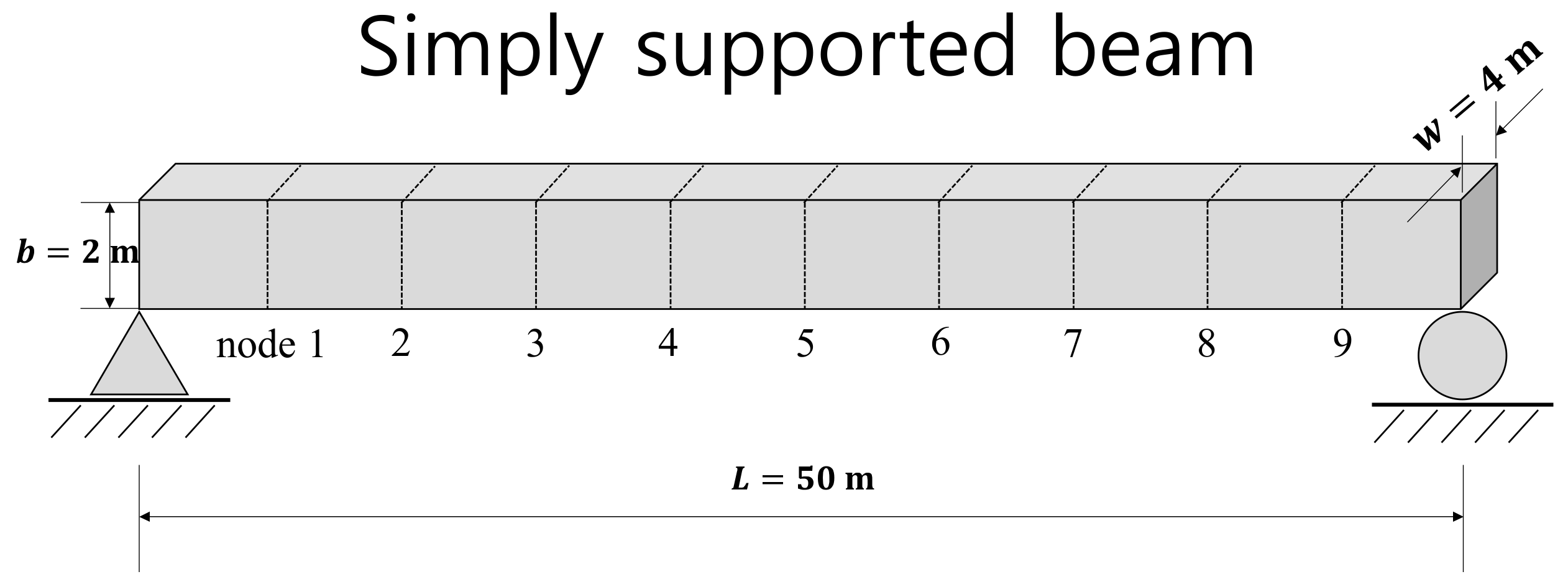

Numerical simulations were conducted to generate a database for the proposed automated structural damage detection method. A simply supported beam with a length of 50 m was modeled with 10 Euler–Bernoulli beam elements (see Figure 4). The cross section of the model was 4 × 2 m (width × height), the modulus of elasticity was 210 GPa, the density was 7850 kg/m³, and the damping ratio was 2%. The first three natural frequencies of the intact beam were 1.85, 7.52, and 16.8 Hz. Damage was simulated by reducing the flexural rigidity of the damaged element by 20%, 30%, or 50%.
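As a plausibility check of the parameters above, the natural frequencies can be recomputed with a small finite-element model. The sketch below is our own illustration, not the authors' Simulink model: it assumes 10 Euler–Bernoulli elements with consistent mass matrices, supports that restrain only the transverse displacement at both ends, and damage applied as a fractional loss of EI in one element. The function name `beam_frequencies` and its `damage` argument are hypothetical, and element indices are 0-based (element 5 in the text is index 4). Damping is ignored because it does not enter the undamped natural frequencies.

```python
import numpy as np

def beam_frequencies(n_elem=10, length=50.0, width=4.0, height=2.0,
                     E=210e9, rho=7850.0, damage=None):
    """Undamped natural frequencies (Hz) of a simply supported beam.

    damage: optional dict {element_index: stiffness_loss}, e.g. {4: 0.5}
    reduces EI of element index 4 by 50% (hypothetical interface).
    """
    le = length / n_elem
    area = width * height
    inertia = width * height**3 / 12.0
    n_dof = 2 * (n_elem + 1)              # deflection + rotation per node
    K = np.zeros((n_dof, n_dof))
    M = np.zeros((n_dof, n_dof))
    k_shape = np.array([[12, 6*le, -12, 6*le],
                        [6*le, 4*le**2, -6*le, 2*le**2],
                        [-12, -6*le, 12, -6*le],
                        [6*le, 2*le**2, -6*le, 4*le**2]])
    m_shape = np.array([[156, 22*le, 54, -13*le],
                        [22*le, 4*le**2, 13*le, -3*le**2],
                        [54, 13*le, 156, -22*le],
                        [-13*le, -3*le**2, -22*le, 4*le**2]])
    for e in range(n_elem):
        loss = (damage or {}).get(e, 0.0)
        EI = E * inertia * (1.0 - loss)   # damaged element: reduced flexural rigidity
        dofs = slice(2 * e, 2 * e + 4)
        K[dofs, dofs] += EI / le**3 * k_shape
        M[dofs, dofs] += rho * area * le / 420.0 * m_shape   # consistent mass
    # simply supported: remove the deflection DOFs of the two end nodes
    free = [d for d in range(n_dof) if d not in (0, 2 * n_elem)]
    K = K[np.ix_(free, free)]
    M = M[np.ix_(free, free)]
    # K phi = w^2 M phi  ->  standard symmetric problem via Cholesky of M
    L_inv = np.linalg.inv(np.linalg.cholesky(M))
    omega2 = np.linalg.eigvalsh(L_inv @ K @ L_inv.T)
    return np.sqrt(omega2) / (2 * np.pi)

intact = beam_frequencies()
damaged = beam_frequencies(damage={4: 0.5})   # 50% EI loss in element 5
print(np.round(intact[:3], 2), np.round(damaged[:3], 2))
```

For the intact beam this yields values close to the analytical f_n = (n²π / 2L²)·√(EI/ρA), i.e., about 1.88, 7.5, and 16.9 Hz. The small gap from the reported 1.85 Hz may come from a different mass formulation in the original model, which is an assumption on our part.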

To excite the structure under different loading cases, two loading conditions were considered: random excitation and traffic loading. Random excitation was used to validate the proposed method under ideal conditions in which the entire frequency spectrum is excited, so that modal parameters can be identified precisely. Traffic loading was considered to represent the actual excitation of a structure, which requires a sufficiently long measurement time for accurate modal analysis.

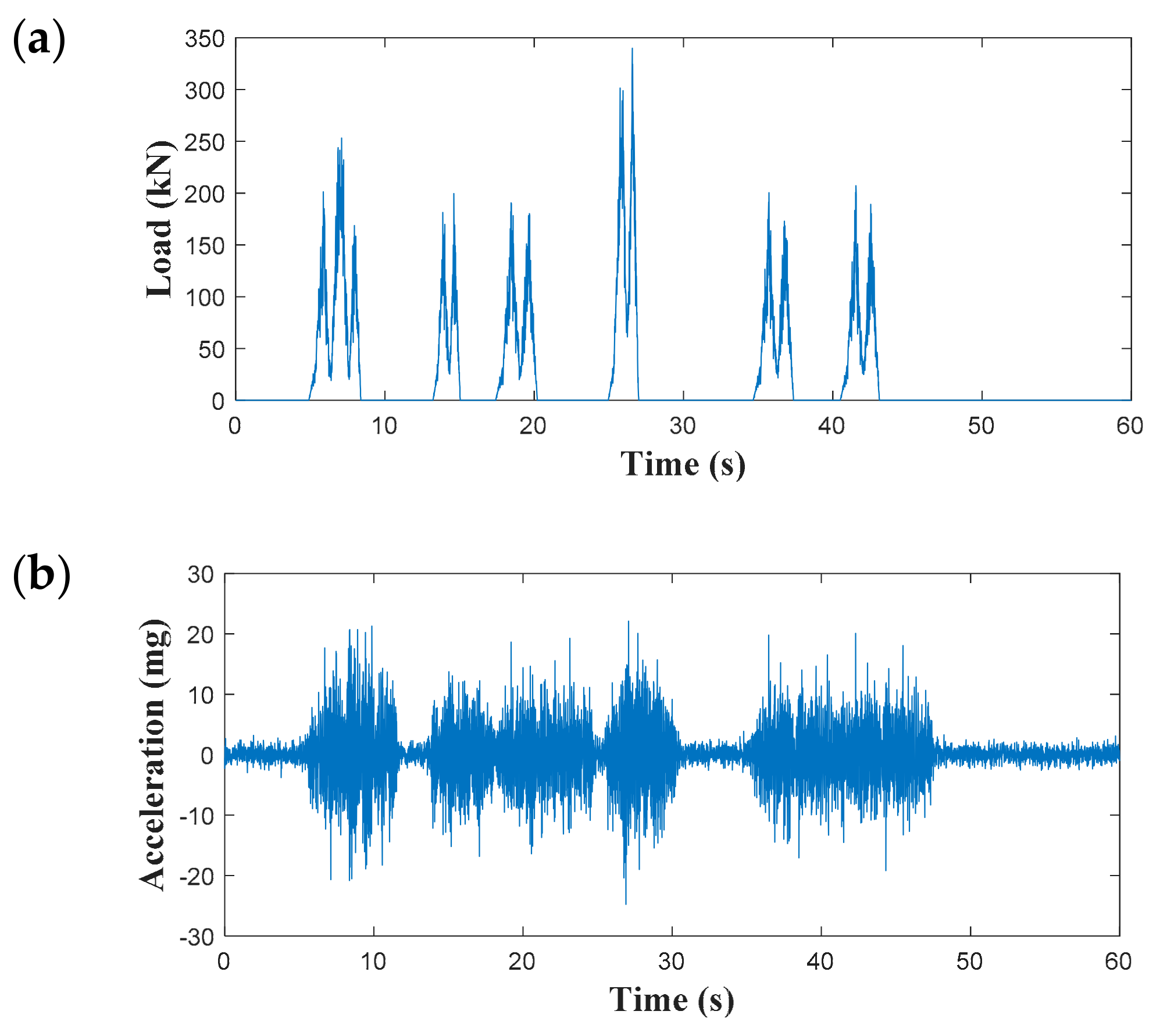

Random excitation was modeled as a normally distributed force with a mean of 0 and a standard deviation of 20 kN, applied at a single randomly selected node for each simulation case. Traffic loading was modeled as a three-axle truck moving across the beam from left to right (nodes 1 to 9) at different speeds. The front axle load was 35 kN and each of the two rear axle loads was 145 kN, with a 10% random variation added to each axle load. The spacing between the front and rear axles was 4.3 m, and that between the two rear axles was 9.0 m.
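The two load models above can be sketched as nodal force histories. This is an illustration under stated assumptions, not the authors' Simulink implementation: each moving axle load is shared between the two nodes of the element it is on by linear interpolation, the 10% variation is drawn once per truck from a uniform distribution, and the random force is applied at an interior node (nodes 1 to 9, since the end nodes are supports). The function names and the `depart` parameter are hypothetical.

```python
import numpy as np

def truck_nodal_forces(speed_kmh, t, rng, length=50.0, n_elem=10,
                       axle_loads=(35e3, 145e3, 145e3), axle_gaps=(4.3, 9.0),
                       variation=0.10, depart=0.0):
    """Nodal forces (N), shape (n_elem + 1, len(t)), of one truck crossing left to right."""
    v = speed_kmh / 3.6                                    # km/h -> m/s
    le = length / n_elem
    offsets = np.concatenate(([0.0], np.cumsum(axle_gaps)))  # 0, 4.3, 13.3 m behind front
    loads = np.asarray(axle_loads) * (1 + rng.uniform(-variation, variation, 3))
    F = np.zeros((n_elem + 1, t.size))
    for p, x0 in zip(loads, offsets):
        x = v * (t - depart) - x0                          # axle position on the span
        on = (x >= 0) & (x <= length)
        e = np.minimum((x[on] // le).astype(int), n_elem - 1)  # element under the axle
        xi = x[on] / le - e                                # local coordinate in [0, 1]
        cols = np.nonzero(on)[0]
        np.add.at(F, (e, cols), p * (1 - xi))              # share load between the
        np.add.at(F, (e + 1, cols), p * xi)                # element's two end nodes
    return F

def random_nodal_forces(n_steps, rng, n_nodes=11, std=20e3):
    """Zero-mean Gaussian force (std 20 kN) at one randomly chosen interior node."""
    F = np.zeros((n_nodes, n_steps))
    node = rng.integers(1, n_nodes - 1)                    # interior nodes 1..9
    F[node] = rng.normal(0.0, std, n_steps)
    return F

rng = np.random.default_rng(0)
t = np.arange(0, 60, 0.01)                                 # 60 s at 100 Hz
# several trucks with random departure times simply superpose
F_traffic = sum(truck_nodal_forces(40, t, rng, depart=d) for d in rng.uniform(0, 30, 3))
F_random = random_nodal_forces(t.size, rng)
```

Linear interpolation keeps the sum of nodal forces equal to the total axle load on the span; consistent (Hermite) nodal loads would be an equally valid alternative.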

Sensor noise was assumed to be white Gaussian noise with a noise density of . The sampling rate was set to 100 Hz, and the responses were generated in MATLAB Simulink with the ODE8 solver.
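A noise density is converted into a per-sample standard deviation through the measurement bandwidth. The sketch below assumes a one-sided density in (acceleration unit)/√Hz over the Nyquist band fs/2, so σ = density · √(fs/2). The density value is not reproduced in this text, so `noise_density` in the example is a placeholder, not the authors' value, and `add_sensor_noise` is a hypothetical helper.

```python
import numpy as np

def add_sensor_noise(acc, noise_density, fs=100.0, rng=None):
    """Add white Gaussian noise defined by a one-sided noise density.

    Over the band 0..fs/2 the noise variance is density**2 * fs / 2.
    """
    rng = np.random.default_rng() if rng is None else rng
    sigma = noise_density * np.sqrt(fs / 2.0)
    return acc + rng.normal(0.0, sigma, size=acc.shape)

clean = np.zeros((9, 6000))                          # 9 channels, 60 s at 100 Hz
noisy = add_sensor_noise(clean, noise_density=1e-3)  # placeholder density
```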

3.2. Data Generation for Training

A total of 24,000 datasets were generated for the proposed model, half under random excitation and half under traffic loading; 20,000 datasets were used for training and 4000 for validation. Two training cases were conducted to examine the effect of the data normalization pre-processing step (see Table 3). In Case 1, every dataset had a sampling time of 60 s, whereas in Case 2 the sampling time varied from 20 to 60 s. Each case includes random excitation and traffic loading datasets. Note that the 20 to 60 s data were created by randomly truncating the 60 s data. The two cases were compared to determine how the data length (i.e., the duration of beam excitation) affects the learning outcome and how data normalization affects it. To label the location of damage, 11 categories were prepared. Label 0 indicates the intact condition, whereas labels 1 to 10 indicate a single damage location corresponding to the element number. Because there were 10 damage labels (i.e., elements 1 to 10) and only one label for the intact condition, the number of intact datasets was increased to equal the total number of damaged datasets to address class imbalance [32,33,34,35].
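The truncation and labeling steps can be sketched as follows. Two points are our assumptions rather than details given in the text: truncation keeps a leading segment of random length, and "intact equals the total number of damaged datasets" is read as half of the labels being 0, with the other half spread uniformly over elements 1 to 10. Both function names are hypothetical.

```python
import numpy as np

FS = 100  # Hz, sampling rate given in Section 3.1

def random_truncate(record, rng, min_s=20, max_s=60, fs=FS):
    """Keep a leading segment of random length between min_s and max_s seconds."""
    n_keep = int(rng.integers(min_s * fs, max_s * fs, endpoint=True))
    return record[:, :n_keep]

def balanced_labels(n, rng, n_elements=10):
    """Label 0 = intact, 1..n_elements = damaged element; intact = half of all labels."""
    n_intact = n // 2
    damaged = rng.integers(1, n_elements, size=n - n_intact, endpoint=True)
    labels = np.concatenate((np.zeros(n_intact, dtype=int), damaged))
    rng.shuffle(labels)
    return labels

rng = np.random.default_rng(0)
case2_record = random_truncate(np.zeros((9, 60 * FS)), rng)   # 9 channels, 20 to 60 s
labels = balanced_labels(20000, rng)
```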

Figure 5 shows the random excitation and the resulting acceleration response measured at node 1. To simulate traffic loading, three to five AASHTO trucks were set to cross the beam at random with velocities of 30, 40, or 50 km/h, as summarized in Table 3. The trucks depart at random times so that a variety of acceleration amplitudes is generated (see Figure 6).

The simulated acceleration measurements were normalized by the natural excitation technique (NExT) into 81 × 1024 input matrices and fed into the 1-D CNN.
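The key property of this step is that records of any length map to a matrix of fixed size. The sketch below reads 81 × 1024 as all 9 × 9 channel pairs times 1024 correlation lags, which is our interpretation. It uses a common FFT-based, unbiased cross-correlation estimator; the paper's exact NExT estimator (averaging, windowing, reference-channel choice) is described in Section 2 and may differ. The name `next_correlation_matrix` is hypothetical.

```python
import numpy as np

def next_correlation_matrix(acc, n_lags=1024):
    """Map a (channels, samples) record of any length >= n_lags to a fixed
    (channels**2, n_lags) matrix of cross-correlation functions."""
    n_ch, n = acc.shape
    if n < n_lags:
        raise ValueError("record is shorter than n_lags samples")
    x = acc - acc.mean(axis=1, keepdims=True)
    # zero-pad to at least 2n - 1 points so the FFT correlation is not circular
    n_fft = 1 << int(np.ceil(np.log2(2 * n - 1)))
    X = np.fft.rfft(x, n=n_fft, axis=1)
    norm = n - np.arange(n_lags)          # overlapping samples at each lag
    rows = []
    for i in range(n_ch):                 # reference channel
        for j in range(n_ch):             # response channel
            # R_ij(tau) = sum_t x_j(t + tau) x_i(t) / (n - tau), tau = 0..n_lags-1
            r = np.fft.irfft(X[j] * np.conj(X[i]), n=n_fft)[:n_lags]
            rows.append(r / norm)
    return np.asarray(rows)

rng = np.random.default_rng(0)
r20 = next_correlation_matrix(rng.normal(size=(9, 2000)))   # 20 s at 100 Hz
r60 = next_correlation_matrix(rng.normal(size=(9, 6000)))   # 60 s at 100 Hz
print(r20.shape, r60.shape)  # (81, 1024) (81, 1024)
```

Under NExT, these correlation functions behave like free-vibration decays, which is why the network input keeps the modal content of a long record while its size no longer depends on the record length.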

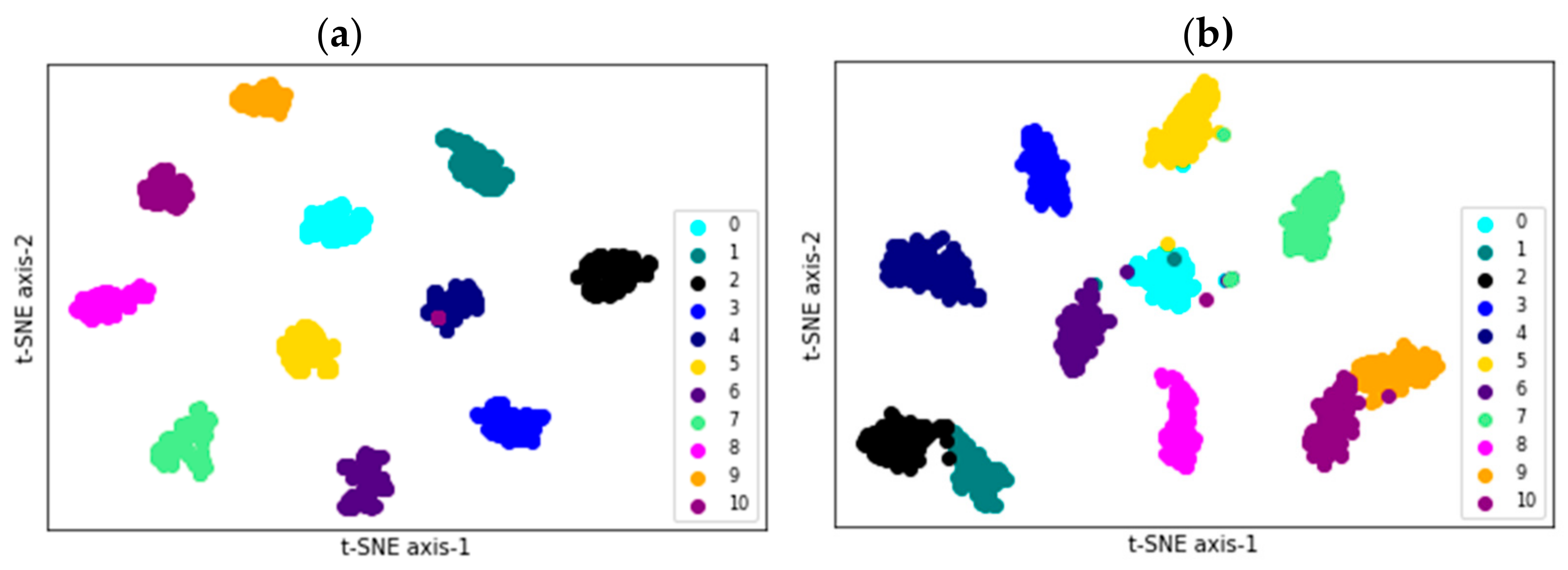

3.3. Training and Validation

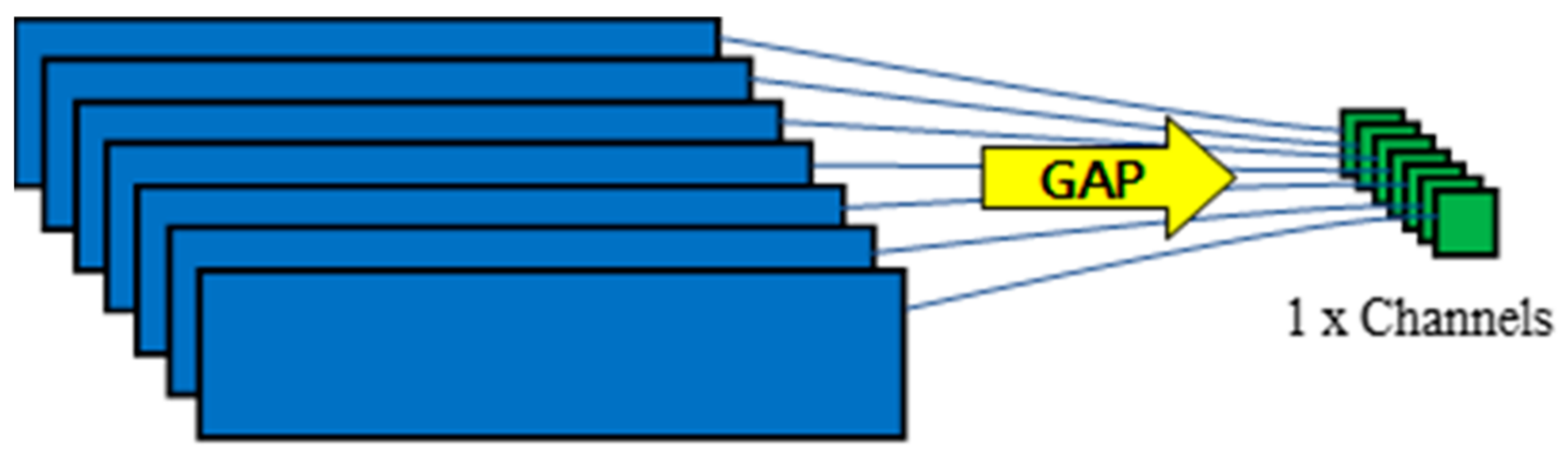

The proposed deep network was trained on the simulated acceleration measurements for automated damage localization. The nine-channel accelerations were normalized using NExT and then standardized to have a mean of 0 and a standard deviation of 1. The preprocessed input data were fed to a series of three 1-D convolution layers, each with 128 filters, with kernel sizes of 8, 5, and 3, respectively. For better convergence, the kernels were initialized with the uniform He initialization scheme proposed by He et al. [36], which samples weights from a uniform distribution whose bounds are scaled by the number of input units. To mitigate overfitting, a batch normalization layer was added after each 1-D convolution layer, and the convolution blocks were followed by a global average pooling (GAP) layer. The detailed training configurations are summarized in Table 4. An Intel i9-9900X CPU and an NVIDIA RTX 2080 Ti GPU were used for training, and a single training epoch took 12 s.
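The data flow through this architecture can be illustrated with an untrained NumPy forward pass. This is a sketch, not the authors' model: it treats the 81 correlation rows as input channels and the 1024 lags as time steps, uses ReLU activations (not stated in the text), omits batch normalization for brevity, and standardizes each input matrix as a whole (the text does not say per what unit). He-uniform initialization draws from U(−l, l) with l = √(6 / fan_in), fan_in = input channels × kernel size. All function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def he_uniform(rng, out_ch, in_ch, k):
    """He-uniform init: U(-limit, limit) with limit = sqrt(6 / fan_in)."""
    limit = np.sqrt(6.0 / (in_ch * k))
    return rng.uniform(-limit, limit, size=(out_ch, in_ch, k))

def init_params(rng, in_ch=81, filters=128, kernels=(8, 5, 3), n_classes=11):
    conv, c = [], in_ch
    for k in kernels:
        conv.append(he_uniform(rng, filters, c, k))
        c = filters
    dense = he_uniform(rng, n_classes, filters, 1)[:, :, 0]
    return {"conv": conv, "W": dense, "b": np.zeros(n_classes)}

def conv1d_same(x, w):
    """x: (in_ch, steps), w: (out_ch, in_ch, k) -> (out_ch, steps), zero padding."""
    k = w.shape[2]
    left = (k - 1) // 2
    xp = np.pad(x, ((0, 0), (left, k - 1 - left)))
    windows = sliding_window_view(xp, k, axis=1)            # (in_ch, steps, k)
    return np.einsum("ctk,ock->ot", windows, w, optimize=True)

def forward(x, params):
    h = (x - x.mean()) / x.std()                  # standardize: mean 0, std 1
    for w in params["conv"]:
        h = np.maximum(conv1d_same(h, w), 0.0)    # convolution + ReLU (BN omitted)
    features = h.mean(axis=1)                     # global average pooling -> (128,)
    logits = params["W"] @ features + params["b"]
    p = np.exp(logits - logits.max())
    return p / p.sum()                            # softmax over labels 0..10

rng = np.random.default_rng(0)
params = init_params(rng)
probs = forward(rng.normal(size=(81, 1024)), params)   # untrained: near-arbitrary output
```

Because GAP averages over the time axis, the classifier head always sees 128 features, so its parameter count does not grow with the number of time steps.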

3.4. Training Results and Discussion

Table 5 shows the classification accuracy for each validation dataset. According to these results, Case 1 shows better classification capability under both loading conditions. This suggests that training with longer records, which represent a higher degree of excitation, provides more information for training the model. However, the longer the data, the longer the model takes to train and the harder it is to use in real-world applications. For example, training on 60-s raw records with an input size of 9 × 6000 takes 34 s per epoch, whereas training on the normalized 81 × 1024 inputs takes 13 s. Thus, the proposed data normalization step is essential for efficient learning in time-series deep-learning models for structural damage detection.
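A rough operation count helps explain why the normalized input trains faster even though it holds more values (82,944 vs 54,000). The sketch below assumes the three same-padded convolution layers described in Section 3.3 (128 filters, kernels 8, 5, 3) and counts only their multiply-accumulate operations per forward pass; `conv_macs` is a hypothetical helper.

```python
def conv_macs(steps, in_ch, filters=(128, 128, 128), kernels=(8, 5, 3)):
    """Multiply-accumulate operations of a stack of same-padded 1-D convolutions."""
    total, c = 0, in_ch
    for f, k in zip(filters, kernels):
        total += steps * c * k * f
        c = f
    return total

raw = conv_macs(6000, 9)      # 60 s of raw 9-channel acceleration at 100 Hz
norm = conv_macs(1024, 81)    # NExT-normalized 81 x 1024 input
print(raw, norm, round(raw / norm, 2))  # 841728000 219152384 3.84
```

Only the first layer depends on the number of input channels; the deeper 128-channel layers scale with the number of time steps, which drops from 6000 to 1024. The measured epoch-time ratio (34 s vs 13 s) is smaller than this count suggests, since wall-clock time also includes costs the count ignores.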

The proposed method was compared with the model proposed by Lin et al. [10]. Because the existing model was originally designed to train on raw acceleration data, 10.24 s of raw acceleration data (size 9 × 1024) was used for its training. Furthermore, to demonstrate the proposed data normalization, the existing model was also trained on the Case 1 dataset.

As shown in Table 6, under random excitation the classification accuracy is 99.90% for the proposed method and 81.20% for the existing model; that is, the proposed method achieves 18.70 percentage points higher accuracy in damage classification. Notably, the traffic-loading results show the advantage of the proposed model even more clearly: the proposed method achieved 99.20% accuracy, whereas the existing model achieved 59.80%. This difference results from the proposed data normalization, which aggregates frequency-domain information, whereas the existing model uses only instantaneous time-domain information.

Applying the data normalization method to the existing model significantly improved its classification accuracy. However, the training accuracy of the existing model reached 100% at the 98th epoch, whereas its validation accuracy did not exceed 93%, which indicates overfitting. This overfitting is attributed to the max-pooling layers that the existing model uses after its convolutional layers. In contrast, the GAP layer used in the proposed method in place of max-pooling layers alleviated the overfitting and yielded better performance.

5. Conclusions

An automated damage detection method using a deep neural network was presented. The proposed method makes two main contributions. (1) Data normalization is performed using NExT to compress and normalize the input data length. Because the acquired data can vary in length according to the measurement environment and purpose, normalizing and quantifying the data length is critical for damage detection with deep neural networks, which work only for the data lengths they were trained on. (2) A CNN is used to localize damaged elements. The proposed convolutional network localizes damaged elements from normalized input acceleration signals without any damage-sensitive feature extraction process.

A numerical model of a simply supported beam was excited by random ambient loading and traffic loading, and acceleration responses were extracted at nine nodes. Sensor noise was included to represent realistic measurements. The noisy acceleration signals from the nine nodes were correlated with each other to normalize and quantify the data length, generating a fully correlated response matrix. This matrix is the input of the deep neural network, and the output is the location of the damaged element.

To train the proposed model, datasets were generated using random excitation and traffic loading, and a single damage was randomly applied to one of the elements. A total of 20,000 datasets for training (10,000 for each load) and 4000 datasets for validation (2000 for each load) were used. The number of intact datasets (category 0) was made larger than that of each damage category (categories 1 to 10) so that it matched the total number of damaged datasets. To show the effect of data normalization, two training cases were compared. The resulting classification accuracy for Case 1 was 99.90% and 99.20% for the random excitation and traffic loading datasets, respectively. These results show that using the data normalization technique in the pre-processing step allows longer records to be used for training, which enhances the classification capability of the trained model.

The proposed method was validated through tests under dataset-generation conditions different from those used for training. For random excitation, the sampling time was increased. For traffic loading, the velocity, number of trucks, and sampling time were increased. In addition, for both loads the damage severity was chosen at random as either 0% or a value between 20% and 50%. The classification accuracy was 100% for random excitation and 99.00% for traffic loading.

Future work will focus on problems caused by low damage severity. Deep neural networks other than the 1-D CNN will be studied within the proposed method to improve classification accuracy. Furthermore, to enhance the effectiveness of the proposed method, the classification of multiple damage locations and the prediction of damage severity will be studied.