Learn-CIAM: A Model-Driven Approach for the Development of Collaborative Learning Tools

Abstract

1. Introduction and Motivation

2. Related Works

3. The Learn-CIAM Proposal: Supporting Model-Driven Development of Collaborative Learning Tools

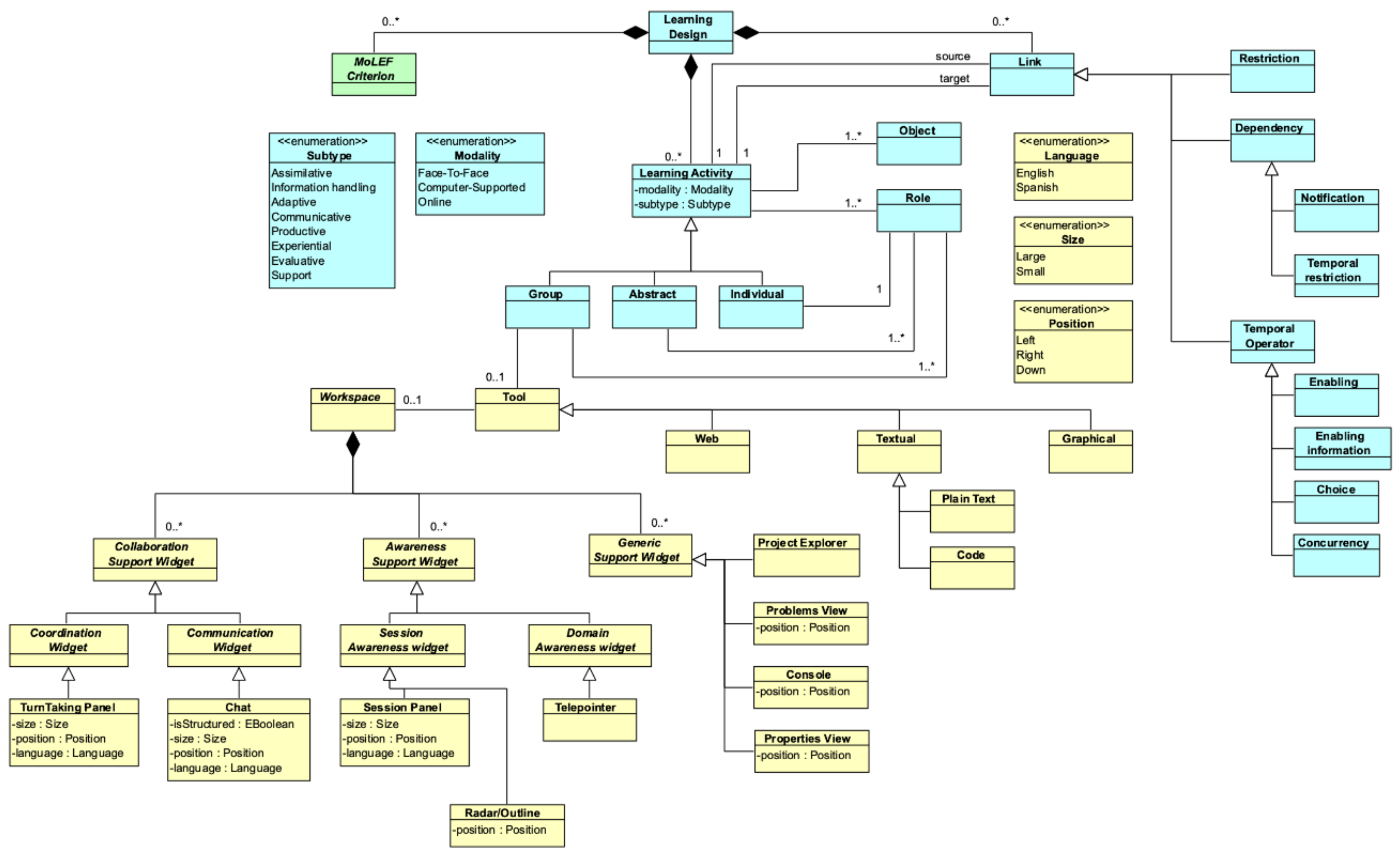

3.1. Conceptual Framework and Specification Language

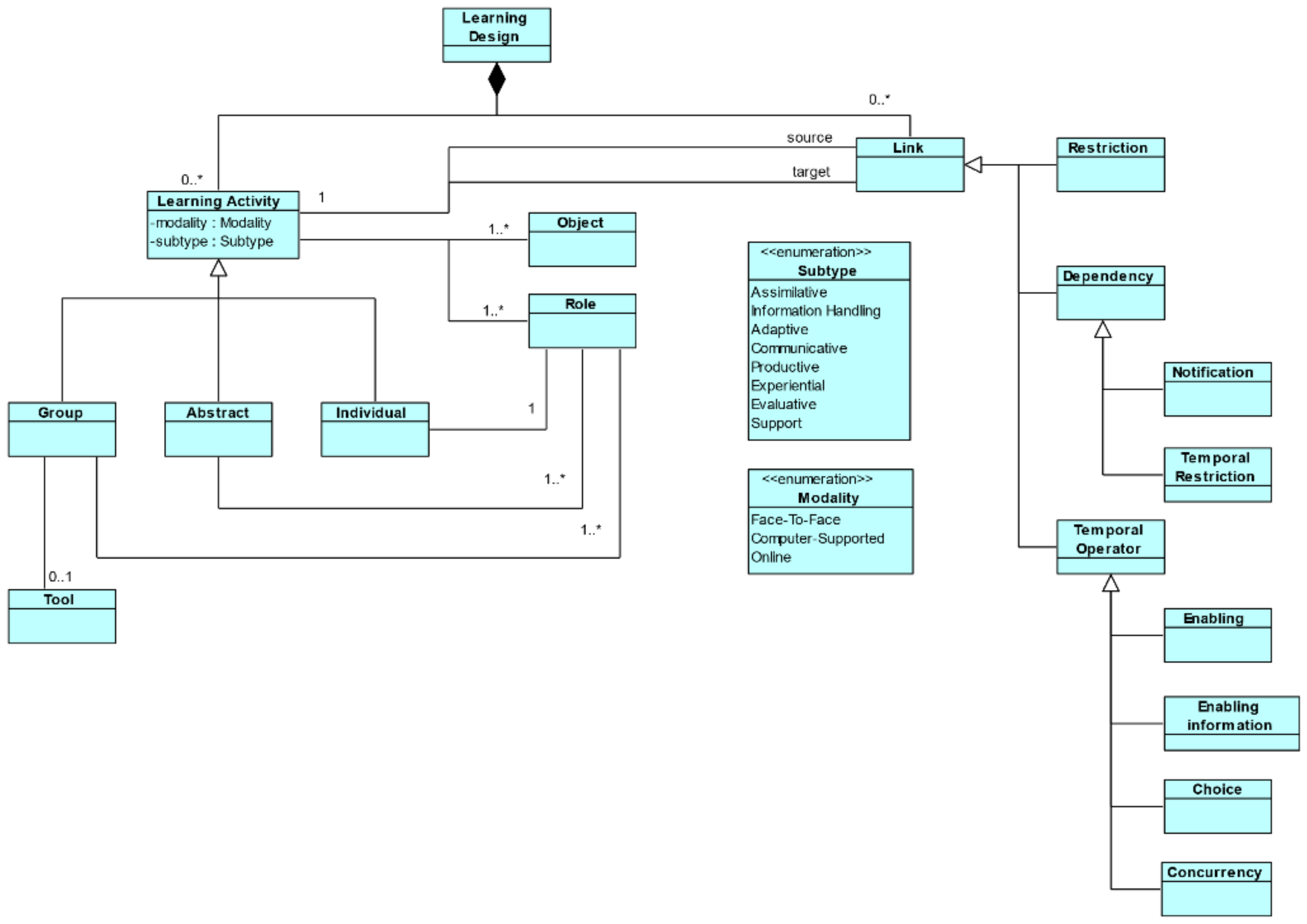

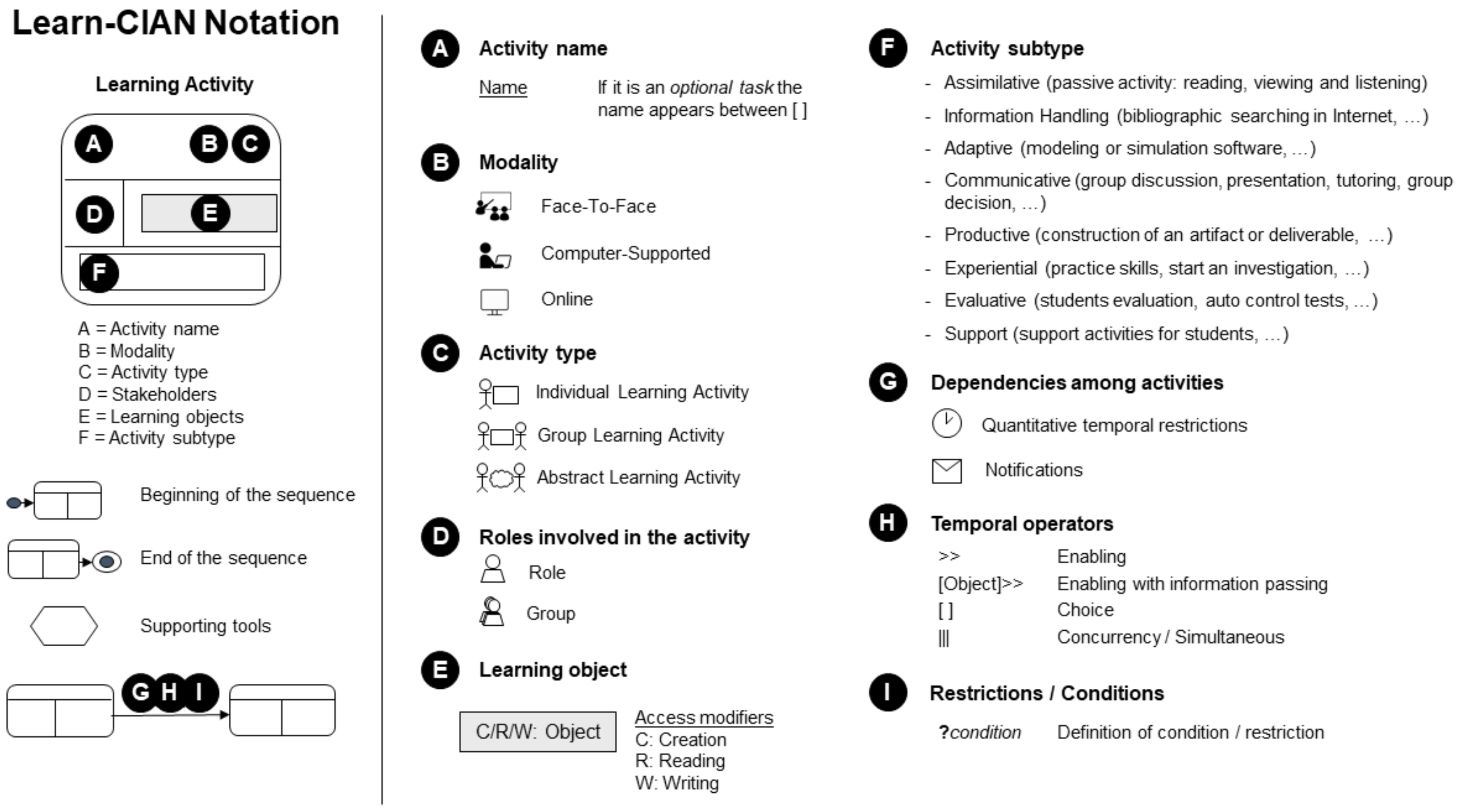

3.1.1. Learn-CIAN Conceptual Framework and Notation

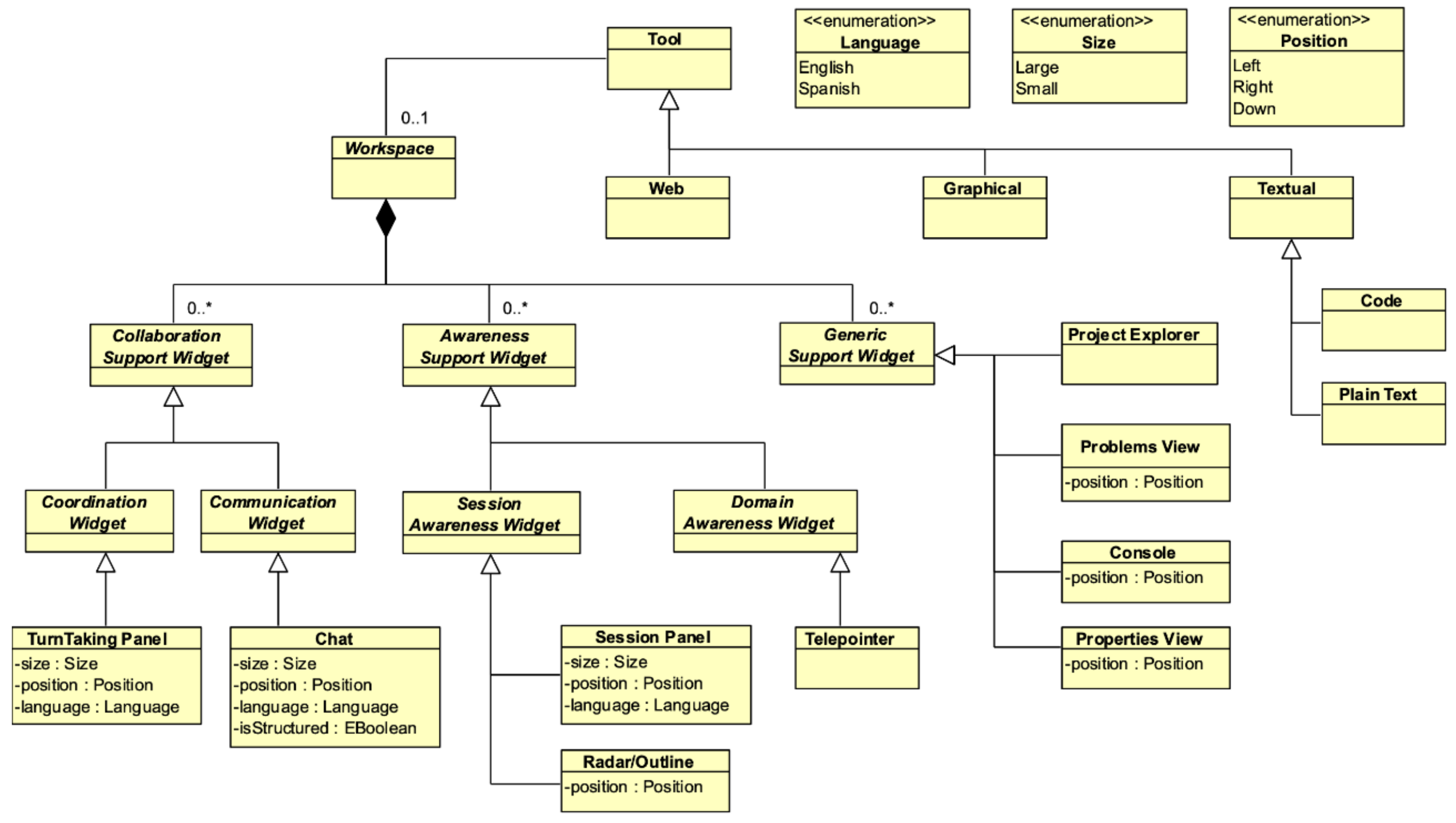

3.1.2. Workspace and Awareness Aspects

- A graphical modeling workspace, whose main tool is a synchronous collaborative graphical editor. It must contain a specific domain, i.e., a specific diagram on which students can work collaboratively. According to the rules established by the Learn-CIAN notation, a workspace with these characteristics would correspond to a group computer-supported and productive kind of learning activity.

- A collaborative workspace in which textual information is shared, providing, for instance, two types of editors: plain text (if the students want to work collaboratively in the elaboration of a manuscript) and code (if students want to address the implementation of a programming problem). This type of workspace would be related to group computer-supported and productive learning activities.

- Finally, a Web browsing workspace is provided, allowing students to collaborate in the search and consultation of information. A workspace of this kind would correspond to a group computer-supported and information handling learning activity.
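The correspondence between the three workspace kinds and the Learn-CIAN activity categories listed above can be summarized as a small lookup. The following Python sketch is purely illustrative: the names `Workspace`, `ActivityType` and `expected_activity_type` are hypothetical and are not part of Learn-CIAT.

```python
from enum import Enum


class ActivityType(Enum):
    PRODUCTIVE = "productive"
    INFORMATION_HANDLING = "information handling"


class Workspace(Enum):
    GRAPHICAL_MODELING = "graphical modeling"
    TEXT_EDITING = "text editing"      # plain text or code editor
    WEB_BROWSING = "web browsing"


# Mapping taken from the three workspace descriptions above; every entry
# refers to a group, computer-supported learning activity.
EXPECTED_TYPE = {
    Workspace.GRAPHICAL_MODELING: ActivityType.PRODUCTIVE,
    Workspace.TEXT_EDITING: ActivityType.PRODUCTIVE,
    Workspace.WEB_BROWSING: ActivityType.INFORMATION_HANDLING,
}


def expected_activity_type(workspace: Workspace) -> ActivityType:
    """Return the activity category a given workspace kind corresponds to."""
    return EXPECTED_TYPE[workspace]
```

A lookup of this kind is what a validation rule could consult when checking that an activity's declared type matches the workspace attached to it.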

3.1.3. Pedagogical Usability Aspects

3.2. Technological Framework

3.2.1. Eugenia and Epsilon Core Languages

- The Epsilon Object Language (EOL), whose main objective is to provide a reusable set of functionalities to facilitate model management. In the Learn-CIAM context, EOL is required when the user wants to customize a graphical editor or add additional functionality (e.g., reduce the distance between labels and nodes, or embed custom figures to the nodes).

- The Epsilon Validation Language (EVL), whose main objective is to contribute to the model validation process. Specifically, it allows the user to specify and evaluate constraints on models which come from different meta-models and modeling technologies. In the Learn-CIAM context, EVL is required when the user needs to implement additional validations to the conceptual framework (e.g., to check whether the specification of a concrete collaborative tool respects some of the Learn-CIAN, the workspace and the pedagogical usability rules).

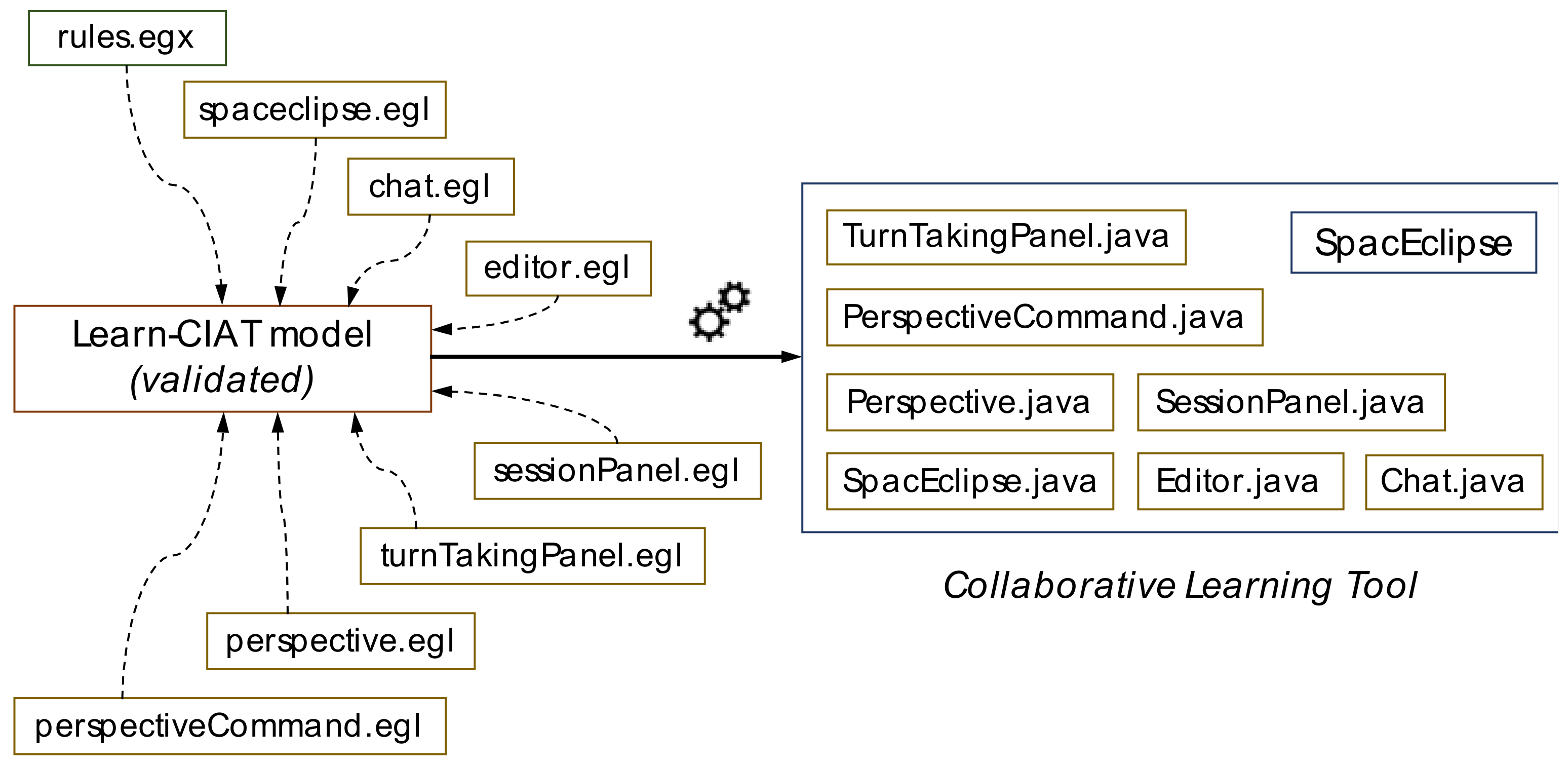

- Finally, the Epsilon Generation Language (EGL), as the language that transforms the information specified in the models into the desired code. In other words, it is the language used to carry out the M2T (model-to-text) transformations. In the Learn-CIAM context, EGL is required to implement the templates that will guide the automatic generation process of the final collaborative tools. This generation process will be triggered from a specific diagram, previously modeled and validated by the professor using the Learn-CIAT graphical editor, introduced in the next subsection.
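To give a feel for the kind of model constraint that EVL is used for in this context, the sketch below restates three rules that the paper reports in its application example (Section 4) as plain Python. It is not EVL and not the authors' implementation: the classes `Tool` and `Activity`, the string labels and the function `validate` are assumptions introduced only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Tool:
    kind: str                                  # hypothetical labels: "graphical", "code", "text", "browser"
    widgets: set = field(default_factory=set)  # e.g. {"chat", "session panel"}


@dataclass
class Activity:
    name: str
    activity_type: str                         # e.g. "productive", "assimilative"
    tool: Tool


def validate(activity):
    """Return (severity, message) pairs, loosely mirroring errors and warnings."""
    findings = []
    # Telepointers are only allowed in graphical modeling workspaces.
    if "telepointer" in activity.tool.widgets and activity.tool.kind != "graphical":
        findings.append(("error", "telepointers require a graphical modeling workspace"))
    # Editing workspaces correspond to productive activities.
    if activity.tool.kind in ("graphical", "code", "text") and activity.activity_type != "productive":
        findings.append(("warning", f"'{activity.name}' should be a productive activity"))
    # Pedagogical usability criterion S2: communication with other users.
    if "chat" not in activity.tool.widgets:
        findings.append(("error", "criterion S2: the workspace needs at least one chat"))
    return findings


project = Activity("Programming Project", "assimilative",
                   Tool("code", {"telepointer", "session panel"}))
findings = validate(project)
```

In EVL, each of these checks would be written as a constraint (error) or critique (warning) attached to a meta-model element, optionally with a quick fix.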

3.2.2. Learn-CIAT Graphical Editor

3.3. Methodological Framework

3.3.1. Roles

- Domain expert: a user who brings their experience and knowledge of a given context to the development of tools. In the first phase, it is the user in charge of elaborating the domain that is later supported by the collaborative graphical editor. In the second phase, and using the Learn-CIAT graphical editor, it is the user in charge of designing and modeling the flow and/or the collaborative tools to be generated. This role is usually found in most stages of the process and it is linked to the figure of a professor.

- End user: user who will make use of the collaborative tool generated. As it is a collaborative tool, the users will work together to solve a problem previously declared by the domain experts. This role is common in the last phases of the development method and is usually associated with the figure of a student.

- Software engineer: a user with sufficient experience and knowledge of the technology involved in the methodological approach. These users are software engineers who are experts in model-driven development and, specifically, its Eclipse implementation. They take part in several stages of the graphical editor development and support other users in the final development stages of the collaborative tools.

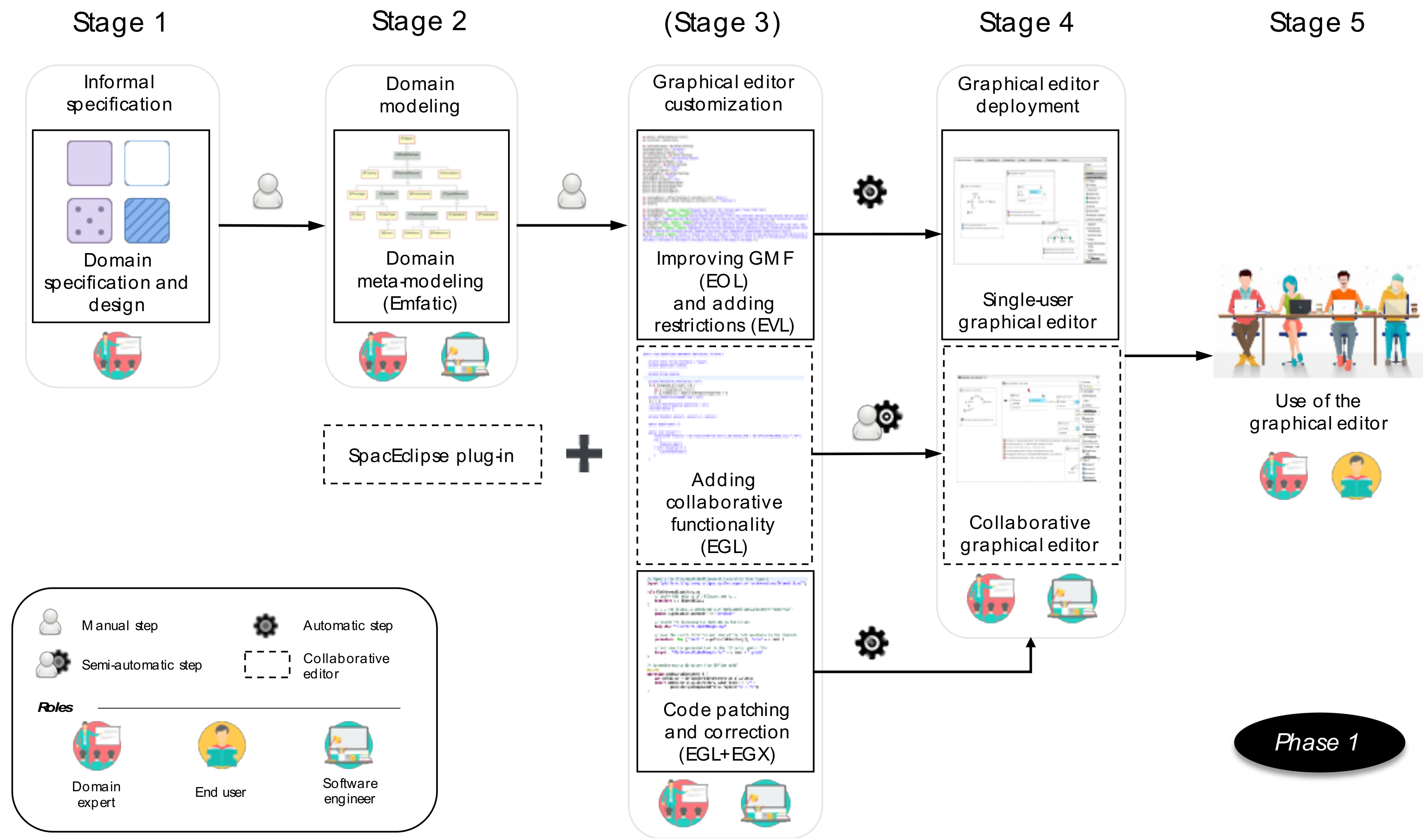

3.3.2. Phases

First Phase: Elaboration of a Graphical Editor Using Eclipse Technology

- Stage 1—Informal specification: in the first stage, the domain experts must generate a first “sketch” of the graphical editor, specifying their working domain. For instance, a graphical editor could be generated for modeling digital circuits, network topologies or use cases, among many other domains.

- Stage 2—Domain modeling: during this stage, the domain experts, with the help of the software engineer, must model the domain defined previously. For this task, the software engineer must be an expert in the chosen technology, identifying and relating each part of the graphical editor “sketch” to the proper meta-modeling components. Specifically, they must generate the Ecore representation. After that, since Eugenia is the chosen technology, the adequate annotations must also be added. All this information (Ecore meta-model + Eugenia annotations) must be included in a single Emfatic file.

- Stage 3—Graphical editor customization: in the third stage, the collaboration between the domain expert and the software engineer is very important. This stage is not mandatory; nonetheless, when the desired editor is more complex than what Eugenia can generate from its basic annotations, the figures and relationships generated by default must be modified to adapt them to the editor’s needs. To this end, it is necessary to generate (or reuse) an EOL template that contains the appropriate modifications. This template will be detected and applied by Eugenia automatically during the generation process. In addition, the restrictions that are automatically generated from the meta-model will sometimes not be sufficient; therefore, more must be added manually. With this aim, one or more EVL templates must be generated, containing the additional restrictions or recommendations that the editor must support. Furthermore, the users can also provide collaboration functionality to the editor. To achieve that, the SpacEclipse plug-in must be used. This plug-in contains the basic code needed to receive single-user editors and provide them with collaborative functionality, generating a collaborative graphical editor for Eclipse automatically. This is done by simply pressing a button that applies a prepared script, which adds the necessary collaborative functionality to the editor. Another interesting option provided by the technology is the possibility of modifying the final code generated by Eugenia using EGL/EGX templates (EGX files are EGL templates that coordinate the generation of the final code, writing each file to the corresponding path). This step is usually necessary in those cases in which, despite some visual modifications having been made, the generated editor still does not meet expectations (e.g., it may be necessary to bring the nodes closer to the label of one of their attributes).

- Stage 4—Graphical editor deployment: once the graphical editor has been generated and it has been verified that its visual aspect and functionality are the desired ones, the domain expert and the software engineer can proceed to the deployment of a stable version. Basically, they must generate an installer which includes the functionality that assures the correct functioning of the graphical editor in other instances of Eclipse. This installer could be shared with the end users in different ways: physical (USB, HDD, etc.) or web-based (through Eclipse shop or an external URL).

- Stage 5—Use of the graphical editor: finally, once the end users have installed the plug-in in their Eclipse platform, they will be able to start making designs on the graphical editor.

Second Phase: Modeling, Validation and Generation of Collaborative Learning Tools

- Stage 1—Specification of organization, learning objectives and domain: as in the first phase, a domain expert should handle this stage. This user will usually be linked to the figure of a professor, since the main objective is to model flows of learning activities and collaborative learning tools (when considered necessary as support for some of the activities). Before applying the process described in our proposal, the expert should carry out an ethnographic analysis of the end users to whom the system will be directed, and a clear understanding of the learning objectives to be achieved is necessary. At the same time, they should specify the working domain. It is worth noting that requirements elicitation is considered external to our proposal. However, the information obtained is implicitly reflected in the diagrams of our models. First, experts must design, through a new “sketch”, the flow of a course, that is, the set of learning activities and the order or sequence that constitutes it. This flow may contain, provided the domain expert considers it appropriate, computer-supported group learning activities, which will be used for the automatic generation of the collaborative tools.

- Stage 2—Modeling process: in the second stage, the domain expert must use Learn-CIAT to translate the previous specifications into digital format. In other words, the domain expert must generate a diagram using the graphical editor. This diagram may contain the Learn-CIAN learning flow and the pedagogical usability criteria that they consider appropriate to achieve the learning objectives initially stated.

- Stage 3—Collaborative tool modeling: this stage can be carried out by the domain expert. To model the collaborative tools, Learn-CIAT contains the necessary nodes and relationships to instantiate and configure the computer-supported group activities, as well as the collaborative workspace and its working context, allowing the selection of the tools and awareness features that are considered appropriate. To illustrate, it is possible to model a task that supports collaborative program editing (i.e., a collaborative Java editor), whose workspace/user interface is made up of a session panel, to consult the users who are connected to the session, and a chat, to support the communication between them.

- Stage 4—Collaborative tool generation and deployment: once the group activity has been modeled and validated, the domain expert could generate the final code automatically, including those tools and awareness components that have been defined. The collaborative tools can be generated by the same domain expert since they will only have to execute the transformation process. After that, the software engineer or domain expert must, once again, oversee the plug-in deployment, as in the first phase.

- Stage 5—Use of the collaborative learning tool: the last stage involves the end users. This figure will usually be linked to students. Similar to the first phase, the end users must install the generated collaborative tool on their Eclipse platforms and solve the determined learning task.
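The generation step of stage 4 is a model-to-text transformation: each modeled component is fed into a template that emits a Java class, and a coordinating step decides where each file is written. The Python sketch below imitates that idea with the standard library only. It is an assumption-laden illustration, not the authors' EGL/EGX code: the `Widget` class, the template text, the `generate` function and the output directory are all hypothetical.

```python
from dataclasses import dataclass
from pathlib import PurePosixPath
from string import Template


@dataclass
class Widget:
    name: str        # e.g. "SessionPanel", "Chat"
    position: str    # e.g. "left", "bottom"


# Hypothetical template; the real EGL templates are not reproduced here.
JAVA_TEMPLATE = Template(
    "public class ${class_name} {\n"
    "    // position: ${position}\n"
    "}\n"
)


def generate(widgets, output_dir):
    """Return {target path: Java source}, one entry per modeled widget.

    Choosing the target path plays the role that the text assigns to the
    coordinating EGX file.
    """
    files = {}
    for widget in widgets:
        source = JAVA_TEMPLATE.substitute(class_name=widget.name,
                                          position=widget.position)
        files[str(PurePosixPath(output_dir) / f"{widget.name}.java")] = source
    return files


files = generate([Widget("SessionPanel", "left"), Widget("Chat", "bottom")],
                 "src/generated")
```

Returning the sources in a dictionary instead of writing them to disk keeps the sketch free of side effects; a real generator would write each entry to its path inside the target plug-in.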

4. Application of the Learn-CIAM Methodology

- In the first stage, the domain expert (the professor) must define the learning objectives, the flow of learning activities to be carried out (Figure 8a) and, if they consider it necessary, the collaborative tool to be generated. In this example, a text-based collaborative tool for learning Java will be generated (Figure 8b).

- In the second stage, the professor must transform this information to a digital format. Specifically, they must use the Learn-CIAT graphical editor to generate a diagram in Learn-CIAN notation, similar to that in Figure 8. The third stage is very important, especially when the professor considers that a support tool is essential for one of the group learning activities. In this stage, the professor must define the tool to be generated (one of those already supported: graphical editor, text editor or Web browser). To do so, they must select it in the activity (EGL Tool), mark it as a tool to be generated (EGL Transformation) (Figure 9a), and configure its workspace (as stated before, this configuration is not mandatory). To help and guide the professor through this process, we have incorporated a series of validations (warnings and errors) defined in the EVL language (Figure 9b). To launch a model/diagram validation, the professor only needs to press a button in the environment. In Figure 9, for instance, Learn-CIAT has detected up to two errors and two recommendations after the validation process of the model: (1) an error showing that a user is trying to add telepointers to a code editor, when these can only be added to graphical modeling environments (Table 2); (2) a warning showing that the computer-supported group activity named “Programming Project” has been declared as assimilative, when these types of tasks should be of a productive nature (Table 1); and (3) lastly, different warnings showing that the professor can configure the widgets if they want (e.g., to introduce the desired position for the session panel of the code editor). In terms of pedagogical usability, since the learning flow designed does not fulfill criterion S2: “The learning systems allow the communication with other users (chat, e-mail, forum, etc.)” (Table 3), one error is added to the diagram. This error is displayed because the tool selected to be generated, a code editor, does not incorporate at least one chat into its workspace. Furthermore, the user can correct these errors or warnings automatically by pressing a button which will initiate the corresponding quick fix (if it was declared in the EVL template).

- In stage 4, the professor can initiate the automatic collaborative tool generation process (Figure 10). To this end, we implemented a series of templates written in EGL. Specifically, seven EGL templates have been prepared. These templates are responsible for dynamically generating the Java classes that contain the user interface and the functionality of the final tool, according to the configuration of the validated Learn-CIAT model. At the same time, in order to coordinate the generation of each one of the classes, it was necessary to implement an additional EGX template, which is responsible for generating each Java class in the path indicated within the SpacEclipse plug-in. This way, the user only needs to focus on modeling and validating the desired system, as they will be able to generate learning tools such as the one shown in Figure 11 by simply pressing a button. It is also important to note that, in this stage, the Software Engineer must launch the database and the server included with SpacEclipse, which the professor must access to create a new learning session. This session will be accessed later by the end users (the students) to start working collaboratively. Figure 11 shows a collaborative code editor (for the Java language) surrounded by the following set of components: a session panel (1), a project explorer (2), a chat (3), a console (4), a turn-taking panel (5) and a problem view (6), each of them with its own configuration. For instance, the chat has been placed at the bottom of the editor, it is large, and it is translated into English, while the session panel is large, has been placed on the left of the editor, and has also been generated in this language. In addition, each component incorporates its own awareness characteristics. Thus, for example, the turn-taking panel includes a semaphore, located at the top right, which changes color according to the user’s request: take the turn (in green) or not take the turn (in red), and a voting system at the bottom, which indicates whether this change is accepted.

- In the final stage, either the professor or the Software Engineer must export the collaborative tool generated and share it with the students. Then, the students must proceed to install it in their environments and access the corresponding collaborative learning session.

- In the first stage, the domain expert (professor) must define the visual aspect of the graphical editor. In this example: digital circuit diagrams.

- In the second stage, the professor must share the domain with the Software Engineer who will proceed to its implementation in Emfatic code. At this point, it is possible to generate the single-user graphical editor automatically. However, as it is intended to provide it with collaborative functionality, it is also necessary to execute the third stage.

- To make the graphical editor a collaborative one, the professor must follow the next steps: (1) import the SpacEclipse project into the workspace; and (2) apply a script. This script is already prepared for every graphical editor, regardless of the domain, in the form of a patch named CollaborativeDiagramEditor.patch, which adds the Java collaborative functionality to the SpacEclipse instance. The professor can apply or revert this script just by clicking on the “patches” folder, thanks to the Eugenia technology. This facilitates the work to be done by the professors, as they do not need to know anything about the most technical aspects.

- Now, the collaborative graphical editor is prepared. Then, the professor only needs to follow the steps of the second phase of the method, as in the first example. The difference is that, in this case, the professor needs to select a graphical editor to be generated, not a code editor, and make sure that the graphical editor previously generated is in the workspace next to the learning diagram (at the same root path). Figure 12 shows the collaborative digital circuit modeling editor generated.

5. Validation

5.1. Planning and Execution of the Experiments

5.1.1. Research Questions

- RQ. What is the subjective perception of experts in the areas of Education and Computer Science regarding the suitability, completeness, usefulness and ease of use of the Learn-CIAM proposal and its main components?

- RQ1. What is the subjective perception of potential users regarding the need to apply a process of modeling and generation of collaborative learning tools?

- RQ2. What is the subjective perception of potential users regarding the convenience of applying the model-based development paradigm to approach the development of collaborative learning tools?

- RQ3. What is the subjective perception of potential users regarding the selection of Eclipse as environment to support the proposed method?

- RQ4. What is the subjective perception of potential users regarding the proposed Learn-CIAM process (its phases and stages and the use of high-level languages)?

- RQ5. What is the subjective perception of potential users regarding the support offered by Learn-CIAM to non-technical users?

- RQ6. What is the subjective perception of potential users regarding the suitability, complexity and completeness of modeling elements supported by the Learn-CIAN notation?

- RQ7. What is the subjective perception of potential users regarding the suitability and completeness of the modeling elements related to awareness and pedagogical usability supported by the proposal?

- RQ8. What is the subjective perception of potential users regarding the difficulty of applying the modeling, validation and generation stages of collaborative learning tools supported by Learn-CIAT?

- RQ9. What is the subjective perception of potential users regarding the quality of the products generated as a result of applying Learn-CIAM, in terms of ease of use and learning, as well as support for communication, coordination and awareness mechanisms?

5.1.2. Participants

5.1.3. Method

- To analyze the Learn-CIAM proposal and its main components (methodological, specification support and technological)

- with the purpose of knowing the subjective perception

- with regard to the suitability, completeness, usefulness and ease of use

- from the point of view of Computer Science (CS) and Education (ED) experts

- within the context of the application of a model-driven approach to the generation of collaborative learning tools.

- Introductory presentation: first, there was a presentation in which the methodological framework was introduced as well as the concepts and technologies involved.

- Demonstration/Execution: in the first experiment, the demonstration consisted of modeling and validating a learning course using Learn-CIAT (the same as in Figure 9), ending with the generation of a collaborative programming system (the same as in Figure 11) to support one of the activities of the course. Moreover, some pedagogical usability and awareness criteria were included in order to show the possibilities provided by the method and the capabilities of the Learn-CIAT graphical editor. At the same time, during the whole process, there was an exchange of opinions between the speaker and the experts to find possible defects and improvements. In the second experiment, the group of experts had to use the Learn-CIAT tool to model, validate and generate the same scenario as in the demonstration of the first experiment. This is a more realistic case study, in which the users gain greater insight, so that the subjective information they provide becomes more realistic and useful.

- Questionnaire to the experts: finally, the experts were provided with a questionnaire which enabled them to evaluate each of the components of Learn-CIAM (methodological, conceptual and technological frameworks). First, they had to fill in a section delimiting their profile, differentiating between professors and non-professors (researchers), as well as their expertise, differentiating between technical and non-technical profiles (Education/Pedagogy and Software Engineers). The latter, in turn, could be specialized in three more specific, non-exclusive branches: model-driven development experts, Eclipse development environment experts, or instructional design experts. The questions addressed the subjective perception of the usefulness, ease of use, usability, manageability, etc. of the three main frameworks (Appendix A, Table A1 and Table A2): methodological (MET), conceptual (CON) and technological (TEC). The methodological framework assessment consisted of 6 questions, as did the conceptual framework, while the technological framework consisted of 8. Each question was scored on a Likert scale from 1 to 5 points, with 5 being the highest value, denoting “full agreement” with the corresponding statement. In turn, each of these sections contained a blank box where the expert could freely add comments.
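The summary statistics reported per question in Appendix A (mean, median and mode) follow directly from the Likert answer distribution. As a worked example, the Python sketch below recomputes them for question MET2 of the first appendix table. The head counts are an assumption: they are inferred from the reported percentages (ED: 100% on score 4; CS: 9%, 55% and 36% on scores 3, 4 and 5), taking 2 Education and 11 Computer Science respondents, which the percentages suggest but this section does not state explicitly.

```python
from statistics import mean, median, mode


def expand(counts):
    """Turn {score: number of respondents} into a flat list of scores."""
    return [score for score, n in sorted(counts.items()) for _ in range(n)]


# Assumed head counts inferred from the reported percentages for MET2.
ed = {4: 2}                 # Education experts
cs = {3: 1, 4: 6, 5: 4}     # Computer Science experts

scores = expand(ed) + expand(cs)
summary = (round(mean(scores), 2), median(scores), mode(scores))
print(summary)  # (4.23, 4, 4)
```

Under these assumed counts, the result agrees with the mean of 4.23, median of 4.00 and mode of 4 listed for MET2.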

5.2. Results of the First Experiment

5.3. Results of the Second Experiment

5.4. Reflection

5.5. Study Limitations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

ED = Education experts; CS = Computer Science experts. Columns 1 to 5 give the percentage of respondents in each group who chose that Likert score.

| ID (RQ) | Statement | Mean | Median | Mode | ED 1 | ED 2 | ED 3 | ED 4 | ED 5 | CS 1 | CS 2 | CS 3 | CS 4 | CS 5 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| MET1 (RQ1) | Do you consider the idea of proposing a method to support the modeling, validation and generation of collaborative learning tools to be useful? | 4.46 | 5.00 | 5 | - | - | 50% | - | 50% | - | - | - | 45% | 55% |
| MET2 (RQ2) | Do you think that following a Model-Driven Development paradigm makes the method more understandable and easier to use than a traditional ad-hoc programming development method? | 4.23 | 4.00 | 4 | - | - | - | 100% | - | - | - | 9% | 55% | 36% |
| MET3 (RQ3) | Do you consider the choice of the Eclipse Model-Driven Development approach as a support for the method to be appropriate? | 4.31 | 4.00 | 4 | - | - | - | 100% | - | - | - | - | 64% | 36% |
| MET4 (RQ4) | Do you consider adequate the division of the methodological proposal into different phases and stages? | 4.38 | 5.00 | 5 | - | - | - | 50% | 50% | - | - | 18% | 27% | 55% |
| MET5 (RQ4) | Do you think that the combination of technologies and high-level languages presented as support for the method is adequate? | 4.46 | 5.00 | 5 | - | - | - | 100% | - | - | - | 9% | 27% | 64% |
| MET6 (RQ5) | Do you think that a user without advanced computer knowledge could be able to generate collaborative learning tools by following these steps? | 2.92 | 3.00 | 3 | - | 50% | - | 50% | - | 9% | 18% | 45% | 27% | - |
| CON1 (RQ7) | Do you think that the aspects considered in the meta-models (domain, workspace, awareness and pedagogical usability) are adequate? | 4.38 | 4.00 | 4 | - | - | 50% | 50% | - | - | - | - | 45% | 55% |
| CON2 (RQ6) | Do you consider the Learn-CIAN notation adequate for modeling flows of learning activities? | 4.46 | 4.00 | 4 | - | - | - | 50% | 50% | - | - | - | 55% | 45% |
| CON3 (RQ6) | Do you think that the number of visual elements in the Learn-CIAN notation is sufficient? | 4.38 | 5.00 | 5 | - | - | - | 50% | 50% | - | - | 18% | 27% | 55% |
| CON4 (RQ7) | Do you consider the set of components/widgets and awareness elements that were chosen for the specification of the workspaces to be adequate? | 4.38 | 4.00 | 4 | - | - | - | 100% | - | - | - | 9% | 36% | 55% |
| CON5 (RQ7) | Do you think that the number of components/widgets and awareness elements that were chosen for the specification of workspaces is sufficient? | 4.38 | 5.00 | 5 | - | - | - | 50% | 50% | - | - | 27% | 9% | 64% |
| CON6 (RQ7) | Do you think that the set of criteria selected for specifying aspects related to pedagogical usability is adequate? | 4.38 | 4.00 | 4 | - | - | 50% | 50% | - | - | - | - | 45% | 55% |
| TEC1 (RQ8) | Do you find the modeling process provided by the Learn-CIAT support tool simple? | 3.92 | 4.00 | 4 | - | 50% | - | 50% | - | - | - | 27% | 36% | 36% |
| TEC2 (RQ8) | Do you find the validation process provided by the Learn-CIAT support tool simple? | 4.46 | 5.00 | 5 | - | - | - | 100% | - | - | - | 9% | 27% | 64% |
| TEC3 (RQ8) | Do you find the generation process provided by the Learn-CIAT support tool simple? | 3.85 | 4.00 | 4 | - | - | 50% | 50% | - | - | - | 18% | 64% | 18% |
| TEC4 (RQ9) | Do you find the user interface of the generated tools easy to use and learn? | 4.46 | 5.00 | 5 | - | - | 50% | 50% | - | - | - | - | 27% | 63% |
| TEC5 (RQ9) | Do you think that the collaborative tools generated offer adequate mechanisms to perceive the rest of the users who are working in the same session? | 4.38 | 4.00 | 4 | - | - | - | 50% | 50% | - | - | - | 45% | 55% |
| TEC6 (RQ9) | Do you consider the mechanisms offered by the generated collaborative tools to establish textual communication between the users to be adequate? | 4.23 | 4.00 | 4 | - | - | - | - | 100% | - | - | 9% | 55% | 36% |
| TEC7 (RQ9) | Do you consider the mechanisms that they offer to regulate the use of the shared workspace between the users of the same session to be adequate? | 4.23 | 4.00 | 5 | - | 50% | - | 50% | - | - | - | 9% | 36% | 55% |
| TEC8 (RQ8) | To carry out this activity, a description of the main components was provided. Without this help, do you consider the user interface of the graphical editor sufficiently self-explanatory so that the user does not have difficulty in using it? | 3.23 | 3.00 | 3 | - | - | 50% | - | 50% | - | 27% | 36% | 36% | - |

| ID (RQ) | Statement | Mean | Median | Mode | Likert | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Education (ED) | Computer Science (CS) | |||||||||||||

| 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 | |||||

| MET1 (RQ1) | Do you consider the idea of proposing a method to support the modeling, validation and generation of collaborative learning tools to be useful? | 4.60 | 5.00 | 5 | - | - | 25% | - | 75% | - | - | - | 36% | 64% |

| MET2 (RQ2) | Do you think that following a Model-Driven Development paradigm makes the method more understandable and easier to use than a traditional ad-hoc programming development method? | 4.60 | 5.00 | 5 | - | - | 50% | - | 50% | - | - | - | 18% | 82% |

| MET3 (RQ3) | Do you consider the choice of the Eclipse Model-Driven Development approach as a support for the method to be appropriate? | 3.93 | 4.00 | 3 | - | - | 50% | 50% | - | - | - | 36% | 18% | 45% |

| MET4 (RQ4) | Do you consider adequate the division of the methodological proposal into different phases and stages? | 4.13 | 5.00 | 5 | - | - | 25% | 50% | 25% | - | 18% | 9% | 9% | 64% |

| MET5 (RQ4) | Do you think that the combination of technologies and high-level languages presented as support for the method is adequate? | 4.20 | 4.00 | 5 | - | - | 75% | - | 25% | - | - | 9% | 36% | 55% |

| MET6 (RQ5) | Do you think that a user without advanced computer knowledge could be able to generate collaborative learning tools by following these steps? | 3.00 | 3.00 | 2 | - | - | 75% | 25% | - | - | 45% | 18% | 36% | - |

| CON1 (RQ7) | Do you think that the aspects considered in the meta-models (domain, workspace, awareness and pedagogical usability) are adequate? | 3.93 | 4.00 | 4 | - | - | 25% | 50% | 25% | 9% | - | 9% | 55% | 27% |

| CON2 (RQ6) | Do you consider the Learn-CIAN notation adequate for modeling flows of learning activities? | 4.60 | 5.00 | 5 | - | - | - | 25% | 75% | - | - | - | 45% | 55% |

| CON3 (RQ6) | Do you think that the number of visual elements in the Learn-CIAN notation is sufficient? | 4.53 | 5.00 | 5 | - | - | - | 50% | 50% | - | - | - | 45% | 55% |

| CON4 (RQ7) | Do you consider the set of components/widgets and awareness elements that were chosen for the specification of the workspaces to be adequate? | 4.07 | 4.00 | 4 | - | - | 25% | 75% | - | - | - | 9% | 64% | 27% |

| CON5 (RQ7) | Do you think that the number of components/widgets and awareness elements that were chosen for the specification of workspaces is sufficient? | 4.27 | 4.00 | 4 | - | - | - | 75% | 25% | - | - | 9% | 55% | 36% |

| CON6 (RQ7) | Do you think that the set of criteria selected for specifying aspects related to pedagogical usability is adequate? | 3.80 | 4.00 | 4 | - | 25% | 25% | 50% | - | - | 9% | 9% | 55% | 27% |

| TEC1 (RQ8) | Do you find the modeling process provided by the Learn-CIAT support tool simple? | 4.00 | 4.00 | 3 | - | - | 75% | 25% | - | - | - | 27% | 18% | 55% |

| TEC2 (RQ8) | Do you find the validation process provided by the Learn-CIAT support tool simple? | 4.53 | 5.00 | 5 | - | - | 50% | 50% | - | - | - | 18% | 9% | 73% |

| TEC3 (RQ8) | Do you find the generation process provided by the Learn-CIAT support tool simple? | 4.13 | 4.00 | 4 | - | - | - | 100% | - | - | - | 18% | 45% | 36% |

| TEC4 (RQ9) | Do you find the user interface of the generated tools easy to use and learn? | 4.40 | 4.00 | 4 | - | - | - | 50% | 50% | - | - | 9% | 45% | 45% |

| TEC5 (RQ9) | Do you think that the collaborative tools generated offer adequate mechanisms to perceive the rest of the users who are working in the same session? | 3.73 | 4.00 | 4 | - | - | - | 75% | 25% | - | 18% | 9% | 73% | - |

| TEC6 (RQ9) | Do you consider the mechanisms offered by the generated collaborative tools to establish textual communication between the users to be adequate? | 4.07 | 4.00 | 4 | - | - | - | 50% | 50% | - | - | - | 9% | 91% |

| TEC7 (RQ9) | Do you consider the mechanisms that they offer to regulate the use of the shared workspace between the users of the same session to be adequate? | 4.07 | 4.00 | 4 | - | - | - | 75% | 25% | 9% | - | - | 64% | 27% |

| TEC8 (RQ8) | To carry out this activity, a description of the main components was provided. Without this help, do you consider the user interface of the graphical editor sufficiently self-explanatory so that the user does not have difficulty in using it? | 2.80 | 3.00 | 3 | - | 25% | 75% | - | - | - | 36% | 45% | 18% | - |
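
The summary statistics in each row can be cross-checked against the response distributions. The sketch below does this for items CON4 and CON5. It assumes that the two blocks of five percentage columns give the share of answers for Likert levels 1 to 5 in two respondent groups. It also assumes group sizes of 4 and 11, which are inferred here from the 25% and 9% granularity of the percentages and are not stated in the table. The helper names are illustrative.

```python
from statistics import mean, median, multimode


def counts_from_percentages(percentages, group_size):
    """Convert per-level percentages (Likert levels 1..5) into respondent counts."""
    return [round(p / 100 * group_size) for p in percentages]


def summarize(*groups):
    """Pool (percentages, group_size) pairs and return (mean, median, modes)."""
    responses = []
    for percentages, group_size in groups:
        counts = counts_from_percentages(percentages, group_size)
        for level, n in enumerate(counts, start=1):
            responses.extend([level] * n)
    return round(mean(responses), 2), median(responses), multimode(responses)


# Group sizes (4 and 11) are an assumption inferred from the percentages.
con4 = summarize(([0, 0, 25, 75, 0], 4), ([0, 0, 9, 64, 27], 11))
con5 = summarize(([0, 0, 0, 75, 25], 4), ([0, 0, 9, 55, 36], 11))
print(con4)
print(con5)
```

Under these assumptions the pooled values agree with the mean, median, and mode reported in the CON4 and CON5 rows, which suggests that the reported statistics were computed over both groups together.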


| Type | Description |
|---|---|
| **Modality** | |
| Face-to-Face | Activities that must be carried out physically, attending the learning place with the rest of those involved |
| Computer-Supported | Activities that are not mandatory to be carried out in person, linked to digital tools that facilitate learning |
| Online | Tasks that are done through the Internet, and not in a “face-to-face way” |
| **Subtype** | |
| Assimilative | Passive activities: reading, seeing and hearing |
| Information Handling | Activities of searching, collecting, and contrasting information through text, audio or video |
| Adaptive | Activities in which students use some modeling or simulation software to put their knowledge into practice |
| Communicative | Spoken activities in which students are asked to present information, discuss, debate, etc. |
| Productive | Activities of an active nature, in which some artifact is generated, such as a manuscript, a computer program, etc. |
| Experiential | Practical activities, for the improvement of skills or abilities in a given context |
| Evaluative | Activities aimed at student assessment |
| Support | Support activities for students |
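
As an illustration of how this classification can be used, the following sketch encodes the modalities and subtypes as enumerations and records the activity type that Section 3.1.2 assigns to each kind of workspace. The enumeration names, the dictionary keys, and the `describe` helper are hypothetical and are not part of the Learn-CIAN notation or its tooling.

```python
from enum import Enum


class Modality(Enum):
    FACE_TO_FACE = "Face-to-Face"
    COMPUTER_SUPPORTED = "Computer-Supported"
    ONLINE = "Online"


class Subtype(Enum):
    ASSIMILATIVE = "Assimilative"
    INFORMATION_HANDLING = "Information Handling"
    ADAPTIVE = "Adaptive"
    COMMUNICATIVE = "Communicative"
    PRODUCTIVE = "Productive"
    EXPERIENTIAL = "Experiential"
    EVALUATIVE = "Evaluative"
    SUPPORT = "Support"


# Workspace kinds (hypothetical keys) mapped to the group activity type
# that Section 3.1.2 associates with them.
WORKSPACE_ACTIVITY = {
    "graphical": (Modality.COMPUTER_SUPPORTED, Subtype.PRODUCTIVE),
    "textual": (Modality.COMPUTER_SUPPORTED, Subtype.PRODUCTIVE),
    "web_browsing": (Modality.COMPUTER_SUPPORTED, Subtype.INFORMATION_HANDLING),
}


def describe(workspace):
    """Return a readable label for the activity type of a workspace kind."""
    modality, subtype = WORKSPACE_ACTIVITY[workspace]
    return f"group {modality.value}, {subtype.value}"


print(describe("web_browsing"))
```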

| Component | Workspace | Configuration | Awareness |
|---|---|---|---|
| Chat | All | Position/Language/Size/isStructured | Structured/Free |
| Telepointers | Graphical | - | Colors |
| Session Panel | All | Position/Language/Size | Colors/Icons/State |
| Console | Textual (Code) | Position | - |
| Outline/Radar View | Textual/Graphical | Position | - |
| Project Explorer | All | Position | - |
| Properties View | Graphical | Position | - |
| Turn-taking Panel | Graphical/Textual | Position/Language/Size | Semaphore/Votes |
| Problems View | Graphical/Textual | Position | - |
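
The compatibility rules in this table lend themselves to a simple automated check. The sketch below models four of the rows as data and reports components that are requested for a workspace in which the table does not allow them. It is a minimal illustration and not the validation implemented in Learn-CIAT. The workspace identifiers, the `Component` class, and the `validate` function are hypothetical, and treating "Textual" as covering both the plain-text and the code editor is an assumption.

```python
from dataclasses import dataclass

# Hypothetical workspace identifiers; "Textual" is assumed to cover TEXT and CODE.
GRAPHICAL, TEXT, CODE, WEB = "graphical", "text", "code", "web"
ALL = frozenset({GRAPHICAL, TEXT, CODE, WEB})


@dataclass(frozen=True)
class Component:
    name: str
    workspaces: frozenset   # workspace kinds in which the component may appear
    configuration: tuple = ()
    awareness: tuple = ()


# A subset of the rows of the table above.
CATALOGUE = {
    c.name: c
    for c in (
        Component("Chat", ALL,
                  ("Position", "Language", "Size", "isStructured"),
                  ("Structured", "Free")),
        Component("Telepointers", frozenset({GRAPHICAL}), (), ("Colors",)),
        Component("Console", frozenset({CODE}), ("Position",)),
        Component("Turn-taking Panel", frozenset({GRAPHICAL, TEXT, CODE}),
                  ("Position", "Language", "Size"),
                  ("Semaphore", "Votes")),
    )
}


def validate(workspace, component_names):
    """Return one message per component that is unknown or not allowed."""
    problems = []
    for name in component_names:
        component = CATALOGUE.get(name)
        if component is None:
            problems.append(f"{name}: unknown component")
        elif workspace not in component.workspaces:
            problems.append(f"{name}: not available in a {workspace} workspace")
    return problems


print(validate(WEB, ["Chat", "Telepointers"]))
```

Keeping the rules as data rather than as conditionals means that a new component or workspace kind only requires a new catalogue entry.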

| ID | Criterion |
|---|---|
| **Content** | |
| C1 | The content is organized in modules or units |
| C2 | The learning objectives are defined at the beginning of a module or unit |
| C3 | The activities inform or warn that prior knowledge is required |
| C4 | The explanation of the concepts is produced in a clear and concise manner |
| C5 | The modules or units are organized by levels of difficulty |
| C6 | The activities incorporate links to external materials and resources |
| **Task and Activities** | |
| T1 | The activities have been proposed for the purpose of determining the level of learning acquired (questions, exercises, problem solving, etc.) |
| T2 | The activities facilitate understanding of subject content |
| T3 | The activities help improve the students’ skills |
| T4 | The activities help students to integrate new information with information studied previously |
| T5 | The activities reflect real practices of future professional life |
| T6 | The activities are consistent with students’ abilities (neither too easy nor too difficult) |
| T7 | There are activities that serve to evaluate students (questionnaires, exams, presentations, etc.) |
| **Social Interaction** | |
| S1 | The learning system allows the students to work collaboratively |
| S2 | The learning system allows you to communicate with other users (chat, e-mail, forum, etc.) |
| S3 | The learning system allows us to share information (photos, videos or documents related to the task) |
| S4 | The learning system motivates competitiveness among users (achievements, scores, etc.) |
| **Personalization** | |
| P1 | The students have freedom to direct their learning |
| P2 | The students have control over the content that they want to learn and the sequence that they want to follow |
| P3 | The activities represent multiple views of the content |
| P4 | The students can personalize their learning through material (videos, texts or audios) |
| P5 | The questionnaires and assessments can be customized to fit the student’s profile |
| **Multimedia** | |
| M1 | The activities present elements that facilitate learning (animations, simulations, images, etc.) |
| M2 | The multimedia resources have been appropriately selected to facilitate learning |
| M3 | The multimedia resources have a duration of less than 7 min |
| M4 | The multimedia resources have good video, audio, and image quality |
| M5 | The multimedia resources can be downloaded |
| M6 | There is an adequate proportion of multimedia resources |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Arroyo, Y.; Molina, A.I.; Redondo, M.A.; Gallardo, J. Learn-CIAM: A Model-Driven Approach for the Development of Collaborative Learning Tools. Appl. Sci. 2021, 11, 2554. https://doi.org/10.3390/app11062554
