A Research Study on Protocol Stack of Space-Based Optical Backbone Network

Abstract

1. Introduction

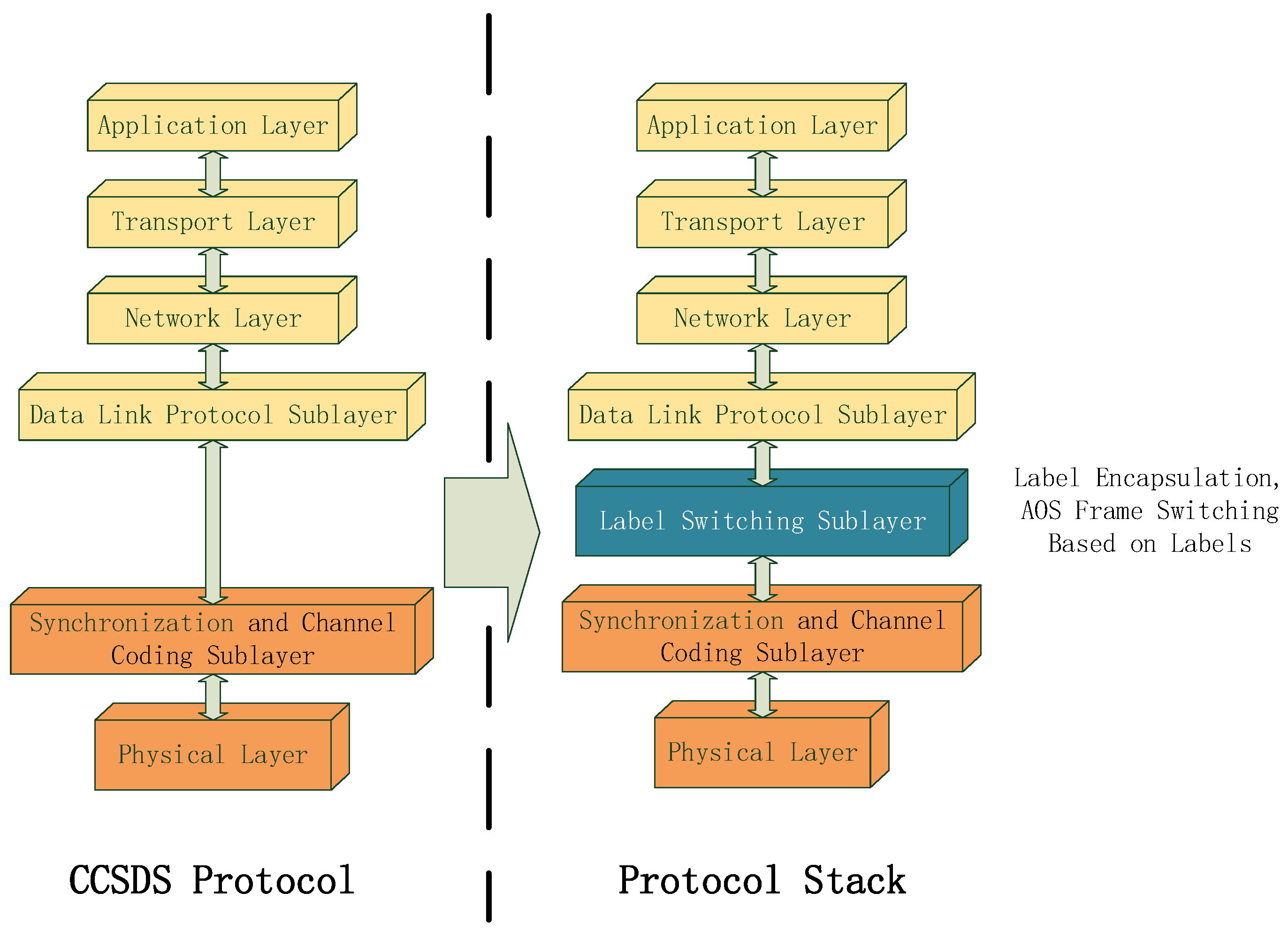

2. Architecture of the Protocol Stack

- Label Field: carries a label to support the AOS frame switching scheme.

- DCN Field: delivers control and management information, such as routing protocol or network management configuration messages.

- OAM Field: transfers information to conduct functions of network monitoring and detection.

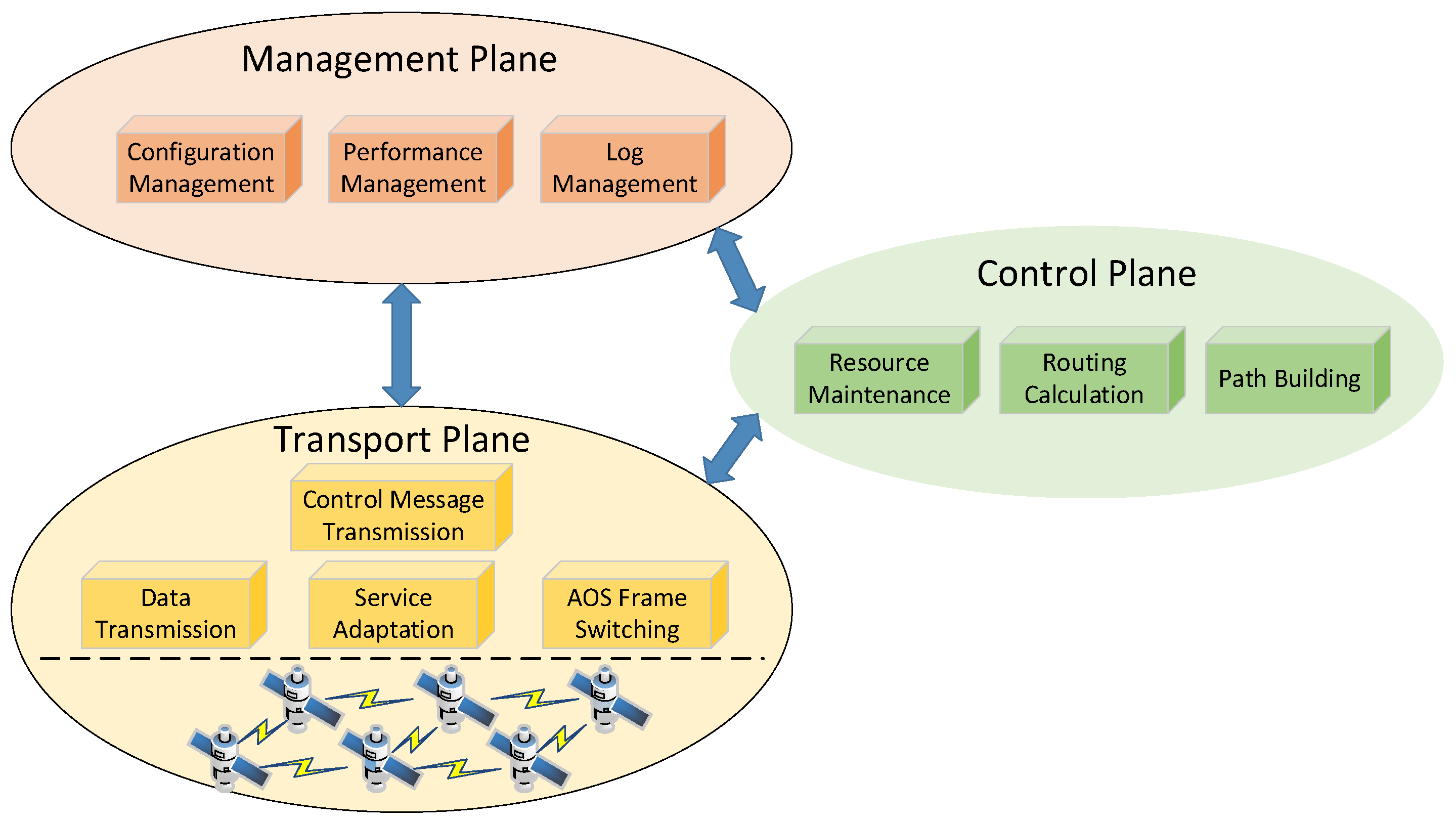

- Management Plane: manages the whole network, with functions of configuration management, performance management, and log management, which helps the network manager manage the network efficiently.

- Control Plane: controls the network automatically, with functions of resource maintenance, routing calculation and path building, which helps the network managers improve their work efficiency.

- Transport Plane: transports data in the network, with functions of control message transmission, data transmission, service adaptation and AOS frame switching.

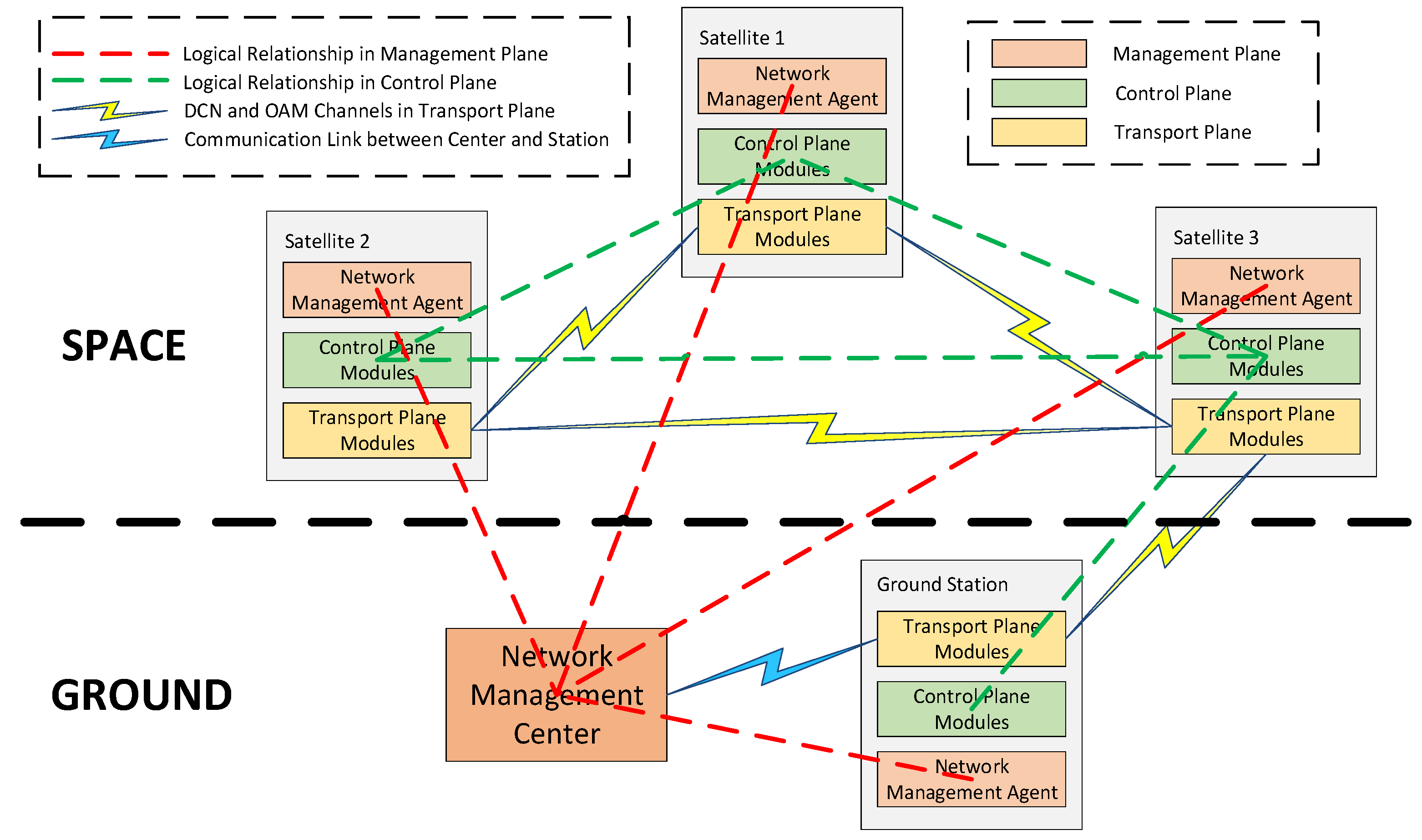

- Management Plane: consists of a network management center (on the ground) and a network management agent (in each node). The architecture (red dotted line) is a star schema, which means that the center logically manages each agent directly. The center connects to one of the ground stations (blue lightning) and then manages the whole network.

- Control Plane: composed of control plane modules in all nodes. The architecture (green dotted line) is distributed, which means that the modules in all nodes have the same functions and the relationships between them are logically equal in the control plane. The control plane modules in different nodes collaborate with each other to implement the functions of the control plane.

- Transport Plane: composed of transport plane modules in all nodes and the architecture is also distributed. However, the difference is that the modules in different nodes do not collaborate with each other. It provides DCN and OAM channels (yellow lightning) to transmit management and control messages.

3. Design of the Protocol Stack

3.1. Design of Control Plane

3.1.1. Link Management Protocol

- Receive the ConfigAck message: this means that the corresponding node has agreed on the content of negotiations sent by the Config message, including negotiated parameters such as Channel, HelloInterval, HelloDeadInterval and so on. In this case, the control channel has already been built.

- Receive the ConfigNack message: this means that the corresponding node has disagreed with the content of the negotiations sent by the Config message and has replied with a ConfigNack message that includes the recommended negotiated parameters, which should be prioritized by this node. If this node disagrees with these recommended parameters, it can resend new negotiated parameters.

- Config message: used to negotiate for the establishment of control channels, including parameters about the control channels.

- ConfigAck message: used to reply to the Config message while the node has agreed on the negotiated parameters.

- ConfigNack message: used to reply to the Config message when the node disagrees with the negotiated parameters; it carries the recommended parameters.

- Hello message: this message is sent periodically to maintain the control channels built by the link management protocol.

- Channel: used to confirm the parameters of a control channel, such as a specific wavelength, a specific field and so on. Here, we used a specific field to realize the control channels.

- HelloInterval: used as the interval at which the Hello messages are sent.

- HelloDeadInterval: used as the maximum time to decide whether a control channel is invalid. If the port has not received any Hello message within the time, the control channel is invalid.
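The negotiation and keep-alive rules above can be sketched as follows. This is only an illustrative sketch, not the implementation used in the study: the `ChannelParams` class, the function names, the acceptance rule (an upper bound `max_hello_interval`) and the choice of seconds as the unit are all assumptions made for the example.

```python
from dataclasses import dataclass, replace
from typing import Optional, Tuple


@dataclass(frozen=True)
class ChannelParams:
    """Parameters carried in a Config message (field names are illustrative)."""
    channel: str                # how the control channel is realized, e.g. a frame field
    hello_interval: float       # seconds between Hello messages (assumed unit)
    hello_dead_interval: float  # seconds of silence before the channel is invalid


def handle_config(proposed: ChannelParams,
                  max_hello_interval: float) -> Tuple[str, Optional[ChannelParams]]:
    """Responder side: return ("ConfigAck", None) or ("ConfigNack", recommended).

    The acceptance rule used here is hypothetical; the source does not say
    how a node decides whether it agrees.
    """
    if proposed.hello_interval <= max_hello_interval:
        return "ConfigAck", None
    recommended = replace(
        proposed,
        hello_interval=max_hello_interval,
        hello_dead_interval=4 * max_hello_interval,
    )
    return "ConfigNack", recommended


def negotiate(initial: ChannelParams, max_hello_interval: float,
              max_rounds: int = 3) -> Optional[ChannelParams]:
    """Initiator side: resend Config until a ConfigAck arrives or rounds run out."""
    params = initial
    for _ in range(max_rounds):
        reply, recommended = handle_config(params, max_hello_interval)
        if reply == "ConfigAck":
            return params        # the control channel has been built
        params = recommended     # prioritize the peer's recommended parameters
    return None


def channel_is_valid(last_hello_time: float, now: float,
                     params: ChannelParams) -> bool:
    """The channel is invalid once no Hello arrived within HelloDeadInterval."""
    return (now - last_hello_time) <= params.hello_dead_interval
```

In this sketch the initiator simply adopts the recommended parameters after a ConfigNack; a node that disagrees with the recommendation could instead propose a fresh set, as described above.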

- Receive the LinkSummaryAck message: means that the corresponding node has agreed on the link-related parameters sent by the LinkSummary message. In this case, the link parameters have been confirmed and will be collected by the link management protocol.

- Receive the LinkSummaryNack message: means that the corresponding node has disagreed with the link-related parameters sent by the LinkSummary message and has replied with a LinkSummaryNack message that states which parameters could not be accepted. This node can resend new parameters until both nodes come to an agreement.

- LinkSummary message: used to confirm the link-related parameters.

- LinkSummaryAck message: used to reply to the LinkSummary message when the node agrees on the link-related parameters.

- LinkSummaryNack message: used to reply to the LinkSummary message when the node disagrees on the link-related parameters, including the parameters that could not be accepted.
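As a rough illustration of the LinkSummary exchange, the responder can be modeled as a function that compares the received link parameters with its own view and lists the ones it rejects. The parameter names and the "must equal the local value" rule are assumptions for this sketch; the source does not define the agreement criterion.

```python
from typing import Dict, List, Tuple


def handle_link_summary(received: Dict[str, object],
                        local: Dict[str, object]) -> Tuple[str, List[str]]:
    """Reply to a LinkSummary message.

    Returns ("LinkSummaryAck", []) when every received parameter matches the
    local view, otherwise ("LinkSummaryNack", [names of rejected parameters]).
    """
    rejected = sorted(name for name, value in received.items()
                      if local.get(name) != value)
    if rejected:
        return "LinkSummaryNack", rejected
    return "LinkSummaryAck", []
```

On a LinkSummaryNack, the sender would correct the listed parameters and send a new LinkSummary message, repeating until it receives a LinkSummaryAck.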

3.1.2. Routing Protocol

- Down: in this state, the port sends Hello messages periodically to discover neighbors automatically.

- Init: when a port has received a Hello message with its own node ID not included, the state changes into this state. In this state, the Hello messages sent by the port include the node ID of the corresponding node.

- Two-way: when a port has received a Hello message with its own node ID included, the state changes into this state. In this state, the port enters the election of the DR (designated router)/BDR (backup designated router), which is only held in an MA (multiple access) network. As the space-based optical backbone network is not an MA network, the election is not held here. That is to say, this state changes into the next state automatically.

- Exstart: in this state, the port holds the primary election to confirm the master/slave relationship by sending an empty DD (database description) message with a DD sequence number in it. In the election, the port with a bigger node ID is the master and the other is the slave. The master port leads the exchange of DD messages.

- Exchange: after the primary election, the port state changes into this state. In this state, the port sends DD messages to exchange description information of the LSDB (link state database).

- Loading: after the exchange of DD messages, the port state changes into this state. In this state, the port compares the description of the LSDB received from the correspondent node with its own LSDB to find any LSA (link state advertisement) that does not exist in its own LSDB. If there is one, the port sends an LSR (link state request) message, which includes the description of the missing LSAs, to acquire the complete information. When the port of the correspondent node receives the LSR message, it replies with an LSU (link state update) message that includes the complete information of the requested LSAs.

- Full: after the two ports have exchanged all the information of LSAs, the port state changes into this state. This state means that the LSDBs of the two ports have already been synchronized.

- UnidSnd: this state is added to support the use of unidirectional links. When a port in the Full state has received a Hello message with its own node ID not included, the port state changes into this state. In this state, the node where the port is located sends Unidirectional messages to the corresponding node to inform it that the link between them is a unidirectional link. In addition, if the port has not received a Hello message within the threshold time (HelloInterval), the node sends NonUnidirectional messages to the corresponding node to inform it that the unidirectional link has been broken.

- UnidRcv: this state is also added to support the use of unidirectional links. When a port in the Down state has received a Unidirectional message, the port state changes into this state. In this state, the port adds the unidirectional link into the LSDB and sends an LSA to all other nodes to update their LSDBs. In addition, when the port in this state has received a NonUnidirectional message, the port state changes into the Down state.
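The port states listed above can be read as a small finite-state machine. The sketch below encodes only the transitions that the list states explicitly; the event names are hypothetical labels, and it assumes that Init is entered from Down and Two-way from Init. The state that follows UnidSnd after a Hello timeout is not given in the list, so it is left out.

```python
from enum import Enum, auto


class PortState(Enum):
    DOWN = auto()
    INIT = auto()
    TWO_WAY = auto()
    EXSTART = auto()
    EXCHANGE = auto()
    LOADING = auto()
    FULL = auto()
    UNID_SND = auto()
    UNID_RCV = auto()


# (current state, event) -> next state. Event names are illustrative only.
TRANSITIONS = {
    (PortState.DOWN, "hello_without_own_id"): PortState.INIT,
    (PortState.INIT, "hello_with_own_id"): PortState.TWO_WAY,
    # No DR/BDR election in a non-MA network, so Two-way is left automatically.
    (PortState.TWO_WAY, "no_election_needed"): PortState.EXSTART,
    (PortState.EXSTART, "master_slave_decided"): PortState.EXCHANGE,
    (PortState.EXCHANGE, "dd_exchange_done"): PortState.LOADING,
    (PortState.LOADING, "lsas_synchronized"): PortState.FULL,
    # Extensions for unidirectional links.
    (PortState.FULL, "hello_without_own_id"): PortState.UNID_SND,
    (PortState.DOWN, "unidirectional_msg"): PortState.UNID_RCV,
    (PortState.UNID_RCV, "non_unidirectional_msg"): PortState.DOWN,
}


def next_state(state: PortState, event: str) -> PortState:
    """Return the next state, or stay in place if the event does not apply."""
    return TRANSITIONS.get((state, event), state)


def is_master(own_node_id: int, peer_node_id: int) -> bool:
    """Exstart election: the port with the bigger node ID is the master."""
    return own_node_id > peer_node_id
```

A table-driven form keeps the two added states (UnidSnd and UnidRcv) visibly separate from the transitions inherited from the standard neighbor state machine.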

- Hello Message: used to discover neighbors automatically, including the IDs of its own node and the correspondent node.

- DD Message: used to exchange the brief description of the LSDB to support the rapid comparison between LSDBs in the two nodes.

- LSR Message: used to ask the corresponding node for the complete information of the missing LSAs; it includes a brief description of them.

- LSU Message: used to send the complete information of the requested LSAs to the corresponding node.

- LSAck Message: used to reply to the LSU message to ensure the reliability of synchronization.

- Unidirectional Message: added to support the use of unidirectional links. This is used to inform the corresponding node that the link between them is unidirectional.

- NonUnidirectional Message: added to support the use of unidirectional links. This is used to inform the corresponding node that the unidirectional link has been broken.
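How the DD, LSR, LSU and LSAck messages cooperate during synchronization can be sketched with plain dictionaries. This is a simplified model under stated assumptions: an LSDB is a mapping from a hypothetical LSA identifier to its content, a DD message carries only the identifiers, and an LSA counts as missing only when its identifier is absent locally, as described for the Loading state.

```python
from typing import Dict, List

# Simplified LSDB model: {lsa_id: lsa_content}. Identifiers are illustrative.
Lsdb = Dict[str, dict]


def describe(lsdb: Lsdb) -> List[str]:
    """DD message content: a brief description (here, just the LSA ids)."""
    return sorted(lsdb)


def build_lsr(own: Lsdb, peer_description: List[str]) -> List[str]:
    """LSR content: ids described by the peer that are missing locally."""
    return [lsa_id for lsa_id in peer_description if lsa_id not in own]


def build_lsu(own: Lsdb, lsr: List[str]) -> Lsdb:
    """LSU content: complete information for every requested LSA we hold."""
    return {lsa_id: own[lsa_id] for lsa_id in lsr if lsa_id in own}


def apply_lsu(own: Lsdb, lsu: Lsdb) -> List[str]:
    """Install the received LSAs and return the ids to confirm in an LSAck."""
    own.update(lsu)
    return sorted(lsu)
```

For example, if node A holds only `lsa-1` and node B holds `lsa-1` and `lsa-2`, A requests `lsa-2`, B answers with an LSU containing it, and both LSDBs are identical afterwards.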

3.1.3. Signal Protocol

- DoD (downstream-on-demand) mode: used for label distribution. A node sends a label mapping message to distribute a label to the upstream node only after it has received a label request message from that node.

- Ordered mode: used for label control, which controls the processing of label distribution. It sends a label mapping message to the upstream node, only after having received a label mapping message from the downstream node.

- After the source node has received a requirement to build an LSP, it firstly performs the resource reservation operation. Then, it creates a label request message and sends this to the next downstream node.

- After the downstream node has received the label request message, it firstly performs the resource reservation operation. Then, the node determines whether it is the destination node.

- If the result is NO, the node forwards the label request message to the next downstream node.

- If the result is YES, the node continues to perform the operations of resource utilization and label distribution. In the end, the node creates a label mapping message and sends it to the upstream node to announce the label it has distributed.

- After the upstream node has received a label mapping message, it firstly performs the resource utilization operation. Then, the node determines whether it is the source node.

- If the result is NO, the node continues to perform the label distribution operation. Then, it creates a label mapping message and sends it to the upstream node to announce the label it has distributed.

- If the result is YES, the node replies with the information of the LSP. By this stage, the LSP has already been built.
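The two passes of the LSP setup described above (label request travelling downstream, label mapping travelling upstream) can be simulated in a few lines. This is a sketch under assumptions: nodes are plain strings, labels come from a single counter starting at a hypothetical `first_label`, and the resource operations are omitted so that only the message ordering is shown.

```python
from itertools import count
from typing import Dict, List, Optional, Tuple

LabelTable = Dict[str, Dict[str, Optional[int]]]


def build_lsp(path: List[str], first_label: int = 100) -> Tuple[LabelTable, List[str]]:
    """Simulate DoD label distribution with ordered control along `path`.

    `path` lists the nodes from source to destination. The result holds, per
    node, the label it distributed upstream ("in_label") and the label it
    learned from downstream ("out_label"), plus the message log in order.
    """
    if len(path) < 2:
        raise ValueError("an LSP needs at least a source and a destination")

    messages: List[str] = []
    # Downstream pass: the label request is forwarded hop by hop.
    for upstream, downstream in zip(path, path[1:]):
        messages.append(f"{upstream}->{downstream} LabelRequest")

    labels = count(first_label)
    table: LabelTable = {node: {"in_label": None, "out_label": None} for node in path}
    # Upstream pass: a node sends its label mapping only after the mapping
    # from its own downstream neighbor has arrived (ordered mode).
    for downstream, upstream in zip(reversed(path), reversed(path[:-1])):
        label = next(labels)
        table[downstream]["in_label"] = label
        table[upstream]["out_label"] = label
        messages.append(f"{downstream}->{upstream} LabelMapping({label})")
    return table, messages
```

For a three-node path `["A", "B", "C"]`, the log shows both requests before any mapping, and the mapping from C to B before the one from B to A, which is the ordering that DoD with ordered control implies.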

- Label Request Message: used to require the downstream node to distribute labels to the upstream node.

- Label Mapping Message: used to send labels distributed by the downstream node to the upstream node.

- Resource Reservation Operation: each link has a special value called RESERVED BANDWIDTH, which is used to record the usage of the link bandwidth by the link management protocol and is not used in the calculation of route path. That is to say, the changes of RESERVED BANDWIDTH do not affect the calculation of route path. So, we used this operation in the first half of processing to build the LSP. This operation deducts the required bandwidth from the RESERVED BANDWIDTH.

- Resource Utilization Operation: each link has a special value called the AVAILABLE BANDWIDTH, which is used to record the usage of the link bandwidth by the link management protocol and is also used in the calculation of route path. So, changes to the AVAILABLE BANDWIDTH actually affect the calculation of route path. So, we used this operation in the latter half of processing to build the LSP, which deducts the required bandwidth from the AVAILABLE BANDWIDTH.

- Label Distribution Operation: this operation is used to distribute labels to the LSP.
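The difference between the two bandwidth operations can be made concrete with a small bookkeeping class. It is a sketch only: the class and method names are hypothetical, and rejecting a request that exceeds the remaining value is an assumption, since the list above does not say what happens when bandwidth is insufficient.

```python
from dataclasses import dataclass


@dataclass
class LinkBandwidth:
    """Two counters kept per link (names follow the text, units are arbitrary)."""
    reserved_bandwidth: float   # RESERVED BANDWIDTH: not used in route calculation
    available_bandwidth: float  # AVAILABLE BANDWIDTH: used in route calculation

    def reserve(self, required: float) -> None:
        """Resource reservation: first half of LSP setup (label request pass)."""
        if required > self.reserved_bandwidth:
            raise ValueError("not enough reservable bandwidth")  # assumed behavior
        self.reserved_bandwidth -= required

    def utilize(self, required: float) -> None:
        """Resource utilization: latter half of LSP setup (label mapping pass)."""
        if required > self.available_bandwidth:
            raise ValueError("not enough available bandwidth")  # assumed behavior
        self.available_bandwidth -= required
```

Because only `available_bandwidth` feeds the route calculation, a setup that has reserved bandwidth but has not yet completed leaves the computed routes unchanged.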

3.2. Design of Transport Plane

3.2.1. DCN Channel

3.2.2. Transmission Guarantee

- When a node sends any management or control message, it saves the message after it has been sent.

- When the corresponding node has received the message, it immediately extracts special parameters from it to create an ACK message and sends the ACK back to the sending node.

- Having received the ACK message, the node locates the corresponding message saved previously, and deletes it. If the node has not received the ACK message within a specified timeframe, the node automatically sends the message again.
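The save, acknowledge and resend steps above amount to a simple retransmission buffer. The following sketch assumes that each message carries a hypothetical integer `msg_id` that the ACK echoes back, and it takes the current time as an argument instead of reading a clock; the `sent` list stands in for the real DCN channel.

```python
from typing import Dict, List, Tuple


class ReliableSender:
    """Keeps sent messages until they are acknowledged, resending on timeout."""

    def __init__(self, timeout: float) -> None:
        self.timeout = timeout
        # msg_id -> (payload, time of the most recent transmission)
        self.pending: Dict[int, Tuple[bytes, float]] = {}
        # Every transmission, in order; a stand-in for the real channel.
        self.sent: List[Tuple[int, bytes]] = []

    def send(self, msg_id: int, payload: bytes, now: float) -> None:
        """Transmit a message and save it until its ACK arrives."""
        self.sent.append((msg_id, payload))
        self.pending[msg_id] = (payload, now)

    def on_ack(self, msg_id: int) -> None:
        """Locate the saved message that this ACK refers to and delete it."""
        self.pending.pop(msg_id, None)

    def tick(self, now: float) -> None:
        """Resend every saved message whose ACK is overdue."""
        for msg_id, (payload, sent_at) in list(self.pending.items()):
            if now - sent_at >= self.timeout:
                self.send(msg_id, payload, now)
```

Calling `tick` periodically is enough to drive retransmission; a limit on the number of retries is not described in the steps above and is therefore not modeled.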

3.2.3. Service Adaptation

- When an IPv6 packet arrives, the adaptation module first creates the FEC, following the rule that the destination address extracted from the packet is used directly as the FEC. Therefore, for the two IPv6 packets shown in the figure, two corresponding FECs (FEC1 and FEC2) were created.

- Using the FEC created above, the adaptation module then queries the FIB to find the corresponding OutPort and OutLabel.

- Having found the OutPort and OutLabel, the adaptation module creates the corresponding AOS frame, with its label field filled with the OutLabel and its data field filled with the complete IPv6 packet.

- Finally, the adaptation module sends the AOS frame through the port specified by the corresponding OutPort.
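The adaptation steps above can be sketched as a lookup followed by encapsulation. The `AosFrame` class here is a deliberately simplified stand-in with only a label and a data field, not the CCSDS frame layout, and the FIB is assumed to be a dictionary keyed by destination address; both are illustrative choices.

```python
import ipaddress
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class AosFrame:
    """Simplified frame: only the label field and the data field are modeled."""
    label: int
    data: bytes


# FIB modeled as {FEC: (OutPort, OutLabel)}; the layout is an assumption.
Fib = Dict[ipaddress.IPv6Address, Tuple[int, int]]


def extract_fec(packet: bytes) -> ipaddress.IPv6Address:
    """Use the destination address directly as the FEC.

    In the fixed 40-byte IPv6 header the destination address occupies
    bytes 24 to 39.
    """
    if len(packet) < 40:
        raise ValueError("shorter than an IPv6 header")
    return ipaddress.IPv6Address(packet[24:40])


def adapt(packet: bytes, fib: Fib) -> Tuple[int, AosFrame]:
    """Return the OutPort and the AOS frame that carries the whole packet."""
    fec = extract_fec(packet)
    out_port, out_label = fib[fec]  # raises KeyError if no entry exists
    return out_port, AosFrame(label=out_label, data=packet)
```

The caller would then send the returned frame through the returned port, which corresponds to the final step in the list.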

3.2.4. AOS Frame Switching Scheme

4. Simulation and Analysis

4.1. Simulation in Simulation Software

- NodeID: used to mark a node, while managing and controlling the network.

- IPv6 Address: used to mark a node, while transmitting IPv6 services.

4.1.1. Simulation of Link Management Protocol

4.1.2. Simulation of Routing Protocol

4.1.3. Simulation of Protocol Stack

4.2. Test in Hardware Platform

- CPU: a place where the protocol stack runs. It implements the functions of the control plane and processing of the management message.

- FPGA: for control and management, this should support the functions of DCN (Figure 5). For service, this is divided into two types:

- Adapter FPGA: used only in source/destination node of a path. It can transform different data (bit stream or IP packet) into AOS frames and plug the label into the label field according to the FIB at the source node. Additionally, it can also transform AOS frames into corresponding services at the destination node.

- Switch FPGA: used to switch AOS frames by their labels according to local LFIBs.

- Computer: composed of three computers. Two of them are used as sources to create and process services. The other is used as the network manager to manage the platform through Ethernet interface of node 1.

- Interface: divided into three kinds:

- Ethernet Interface: used between network manager and node 1 (CPU) to transport the management messages.

- Serial Interface: used between the CPU and FPGA in each node, transmitting control and management messages.

- LVDS Interface: used between different FPGAs to transport AOS frames by using LVDS (low-voltage differential signaling).
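The role of the Switch FPGA listed above, switching AOS frames by label according to the local LFIB, can be illustrated in software. The sketch assumes an LFIB that maps an incoming label to an outgoing port and an outgoing label, in the usual label-swapping style; the actual LFIB layout of the platform is not given here.

```python
from typing import Dict, Tuple

# LFIB modeled as {incoming label: (outgoing port, outgoing label)} (assumed layout).
Lfib = Dict[int, Tuple[int, int]]


def switch_frame(in_label: int, data: bytes, lfib: Lfib) -> Tuple[int, int, bytes]:
    """Look up the incoming label and return (out_port, out_label, data).

    The data field is forwarded untouched; only the label is rewritten.
    """
    out_port, out_label = lfib[in_label]  # raises KeyError for unknown labels
    return out_port, out_label, data
```

Because the decision depends only on the label, a transit node never needs to inspect the IPv6 packet inside the frame.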

4.2.1. Test of Link Management Protocol

4.2.2. Test of Routing Protocol

4.2.3. Test of DCN Channel

4.2.4. Test of AOS Frame Switching Scheme

4.2.5. Test of Protocol Stack

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhang, Y.; Yuan, Y.; Guo, B.; Luo, Q.; Zhao, B.; Zhou, W.; Jiang, M.; Wang, Y.; Fu, M.; Liu, Y.; Wang, B.; Huang, S. A Research Study on Protocol Stack of Space-Based Optical Backbone Network. Appl. Sci. 2021, 11, 2367. https://doi.org/10.3390/app11052367