Abstract

Over the last decade, metaheuristic algorithms have emerged as a powerful paradigm for global optimization of multimodal functions formulated by nonlinear problems arising from various engineering subjects. However, numerical analyses of many complex engineering design problems may be performed using finite element method (FEM) or computational fluid dynamics (CFD), by which function evaluations of population-based algorithms are repetitively computed to seek a global optimum. It is noted that these simulations become computationally prohibitive for design optimization of complex structures. To efficiently and effectively address this class of problems, an adaptively integrated swarm intelligence-metamodelling (ASIM) technique enabling multi-level search and model management for the optimal solution is proposed in this paper. The developed technique comprises two steps: in the first step, a global-level exploration for near optimal solution is performed by adaptive swarm-intelligence algorithm, and in the second step, a local-level exploitation for the fine optimal solution is studied on adaptive metamodels, which are constructed by the multipoint approximation method (MAM). To demonstrate the superiority of the proposed technique over other methods, such as conventional MAM, particle swarm optimization, hybrid cuckoo search, and water cycle algorithm in terms of computational expense associated with solving complex optimization problems, one benchmark mathematical example and two real-world complex design problems are examined. In particular, the key factors responsible for the balance between exploration and exploitation are discussed as well.

1. Introduction

With tremendous advances in computational sciences, information technology, and artificial intelligence, design optimization becomes increasingly popular in many engineering subjects, such as mechanical, civil, structural, aerospace, automotive, and energy engineering. It helps to shorten the design-cycle time and to identify creative designs that are not only feasible but also progressively optimal, given predetermined design criteria.

At the outset of design optimization, running a gradient-based algorithm with a multi-start process proves to be very successful in finding the global optimum of simple problems when gradient information is available [1]. While under the pressure of being faced with increasingly complex optimization problems in which derivative information is unreliable or unavailable, researchers gradually focus on the development of derivative-free optimization methods [2] and metaheuristic methods to address this issue. Followed by Glover’s convention [3], modern metaheuristic algorithms such as simulated annealing (SA) [4], genetic algorithms (GA) [5,6], particle swarm optimization (PSO) [7], and ant colony optimization (ACO) [8] have been applied with good success in solving complex nonlinear optimization problems [9,10]. The popularity of these nature-inspired algorithms lies in their ease of implementation and the capability to obtain a solution close to the global optimum. However, for many real-life design problems, more than thousands of calls for high-fidelity simulations (for example, computational fluid dynamics simulation) may be executed to seek a near-optimal solution. This is the overwhelming part of the total run time required in the design cycle. Thus, it is desirable to retain the appeal of metaheuristic algorithms on a global search while replacing as many as possible calls to the solver with evaluations on metamodels for the purpose of less computational cost [11].

The typical techniques for metamodel building include Kriging [12], polynomial response surface (PRS) [13], radial basis function (RBF) [14], artificial network (ANN) [15], etc. Among them, PRS and ANN are regression methods that have advantages in dealing with convex problems; Kriging and RBF belong to interpolation methods that are more appropriate for nonconvex or multi-modal problems [16]. Therefore, metamodels have been successfully employed to assist evolutionary optimizations [17,18,19] and the PSO method. For example, Tang et al. [20] proposed a hybrid surrogate model formed from a quadratic polynomial and a RBF model to develop a surrogate-based PSO method and applied it to solve mostly low-dimensional test problems and engineering design problems. Regis [21] used RBF surrogates on PSO to identify the most promising trail position surrounding the current overall global best position for solving a 36-dimensional bioremediation problem. However, the inherent nature of the PSO method leads to an extremely large number of calls for function evaluations, which might be prohibitive in simulation-based optimization.

In this paper, an adaptively integrated swarm intelligence-metamodelling technique (ASIM) is proposed, which combines multi-level search and model management during the entire optimization process. It orients the solution of the approximate model to the global optimum with a smaller number of iterations of analyses and achieves a higher level of efficiency than conventional approximation methods. Meanwhile, model management in the optimization process has been established, which integrates an adaptive trust-region strategy with a space reduction scheme implemented in the multipoint approximation method (MAM) framework. The model management has been able to facilitate the optimization process and to improve robustness during iterations. It especially has allowed a small perturbation to be assigned to the current position in case of no updates for the optimal position. The developed ASIM makes full use of the global-exploration potential of PSO and local-exploitation advantage of MAM to efficiently and accurately seek the global optimal solution with low computational cost. In comparison to the results by other algorithms such as conventional MAM, particle swarm optimization [22], hybrid cuckoo search [23], water cycle algorithm [24], etc., the superiority of ASIM has been demonstrated in terms of computational expense and accuracy throughout three case studies.

2. Brief Review of the Multipoint Approximation Method (MAM)

The MAM [25,26] was proposed to tackle black-box optimization problems and has gained continuous development in recent years, e.g., Polynkin [27] enhanced MAM to solve large-scale optimization problems, one of which is the optimization of transonic axial compressor rotor blades; Liu [28] implemented discrete capability into MAM. Recently, Caloni [29] has applied MAM to solve a multi-objective problem. Based on a response surface methodology, multipoint approximation method (MAM) aims to construct mid-range approximations and is suitable to solve complex optimization problems owing to (1) producing better-quality approximations that are sufficiently accurate in a current trust region and (2) affordability in terms of computational costs required for their building. These approximation functions have a relatively small number ( where N is number of design variables) of regression coefficients to be determinedm and the corresponding least squares problem can be solved easily [25].

In general, a black-box optimization problem can be formulated as follows:

where refers to the vector of design variables; and are the given lower and upper bounds of the design variable ; N is the total number of design variables; is the objective function; is the jth constraint function and M is the total number of the constraint functions.

In order to represent the detailed physical model using the response functions and to reduce the number of calls for the response function evaluations, the MAM replaces the optimization problem with a sequence of approximate optimization problems as follows:

where and are the functions which approximate the functions and defined in Equation (1); and are the side constraints of a trust sub-region; and k is the iteration number.

Compared with the time spent by evaluation of the actual response functions , the selected form of approximate functions remarkably reduces the computational expense and adequately improves the accuracy in a current trust region. This is achieved by appropriate planning of numerical experiments and use of the trust region defined by the side constraints and . Once the current suboptimization problem is solved, the suboptimal solution becomes the starting point for the next step. Meanwhile, the move limits are modified and the trust region is resized [25,26]. Based on this information, the metamodel is updated in the next iteration until eventually the optimum is reached.

The process of metamodel building in MAM can be described as an assembly of multiple surrogates into one single metamodel using linear regression. Therefore, there are two stages of metamodel building.

In the first stage, the parameter of an individual surrogate is determined by solving a weighted least squares problem involving n fitting points as

where denotes the weighting parameters and F is the original function that needs to be approximated. Here, the selection of weighting factors should reflect the quality of the objective function and the location of a design point with respect to the border between the feasible and the infeasible design subspace [30], which are defined as

where are user-defined constants, where, here, and are used; is the starting point in the kth iteration; and is the ith design point in the fitting points. With this definition, a point with a larger objective function has a smaller weighting coefficient component . For a constraint function , a point that is much closer to the boundary of the feasible region of is given a larger weighting coefficient component . For building a surrogate of the objective function , the weighting coefficient only considers the component . However, for building a surrogate of the constraint function , the weighting coefficient will also take the constraint component into consideration.

It should be noted here that, in MAM, both the objective and constraint functions will be approximated by Equation (3). The simplest case of is the first-order polynomial metamodel, and more complex ones are intrinsically linear functions (ILF) that have been successfully applied for solving various design optimization problems [25,28,29]. ILFs are nonlinear but they can be led to linear ones by simple transformations. Currently, five functions are considered in the regressor pool :

In the second stage, for each function ( or ), different surrogates are assembled into one metamodel:

where is the number of surrogates applied in the model bank and is the regression coefficient corresponding to each surrogate , which reflects the quality of the individual on the set of validation points. Similar to Equation (3), can be determined in the same manner:

It should be noted that, in the process of metamodel building, the design of experiments (DOE) is fixed, i.e., remains unchanged across the aforementioned stages.

Figure 1 illustrates the main steps in MAM. Note that, once the metamodels for the objective and constraint functions have been built, the constrained optimization subproblem formulated in the trust region (Equation (2)) could be solved by any existing optimizers. In this paper, the sequential quadratic programming (SQP) method [31] is applied to solve the constrained optimization subproblem for the optimal solution. Since numerical optimization solvers such as SQP are deterministic, the quality of the obtained solution is highly sensitive to the initial point. In other words, MAM could not perform the global search very well. To address this issue, the ASIM framework in Section 4 has been proposed to integrate the stochastic nature with the exploratory search ability of PSO for the global optimal solution.

Figure 1.

Flow chart of the mulitpoint approximation method (MAM).

3. Brief Review of Particle Swarm Optimization (PSO)

Particle swarm optimization (PSO), inspired from swarm behaviors in nature such as fish and bird schooling, was developed by Kennedy and Eberhart [32]. Since then, PSO has attracted a lot of attention and been developed as a main representative form of swarm intelligence. PSO has been applied to many areas, such as image and video analysis applications, engineering designs and scheduling applications, classification and data mining, etc. [33]. There are at least twenty PSO variants as well as hybrid algorithms obtained by combining PSO with other existing algorithms, which are also becoming increasingly popular [34,35,36].

To integrate PSO with MAM to find the global optimum, adaptive multi-level search is proposed in this paper. PSO is employed for the global-level exploration in the first step. A number of particles are first placed in the search space of the optimization problem with initial positions and velocities. However, the particles can fly over the entire design space not only determined by the individual and collective knowledge of positions from the global-level search but also based on the “local” information of each particle. Here, the “local” information means the local-level exploitation in the second step. In the neighborhood of each particle, an adaptive metamodel is constructed using MAM in Section 2, which replaces the original optimization problem by a sequence of mathematical approximations that use much simpler objective and constraint functions. Hence, the critical information about individual constraint functions is kept and this leads to the improved accuracy of metamodels. During the process of metamodel building, each particle is endowed with a horizon in the surrounding region and then is refined with the current individual position to boost the possibility of finding an optimal position. Eventually, the swarm as a whole, similar to a flock of birds collectively foraging for food while each bird brilliantly and directly finds the most tasty food within the limited horizon, has the ability to move toward to a global optimum.

Each particle in PSO represents a point in the design space of an optimization problem with an associated velocity vector. In each iteration of PSO, the velocity vector is updated by using a linear combination of three terms shown in Equation (10). The first term called inertia or momentum reflects a memory of the previous flight direction and prevents the particle from changing directions thoroughly. The second term, called the cognitive component, describes the tendency of particles returning to the previously found best positions. The last term, called the social component, quantifies the group norm or standard that should be attained. In other words, each particle tends to move toward the position of the current global best and the location of the individual best while moving randomly [33]. The aim is to find the global best among all the current best solutions until the objective no longer improves or a certain number of iterations are reached. The standard iteration procedure of PSO is formulated as follows:

where is the parameter called inertial weight, t is the current iteration number, and are parameters called acceleration coefficients, and and are two homogeneously distributed random vectors generated within the interval , respectively. If the values of , , and are properly chosen ( and ), it has been proven that PSO could converge to an optimum [37].

Even if PSO has been used in a variety of industry applications, it should be noted that the standard PSO suffers the disadvantages of information loss in the penalty function and high computational cost, especially in solving constrained optimization problems. Therefore, the proposed ASIM framework in the following section takes the advantage of PSO in global searching and reduces the burden on computation by introducing the metamodel building technique, model management, and trust region strategy.

4. Adaptively Integrated Swarm Intelligence-Metamodelling Framework

4.1. Methodology of the ASIM Framework

In this paper, an adaptively integrated swarm intelligence-metamodelling (ASIM) framework is proposed to perform a search for the optimal solution in two levels.

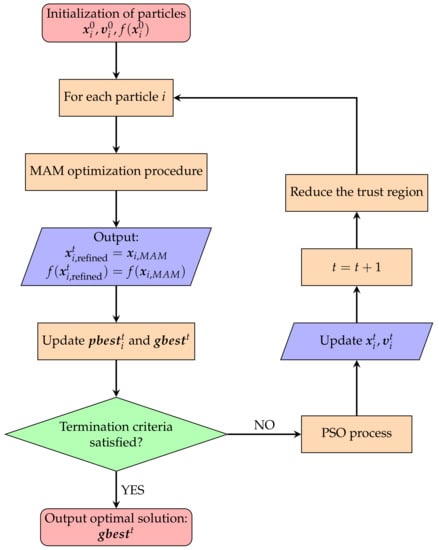

In the first level of optimization, also known as exploration, a number of entities are initially placed in the search space of the particular optimization problem with respective positions and velocities . Each particle i has its movement controlled by Equation (10). The final global best solution is obtained only if the objective no longer improves or after a certain number of iterations. However, distinguished from the conventional PSO, each particle also gains an insight within its neighborhood. That forces each particle to refine their personal best position by exploiting their neighborhood, which is known as the second level of optimization. In this local level search, an adaptive metamodel is built by MAM within a trust region surrounding the particle, and then the personal best solution obtained by MAM is regarded as a local refinement in position. Following that, the personal and global best position is determined and updated until the termination criterion is satisfied. To sum up, the surrogate helps guide the search direction of each particle and assists in refining the current overall best position until the final global best solution is found. Eventually, the swarm as a whole moves close to a global optimum of the objective function. The flowchart of the ASIM framework is depicted in Figure 2.

Figure 2.

Flow chart of ASIM Framework.

It is worth noting that there are three rules applied to compare solutions during the optimization process:

- 1.

- Any feasible solution is preferred to any infeasible solution;

- 2.

- Among feasible solutions, the one with a better objective function value is preferred.

- 3.

- Among infeasible solutions, the one having a fitness value with smaller constraint violations is preferred. In the current implementation, the fitness function is defined by

4.2. Model Management

4.2.1. Strategy for Particles “Flying out” in PSO

For particles located outside the boundary, they should adjust their positions according to the formulations determined by the current bounds, as follows:

where means the kth dimensional position of , and are kth dimensional side constraints, and is a relatively small value randomly generated from the range . This perturbation of positions could actually force the particles back into the design space if particles violate the boundary constraints during the entire search process and could ensure the efficiency and accuracy in local exploitation.

4.2.2. Modified Trust Region Strategy in MAM

The aim of the trust region strategy in MAM is to control the quality of a metamodel constructed. When the approximation gets better, the trust region is further reduced for the optimal solution. The track of the trust regions also indicates a path of the direction from the initial starting point to the optimum over the entire search domain. At each iteration, a new trust region must be updated, i.e., its new size and its location have to be specified. Several indicators are formulated to support the control of the trust region and to facilitate the search process. The basic knowledge about these indicators was introduced in [38].

The first indicator is to evaluate the quality of the metamodel and focuses on the accuracy of the constraint approximations at the obtained suboptimal point . This is based on the following equation:

where and are normalized functions of the approximate and true constraints at the suboptimal point , respectively. In this way, a single maximal error quantity between explicit approximation and implicit simulation is defined. Then, the quality of metamodel can be labeled as “bad”, “reasonable”, or “good” shown below.

where represents the maximum ratio of the dimension length between the present trust region, and the entire design space is defined by

The second indicator is for indicating the location of the current iterate in the present search subregion. For each dimension, if none of the current move limits () is active, this solution is regarded as “internal”; otherwise, it is viewed as “External”.

The third and fourth indicators reflect the movement history for the entire optimization process. For this purpose, the angle between the last two move vectors is calculated. The formulation of this measure is given below:

If holds, the movement will be denoted as “forward”, while is denoted as moving “backward”. Moreover, if , the convergence history is labelled as “curved”; otherwise, it is “Straight”.

The fifth indicator in MAM, as a termination criterion, is the size of the current search subregion. It can be marked as “small” or “large” according to the quality of the metamodel determined by the first indicator. When the approximations are “bad” and , the present search subregion is considered “small”. When the approximations are “reasonable” or “good”, the trust region is denoted as “small” if .

The sixth indicator is based on the most active constraint. It is considered “close” to the boundary between the feasible and infeasible design space if ; otherwise, it is denoted as “far”.

Both reduction and enlargement of the trust region is executed using

where is the resizing parameter.

When the approximations are “bad” and the trust region is “small”, the current trust region is considered too small for any further reduction to achieve reasonable approximations and the process is aborted. When the approximations are “bad” and the trust region is “large”, a reduction in the search region should be applied in order to achieve better approximations. When the approximations are not “bad”, the trust region is “large” and the suboptimal point is not “internal”; a “backward” convergence history means that the iteration point progresses in a direction opposite to the previous move vector. In this situation, the trust region has to be reduced. If the iteration point moves “forward” and the approximations are “good”, the same metamodels are reutilized in the next iteration for the purpose of reducing the computational cost. If the optimization convergence history is labelled as “curved” and the approximations are “reasonable”, the trust region is enlarged as the optimization process moves in the same direction.

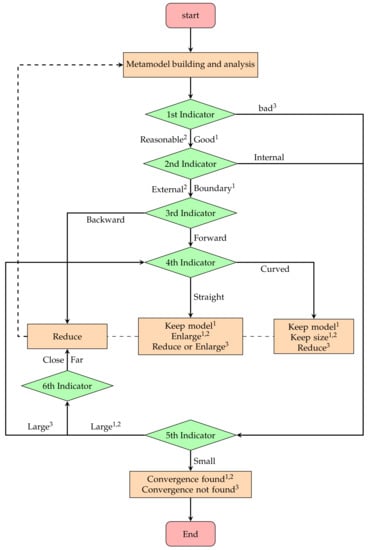

A summary of termination criteria as well as the move limit strategy is presented in Table 1 and Figure 3, respectively. Note that, in Figure 3, some processes are only executed when the indicators have the same superscript. For example, the process can only output the final optimum when the approximation is “good” (with superscript 1) and the current location (2nd indicator) of the solution is within a small (5th indicator) trust region. If the quality of the metamodel is “bad” with the superscript “3” and the 5th indicator has the value “large”, the 4th indicator is triggered and a move limit should be then determined.

Table 1.

Six indicators in MAM.

Figure 3.

Overview of trust region strategy in MAM.

4.2.3. Space Reduction Scheme in the ASIM Framework

As optimization proceeds, the particles narrow down their horizon to improve the local search ability. In other words, for each particle involved, the size of the individual trust region reduces from by a factor of 2 in each iteration, i.e., times the size of the initial design space. Although the particles still fly through the whole design space, each individual seems to behave more cleverly and finds the local optimal position more precisely because the metamodel becomes more accurate.

5. Benchmark Problem

In this section, the parameters used in MAM and the proposed ASIM framework are given in Table 2 for solving complex optimization problems: one benchmark mathematical example and two real-world complex design problems. The MAM parameters (the maximum number of iteration, the number of required sampling points, the size of the initial trust region, and the minimum size of the trust region) are well configured for solving general optimization tasks, as proposed in our previous work [28]. The PSO parameters (the initial weight and the acceleration coefficients) are chosen as the values proposed in [37], which ensure the convergent behavior of the search process.

Table 2.

Default parameters for MAM and ASIM.

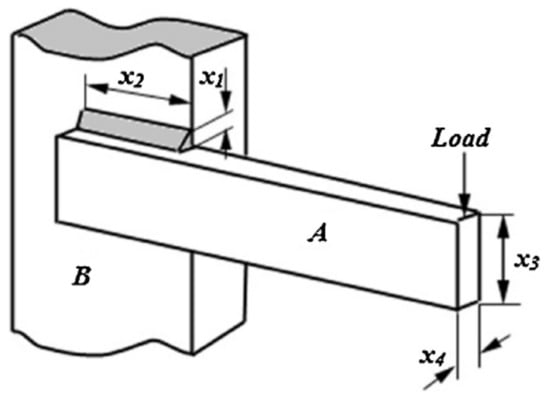

5.1. Welded Beam

The design optimization of a welded beam in Figure 4 is a complex and challenging problem in nature with many variables and constraints. Usually, conventional optimization methods fail to find global optimal solutions. Hence, the welded beam design problem is often used to evaluate the performance of optimization methods. To determine the best set of design variables for minimizing the total fabrication cost of the structure, the minimum cost optimization is performed considering shear stress , bending stress , buckling load , and end deflection constraints. The design variables comprise the thickness of the weld , the length of the welded joint , the width of the beam , and the thickness of the beam , and the mathematical formulation of this problem can be expressed as follows:

Figure 4.

Schematic of the welded beam structure with indication of design variables.

To solve the aforementioned problem, the GA-based method [39], co-evolutionary PSO method (CPSO) [22], ES-based method [40], charged system search (CSS) [41], and colliding bodies optimization (CBO) [42] were used to find the optimal solution.

In Table 3, the optimized design variables and cost obtained by MAM and ASIM have been compared with those obtained in literature. The best solutions () by MAM and ASIM are more competitive than those obtained by other methods. Although Kaveh [42] claimed that was the better cost, the solution actually violated the constraint and it was an infeasible solution. Based on the statistical results in Table 4, it is concluded that the ASIM technique is very robust and efficient because the standard deviation of different runs of simulations is almost 0 () and the number of function analysis (NFEs) is remarkably smaller (565) than that called by other methods except MAM. Both ASIM and MAM demonstrate efficiency in finding the optimal design owing to their accuracy approximations and adaptive trust region strategy at local level exploitation. On average, hundreds of evaluations are required to determine an optimum. It is noted that enhancement of global exploration for the optimal solution by the PSO process in the ASIM framework could be demonstrated by a standard deviation of zero () for statistical results, which is approximately four orders of magnitude smaller than the value by MAM (0.0031358). Furthermore, by comparison with the NFEs (200,000) obtained by co-evolutionary PSO [22], the accurate surrogates built by ASIM framework indeed assist each particle in finding the local refinement position and speed up the converged global optimum. In conclusion, ASIM needs less computational cost for a global optimum with improved accuracy and great robustness.

Table 3.

Comparison of present optimized designs with the literature for welded beams.

Table 4.

Statistical results from different optimization methods for the welded beam design problem.

5.2. Design of a Tension/Compression Spring

This problem, first described by Belegundu [43], has arisen from the wide applications of vibration resistant structures in civil engineering. The design objective is to minimize the weight of a tension/compression spring subject to constraints on the minimum deflection , shear stress , and surge frequency and to limit on the outside diameter . As shown in Figure 5, the design variables include the wire diameter d, the mean coil diameter D, and the number of active coils N. The mathematical description of this problem can be expressed as follows:

Figure 5.

Schematic of the tension/compression spring.

The statistical results by MAM are in Table 5. From the first row to the sixth row, every row is the optimal results of 40 independent runs of MAM and the last line concludes the average results of the 6 parallel experiments, i.e., each experiment comprises 40 independent runs of MAM with randomly generated starting points. The best optimal design represented by is [0.051656122, 0.355902943, 11.33791803] with the objective value of . Moreover, the fourth column “Best” in Table 5 indicates that MAM cannot achieve a converged robust solution and falls into the local optima when faced with multimodal function optimization. The optimal result ranges from (the best design in the fourth row) to (the worst design in the third row). As a general deficiency of the trajectory-based algorithm, MAM could not find the known optimum by balancing the efforts between exploration and exploitation.

Table 5.

Statistical results for the tension/compression spring problem by MAM.

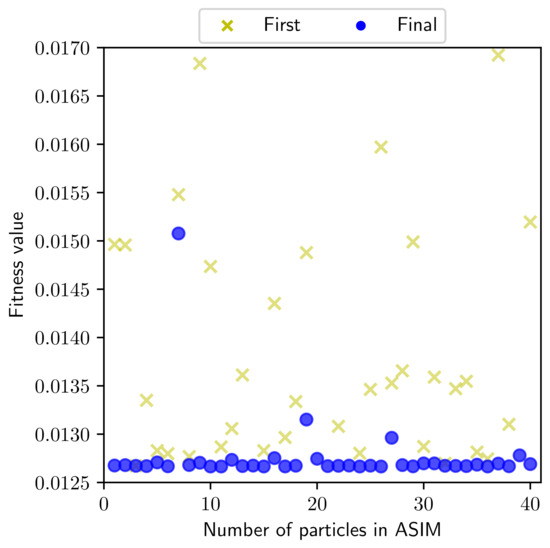

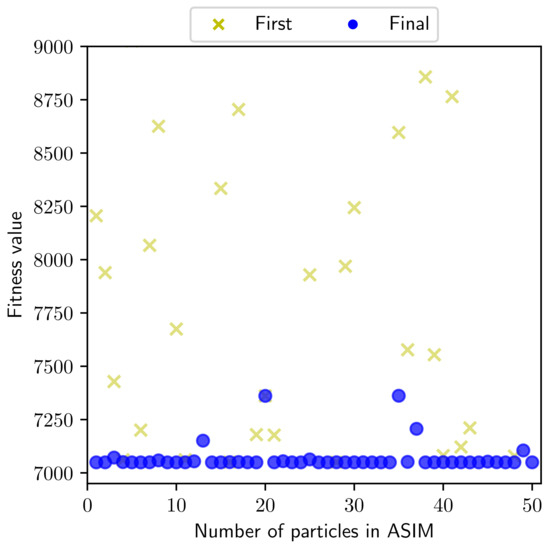

A more intuitive perspective for understanding the global search mechanism using the ASIM framework is represented in Table 6, which includes the optimal results obtained by 8 independent experiments, each of which is initialized with 5 particles. In Figure 6, the results show the objectives of initial designs and global optima for the tested 40 particles. Even the initial designs are remarkably different at the start of the optimization process due to the random nature of statistical tests; the developed ASIM has the capability to eventually find the converged global optimum. It is concluded that the ASIM algorithm can achieve a robust solution for random starting points, and it will not be trapped into local optima due to its multi-level search and model management strategies. Therefore, these 8 independent experiments could almost obtain the same global optimum. The best optimal design found by ASIM framework is , with the objective value , which has a good agreement with the known optimum. Additionally, the global solutions from 8 independent experiments have been proven feasible by function evaluations.

Table 6.

Statistical results for the tension/compression spring problem by adaptively integrated swarm intelligence-metamodelling (ASIM).

Figure 6.

First and final fitness values in ASIM for solving the tension/compression spring.

Other algorithms recently used to optimize this problem include co-evolutionary particle swarm optimization (CPSO) [22], differential evolution with dynamic stochastic selection (DEDS) [44], hybrid evolutionary algorithm with adaptive constraint-handling techniques (HEAA) [45], league championship algorithm (LCA) [46], water cycle algorithm (WCA) [24], and hybrid cuckoo search (HCS) [23]. A comparison of the optimal solutions by the aforementioned methods is given in Table 7, and the statistical results by ASIM, MAM, and other algorithms are shown in Table 8.

Table 7.

Comparison of the best solutions obtained by various studies for the tension/compression spring design optimization problem.

Table 8.

Comparison of statistical results given by different algorithms for the tension/compression spring design optimization problem.

In Table 7, the ASIM framework has the ability to find the optimal solution (0.0126652), which is the best available design compared to that which the other algorithms achieved. Although LCA [46] found a slighter better solution (0.01266523), the corresponding constraint was violated. Therefore, it was not a feasible solution. The same conclusion can be drawn for the results by DEDS [44] and HEAA [45]. Together with the statistical results shown in Table 8, it can be observed that the ASIM method is superior to other methods for the global solution in terms of the number of function evaluations and the accuracy throughout the optimization process. Obviously, the referenced methods used more than 10,000 calls to find the global optimum while ASIM finds the optimum with about half of those calls. Meanwhile, the ASIM could reduce the number of simulations by over compared to MAM.

As a general remark on the comparisons above, ASIM shows a very competitive performance over eight state-of-the-art optimization methods to find the global optimal solution in terms of the efficiency, the quality, and the robustness.

5.3. Mathematical Problem G10

This problem was first described in [47] and then was considered one of the benchmark problems at the 2006 IEEE Congress on Evolutionary Computation [48]. In this optimization example, there are eight variables and six inequality constraints (three linear and three nonlinear). The mathematical formulations are shown below.

The optimal solutions found by ASIM and MAM are given in Table 9 as well as the known optimum. In Table 10, nine independent experiments have been performed and each experiment includes 40 parallel runs of MAM. Although each run by MAM is initialized with a random starting point, there is no guarantee that the converged global optimum can be achieved. As there has a very small feasible region in this challenging example, limited runs by MAM could not find a feasible solution, and normally, a bad design with a very large value of the fitness function (up to 100,000) is obtained. However, a feasible solution could be achieved within 20,000 function evaluations. Applying the developed ASIM, the capability of the adaptive multi-level search for the global optimum was significantly improved, and the statistical results are shown in Table 11. Using the same parameter settings in the previous example, the worst solution found by the particles is about 7361, which is only higher than the global optimum . In the mean time, all nine independent experiments of ASIM could find a decent global optimum, which is slightly higher than the global optimum even in the worst case (number 5 in Table 11). In Figure 7, it shows how 10 independent runs initialized with a total of 50 particles converge to the global optimum by ASIM. It is noted that the initial design varies dramatically for each particle, and finally, all particles succeed in finding the global optimum. It is concluded that the PSO process applied in ASIM remarkably boosts the exploration capability. Owing to advantages such as the guidance of personal memory for the best position and social cognition in addition to the stochastic search behavior, ASIM is a robust and efficient algorithm for solving such a challenging problem.

Table 9.

Optimal solutions of G10 found by ASIM and MAM.

Table 10.

Statistical results for G10 by MAM.

Table 11.

Statistical results for G10 by ASIM.

Figure 7.

First and final output fitness values for G10 in a hybrid optimization framework.

Recently, other algorithms including evolutionary optimization by approximate ranking and surrogate models (EOAS) [49], constraint optimization via particle swarm optimization (COPSO) [50], league championship algorithm (LCA) [46], hybrid cuckoo search (HCS) [23], and surrogate-assisted differential evolution (SADE) [51] have also solved this optimization problem. A comparison of results by ASIM, MAM, and other algorithms is given in Table 12. Although all methods listed are very competitive and has the ability to find global or near global optimum, ASIM demonstrates superiority over the others in terms of computational efficiency. Evolutionary algorithms usually need over 150,000 simulations to find the global optimum, while ASIM could reduce the number of function evaluations to 19,522 by more than . Furthermore, the optimum achieved by ASIM is in a good agreement with the global optimum . Although HCS [23] proposed a best optimum (), the fourth constraint is slightly violated and, therefore, is not a feasible design. Summarily, ASIM outperforms other methods in seeking the global optimal solutions of complex black-box optimization problems in terms of efficiency and accuracy.

Table 12.

Statistical features of the results obtained by various algorithms on G10.

6. Conclusions

In this paper, an adaptively integrated swarm intelligence-metamodelling (ASIM) technique that enables adaptive multi-level adaptive search for the global optimal solution was proposed for solving expensive and complex black-box constrained optimization problems. In the first step, the adaptive swarm-intelligence algorithm carries out global exploration for the near-optimal solution. In the second step, the metamodel-based optimization algorithm multipoint approximation method (MAM) is performed for local exploitation. Essentially, each particle’s current position in ASIM gains local refinement by optimization of metamodel building around their neighborhood and tends to move towards the global best position according to swarm intelligence. Eventually, the swarm as a whole, similar to a flock of birds collectively foraging for food while each bird brilliantly finds the most tasty food with limited horizon directly, possibly moves close to a global optimum position. One mathematical problem and two engineering optimization problems were studied in detail using the ASIM framework. By comparison of the results obtained from ASIM, MAM, and other state-of-art algorithms, it was demonstrated that ASIM has the capability to tackle expensive constrained black-box optimization problems with remarkably less computational effort, higher accuracy, and stronger robustness. The adaptive multi-level search ability of ASIM indeed makes up the local search deficiency and the sensitivity to the starting point observed in MAM. Consequently, the ASIM technique achieves a good balance between exploration and exploitation. Moreover, ASIM provides valuable insight into the development of nature-inspired metaheuristic algorithms for solving nonlinear optimization problems with less computational cost throughout the simulation-based optimization process.

Author Contributions

G.D. contributed to drafting the paper and example validation; C.L. contributed to algorithm development and editing; D.L. contributed to designing and planning the study, approved the final version, and agreed to be accountable for the accuracy and integrity; X.M. contributed to editing, analysing, and commenting on the first version of the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Short Term Recruitment Program of Professional Scholars of NLAA in Beihang University.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data is contained within the article.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Hickernell, F.J.; Yuan, Y.X. A Simple Multistart Algorithm for Global Optimization. OR Trans. 1997, 1, 1–12. [Google Scholar]

- Rios, L.M.; Sahinidis, N.V. Derivative-free optimization: A review of algorithms and comparison of software implementations. J. Glob. Optim. 2013, 56, 1247–1293. [Google Scholar] [CrossRef]

- Glover, F. Future paths for integer programming and links to artficial intelligence. Comput. Oper. Res. 1986, 13, 533–549. [Google Scholar] [CrossRef]

- Kirkpatrick, S.; Gelatt, C.D., Jr.; Vecchi, M.P. Optim. Simulated Annealing. Science 1983, 220, 671–680. [Google Scholar] [CrossRef] [PubMed]

- Holland, J.H. Adaptation in Natural and Artificial Systems: An introductory Analysis with Applications to Biology, Control and Artificial Intelligence; MIT Press: Cambridge, MA, USA, 1975; p. 183. [Google Scholar] [CrossRef]

- Borowska, B. Genetic Learning Particle Swarm Optimization with Interlaced Ring Topology. In Computational Science—ICCS 2020; Krzhizhanovskaya, V.V., Závodszky, G., Lees, M.H., Dongarra, J.J., Sloot, P.M.A., Brissos, S., Teixeira, J., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 136–184. [Google Scholar]

- Wang, S.; Liu, G.; Gao, M.; Cao, S.; Guo, A.; Wang, J. Heterogeneous Comprehensive Learning and Dynamic Multi-Swarm Particle Swarm Optimizer with Two Mutation Operators; Elsevier Inc.: Amsterdam, The Netherlands, 2020; Volume 540, pp. 175–201. [Google Scholar] [CrossRef]

- Ahmad, R.; Choubey, N.S. Review on Image Enhancement Techniques Using Biologically Inspired Artificial Bee Colony Algorithms and Its Variants. In Biologically Rationalized Computing Techniques for Image Processing Applications; Hemanth, J., Emilia, B.V., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 249–271. [Google Scholar] [CrossRef]

- Acevedo, H.G.S.; Escobar, C.M.; Andres Gonzalez-Estrada, O. Damage detection in a unidimensional truss using the firefly optimization algorithm and finite elements. arXiv 2018, arXiv:1706.04449. [Google Scholar]

- Mortazavi, A.; Togan, V. Sizing and layout design of truss structures under dynamic and static constraints with an integrated particle swarm optimization algorithm. Appl. Soft Comput. 2016, 51, 239–252. [Google Scholar] [CrossRef]

- soon Ong, Y.; Nair, P.B.; Keane, A.J.; Wong, K.W. Surrogate-Assisted Evolutionary Optimization Frameworks for High-Fidelity Engineering Design Problems. In Knowledge Incorporation in Evolutionary Computation; Yaochu, J., Ed.; Springer: Berlin, Germany, 2005; pp. 307–331. [Google Scholar] [CrossRef]

- Kleijnen, J.P. Kriging metamodeling in simulation: A review. Eur. J. Oper. Res. 2009, 192, 707–716. [Google Scholar] [CrossRef]

- Myers, R.H.; Montgomery, D.T.; Vining, G.G.; Borror, C.M.; Kowalski, S.M. Response Surface Methodology: A Retrospective and Literature Survey. J. Qual. Technol. 2004, 36, 53–78. [Google Scholar] [CrossRef]

- Dyn, N.; Levin, D.; Rippa, S. Numerical procedures for surface fitting of scattered data by radial functions. SIAM J. Sci. Stat. Comput. 1986, 7, 639–659. [Google Scholar] [CrossRef]

- Gerrard, C.E.; McCall, J.; Coghill, G.M.; Macleod, C. Exploring aspects of cell intelligence with artificial reaction networks. Soft Comput. 2014, 18, 1899–1912. [Google Scholar] [CrossRef]

- Dong, H.; Song, B.; Dong, Z.; Wang, P. SCGOSR: Surrogate-based constrained global optimization using space reduction. Appl. Soft Comput. J. 2018, 65, 462–477. [Google Scholar] [CrossRef]

- Zhou, Z.; Ong, Y.S.; Nair, P.B.; Keane, A.J.; Lum, K.Y. Combining Global and Local Surrogate Models to Accelerate Evolutionary Optimization. IEEE Trans. Syst. Man Cybern. Part C Appl. Rev. 2007, 37, 66–76. [Google Scholar] [CrossRef]

- Regis, R.G. Evolutionary Programming for High-Dimensional Constrained Expensive Black-Box Optimization Using Radial Basis Functions. IEEE Trans. Evol. Comput. 2014, 18, 326–347. [Google Scholar] [CrossRef]

- Bouhlel, M.A.; Bartoli, N.; Regis, R.G.; Morlier, J.; Regis, R.G.; Otsmane, A. Efficient global optimization for high-dimensional constrained problems by using the Kriging models combined with the partial least squares method. Eng. Optim. 2018, 50, 2038–2053. [Google Scholar] [CrossRef]

- Tang, Y.; Chen, J.; Wei, J. A surrogate-based particle swarm optimization algorithm for solving optimization problems with expensive black box functions. Eng. Optim. 2013, 45, 557–576. [Google Scholar] [CrossRef]

- Regis, R.G. Particle swarm with radial basis function surrogates for expensive black-box optimization. J. Comput. Sci. 2014, 5, 12–23. [Google Scholar] [CrossRef]

- He, Q.; Wang, L. An effective co-evolutionary particle swarm optimization for constrained engineering design problems. Eng. Appl. Artif. Intell. 2007, 20, 89–99. [Google Scholar] [CrossRef]

- Long, W.; Liang, X.; Huang, Y.; Chen, Y. An effective hybrid cuckoo search algorithm for constrained global optimization. Neural Comput. Appl. 2014, 25, 911–926. [Google Scholar] [CrossRef]

- Eskandar, H.; Sadollah, A.; Bahreininejad, A.; Hamdi, M. Water cycle algorithm—A novel metaheuristic optimization method for solving constrained engineering optimization problems. Comput. Struct. 2012, 110–111, 151–166. [Google Scholar] [CrossRef]

- Toropov, V.V.; Filatov, A.A.; Polynkin, A.A. Multiparameter structural optimization using FEM and multipoint explicit approximations. Struct. Optim. 1993, 6, 7–14. [Google Scholar] [CrossRef]

- Keulen, F.V.; Toropov, V.V. New Developments in Structural Optimization Using Adaptive Mesh Refinement and Multipoint Approximations. Eng. Optim. 1997, 29, 217–234. [Google Scholar] [CrossRef]

- Polynkin, A.; Toropov, V.V. Mid-range metamodel assembly building based on linear regression for large scale optimization problems. Struct. Multidiscip. Optim. 2012, 45, 515–527. [Google Scholar] [CrossRef]

- Liu, D.; Toropov, V.V. Implementation of Discrete Capability into the Enhanced Multipoint Approximation Method for Solving Mixed Integer-Continuous Optimization Problems. Int. J. Comput. Methods Eng. Sci. Mech. 2016, 17. [Google Scholar] [CrossRef]

- Caloni, S.; Shahpar, S.; Toropov, V.V. Multi-Disciplinary Design Optimisation of the Cooled Squealer Tip for High Pressure Turbines. Aerospace 2018, 5, 116. [Google Scholar] [CrossRef]

- Toropov, V.V. Simulation approach to structural optimization. Struct. Optim. 1989, 1, 37–46. [Google Scholar] [CrossRef]

- Kraft, D. A Software Package for Sequential Quadratic Programming; Technical Report; Institut fuer Dynamik der Flugsysteme: Oberpfaffenhofen, Germany, 1988. [Google Scholar]

- Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95—International Conference on Neural Networks, Perth, Australia, 27 November–1 December 1995; Volume 4, pp. 1942–1948. [Google Scholar] [CrossRef]

- Poli, R.; Kennedy, J.; Blackwell, T. Particle swarm optimization. Swarm Intell. 2007, 1, 33–57. [Google Scholar] [CrossRef]

- Zhan, Z.; Zhang, J.; Li, Y.; Chung, H.H. Adaptive Particle Swarm Optimization. IEEE Trans. Syst. Man, Cybern. Part B Cybern. 2009, 39, 1362–1381. [Google Scholar] [CrossRef]

- Garg, H. A hybrid PSO-GA algorithm for constrained optimization problems. Appl. Math. Comput. 2016, 274, 292–305. [Google Scholar] [CrossRef]

- Guo, D.; Jin, Y.; Ding, J.; Chai, T. Heterogeneous Ensemble-Based Infill Criterion for Evolutionary Multiobjective Optimization of Expensive Problems. IEEE Trans. Cybern. 2019, 49, 1012–1025. [Google Scholar] [CrossRef]

- Clerc, M.; Kennedy, J. The particle swarm-explosion, stability, and convergence in a multidimensional complex space. IEEE Trans. Evol. Comput. 2002, 6, 58–73. [Google Scholar] [CrossRef]

- Toropov, V.V.; van Keulen, F.; Markine, V.; Alvarez, L. Multipoint approximations based on response surface fitting: A summary of recent developments. In Proceedings of the 1st ASMO UK/ISSMO Conference on Engineering Design Optimization, Ilkley, UK, 8–9 July 1999; pp. 371–381. [Google Scholar]

- Coello Coello, C.A.; Montes, E.M.; Mezura Montes, E. Constraint-handling in genetic algorithms through the use of dominance-based tournament selection. Adv. Eng. Inform. 2002, 16, 193–203. [Google Scholar] [CrossRef]

- Mezura-Montes, E.; Coello Coello, C.A. An empirical study about the usefulness of evolution strategies to solve constrained optimization problems. Int. J. Gen. Syst. 2008, 37, 443–473. [Google Scholar] [CrossRef]

- Kaveh, A.; Talatahari, S. A novel heuristic optimization method: Charged system search. Acta Mech. 2010, 213, 267–289. [Google Scholar] [CrossRef]

- Kaveh, A.; Mahdavi, V.R. Colliding bodies optimization: A novel meta-heuristic method. Comput. Struct. 2014, 139, 18–27. [Google Scholar] [CrossRef]

- Belegundu, A.D. A Study of Mathematical Programming Methods for Structural Optimization. Ph.D. Thesis, University of Iowa, Iowa City, IA, USA, 1982. [Google Scholar]

- Zhang, M.; Luo, W.; Wang, X. Differential evolution with dynamic stochastic selection for constrained optimization. Inf. Sci. 2008, 178, 3043–3074. [Google Scholar] [CrossRef]

- Wang, Y.; Cai, Z.; Zhou, Y.; Fan, Z. Constrained optimization based on hybrid evolutionary algorithm and adaptive constraint-handling technique. Struct. Multidiscip. Optim. 2009, 37, 395–413. [Google Scholar] [CrossRef]

- Kashan, A.H. An efficient algorithm for constrained global optimization and application to mechanical engineering design: League championship algorithm (LCA). Comput. Aided Des. 2011, 43, 1769–1792. [Google Scholar] [CrossRef]

- Hock, W.; Schittkowski, K. Test Examples for Nonlinear Programming Codes; Springer: Berlin/Heidelberg, Germany, 1981. [Google Scholar]

- Liang, J.; Runarsson, T.; Mezura-Montes, E.; Clerc, M.; Suganthan, P.; Coello, C.A.C.; Deb, K. Problem Definitions and Evaluation Criteria for the CEC 2006 Special Session on Constrained Real-Parameter Optimization; Nangyang Technological University: Singapore, 2006; Volume 41. [Google Scholar]

- Runarsson, T.P. Constrained Evolutionary Optimization by Approximate Ranking and Surrogate Models. In Parallel Problem Solving from Nature—PPSN VIII; Yao, X., Burke, E.K., Lozano, J.A., Smith, J., Merelo-Guervós, J.J., Bullinaria, J.A., Rowe, J.E., Tiňo, P., Kabán, A., Schwefel, H.P., Eds.; Springer: Berlin/Heidelberg, Germany, 2004; pp. 401–410. [Google Scholar] [CrossRef]

- Aguirre, A.H.; Zavala, A.E.M.; Diharce, E.V.; Rionda, S.B. COPSO: Constrained Optimization via PSO Algorithm; Technical Report; Center for Research in Mathematics, CIMAT: Guanajuato, Mexico, 2007. [Google Scholar]

- Garcia, R.d.P.; de Lima, B.S.L.P.; Lemonge, A.C.D.C. A Surrogate Assisted Differential Evolution to Solve Constrained Optimization Problems. In Proceedings of the 2017 IEEE Latin American Conference on Computational Intelligence (LA-CCI), Arequipa, Peru, 8–10 November 2017; pp. 1–6. [Google Scholar]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).