Hole Concealment Algorithm Using Camera Parameters in Stereo 360 Virtual Reality System

1. Introduction

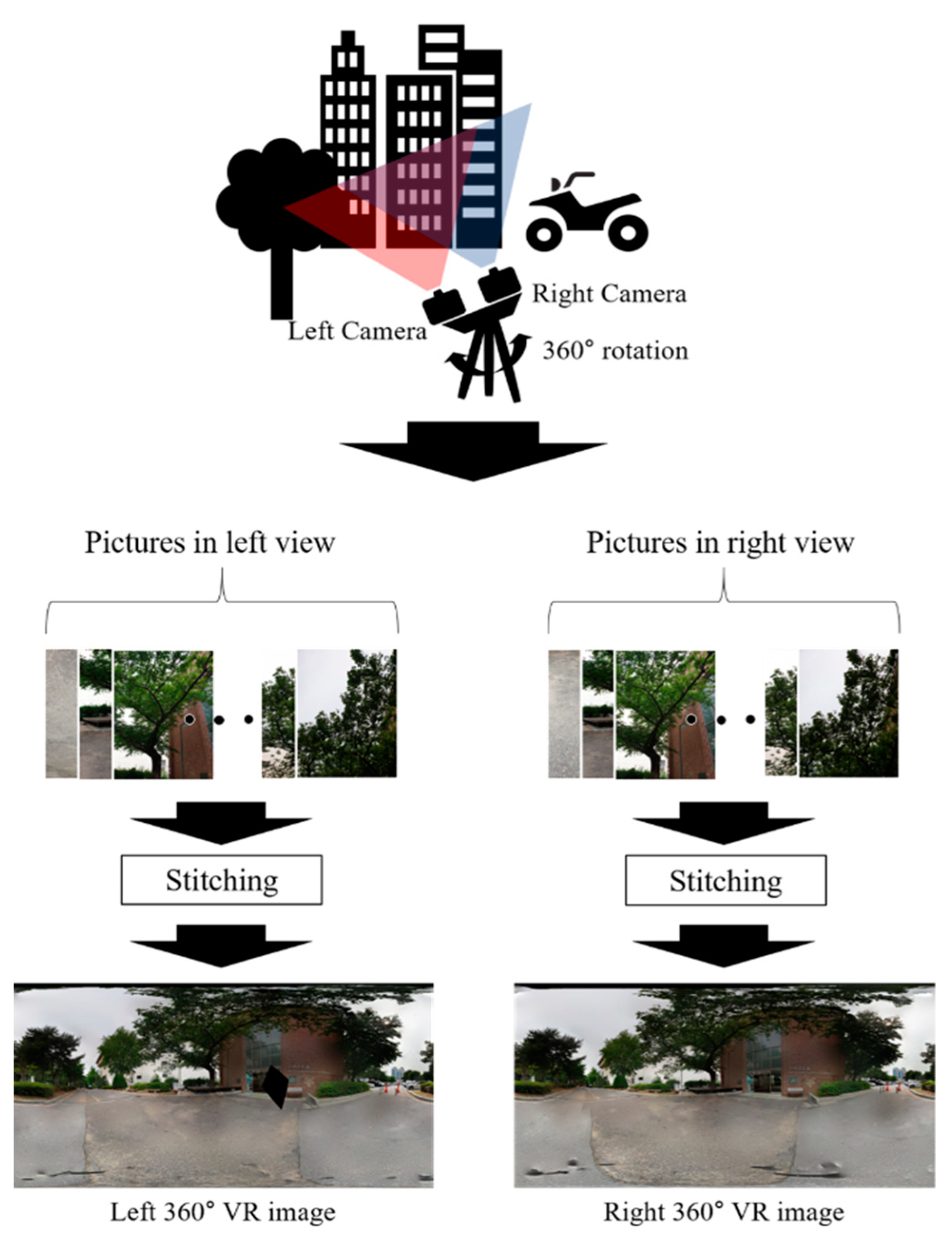

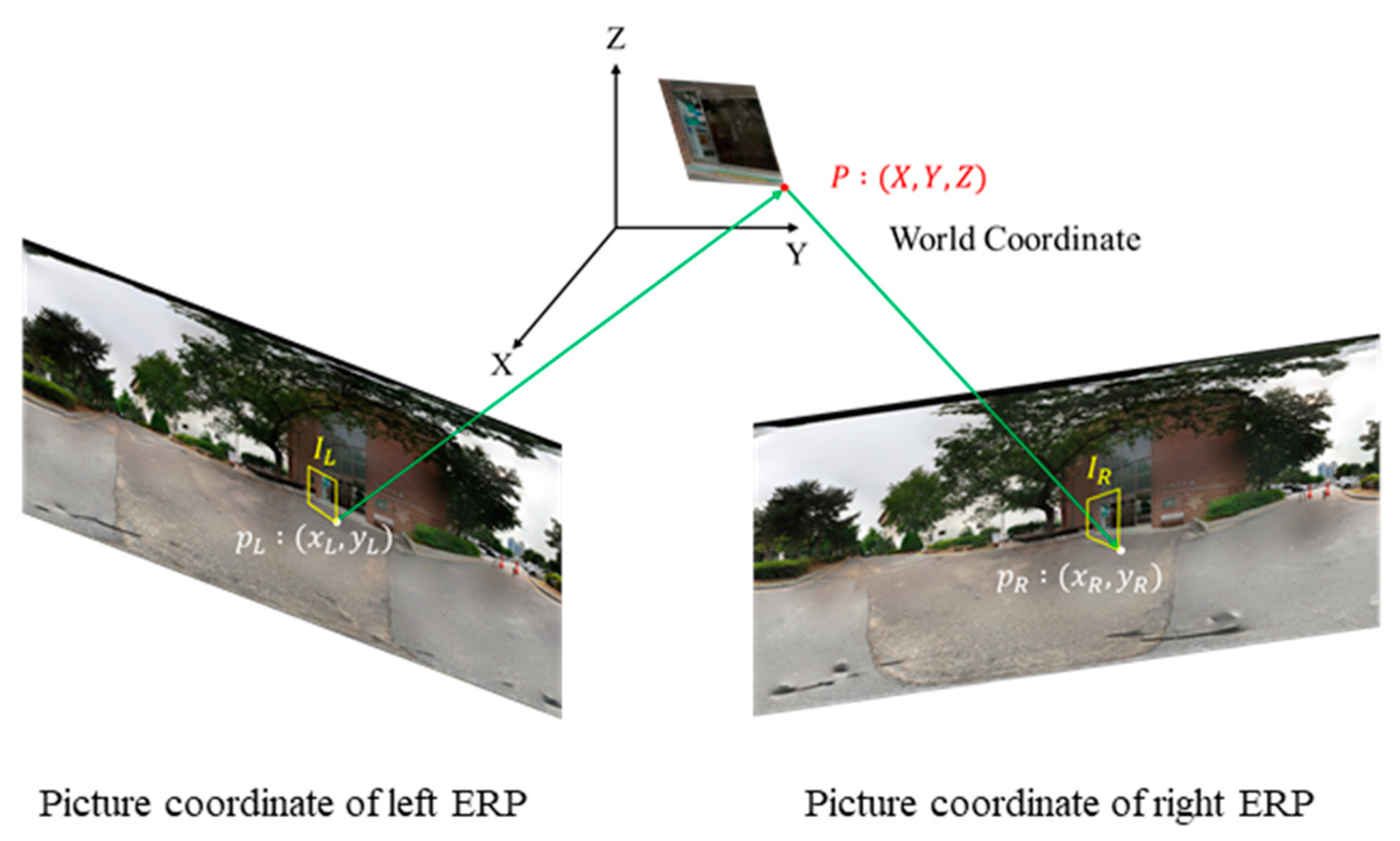

2. Problem Formulation

3. Algorithm Proposed to Conceal a Hole

3.1. Hole Detection

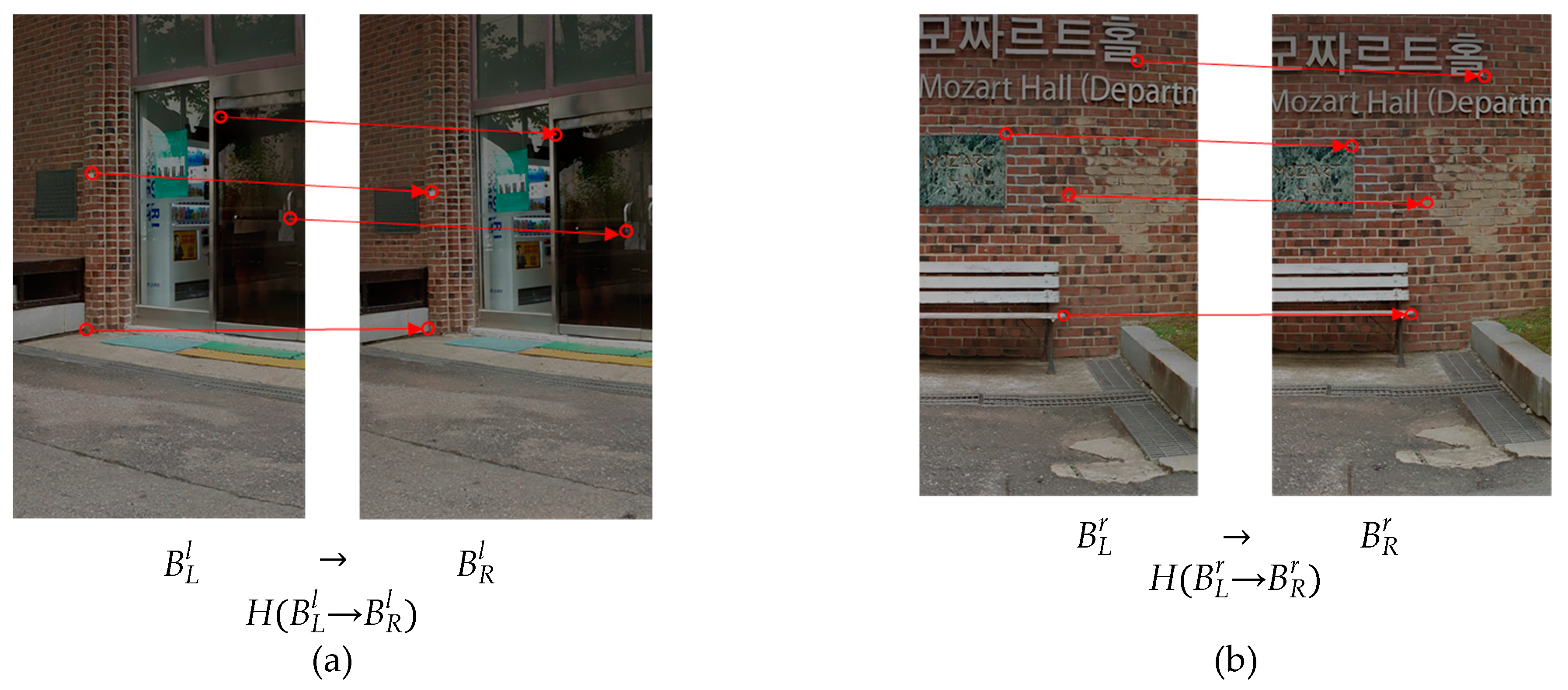

3.2. Camera Parameters of Neighboring Blocks

3.3. Concealment Based on Intrinsic and Extrinsic Matrices
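For orientation, the standard pinhole camera model splits a camera into an intrinsic matrix K (focal lengths and principal point) and extrinsic parameters [R | t] (rotation and translation), so that a world point X projects to pixel coordinates via x ~ K [R | t] X. This is textbook background and not necessarily the exact formulation used in this paper. The Python sketch below shows only that mapping, ignoring lens distortion; the function name `project_points` and the camera values in the example are hypothetical, chosen for illustration.

```python
import numpy as np


def project_points(points_world, K, R, t):
    """Project N x 3 world points to N x 2 pixel coordinates with the
    pinhole model x ~ K [R | t] X (lens distortion ignored)."""
    X = np.asarray(points_world, dtype=np.float64).reshape(-1, 3)
    K = np.asarray(K, dtype=np.float64)
    R = np.asarray(R, dtype=np.float64)
    t = np.asarray(t, dtype=np.float64).reshape(1, 3)
    # Extrinsic step: rotate and translate world points into the camera frame.
    X_cam = X @ R.T + t
    # Intrinsic step: apply focal lengths and principal point.
    x_h = X_cam @ K.T
    # Perspective division by depth gives pixel coordinates.
    return x_h[:, :2] / x_h[:, 2:3]


# Hypothetical camera: 1000 px focal length, principal point (960, 540),
# no rotation and no translation.
K = np.array([[1000.0, 0.0, 960.0],
              [0.0, 1000.0, 540.0],
              [0.0, 0.0, 1.0]])
R = np.eye(3)
t = np.zeros(3)
pts = np.array([[0.0, 0.0, 5.0],
                [1.0, 0.5, 5.0]])
print(project_points(pts, K, R, t))
```

With this hypothetical camera, the point on the optical axis lands on the principal point (960, 540), and the point offset by (1, 0.5) at depth 5 lands at (1160, 640). Keeping K separate from [R | t] mirrors the intrinsic/extrinsic split named in this subsection's title.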

3.4. Prediction for Camera Parameters

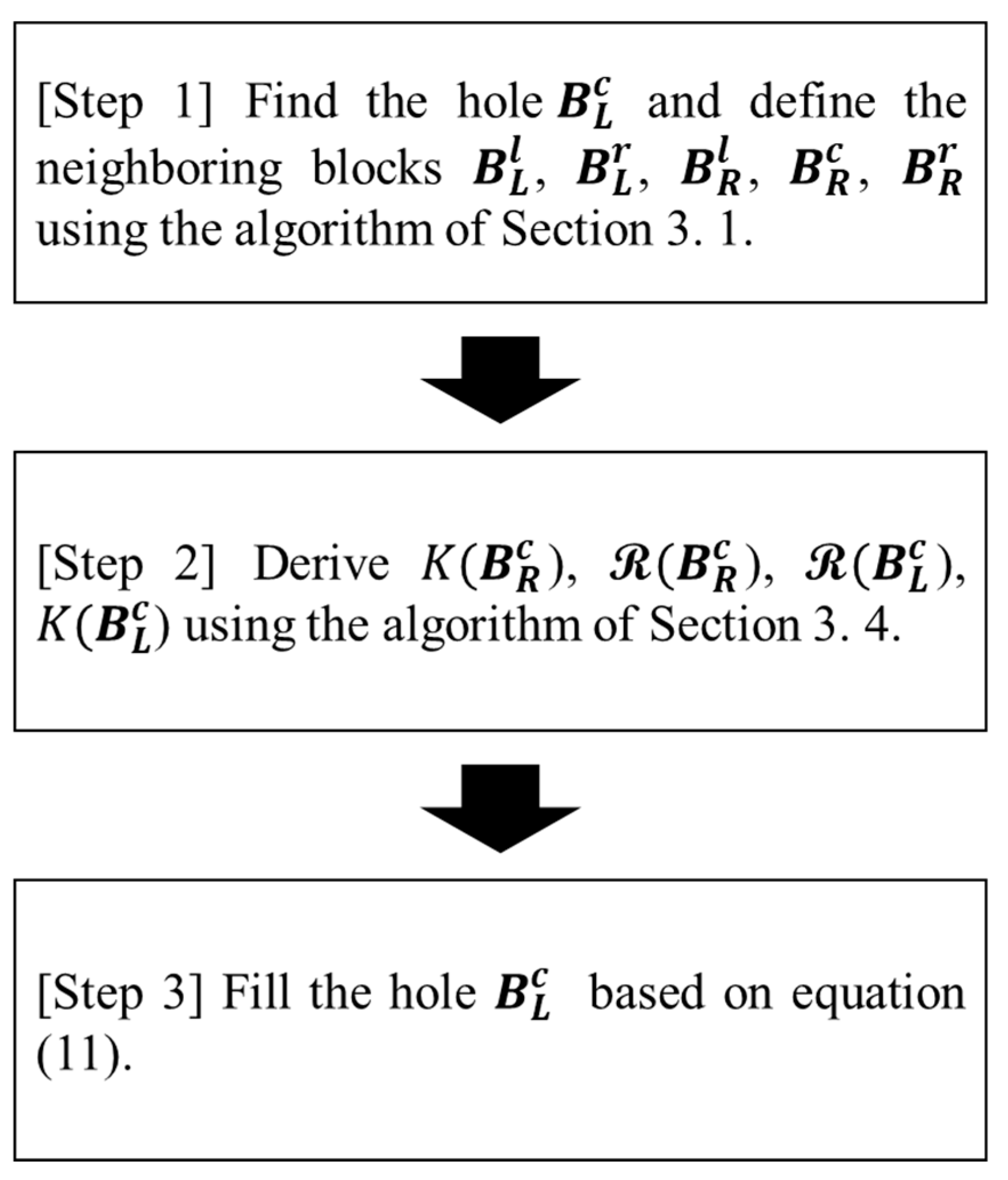

3.5. Summary of the Proposed Algorithm

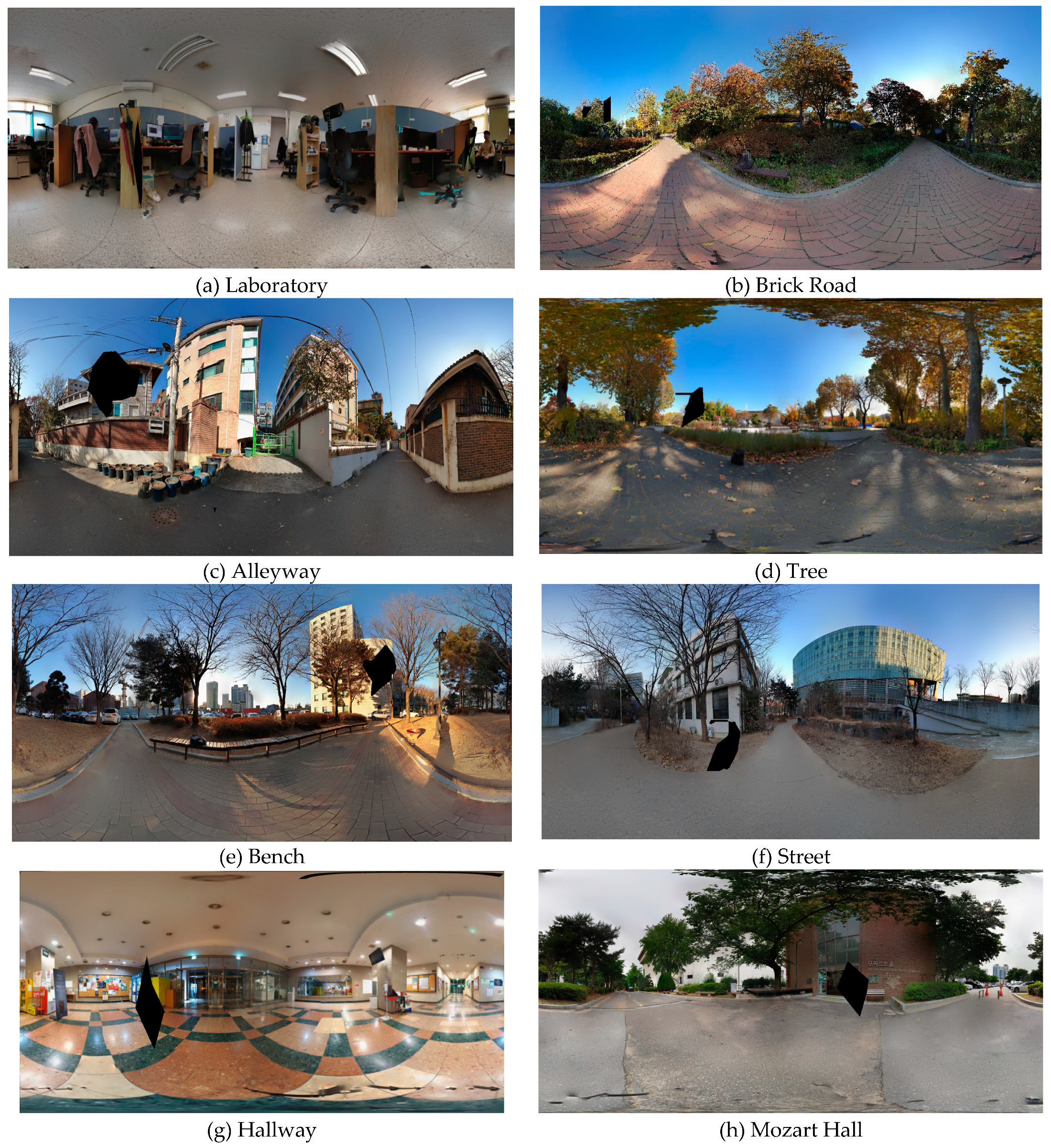

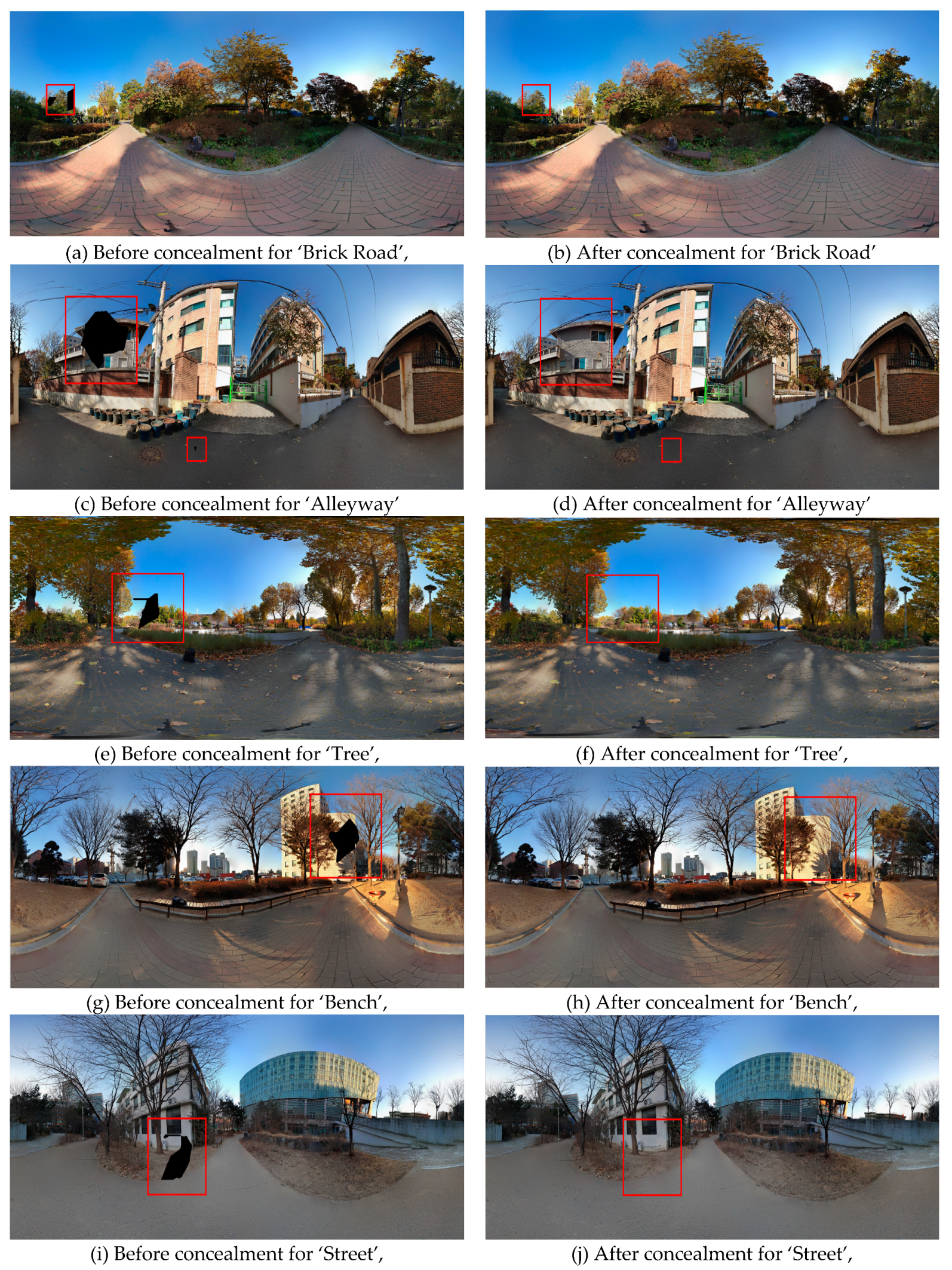

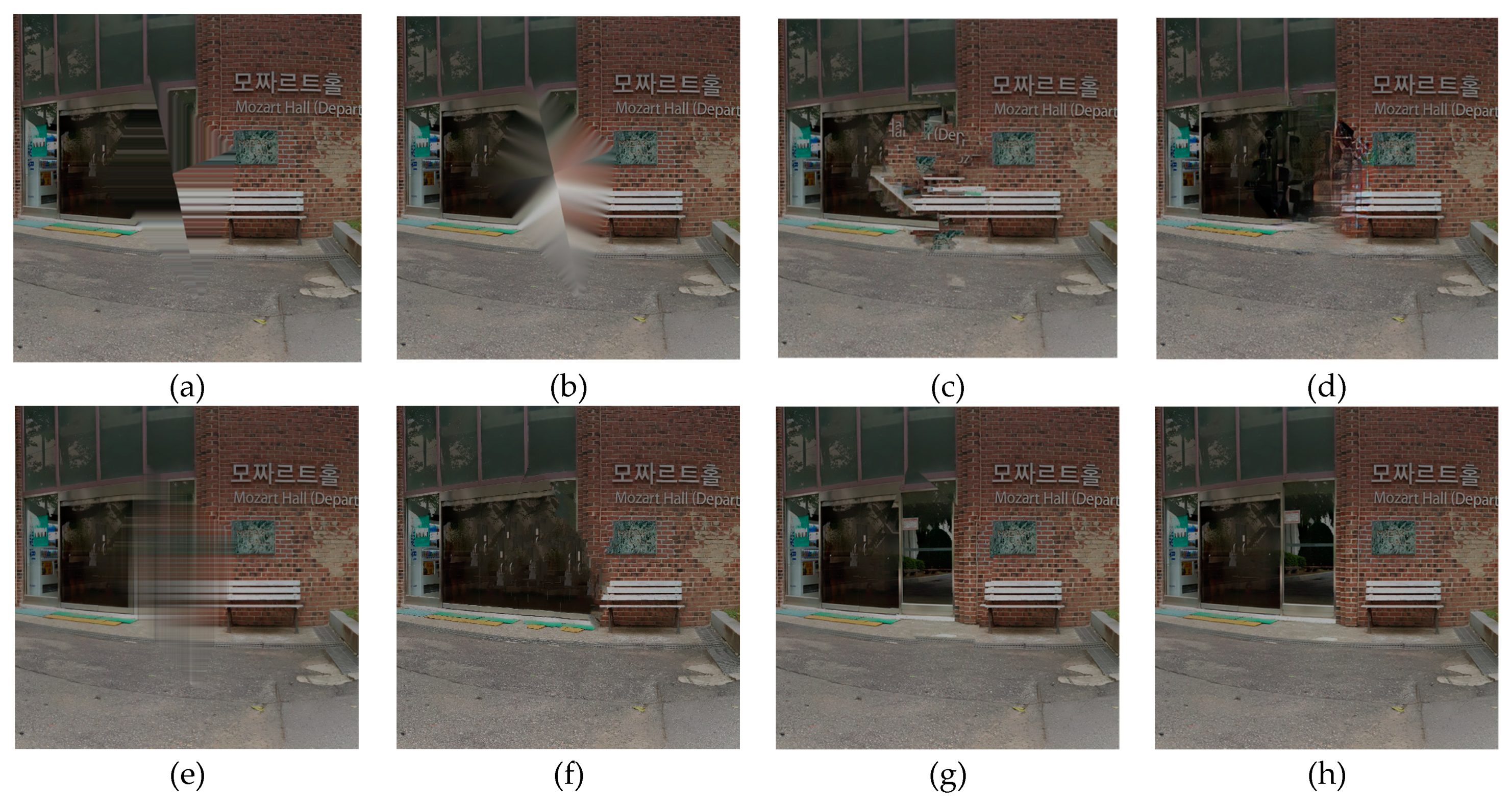

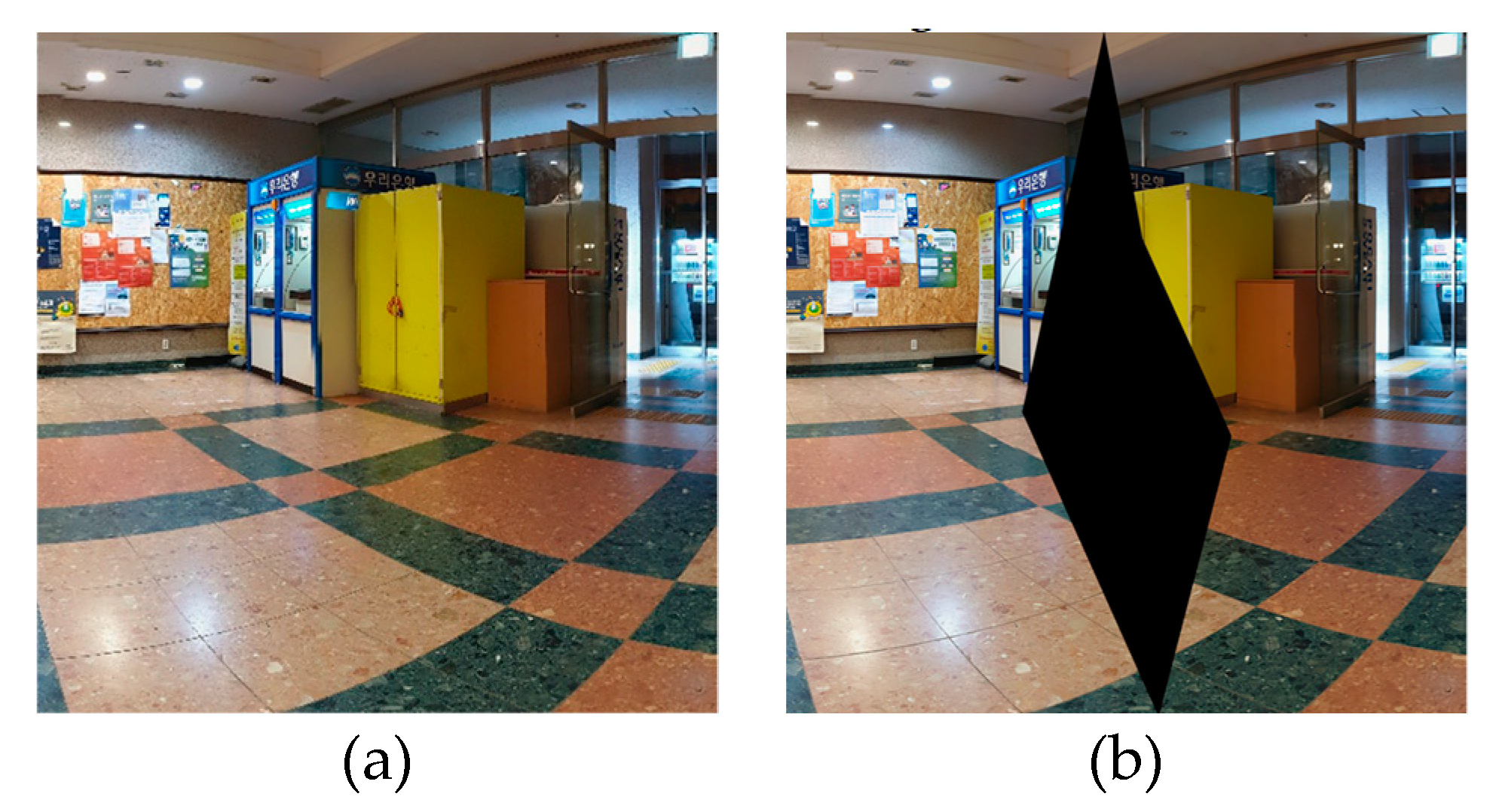

4. Simulation Results

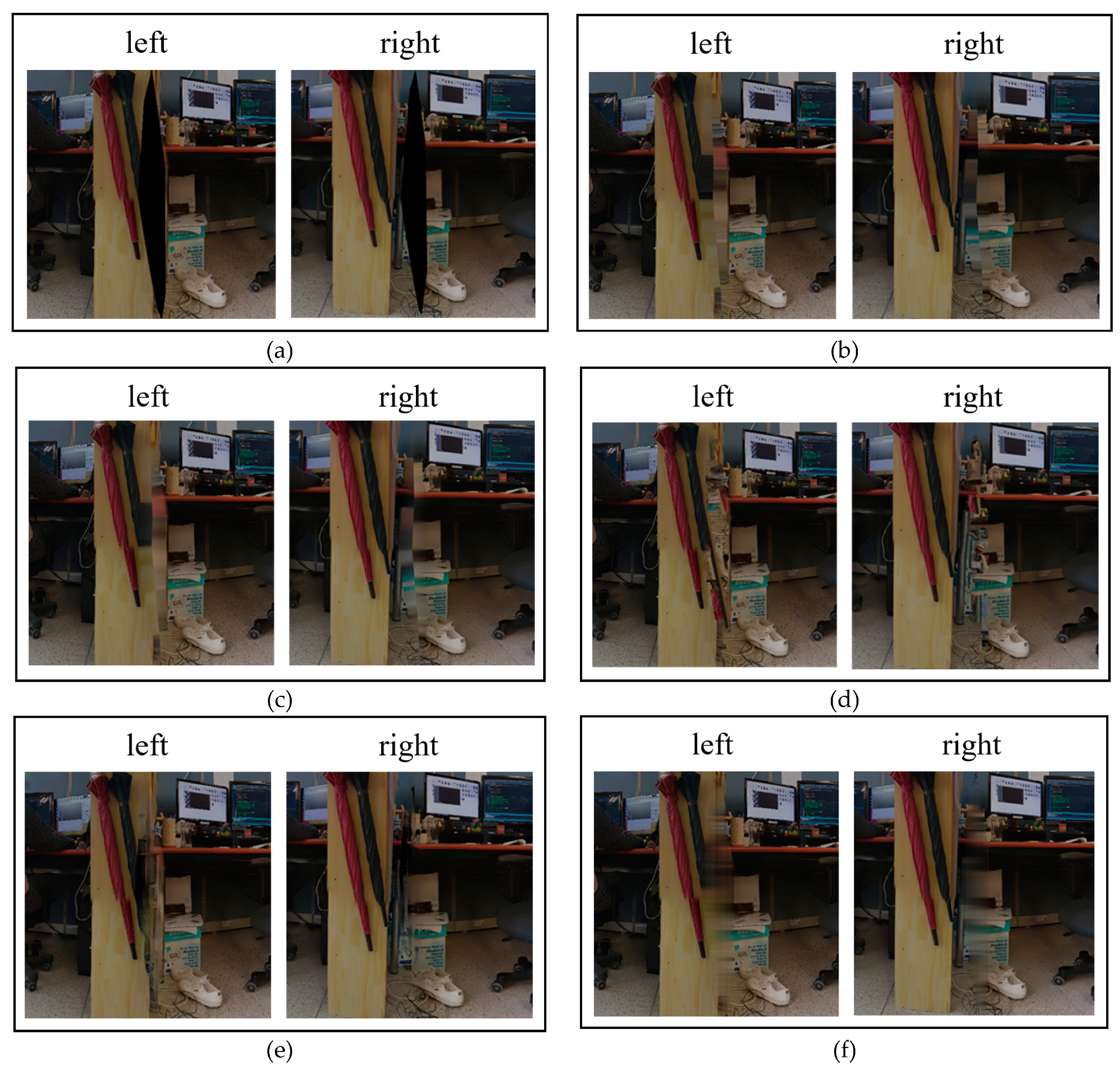

4.1. Subjective Performance

4.2. Objective Performance

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Zhang, K.; Liu, S.J. The application of virtual reality technology in physical education teaching and training. In Proceedings of the 2016 IEEE International Conference on Service Operations and Logistics, and Informatics (SOLI), Beijing, China, 10–12 July 2016; pp. 245–248.

2. Szeliski, R. Image Alignment and Stitching: A Tutorial. Foundations and Trends in Computer Graphics and Computer Vision; Now Publishers: Delft, The Netherlands, 2006; Volume 2, p. 120.

3. Kim, B.S.; Lee, S.H.; Cho, N.I. Real-time panorama canvas of natural images. IEEE Trans. Consum. Electron. 2011, 57, 1961–1968.

4. Xiong, Y.; Pulli, K. Fast panorama stitching for high-quality panoramic images on mobile phones. IEEE Trans. Consum. Electron. 2010, 56, 298–306.

5. Brown, M.; Lowe, D.G. Automatic panoramic image stitching using invariant features. Int. J. Comput. Vis. 2007, 74, 59–73.

6. Viswanathan, D.G. Features from accelerated segment test (FAST). In Proceedings of the 10th Workshop on Image Analysis for Multimedia Interactive Services, London, UK, 6–8 May 2009; pp. 6–8.

7. Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

8. Bay, H.; Tuytelaars, T.; Van Gool, L. SURF: Speeded up robust features. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2006; pp. 404–417.

9. Calonder, M. BRIEF: Binary robust independent elementary features. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2010; pp. 778–792.

10. Leutenegger, S.; Chli, M.; Siegwart, R.Y. BRISK: Binary robust invariant scalable keypoints. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2548–2555.

11. Rublee, E. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

12. Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

13. Triggs, B.; McLauchlan, P.F.; Hartley, R.I.; Fitzgibbon, A.W. Bundle adjustment: A modern synthesis. In International Workshop on Vision Algorithms; Springer: Berlin/Heidelberg, Germany, 1999; pp. 298–372.

14. Livatino, S. Stereoscopic visualization and 3-D technologies in medical endoscopic teleoperation. IEEE Trans. Ind. Electron. 2014, 62, 525–535.

15. Kramida, G. Resolving the vergence-accommodation conflict in head-mounted displays. IEEE Trans. Vis. Comput. Graph. 2015, 22, 1912–1931.

16. Bertalmio, M.; Bertozzi, A.L.; Sapiro, G. Navier-Stokes, fluid dynamics, and image and video inpainting. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2001), Kauai, HI, USA, 8–14 December 2001; p. I-I.

17. Telea, A. An image inpainting technique based on the fast marching method. J. Graph. Tools 2004, 9, 23–34.

18. Criminisi, A.; Pérez, P.; Toyama, K. Region filling and object removal by exemplar-based image inpainting. IEEE Trans. Image Process. 2004, 13, 1200–1212.

19. Liu, G. Image inpainting for irregular holes using partial convolutions. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 85–100.

20. Elango, P. Digital image inpainting using cellular neural network. Int. J. Open Probl. Compt. Math. 2009, 2, 439–450.

21. Yan, Z. Shift-Net: Image inpainting via deep feature rearrangement. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 1–17.

22. Wu, X. A light CNN for deep face representation with noisy labels. IEEE Trans. Inform. Forensics Secur. 2018, 13, 2884–2896.

23. Zhang, S.; He, R.; Tan, T. DeMeshNet: Blind face inpainting for deep MeshFace verification. IEEE Trans. Inform. Forensics Secur. 2017, 13, 637–647.

24. Zhang, K. Learning deep CNN denoiser prior for image restoration. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3929–3938.

25. Arbeláez, P.; Maire, M.; Fowlkes, C.; Malik, J. Contour detection and hierarchical image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 898–916.

26. Lourakis, M.I. A brief description of the Levenberg-Marquardt algorithm implemented by levmar. Found. Res. Technol. 2005, 4, 1–6.

27. Ye, Y. Algorithm descriptions of projection format conversion and video quality metrics in 360Lib Version 9. In Proceedings of the Joint Video Exploration Team (JVET) of ITU-T SG 16 WP 3 and ISO/IEC JTC 1/SC 29/WG 11 13th Meeting, Marrakech, Morocco, 9–18 January 2019.

28. Slabaugh, G.G. Computing Euler angles from a rotation matrix. Retriev. August 1999, 6, 39–63.

29. Guillemot, C.; le Meur, O. Image Inpainting: Overview and Recent Advances. IEEE Signal Process. Mag. 2014, 31, 127–144.

30. Lu, H.; Zhang, Y.; Li, Y.; Zhou, Q.; Tadoh, R.; Uemura, T.; Kim, H.; Serika, S. Depth Map Reconstruction for Underwater Kinect Camera Using Inpainting and Local Image Mode Filtering. IEEE Access 2017, 5, 7115–7122.

31. Buyssens, P.; le Meur, O.; Daisy, M.; Tschumperlé, D.; Lézoray, O. Depth-Guided Disocclusion Inpainting of Synthesized RGB-D Images. IEEE Trans. Image Process. 2019, 26, 525–538.

32. Serrano, A.; Kim, I.; Chen, Z.; DiVerdi, S.; Gutierrez, D.; Hertzmann, A.; Masia, B. Motion parallax for 360° RGBD video. IEEE Trans. Vis. Comput. Graph. 2019, 25, 1817–1827.

33. GiliSoft Video Watermark Removal Tool. Available online: http://www.gilisoft.com/product-video-watermark-removal-tool.htm (accessed on 8 February 2021).

34. Theinpaint. Available online: https://theinpaint.com/download (accessed on 8 February 2021).

35. Wang, Z. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

36. Wang, Z.; Bovik, A.C. A universal image quality index. IEEE Signal Process. Lett. 2002, 9, 81–84.

| Method | SSIM | PSNR (dB) | Time (s) |
|---|---:|---:|---:|
| Bertalmio [16] | 0.6483 | 19.6982 | 12.9177 |
| Telea [17] | 0.6320 | 16.5155 | 13.1796 |
| Criminisi [18] | 0.4406 | 17.0804 | 30346 |
| Liu [19] | 0.2381 | 17.3725 | N/A |
| Gilisoft [33] | 0.7427 | 24.6347 | 2.1600 |
| Theinpaint [34] | 0.7701 | 25.0058 | 28.1900 |
| Simple copy | 0.6196 | 21.1793 | 0.0160 |
| Proposed algorithm | 0.7954 | 25.4479 | 7.3261 |

| Width Ratio | SSIM | PSNR (dB) | Time (s) |
|---:|---:|---:|---:|
| 1.0 | 0.7491 | 24.1525 | 5.4439 |
| 1.2 | 0.7585 | 24.4355 | 6.3663 |
| 1.5 | 0.7954 | 25.4479 | 7.3261 |
| 1.75 | 0.7614 | 24.6553 | 8.0263 |
| 2.0 | 0.5986 | 19.8681 | 8.8201 |
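The PSNR (dB) columns in these tables follow the usual definition PSNR = 10 log10(MAX² / MSE), where MSE is the mean squared error between the reference and the concealed image. A minimal sketch, assuming 8-bit images (MAX = 255) compared over the whole frame; this section does not state whether the authors measured the full image or only the concealed region, so the function name `psnr` and its defaults are illustrative assumptions.

```python
import numpy as np


def psnr(reference, test, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape."""
    # Cast to float first so uint8 subtraction cannot wrap around.
    ref = np.asarray(reference, dtype=np.float64)
    tst = np.asarray(test, dtype=np.float64)
    if ref.shape != tst.shape:
        raise ValueError("images must have the same shape")
    mse = np.mean((ref - tst) ** 2)
    if mse == 0:
        return float("inf")  # identical images: PSNR is unbounded
    return float(10.0 * np.log10(max_value ** 2 / mse))


ref = np.zeros((4, 4), dtype=np.uint8)
noisy = np.full((4, 4), 16, dtype=np.uint8)
print(round(psnr(ref, noisy), 2))
```

In this toy example every pixel differs by 16, so MSE = 256 and the printed value is about 24.05 dB. Because PSNR is logarithmic, a gap such as the 0.4421 dB between the proposed algorithm (25.4479 dB) and Theinpaint (25.0058 dB) in the first table corresponds to roughly a 10% lower MSE.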

| Method | SSIM | PSNR (dB) | Time (s) |
|---|---:|---:|---:|
| Bertalmio [16] | 0.7153 | 18.6928 | 15.7136 |
| Telea [17] | 0.7343 | 18.0315 | 16.3712 |
| Criminisi [18] | 0.5691 | 18.6113 | 62791 |
| Liu [19] | 0.3511 | 16.1611 | N/A |
| Gilisoft [33] | 0.7487 | 23.5176 | 0.5500 |
| Theinpaint [34] | 0.7585 | 22.5666 | 6.3600 |
| Simple copy | 0.6544 | 16.9261 | 0.2273 |
| Proposed algorithm | 0.7721 | 23.8020 | 8.8516 |

| Width Ratio | SSIM | PSNR (dB) | Time (s) |
|---:|---:|---:|---:|
| 1.0 | 0.6031 | 15.8433 | 6.7485 |
| 1.2 | 0.6476 | 18.3133 | 7.6910 |
| 1.5 | 0.7721 | 23.8020 | 8.8516 |
| 1.75 | 0.6505 | 17.6263 | 9.3063 |
| 2.0 | 0.6656 | 18.7562 | 9.9974 |
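The SSIM columns refer to the structural similarity index of Wang et al. [35], which combines luminance, contrast, and structure comparisons. The sketch below evaluates the SSIM formula once over the entire image (a single global window) with the customary constants C1 = (0.01·MAX)² and C2 = (0.03·MAX)². This is a deliberate simplification: the reference SSIM averages the index over local windows (typically 11×11 Gaussian-weighted), so values from this sketch will not reproduce the tables. The name `global_ssim` is hypothetical.

```python
import numpy as np


def global_ssim(x, y, max_value=255.0, k1=0.01, k2=0.03):
    """SSIM evaluated over the whole image as one window.

    Simplification of the locally windowed SSIM of Wang et al.;
    useful for seeing the formula, not for matching published numbers.
    """
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    if x.shape != y.shape:
        raise ValueError("images must have the same shape")
    c1 = (k1 * max_value) ** 2  # stabilizes the luminance term
    c2 = (k2 * max_value) ** 2  # stabilizes the contrast/structure term
    mu_x, mu_y = x.mean(), y.mean()
    var_x = np.mean((x - mu_x) ** 2)
    var_y = np.mean((y - mu_y) ** 2)
    cov_xy = np.mean((x - mu_x) * (y - mu_y))
    num = (2.0 * mu_x * mu_y + c1) * (2.0 * cov_xy + c2)
    den = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(num / den)


img = np.arange(64, dtype=np.float64).reshape(8, 8) * 4.0
print(global_ssim(img, img))          # identical images
print(global_ssim(img, img + 10.0))   # brightness shift only
print(global_ssim(img, 255.0 - img))  # inverted structure
```

A pure brightness shift lowers only the luminance factor, while inverting the image drives the covariance negative and pushes the index below zero. For numbers closer to the standard windowed definition, scikit-image provides `skimage.metrics.structural_similarity`.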

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Cha, S.; Nam, D.-Y.; Han, J.-K. Hole Concealment Algorithm Using Camera Parameters in Stereo 360 Virtual Reality System. Appl. Sci. 2021, 11, 2033. https://doi.org/10.3390/app11052033

Cha S, Nam D-Y, Han J-K. Hole Concealment Algorithm Using Camera Parameters in Stereo 360 Virtual Reality System. Applied Sciences. 2021; 11(5):2033. https://doi.org/10.3390/app11052033

Chicago/Turabian Style: Cha, Sangguk, Da-Yoon Nam, and Jong-Ki Han. 2021. "Hole Concealment Algorithm Using Camera Parameters in Stereo 360 Virtual Reality System." Applied Sciences 11, no. 5: 2033. https://doi.org/10.3390/app11052033

APA Style: Cha, S., Nam, D.-Y., & Han, J.-K. (2021). Hole Concealment Algorithm Using Camera Parameters in Stereo 360 Virtual Reality System. Applied Sciences, 11(5), 2033. https://doi.org/10.3390/app11052033