HASPO: Harmony Search-Based Parameter Optimization for Just-in-Time Software Defect Prediction in Maritime Software

Abstract

1. Introduction

- RQ1: How much can HS-based parameter optimization improve SDP performance in maritime domain software?

- RQ2: By how much can the HS-based parameter optimization method reduce cost?

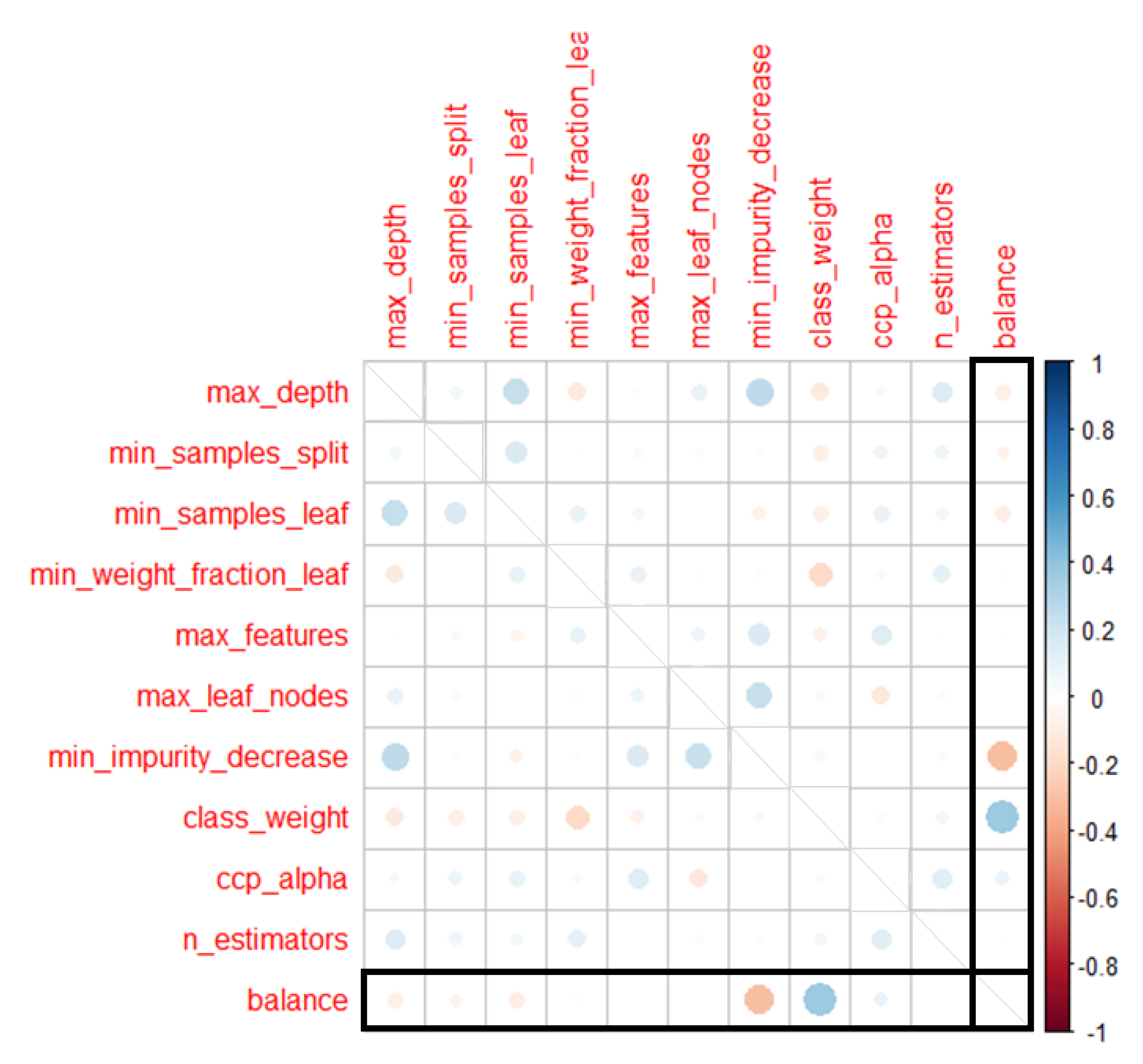

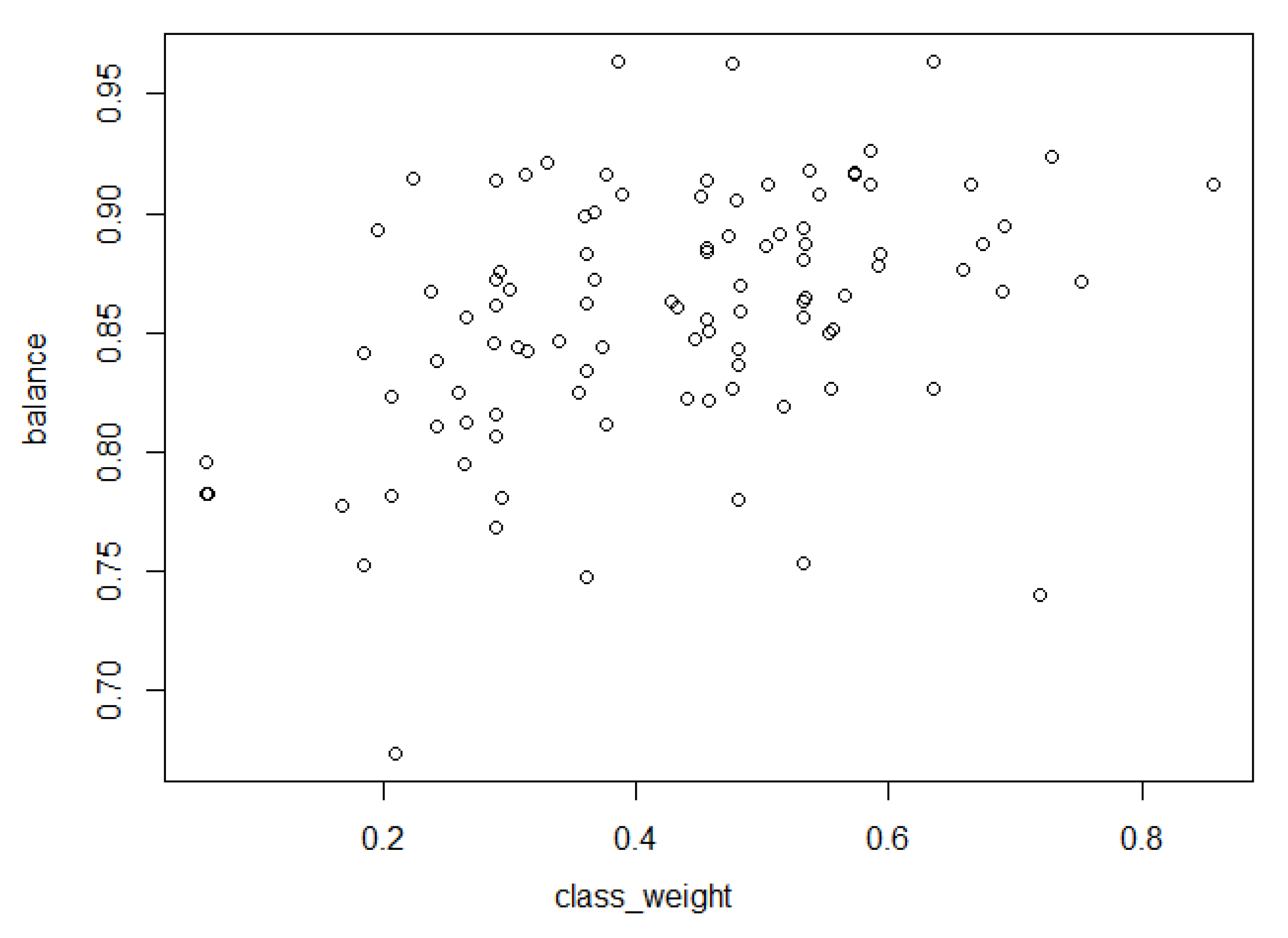

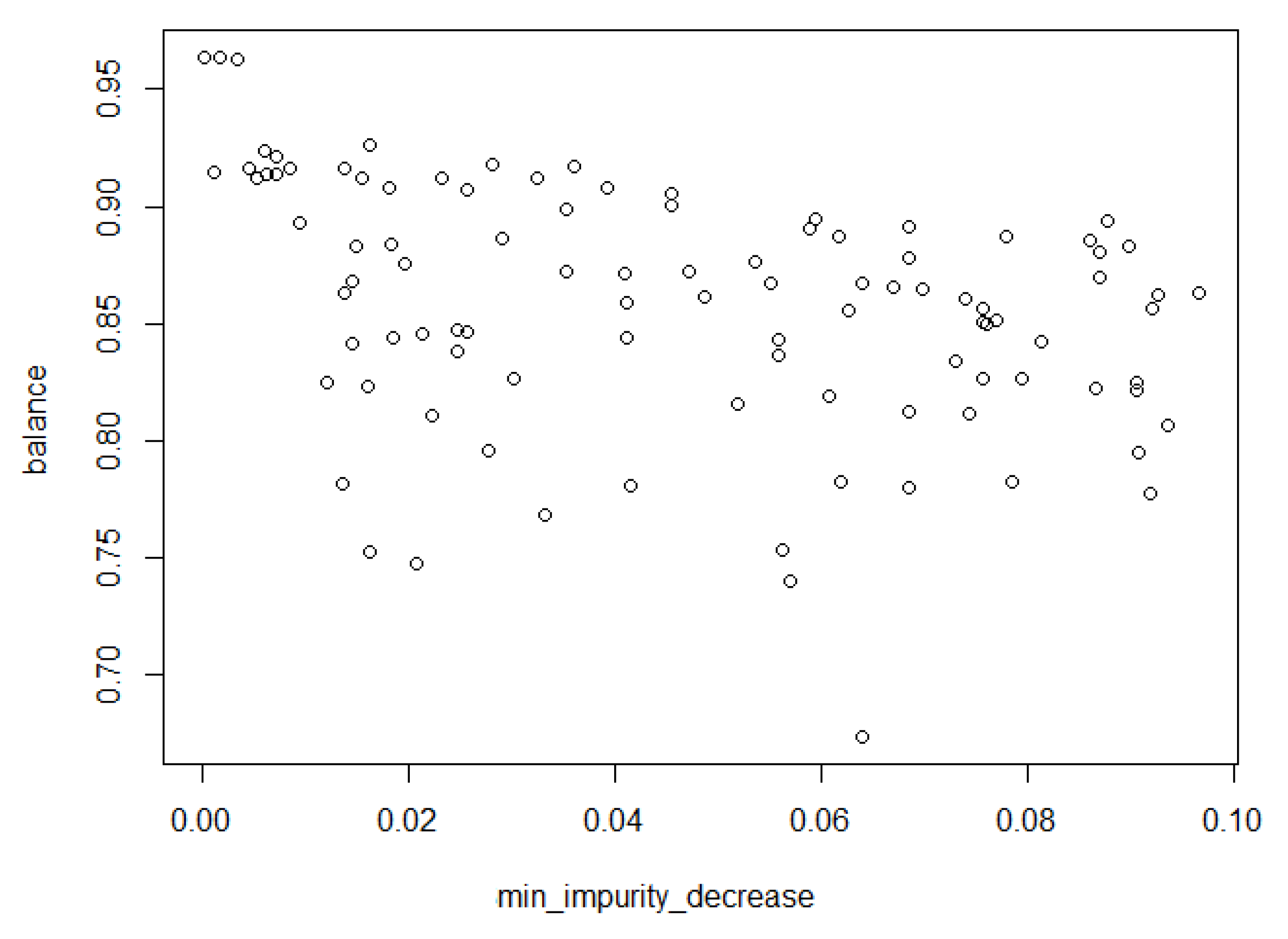

- RQ3: Which parameters are important for improving SDP performance?

- RQ4: How does the proposed technique perform in open source software (OSS)?

- To the best of our knowledge, we are the first to apply HS-based parameter optimization to JIT-SDP and to the maritime domain.

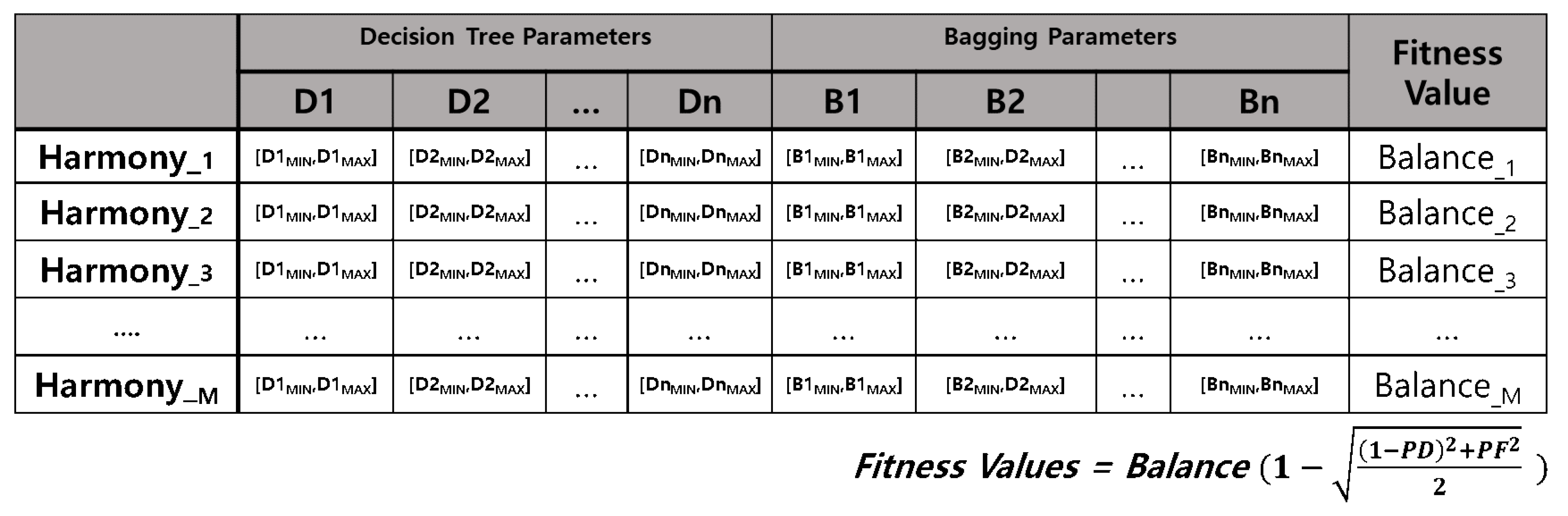

- We are the first to apply HS-based parameter optimization in JIT-SDP with a search space that covers both the decision tree and the bagging classifier, together with a balance-based fitness function.

2. Background

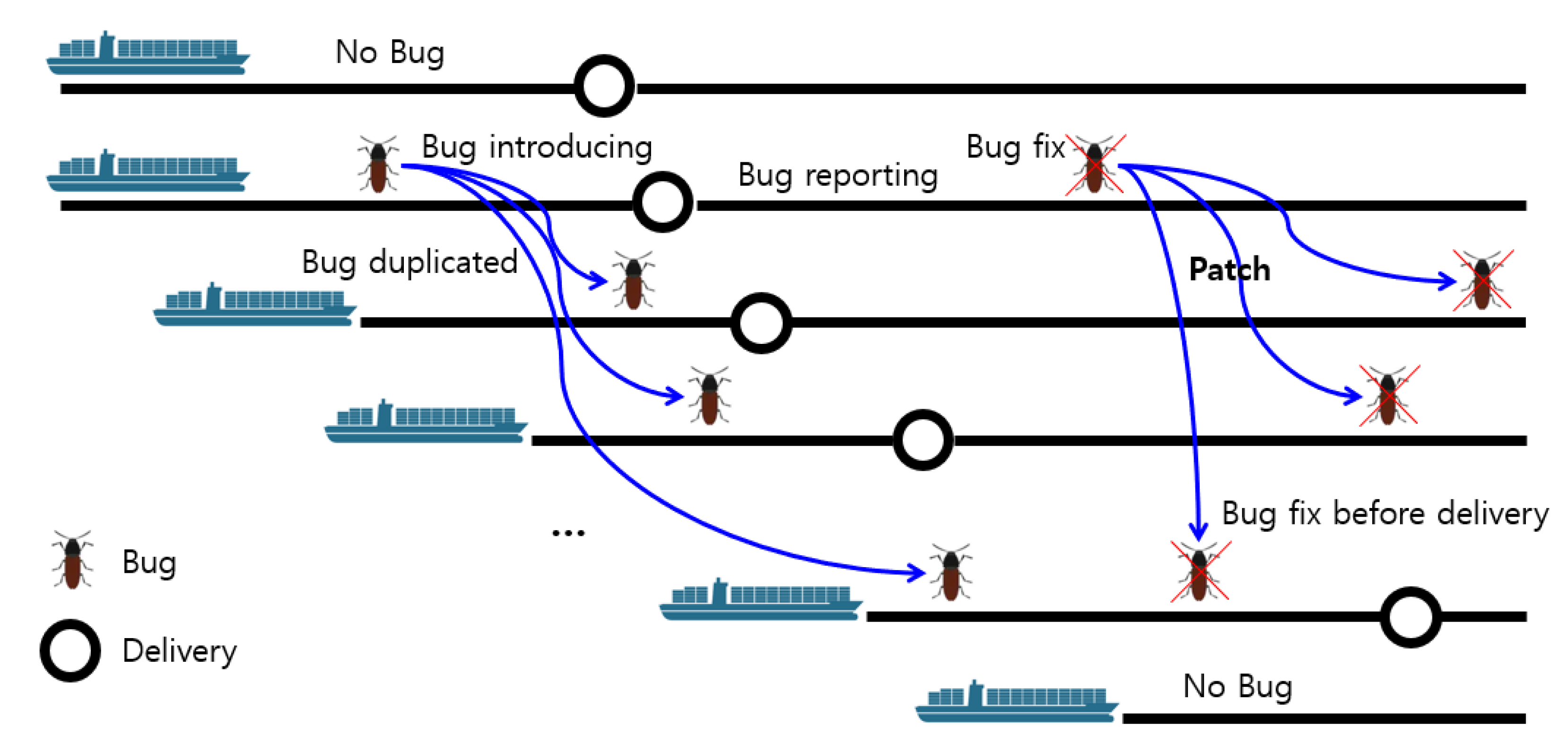

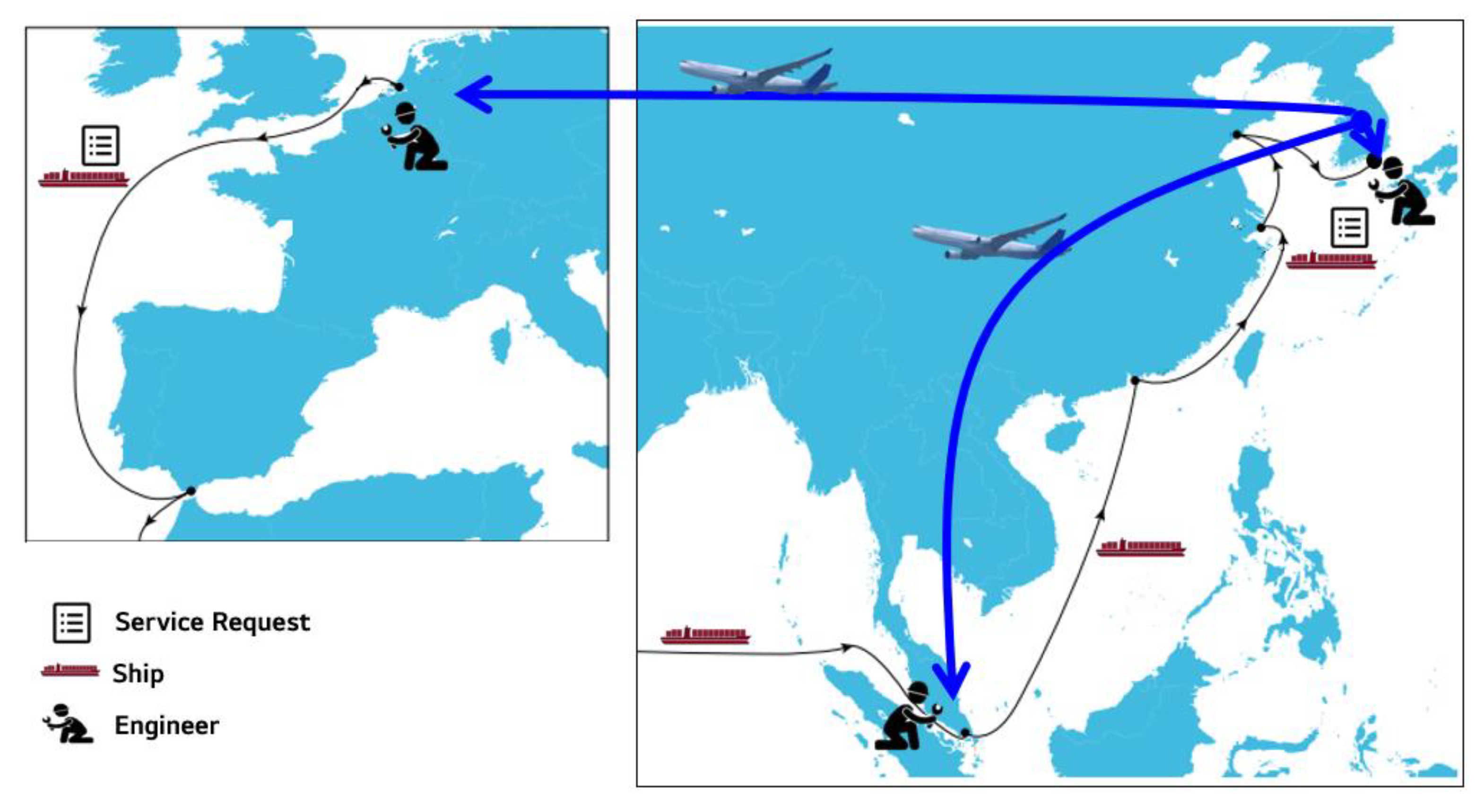

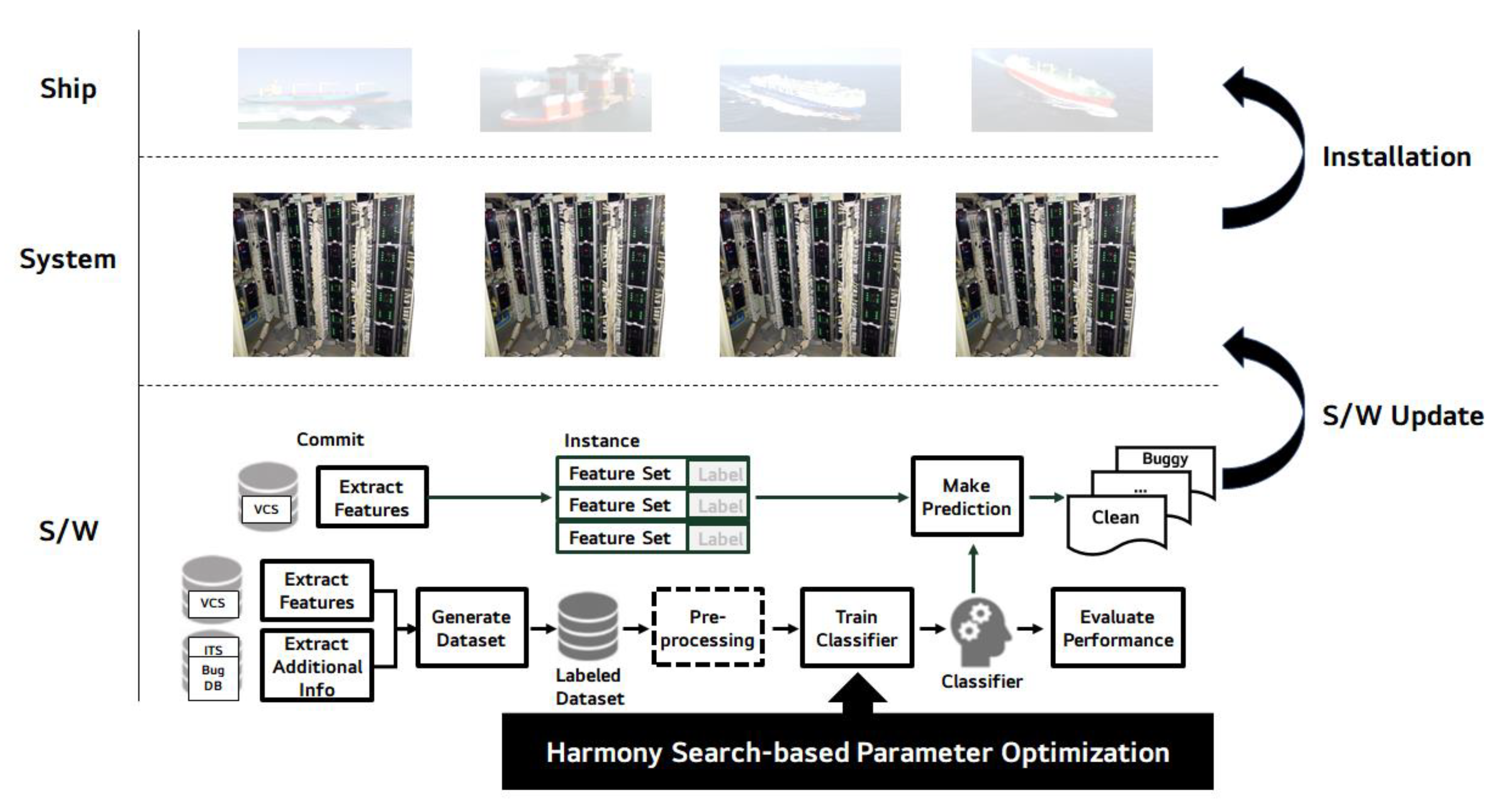

2.1. Software Practices and Unique Characteristics in the Maritime Domain

- In general, ships are made to order and built in series without prototypes.

- Ships operate around the world, so they are hard to access for defect removal activities.

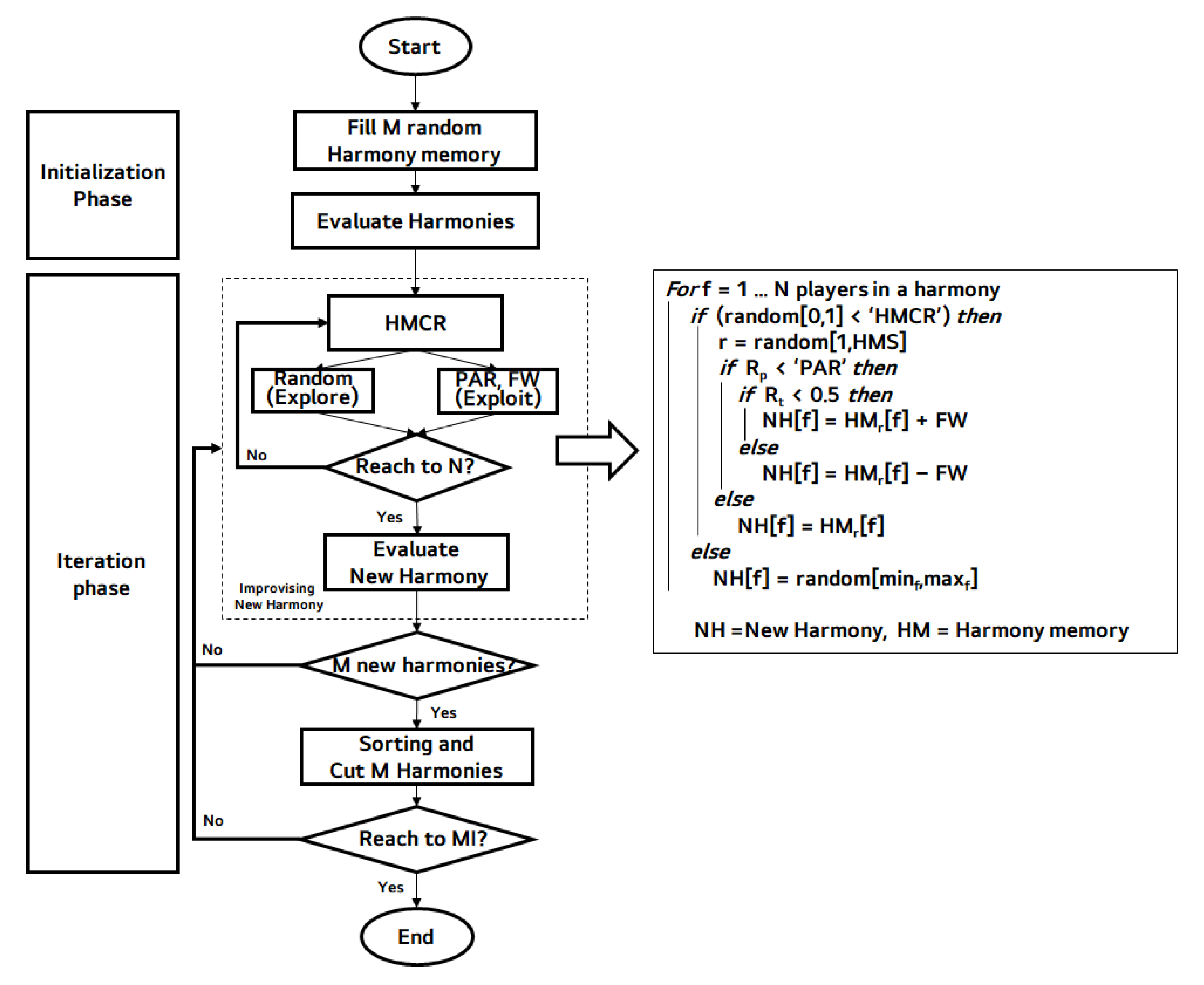

2.2. Harmony Search

- (1) Initialization phase: the HS process starts by generating HMS random harmonies and evaluating the performance of each generated harmony.

- (2) Iteration phase:

  - (A) The iteration phase starts by improvising a new harmony. Each instrument player decides on a note considering HMCR, PAR, and FW. The pseudocode for these functions is shown on the right side of Figure 3. Finally, this step evaluates the newly improvised harmony.

  - (B) HSA generates M new harmonies and updates the harmony memory with any new solution that is superior to the worst solution within the HM.

  - Steps A and B repeat until the number of iterations reaches MI.
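The two phases above can be sketched in a few lines of Python. This is a minimal illustration for real-valued variables, not the implementation used in the article: the function name `harmony_search`, the default argument values, and the choice to scale FW by each variable's range are assumptions made for this sketch.

```python
import random


def harmony_search(fitness, bounds, hms=10, hmcr=0.75, par=0.5, fw=0.25,
                   max_improvisations=200, seed=0):
    """Maximize `fitness` over real-valued variables constrained by `bounds`."""
    rng = random.Random(seed)

    def random_note(i):
        low, high = bounds[i]
        return rng.uniform(low, high)

    # Initialization phase: fill the harmony memory with random harmonies.
    memory = [[random_note(i) for i in range(len(bounds))] for _ in range(hms)]
    scores = [fitness(h) for h in memory]

    for _ in range(max_improvisations):
        # Step A: improvise a new harmony, one variable ("instrument") at a time.
        new = []
        for i, (low, high) in enumerate(bounds):
            if rng.random() < hmcr:
                # Memory consideration: reuse a note from a stored harmony.
                note = rng.choice(memory)[i]
                if rng.random() < par:
                    # Pitch adjustment within the fret width (assumed to be
                    # a fraction of the variable's range), clipped to bounds.
                    note += rng.uniform(-fw, fw) * (high - low)
                    note = min(max(note, low), high)
            else:
                note = random_note(i)
            new.append(note)

        # Step B: replace the worst stored harmony if the new one is better.
        new_score = fitness(new)
        worst = min(range(hms), key=scores.__getitem__)
        if new_score > scores[worst]:
            memory[worst], scores[worst] = new, new_score

    best = max(range(hms), key=scores.__getitem__)
    return memory[best], scores[best]


# Toy usage: the optimum of this function is at (1, -2).
toy = lambda h: -((h[0] - 1) ** 2 + (h[1] + 2) ** 2)
print(harmony_search(toy, [(-5.0, 5.0), (-5.0, 5.0)]))
```

Because only the worst harmony is ever replaced, the best fitness in the memory never decreases as the number of improvisations grows.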

3. Harmony Search-Based Parameter Optimization (HASPO) Approach

- Data source preparation: sources of datasets, such as version control systems (VCSs), issue tracking systems (ITSs), and bug databases, are managed and maintained by software archive tools. These tools automate and facilitate the subsequent feature extraction and the whole SDP process.

- Feature extraction: this step extracts instances, each containing a set of features and a class label (buggy or clean), from the VCSs. The feature set, also called the prediction metrics, plays a dominant role in the construction of prediction models. In this article, we use 14 change-level metrics [7] as the feature set and a binary class label of either buggy or clean. We use these process metrics because the features and labels can be extracted automatically and quickly, and because they allow a prediction to be made early in a project.

- Dataset generation: a dataset is generated from the instances extracted in the previous step, with one instance created for each change commit. In this stage, practitioners relied on an SZZ tool [27] to extract datasets with the 14 change-level metrics from the VCS. A detailed explanation is given in the original article [14].

- Preprocessing: preprocessing is the step between data extraction and model training. Each preprocessing technique (e.g., standardization, feature selection, elimination of outliers and collinearity, transformation, and sampling) may be applied independently and selectively [28]. For example, over-sampling of the minority class [29] or under-sampling of the majority class can be used to handle a class-imbalanced dataset (i.e., one whose class distribution is uneven).

- Model training with HS-based parameter optimization and performance evaluation: this step is the main contribution of our article. Normally, classifiers are trained on the dataset collected previously, and existing ML techniques have facilitated many SDP approaches [30,31,32,33]. The classifier with the highest performance is selected by evaluating balance, a measure that accounts for class imbalance. A previous case study [14] in the maritime domain showed that the best model was constructed using a random forest classifier. Optimizing the parameters of the predictive model was expected to improve the performance of that existing technique. For this problem, we mainly consider HS-based parameter optimization of a bagging classifier with decision trees as the base classifier. Our approach, search space, and fitness function are described in detail in Section 3.1 and Section 3.2.

- Prediction: the proposed approach builds a prediction model that determines whether or not a software modification is defective. Once a developer makes a new commit, the model labels it as either buggy or clean.
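To make the sampling option in the preprocessing step concrete, the following sketch shows plain random over-sampling of the minority class. It is a simpler stand-in chosen for illustration, not SMOTE [29] (which synthesizes new instances rather than duplicating existing ones) and not necessarily what the article used; the function name `random_oversample` and its arguments are hypothetical.

```python
import random


def random_oversample(X, y, minority=1, seed=0):
    """Duplicate random minority-class rows until both classes are equal in size."""
    rng = random.Random(seed)
    minority_idx = [i for i, label in enumerate(y) if label == minority]
    majority_idx = [i for i, label in enumerate(y) if label != minority]
    # Nothing to do if the minority class is empty or already large enough.
    if not minority_idx or len(minority_idx) >= len(majority_idx):
        return list(X), list(y)
    # Draw the missing number of minority rows with replacement.
    missing = len(majority_idx) - len(minority_idx)
    extra = [rng.choice(minority_idx) for _ in range(missing)]
    keep = list(range(len(y))) + extra
    return [X[i] for i in keep], [y[i] for i in keep]


# Toy usage: 2 buggy (1) and 8 clean (0) commits become 8 and 8.
X = [[i] for i in range(10)]
y = [1, 1] + [0] * 8
X_balanced, y_balanced = random_oversample(X, y)
print(y_balanced.count(1), y_balanced.count(0))
```

Sampling of this kind is normally applied to the training portion only, so that duplicated rows never leak into the data used for evaluation.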

3.1. Optimization Problem Formulation

- (1) random_state in both classifiers is not selected because the training and test datasets were already separated randomly before training.

- (2) min_impurity_split for the decision tree is not selected because it has been deprecated.

- (3) warm_start, n_jobs, and verbose for bagging are not selected because they are not related to prediction performance.
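One way to enforce these exclusions in code is to decode each harmony into two sets of keyword arguments and reject any excluded parameter. The sketch below is an assumption-laden illustration: the `tree__`/`bagging__` key prefixes and the function name `split_harmony` are hypothetical, and the mapping of 0 to `None` follows the "0: Unlimited" convention listed in the parameter tables.

```python
# Parameters that the text excludes from the search; they keep library defaults.
EXCLUDED = {
    "tree": {"random_state", "min_impurity_split"},
    "bagging": {"random_state", "warm_start", "n_jobs", "verbose"},
}


def split_harmony(harmony):
    """Split a flat harmony dict into decision-tree and bagging keyword arguments.

    Keys are assumed to look like "tree__max_depth" or "bagging__n_estimators"
    (a hypothetical naming convention used only for this sketch).
    """
    kwargs = {"tree": {}, "bagging": {}}
    for key, value in harmony.items():
        group, _, name = key.partition("__")
        if group not in kwargs:
            raise KeyError(f"unknown parameter group in {key!r}")
        if name in EXCLUDED[group]:
            raise ValueError(f"{name} is excluded from the search space")
        # The parameter tables encode "unlimited" as 0 for these two settings.
        if group == "tree" and name in {"max_depth", "max_leaf_nodes"} and value == 0:
            value = None
        kwargs[group][name] = value
    return kwargs["tree"], kwargs["bagging"]


tree_kwargs, bagging_kwargs = split_harmony({
    "tree__criterion": "entropy",
    "tree__max_depth": 0,
    "bagging__n_estimators": 50,
})
print(tree_kwargs, bagging_kwargs)
```

The resulting dictionaries could then be passed as keyword arguments to scikit-learn's `DecisionTreeClassifier` and `BaggingClassifier` constructors.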

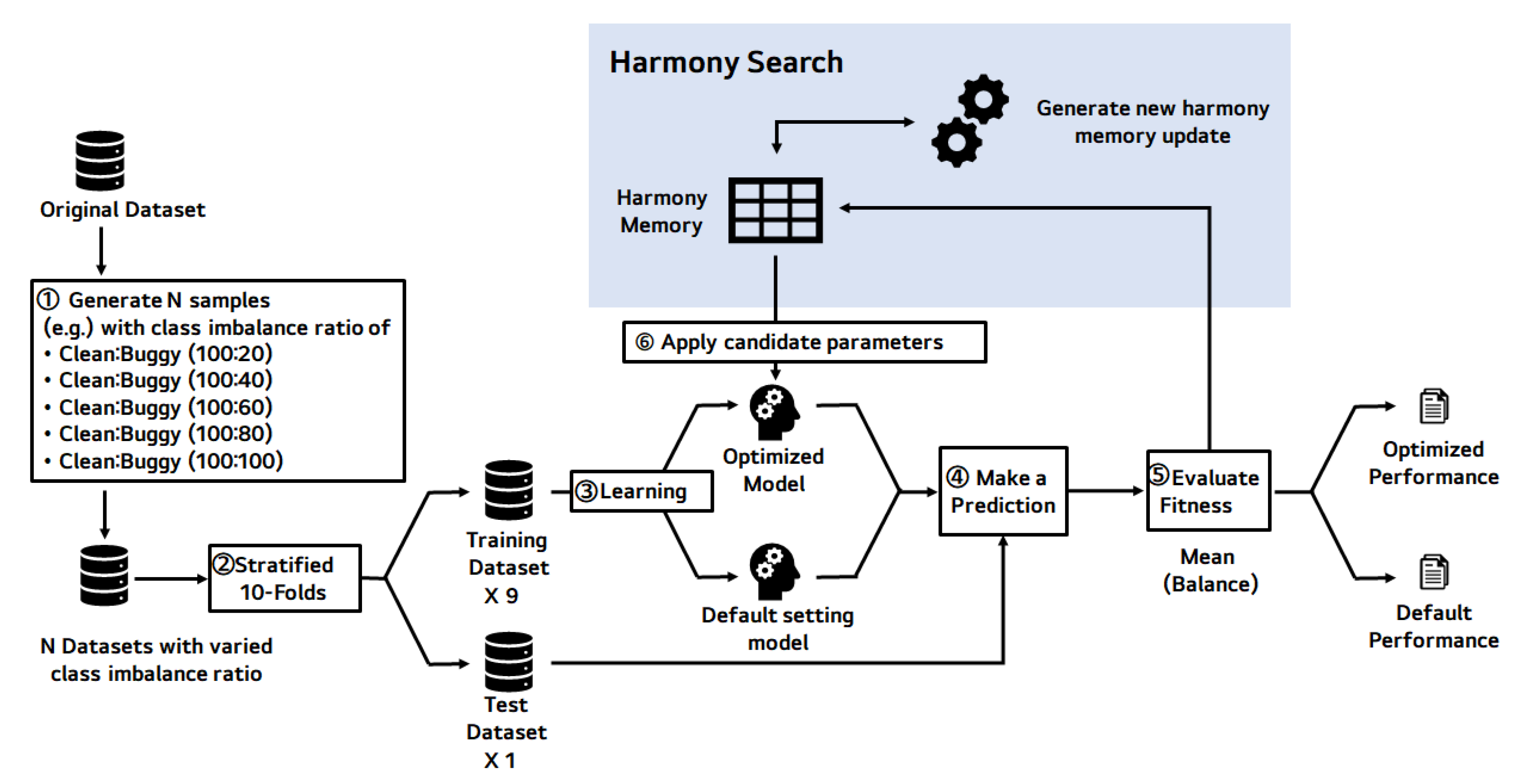

3.2. Harmony Search-Based Parameter Optimization of JIT-SDP

- In step 1, N datasets (five in this article) with different class imbalance ratios are generated. The ratio between defective and non-defective instances is varied over N levels to show that this approach can be applied to various data distributions.

- In step 2, each of the N datasets is divided into ten folds for stratified 10-fold cross-validation, so that each round uses nine folds for training and one for testing. Stratification maintains the class distribution of the dataset so that each fold has the same class distribution as the original dataset [36].

- Step 3 builds a prediction model from a training set using one set of parameters, i.e., one harmony in the harmony memory. Because there are 10 pairs of training and test sets, nine more prediction models are built with the same set of parameters.

- In step 4, each of the 10 generated models predicts the outcomes of its matching test dataset, and the confusion matrix is extracted to calculate each model’s balance.

- Step 5 calculates the mean of the 10 balance values and stores it in the harmony memory as the harmony’s fitness value.

- Finally, the approach updates the harmony memory, applies the harmony to the prediction model, and iterates MI times.
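Steps 2 to 5 amount to a fitness function that scores one harmony by its mean balance over stratified folds. The sketch below is a dependency-free illustration under stated assumptions: `fit_predict` is a hypothetical callable that trains a model with the harmony's parameters and returns predictions, the fold assignment is a simple round-robin per class, and balance is computed in the commonly used normalized form 1 − d/√2, where d is the distance from (PD, PF) to the ideal point.

```python
import math
import random
from collections import defaultdict


def stratified_folds(labels, k=10, seed=0):
    """Assign each sample index to one of k folds, preserving class ratios."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        for position, index in enumerate(indices):
            folds[position % k].append(index)
    return folds


def balance(y_true, y_pred):
    """Balance from PD and PF; label 1 marks buggy, label 0 marks clean."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    pd = tp / (tp + fn) if tp + fn else 0.0
    pf = fp / (fp + tn) if fp + tn else 0.0
    return 1 - math.sqrt((0 - pf) ** 2 + (1 - pd) ** 2) / math.sqrt(2)


def harmony_fitness(X, y, fit_predict, k=10):
    """Mean balance over k stratified folds for one harmony."""
    scores = []
    for test_idx in stratified_folds(y, k):
        held_out = set(test_idx)
        train_idx = [i for i in range(len(y)) if i not in held_out]
        predictions = fit_predict([X[i] for i in train_idx],
                                  [y[i] for i in train_idx],
                                  [X[i] for i in test_idx])
        scores.append(balance([y[i] for i in test_idx], predictions))
    return sum(scores) / k


# Toy usage: a stand-in "model" that always predicts clean.
y = [1] * 20 + [0] * 80
X = [[label] for label in y]
print(harmony_fitness(X, y, lambda X_tr, y_tr, X_te: [0] * len(X_te)))
```

In a full pipeline, the value returned by `harmony_fitness` would be the number stored alongside the harmony in the harmony memory.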

4. Experimental Setup

4.1. Research Questions

- RQ1: How much can HS-based parameter optimization improve SDP performance in maritime domain software?

- RQ2: By how much can the HS-based parameter optimization method reduce cost?

- RQ3: Which parameters are important for improving SDP performance?

- RQ4: How does the proposed technique perform in Open Source Software (OSS)?

4.2. Dataset Description

4.3. Performance Metrics

- Probability of detection (PD) is defined as the percentage of defective modules that are classified correctly. PD is defined as follows: PD = TP/(TP + FN)

- Probability of false alarm (PF) is defined as the proportion of non-defective modules that are misclassified within the non-defect class. PF is defined as follows: PF = FP/(FP + TN)

- Balance (B) is based on the Euclidean distance between the actual (PD, PF) point and the ideal point (PD = 1, PF = 0), normalized so that a higher value indicates a better balance of performance between the buggy and clean classes. Balance is defined as follows: $B = 1 - \frac{\sqrt{(0 - PF)^2 + (1 - PD)^2}}{\sqrt{2}}$

- The commit inspection reduction (CIR) is defined as the ratio of lines of code that no longer need to be inspected when using the proposed model, compared with a random selection that achieves the same predicted defectiveness (PrD): CIR = (PrD − CI)/PrD, where CI and PrD are defined below.

- The commit inspection (CI) ratio is defined as the ratio of the lines of code to inspect to the total lines of code for the reported defects. The lines of code (LOC) in the commits that were true positives are denoted TPLOC, and similarly TNLOC, FPLOC, and FNLOC. CI is defined as follows: CI = (TPLOC + FPLOC)/(TPLOC + TNLOC + FPLOC + FNLOC)

- The predicted defectiveness (PrD) ratio is defined as the ratio of the number of defects in the commits predicted as defective to the total number of defects. The number of defects in the commits that were true positives is denoted TPD, and similarly TND, FPD, and FND. PrD is defined as follows: PrD = TPD/(TPD + FND)
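The metric definitions above translate directly into code. The sketch below is illustrative only: the function names and the input numbers are made up for the example and are not results from the study, and balance uses the commonly used normalized form 1 − d/√2, which is an assumption of this sketch.

```python
import math


def classification_metrics(tp, fn, fp, tn):
    """Return (PD, PF, B) from confusion-matrix counts."""
    pd = tp / (tp + fn)
    pf = fp / (fp + tn)
    b = 1 - math.sqrt((0 - pf) ** 2 + (1 - pd) ** 2) / math.sqrt(2)
    return pd, pf, b


def cost_metrics(tp_loc, tn_loc, fp_loc, fn_loc, tp_d, fn_d):
    """Return (CI, PrD, CIR) from per-outcome LOC totals and defect counts."""
    ci = (tp_loc + fp_loc) / (tp_loc + tn_loc + fp_loc + fn_loc)
    prd = tp_d / (tp_d + fn_d)
    cir = (prd - ci) / prd
    return ci, prd, cir


# Illustrative numbers only (not taken from the article's datasets).
pd, pf, b = classification_metrics(tp=40, fn=10, fp=20, tn=180)
ci, prd, cir = cost_metrics(tp_loc=3000, tn_loc=5000, fp_loc=1000,
                            fn_loc=1000, tp_d=40, fn_d=10)
print(round(pd, 3), round(pf, 3), round(b, 3))
print(round(ci, 3), round(prd, 3), round(cir, 3))
```

With these inputs, inspecting 40% of the lines of code finds 80% of the defects, so CIR evaluates to 0.5 under the formula given in the text.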

4.4. Classification Algorithms

5. Experimental Results

5.1. RQ1: How Much Can Harmony Search (HS)-Based Parameter Optimization Improve Software Defect Prediction (SDP) Performance in Maritime Domain?

5.2. RQ2: By How Much Can HS-Based Parameter Optimization Method Reduce Cost?

5.3. RQ3: Which Parameters Are Important for Improving SDP Performance?

5.4. RQ4: How Does the Proposed Technique Perform in Open Source Software (OSS)?

6. Discussion

7. Threats to Validity

8. Related Work

9. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Parameters of Decision Tree Classifier

| Parameters | Description | Search Space | Selected |
|---|---|---|---|
| criterion | The function that measures the quality of a split | {“gini”, “entropy”}, default = “gini” | {“gini”, “entropy”} |
| splitter | The strategy used to choose the split at each node | {“best”, “random”}, default = “best” | {“best”, “random”} |
| max_depth | The maximum depth of the tree. If None, nodes are expanded until all leaves are pure or until all leaves contain fewer than min_samples_split samples. | int, default = None | 10~20 |
| min_samples_split | The minimum number of samples required to split an internal node | int or float, default = 2 | 2~10 |
| min_samples_leaf | The minimum number of samples required to be at a leaf node | int or float, default = 1 | 1~10 |
| min_weight_fraction_leaf | The minimum weighted fraction of the sum total of weights required to be at a leaf node | float, default = 0.0 | 0~0.5 |
| max_features | The number of features to consider | int, float, default = None | 1~Max |
| random_state | Controls the randomness of the estimator | default = None | None |
| max_leaf_nodes | Grow a tree with max_leaf_nodes in best-first fashion. If None, the number of leaf nodes is unlimited. | int, default = None | 1~100, 0: Unlimited |
| min_impurity_decrease | A node will be split if this split induces a decrease of the impurity greater than or equal to this value | float, default = 0.0 | 0.0~1.0 |
| min_impurity_split | Threshold for early stopping in tree growth. A node will split if its impurity is above the threshold; otherwise it is a leaf. | float, default = 0 | 0 |
| class_weight | Weights associated with each class | dict, list of dict or “balanced”, default = None | Weight within 0.0~1.0 for each class |
| ccp_alpha | Complexity parameter used for Minimal Cost-Complexity Pruning. No pruning is performed by default. | non-negative float, default = 0.0 | 0.0001~0.001 |

Appendix B. Parameters of Bagging Classifier

| Parameters | Description | Search Space | Selected |
|---|---|---|---|
| base_estimator | The base estimator to fit on random subsets of the dataset. If None, then the base estimator is a decision tree. | object, default = None | default = None |
| n_estimators | The number of base estimators | int, default = 10 | 10~100 |
| max_samples | The number of samples from training set to train each base estimator | int or float, default = 1.0 | 0.0~1.0 |
| max_features | The number of features from training set to train each base estimator | int or float, default = 1.0 | 1~Max |
| bootstrap | Whether samples are drawn with replacement. If False, sampling without replacement is performed. | bool, default = True | 0,1 |
| bootstrap_features | Whether features are selected with replacement or not | bool, default = False | 0,1 |
| oob_score | Whether to use out-of-bag samples to estimate the generalization error. | bool, default = False | 0,1 |
| warm_start | Reuse the solution of the previous call to fit | bool, default = False | False |
| n_jobs | The number of jobs to run in parallel | int, default = None | None |
| random_state | Controls the random resampling of the original dataset | int or RandomState, default = None | None |
| verbose | Controls the verbosity when fitting and predicting | int, default = 0 | 0 |

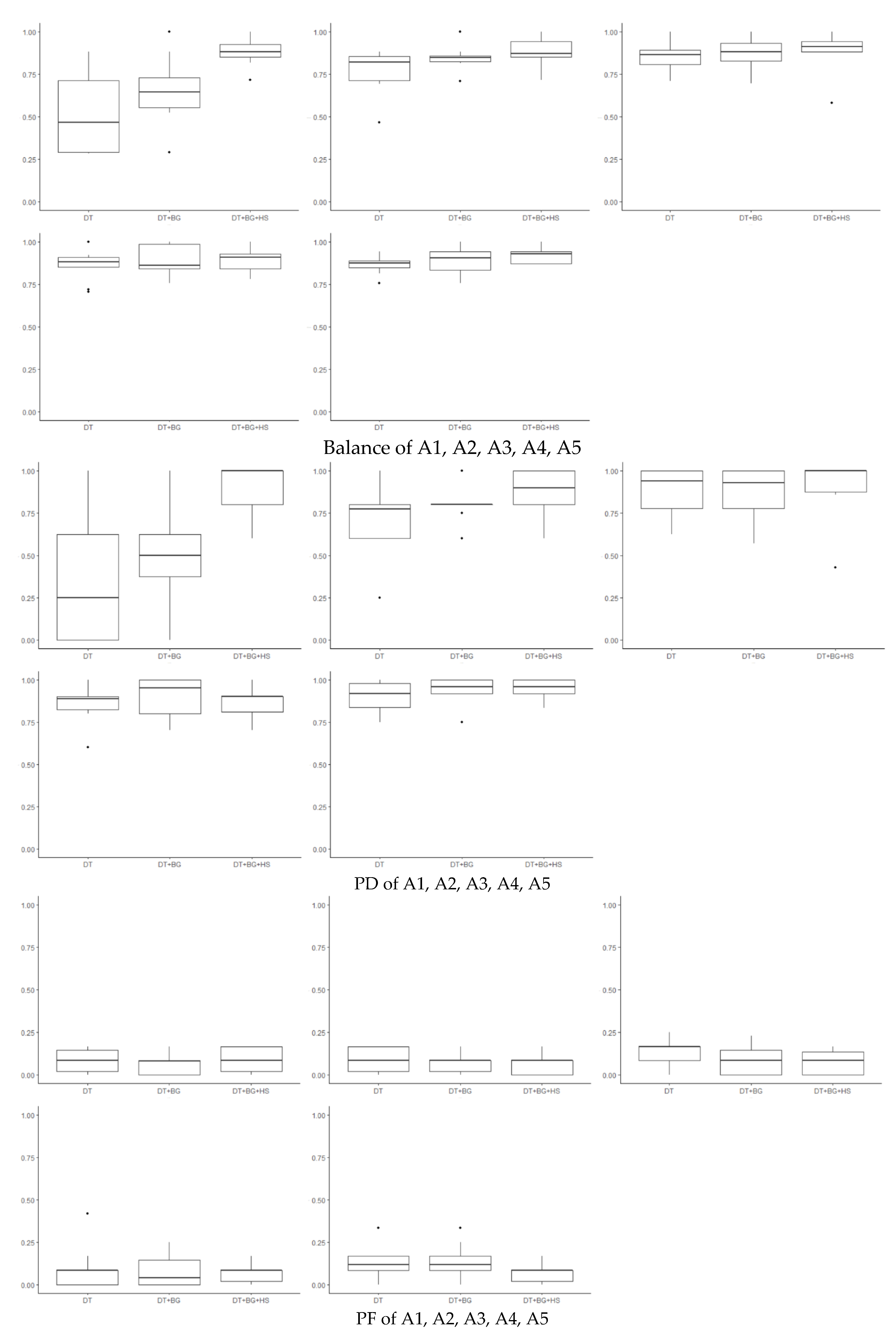

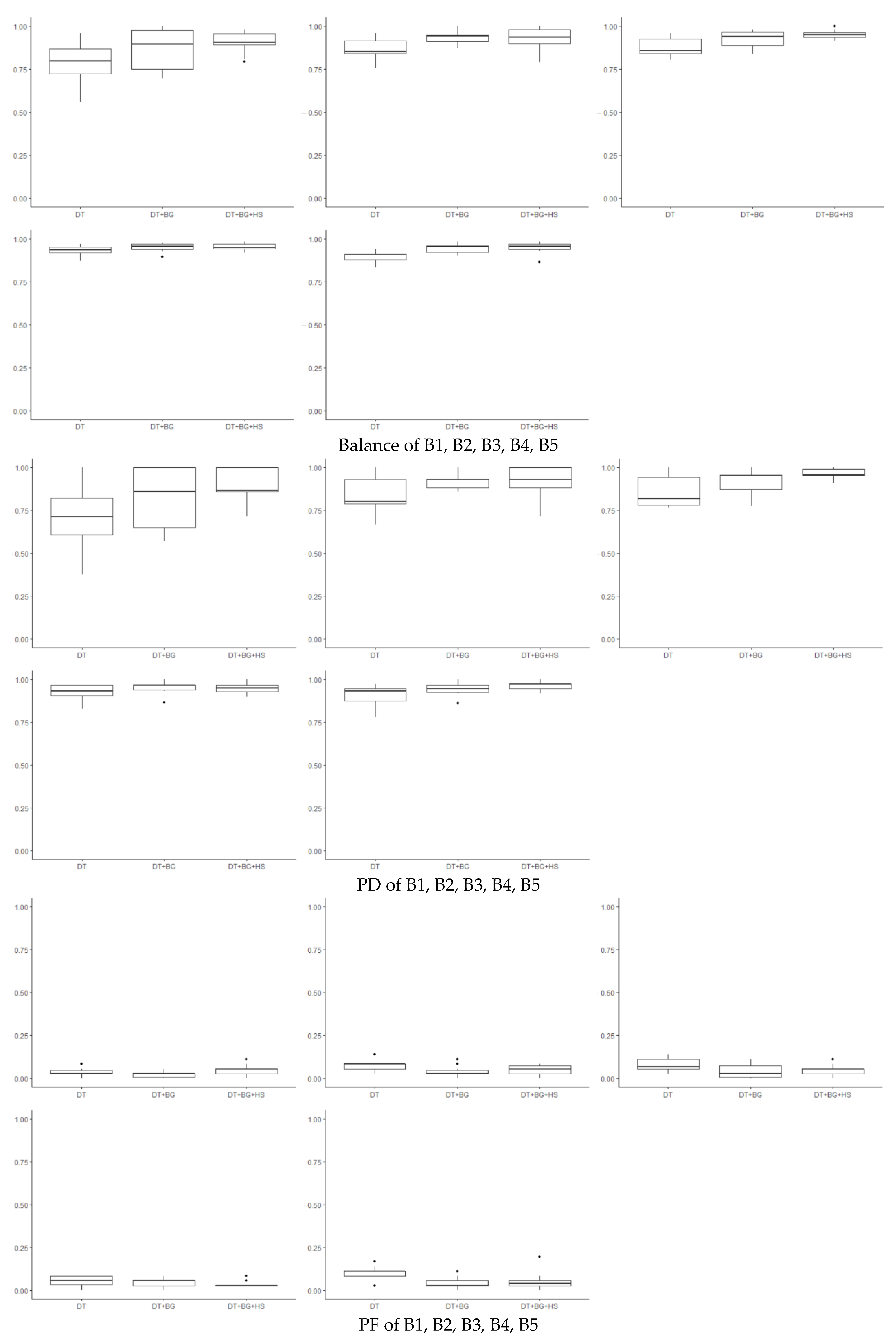

Appendix C. Box Plots of Balance, Probability of Detection (PD), and Probability of False Alarm (PF)

References

- Broy, M. Challenges in automotive software engineering. In Proceedings of the 28th International Conference on Software Engineering, Shanghai, China, 20–28 May 2006; pp. 33–42. [Google Scholar]

- Greenblatt, J.B.; Shaheen, S. Automated vehicles, on-demand mobility, and environmental impacts. Curr. Sustain. Renew. Energy Rep. 2015, 2, 74–81. [Google Scholar] [CrossRef]

- Kretschmann, L.; Burmeister, H.-C.; Jahn, C. Analyzing the economic benefit of unmanned autonomous ships: An exploratory cost-comparison between an autonomous and a conventional bulk carrier. Res. Transp. Bus. Manag. 2017, 25, 76–86. [Google Scholar] [CrossRef]

- Höyhtyä, M.; Huusko, J.; Kiviranta, M.; Solberg, K.; Rokka, J. Connectivity for autonomous ships: Architecture, use cases, and research challenges. In Proceedings of the 2017 International Conference on Information and Communication Technology Convergence (ICTC), Jeju, Korea, 18–20 October 2017; pp. 345–350. [Google Scholar]

- Abdel-Hamid, T.K. The economics of software quality assurance: A simulation-based case study. MIS Q. 1988, 12, 395–411. [Google Scholar] [CrossRef]

- Knight, J.C. Safety critical systems: Challenges and directions. In Proceedings of the 24th International Conference on Software Engineering, Orlando, FL, USA, 25 May 2002; pp. 547–550. [Google Scholar]

- Kamei, Y.; Shihab, E.; Adams, B.; Hassan, A.E.; Mockus, A.; Sinha, A.; Ubayashi, N. A large-scale empirical study of just-in-time quality assurance. IEEE Trans. Softw. Eng. 2012, 39, 757–773. [Google Scholar] [CrossRef]

- Chen, X.; Zhao, Y.; Wang, Q.; Yuan, Z. MULTI: Multi-objective effort-aware just-in-time software defect prediction. Inf. Softw. Technol. 2018, 93, 1–13. [Google Scholar] [CrossRef]

- Yang, X.; Lo, D.; Xia, X.; Zhang, Y.; Sun, J. Deep learning for just-in-time defect prediction. In Proceedings of the 2015 IEEE International Conference on Software Quality, Reliability and Security, Vancouver, BC, Canada, 3–5 August 2015; pp. 17–26. [Google Scholar]

- Jha, S.; Kumar, R.; Son, L.H.; Abdel-Basset, M.; Priyadarshini, I.; Sharma, R.; Long, H.V. Deep learning approach for software maintainability metrics prediction. IEEE Access 2019, 7, 61840–61855. [Google Scholar] [CrossRef]

- Shepperd, M.; Bowes, D.; Hall, T. Researcher bias: The use of machine learning in software defect prediction. IEEE Trans. Softw. Eng. 2014, 40, 603–616. [Google Scholar] [CrossRef]

- Singh, P.D.; Chug, A. Software defect prediction analysis using machine learning algorithms. In Proceedings of the 2017 7th International Conference on Cloud Computing, Data Science & Engineering-Confluence, Noida, India, 12–13 January 2017; pp. 775–781. [Google Scholar]

- Hoang, T.; Dam, H.K.; Kamei, Y.; Lo, D.; Ubayashi, N. DeepJIT: An end-to-end deep learning framework for just-in-time defect prediction. In Proceedings of the 2019 IEEE/ACM 16th International Conference on Mining Software Repositories (MSR), Montreal, QC, Canada, 25–31 May 2019; pp. 34–45. [Google Scholar]

- Kang, J.; Ryu, D.; Baik, J. Predicting just-in-time software defects to reduce post-release quality costs in the maritime industry. Softw. Pract. Exp. 2020. [Google Scholar] [CrossRef]

- Geem, Z.W.; Kim, J.H.; Loganathan, G.V. A new heuristic optimization algorithm: Harmony search. Simulation 2001, 76, 60–68. [Google Scholar] [CrossRef]

- Abualigah, L.; Diabat, A.; Geem, Z.W. A Comprehensive Survey of the Harmony Search Algorithm in Clustering Applications. Appl. Sci. 2020, 10, 3827. [Google Scholar] [CrossRef]

- Manjarres, D.; Landa-Torres, I.; Gil-Lopez, S.; Del Ser, J.; Bilbao, M.N.; Salcedo-Sanz, S.; Geem, Z.W. A survey on applications of the harmony search algorithm. Eng. Appl. Artif. Intell. 2013, 26, 1818–1831. [Google Scholar] [CrossRef]

- Mahdavi, M.; Fesanghary, M.; Damangir, E. An improved harmony search algorithm for solving optimization problems. Appl. Math. Comput. 2007, 188, 1567–1579. [Google Scholar] [CrossRef]

- Geem, Z.W. (Ed.) Music-Inspired Harmony Search Algorithm: Theory and Applications; Springer: Berlin/Heidelberg, Germany, 2009. [Google Scholar]

- Prajapati, A.; Geem, Z.W. Harmony Search-Based Approach for Multi-Objective Software Architecture Reconstruction. Mathematics 2020, 8, 1906. [Google Scholar] [CrossRef]

- Alsewari, A.A.; Kabir, M.N.; Zamli, K.Z.; Alaofi, K.S. Software product line test list generation based on harmony search algorithm with constraints support. Int. J. Adv. Comput. Sci. Appl. 2019, 10, 605–610. [Google Scholar] [CrossRef]

- Choudhary, A.; Baghel, A.S.; Sangwan, O.P. Efficient parameter estimation of software reliability growth models using harmony search. IET Softw. 2017, 11, 286–291. [Google Scholar] [CrossRef]

- Chhabra, J.K. Harmony search based remodularization for object-oriented software systems. Comput. Lang. Syst. Struct. 2017, 47, 153–169. [Google Scholar]

- Mao, C. Harmony search-based test data generation for branch coverage in software structural testing. Neural Comput. Appl. 2014, 25, 199–216. [Google Scholar] [CrossRef]

- Omran, M.G.H.; Mahdavi, M. Global-best harmony search. Appl. Math. Comput. 2008, 198, 643–656. [Google Scholar] [CrossRef]

- Geem, Z.W.; Sim, K.-B. Parameter-setting-free harmony search algorithm. Appl. Math. Comput. 2010, 217, 3881–3889. [Google Scholar] [CrossRef]

- Borg, M.; Svensson, O.; Berg, K.; Hansson, D. SZZ unleashed: An open implementation of the SZZ algorithm-featuring example usage in a study of just-in-time bug prediction for the Jenkins project. In Proceedings of the 3rd ACM SIGSOFT International Workshop on Machine Learning Techniques for Software Quality Evaluation, Tallinn, Estonia, 27 August 2019; pp. 7–12. [Google Scholar]

- Kotsiantis, S.B.; Kanellopoulos, D.; Pintelas, P.E. Data preprocessing for supervised leaning. Int. J. Comput. Sci. 2006, 1, 111–117. [Google Scholar]

- Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic minority over-sampling technique. J. Artif. Intell. Res. 2002, 16, 321–357. [Google Scholar] [CrossRef]

- Öztürk, M.M. Comparing hyperparameter optimization in cross-and within-project defect prediction: A case study. Arab. J. Sci. Eng. 2019, 44, 3515–3530. [Google Scholar] [CrossRef]

- Yang, X.; Lo, D.; Xia, X.; Sun, J. TLEL: A two-layer ensemble learning approach for just-in-time defect prediction. Inf. Softw. Technol. 2017, 87, 206–220. [Google Scholar] [CrossRef]

- Huang, Q.; Xia, X.; Lo, D. Revisiting supervised and unsupervised models for effort-aware just-in-time defect prediction. Empir. Softw. Eng. 2019, 24, 2823–2862. [Google Scholar] [CrossRef]

- Kondo, M.; German, D.M.; Mizuno, O.; Choi, E.H. The impact of context metrics on just-in-time defect prediction. Empir. Softw. Eng. 2020, 25, 890–939. [Google Scholar] [CrossRef]

- Tantithamthavorn, C.; McIntosh, S.; Hassan, A.E.; Matsumoto, K. The impact of automated parameter optimization on defect prediction models. IEEE Trans. Softw. Eng. 2018, 45, 683–711. [Google Scholar] [CrossRef]

- Deng, W.; Chen, H.; Li, H. A novel hybrid intelligence algorithm for solving combinatorial optimization problems. J. Comput. Sci. Eng. 2014, 8, 199–206. [Google Scholar] [CrossRef]

- Zeng, X.; Martinez, T.R. Distribution-balanced stratified cross-validation for accuracy estimation. J. Exp. Theor. Artif. Intell. 2000, 12, 1–12. [Google Scholar] [CrossRef]

- Ryu, D.; Jang, J.; Baik, J. A hybrid instance selection using nearest-neighbor for cross-project defect prediction. J. Comput. Sci. Technol. 2015, 30, 969–980. [Google Scholar] [CrossRef]

- Shin, Y.; Meneely, A.; Williams, L.; Osborne, J.A. Evaluating complexity, code churn, and developer activity metrics as indicators of software vulnerabilities. IEEE Trans. Softw. Eng. 2010, 37, 772–787. [Google Scholar] [CrossRef]

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; Vanderplas, J.; et al. Scikit-learn: Machine learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830. [Google Scholar]

- Buitinck, L.; Louppe, G.; Blondel, M.; Pedregosa, F.; Mueller, A.; Grisel, O.; Niculae, V.; Prettenhofer, P.; Gramfort, A.; Grobler, J.; et al. API design for machine learning software: Experiences from the scikit-learn project. arXiv 2013, arXiv:1309.0238. [Google Scholar]

- Fairchild, G. pyHarmonySearch 1.4.3. Available online: https://pypi.org/project/pyHarmonySearch/ (accessed on 28 July 2020).

- Breiman, L. Pasting small votes for classification in large databases and on-line. Mach. Learn. 1999, 36, 85–103. [Google Scholar] [CrossRef]

- Breiman, L. Bagging predictors. Mach. Learn. 1996, 24, 123–140. [Google Scholar] [CrossRef]

- Ho, T. The random subspace method for constructing decision forests. Pattern Anal. Mach. Intell. 1998, 20, 832–844. [Google Scholar]

- Louppe, G.; Geurts, P. Ensembles on Random Patches. In Machine Learning and Knowledge Discovery in Databases; Springer: Berlin/Heidelberg, Germany, 2012; pp. 346–361. [Google Scholar]

- Catolino, G.; Di Nucci, D.; Ferrucci, F. Cross-project just-in-time bug prediction for mobile apps: An empirical assessment. In Proceedings of the 2019 IEEE/ACM 6th International Conference on Mobile Software Engineering and Systems (MOBILESoft), Montreal, QC, Canada, 25–26 May 2019; pp. 99–110. [Google Scholar]

- Maclin, R.; Opitz, D. An empirical evaluation of bagging and boosting. AAAI/IAAI 1997, 1997, 546–551. [Google Scholar]

- Bauer, E.; Kohavi, R. An empirical comparison of voting classification algorithms: Bagging, boosting, and variants. Mach. Learn. 1999, 36, 105–139. [Google Scholar] [CrossRef]

- Pascarella, L.; Palomba, F.; Bacchelli, A. Fine-grained just-in-time defect prediction. J. Syst. Softw. 2019, 150, 22–36. [Google Scholar] [CrossRef]

- Ryu, D.; Jang, J.; Baik, J. A transfer cost-sensitive boosting approach for cross-project defect prediction. Softw. Qual. J. 2017, 25, 235–272. [Google Scholar] [CrossRef]

- Elkan, C. The foundations of cost-sensitive learning. In Proceedings of the International Joint Conference on Artificial Intelligence, Seattle, WA, USA, 4–10 August 2001; Lawrence Erlbaum Associates Ltd.: Mahwah, NJ, USA, 2001; pp. 973–978. [Google Scholar]

- Krawczyk, B.; Woźniak, M.; Schaefer, G. Cost-sensitive decision tree ensembles for effective imbalanced classification. Appl. Soft Comput. 2014, 14, 554–562. [Google Scholar] [CrossRef]

- Lomax, S.; Vadera, S. A survey of cost-sensitive decision tree induction algorithms. ACM Comput. Surv. (CSUR) 2013, 45, 1–35. [Google Scholar] [CrossRef]

- Tosun, A.; Turhan, B.; Bener, A. Practical considerations in deploying ai for defect prediction: A case study within the turkish telecommunication industry. In Proceedings of the 5th International Conference on Predictor Models in Software Engineering, Vancouver, BC, Canada, 18–19 May 2009; pp. 1–9. [Google Scholar]

- Ryu, D.; Baik, J. Effective multi-objective naïve Bayes learning for cross-project defect prediction. Appl. Soft Comput. 2016, 49, 1062–1077. [Google Scholar] [CrossRef]

- Ryu, D.; Baik, J. Effective harmony search-based optimization of cost-sensitive boosting for improving the performance of cross-project defect prediction. KIPS Trans. Softw. Data Eng. 2018, 7, 77–90. [Google Scholar]

- Kvasov, D.E.; Mukhametzhanov, M.S. Metaheuristic vs. deterministic global optimization algorithms: The univariate case. Appl. Math. Comput. 2018, 318, 245–259. [Google Scholar] [CrossRef]

- Paulavičius, R.; Sergeyev, Y.D.; Kvasov, D.E.; Žilinskas, J. Globally-biased BIRECT algorithm with local accelerators for expensive global optimization. Expert Syst. Appl. 2020, 144, 113052. [Google Scholar] [CrossRef]

- Sergeyev, Y.D.; Kvasov, D.E.; Mukhametzhanov, M.S. On the efficiency of nature-inspired metaheuristics in expensive global optimization with limited budget. Sci. Rep. 2018, 8, 1–9. [Google Scholar] [CrossRef] [PubMed]

| Parameter | Description |
|---|---|
| Harmony memory size (HMS) | The maximum number of harmonies that instrument players can refer to |
| Harmony memory considering rate (HMCR) | The probability where each player refers to a randomly selected harmony in the harmony memory |
| Pitch adjusting rate (PAR) | The probability of adjusting a harmony that was picked from the harmony memory |
| Fret width (FW) | The bandwidth of the pitch adjustment |
| Maximum improvisation (MI) | The maximum number of iterations |

| Classifier | Parameters | Candidate Search Space | Range | Interval |
|---|---|---|---|---|
| Decision Tree | criterion (Cr) | {“gini”, “entropy”}, default = “gini” | Cr = {“gini”, “entropy”} | - |
| Decision Tree | splitter (Sp) | {“best”, “random”}, default = “best” | Sp = {“best”, “random”} | - |
| Decision Tree | max_depth (Dmax) | int, default = None | 5 ≤ Dmax ≤ 50, 0: Unlimited | 5 |
| Decision Tree | min_samples_split (SSmin) | int or float, default = 2 | 2 ≤ SSmin ≤ 10 | 1 |
| Decision Tree | min_samples_leaf (SLmin) | int or float, default = 1 | 1 ≤ SLmin ≤ 10 | 1 |
| Decision Tree | min_weight_fraction_leaf (WLmin) | float, default = 0.0 | 0.0 ≤ WLmin ≤ 0.5 | By HSA |
| Decision Tree | max_features (Fmax) | int, float or {“auto”, “sqrt”, “log2”}, default = None | 1 ≤ Fmax ≤ Max, Max is number of features | 1 |
| Decision Tree | max_leaf_nodes (Lmax) | int, default = None | 1 ≤ Lmax ≤ 100, 0: Unlimited | 10 |
| Decision Tree | min_impurity_decrease (Imin) | float, default = 0.0 | 0.0 ≤ Imin ≤ 1.0 | By HSA |
| Decision Tree | class_weight (Wc) | dict, list of dict or “balanced”, default = None | 0.0 ≤ Wc ≤ 1.0 for each class | By HSA |
| Decision Tree | ccp_alpha (Ca) | non-negative float, default = 0.0 | 0.0001 ≤ Ca ≤ 0.001 | By HSA |
| Bagging | n_estimators (Enum) | int, default = 10 | 10 ≤ Enum ≤ 100 | 5 |
| Bagging | max_samples (Smax) | int or float, default = 1.0 | 0.0 ≤ Smax ≤ 1.0 | By HSA |
| Bagging | max_features (Fmax) | int or float, default = 1.0 | 1 ≤ Fmax ≤ Max | 1 |
| Bagging | bootstrap (B) | bool, default = True | B = {True, False} | - |
| Bagging | bootstrap_features (Bf) | bool, default = False | Bf = {True, False} | - |
| Bagging | oob_score (O) | bool, default = False | O = {True, False} | - |

| Project | Languages | Description | Period | Number of Features | Total Number of Changes (Defect Ratio) |
|---|---|---|---|---|---|
| A | C/C++ | Maritime Project A | 10/2004–08/2019 | 14 | 931 (−) |
| B | C/C++ | Maritime Project B | 11/2015–08/2019 | 14 | 8498 (−) |
| BU | Datasets were extracted by Kamei et al. [7] | Bugzilla | 08/1998–12/2006 | 14 | 4620 (36%) |
| CO | Datasets were extracted by Kamei et al. [7] | Columba | 11/2002–07/2006 | 14 | 4455 (31%) |
| JD | Datasets were extracted by Kamei et al. [7] | Eclipse JDT | 05/2001–12/2007 | 14 | 35,386 (14%) |
| PL | Datasets were extracted by Kamei et al. [7] | Eclipse Platform | 05/2001–12/2007 | 14 | 64,250 (14%) |
| MO | Datasets were extracted by Kamei et al. [7] | Mozilla | 01/2000–12/2006 | 14 | 98,275 (5%) |
| PO | Datasets were extracted by Kamei et al. [7] | PostgreSQL | 07/1996–05/2010 | 14 | 20,431 (25%) |

| Group | Metric | Definition |
|---|---|---|
| Size | LA | Lines of code added |
| Size | LD | Lines of code deleted |
| Size | LT | Lines of code in a file before the changes |
| Diffusion | NS | Number of modified subsystems |
| Diffusion | ND | Number of modified directories |
| Diffusion | NF | Number of modified files |
| Diffusion | Entropy | Distribution of modified code across each file |
| Purpose | FIX | Whether the change is a defect fix or not |
| History | NDEV | Number of developers that changed the modified files |
| History | AGE | Average time interval between the last and the current change |
| History | NUC | Number of unique changes to the modified files |
| Experience | EXP | Developer experience |
| Experience | REXP | Recent developer experience |
| Experience | SEXP | Developer experience on a subsystem |

| Confusion Matrix | Predicted Class: Buggy (P) | Predicted Class: Clean (N) |
|---|---|---|
| Actual Class: Buggy | True Positive (TP) | False Negative (FN) |
| Actual Class: Clean | False Positive (FP) | True Negative (TN) |

| Parameter | Value |
|---|---|
| HMS | 10,000 |
| HMCR | 0.75 |
| PAR | 0.5 |
| FW | 0.25 for continuous variables; 10 for discrete variables |
| MI | Adjusted |

| Dataset | DT PD | DT PF | DT B | DT+BG PD | DT+BG PF | DT+BG B | DT+BG+HS PD | DT+BG+HS PF | DT+BG+HS B | Kang et al. [14] PD | Kang et al. [14] PF | Kang et al. [14] B |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| A1 | 0.33 | 0.08 | 0.51 | 0.50 | 0.06 | 0.63 | 0.83 | 0.15 | 0.82 | 0.54 | 0.05 | 0.67 |
| A2 | 0.72 | 0.09 | 0.77 | 0.82 | 0.07 | 0.85 | 0.88 | 0.06 | 0.90 | 0.84 | 0.05 | 0.88 |
| A3 | 0.88 | 0.13 | 0.85 | 0.87 | 0.08 | 0.86 | 0.92 | 0.07 | 0.91 | 0.89 | 0.06 | 0.91 |
| A4 | 0.87 | 0.09 | 0.86 | 0.90 | 0.08 | 0.89 | 0.95 | 0.08 | 0.93 | 0.94 | 0.07 | 0.93 |
| A5 | 0.90 | 0.12 | 0.87 | 0.94 | 0.13 | 0.88 | 0.95 | 0.06 | 0.94 | 0.94 | 0.07 | 0.93 |
| B1 | 0.72 | 0.03 | 0.79 | 0.83 | 0.03 | 0.87 | 0.93 | 0.08 | 0.92 | 0.86 | 0.02 | 0.90 |
| B2 | 0.83 | 0.08 | 0.86 | 0.92 | 0.04 | 0.94 | 0.94 | 0.05 | 0.95 | 0.93 | 0.03 | 0.95 |
| B3 | 0.86 | 0.08 | 0.88 | 0.91 | 0.04 | 0.92 | 0.96 | 0.03 | 0.96 | 0.95 | 0.05 | 0.95 |
| B4 | 0.93 | 0.06 | 0.93 | 0.95 | 0.04 | 0.95 | 0.99 | 0.04 | 0.97 | 0.97 | 0.04 | 0.96 |
| B5 | 0.91 | 0.10 | 0.90 | 0.94 | 0.04 | 0.94 | 0.98 | 0.04 | 0.97 | 0.97 | 0.05 | 0.96 |

| Dataset (Class Ratio) | CIR | CI | PrD |
|---|---|---|---|
| A1 (100:20) | 0.28 | 0.55 | 0.83 |
| A2 (100:40) | 0.32 | 0.56 | 0.88 |
| A3 (100:60) | 0.39 | 0.53 | 0.92 |
| A4 (100:80) | 0.39 | 0.56 | 0.95 |
| A5 (100:100) | 0.39 | 0.56 | 0.95 |
| B1 (100:20) | 0.42 | 0.51 | 0.93 |
| B2 (100:40) | 0.43 | 0.51 | 0.94 |
| B3 (100:60) | 0.45 | 0.52 | 0.96 |
| B4 (100:80) | 0.46 | 0.53 | 0.99 |
| B5 (100:100) | 0.44 | 0.54 | 0.98 |

| Dataset | DT PD | DT PF | DT B | DT+BG PD | DT+BG PF | DT+BG B | DT+BG+HS PD | DT+BG+HS PF | DT+BG+HS B | Kamei et al. [7] PD | Kamei et al. [7] PF | Kamei et al. [7] B |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| BU | 0.571 | 0.147 | 0.679 | 0.571 | 0.147 | 0.679 | 0.748 | 0.287 | 0.729 | 0.69 | 0.347 | 0.671 |
| CO | 0.503 | 0.244 | 0.608 | 0.468 | 0.111 | 0.616 | 0.750 | 0.322 | 0.711 | 0.67 | 0.272 | 0.698 |
| JD | 0.305 | 0.127 | 0.500 | 0.184 | 0.023 | 0.423 | 0.661 | 0.278 | 0.690 | 0.65 | 0.329 | 0.660 |
| PL | 0.217 | 0.049 | 0.445 | 0.094 | 0.005 | 0.359 | 0.747 | 0.304 | 0.720 | 0.70 | 0.304 | 0.698 |
| MO | 0.335 | 0.126 | 0.521 | 0.230 | 0.027 | 0.455 | 0.716 | 0.200 | 0.754 | 0.63 | 0.224 | 0.694 |
| PO | 0.475 | 0.181 | 0.607 | 0.451 | 0.076 | 0.608 | 0.730 | 0.241 | 0.744 | 0.65 | 0.191 | 0.718 |

| Dataset | DT PD | DT PF | DT B | DT+HS PD | DT+HS PF | DT+HS B | DT+BG+HS PD | DT+BG+HS PF | DT+BG+HS B |
|---|---|---|---|---|---|---|---|---|---|
| A1 | 0.33 | 0.08 | 0.51 | 0.75 | 0.10 | 0.77 | 0.83 | 0.15 | 0.82 |
| A2 | 0.72 | 0.09 | 0.77 | 0.92 | 0.20 | 0.84 | 0.88 | 0.06 | 0.90 |
| A3 | 0.88 | 0.13 | 0.85 | 0.87 | 0.10 | 0.87 | 0.92 | 0.07 | 0.91 |
| A4 | 0.87 | 0.09 | 0.86 | 0.88 | 0.08 | 0.88 | 0.95 | 0.08 | 0.93 |
| A5 | 0.90 | 0.12 | 0.87 | 0.94 | 0.11 | 0.89 | 0.95 | 0.06 | 0.94 |
| B1 | 0.72 | 0.03 | 0.79 | 0.85 | 0.06 | 0.88 | 0.93 | 0.08 | 0.92 |
| B2 | 0.83 | 0.08 | 0.86 | 0.93 | 0.08 | 0.92 | 0.94 | 0.05 | 0.95 |
| B3 | 0.86 | 0.08 | 0.88 | 0.92 | 0.06 | 0.92 | 0.96 | 0.03 | 0.96 |
| B4 | 0.93 | 0.06 | 0.93 | 0.90 | 0.03 | 0.92 | 0.99 | 0.04 | 0.97 |
| B5 | 0.91 | 0.10 | 0.90 | 0.92 | 0.04 | 0.93 | 0.98 | 0.04 | 0.97 |
| BU | 0.57 | 0.15 | 0.68 | 0.64 | 0.26 | 0.69 | 0.77 | 0.29 | 0.73 |
| CO | 0.50 | 0.24 | 0.61 | 0.42 | 0.12 | 0.58 | 0.75 | 0.32 | 0.71 |
| JD | 0.31 | 0.13 | 0.50 | 0.30 | 0.12 | 0.50 | 0.66 | 0.28 | 0.69 |
| PL | 0.22 | 0.05 | 0.45 | 0.32 | 0.10 | 0.52 | 0.75 | 0.30 | 0.72 |
| MO | 0.34 | 0.13 | 0.52 | 0.21 | 0.04 | 0.44 | 0.72 | 0.20 | 0.75 |
| PO | 0.48 | 0.18 | 0.61 | 0.47 | 0.15 | 0.61 | 0.73 | 0.24 | 0.74 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kang, J.; Kwon, S.; Ryu, D.; Baik, J. HASPO: Harmony Search-Based Parameter Optimization for Just-in-Time Software Defect Prediction in Maritime Software. Appl. Sci. 2021, 11, 2002. https://doi.org/10.3390/app11052002
