Abstract

Edge features in point clouds are prominent because they can describe the abstract shape of a set of points. Point clouds obtained by 3D scanner devices are often very large. Edges are essential features in large-scale point clouds since they describe the shapes in down-sampled point clouds while preserving the principal information. In this paper, we tackle the challenges of edge detection in 3D point clouds. To this end, we propose a novel technique to detect edges of point clouds based on a capsule network architecture. In this approach, we define edge detection in point clouds as a semantic segmentation problem. We built a capsule-based classifier to predict edge and non-edge points in 3D point clouds. We applied a weakly-supervised learning approach to improve the performance of the proposed method and to extend it to a wider range of shapes. We provide several quantitative and qualitative experimental results to demonstrate the robustness of our proposed EDC-Net for edge detection in 3D point clouds. We performed a statistical analysis over the ABC and ShapeNet datasets. Our numerical results demonstrate the robust and efficient performance of EDC-Net.

1. Introduction

Point cloud data is a fundamental class of 3D data: the raw data produced by various 3D sensor devices. Point clouds play an important role in the computer vision community due to their rich geometric information and the proliferation of 3D sensors. Furthermore, a wide range of applications in robotics, autonomous driving and virtual/augmented reality can directly exploit 3D point cloud data. The importance of 3D point cloud data stems from its depth information and geometric structure; 2D imagery lacks this information, which leads to many ambiguities.

Edges in 3D point clouds are considered remarkably meaningful features due to their capability of representing the topological shape of a set of points. Extracting edges from 3D point clouds is one of the fundamental shape understanding methods, since edges describe the abstract features of a point set. Edges offer an intuitive and low-dimensional representation of point clouds. Therefore, extracting edges from point clouds yields a smaller chunk of data while preserving the feature information of the shapes. Edge extraction has several applications in photogrammetry, CAD (computer-aided design) and urban scene analysis [1,2,3]. The proliferation of algorithms for reconstructing 3D objects, e.g., [4,5], affirms the importance of 3D information even in cases that are unreachable for 3D sensors.

Deep learning algorithms have proven their robustness in various lines of research; however, there are still many challenges in applying CNNs (convolutional neural networks) to 3D point clouds, since point clouds are sets of unordered 3D points without a regular structure. PointNet [6] and PointNet++ [7] made fundamental progress in this regard by training models directly on 3D point clouds. Recently, many network architectures [8,9,10,11] were inspired by these pioneering techniques [6,7]. However, applying CNNs to the edge detection problem in point clouds is still challenging. The majority of edge detection techniques are based on signal processing and local geometric properties [1,2,3,12,13,14,15,16], although some recent research has applied deep learning techniques to edge detection from point clouds [17,18,19,20,21,22]. In this paper, we introduce a novel approach for edge detection from point clouds based on the main concepts of capsule networks [23]. Capsule networks have recently shown excellent performance in several applications [24], and to the best of our knowledge, we are the first to apply capsule networks to the edge detection task for 3D point clouds. The key difference between our proposed method and recent CNN-based edge detection methods [17,18,19,20,21,22] is the use of capsule networks. The main advantage of capsule networks is that information is stored at the vector level instead of the scalar level. Capsules are groups of neurons that act together to store information at the vector level. Neurons in conventional CNNs are scalar and additive, which makes each neuron in a given layer agnostic to the spatial relationships among the neurons within its kernel in the previous layer. In capsule networks, the information at the neuron level is stored as vectors in lieu of scalars. These sets of neurons are defined as capsule types.
Dynamic routing between capsules exploits the agreement between these capsule vectors to extract part-to-whole relationships. These vectors contain information about spatial orientation. This is a notable factor for point clouds, since capsule layers are capable of disentangling the geometric features of point clouds. This is why capsule networks have recently attracted attention in 3D point cloud applications [25,26,27,28,29]; none of them, however, applied capsule networks to the edge detection task. In our proposed capsule network for edge detection, instead of feeding the primary capsule activations directly into the routing process, we apply an attention module to the primary capsules and concatenate them with the features of the previous convolution layer, in order to combine local and global features for point-wise segmentation.

Another challenge in training a CNN-based model for edge detection from point clouds is the lack of annotated data. In this paper, we address this problem by training edge detection models for 3D point clouds with a weakly-supervised transfer learning approach. Weakly-supervised learning uses noisy or imprecise sources as labels for large amounts of training data; these imprecise labels are then used in a supervised learning process. Various methods have been proposed to extract such imperfect labels purposefully from the training data [30]. Furthermore, transfer learning has contributed to a wide range of applications [31]. It allows exploiting the knowledge of high-quality pre-trained networks when relatively little labeled data is available for training. A combination of weakly-supervised learning and transfer learning was proposed in [32,33]. Given the success of these networks in different applications, it might be surprising that this approach has not been applied to edge detection in point clouds, where diverse labeled data is scarce. To tackle this challenge, we generated imprecise edge detection labels for ShapeNet [34] samples based on the method of [12], since ABC [35] is the only publicly available dataset with edge detection labels for 3D point clouds.

In a nutshell, the novelties and contributions of this paper can be summarized as follows:

- We introduce EDC-Net: the edge detection capsule network for 3D point clouds, a novel architecture of capsule networks which is designed for the purpose of edge detection from 3D point clouds.

- We design a weakly-supervised transfer learning approach for edge detection in point clouds in order to tackle the lack of diverse annotated data.

- We formulate a loss function tailored to the edge detection problem by combining two forms of ground truth: edge labels and segmentation labels. This combination in the loss function emphasizes the prediction of edge points and boosts the training process.

- Our model can be improved incrementally through the proposed weakly-supervised transfer learning for edge detection from 3D point clouds. This makes it possible to apply EDC-Net to any target data, an attribute that is valuable for industrial applications and is currently lacking in other edge detection techniques.

The robustness of this work stems from three aspects: (1) designing capsule networks for edge detection from point clouds to disentangle geometrical features of point clouds; (2) applying weakly-supervised transfer learning to tackle the lack of annotated data; (3) formulating a loss function to improve the training process by emphasizing the prediction of edges.

The contribution of this paper does not lie merely in surpassing previous edge detection techniques. The significant aspects of our work are introducing a novel learning solution to this community and tackling the lack of annotated data for edge detection in point clouds. The results of the proposed method are promising and constitute the first steps towards reliable point cloud edge detection based on capsule networks and weakly-supervised transfer learning. Our work opens a new research path in point cloud edge detection and raises new research questions and directions for further improvement.

2. Related Work

2.1. Point Clouds

Point clouds have recently attracted extensive attention for a broad range of applications, including autonomous driving [36], 3D object detection [37,38], and recognition and classification [6,7,39,40,41]. Deep neural networks have made notable progress in operating effectively on raw 3D point cloud data. PointNet [6] pioneered learning representations directly from point clouds by computing features for each point individually and aggregating these features with a max-pooling operation. Extending the same idea to capture contextual information from local patterns inside point clouds, PointNet++ [7] applies sampling and grouping operations to extract features from point clusters hierarchically. In recent years, many networks for 3D point clouds were inspired by PointNet++ [7], such as [8,9,10]. A comprehensive review of deep learning methods for point clouds can be found in [11].
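To illustrate why computing per-point features and aggregating them with max-pooling suits unordered point sets, here is a minimal plain-Python sketch. It is not PointNet itself: the single shared layer, its random weights and the helper names `shared_point_layer` and `global_feature` are hypothetical stand-ins for PointNet's shared MLP. What it demonstrates is that a channel-wise maximum over points yields the same global feature regardless of the order of the points.

```python
import random
from typing import List, Sequence

Point = Sequence[float]


def shared_point_layer(point: Point, weights: List[List[float]], biases: List[float]) -> List[float]:
    """Apply the same linear layer + ReLU to one point (weights are shared across all points)."""
    return [max(0.0, sum(w * x for w, x in zip(row, point)) + b) for row, b in zip(weights, biases)]


def global_feature(points: Sequence[Point], weights: List[List[float]], biases: List[float]) -> List[float]:
    """Per-point features aggregated by a channel-wise max, a symmetric function of the points."""
    per_point = [shared_point_layer(p, weights, biases) for p in points]
    return [max(feat[c] for feat in per_point) for c in range(len(biases))]


rng = random.Random(42)
weights = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(8)]  # hypothetical 3 -> 8 layer
biases = [rng.uniform(-0.1, 0.1) for _ in range(8)]
cloud = [(rng.random(), rng.random(), rng.random()) for _ in range(100)]
shuffled = cloud[:]
rng.shuffle(shuffled)  # same points, different order

print(global_feature(cloud, weights, biases) == global_feature(shuffled, weights, biases))  # True
```

Because every point passes through identical weights and the maximum ignores order, permuting the input cannot change the result; a real network stacks several such shared layers before pooling.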

2.2. Edge Detection

Edge detection from point clouds has attracted a lot of attention in various fields of research, such as robotics, photogrammetry, CAD and urban scene analysis [1,2,3,15]. Conventional methods for edge extraction from point clouds were based on building a mesh [3,15] or a graph [13]. Reconstructing a mesh or graph from a point cloud is computationally expensive. To overcome this issue, computing the difference of eigenvalues was proposed to extract edges [12]. The same idea was extended with a segmentation approach [16]. Furthermore, discriminative learning algorithms have been employed to extract edges from unorganized point clouds through a point classifier that separates edge from non-edge points [2]. Moreover, a spatial filtering approach based on the fast Fourier transform (FFT) was applied for boundary point detection to cope with predefined threshold values [14]. Most techniques for edge extraction from point clouds are based on geometric analysis; however, some methods using deep learning have recently been proposed [17,18,19,20,21,22]. In [22], CNNs are employed to define a scalar sharpness field over the underlying smooth moving least-squares (MLS) surface of a point cloud; this technique is capable of localizing sharp features of the scanned object. An extension of this technique was proposed in [20], which trains a convolutional neural network to derive a sharpness field parametrized over the underlying smooth proxy MLS surface. Furthermore, a combination of reconstruction and edge extraction was proposed in EC-Net [21], a deep edge-aware point cloud consolidation framework based on extracting local patches and training the network to learn edges in these patches. The network is trained to reconstruct upsampled 3D point clouds. The first phase of the technique consists of point classification and regression of per-point distances to the edge.
Edge points are then detected as the points with a zero point-to-edge distance. PIE-NET [17] treats point cloud curve inference as a curve proposal process: edges and corners are first identified, and the curves are then extracted through proposal and selection. A geometric attention mechanism was presented in [19] to estimate normals and the properties of sharp feature lines in a learnable fashion, focusing solely on the geometric properties of point clouds. PCEDNet [18] presented a classification approach for edges in point clouds in which both edges and their surroundings are described. In their work, points are parameterized with a scale-space matrix (SSM) that encodes extrinsic geometric properties of the surface surrounding each point of the input point cloud at multiple scales. A neural network learns a description of edges from the SSM and uses it to efficiently detect edges in given point clouds. Unlike the previous approaches, our proposed method extracts geometric features as a pre-processing step to feed the network and defines the classification process of edge detection as a point-wise segmentation problem solved by a capsule network.

2.3. Capsule Network

The primary idea of capsule networks was initially introduced in [42] as a local component of artificial neural networks, in which each capsule learns to recognize an implicitly defined visual entity over a limited domain of viewing conditions. This idea was later developed into a dynamic routing mechanism between capsules in [23], known as CapsNet (capsule networks). In the CapsNet model, capsules are groups of neurons that act together, and the information is stored at the vector level instead of the scalar level. CapsNet [23] uses iterative routing-by-agreement and a squashing function on the output vectors to obtain the activation capsules. Capsule networks have recently proven to be powerful tools for a broad range of problems and have been applied in various lines of research; a thorough review of capsule networks can be found in [24]. Furthermore, the concept of CapsNet has recently been applied in point cloud processing research [25,26,27,28,29]. 3D Point Capsule Networks [25] proposed an auto-encoder to process sparse 3D point clouds while maintaining the spatial arrangement of the input data. The geometric capsules approach [26] learns object representations from 3D point clouds through bundles of geometrically interpretable hidden units based on pose and features. Quaternion equivariant capsule networks [28] learn a pose-equivariant representation of objects by building a hierarchy of local reference frames, where each frame is modeled as a quaternion. 3DCapsule [29] replaced the common fully connected classifier with a 3D capsule architecture in order to determine the spatial relationships between feature vectors by mapping them to capsules. DCG-Net [27] employed the agreement voting concept of capsules to build an aggregation neighborhood graph from a raw point cloud for segmentation and classification tasks.

While the aforementioned approaches applied capsule networks to different point cloud processing problems, our proposed approach concentrates on employing a capsule network for a point-wise segmentation task to detect edges in point clouds. Several recent studies have employed capsule networks for segmentation tasks [43,44,45,46,47]. Matwo-CapsNet [43] presented a multi-label semantic segmentation technique based on capsule networks; it combines pose and appearance information and encodes them as matrices through a dual routing mechanism. In [44], a segmentation architecture was developed based on capsule networks by improving the expectation-maximization routing algorithm (EM-routing). TraceCaps [45] is a capsule-based network architecture for semantic segmentation that benefits from the part-whole dependencies captured by capsule layers; it derives the probabilities of the class labels for each capsule through a recursive, layer-by-layer procedure. SegCaps [46,47] is a convolutional–deconvolutional capsule network for object segmentation; it extended the idea of convolutional capsules with locally-connected routing and proposed the concept of deconvolutional capsules. Unlike these models, which are designed for 2D images and medical imaging applications, our proposed EDC-Net focuses solely on 3D point clouds and defines the problem of edge detection as a segmentation task.

3. Proposed Method

3.1. Network Architecture

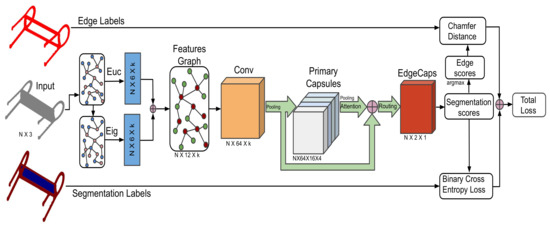

The main objective of EDC-Net is to precisely detect the sharp edge points of point clouds. To this end, we trained a capsule-based model to predict the edge regions of point clouds. EDC-Net is designed like a segmentation network that classifies each point of a point cloud as edge or non-edge. The network is fed with raw point clouds, which pass through pre-processing steps and a convolution layer. Capsule layers then segment the edge points. An overview of the whole pipeline is depicted in Figure 1. The details of EDC-Net are described in the following sections.

Figure 1.

Overview of the proposed EDC-Net architecture. The input of this architecture is a raw point cloud of dimension $N \times 3$. The ground truths are edge labels and segmentation labels, which distinguish edge and non-edge points as two classes. The features graph is an aggregation neighborhood graph based on a concatenation of features from the Euclidean and eigenvalue spaces. The EdgeCaps module performs point-wise segmentation to classify edge and non-edge points. ⊕ stands for concatenation.

3.1.1. Input Data

The source input of EDC-Net is a raw point cloud of dimensions $N \times 3$, where N is the number of points in the point cloud. We consider an S-dimensional point cloud with N points, denoted by $P = \{p_1, \dots, p_N\} \subseteq \mathbb{R}^S$. Each point of a point cloud commonly contains three coordinates $p_i = (x_i, y_i, z_i)$, which means that $S = 3$. It is possible to include further coordinates, such as color information and normal vectors; however, in this work we only consider the $(x, y, z)$ coordinates.

Two formats of labels are used during the training process of EDC-Net. First, segmentation labels have the shape $N \times 2$, where N is the number of points in a point cloud, and each indexed point has a corresponding value for each of the two classes, edge and non-edge. Second, edge labels have the shape $N_e \times 3$, where $N_e$ is the number of points labeled as sharp edge regions in the ground truth of the given point cloud, and each point is represented by its three coordinates. The number of edge points is less than the number of points in a point cloud ($N_e < N$). Segmentation labels and edge labels are both used to compute the loss during training; the details of the loss calculation are explained in Section 3.2.

3.1.2. Features Graph

In the first layers of EDC-Net, the raw input point cloud is converted into a k-NN (k nearest neighbors) graph structure based on its Euclidean and eigenvalue features. This pre-processing step builds a k-NN features graph before the first convolution operation of the network is applied.

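In order to build a neighbors graph, we select the k nearest neighbors of each point. We extract the k nearest neighbors of each point of a raw point cloud in a pre-processing step before feeding the features into the main blocks of the network, as shown in Figure 1. Nearest neighbors are measured with the $L_2$ norm: $d(p_i, p_j) = \|p_i - p_j\|_2$, where $p_i, p_j \in P$. Once the k nearest neighbors of each point in the Euclidean space are determined, we search for the nearest neighbors in the eigenvalue space. To this end, inspired by [27], we compute a covariance matrix from which the eigenvalues are extracted. Covariance measures how the dimensions vary from their means with respect to each other. For a point cloud with N points and a 3-dimensional sample point $p_i = (x_i, y_i, z_i)$, where $i \in \{1, \dots, N\}$, the covariance matrix is given by:

$$C_i = \begin{bmatrix} \mathrm{cov}(x,x) & \mathrm{cov}(x,y) & \mathrm{cov}(x,z) \\ \mathrm{cov}(y,x) & \mathrm{cov}(y,y) & \mathrm{cov}(y,z) \\ \mathrm{cov}(z,x) & \mathrm{cov}(z,y) & \mathrm{cov}(z,z) \end{bmatrix}$$

where, for instance, $\mathrm{cov}(x, y)$ is the covariance between the first and second dimensions, computed over the k nearest neighbor points $\{p_j\}_{j=1}^{k}$ of $p_i$ as:

$$\mathrm{cov}(x, y) = \frac{1}{k} \sum_{j=1}^{k} (x_j - \bar{x})(y_j - \bar{y})$$

where $\bar{x}$ is the average of the neighbors of $p_i$ over the first dimension and $\bar{y}$ is the average over the second dimension. The eigenvalues and eigenvectors of $C_i$ are computed from $C_i v = \lambda v$, where $C_i$ is the covariance matrix, $v$ is an eigenvector and $\lambda$ is an eigenvalue. The eigenvalues of the covariance matrices of the point cloud are then defined as $\Lambda = \{\lambda_1, \dots, \lambda_N\}$; each point of the point cloud has three values in the eigenvalue space, denoted by $\lambda_i = (\lambda_{i,1}, \lambda_{i,2}, \lambda_{i,3})$ and ordered as $\lambda_{i,1} \geq \lambda_{i,2} \geq \lambda_{i,3}$.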
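The per-point eigenvalue features described above can be sketched in plain Python. This is an illustrative sketch under stated assumptions, not the authors' code: the neighborhood of a point includes the point itself, the covariance uses the $1/k$ normalization, and the helper names (`knn_indices`, `covariance`, `sym3_eigenvalues`, `eigen_features`) are hypothetical. The eigenvalues of each symmetric 3×3 covariance matrix are computed in closed form with the standard trigonometric method and returned in descending order.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def knn_indices(points: Sequence[Point], i: int, k: int) -> List[int]:
    """Indices of the k nearest neighbours of points[i] under the L2 norm (includes i itself)."""
    order = sorted(range(len(points)), key=lambda j: (math.dist(points[i], points[j]), j))
    return order[:k]


def covariance(neigh: Sequence[Point]) -> List[List[float]]:
    """3x3 covariance of a neighbourhood, normalised by 1/k (assumed normalisation)."""
    k = len(neigh)
    mean = [sum(p[d] for p in neigh) / k for d in range(3)]
    return [[sum((p[a] - mean[a]) * (p[b] - mean[b]) for p in neigh) / k for b in range(3)]
            for a in range(3)]


def sym3_eigenvalues(A: List[List[float]]) -> Tuple[float, float, float]:
    """Closed-form eigenvalues of a real symmetric 3x3 matrix, sorted in descending order."""
    p1 = A[0][1] ** 2 + A[0][2] ** 2 + A[1][2] ** 2
    if p1 == 0.0:  # matrix is already diagonal
        d = sorted((A[0][0], A[1][1], A[2][2]), reverse=True)
        return d[0], d[1], d[2]
    q = (A[0][0] + A[1][1] + A[2][2]) / 3.0
    p = math.sqrt(((A[0][0] - q) ** 2 + (A[1][1] - q) ** 2 + (A[2][2] - q) ** 2 + 2.0 * p1) / 6.0)
    B = [[(A[r][c] - (q if r == c else 0.0)) / p for c in range(3)] for r in range(3)]
    det_b = (B[0][0] * (B[1][1] * B[2][2] - B[1][2] * B[2][1])
             - B[0][1] * (B[1][0] * B[2][2] - B[1][2] * B[2][0])
             + B[0][2] * (B[1][0] * B[2][1] - B[1][1] * B[2][0]))
    phi = math.acos(max(-1.0, min(1.0, det_b / 2.0))) / 3.0  # clamp against rounding
    l1 = q + 2.0 * p * math.cos(phi)
    l3 = q + 2.0 * p * math.cos(phi + 2.0 * math.pi / 3.0)
    return l1, 3.0 * q - l1 - l3, l3  # trace gives the middle eigenvalue


def eigen_features(points: Sequence[Point], k: int) -> List[Tuple[float, float, float]]:
    """Per-point (lambda_1, lambda_2, lambda_3) of the covariance of its k-NN neighbourhood."""
    return [sym3_eigenvalues(covariance([points[j] for j in knn_indices(points, i, k)]))
            for i in range(len(points))]


plane = [(float(x), float(y), 0.0) for x in range(4) for y in range(4)]
feats = eigen_features(plane, k=6)
print(all(abs(l3) < 1e-9 for _, _, l3 in feats))  # True: no variation along z on a plane
```

On a flat patch the smallest eigenvalue vanishes, which is what makes eigenvalue features informative near sharp edges, where all three eigenvalues become non-negligible. In practice, a vectorized routine such as a symmetric eigen-solver in a numerical library would replace the closed form.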

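Nearest neighbors in the eigenvalue space are likewise measured with the $L_2$ norm: $d(\lambda_i, \lambda_j) = \|\lambda_i - \lambda_j\|_2$, where $\lambda_i$ and $\lambda_j$ are the eigenvalue triples of points $p_i$ and $p_j$.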

We define a directed edge-weighted graph $G = (V, E)$ representing the point cloud structure based on its Euclidean and eigenvalue features, where the vertices are $V = \{1, \dots, N\}$ and the edges are $E \subseteq V \times V$. We construct G as a k-NN edge-weighted graph in $\mathbb{R}^F$, where F is the feature space dimension of the graph. The k nearest neighbors are based on the similarities of the points in $\mathbb{R}^F$, measured with the $L_2$ norm. The edge-weighted graph is constructed by connecting each point to all of its k nearest neighbors, which gives a graph of shape $N \times k \times F$. The graph includes self-loops, meaning each vertex also points to itself.
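A brute-force sketch of the directed, edge-weighted k-NN graph with self-loops follows. It is written for an arbitrary feature space, so the same function serves both the Euclidean coordinates and the per-point eigenvalue triples. The function name `knn_graph` and the choice to return `(neighbor_index, distance)` pairs are assumptions for illustration; a practical implementation would use a spatial index or batched tensor operations instead of this O(N²) scan.

```python
import math
from typing import List, Sequence, Tuple

Edge = Tuple[int, float]  # (neighbour index, L2 distance used as the edge weight)


def knn_graph(features: Sequence[Sequence[float]], k: int) -> List[List[Edge]]:
    """Directed k-NN graph: row i holds the k nearest vertices of i under the L2 norm.

    Vertex i is at distance 0 from itself, so for distinct feature vectors the
    self-loop i -> i is always the first entry. Ties are broken by index.
    """
    n = len(features)
    if not 1 <= k <= n:
        raise ValueError("k must be between 1 and the number of points")
    graph: List[List[Edge]] = []
    for i in range(n):
        dists = sorted((math.dist(features[i], features[j]), j) for j in range(n))
        graph.append([(j, d) for d, j in dists[:k]])
    return graph


points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 2.0, 0.0), (3.0, 3.0, 1.0)]
eigvals = [(0.9, 0.1, 0.0), (0.8, 0.2, 0.0), (0.5, 0.3, 0.2), (0.4, 0.3, 0.3)]
euclidean_graph = knn_graph(points, k=2)  # neighbours in xyz space
eigen_graph = knn_graph(eigvals, k=2)     # neighbours in eigenvalue space
print(euclidean_graph[0])  # [(0, 0.0), (1, 1.0)]
```

The two graphs are built independently, so a point's neighbors in eigenvalue space need not coincide with its spatial neighbors.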

We exploit the k nearest neighbors in both the Euclidean and eigenvalue spaces and concatenate the features, inspired by [48]; however, the eigenvalues in this work are different since they are computed from the covariance matrix. Furthermore, as in [48], we use the differences between neighboring points as input features. Therefore, the shape of both the Euclidean and eigenvalue graphs is $N \times k \times F$, with F equal to 6. The features graph, which is the concatenation of the Euclidean and eigenvalue graphs, has shape $N \times k \times F$ with F equal to 12. This features graph is then fed into a convolution operation.
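The construction of the 12-dimensional features graph can be sketched as follows. As an assumption, the sketch uses the DGCNN-style edge feature $(x_i, x_j - x_i)$ from [48], which gives $3 + 3 = 6$ values per edge in each space; the exact edge-feature function of EDC-Net is not spelled out in this section, and the names `edge_features` and `features_graph` are hypothetical. Neighbor lists (self-loop first) are taken as input, for example from a k-NN search in each space.

```python
from typing import List, Sequence

Vec = Sequence[float]


def edge_features(x: Sequence[Vec], neighbours: Sequence[Sequence[int]]) -> List[List[List[float]]]:
    """For every vertex i and neighbour j, build [x_i, x_j - x_i] (shape N x k x 2d)."""
    graph = []
    for i, nbrs in enumerate(neighbours):
        xi = list(x[i])
        graph.append([xi + [a - b for a, b in zip(x[j], xi)] for j in nbrs])
    return graph


def features_graph(points, eigvals, nbrs_xyz, nbrs_eig) -> List[List[List[float]]]:
    """Concatenate the Euclidean (F = 6) and eigenvalue (F = 6) graphs into F = 12."""
    g_xyz = edge_features(points, nbrs_xyz)
    g_eig = edge_features(eigvals, nbrs_eig)
    return [[e_xyz + e_eig for e_xyz, e_eig in zip(row_xyz, row_eig)]
            for row_xyz, row_eig in zip(g_xyz, g_eig)]


points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
eigvals = [(0.6, 0.3, 0.1), (0.5, 0.4, 0.1), (0.7, 0.2, 0.1)]
nbrs_xyz = [[0, 1], [1, 0], [2, 0]]  # k = 2, self-loop first (hypothetical neighbour lists)
nbrs_eig = [[0, 1], [1, 0], [2, 0]]
g = features_graph(points, eigvals, nbrs_xyz, nbrs_eig)
print(len(g), len(g[0]), len(g[0][0]))  # 3 2 12
```

For the self-loop entry the difference part is zero, so that entry carries only the point's own coordinates and eigenvalues.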

3.1.3. Primary Capsules

Primary capsules in EDC-Net are the first capsule layer. Primary capsules transfer the features from the previous convolution layer into the capsules via convolutional filtering.

The main task of primary capsules is to transform the output of the previous convolution into an estimated pose and activation of each capsule. The primary capsule layer performs four sets of 1D convolutions; the input of each convolution has 64 channels and its output has 16 channels. After max-pooling, the output of the primary capsules therefore consists of four 16-dimensional capsules for each of the N points in the point cloud. The activation values from a convolution operation in capsule networks are treated as coefficients that influence the information passed between capsules. Each capsule represents properties of the point cloud from a different aspect, based on the pose and appearance information of the points, and the length of a capsule represents the probability that these properties are present. In EDC-Net, an attention operation is applied to the primary capsules before the routing mechanism of the EdgeCaps module.

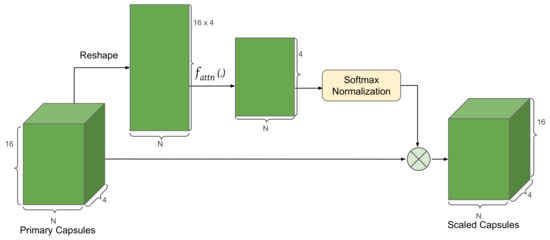

3.1.4. Attention Module

In EDC-Net, primary capsules are extracted based on the features of each point of the point cloud. If the routing mechanism were applied directly, the activations of the EdgeCaps module would depend on the global features of the primary capsules, which is not appropriate for point-wise segmentation. Therefore, we apply an attention function to the primary capsules and then concatenate the output of the attention module with the reshaped features of the previous convolution layer, combining global and local features. The attention module in EDC-Net is inspired by [49] and is a two-layer fully connected neural network. The primary capsules (four 16-dimensional capsules per point) are reshaped so that the attention function can be applied to them; applying the attention function yields features of shape $N \times 4$, one value per capsule, since we defined four capsules in the primary capsules. We apply a point-wise Softmax normalization to generate the final attention values of each capsule. Finally, we obtain the scaled capsules by multiplying the normalized attention values with the primary capsules. Figure 2 illustrates the structure of the attention module.
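A minimal sketch of the capsule attention step for a single point is given below. It assumes a hidden width of 32, a ReLU between the two fully connected layers, and the four 16-dimensional capsules flattened into one 64-dimensional input; these choices, the random initialization and the class name `CapsuleAttention` are hypothetical, since only the two-layer structure, the Softmax over the four capsules and the final rescaling are specified in the text.

```python
import math
import random
from typing import List, Sequence, Tuple


def linear(x: Sequence[float], weight: List[List[float]], bias: List[float]) -> List[float]:
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]


def softmax(z: Sequence[float]) -> List[float]:
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


class CapsuleAttention:
    """Two-layer fully connected attention over the primary capsules of one point (hypothetical sizes)."""

    def __init__(self, num_caps: int = 4, cap_dim: int = 16, hidden: int = 32, seed: int = 0):
        rng = random.Random(seed)
        in_dim = num_caps * cap_dim
        self.w1 = [[rng.gauss(0.0, 0.1) for _ in range(in_dim)] for _ in range(hidden)]
        self.b1 = [0.0] * hidden
        self.w2 = [[rng.gauss(0.0, 0.1) for _ in range(hidden)] for _ in range(num_caps)]
        self.b2 = [0.0] * num_caps

    def __call__(self, capsules: List[List[float]]) -> Tuple[List[List[float]], List[float]]:
        flat = [v for cap in capsules for v in cap]                      # 4 x 16 -> 64
        hidden = [max(0.0, h) for h in linear(flat, self.w1, self.b1)]  # FC + ReLU (assumed)
        scores = linear(hidden, self.w2, self.b2)                        # one score per capsule
        attn = softmax(scores)                                           # point-wise Softmax
        scaled = [[a * v for v in cap] for a, cap in zip(attn, capsules)]
        return scaled, attn


rng = random.Random(1)
capsules = [[rng.uniform(-1, 1) for _ in range(16)] for _ in range(4)]
scaled, attn = CapsuleAttention()(capsules)
print(len(scaled), len(scaled[0]), round(sum(attn), 6))  # 4 16 1.0
```

The scaled capsules keep the shape of the primary capsules, which is what allows them to be concatenated afterwards with the reshaped convolution features.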

Figure 2.

The structure of the attention module. ⊗ stands for multiplication.

3.1.5. Routing Mechanism

A routing mechanism in capsule networks is an operation for updating the weights between capsules, so that the properties captured by capsules can be propagated to suitable capsules, where different capsules reflect the properties of the point cloud from different aspects. The task of a routing mechanism is to define the global coherence of the features, which it does by learning part-whole relationships.

We concatenate the output of the primary capsules after the attention module with the output of the convolutional features. The convolutional layer features are local features, and the features from the primary capsules after the attention module are global features. This concatenation is required to achieve point-wise segmentation results through the routing mechanism. The output of the first convolution after max-pooling has 64 channels per point, and the output of the primary capsules after max-pooling consists of four 16-dimensional capsules per point, a shape that is maintained after the attention module. Accordingly, we reshape the convolution output into four 16-dimensional vectors per point in order to concatenate it with the features from the attention module. The features for the routing mechanism are therefore defined by $f = \{f_1, \dots, f_n\}$ with n equal to 8, the number of latent capsules in the routing mechanism: 4 capsules for the local features and 4 capsules for the global features. Each $f_i$ is the 16-dimensional feature of one latent capsule. The routing mechanism determines the connections between the input and output capsules of the process. In EDC-Net, we set the number of EdgeCaps capsules to 2, since we have two classes, edge and non-edge points. In the routing mechanism, coupling coefficients $C = \{c_{ij}\}$ connect the latent capsules and the EdgeCaps capsules, where $i \in \{1, \dots, n\}$ and $j \in \{1, 2\}$; for each latent capsule i, the coupling coefficients sum to one, $\sum_j c_{ij} = 1$. The coefficient values are computed by a routing softmax over logits $b_{ij}$, where $b_{ij}$ relates the i-th latent capsule to the j-th EdgeCaps capsule. The logits $b_{ij}$ are initialized with zero at the first iteration of the dynamic routing mechanism and are then refined iteratively by evaluating the agreement between the capsules. Hence, the coupling coefficients are computed as $c_{ij} = \exp(b_{ij}) / \sum_{z} \exp(b_{iz})$, where z iterates over the EdgeCaps capsules.
The logits $b_{ij}$ are the log prior probabilities that latent capsule i should be coupled to EdgeCaps capsule j, and they are updated by the iterative routing process. From the features f, we obtain the prediction vectors $\hat{u}_{j|i}$ for each capsule j, and each capsule j receives their weighted sum with the coupling coefficients, $s_j = \sum_i c_{ij} \hat{u}_{j|i}$, which connects the capsules. The prediction vectors represent the similarity of features with respect to the features of each point. To push the lengths of similar prediction vectors towards 1 and those of dissimilar ones towards 0, we apply the non-linear squashing function of [23], $v_j = \frac{\|s_j\|^2}{1 + \|s_j\|^2} \frac{s_j}{\|s_j\|}$, where $v_j$ is the vector output of capsule j and $s_j$ is its total input. Therefore, at each iteration of the routing mechanism, the vector length shrinks to nearly zero for dissimilar features and grows to nearly one for similar features. This is achieved by updating the routing logits as $b_{ij} \leftarrow b_{ij} + \hat{u}_{j|i} \cdot v_j$, i.e., the dot product of $\hat{u}_{j|i}$ and $v_j$. The routing mechanism groups similar features, and the EdgeCaps module then returns the activations of the two classes as a point-wise segmentation that extracts edge and non-edge points.
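The routing loop can be sketched for a single point as below, following the routing-by-agreement procedure of [23]: zero-initialized logits, a softmax over the output capsules, the squashing function, and the dot-product logit update. As assumptions, the prediction vectors $\hat{u}_{j|i}$ are taken as given (how they are produced from the eight latent capsules is left abstract), the number of iterations defaults to 3 as in [23], and the function names are hypothetical. The lengths of the two output vectors can be read as the edge and non-edge scores.

```python
import math
import random
from typing import List, Sequence, Tuple

Vector = List[float]


def squash(s: Sequence[float], eps: float = 1e-9) -> Vector:
    """v = (|s|^2 / (1 + |s|^2)) * s / |s|: short vectors -> ~0, long vectors -> length just below 1."""
    sq_norm = sum(x * x for x in s)
    scale = sq_norm / (1.0 + sq_norm) / math.sqrt(sq_norm + eps)
    return [scale * x for x in s]


def softmax(logits: Sequence[float]) -> Vector:
    m = max(logits)
    exps = [math.exp(b - m) for b in logits]
    total = sum(exps)
    return [e / total for e in exps]


def dynamic_routing(u_hat: List[List[Vector]], iterations: int = 3) -> Tuple[List[Vector], List[Vector]]:
    """Routing-by-agreement; u_hat[i][j] is the prediction vector from latent capsule i to output capsule j."""
    n_in, n_out, dim = len(u_hat), len(u_hat[0]), len(u_hat[0][0])
    b = [[0.0] * n_out for _ in range(n_in)]  # logits b_ij start at zero
    for _ in range(iterations):
        c = [softmax(row) for row in b]  # coupling coefficients; each row sums to 1
        v = []
        for j in range(n_out):
            s_j = [sum(c[i][j] * u_hat[i][j][d] for i in range(n_in)) for d in range(dim)]
            v.append(squash(s_j))
        for i in range(n_in):  # agreement update: b_ij += u_hat_{j|i} . v_j
            for j in range(n_out):
                b[i][j] += sum(x * y for x, y in zip(u_hat[i][j], v[j]))
    return v, c


rng = random.Random(7)
u_hat = [[[rng.gauss(0.0, 0.5) for _ in range(16)] for _ in range(2)] for _ in range(8)]  # 8 latent -> 2 classes
v, c = dynamic_routing(u_hat)
lengths = [math.sqrt(sum(x * x for x in v_j)) for v_j in v]  # edge / non-edge scores
print([round(length, 3) for length in lengths])
```

Every output length stays strictly below one because of the squashing function, so the two lengths behave like independent class scores rather than a normalized distribution.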

3.1.6. EdgeCaps

Our proposed EdgeCaps is, to the best of our knowledge, the first use of a capsule network architecture for edge detection of point clouds in the literature. We set up a voting agreement hierarchy similar to [23] and embedded it into EDC-Net to build a segmentation network. Our model is built upon dynamic routing between capsules and treats the capsules as a probabilistic model capable of inferring visual entities based on the probabilistic vectors of the features. EdgeCaps captures the edge points of a given point cloud and exploits this information through a recursive procedure to derive the class memberships (edge, non-edge) of individual points. Our contribution to the capsule network is that we do not feed the primary capsules' activations directly into the routing process; instead, we apply an attention module to the primary capsules and concatenate them with the features of the previous convolution layer in order to combine local and global features for point-wise segmentation through the EdgeCaps module. This modification also allows us to operate on point clouds of various sizes. EdgeCaps returns the capsule activations of two classes, edge and non-edge, based on the voting agreement hierarchy of the dynamic routing between capsules. Another contribution of this work is that we do not use a margin or reconstruction loss; instead, we define a specific loss function in Section 3.2 that focuses solely on the point-wise edge segmentation problem.

3.2. Loss Function

EDC-Net combines two weighted loss functions, an edge loss and a segmentation loss, in order to classify edge and non-edge points while emphasizing the extraction of edges from a point cloud. The details of each loss function are as follows:

3.2.1. Edge Loss

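The edge loss is measured in terms of the Chamfer distance (CD) [50] between two point clouds, the ground-truth edges $E$ and the predicted edges $\hat{E}$:

$$L_{edge} = d_{CD}(E, \hat{E}) = \sum_{e \in E} \min_{\hat{e} \in \hat{E}} \|e - \hat{e}\|_2^2 + \sum_{\hat{e} \in \hat{E}} \min_{e \in E} \|\hat{e} - e\|_2^2$$

where $E$ is the set of ground-truth edges in a given point cloud and contains $N_e$ points, $E = \{e_1, \dots, e_{N_e}\}$. Each point of $E$ comprises three coordinates, $e_i = (x_i, y_i, z_i) \in \mathbb{R}^3$. Furthermore, $\hat{E}$ is the set of edge points predicted by the EDC-Net model for the given point cloud; $\hat{E}$ contains $\hat{N}_e$ points, $\hat{E} = \{\hat{e}_1, \dots, \hat{e}_{\hat{N}_e}\}$, and each point of $\hat{E}$ contains three coordinates, $\hat{e}_j = (\hat{x}_j, \hat{y}_j, \hat{z}_j) \in \mathbb{R}^3$. For the first summation, each point in the ground-truth point cloud $E$ is matched with its nearest point in the predicted point cloud $\hat{E}$; the second summation performs the same process from $\hat{E}$ to $E$. $E$ and $\hat{E}$ do not necessarily contain the same number of points. The Chamfer distance is differentiable and computationally efficient.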
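For reference, here is a brute-force Chamfer distance between ground-truth and predicted edge sets in plain Python. It follows the common formulation of [50], summing squared nearest-neighbor distances in both directions (some implementations average each direction instead). The sketch only evaluates the value; during training the loss would be computed on tensors inside a deep learning framework. The function names are hypothetical.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def squared_distance(a: Point, b: Point) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def chamfer_distance(gt: Sequence[Point], pred: Sequence[Point]) -> float:
    """Symmetric Chamfer distance: summed squared nearest-neighbour distances in both directions.

    The two sets may have different sizes. Brute force, O(|gt| * |pred|).
    """
    if not gt or not pred:
        raise ValueError("both point sets must be non-empty")
    forward = sum(min(squared_distance(e, p) for p in pred) for e in gt)   # gt -> pred
    backward = sum(min(squared_distance(p, e) for e in gt) for p in pred)  # pred -> gt
    return forward + backward


gt_edges = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
pred_edges = [(0.0, 0.0, 0.0)]
print(chamfer_distance(gt_edges, pred_edges))  # 1.0
```

The missed ground-truth point at $(1, 0, 0)$ contributes its squared distance to the nearest prediction, while the single correct prediction contributes nothing.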

3.2.2. Segmentation Loss

We use a class-weighted binary cross-entropy loss function for the segmentation task, with two classes: edge and non-edge. In almost all point cloud shapes, the number of edge points is much lower than the number of non-edge points, so class weighting is beneficial for balancing the two classes. The weight $w_c$ of each class is computed from $f_c$, the frequency of class c, and $F$, the total frequency of all the classes.

The class-weighted binary cross-entropy is then calculated as:

$$L_{seg} = -\sum_{c=1}^{M} w_c \, y_c \log(\hat{y}_c)$$

where $y$ is the label, $\hat{y}$ is the predicted probability and $w_c$ is the weight of each class; in this point-wise segmentation process there are two classes, edge and non-edge, hence M is equal to 2.
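A sketch of a class-weighted cross-entropy for the two-class case follows. The exact weighting formula is not reproduced here, so the sketch assumes the common "balanced" inverse-frequency choice $w_c = F / (M \cdot f_c)$ and averages the loss over points; both are assumptions, as are the function names and the label encoding (1 = edge, 0 = non-edge).

```python
import math
from typing import List, Sequence


def balanced_class_weights(labels: Sequence[int], num_classes: int = 2) -> List[float]:
    """ASSUMED weighting: w_c = F / (M * f_c), with f_c the count of class c and F the total count."""
    total = len(labels)
    counts = [0] * num_classes
    for y in labels:
        counts[y] += 1
    return [total / (num_classes * n_c) if n_c > 0 else 0.0 for n_c in counts]


def weighted_cross_entropy(labels: Sequence[int], probs: Sequence[Sequence[float]],
                           weights: Sequence[float], eps: float = 1e-7) -> float:
    """Mean over points of -sum_c w_c * y_c * log(p_c); with one-hot y only the true class contributes."""
    loss = 0.0
    for y, p in zip(labels, probs):
        loss -= weights[y] * math.log(max(p[y], eps))
    return loss / len(labels)


labels = [1, 0, 0, 0]                     # hypothetical: 1 = edge, 0 = non-edge
weights = balanced_class_weights(labels)  # the rare edge class gets the larger weight
probs = [[0.5, 0.5]] * len(labels)        # an uninformative prediction
print(weights)                            # [0.6666666666666666, 2.0]
print(weighted_cross_entropy(labels, probs, weights))
```

With these weights each class contributes equally in total, so an uninformative prediction costs $\ln 2$ per point no matter how rare the edge points are.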

3.2.3. Total Loss

The training objective of the EDC-Net model combines the two aforementioned loss functions:

$$L_{total} = \alpha L_{edge} + \beta L_{seg}$$

where $L_{edge}$ is the edge loss, $L_{seg}$ is the segmentation loss, and $\alpha$ and $\beta$ are the weights of the two losses, chosen to place more emphasis on the correct extraction of edge points during training. In our experiments, $\alpha$ and $\beta$ are set to fixed values; Section 4.5 describes the reason for selecting them.

3.3. Training Process

We first trained EDC-Net in a fully-supervised manner on the ABC dataset [35] using the provided labels. Since ABC [35] is the only public dataset available for point cloud edge detection, we then boosted the training of the EDC-Net model in a weakly-supervised fashion, as described in Section 3.3.1. In this stage, we used samples of the ShapeNet dataset [34], annotated their edge points using the implementation of [12], and transferred the learning of the model trained on ABC [35].

3.3.1. Weakly-Supervised Transfer Learning

Transfer learning is the concept of fine-tuning pre-trained models on other target data: all new inputs are projected through a pre-trained model. For instance, given a pre-trained model function $f$, we attempt to learn a new function $g$. To this end, we simplify $g$ as $g(x) = h(f(x))$; in this way, $h$ perceives all the data through $f$. In this work, we first trained EDC-Net on the ABC dataset [35] and then used it as a pre-trained model. Afterwards, to improve the performance of the model on various types of shapes, we fine-tuned it on ShapeNet [34] (the datasets are detailed in Section 4.1). Since no edge detection ground truth is available for ShapeNet, we used [12] as a baseline edge detection technique (its implementation is publicly available [51]). The edges detected by this technique are not perfectly precise; therefore, this stage is considered weakly-supervised learning, since the model is trained with these imprecise labels.
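The baseline [12] labels edges by analyzing the eigenvalues of the covariance matrix of each point's k nearest neighbors. The sketch below shows how weak labels of this kind could be produced, assuming a surface-variation criterion $\lambda_{min} / (\lambda_1 + \lambda_2 + \lambda_3)$ with a hypothetical threshold and k; it is an illustration of the idea, not the code released in [51].

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def sym3_eigenvalues(m: Sequence[Sequence[float]]) -> Tuple[float, float, float]:
    """Eigenvalues of a symmetric 3x3 matrix in ascending order (closed-form trigonometric method)."""
    p1 = m[0][1] ** 2 + m[0][2] ** 2 + m[1][2] ** 2
    if p1 == 0.0:  # matrix is already diagonal
        a, b, c = sorted((m[0][0], m[1][1], m[2][2]))
        return a, b, c
    q = (m[0][0] + m[1][1] + m[2][2]) / 3.0
    p = math.sqrt((sum((m[i][i] - q) ** 2 for i in range(3)) + 2.0 * p1) / 6.0)
    # B = (A - qI) / p
    b = [[(m[i][j] - (q if i == j else 0.0)) / p for j in range(3)] for i in range(3)]
    det_b = (b[0][0] * (b[1][1] * b[2][2] - b[1][2] * b[2][1])
             - b[0][1] * (b[1][0] * b[2][2] - b[1][2] * b[2][0])
             + b[0][2] * (b[1][0] * b[2][1] - b[1][1] * b[2][0]))
    r = max(-1.0, min(1.0, det_b / 2.0))  # clamp against rounding error
    phi = math.acos(r) / 3.0
    largest = q + 2.0 * p * math.cos(phi)
    smallest = q + 2.0 * p * math.cos(phi + 2.0 * math.pi / 3.0)
    return smallest, 3.0 * q - largest - smallest, largest


def covariance(pts: Sequence[Point]) -> List[List[float]]:
    """Population covariance matrix of a set of 3D points."""
    n = len(pts)
    mean = [sum(p[i] for p in pts) / n for i in range(3)]
    return [[sum((p[i] - mean[i]) * (p[j] - mean[j]) for p in pts) / n
             for j in range(3)] for i in range(3)]


def knn(points: Sequence[Point], idx: int, k: int) -> List[int]:
    """Indices of the k nearest neighbors of points[idx] (brute force, includes idx itself)."""
    c = points[idx]
    order = sorted(range(len(points)),
                   key=lambda j: sum((points[j][d] - c[d]) ** 2 for d in range(3)))
    return order[:k]


def weak_edge_labels(points: Sequence[Point], k: int = 10,
                     threshold: float = 0.05) -> List[int]:
    """Hypothetical weak labeler: 1 (edge) if surface variation exceeds threshold, else 0."""
    labels = []
    for i in range(len(points)):
        nbrs = [points[j] for j in knn(points, i, k)]
        lo, mid, hi = sym3_eigenvalues(covariance(nbrs))
        total = lo + mid + hi
        variation = lo / total if total > 0 else 0.0
        labels.append(1 if variation > threshold else 0)
    return labels


# Two perpendicular planes meeting along the line x = 0, z = 0
pts = [(float(x), float(y), 0.0) for x in range(5) for y in range(5)]
pts += [(0.0, float(y), float(z)) for y in range(5) for z in range(1, 5)]
labels = weak_edge_labels(pts, k=10, threshold=0.05)
```

Points on the fold get a noticeably non-zero smallest eigenvalue, while points inside a flat region get one close to zero. Such labels are only approximately correct, which is why training on them is treated as weak supervision.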

4. Experimental Results

4.1. Dataset

We performed a statistical analysis over the ABC [35] and ShapeNet [34] datasets. ABC [35] is a large-scale CAD dataset containing ground-truth annotations for edge points. The ABC ground-truth classification was produced using the vertices associated with sharp features, and the sharp feature labels of the ABC dataset are based on the distance of each point to the closest feature line of the point cloud. To train a point-wise segmentation model, we required hard labels that classify points as edge or non-edge. Therefore, we set a threshold $\tau = 0.1$: if the distance $d$ of a point to the closest feature line was smaller than the threshold ($d < \tau$), the point was labeled as an edge point; otherwise ($d \geq \tau$), it was labeled as a non-edge point. The threshold $\tau$ is not a sensitive hyperparameter for training, since it is a parameter for setting up the dataset, and the same value was applied to the training, validation and test sets of the ABC dataset.
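The labeling rule above can be sketched as follows, assuming the per-point distances to the closest feature line are already available as a list; the function names are hypothetical. Note that a point with $d = \tau$ is labeled non-edge.

```python
from typing import List, Sequence

TAU = 0.1  # threshold used for the ABC dataset


def hard_edge_labels(distances: Sequence[float], tau: float = TAU) -> List[int]:
    """Label a point 1 (edge) if its distance d to the closest feature line is < tau, else 0."""
    return [1 if d < tau else 0 for d in distances]


def edge_fraction(labels: Sequence[int]) -> float:
    """Share of edge points; usually small, which motivates the class-weighted loss."""
    return sum(labels) / len(labels) if labels else 0.0


labels = hard_edge_labels([0.0, 0.05, 0.1, 0.3])
print(labels, edge_fraction(labels))
```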

The ABC dataset contains 81,920 high-resolution annotated point clouds for the sharp feature detection task. We split it into 57,344 point clouds for training (70%), 8192 for validation (10%) and 16,384 for testing (20%). Each point cloud provided by the ABC dataset contains 4096 points; in our experiments, we sampled 1024 and 2048 points from each point cloud via uniform point sampling and then transferred the annotations to the sampled points.
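The split sizes follow directly from the 70/10/20 proportions, since 81,920 is divisible by 10. The sketch below reproduces them; the seeded random shuffle is an assumption for illustration, as the text does not state how point clouds were assigned to each subset.

```python
import random
from typing import List, Tuple


def split_indices(n: int, seed: int = 0) -> Tuple[List[int], List[int], List[int]]:
    """Hypothetical 70/10/20 train/validation/test split of n sample indices."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)  # assumed random assignment
    n_train = n * 7 // 10
    n_val = n // 10
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]


train, val, test = split_indices(81920)
print(len(train), len(val), len(test))
```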

For the weakly-supervised learning process, we used ShapeNetCore.v2, a subset of the full ShapeNet [34] dataset. ShapeNetCore.v2 contains 51,127 pre-aligned 3D shapes from 55 common object categories, which are split into 35,708 (70%) shapes for training, 5158 (10%) shapes for validation and 10,261 (20%) shapes for testing.

In our experiments, we sampled 1024 and 2048 points from ShapeNet models. We followed the iterative farthest point sampling (FPS) configuration of [7] to sample points uniformly from the point clouds in both the ABC and ShapeNet datasets.
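Iterative farthest point sampling, as used in PointNet++ [7], repeatedly picks the point farthest from all points selected so far. A minimal pure-Python sketch follows; starting from a fixed index is an assumption made to keep the example deterministic (implementations often start from a random point), and real pipelines run a vectorized GPU version.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def sq(a: Point, b: Point) -> float:
    """Squared Euclidean distance."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def farthest_point_sampling(points: Sequence[Point], m: int, start: int = 0) -> List[int]:
    """Return indices of m points chosen by iterative farthest point sampling."""
    n = len(points)
    if not 0 < m <= n:
        raise ValueError("m must be in 1..len(points)")
    selected = [start]
    # distance from every point to its nearest already-selected point
    dist = [sq(p, points[start]) for p in points]
    while len(selected) < m:
        nxt = max(range(n), key=dist.__getitem__)  # farthest remaining point
        selected.append(nxt)
        for i in range(n):
            d = sq(points[i], points[nxt])
            if d < dist[i]:
                dist[i] = d
    return selected


pts = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
print(farthest_point_sampling(pts, 3))
```

Each iteration costs O(n), so sampling m points costs O(n·m); the result covers the shape more evenly than uniform random sampling.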

4.2. Implementation Details

We trained the EDC-Net model with the Adam optimizer [52] with a momentum of 0.9, and decreased the learning rate with cosine annealing down to a minimum value. Cosine annealing is a learning rate scheduler that starts with a large learning rate, decreases it relatively rapidly to a minimum value, and then increases it rapidly again [53]. We also applied weight-decay regularization to reduce overfitting in our network. In all experiments, the number of neighbors (k) used to build the feature graph was 20 when we sampled 1024 points and 40 when we sampled 2048 points. We performed three iterations of the dynamic routing procedure between capsules. The batch size was 32 during training and 16 during testing. We trained for 300 epochs on ABC and for 200 epochs on ShapeNet in the weakly-supervised transfer learning experiments.
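The cosine annealing schedule with warm restarts from [53] sets the learning rate to $\eta_t = \eta_{min} + \tfrac{1}{2}(\eta_{max} - \eta_{min})(1 + \cos(\pi t / T))$ within each period of $T$ steps. The sketch below implements that formula; the learning rate values and period are hypothetical, since they are not given here. PyTorch offers `torch.optim.lr_scheduler.CosineAnnealingWarmRestarts` for the same purpose, though which scheduler class EDC-Net uses is not stated.

```python
import math


def cosine_annealing_lr(step: int, period: int, lr_max: float, lr_min: float) -> float:
    """SGDR-style cosine annealing: decay from lr_max to lr_min, restarting every `period` steps."""
    if period <= 0:
        raise ValueError("period must be positive")
    t = step % period  # position inside the current cycle (warm restart at each multiple)
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * t / period))


# Hypothetical values: lr_max = 1e-3, lr_min = 1e-5, restart every 100 steps
for s in (0, 25, 50, 75, 99, 100):
    print(s, cosine_annealing_lr(s, 100, 1e-3, 1e-5))
```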

All the experiments in this paper were conducted on a system with a single GeForce RTX 2080 Ti GPU, and the EDC-Net implementation is based on the PyTorch library.

4.3. Edge Detection Results

We used the $F_1$ score as an evaluation measure to quantitatively compare the results of the different techniques:

$$F_1 = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

where precision is the proportion of points detected as edges by the EDC-Net model that are correct, and recall is the proportion of ground-truth edge points that are detected. Precision and recall are computed as:

$$\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{Recall} = \frac{TP}{TP + FN}$$

where $TP$ (true positives) is the number of correctly detected edge points; $FP$ (false positives) is the number of points wrongly detected as edges; and $FN$ (false negatives) is the number of false rejections, i.e., edge points in the ground truth that the EDC-Net model does not detect as edges. In some references, precision and recall are called correctness and completeness, respectively [54].
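The metrics above can be computed from per-point binary labels as in the following sketch (1 = edge, 0 = non-edge); returning 0.0 when a denominator is zero is our convention, not one stated in the text.

```python
from typing import Sequence, Tuple


def precision_recall_f1(gt: Sequence[int], pred: Sequence[int]) -> Tuple[float, float, float]:
    """Point-wise precision, recall and F1 for the edge class."""
    if len(gt) != len(pred):
        raise ValueError("label sequences must have equal length")
    tp = sum(1 for g, p in zip(gt, pred) if g == 1 and p == 1)  # correctly detected edges
    fp = sum(1 for g, p in zip(gt, pred) if g == 0 and p == 1)  # wrongly detected edges
    fn = sum(1 for g, p in zip(gt, pred) if g == 1 and p == 0)  # missed edges
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


print(precision_recall_f1([1, 1, 0, 0, 1], [1, 0, 1, 0, 1]))
```

In this example there are two true positives, one false positive and one false negative, so precision, recall and F1 are all 2/3.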

We conducted extensive experiments to evaluate the performance of our proposed EDC-Net; the quantitative results are summarized in Table 1. The models denoted by WSL in Table 1 were additionally trained on the ShapeNet dataset in a weakly-supervised fashion, as explained in Section 3.3.1; the remaining models were trained only on ABC samples. We trained EC-Net [21] with its publicly available code [55] on the datasets described in Section 4.1, not on the dataset used by the authors in the original paper [21].

Table 1.

Results on ABC [35] and ShapeNet [34] samples. WSL stands for weakly-supervised learning; P and R denote precision and recall, respectively. The highest score in each row is shown in bold.

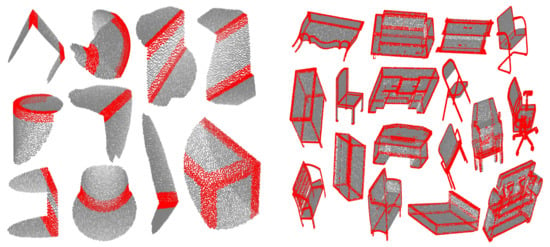

Figure 3 shows qualitative results of the EDC-Net model on samples from the ABC [35] and ShapeNet [34] datasets.

Figure 3.

Edge detection results on ABC [35] (left) and ShapeNet [34] (right) samples. Detected edge points are shown in red and non-edge points in gray.

4.4. Robustness to Noise

Point clouds captured by scanners in real scenes often contain a certain amount of noise. To evaluate EDC-Net on noisy point clouds, we applied perturbations of various noise levels to point clouds from ABC: the points were randomly perturbed along the surface normal direction with a scale factor, and we tested five different values of this factor. The results of these experiments are summarized in Table 2. Precision, recall and F1 scores were computed as described in Section 4.3.
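A minimal sketch of perturbing points along their surface normals is shown below. It assumes Gaussian displacements whose standard deviation plays the role of the scale factor; the actual distribution and the five tested values are not given here, so `sigma` is hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple

Vec = Tuple[float, float, float]


def perturb_along_normals(points: Sequence[Vec], normals: Sequence[Vec],
                          sigma: float, seed: int = 0) -> List[Vec]:
    """Shift each point along its unit normal by a random N(0, sigma^2) offset (assumed model)."""
    rng = random.Random(seed)
    out = []
    for p, n in zip(points, normals):
        norm = math.sqrt(sum(c * c for c in n))
        if norm == 0.0:
            raise ValueError("normals must be non-zero")
        eps = rng.gauss(0.0, sigma)  # signed displacement along the normal
        out.append(tuple(pc + eps * nc / norm for pc, nc in zip(p, n)))
    return out


pts = [(0.0, 0.0, 0.0), (1.0, 2.0, 0.0)]
nrm = [(0.0, 0.0, 2.0), (0.0, 0.0, 1.0)]  # normals need not be unit length
noisy = perturb_along_normals(pts, nrm, sigma=0.01, seed=1)
print(noisy)
```

Because the displacement is restricted to the normal direction, points on the plane z = 0 keep their x and y coordinates and only move off the surface.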

Table 2.

Performance of EDC-Net on the ABC dataset under different levels of noise perturbation.

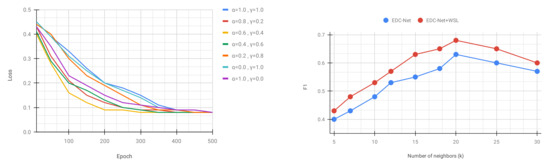

4.5. Ablation Study

We performed an ablation study on the two hyper-parameters $\alpha$ and $\beta$ used to compute the loss function during training (as explained in Section 3.2). The results are depicted in Figure 4 (left). All the experiments in this ablation study were performed on the validation set of the ABC dataset. This study demonstrates the importance of weighting the losses during training and shows how an appropriate weighting makes the loss converge earlier.

Figure 4.

(Left) Training loss of EDC-Net on the ABC dataset for various values of $\alpha$ and $\beta$. (Right) Accuracy of EDC-Net on the ShapeNet dataset for various numbers of neighbors (k), with and without WSL (weakly-supervised learning), illustrating the importance of WSL for improving accuracy on the ShapeNet dataset.

Furthermore, we performed another ablation study to analyze the effectiveness of the weakly-supervised transfer learning approach and to determine an adequate number of neighbors (k) for EDC-Net. In all these experiments, we sampled 1024 points from each point cloud, trained EDC-Net with different numbers of neighbors, and evaluated the trained models on the test set of the ShapeNet dataset. EDC-Net refers to models trained only on the ABC dataset, and EDC-Net+WSL refers to models additionally trained with weakly-supervised learning on the imprecise labels of the ShapeNet dataset. The results are summarized in Figure 4 (right). This study supports the number of neighbors used in our experiments and shows that weakly-supervised learning boosts the performance of EDC-Net on the ShapeNet dataset.

4.6. Complexity Analysis

We analyzed the complexity of EDC-Net based on two criteria: (1) the running time of point classification; (2) the model size. The results are summarized in Table 3.

Table 3.

Complexity analysis of EDC-Net.

Across all experiments, EDC-Net took about 0.3 s on average to classify the points of one point cloud. In comparison, the average running times of EC-Net [21] and PIE-NET [17] were 0.8 s and 0.5 s, respectively.

The EDC-Net model is significantly smaller than the EC-Net [21] model while achieving better performance. This is a notable advantage: EDC-Net is a lightweight model that can fit into devices with limited resources.

5. Discussion

The advantages of our proposed EDC-Net for point cloud edge detection stem from its capsule network design, which disentangles the geometric features of point clouds. Furthermore, our proposed loss function improves the training process by emphasizing the prediction of edges, which leads to earlier convergence.

The main contribution of our work is a novel learning framework for detecting point cloud edges that also tackles the lack of annotated data for this task. However, this work still has several limitations. Namely, large-scale point clouds require different implementations and network architectures, and no public dataset is yet available for edge detection in large-scale point clouds. In this work, we considered point clouds of 1024 and 2048 points, which are the most common sampling scales in this community for single-object point clouds. Building a framework that handles multiple objects in large-scale point clouds remains challenging. Therefore, in future work, we plan to apply this technique to large-scale scene point clouds instead of focusing only on single-object CAD models. We also plan to apply unsupervised techniques inspired by [56] to train an edge detection model without ground-truth data.

Overall, the results of our proposed method are promising and constitute a first step towards reliable point cloud edge detection based on capsule networks and weakly-supervised transfer learning. Our work opens a new research path in point cloud edge detection and raises new research questions and directions for further improvement.

6. Conclusions

In this paper, we introduced EDC-Net, a novel framework for edge detection based on capsule network structures. We designed a point-wise segmentation pipeline to classify points as edge or non-edge. We formulated a loss function specifically tailored to extracting edge points in highly unbalanced point clouds, where non-edge points often greatly outnumber edge points. We showed that our proposed loss function significantly boosts the training process in terms of time and accuracy. Moreover, we built a weakly-supervised transfer learning scheme to obtain a flexible and robust pipeline applicable to unlabeled datasets.

We provided extensive experimental results on the ABC and ShapeNet datasets, demonstrating that EDC-Net performs accurately on edge detection tasks and is robust to noisy data. We also showed that our proposed weakly-supervised transfer learning improves performance on the ShapeNet dataset, for which no edge labels are available. The experimental results further demonstrated that EDC-Net is efficient in terms of execution time while maintaining high accuracy. Moreover, EDC-Net has a small model size, which facilitates deploying high-performing models on devices with constrained resources.

Our contribution is not limited to surpassing state-of-the-art edge detection techniques: we also introduced a novel learning approach for edge detection based on capsule network structures. Furthermore, we proposed a new approach that tackles the lack of annotated data for training models in this community.

Author Contributions

Methodology, D.B.; Writing—review and editing, D.B. and M.E.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research was done in the framework of “Geodèsia i Navegació (GEON)” project (reference: 2017 SGR 820) by AGAUR, Generalitat de Catalunya, through the Emerging Research Group.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Ahmed, S.; Tan, Y.; Chew, C.; Mamun, A.; Wong, F. Edge and Corner Detection for Unorganized 3D Point Clouds with Application to Robotic Welding. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 7350–7355.

2. Hackel, T.; Wegner, J.D.; Schindler, K. Contour Detection in Unstructured 3D Point Clouds. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1610–1618.

3. Ni, H.; Lin, X.; Ning, X.; Zhang, J. Edge Detection and Feature Line Tracing in 3D-Point Clouds by Analyzing Geometric Properties of Neighborhoods. Remote Sens. 2016, 8, 710.

4. Kulikajevas, A.; Maskeliūnas, R.; Damaševičius, R.; Ho, E. 3D Object Reconstruction from Imperfect Depth Data Using Extended YOLOv3 Network. Sensors 2020, 20, 2025.

5. Kulikajevas, A.; Maskeliūnas, R.; Damaševičius, R.; Misra, S. Reconstruction of 3D Object Shape Using Hybrid Modular Neural Network Architecture Trained on 3D Models from ShapeNetCore Dataset. Sensors 2019, 19, 1553.

6. Qi, C.; Su, H.; Mo, K.; Guibas, L. PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 652–660.

7. Qi, C.; Yi, L.; Su, H.; Guibas, L. PointNet++: Deep Hierarchical Feature Learning on Point Sets in a Metric Space. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; pp. 5099–5108.

8. Wang, W.; Yu, R.; Huang, Q.; Neumann, U. SGPN: Similarity Group Proposal Network for 3D Point Cloud Instance Segmentation. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 2569–2578.

9. Jaritz, M.; Gu, J.; Su, H. Multi-view PointNet for 3D Scene Understanding. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision Workshop (ICCVW), Seoul, Korea, 27–28 October 2019; pp. 3995–4003.

10. Mo, K.; Zhu, S.; Chang, A.; Yi, L.; Tripathi, S.; Guibas, L.; Su, H. PartNet: A Large-Scale Benchmark for Fine-Grained and Hierarchical Part-Level 3D Object Understanding. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–21 June 2019; pp. 909–918.

11. Guo, Y.; Wang, H.; Hu, Q.; Liu, H.; Liu, L.; Bennamoun, M. Deep learning for 3D point clouds: A survey. arXiv 2019, arXiv:1912.12033.

12. Bazazian, D.; Casas, J.R.; Ruiz-Hidalgo, J. Fast and Robust Edge Extraction in Unorganized Point Clouds. In Proceedings of the 2015 International Conference on Digital Image Computing: Techniques and Applications (DICTA), Adelaide, Australia, 23–25 November 2015; pp. 1–8.

13. Weber, C.; Hahmann, S.; Hagen, H. Sharp Feature Detection in Point Clouds. In Proceedings of the Shape Modeling International Conference, Aix-en-Provence, France, 21–23 June 2010; pp. 175–186.

14. Mineo, C.; Pierce, S.G.; Summan, R. Novel algorithms for 3D surface point cloud boundary detection and edge reconstruction. Comput. Des. Eng. 2018, 6, 81–89.

15. Demarsin, K.; Vanderstraeten, D.; Volodine, T.; Roose, D. Detection of closed sharp edges in point clouds using normal estimation and graph theory. Comput. Aided Des. 2007, 39, 276–283.

16. Bazazian, D.; Casas, J.R.; Ruiz-Hidalgo, J. Segmentation-based Multi-scale Edge Extraction to Measure the Persistence of Features in Unorganized Point Clouds. In Proceedings of the 12th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISAPP), Porto, Portugal, 27 February–1 March 2017; pp. 317–325.

17. Wang, X.; Xu, Y.; Xu, K.; Tagliasacchi, A.; Zhou, B.; Mahdavi-Amiri, A.; Zhang, H. PIE-NET: Parametric Inference of Point Cloud Edges. In Proceedings of the 34th Conference on Neural Information Processing Systems (NeurIPS 2020), Vancouver, BC, Canada, 6–12 December 2020; Volume 33.

18. Himeur, C.; Lejemble, T.; Pellegrini, T.; Paulin, M.; Barthe, L.; Mellado, N. PCEDNet: A Neural Network for Fast and Efficient Edge Detection in 3D Point Clouds. arXiv 2020, arXiv:2011.01630.

19. Matveev, A.; Artemov, A.; Burnaev, E. Geometric Attention for Prediction of Differential Properties in 3D Point Clouds. arXiv 2020, arXiv:2007.02571.

20. Raina, P.; Mudur, S.; Popa, T. Sharpness Fields in Point Clouds using Deep Learning. Comput. Graph. 2019, 78, 37–53.

21. Yu, L.; Li, X.; Fu, C.; Cohen-Or, D.; Heng, P. EC-Net: An Edge-aware Point set Consolidation Network. In Proceedings of the 15th European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 1–17.

22. Raina, P.; Mudur, S.; Popa, T. MLS2: Sharpness Field Extraction Using CNN for Surface Reconstruction. In Proceedings of the 44th Graphics Interface Conference, Toronto, ON, Canada, 8–11 May 2018; pp. 57–66.

23. Sabour, S.; Frosst, N.; Hinton, G. Dynamic Routing Between Capsules. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; pp. 3856–3866.

24. Patrick, M.; Adekoya, A.; Mighty, A.; Edward, B. Capsule networks—A survey. J. King Saud Univ. Comput. Inf. Sci 2019, 1319–1578.

25. Zhao, Y.; Birdal, T.; Deng, H.; Tombari, F. 3D Point-Capsule Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 19–21 June 2019; pp. 1009–1018.

26. Srivastava, N.; Goh, H.; Salakhutdinov, R. Geometric Capsule Autoencoders for 3D Point Clouds. arXiv 2019, arXiv:1912.03310.

27. Bazazian, D.; Nahata, D. DCG-Net: Dynamic Capsule Graph Convolutional Network for Point Clouds. IEEE Access 2020, 8, 188056–188067.

28. Zhao, Y.; Birdal, T.; Lenssen, J.; Menegatti, E.; Guibas, L.; Tombari, F. Quaternion equivariant capsule networks for 3D point clouds. arXiv 2019, arXiv:1912.12098.

29. Cheraghian, A.; Petersson, L. 3DCapsule: Extending the capsule architecture to classify 3D point clouds. In Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa Village, HI, USA, 7–11 January 2019; pp. 1194–1202.

30. Chan, L.; Hosseini, M.S.; Plataniotis, K.N. A comprehensive analysis of weakly-supervised semantic segmentation in different image domains. Int. J. Comput. Vis. 2020, 1–24.

31. Zhuang, F.; Qi, Z.; Duan, K.; Xi, D.; Zhu, Y.; Zhu, H.; Xiong, H.; He, Q. A comprehensive survey on transfer learning. arXiv 2020, arXiv:1911.02685.

32. Hwang, S.; Kim, H.E. Self-transfer learning for fully weakly supervised object localization. arXiv 2016, arXiv:1602.01625.

33. Fang, F.; Xie, Z. Weak Supervision in the Age of Transfer Learning for Natural Language Processing; cs229 Stanford: Stanford, CA, USA, 2019.

34. Chang, A.; Funkhouser, T.; Guibas, L.; Hanrahan, P.; Huang, Q.; Li, Z.; Savarese, S.; Savva, M.; Song, S.; Su, H.; et al. ShapeNet: An Information-Rich 3D Model Repository. arXiv 2015, arXiv:1512.03012.

35. Koch, S.; Matveev, A.; Jiang, Z.; Williams, F.; Artemov, A.; Burnaev, E.; Alexa, M.; Zorin, D.; Panozzo, D. ABC: A Big CAD Model Dataset for Geometric Deep Learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 19–21 June 2019; pp. 9593–9603.

36. Qi, C.; Liu, W.; Wu, C.; Su, H.; Guibas, L. Frustum PointNets for 3D Object Detection from RGB-D Data. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 918–927.

37. Qi, C.; Litany, O.; He, K.; Guibas, L. Deep Hough Voting for 3D Object Detection in Point Clouds. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 9277–9286.

38. Yang, B.; Luo, W.; Urtasun, R. PIXOR: Real-Time 3D Object Detection from Point Clouds. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 7652–7660.

39. Li, J.; Chen, B.; Lee, G. SO-Net: Self-Organizing Network for Point Cloud Analysis. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 9397–9406.

40. Xu, Y.; Fan, T.; Xu, M.; Zeng, L.; Qiao, Y. SpiderCNN: Deep Learning on Point Sets with Parameterized Convolutional Filters. In Proceedings of the 15th European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 90–105.

41. Zhang, Z.; Hua, B.; Yeung, S. ShellNet: Efficient Point Cloud Convolutional Neural Networks using Concentric Shells Statistics. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 1607–1616.

42. Hinton, G.; Krizhevsky, A.; Wang, S. Transforming auto-encoders. In Proceedings of the 21st International Conference on Artificial Neural Networks (ICANN), Espoo, Finland, 14–17 June 2011; pp. 44–51.

43. Bonheur, S.; Štern, D.; Payer, C.; Pienn, M.; Olschewski, H.; Urschler, M. Matwo-capsnet: A multi-label semantic segmentation capsules network. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Shenzhen, China, 13–17 October 2019; pp. 664–672.

44. Survarachakan, S.; Johansen, J.S.; Aarseth, M.; Pedersen, M.A.; Lindseth, F. Capsule Nets for Complex Medical Image Segmentation Tasks. Online Colour and Visual Computing Symposium. 2020. Available online: http://ceur-ws.org/Vol-2688/paper13.pdf (accessed on 23 December 2020).

45. Sun, T.; Wang, Z.; Smith, C.D.; Liu, J. Trace-back along capsules and its application on semantic segmentation. arXiv 2019, arXiv:1901.02920.

46. LaLonde, R.; Bagci, U. Capsules for object segmentation. arXiv 2018, arXiv:1804.04241.

47. LaLonde, R.; Xu, Z.; Irmakci, I.; Jain, S.; Bagci, U. Capsules for biomedical image segmentation. Med. Image Anal. 2020, 68, 101889.

48. Xu, M.; Zhou, Z.; Qiao, Y. Geometry sharing network for 3D point cloud classification and segmentation. arXiv 2019, arXiv:1912.10644.

49. Xinyi, Z.; Chen, L. Capsule graph neural network. In Proceedings of the International Conference on Learning Representations (ICLR), New Orleans, LA, USA, 6–9 May 2019.

50. Liu, M.Y.; Tuzel, O.; Veeraraghavan, A.; Chellappa, R. Fast directional chamfer matching. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), San Francisco, CA, USA, 13–18 June 2010; pp. 1696–1703.

51. Bazazian, D. Edge_Extraction: GitHub Repository. 2015. Available online: https://github.com/denabazazian/Edge_Extraction (accessed on 23 December 2020).

52. Kingma, D.; Ba, J. ADAM: A method for stochastic optimization. In Proceedings of the 3rd International Conference for Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015; pp. 1–15.

53. Loshchilov, I.; Hutter, F. SGDR: Stochastic gradient descent with warm restarts. In Proceedings of the 5th International Conference on Learning Representations (ICLR), Toulon, France, 24–26 April 2017; pp. 1–16.

54. Rutzinger, M.; Rottensteiner, F.; Pfeifer, N. A comparison of evaluation techniques for building extraction from airborne laser scanning. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2009, 2, 11–20.

55. Yu, L. EC-Net: GitHub Repository. 2018. Available online: https://github.com/yulequan/EC-Net (accessed on 23 December 2020).

56. Kosiorek, A.; Sabour, S.; Teh, Y.W.; Hinton, G.E. Stacked capsule autoencoders. In Proceedings of the NeurIPS, Vancouver, BC, Canada, 8–14 December 2019; pp. 15512–15522.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).