Anomalous Event Recognition in Videos Based on Joint Learning of Motion and Appearance with Multiple Ranking Measures

Abstract

1. Introduction

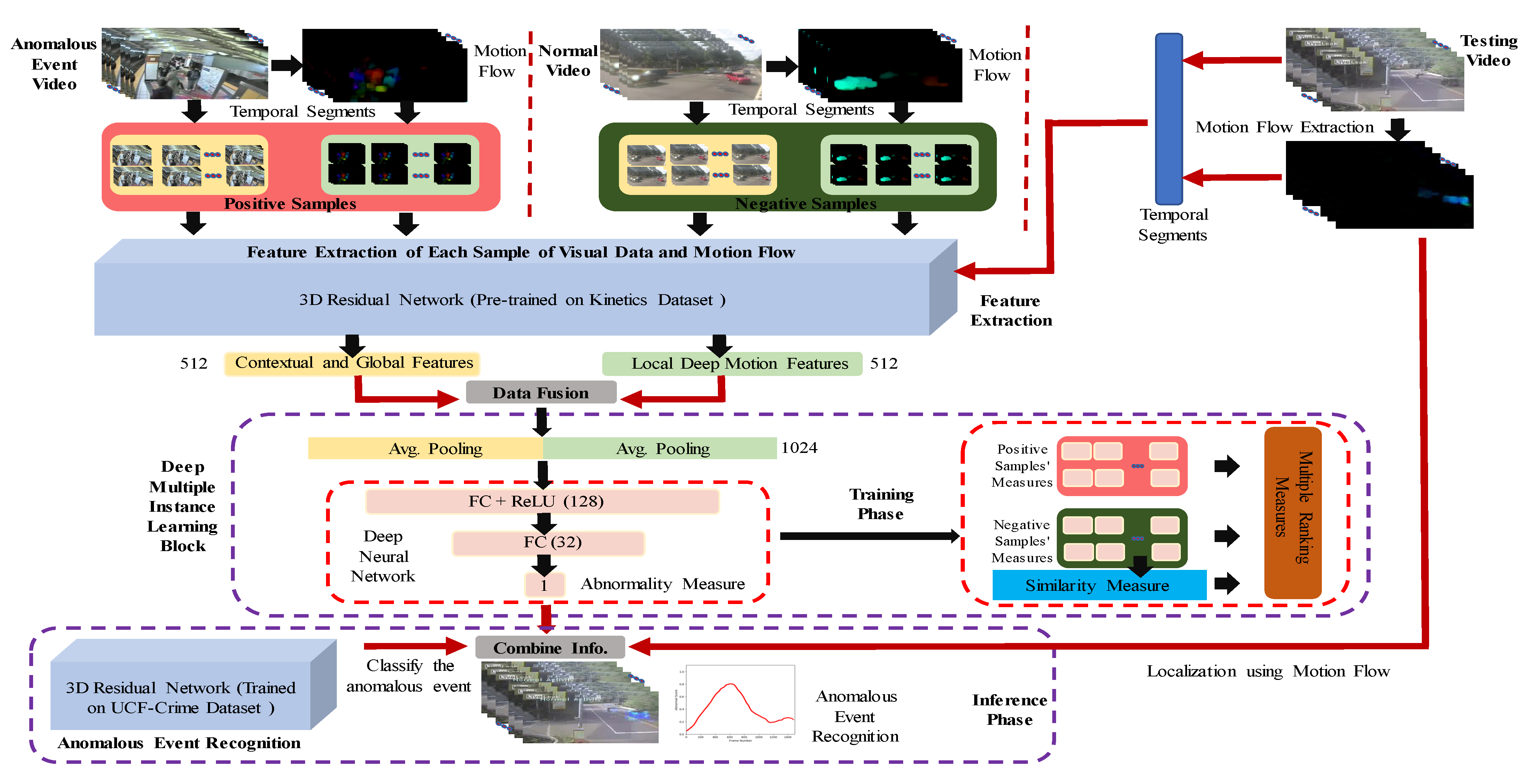

- A pre-trained 3D ResNet is used not only to extract global, context-based features of moving objects from the visual data but also to extract deep local motion-related information from their motion flow maps. The network is trained with joint learning to capture the context dependency, i.e., the relationship between spatio-temporal appearance features and deep motion features.

- A new objective function is proposed for deep multiple instance learning (DMIL) using multiple ranking measures (MRMs). Anomalous events are recognized together with their class (type), and the moving objects involved are localized using motion flow maps.

- The proposed framework has been tested on two recent and challenging datasets: UCF-Crime [14] and ShanghaiTech [5]. On UCF-Crime, our method raises the AUC from 75.41% (the state-of-the-art method [14]) to 81.91%, and it achieves an AUC of 68.50% on ShanghaiTech. The ablation study demonstrates the effectiveness of the proposed framework.
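For reference, the state-of-the-art method [14] that the proposed DMIL objective is compared against trains with a hinge-based MIL ranking loss: each video is a bag of temporal segments, only video-level labels are used, and the top-scoring segment of an anomalous video must outrank the top-scoring segment of a normal video, with temporal-smoothness and sparsity terms added. The Python sketch below implements that baseline loss for one pair of bags. It is not this paper's MRM objective, whose additional ranking measures are not reproduced here; the function name and the default weights are illustrative assumptions.

```python
from typing import Sequence


def mil_ranking_loss(
    anomalous_scores: Sequence[float],
    normal_scores: Sequence[float],
    lambda_smooth: float = 8e-5,  # illustrative weight, not taken from this paper
    lambda_sparse: float = 8e-5,  # illustrative weight, not taken from this paper
) -> float:
    """Baseline MIL ranking loss (after [14]) for one anomalous/normal bag pair.

    Each bag holds the predicted anomaly scores (in [0, 1]) of the temporal
    segments of one video; no segment-level labels are needed.
    """
    if not anomalous_scores or not normal_scores:
        raise ValueError("both bags must contain at least one segment score")

    # Ranking term: the highest-scoring segment of the anomalous video should
    # outrank the highest-scoring segment of the normal video by a margin of 1.
    ranking = max(0.0, 1.0 - max(anomalous_scores) + max(normal_scores))

    # Temporal smoothness: adjacent segments of the anomalous video should
    # receive similar scores.
    smoothness = sum(
        (a - b) ** 2 for a, b in zip(anomalous_scores, anomalous_scores[1:])
    )

    # Sparsity: an anomaly usually occupies only a few segments of the video.
    sparsity = sum(anomalous_scores)

    return ranking + lambda_smooth * smoothness + lambda_sparse * sparsity


anomalous = [0.1, 0.9, 0.2]
normal = [0.1, 0.2, 0.1]
print(mil_ranking_loss(anomalous, normal))
```

In training, this per-pair loss would be averaged over a mini-batch of anomalous/normal bag pairs, with the segment scores produced by the network being optimized.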

2. Related Works

3. The Proposed Framework for Anomalous Event Recognition

3.1. Extraction of Motion Flow Maps

3.2. Extraction of Deep Motion and Appearance Features

3.3. Proposed Joint Learning Model and Multiple Ranking Measures (MRMs)

3.3.1. Data Fusion

3.3.2. Deep Multiple Instance Learning Block

3.3.3. Proposed Multiple Ranking Measures

4. Experimentation

4.1. Datasets

4.2. Implementation Details

4.3. Evaluation Metrics

4.4. Experiment Results and Ablation Study

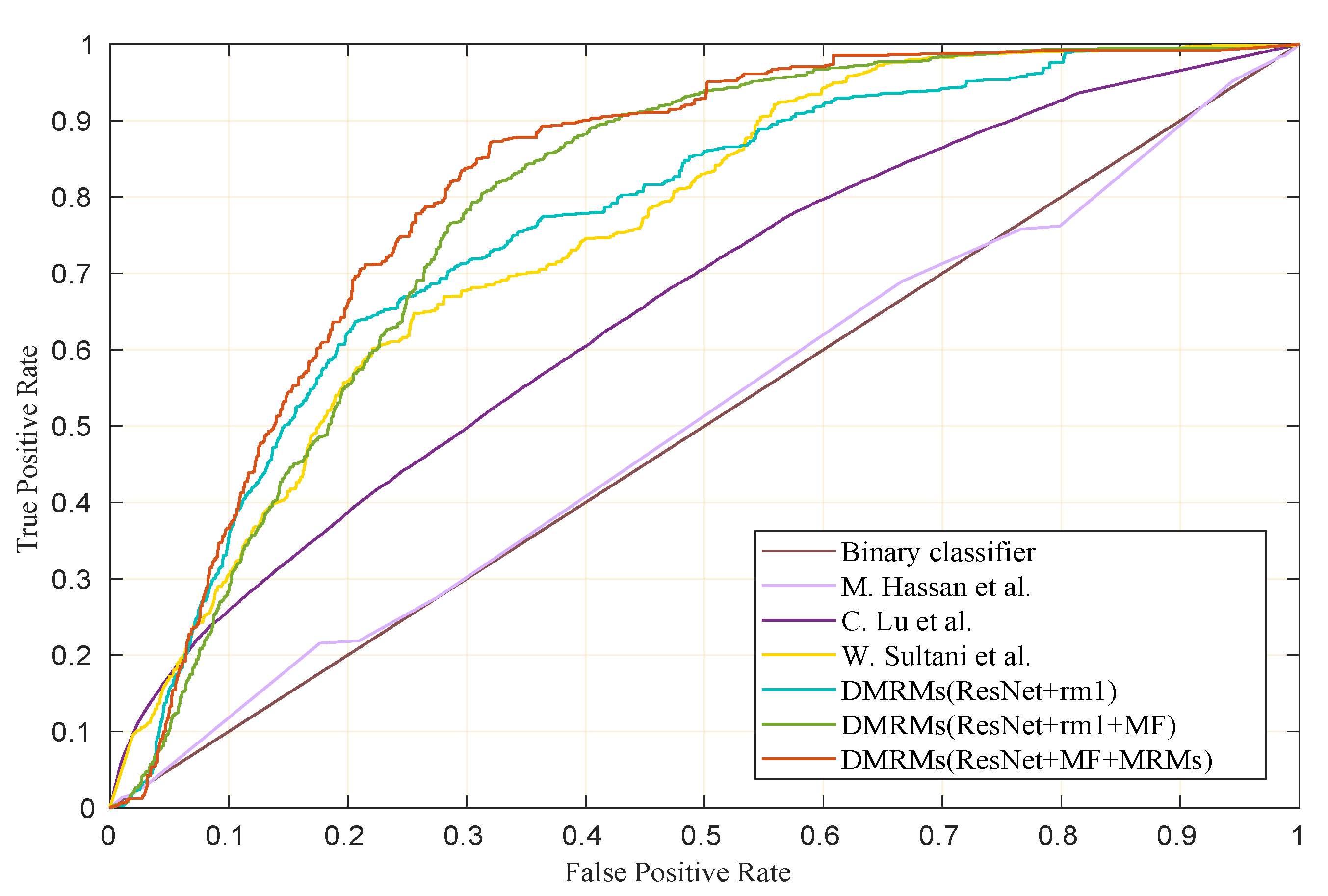

4.4.1. Comparison among State-of-the-Art Methods

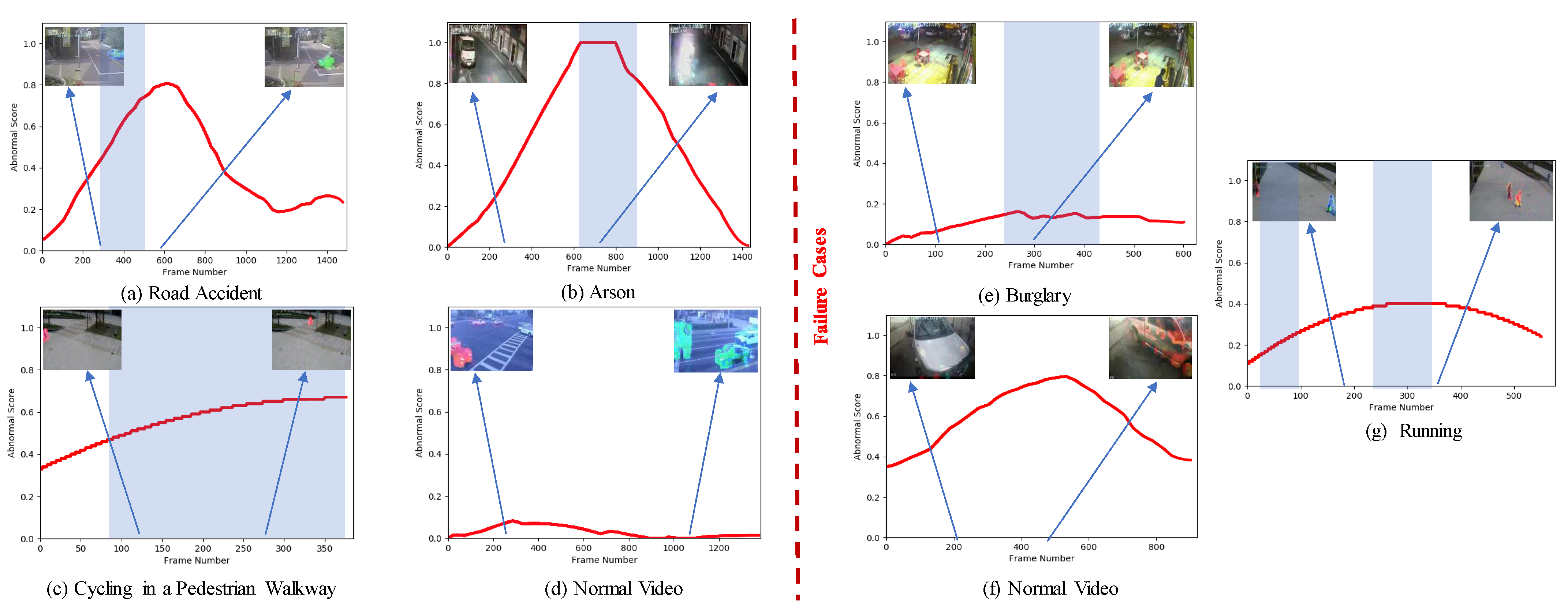

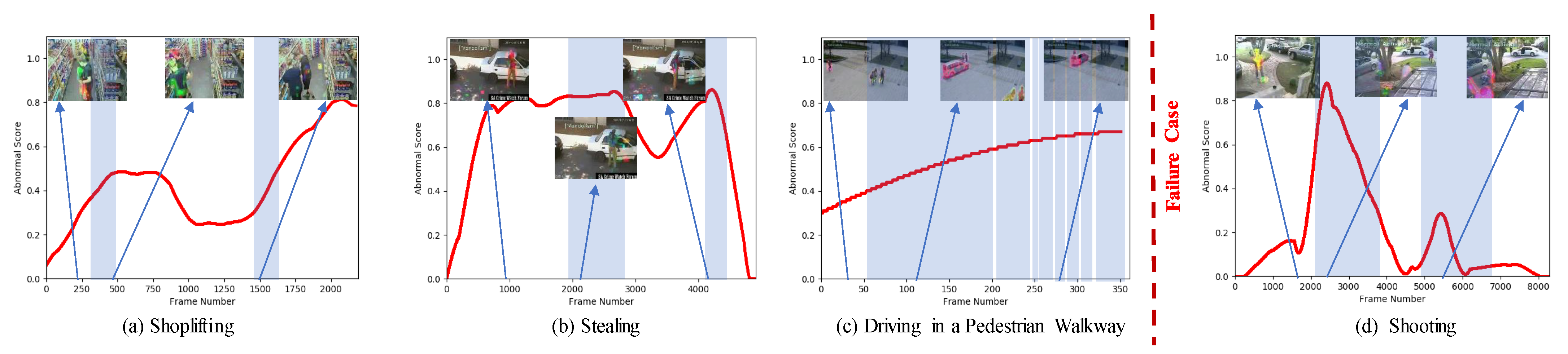

4.4.2. Qualitative Results and Demonstration of Context-Dependency Learning of DMRMs

4.4.3. Ablation Study

4.4.4. Anomalous Event Recognition and Localization of Moving Objects

4.4.5. Analysis of Computational Cost of the Proposed DMRMs

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Wang, L.; Zhou, F.; Li, Z.; Zuo, W.; Tan, H. Abnormal Event Detection in Videos Using Hybrid Spatio-Temporal Autoencoder. In Proceedings of the IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; pp. 2276–2280.

2. Perera, P.; Nallapati, R.; Xiang, B. OCGAN: One-Class Novelty Detection Using GANs with Constrained Latent Representations. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–21 June 2019; pp. 2893–2901.

3. Chong, Y.S.; Tay, Y.H. Abnormal Event Detection in Videos Using Spatiotemporal Autoencoder. In Advances in Neural Networks – ISNN 2017; Springer: Cham, Switzerland, 2017; pp. 189–196.

4. Medel, J.; Savakis, A. Anomaly Detection in Video Using Predictive Convolutional Long Short-Term Memory Networks. arXiv 2016, arXiv:1612.00390.

5. Luo, W.; Liu, W.; Gao, S. A Revisit of Sparse Coding Based Anomaly Detection in Stacked RNN Framework. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 341–349.

6. Zhao, B.; Fei-Fei, L.; Xing, E.P. Online Detection of Unusual Events in Videos via Dynamic Sparse Coding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Colorado Springs, CO, USA, 20–25 June 2011.

7. Mahadevan, V.; Li, W.X.; Bhalodia, V.; Vasconcelos, N. Anomaly Detection in Crowded Scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), San Francisco, CA, USA, 13–18 June 2010; pp. 1975–1981.

8. Lu, C.; Shi, J.; Jia, J. Abnormal Event Detection at 150 FPS in MATLAB. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Sydney, Australia, 1–8 December 2013; pp. 2720–2727.

9. Liu, W.; Luo, W.; Lian, D.; Gao, S. Future Frame Prediction for Anomaly Detection – A New Baseline. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 6536–6545.

10. Xu, K.; Sun, T.; Jiang, X. Video Anomaly Detection and Localization Based on an Adaptive Intra-Frame Classification Network. IEEE Trans. Multimed. 2020, 22, 394–406.

11. Morais, R.; Le, V.; Tran, T.; Saha, B.; Mansour, M.; Venkatesh, S. Learning Regularity in Skeleton Trajectories for Anomaly Detection in Videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 11988–11996.

12. Xu, D.; Yan, Y.; Ricci, E.; Sebe, N. Detecting Anomalous Events in Videos by Learning Deep Representations of Appearance and Motion. Comput. Vis. Image Underst. 2017, 156, 117–127.

13. Hasan, M.; Choi, J.; Neumann, J.; Roy-Chowdhury, A.K.; Davis, L.S. Learning Temporal Regularity in Video Sequences. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 733–742.

14. Sultani, W.; Chen, C.; Shah, M. Real-World Anomaly Detection in Surveillance Videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 6479–6488.

15. Dubey, S.; Boragule, A.; Jeon, M. 3D ResNet with Ranking Loss Function for Abnormal Activity Detection in Videos. In Proceedings of the International Conference on Control, Automation and Information Sciences (ICCAIS), Chengdu, China, 23–26 October 2019.

16. Hinami, R.; Mei, T.; Satoh, S. Joint Detection and Recounting of Abnormal Events by Learning Deep Generic Knowledge. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 3639–3647.

17. Luo, W.; Liu, W.; Gao, S. Remembering History with Convolutional LSTM for Anomaly Detection. In Proceedings of the IEEE International Conference on Multimedia and Expo (ICME), Hong Kong, China, 10–14 July 2017; pp. 439–444.

18. Zhong, J.X.; Li, N.; Kong, W.; Liu, S.; Li, T.H.; Li, G. Graph Convolutional Label Noise Cleaner: Train a Plug-and-Play Action Classifier for Anomaly Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019.

19. Babenko, B. Multiple Instance Learning: Algorithms and Applications; Tech. Rep.; University of California: San Diego, CA, USA, 2008.

20. Andrews, S.; Tsochantaridis, I.; Hofmann, T. Support Vector Machines for Multiple-Instance Learning. In Advances in Neural Information Processing Systems 15 (NIPS); MIT Press: Cambridge, MA, USA, 2003; pp. 577–584.

21. Hara, K.; Kataoka, H.; Satoh, Y. Learning Spatio-Temporal Features with 3D Residual Networks for Action Recognition. In Proceedings of the IEEE International Conference on Computer Vision Workshops (ICCVW), Venice, Italy, 22–29 October 2017; pp. 3154–3160.

22. Hara, K.; Kataoka, H.; Satoh, Y. Can Spatiotemporal 3D CNNs Retrace the History of 2D CNNs and ImageNet? In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 6546–6555.

23. Wang, L.; Xu, Y.; Cheng, J.; Xia, H.; Yin, J.; Wu, J. Human Action Recognition by Learning Spatio-Temporal Features With Deep Neural Networks. IEEE Access 2018, 6, 17913–17922.

24. Bird, N.; Atev, S.; Caramelli, N.; Martin, R.; Masoud, O.; Papanikolopoulos, N. Real Time, Online Detection of Abandoned Objects in Public Areas. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Orlando, FL, USA, 15–19 May 2006; pp. 3775–3780.

25. Kamijo, S.; Matsushita, Y.; Ikeuchi, K.; Sakauchi, M. Traffic Monitoring and Accident Detection at Intersections. In Proceedings of the 1999 IEEE/IEEJ/JSAI International Conference on Intelligent Transportation Systems, Tokyo, Japan, 5–8 October 1999; pp. 703–708.

26. Wei, J.; Zhao, J.; Zhao, Y.; Zhao, Z. Unsupervised Anomaly Detection for Traffic Surveillance Based on Background Modeling. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 129–1297.

27. Bajestani, M.F.; Abadi, S.S.H.R.; Fard, S.M.D.; Khodadadeh, R. AAD: Adaptive Anomaly Detection through Traffic Surveillance Videos. arXiv 2018, arXiv:1808.10044.

28. Mohammadi, S.; Perina, A.; Kiani, H.; Murino, V. Angry Crowds: Detecting Violent Events in Videos. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 8–16 October 2016.

29. Cong, Y.; Yuan, J.; Liu, J. Sparse Reconstruction Cost for Abnormal Event Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Colorado Springs, CO, USA, 20–25 June 2011; pp. 3449–3456.

30. Wu, S.; Moore, B.E.; Shah, M. Chaotic Invariants of Lagrangian Particle Trajectories for Anomaly Detection in Crowded Scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), San Francisco, CA, USA, 13–18 June 2010; pp. 2054–2060.

31. Tung, F.; Zelek, J.S.; Clausi, D.A. Goal-Based Trajectory Analysis for Unusual Behaviour Detection in Intelligent Surveillance. Image Vis. Comput. 2011, 29, 230–240.

32. Dalal, N.; Triggs, B.; Schmid, C. Human Detection Using Oriented Histograms of Flow and Appearance. In Proceedings of the European Conference on Computer Vision (ECCV), Graz, Austria, 7–13 May 2006; pp. 428–441.

33. Dalal, N.; Triggs, B. Histograms of Oriented Gradients for Human Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), San Diego, CA, USA, 20–26 June 2005; Volume 1, pp. 886–893.

34. Zhang, D.; Gatica-Perez, D.; Bengio, S.; McCowan, I. Semi-Supervised Adapted HMMs for Unusual Event Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), San Diego, CA, USA, 20–26 June 2005; pp. 611–618.

35. Kim, J.; Grauman, K. Observe Locally, Infer Globally: A Space-Time MRF for Detecting Abnormal Activities with Incremental Updates. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Miami, FL, USA, 20–25 June 2009; pp. 2921–2928.

36. Yu, J.; Kim, D.Y.; Yoon, Y.; Jeon, M. Action Matching Network: Open-Set Action Recognition Using Spatio-Temporal Representation Matching. Vis. Comput. 2019, 36, 1457–1471.

37. Yoon, Y.; Yu, J.; Jeon, M. Spatio-Temporal Representation Matching-Based Open-Set Action Recognition by Joint Learning of Motion and Appearance. IEEE Access 2019, 7, 165997–166010.

38. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. (TPAMI) 2016, 39, 1137–1149.

39. Sun, S.; Akhtar, N.; Song, H.; Mian, A.; Shah, M. Deep Affinity Network for Multiple Object Tracking. IEEE Trans. Pattern Anal. Mach. Intell. (TPAMI) 2018, 43, 104–119.

40. Ciaparrone, G.; Sánchez, F.L.; Tabik, S.; Troiano, L.; Tagliaferri, R.; Herrera, F. Deep Learning in Video Multi-Object Tracking: A Survey. Neurocomputing 2020, 381, 61–88.

41. Wu, D.; Zheng, S.J.; Zhang, X.P.; Yuan, C.A.; Cheng, F.; Zhao, Y.; Lin, Y.J.; Zhao, Z.Q.; Jiang, Y.L.; Huang, D.S. Deep Learning-Based Methods for Person Re-Identification: A Comprehensive Review. Neurocomputing 2019, 337, 354–371.

42. Chen, Y.; Zhu, X.; Gong, S. Person Re-Identification by Deep Learning Multi-Scale Representations. In Proceedings of the IEEE International Conference on Computer Vision Workshops (ICCVW), Venice, Italy, 22–29 October 2017; pp. 2590–2600.

43. Yu, J.; Yow, K.C.; Jeon, M. Joint Representation Learning of Appearance and Motion for Abnormal Event Detection. Mach. Vis. Appl. 2018, 29, 1157–1170.

44. Sohrab, F.; Raitoharju, J.; Gabbouj, M.; Iosifidis, A. Subspace Support Vector Data Description. In Proceedings of the IEEE International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018.

45. Abati, D.; Porrello, A.; Calderara, S.; Cucchiara, R. Latent Space Autoregression for Novelty Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019.

46. Luo, W.; Liu, W.; Lian, D.; Tang, J.; Duan, L.; Peng, X.; Gao, S. Video Anomaly Detection With Sparse Coding Inspired Deep Neural Networks. IEEE Trans. Pattern Anal. Mach. Intell. (TPAMI) 2019.

47. Tran, D.; Bourdev, L.; Fergus, R.; Torresani, L.; Paluri, M. Learning Spatiotemporal Features with 3D Convolutional Networks. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015.

48. Lan, Z.Z.; Bao, L.; Yu, S.I.; Liu, W.; Hauptmann, A.G. Double Fusion for Multimedia Event Detection. In Advances in Multimedia Modeling; Springer: Berlin/Heidelberg, Germany, 2012.

49. Farnebäck, G. Two-Frame Motion Estimation Based on Polynomial Expansion. In Image Analysis; Springer: Berlin/Heidelberg, Germany, 2003.

50. Lucas, B.D.; Kanade, T. An Iterative Image Registration Technique with an Application to Stereo Vision (DARPA). In Proceedings of the 1981 DARPA Image Understanding Workshop, Washington, DC, USA, 23 April 1981; pp. 121–130.

51. Kay, W.; Carreira, J.; Simonyan, K.; Zhang, B.; Hillier, C.; Vijayanarasimhan, S.; Viola, F.; Green, T.; Back, T.; Natsev, P.; et al. The Kinetics Human Action Video Dataset. arXiv 2017, arXiv:1705.06950.

52. Nazaré, T.S.; de Mello, R.F.; Ponti, M.A. Are Pre-Trained CNNs Good Feature Extractors for Anomaly Detection in Surveillance Videos? arXiv 2018, arXiv:1811.08495.

53. Ding, C.; Tao, D. Robust Face Recognition via Multimodal Deep Face Representation. IEEE Trans. Multimed. 2015, 17, 2049–2058.

54. Li, W.X.; Mahadevan, V.; Vasconcelos, N. Anomaly Detection and Localization in Crowded Scenes. IEEE Trans. Pattern Anal. Mach. Intell. (TPAMI) 2013, 36, 18–32.

55. Adam, A.; Rivlin, E.; Shimshoni, I.; Reinitz, D. Robust Real-Time Unusual Event Detection Using Multiple Fixed-Location Monitors. IEEE Trans. Pattern Anal. Mach. Intell. (TPAMI) 2008, 30, 555–560.

56. Wang, J.; Cherian, A. GODS: Generalized One-Class Discriminative Subspaces for Anomaly Detection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Seoul, Korea, 27–28 October 2019.

57. Hou, R.; Chen, C.; Shah, M. Tube Convolutional Neural Network (T-CNN) for Action Detection in Videos. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 5823–5832.

| Hyper-parameter setting | UCF-Crime (AUC, %) | ShanghaiTech (AUC, %) |
|---|---|---|
| 1 | 81.21 | 65.47 |
| 2 | 77.23 | - |
| 3 | 78.53 | 68.50 |
| 4 | - | 65.83 |
| 5 | 78.44 | - |
| 6 | - | 67.32 |
| 7 | 77.80 | - |
| 8 | - | 65.70 |
| 9 | 79.51 | - |
| 10 | - | 65.83 |
| 11 | 81.40 | - |
| 12 | - | 67.72 |
| 13 | 78.56 | - |
| 14 | - | 67.86 |
| 15 | 81.62 | - |
| 16 | - | 67.75 |
| 17 | 78.12 | - |
| 18 | - | 67.68 |
| 19 | 78.52 | - |
| 20 | - | 67.84 |
| 21 | - | 67.71 |
| 22 | - | 67.82 |
| 23 | - | 67.85 |
| 24 | 78.98 | - |
| 25 | 78.62 | - |
| 26 | 80.64 | - |
| 27 | 81.48 | - |
| 28 (ours) | 81.91 | - |
| | - | 68.50 |

| Algorithm | UCF-Crime (AUC, %) | ShanghaiTech (AUC, %) |
|---|---|---|
| SVM Binary Classifier | 50.00 | - |
| M. Hasan et al. [13] | 50.60 | 60.90 * |
| F. Sohrab et al. [44] | 58.50 | - |
| C. Lu et al. [8] | 65.51 | - |
| W. Luo et al. [5] | - | 60.73 |
| GODS [56] | 70.46 | - |
| Autoreg-ConvAE (LLK) [45] | - | 70.30 |
| W. Liu et al. [9] | - | 73.04 |
| Autoreg-ConvAE (NS) [45] | - | 73.85 |
| MPED-RNN [11] | - | 76.24 |
| W. Sultani et al. [14] | 75.41 | - |
| Ours (DMRMs) | 81.91 | 68.50 |
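Assuming, as is usual for these two benchmarks, that the AUC values above are frame-level areas under the ROC curve, the metric can be computed without any library via the Mann–Whitney formulation: the AUC equals the probability that a randomly chosen anomalous frame receives a higher score than a randomly chosen normal frame, with ties counted as one half. The function name `roc_auc` is illustrative.

```python
from typing import Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Frame-level ROC AUC computed as a normalized Mann-Whitney U statistic.

    labels: 1 for anomalous frames, 0 for normal frames.
    scores: predicted anomaly scores; higher means more anomalous.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one anomalous and one normal frame")

    # Count correctly ordered (anomalous, normal) pairs; a tie counts as 0.5.
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Four frames: two normal (label 0) and two anomalous (label 1).
print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # prints 0.75
```

The pairwise loop is quadratic in the number of frames, which is fine for illustration; for full test sets, `sklearn.metrics.roc_auc_score(y_true, y_score)` returns the same value far more efficiently. Multiplying by 100 gives the percentages reported in the table.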

| Number of temporal segments | 8 | 16 | 32 | 48 | 64 |
|---|---|---|---|---|---|
| UCF-Crime (AUC, %) | - | 81.91 | 81.17 | 80.71 | 80.98 |
| ShanghaiTech (AUC, %) | 68.20 | 68.50 | 65.60 | 66.20 | 66.00 |
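The table varies how many temporal segments each video is divided into. One common scheme, used by [14], splits a video's sequence of clip-level features into a fixed number of non-overlapping segments and averages the features within each segment. The sketch below assumes that scheme, which may differ in detail from this paper's pre-processing; the function name and the even-split boundary rule are illustrative assumptions.

```python
from typing import List, Sequence


def segment_features(
    clip_features: Sequence[Sequence[float]], num_segments: int = 16
) -> List[List[float]]:
    """Average consecutive clip features into `num_segments` temporal segments."""
    n = len(clip_features)
    if n == 0 or num_segments <= 0:
        raise ValueError("need at least one clip and a positive segment count")
    dim = len(clip_features[0])

    segments: List[List[float]] = []
    for s in range(num_segments):
        # Integer boundaries spread the n clips as evenly as possible; when a
        # video has fewer clips than segments, a clip is reused so that no
        # segment is empty.
        start = s * n // num_segments
        end = max((s + 1) * n // num_segments, start + 1)
        chunk = clip_features[start:end]
        segments.append([sum(clip[d] for clip in chunk) / len(chunk) for d in range(dim)])
    return segments


# 32 one-dimensional clip features pooled into 16 segments of 2 clips each.
clips = [[float(i)] for i in range(32)]
print(segment_features(clips, 16)[:3])  # prints [[0.5], [2.5], [4.5]]
```

Under this scheme, fewer segments average more clips together, which can dilute a short anomaly, while many segments on short videos mostly repeat clips; either effect is one plausible reading of the trend in the table, not a conclusion stated by the authors.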

| Ranking Measures | UCF-Crime (AUC, %) | ShanghaiTech (AUC, %) |
|---|---|---|
| 3D ResNet-34 + rm1 | 76.20 | 61.20 |
| 3D ResNet-34 + rm1 + MF | 78.68 | 65.30 |
| 3D ResNet-34 + rm1 + MF + rm2 | 78.60 | 64.90 |
| 3D ResNet-34 + rm1 + MF + rm3 | 80.08 | 66.10 |
| 3D ResNet-34 + rm1 + MF + rm4 | 80.01 | 65.60 |
| 3D ResNet-34 + rm1 + MF + rm2 + rm3 | 80.92 | 67.70 |
| 3D ResNet-34 + rm1 + MF + rm2 + rm4 | 79.80 | 65.40 |
| 3D ResNet-34 + rm1 + MF + rm2 + rm3 + rm4 | 80.10 | 67.00 |
| 3D ResNet-34 + MF + MRM | 81.12 | 67.91 |
| DMRMs 3D ResNet-34 + MF + MRM + + | 81.91 | 68.50 |

| Algorithm | M. Hasan et al. [13] | C. Lu et al. [8] | W. Sultani et al. [14] | Ours (DMRMs) |
|---|---|---|---|---|
| False alarm rate (FAR) | 27.20 | 3.10 | 1.90 | 0.85 |
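A false alarm rate of this kind is typically measured on normal (anomaly-free) test videos as the share of frames whose predicted anomaly score exceeds a fixed threshold, so lower is better. The sketch below assumes a threshold of 0.5, a strict comparison, and a percentage output; these are assumptions, not settings stated in this section, and the function name is illustrative.

```python
from typing import Sequence


def false_alarm_rate(normal_scores: Sequence[float], threshold: float = 0.5) -> float:
    """Percentage of frames from normal videos scored strictly above `threshold`."""
    if not normal_scores:
        raise ValueError("need at least one normal-frame score")
    # Every normal frame flagged as anomalous is a false alarm.
    alarms = sum(1 for s in normal_scores if s > threshold)
    return 100.0 * alarms / len(normal_scores)


print(false_alarm_rate([0.1, 0.6, 0.4, 0.7]))  # prints 50.0
```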

| Algorithm | C3D [47] | T-CNN [57] | 3D ResNet-34 [21] |
|---|---|---|---|
| Accuracy (%) | 23.0 | 28.4 | 27.2 |

| Computational process | UCF-Crime | ShanghaiTech |
|---|---|---|
| Extraction of motion flow maps | 0.016 | 0.03 |
| 16 temporal segments and pre-processing | 0.0012 | 0.0016 |
| 32 temporal segments and pre-processing | - | 0.0019 |
| Feature extraction from visual data | 0.011 | 0.012 |
| Feature extraction from motion maps | 0.011 | 0.012 |
| Detection | 0.0004 | 0.0005 |
| Total | 0.0396 | 0.0561 |
| FPS | ≈ 26 | ≈ 18 |
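The Total row can be checked by summing the per-stage times. Assuming the entries are per-frame processing times in seconds (consistent with the ShanghaiTech FPS row, since 1/0.0561 ≈ 17.8), the arithmetic below indicates that the ShanghaiTech total corresponds to the 16-segment pre-processing row; substituting the 32-segment row would give 0.0564 instead. The dictionary keys and the helper name are illustrative.

```python
from typing import Dict

# Per-stage times copied from the table above (assumed unit: seconds per frame).
UCF_CRIME: Dict[str, float] = {
    "motion_flow_maps": 0.016,
    "segments_and_preprocess": 0.0012,  # 16 temporal segments
    "visual_features": 0.011,
    "motion_features": 0.011,
    "detection": 0.0004,
}
SHANGHAITECH_16: Dict[str, float] = {
    "motion_flow_maps": 0.03,
    "segments_and_preprocess": 0.0016,  # 16 temporal segments
    "visual_features": 0.012,
    "motion_features": 0.012,
    "detection": 0.0005,
}
# Same pipeline, but with the 32-segment pre-processing time swapped in.
SHANGHAITECH_32: Dict[str, float] = {**SHANGHAITECH_16, "segments_and_preprocess": 0.0019}


def total_time(stages: Dict[str, float]) -> float:
    """Sum of the per-stage processing times."""
    return sum(stages.values())


for name, stages in [
    ("UCF-Crime", UCF_CRIME),
    ("ShanghaiTech, 16 segments", SHANGHAITECH_16),
    ("ShanghaiTech, 32 segments", SHANGHAITECH_32),
]:
    print(f"{name}: {total_time(stages):.4f}")
# prints:
# UCF-Crime: 0.0396
# ShanghaiTech, 16 segments: 0.0561
# ShanghaiTech, 32 segments: 0.0564
```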


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Dubey, S.; Boragule, A.; Gwak, J.; Jeon, M. Anomalous Event Recognition in Videos Based on Joint Learning of Motion and Appearance with Multiple Ranking Measures. Appl. Sci. 2021, 11, 1344. https://doi.org/10.3390/app11031344


Chicago/Turabian Style: Dubey, Shikha, Abhijeet Boragule, Jeonghwan Gwak, and Moongu Jeon. 2021. "Anomalous Event Recognition in Videos Based on Joint Learning of Motion and Appearance with Multiple Ranking Measures." Applied Sciences 11, no. 3: 1344. https://doi.org/10.3390/app11031344
