Pose Measurement for Unmanned Aerial Vehicle Based on Rigid Skeleton

Abstract

1. Introduction

- A framework based on the structural characteristics of the aircraft's rigid skeleton is proposed to solve for the position and attitude of short-range UAVs without prior knowledge of the aircraft model or size.

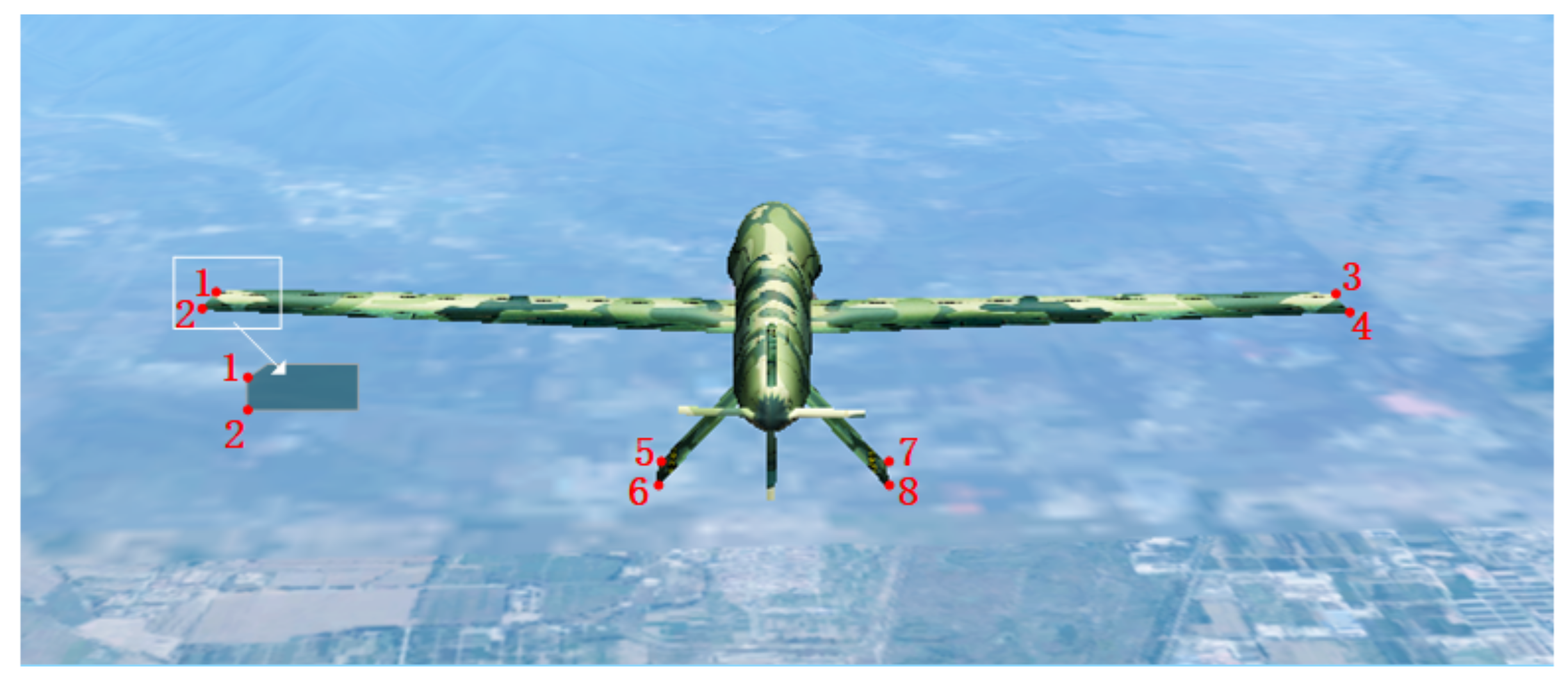

- A key point screening method that combines 2D and 3D information is proposed for aircraft pose measurement. The method is based on a stereo vision measurement system: depth information is combined with edge contour and corner point information to effectively reduce mismatches of the aircraft's key feature points.

- The proposed method can estimate the attitude of UAVs online without identification markers or other auxiliary equipment, which improves the reliability and robustness of the attitude calculation. The method can therefore be applied in low-consumption airborne environments.
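As an illustration of combining 2D and 3D cues, the following Python sketch keeps a candidate key point only if it lies close to an edge pixel (2D test) and its stereo depth is close to the median depth of the remaining candidates (3D test). The input format, the function name `screen_keypoints`, the square search window, and both thresholds are hypothetical choices for this example, not the screening rule used in the paper.

```python
from statistics import median


def screen_keypoints(candidates, edge_pixels, max_edge_dist=2, depth_tol=0.5):
    """Filter candidate key points with a 2D edge test and a 3D depth test.

    candidates:  list of (u, v, depth_m) tuples; depth comes from stereo matching
                 (hypothetical input format for this sketch)
    edge_pixels: set of integer (u, v) pixel coordinates from an edge map
    """
    if not candidates:
        return []

    def near_edge(u, v):
        # 2D test: some edge pixel lies inside a small square window around (u, v)
        r = range(-max_edge_dist, max_edge_dist + 1)
        return any((u + du, v + dv) in edge_pixels for du in r for dv in r)

    on_edge = [c for c in candidates if near_edge(c[0], c[1])]
    if not on_edge:
        return []

    # 3D test: a compact target at short range should have similar depths,
    # so points far from the median depth are treated as likely mismatches
    ref_depth = median(c[2] for c in on_edge)
    return [c for c in on_edge if abs(c[2] - ref_depth) <= depth_tol]
```

The median is used as the reference depth because it is insensitive to the few background mismatches such a filter is meant to remove.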

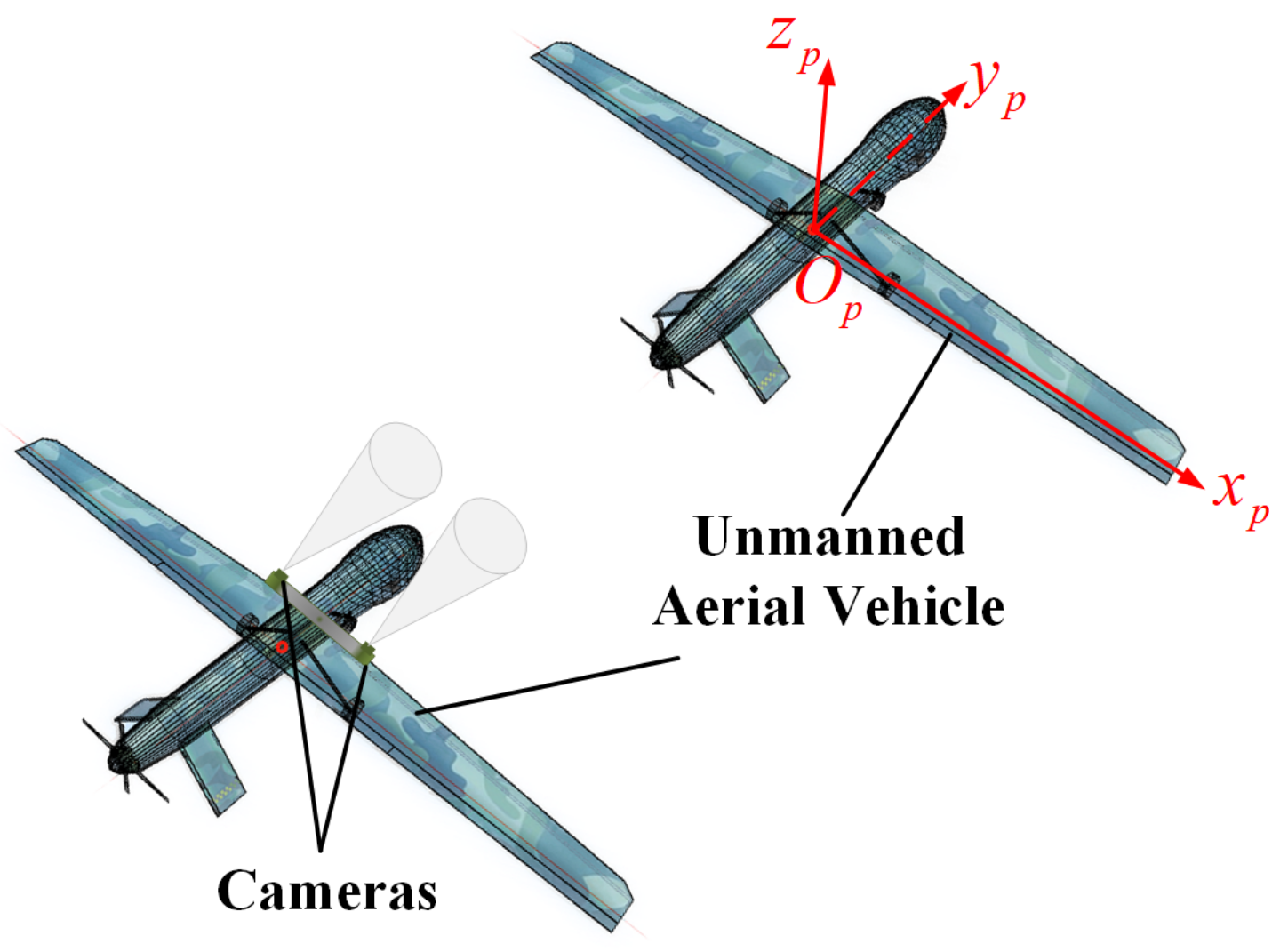

2. System Framework

2.1. Definition of Aircraft Attitude Angle

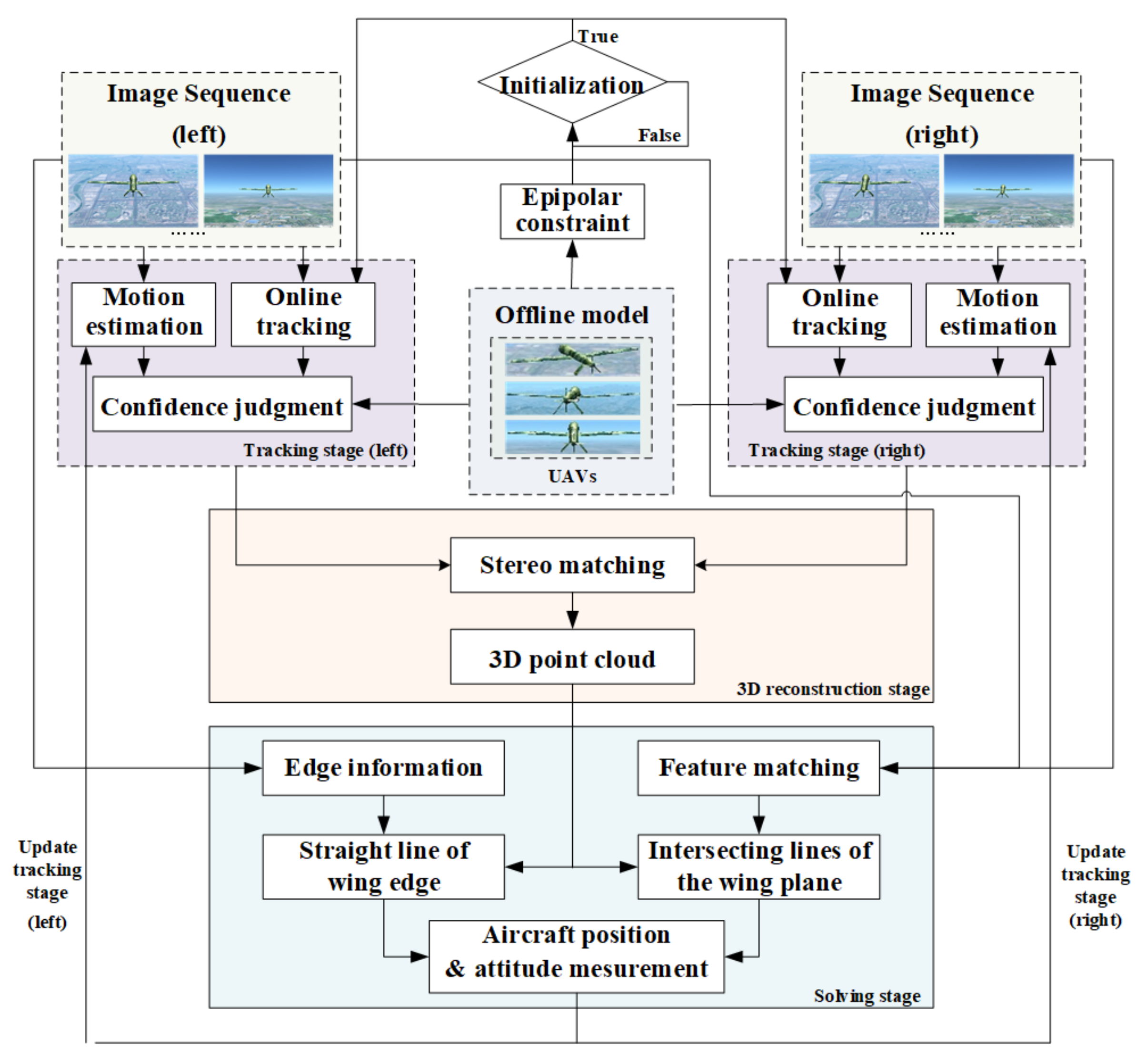
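The exact attitude-angle definition is given in the figure above. As a hedged sketch, assuming the common aerospace Z-Y-X convention, in which the body-to-reference rotation is R = R_z(yaw) R_y(pitch) R_x(roll), yaw, pitch, and roll can be recovered from a rotation matrix as follows. The function names are hypothetical, and the paper's axis conventions may differ from this assumption.

```python
import math


def matrix_from_euler_zyx(yaw, pitch, roll):
    """Build R = Rz(yaw) @ Ry(pitch) @ Rx(roll) from angles in degrees."""
    y, p, r = map(math.radians, (yaw, pitch, roll))
    cy, sy = math.cos(y), math.sin(y)
    cp, sp = math.cos(p), math.sin(p)
    cr, sr = math.cos(r), math.sin(r)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def euler_zyx_from_matrix(R):
    """Return (yaw, pitch, roll) in degrees from a Z-Y-X rotation matrix."""
    # Clamp so rounding noise cannot push the argument of asin outside [-1, 1]
    pitch = math.asin(max(-1.0, min(1.0, -R[2][0])))
    yaw = math.atan2(R[1][0], R[0][0])
    roll = math.atan2(R[2][1], R[2][2])
    return tuple(math.degrees(a) for a in (yaw, pitch, roll))
```

The extraction is singular at pitch = ±90°, where yaw and roll can no longer be separated.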

2.2. Proposed Architecture

3. Research Methodology

3.1. Initialization Stage

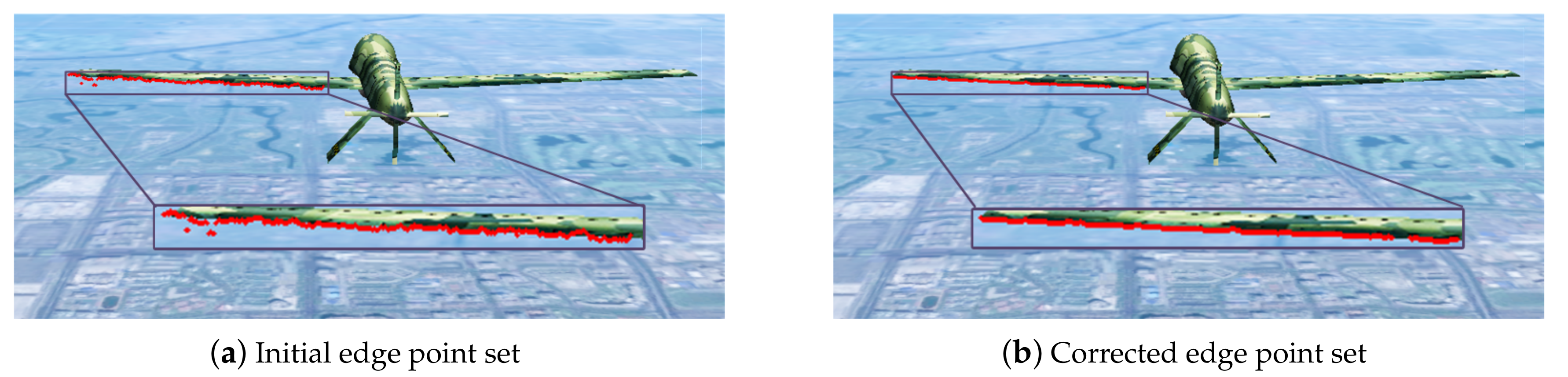

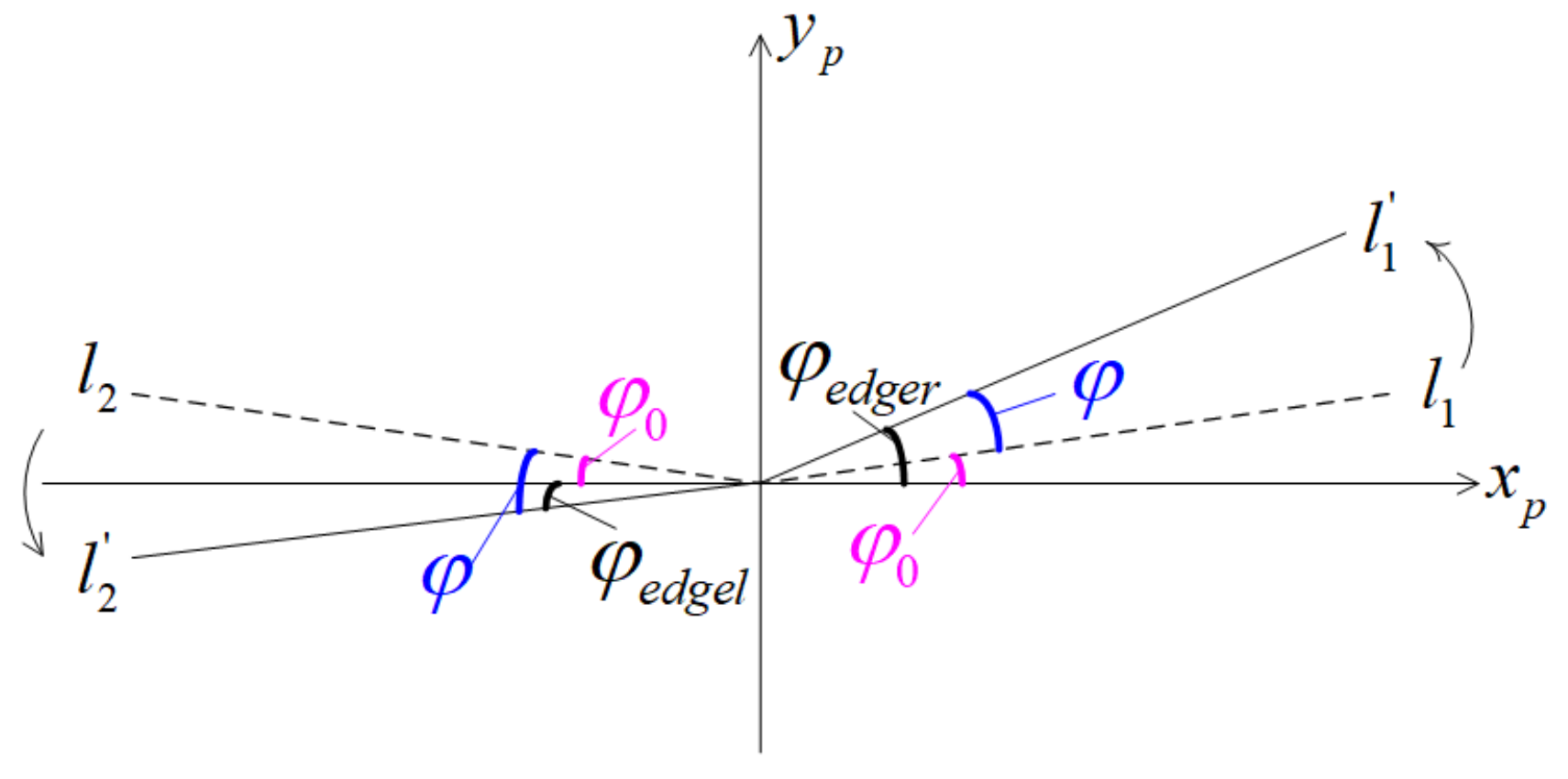

3.2. Tracking Stage

3.3. 3D Reconstruction Stage

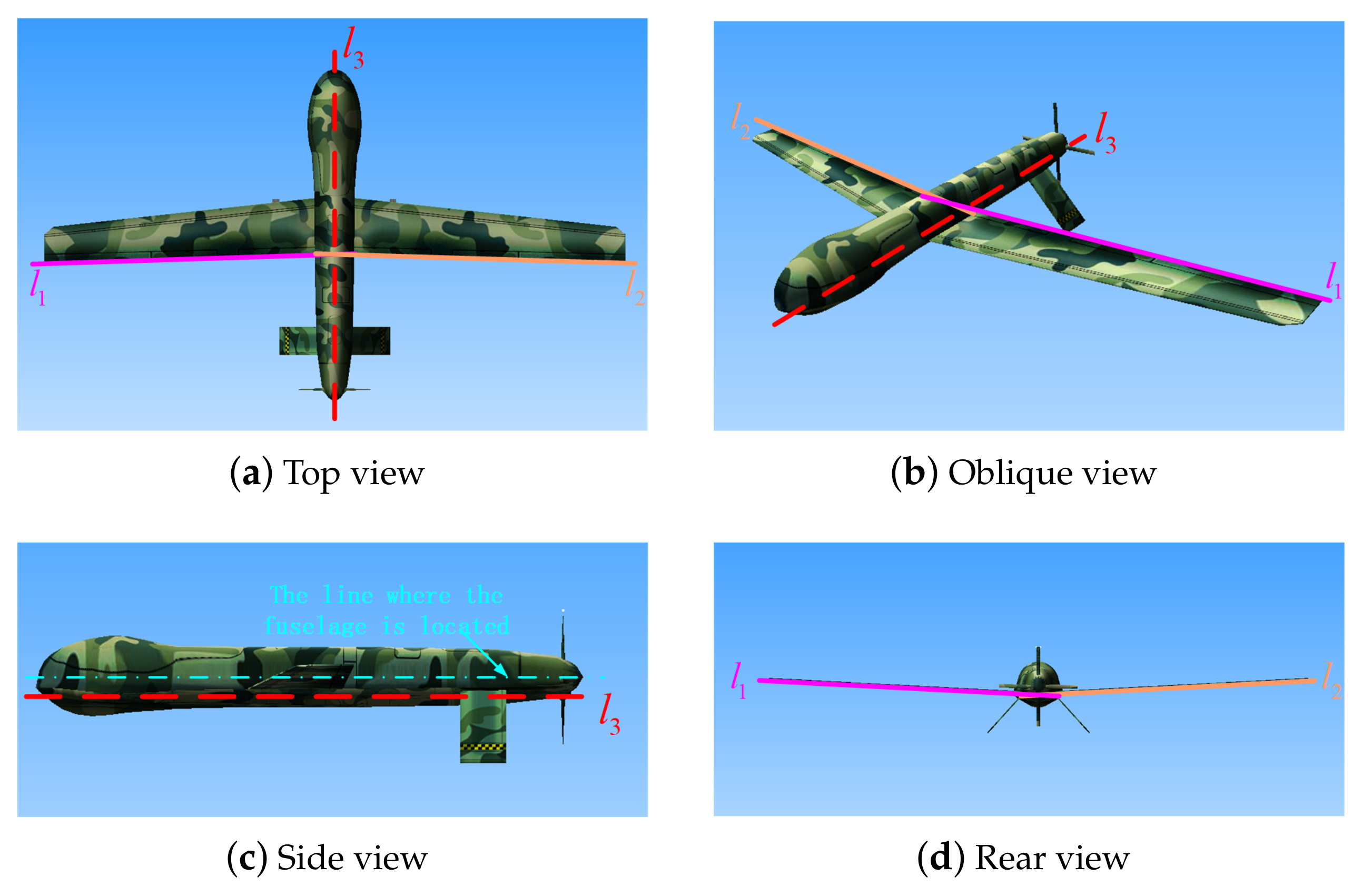
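Because the measurement system is stereo-based, reconstruction ultimately converts matched image points into 3D coordinates. A minimal sketch for a rectified pinhole stereo pair, assuming both images share the focal length f (in pixels) and the principal point (c_x, c_y), and the cameras are separated by a baseline B: the depth follows Z = fB/d from the disparity d = u_L − u_R. The function name and parameter names are illustrative, not the paper's implementation.

```python
def triangulate_rectified(u_left, v, u_right, f, cx, cy, baseline):
    """Return (X, Y, Z) in the left-camera frame for one rectified stereo match.

    f: focal length in pixels; (cx, cy): principal point in pixels;
    baseline: distance between the camera centres (the unit of X, Y, Z).
    """
    d = u_left - u_right  # disparity in pixels
    if d <= 0:
        raise ValueError("disparity must be positive for a point in front of the rig")
    Z = f * baseline / d          # depth from similar triangles
    X = (u_left - cx) * Z / f     # back-project the left-image pixel
    Y = (v - cy) * Z / f
    return X, Y, Z
```

Depth resolution degrades as disparity shrinks, which is one reason stereo measurement suits short-range targets.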

3.4. Solving Stage

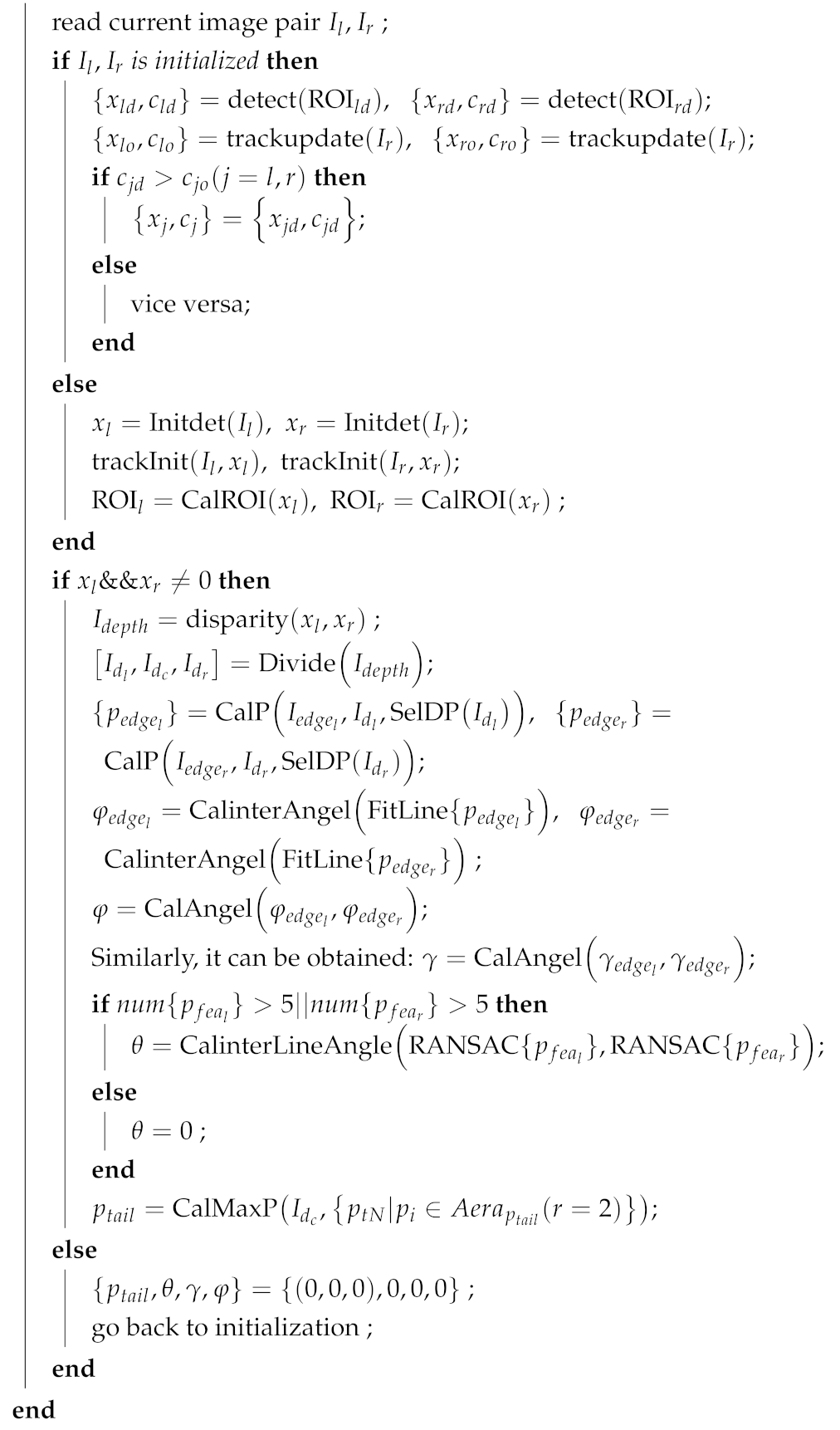
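One way to see why a rigid skeleton can suffice without an aircraft model or its size is that attitude depends only on axis directions. The sketch below assumes four hypothetical skeleton key points (nose, tail, left and right wingtips) already expressed in a local north-east-down (NED) frame, builds the body axes from the fuselage and wing-span directions, and reads off Z-Y-X Euler angles. The key-point choice, the frame, and taking the centroid as the position are all assumptions for illustration, not the paper's solving procedure.

```python
import math


def _sub(a, b):
    return [a[i] - b[i] for i in range(3)]


def _dot(a, b):
    return sum(a[i] * b[i] for i in range(3))


def _cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def _unit(a):
    n = math.sqrt(_dot(a, a))
    if n == 0:
        raise ValueError("degenerate skeleton: coincident key points")
    return [c / n for c in a]


def skeleton_pose(nose, tail, left_tip, right_tip):
    """Return (position, (yaw, pitch, roll) in degrees) from four NED key points."""
    x_b = _unit(_sub(nose, tail))        # body x-axis: along the fuselage, toward the nose
    span = _sub(right_tip, left_tip)     # wing-span direction, roughly the body y-axis
    # Gram-Schmidt step: remove the fuselage component so y_b is orthogonal to x_b
    y_b = _unit(_sub(span, [_dot(span, x_b) * c for c in x_b]))
    z_b = _cross(x_b, y_b)               # body z-axis points "down" (right-handed frame)
    # Columns of R are x_b, y_b, z_b; use the Z-Y-X extraction formulas
    yaw = math.atan2(x_b[1], x_b[0])
    pitch = math.asin(max(-1.0, min(1.0, -x_b[2])))
    roll = math.atan2(y_b[2], z_b[2])
    # Position: centroid of the key points (one possible reference point)
    pts = (nose, tail, left_tip, right_tip)
    position = [sum(p[i] for p in pts) / len(pts) for i in range(3)]
    return position, tuple(math.degrees(a) for a in (yaw, pitch, roll))
```

Because only normalized directions enter the angle computation, scaling the skeleton leaves the angles unchanged, which is consistent with not requiring the aircraft size.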

| Algorithm 1: Measuring Position and Orientation of Aircraft. |
|---|
| Input: Frame sequence |
| Output: Location, Pitch, Roll and Yaw |
| while not end of sequence do |
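The loop body of Algorithm 1 is not reproduced here. The following hypothetical Python driver only mirrors the stage structure of Section 3 (initialization, tracking, 3D reconstruction, solving) inside the `while not end of sequence` loop. Each stage is passed in as a callable, and re-running initialization after the tracker loses the target is an assumption of this sketch.

```python
from typing import Any, Callable, Iterable, Iterator, Optional


def measure_sequence(
    frames: Iterable[Any],
    initialize: Callable[[Any], Optional[Any]],
    track: Callable[[Any, Any], Optional[Any]],
    reconstruct: Callable[[Any, Any], Any],
    solve: Callable[[Any], Any],
) -> Iterator[Optional[Any]]:
    """Yield one pose estimate (or None when no target is available) per frame."""
    target = None
    for frame in frames:                     # while not end of sequence
        if target is None:
            target = initialize(frame)       # initialization stage: locate the aircraft
        else:
            target = track(frame, target)    # tracking stage: follow it frame to frame
        if target is None:                   # not found or lost: no estimate this frame
            yield None
            continue
        points = reconstruct(frame, target)  # 3D reconstruction stage
        yield solve(points)                  # solving stage: location, pitch, roll, yaw
```

Passing the stages as callables keeps the control flow independent of any particular detector, tracker, or stereo matcher.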

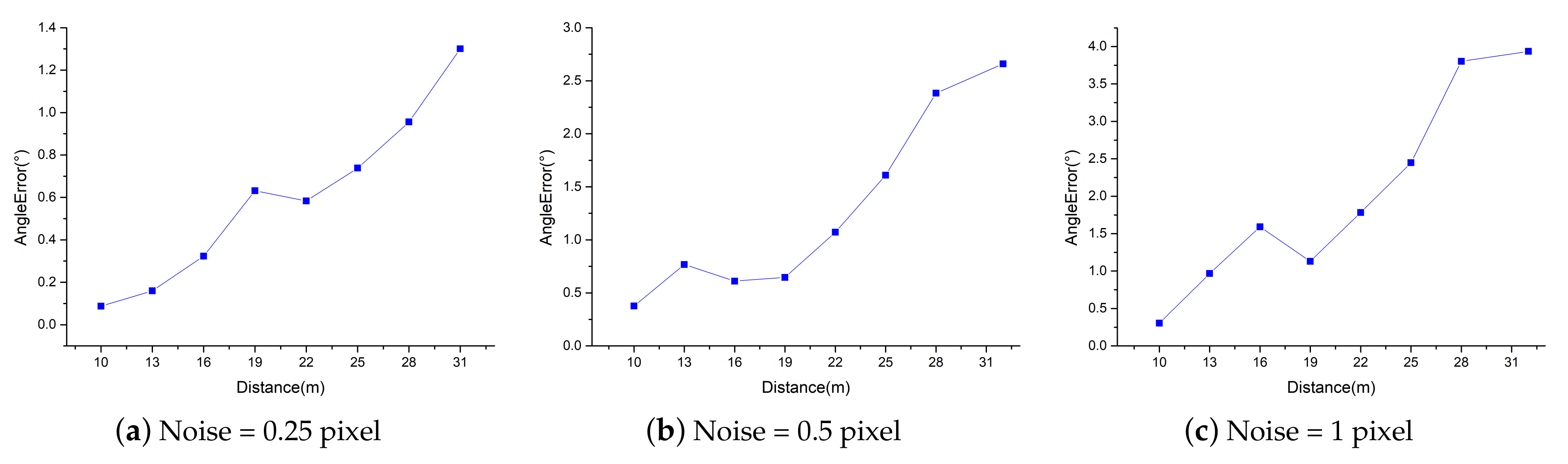

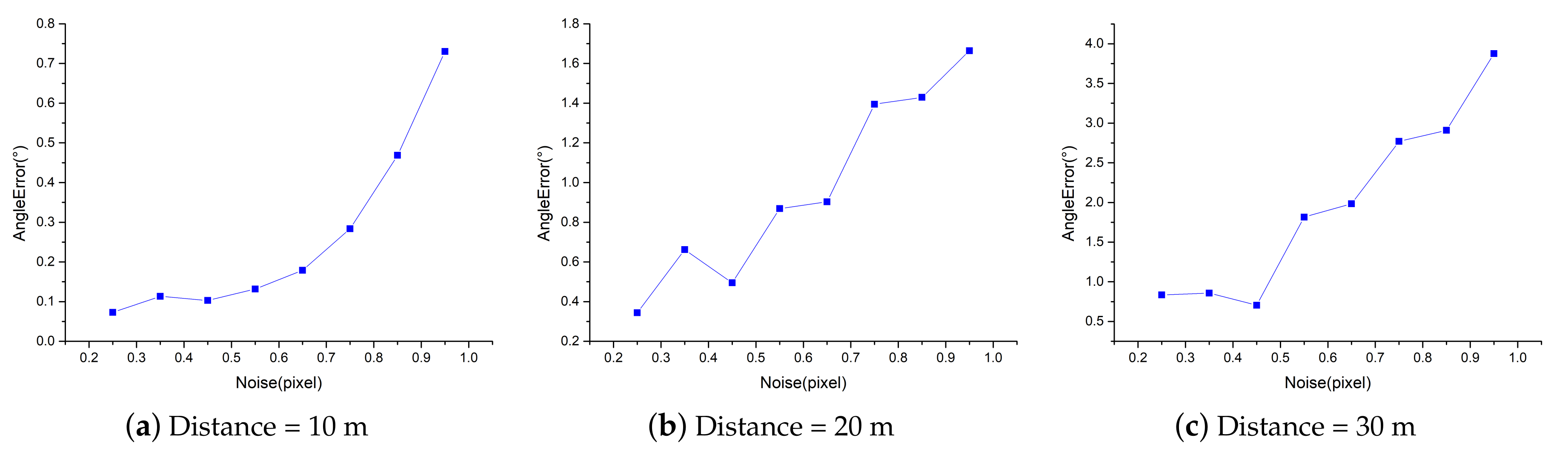

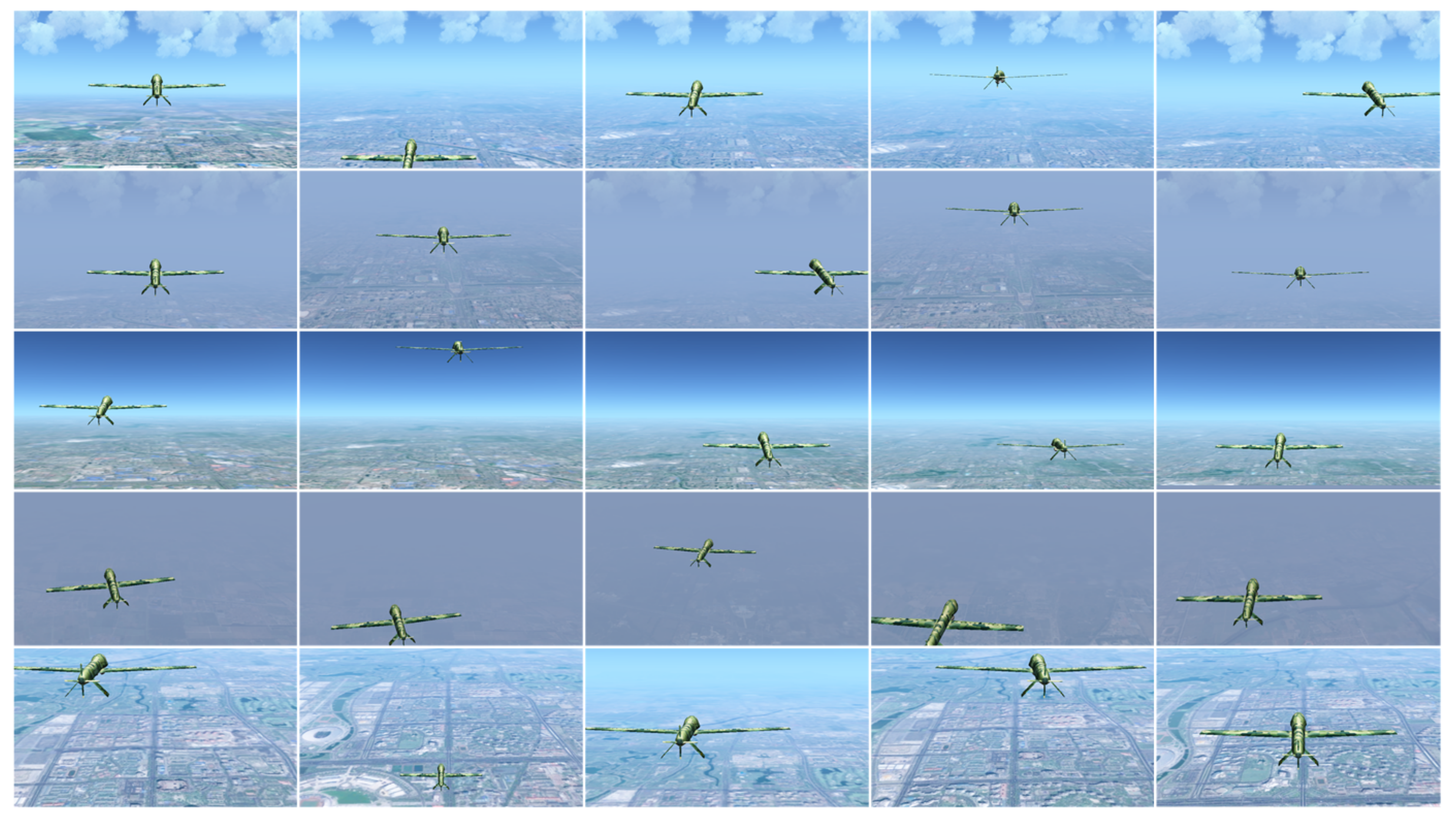

4. Simulation Experiment

5. Physical Experiment

5.1. Implementation Details

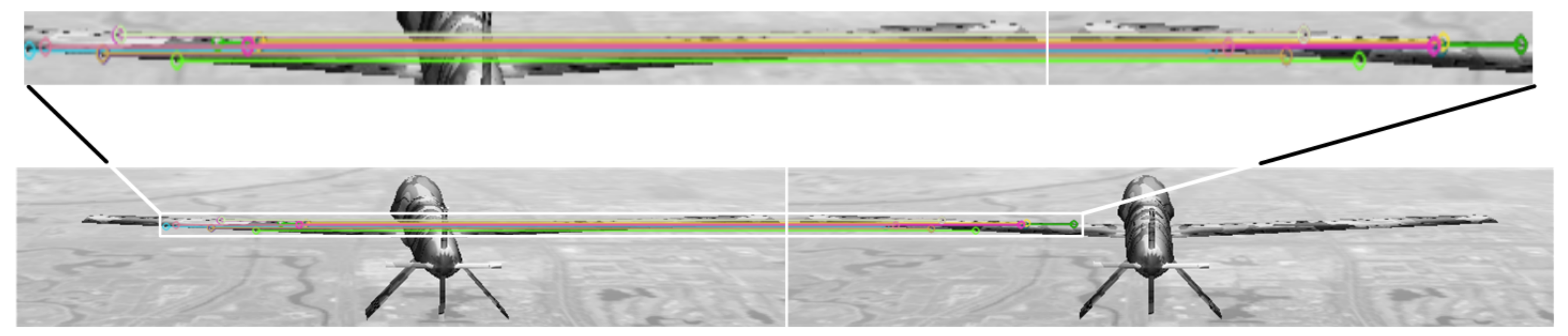

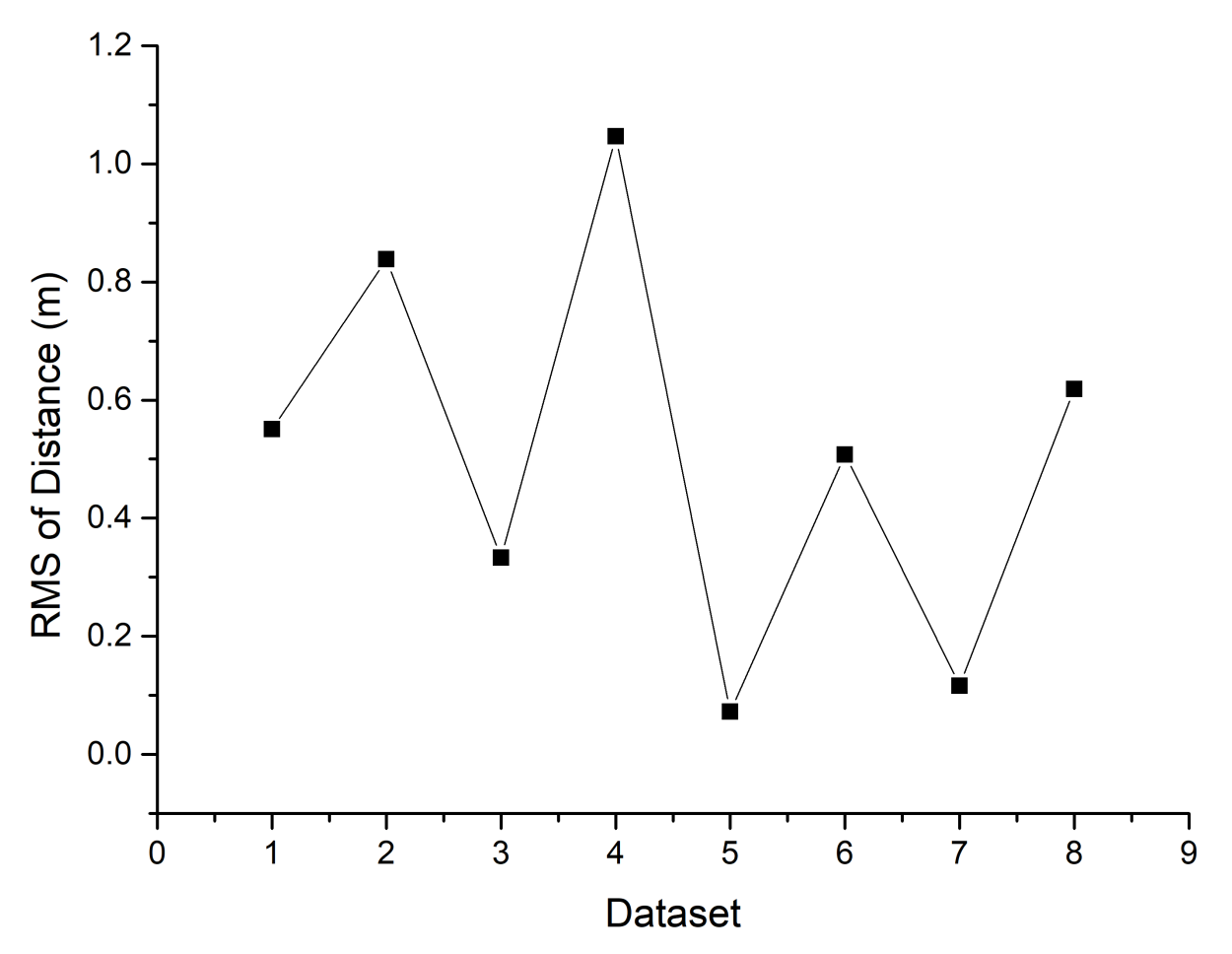

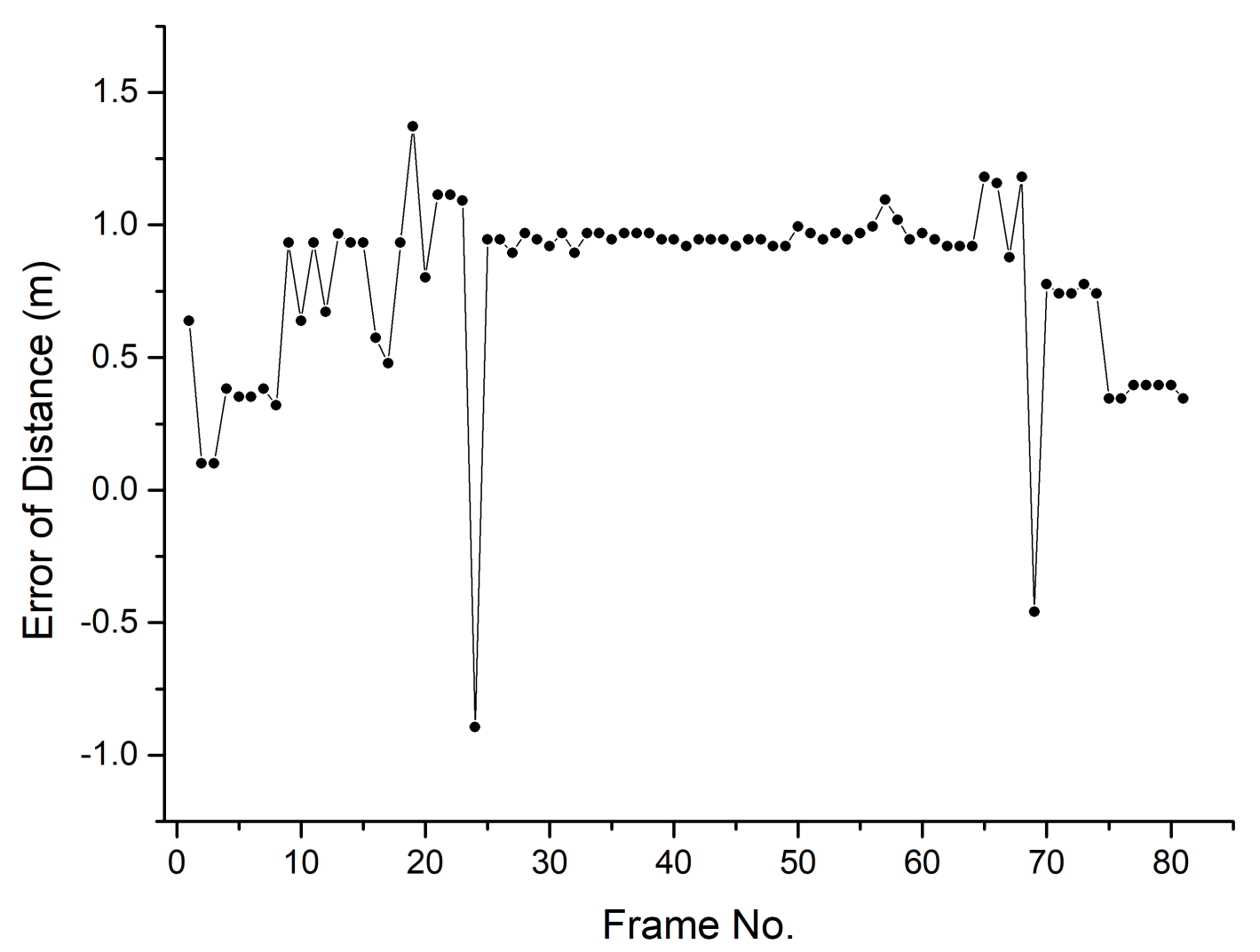

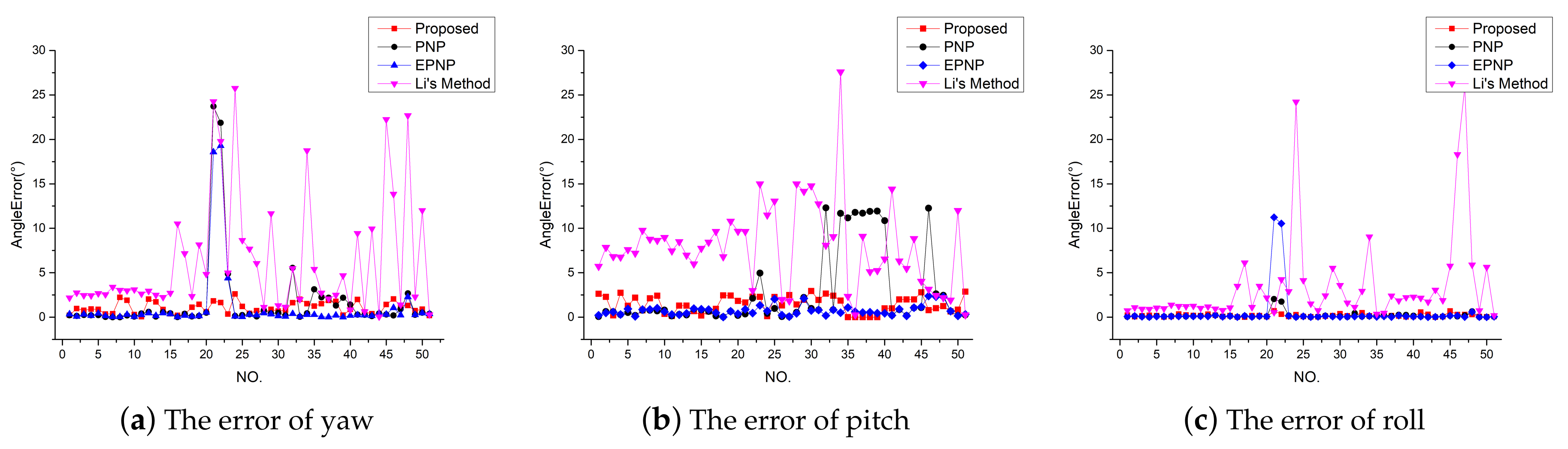

5.2. Measurement Accuracy Evaluation

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| UAV | Unmanned Aerial Vehicle |

| AAR | Automated Aerial Refueling |

References

1. Chiang, K.W.; Tsai, M.L.; Chu, C.H. The Development of an UAV Borne Direct Georeferenced Photogrammetric Platform for Ground Control Point Free Applications. Sensors 2012, 12, 9161–9180.

2. Martínez-de Dios, J.R.; Merino, L.; Ollero, A.; Ribeiro, L.M.; Viegas, X. Multi-UAV Experiments: Application to Forest Fires. Springer Tracts Adv. Robot. 2007, 37, 207–228.

3. Chao, Z.; Zhou, S.L.; Ming, L.; Zhang, W.G. UAV Formation Flight Based on Nonlinear Model Predictive Control. Math. Probl. Eng. 2012, 2012, 181–188.

4. De Marina, H.G.; Espinosa, F.; Santos, C. Adaptive UAV Attitude Estimation Employing Unscented Kalman Filter, FOAM and Low-Cost MEMS Sensors. Sensors 2012, 12, 9566–9585.

5. Gross, J.; Gu, Y.; Rhudy, M. Fixed-Wing UAV Attitude Estimation Using Single Antenna GPS Signal Strength Measurements. Aerospace 2016, 3, 14.

6. Michael, S.; Sergio, M. Coupled GPS/MEMS IMU Attitude Determination of Small UAVs with COTS. Electronics 2017, 6, 15–31.

7. Koksal, N.; Jalalmaab, M.; Fidan, B. Adaptive Linear Quadratic Attitude Tracking Control of a Quadrotor UAV Based on IMU Sensor Data Fusion. Sensors 2018, 19, 46.

8. Christian, E.; Lasse, K.; Heiner, K. Real-Time Single-Frequency GPS/MEMS-IMU Attitude Determination of Lightweight UAVs. Sensors 2015, 15, 26212–26235.

9. David, P.; Dementhon, D.; Duraiswami, R.; Samet, H. SoftPOSIT: Simultaneous Pose and Correspondence Determination. Int. J. Comput. Vis. 2004, 59, 259–284.

10. Fischler, M.A.; Bolles, R.C. Random Sample Consensus: A Paradigm for Model Fitting with Applications to Image Analysis and Automated Cartography. Commun. ACM 1981, 24, 381–395.

11. Hinterstoisser, S.; Lepetit, V.; Ilic, S.; Holzer, S.; Navab, N. Model Based Training, Detection and Pose Estimation of Texture-Less 3D Objects in Heavily Cluttered Scenes. In Asian Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2012; pp. 548–562.

12. Liebelt, J.; Schmid, C.; Schertler, K. Viewpoint-Independent Object Class Detection using 3D Feature Maps. In Proceedings of the 2008 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2008), Anchorage, AK, USA, 24–26 June 2008; pp. 978–986.

13. Luo, J.; Teng, X.; Zhang, X.; Zhong, L. Structure extraction of straight wing aircraft using consistent line clustering. In Proceedings of the 2017 2nd International Conference on Image, Vision and Computing (ICIVC), Chengdu, China, 2–4 June 2017.

14. Wang, B. Research on the Algorithm of Aircraft 3D Attitude Measurement. Ph.D. Thesis, University of Chinese Academy of Sciences, Beijing, China, 2012.

15. Li, G. Three-Dimensional Attitude Measurement of Complex Rigid Flying Target Based on Perspective Projection Matching. Ph.D. Thesis, Harbin Institute of Technology, Harbin, China, 2015.

16. Haiwen, Y.; Changshi, X.; Supu, X.; Yuanqiao, W.; Chunhui, Z.; Qiliang, L. A New Combined Vision Technique for Micro Aerial Vehicle Pose Estimation. Robotics 2017, 6, 6.

17. Zhang, L.; Zhu, F.; Hao, Y.; Pan, W. Optimization-based non-cooperative spacecraft pose estimation using stereo cameras during proximity operations. Appl. Opt. 2017, 56, 4522–4531.

18. Zhang, L.; Zhu, F.; Hao, Y.; Pan, W. Rectangular-structure-based pose estimation method for non-cooperative rendezvous. Appl. Opt. 2018, 57, 6164–6173.

19. Teng, X.; Yu, Q.; Luo, J.; Zhang, X.; Wang, G. Pose Estimation for Straight Wing Aircraft Based on Consistent Line Clustering and Planes Intersection. Sensors 2019, 19, 342.

20. Hu, M.; Liu, Z.; Zhang, J.; Zhang, G. Robust object tracking via multi-cue fusion. Signal Process. 2017, 139, 86–95.

21. Zhang, J.; Liu, Z. Tracking and Position of Drogue for Autonomous Aerial Refueling. In Proceedings of the 2018 IEEE 3rd Optoelectronics Global Conference (OGC), Shenzhen, China, 4–7 September 2018.

22. Zhang, J.; Liu, Z.; Gao, Y.; Zhang, G. Robust Method for Measuring the Position and Orientation of Drogue Based on Stereo Vision. IEEE Trans. Ind. Electron. 2020, early access.

23. Freund, Y.; Schapire, R.E. A Decision-Theoretic Generalization of On-Line Learning and an Application to Boosting. J. Comput. Syst. Sci. 1997, 55, 119–139.

24. Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. High-Speed Tracking with Kernelized Correlation Filters. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 37, 583–596.

25. Kalman, R.E. A New Approach To Linear Filtering and Prediction Problems. J. Basic Eng. 1960, 82D, 35–45.

26. Hirschmuller, H. Stereo Processing by Semiglobal Matching and Mutual Information. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 328–341.

27. Bellman, R.E.; Dreyfus, S.E. Dynamic Programming; Dover Publications, Incorporated: New York, NY, USA, 2003; pp. 348–358.

28. Canny, J. A Computational Approach to Edge Detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, PAMI-8, 679–698.

29. Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011.

30. Lepetit, V.; Moreno-Noguer, F.; Fua, P. EPnP: An Accurate O(n) Solution to the PnP Problem. Int. J. Comput. Vis. 2009, 81, 155–166.

31. Li, R.; Zhou, Y.; Chen, F.; Chen, Y. Parallel vision-based pose estimation for non-cooperative spacecraft. Adv. Mech. Eng. 2015, 7, 1687814015594312.

| Measurement Type | Sensor or Configuration | Related Work |
|---|---|---|
| Contact measurement (attitude internal measurement) | GNSS | In refs. [5,6], GNSS is used to solve the aircraft pose, but the GNSS update rate is low and the signal is easily disturbed. |
| | IMU | In refs. [7,8], an IMU is used to calculate the aircraft pose, but IMU errors accumulate over time, so long-term accuracy is poor. |
| Non-contact measurement (attitude external measurement) | Monocular | Ref. [9] proposed SoftPOSIT, which solves the pose parameters iteratively. |
| | | Ref. [10] formulated the perspective-n-point (PnP) problem. |
| | | Ref. [11] generated templates to estimate the target pose. |
| | | Ref. [12] performed pose measurement by contour matching. |
| | | Ref. [13] extracted the aircraft structure by recognizing its line features. |
| | | Ref. [14] solved the aircraft pose using line features. |
| | | Ref. [15] compared simulated projection images of the model with real images. |
| | Binocular or multiocular | Ref. [16] proposed a combined vision technique based on multiple cameras. |
| | | Refs. [17,18] proposed pose estimation methods for non-cooperative spacecraft; ref. [17] is optimization-based. |
| | | Ref. [19] extracted and clustered image line features to solve the aircraft pose. |
| Proposed method | Binocular (stereo vision) | Pose measurement based on the rigid skeleton. |

| No. | Measured Yaw (°) | Measured Pitch (°) | Measured Roll (°) | True Yaw (°) | True Pitch (°) | True Roll (°) | Yaw Error (°) | Pitch Error (°) | Roll Error (°) |
|---|---|---|---|---|---|---|---|---|---|
| 1 | −0.9 | 17.7 | 0.2 | 0.0 | 15.0 | 0.0 | −0.9 | 2.7 | 0.2 |
| 2 | 0.7 | −9.7 | 0.1 | 0.0 | −9.0 | 0.0 | 0.7 | −0.7 | 0.1 |
| 3 | −12.2 | 25.1 | −2.3 | −13.2 | 23.7 | 0.0 | 1.0 | 1.4 | −2.3 |
| 4 | −16.3 | 23.6 | 1.5 | −14.8 | 20.8 | 0.0 | −1.5 | 2.8 | 1.5 |
| 5 | −3.5 | 25.5 | 1.8 | −4.1 | 24.2 | 0.0 | −0.6 | 1.3 | 1.8 |
| 6 | 1.3 | 21.6 | −0.4 | 0.0 | 20.0 | 0.0 | 1.3 | 1.6 | −0.4 |
| 7 | −9.8 | 24.4 | −2.1 | −8.2 | 23.5 | 0.0 | −1.7 | 0.8 | −2.1 |
| 8 | 2.0 | 21.8 | 1.3 | 2.3 | 23.6 | 0.0 | 0.3 | −1.8 | 1.3 |
| 9 | 5.7 | 20.7 | −1.9 | 5.5 | 23.3 | 0.0 | 0.2 | −2.6 | −1.9 |
| 10 | 5.5 | 21.0 | 2.1 | 7.7 | 23.1 | 0.0 | −2.2 | −2.1 | 2.1 |
| 11 | −0.6 | 13.5 | −0.2 | 0.0 | 11.0 | 0.0 | −0.6 | 2.5 | −0.2 |
| 12 | 1.6 | 7.9 | 0.3 | 0.0 | 6.0 | 0.0 | 1.6 | 1.9 | 0.3 |
| 12 | 1.6 | 7.9 | 0.3 | 0.0 | 6.0 | 0.0 | 1.6 | 1.9 | 0.3 |

| Method | RMSE of Yaw (°) | RMSE of Pitch (°) | RMSE of Roll (°) | RMSE of Distance (m) |
|---|---|---|---|---|
| Proposed | 1.1 | 2.1 | 0.8 | 0.61 |
| EPnP | 2.0 | 0.8 | 1.8 | 1.33 |
| PnP | 2.4 | 2.7 | 0.3 | 0.31 |
| Li’s Method | 4.6 | 3.2 | 4.7 | 0.57 |
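Each RMSE column summarizes signed errors e_i over N trials as sqrt((1/N) Σ e_i²). The sketch below computes this for the yaw, pitch, and roll error columns transcribed from the twelve-trial table; on those twelve rows it gives about 1.20°, 1.97°, and 1.45°. These values are computed from the listed trials only and need not coincide with the comparison table, which may be based on a different set of measurements. The function name `rmse` is illustrative.

```python
import math


def rmse(errors):
    """Root-mean-square of a sequence of signed errors."""
    errors = list(errors)
    if not errors:
        raise ValueError("need at least one error value")
    return math.sqrt(sum(e * e for e in errors) / len(errors))


# Error columns (degrees) transcribed from the twelve-trial table
yaw_err = [-0.9, 0.7, 1.0, -1.5, -0.6, 1.3, -1.7, 0.3, 0.2, -2.2, -0.6, 1.6]
pitch_err = [2.7, -0.7, 1.4, 2.8, 1.3, 1.6, 0.8, -1.8, -2.6, -2.1, 2.5, 1.9]
roll_err = [0.2, 0.1, -2.3, 1.5, 1.8, -0.4, -2.1, 1.3, -1.9, 2.1, -0.2, 0.3]

for name, errs in (("yaw", yaw_err), ("pitch", pitch_err), ("roll", roll_err)):
    print(f"RMSE of {name}: {rmse(errs):.2f} deg")
```

Squaring before averaging prevents positive and negative errors from cancelling, so RMSE reflects the typical error magnitude rather than a bias.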

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, J.; Liu, Z.; Zhang, G. Pose Measurement for Unmanned Aerial Vehicle Based on Rigid Skeleton. Appl. Sci. 2021, 11, 1373. https://doi.org/10.3390/app11041373


Chicago/Turabian Style: Zhang, Jingyu, Zhen Liu, and Guangjun Zhang. 2021. "Pose Measurement for Unmanned Aerial Vehicle Based on Rigid Skeleton" Applied Sciences 11, no. 4: 1373. https://doi.org/10.3390/app11041373
